Operating Systems Exam 4单词卡 | Quizlet

1/59

There's no tags or description

Looks like no tags are added yet.

Name | Mastery | Learn | Test | Matching | Spaced |

|---|

No study sessions yet.

60 Terms

One of the four conditions necessary for deadlock is hold and wait. What is meant by hold and wait in this context?

A process is holding at least one resource and waiting to acquire one or more additional resources that are held by other processes.

Deadlock prevention tries to eliminate deadlock by eliminating one of the four conditions. How can hold and wait be eliminated?

It can be eliminated by ensuring that when a process requests a resource it is not holding any other resources. That would either require requesting all resources at once (at the beginning of the program or when the first resources is needed), or releasing any current resources and reacquiring it along with any new resources at the same time.

This technique is not effective since it is difficult to know in advance what resources will be needed, and wastefully ties up unneeded resources, and it allows starvation.

If a resource allocation graph does not contain a cycle, there is no deadlock. If it does contain a cycle, is there deadlock or not? Explain.

If there is a cycle, there may or may not be deadlock. If there are only singles instances of all resources, then there is deadlock. If there are multiple resource instances, there is the possibility of deadlock.

The basic idea of deadlock detection is to look for deadlock occasionally. Assuming deadlock is found, what must be done to break the deadlock?

One or more of the deadlocked processes must be terminated.

The four conditions that characterize deadlock are mutual exclusion, no preemption, hold and wait, and circular wait. If the system is deadlocked, will all four of these conditions hold? Answer Yes or No, and then explain.

Yes, since these conditions are necessary for deadlock.

If all four of these conditions hold, will the system always be deadlocked if there are single instances of all resources? Answer Yes or No, and then explain.

Yes, because for single resource instances these conditions are sufficient for deadlock.

Deadlock can be prevented if the "mutual exclusion" criterion can be avoided. Explain how mutual exclusion can be avoided for certain types of files and thus prevent deadlock from occurring.

Deadlock can be avoided for read-only files, like binaries, since multiple processes can read them simultaneously. Similarly, most personal files are not accessed by others at the same time, so exclusive access is unnecessary in these cases.

As we try to determine this sequence of processes, what do we do if we find a process that has resource needs less than or equal to the available resources?

We assume that it runs, and then afterward, its allocated resources are added to the available resources.

In the Banker's Algorithm for deadlock avoidance, we define the system as being in a "safe state" if there exists a sequence of all processes in the system such that, for each process, the resources that the process can still request can be satisfied by the currently available resources plus resources held by all the earlier processes in the sequence. As we try to determine this sequence of processes, what do we do if we find a process that has resource needs greater than the available resources?

We assume that it has to wait until sufficient resources are available, and if we run this process it may request more resources than we have available and put the system in an unsafe state, so we move on to examine the next process.

Given the simplified form of a Resource Allocation Graph called a Wait-For Graph, how can deadlock be detected for single resource instances?

Search the graph for a cycle, and if a cycle is found, deadlock has been detected.

List the 4 necessary and sufficient conditions for deadlock.

Mutual exclusion

No preemption

Hold and wait

Circular wait

It is theoretically possible to prevent deadlock by forcing a process to request all resources at the beginning of its execution and release them at the end of its execution. Why would this prevent deadlock?

It eliminates the hold and wait condition, which is necessary for deadlock.

In an operating system, we are concerned about deadlock involving "resources". List three examples of operating system resources that we may be concerned about in this context.

CPU cycles, memory space, I/O devices, file, printer, network connection, mutex locks.

It is theoretically possible to prevent deadlock by forcing a process to request all resources at the beginning of its execution and release them at the end of its execution. Why is this solution impractical?

It is difficult to predict in advance which resources will be needed. This approach is also inefficient, as it ties up resources unnecessarily for long periods, reducing overall utilization. Additionally, it can lead to starvation.

The four necessary conditions for deadlock are mutual exclusion, no preemption, hold and wait, and circular wait. In terms of deadlock, what is meant by "no preemption"?

A resource can only be released voluntarily by the process holding it, typically after completing its task. Neither another process nor the operating system can force a process to release a resource.

In the context of Deadlock Avoidance, what is a safe state?

A safe state is a state in which there exists a sequence of resource allocations that will allow all processes to compete their execution.

In terms of deadlock, what is meant by "circular wait"?

There must exist a set of waiting processes such that P0 is waiting for a resource held by P1, P1 is waiting for a resource held by P2, ... Pn-1 is waiting for a resource held by Pn, and Pn is waiting for a resource held by P0.

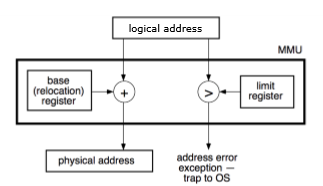

The MMU contains a Base Register. What is stored in this register?

It holds physical address where the process is loaded in memory — the "base" of the process in memory.

It is possible to avoid the "hold and wait" criterion and prevent deadlock by requiring processes to request, and be allocated, all of its resources before it begins execution. Is this a good solution? Explain.

No, it is not a good solution. It is difficult to know in advance what resources will be needed, it wastefully ties up resources it may not currently be using, and starvation is possible.

How does the MMU use the value in the Base Register to produce the physical address?

The MMU adds the Base Register value to a logical address in the process to get a physical address.

The MMU also provides protection. What is meant by "protection" in this context?

It prevents the process for accessing any memory locations past the highest address in the process, thus preventing it from accessing memory that belongs to another process.

Deadlock can be prevented if the "mutual exclusion" criterion can be avoided. Explain how printer spooling can avoid mutual exclusion and thus prevent deadlock from occurring.

A process that wants to print hands the job to the print spooler, which manages print jobs sequentially to maintain mutually exclusive access to the printer. However, the process continues execution immediately after handing off the job. This creates the illusion that multiple processes are printing simultaneously, even though the spooler ensures the jobs are printed one at a time.

The swapper, sometimes known as the medium-term scheduler, swaps processes between physical memory and swap space, also called backing store. Under what conditions would a process that is in swap space be swapped back into physical memory?

If the CPU scheduler chooses a process to run, and it is in swap space, it must be swapped back into physical memory before it can run.

With contiguous allocation, the entire process must be placed into memory as one contiguous unit using either fixed-sized partitions or dynamic partitions. Why is this a problem?

It may be difficult to find a large enough space in memory.

What is external fragmentation?

Wasted space external to the partitions being used - essentially a large number of small unused spaces, most of which are too small to hold a process.

One solution to reducing external fragmentation in memory is compaction. Is this a practical solution or not? Explain.

It is not very practical. Accessing memory is rather slow, and moving huge blocks of memory around would be a lot of overhead.

In the Banker's Algorithm for deadlock avoidance, what does it mean to say the system is in a "safe" state, and what does it mean to say the system is in an "unsafe" state?

If the system is in a safe state, the total resources (available plus allocated) are sufficient to allow all processes to complete in some order, assuming each may request its maximum and releases all resources upon completion. In an unsafe state, the resources are insufficient to guarantee all processes can finish, making deadlock possible (though not certain, as some processes may not request their maximum).

Segmentation breaks a process up into a collection of segments. What criteria are used to determine what is in each segment?

The segments come from the program, and are logical units within the program, such as the code, data, and the stack. Segments may also come from portions of the code, such as individual procedures or functions, or portions of the data, such as an array.

The Banker's algorithm can be used to avoid deadlock for multiple resource instances, yet it isn't used in practice. Why not?

For several reasons:

(1) the maximum resource requirement for each process must be stated in advance,

(2) there must be a fixed number of processes and resources,

(3) processes must be independent, and

(4) there is a huge overhead to run this algorithm every time a resource is requested.

Segmentation uses a Segment Table to assist with the address translation. What is stored in this Segment Table?

The segment's base and limit.

When deadlock is detected in a system, the deadlock can be detected by terminating a process. How is the process to be terminated selected?

There are several options. The OS can terminate all deadlocked processes, though this is often unnecessary. A more selective approach is to terminate one process at a time, based on factors such as the number of resources it holds, its priority or "cost," the amount of CPU time it has used, and whether it has performed I/O or interacted with files.

One of the big advantages of paging over segmentation is that paging does not need to use complicated methods such as first-fit or best-fit to find a free space in memory. Explain.

All frames in memory are the same size, so one is just as good as another. All that is necessary is to find one that is free.

When a program is loaded into memory at a location specified by the operating system, any references to addresses within the process's memory space must be adjusted to reflect the process's actual location in memory. Give an example of an address that must be modified.

Addresses of (static) variables, addresses in jump / branch instructions. Alternate answer: any address in the process, since all addresses assume the program is loaded at location 0.

Paging uses a Page Table to assist with the address translation. Where is this Page Table stored?

It is stored in the Process Control Block for that process, which is stored in memory as part of the operating system's kernel.

What information is in the Page Table?

For each page, the page's corresponding frame in memory.

Paging can occasionally suffer from internal fragmentation. Explain.

It is unlikely that each process is exactly a multiple of the page size in length, so the very last frame in the process is probably only partly full. On average, the internal fragmentation is half of the frame size.

What is the Translation Lookaside Buffer (TLB) and how is it used?

It is a cache for recent pairs of page numbers / frame numbers. Since accessing the page table requires a (slow) memory access, the paging hardware first searches the TLB to see if the page number is there, and if so, uses the corresponding frame number. If the page number isn't there, then it is retrieved from the page table, and also cached in the TLB in case it is needed again sometime soon.

When a program is loaded into memory at a location specified by the operating system, any references to addresses within the process's memory space, such as the address in a Load or Store instruction, must be modified before that instruction can execute. Explain why this is the case.

When the program is compiled, the compiler assumes the process will start at location 0 in memory. If it is loaded at another location, the base location must be added to any addresses in the program so that they address the proper address within the process's memory space.

The Memory Management Unit (MMU) has a dual role - it converts logical addresses to physical addresses, and it protects the process's memory. For Dynamic Relocation, draw a diagram showing what the MMU does.

The logical address is added to the base register value to produce a physical address. The logical address is also compared to the limit register values, and if it is greater than the limit, there is an address exception.

What is the relationship between the CPU scheduler and swapping?

If the process that the CPU scheduler chooses is not in memory (currently swapped out), the swapper must swap a process out to make room for that process, and then swap it back into memory.

Segmentation uses a Segment Table to assist with the address translation. Where is this Segment Table stored?

It is stored in the Process Control Block for that process, which is stored in memory as part of the operating system's kernel.

What information is in the Segment Table?

For each segment, the segment's base and limit.

Consider paging versus contiguous memory allocation. What advantages does paging provide over contiguous memory allocation?

The entire process does not have to be contiguous, code or data can be shared between processes, there are no complex memory allocation methods (e.g., first-fit, best-fit) needed, and there is no external fragmentation.

What disadvantages does paging share with contiguous memory allocation?

Both require the entire process to be in memory, and both require the entire process to be swapped in and out. Additionally, both require addresses to be dynamically translated by hardware. Finally, they both have some fragmentation, although contiguous memory has external fragmentation and paging may have internal fragmentation.

The Translation Lookaside Buffer (TLB) is a table that holds page numbers and corresponding frame numbers. How is it used in paging for address translation?

It is used as a cache for recent pairs of page numbers / frame numbers, and if the page number is found in the TLB, there is no need to get the frame number from the page table (which is much slower).

How is the TLB different from the normal memory cache?

The "normal" cache that sits between the CPU and the memory contains arbitrary instructions or data loaded from memory, whereas the TLB is much more specialized and only contains page and frame numbers.

What is the Limit Register used for?

It stores the highest logical address of the process. The MMU compares this value with the logical address being accessed; if the address exceeds the limit, an address error exception is triggered.

Explain the basic concepts of swapping.

If there isn't enough memory for all processes, some can be swapped out to make room. The operating system does this by saving the entire state of a process to disk. When the process becomes active again, it must be swapped back into memory. The entire process must be swapped out during this operation.

What is internal fragmentation?

Wasted space internal to the partitions being used.

Finding a free frame when using paging is much simpler than finding a free hole in memory using contiguous memory allocation. Explain the "first fit" memory allocation technique.

Allocate the first hole that is large enough.

Explain how paging finds a free frame.

A bit map is used to track free frames, with 1 indicating a free frame. Most CPUs have an instruction that returns the offset of the first 1 bit in a register.

How is the TLB similar to a cache?

It is much faster than physical memory, so just like a normal cache looks up addresses and retrieves data faster than getting that data from main memory, the TLB looks up page numbers and retrieves frame numbers faster than getting that data from the page table in main memory.

Why is it necessary for the loader to adjust addresses? Be specific.

An executable object file assumes a starting base address of zero. If the process is loaded starting at some other base address, every address in the process must be changed to the sum of the original address and that base address.

Distinguish between "logical memory" and "physical memory".

Logical memory is the address space seen by a process, which always appears to start at address 0 and extends to a maximum value, regardless of its actual location in physical memory. Physical memory refers to the actual DRAM in the computer, shared by the operating system and all running processes.

What is the function of the Memory Management Unit (MMU)?

Programs are compiled and linked as if they will be loaded at address 0, with all instruction and data addresses relative to that base. When the program is actually loaded elsewhere in memory, the MMU adjusts memory references by adding the program’s base address to each logical address, converting it into a physical address.

One of the algorithms that can be used for memory management, partitioning in particular, is Best Fit. Briefly explain this algorithm.

Search all partitions, and of those sufficiently large enough, choose the smallest.

Explain why best fit algorithm may not be the best choice for memory management.

Choosing the smallest possible block sizes often results in many small, unusable memory partitions, leading to significant external fragmentation. Additionally, the sorting required to make the best choice is more time-consuming than simpler algorithms like First Fit.

At first glance, segmentation and variable size partitions may appear similar. What advantages does segmentation have over variable size partitions?

What disadvantages do the two techniques share?

The entire program doesn't have to be placed contiguously in memory. Segments can be shared between processes.

A complex memory allocation scheme is needed (e.g., first-fit, best-fit). External fragmentation is possible. The entire process must still be placed in memory.

When segmentation is used, how is a process prevented from accessing memory locations outside its segments? Be specific.

The offset in the logical address is compared to the segment's limit, and if the offset exceeds this limit, an address error exception is triggered. Without this check, adding the offset to the base address in physical memory could generate an address outside the allowed range for that segment. It's not possible for a process to access an address lower than the segment's range, as negative virtual addresses are not generated by the compiler or assembler, which always produce positive or unsigned values.

How to prevent Circular wait?

Impose a total ordering of all resources types, and require that each process requests resources in an increasing order of enumeration

- Logical sequence that R are usually acquired

- Allow process to release all resources, and start requesting over.