Computer graphics revision

175 Terms

Framebuffer

An in-memory map of the display, one memory location per pixel.

Backface culling

A technique in computer graphics to increase rendering efficiency by only rendering the polygons facing towards the viewer.
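A minimal sketch of the usual back-face test, assuming outward-pointing polygon normals and a view vector that points from the camera towards the polygon. The function name `is_back_facing` is hypothetical, chosen for illustration.

```python
def is_back_facing(normal, view_dir):
    """Return True if the polygon faces away from the viewer.

    normal:   outward polygon normal (nx, ny, nz)
    view_dir: vector from the camera towards the polygon
    """
    dot = sum(n * v for n, v in zip(normal, view_dir))
    # A non-negative dot product means the normal points along the
    # viewing direction, so the polygon can be skipped.
    return dot >= 0.0


# Camera looks down the -z axis.
front = is_back_facing((0, 0, 1), (0, 0, -1))   # normal points at the camera
back = is_back_facing((0, 0, -1), (0, 0, -1))   # normal points away
```

Polygons for which the test returns True would simply not be sent on for rasterization.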

Shading methods

Flat, Gouraud, Phong, Phong and Gouraud

Flat Shading

Uses polygon normals. It’s fast, and avoids interpolation

Gouraud Shading

Interpolates intensities between vertices. Basic shading, good for diffuse reflections. Cons: poor rendering of highlights and specular reflections.

Phong Shading

Interpolates normals. Pros: highest quality w/out volumetric rendering. Cons: 4-5 times slower than Gouraud.

Phong and Gouraud Shading

Uses Phong for surfaces with specular reflections (large ks), and uses Gouraud for diffuse surfaces (ks ~ 0)

Mocap def

Short for motion capture. A technique used to digitally record human movements for use in industries such as animation, video games, and virtual reality.

Rendering pipeline def

The sequence of stages that graphics data goes through to be displayed on a screen. It includes vertex processing, rasterization, fragment processing, and output.

Z-buffer algorithm

Used to determine which surface is visible at each pixel, by keeping the depth of the closest surface drawn so far. Is independent of model representation.
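A minimal sketch of the depth test at the heart of the Z-buffer algorithm, assuming fragments arrive as `(x, y, depth, color)` tuples and that a smaller depth means closer to the viewer. The function name and the fragment format are assumptions made for illustration.

```python
import math


def zbuffer_render(fragments, width, height, background="."):
    """Keep, for every pixel, the color of the closest fragment seen."""
    depth = [[math.inf] * width for _ in range(height)]
    color = [[background] * width for _ in range(height)]
    for x, y, z, c in fragments:
        if z < depth[y][x]:  # closer than the stored depth: overwrite
            depth[y][x] = z
            color[y][x] = c
    return color


frags = [(0, 0, 5.0, "A"), (0, 0, 2.0, "B"), (1, 0, 3.0, "A"), (0, 0, 4.0, "C")]
image = zbuffer_render(frags, width=2, height=1)
```

The result does not depend on the order in which the fragments are processed, which is why no depth sorting of the polygons is needed.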

Parallel projection versus Perspective projection

Parallel projection: Projection in which lines that are parallel in 3D space remain parallel in the projected image.

Perspective projection: Objects appear smaller with distance due to converging lines towards a vanishing point.
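A small sketch contrasting the two projections, assuming an eye at the origin looking along +z and an image plane at distance `d` (both conventions are assumptions, not taken from the deck).

```python
def project_parallel(p):
    """Orthographic projection onto the z = 0 plane: simply drop z."""
    x, y, z = p
    return (x, y)


def project_perspective(p, d=1.0):
    """Perspective projection onto the plane z = d, eye at the origin."""
    x, y, z = p
    return (d * x / z, d * y / z)


near = project_perspective((2.0, 2.0, 2.0))
far = project_perspective((2.0, 2.0, 4.0))
```

The same point moved twice as far away lands half as far from the image centre under perspective, while the parallel projection ignores depth entirely.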

uses of normals in computer graphics

determine the direction a surface is facing, calculate lighting effects, and perform shading calculations.

Polygon Mesh

A collection of vertices, edges, and faces that define the shape of a 3D object. Properties include connectivity, topology, and geometry.

Oscilloscope

Device that displays electrical signals as waveforms, typically voltage against time.

Raster display

Array of pixels

Image resolution

Density of points describing image

Properties of a pixel

Color, intensity

What is human color vision based on

Three ‘cone’ cell types, that respond to light energy in different bands of wavelengths

Subtractive color mixing

Cyan, Magenta, and Yellow (middle intersection is black)

Additive color mixing

Red, Green, Blue (middle intersection is white)

How is the frame buffer addressed and read?

It is addressed in any order (convenient), but is read out to hardware sequentially (efficient).

Cartesian coordinates have what and are limited by what?

They have infinite precision, and are limited by being drawn on a display with finite resolution.

Rasterization

Algorithm for converting infinite precision specification into pixels. (The conversion of ideal, mathematical graphic primitives onto raster displays)

How are pixels individually set or cleared?

Random access

Graphical objects and framebuffer: discrete or continuous?

Graphical objects are continuous, while framebuffer is discrete.

Steps in building an object

Primitives (basic shapes) → Object (shapes that are given coordinates on a plane, constructed in local coordinates) → Visual Attributes (render primitives with visual attributes)

2D Graphics render pipeline (complex to simple)

Modelling (static), Animation (dynamic), Visualisation (semantic, e.g. visualisation of thunderstorms, brain activity, etc.) → Curves, Surfaces, Volume, Material, Illumination → Points, Lines, Polygons, Normals, Colors → Pixel, RGBA

2D Graphics pipeline

Model Scene

Build objects from primitives

Object constructed in local coordinates

Object placed in world coordinates

View Scene

Specify area of a scene to view: window

Specify area of display on which to view: viewport

Clip scene to window

Map window to viewport

Can take output from several scenes

Output (image we want to see)

Rasterize vectors to pixels

Rasterization works on primitives

Attributes determine appearance
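The window-to-viewport step of the viewing stage can be sketched as a scale and a translation. This is a minimal example assuming axis-aligned rectangles given as `(xmin, ymin, xmax, ymax)`; the function name is hypothetical.

```python
def window_to_viewport(p, window, viewport):
    """Map a world-coordinate point inside the window to device coordinates."""
    wx0, wy0, wx1, wy1 = window
    vx0, vy0, vx1, vy1 = viewport
    sx = (vx1 - vx0) / (wx1 - wx0)  # horizontal scale factor
    sy = (vy1 - vy0) / (wy1 - wy0)  # vertical scale factor
    x, y = p
    return (vx0 + (x - wx0) * sx, vy0 + (y - wy0) * sy)


centre = window_to_viewport((5, 5), window=(0, 0, 10, 10), viewport=(0, 0, 200, 100))
```

Because the two scale factors are independent, a window and viewport with different aspect ratios will stretch the scene.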

Coordinate types

Local coordinates

Specify locations of primitive in object

Arbitrary units, chosen by modeller

World coordinates

aka user coords

Specify location of objects in scene

Specify location of clipping window

Units depend on graphic API

Device coordinates

aka screen coordinates

specify locations of viewports

measured in PHYSICAL units (eg pixels, mm)

What are pixel colors determined by?

RGB values between 0 and 255

Examples of linear transformations

Rotation, Scaling, Shear, Mirroring

What are things rotated around?

The origin of the coordinate system

Scaling: what happens when you give equal or unequal entries on diagonal?

Equal entries give isotropic scaling, while unequal entries give anisotropic scaling

Affine transformations

Transformations that preserve lines and parallelism, but not necessarily Euclidean distances/angles

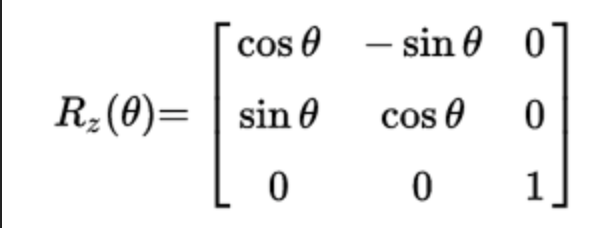

3D Rotations by theta around the z-axis

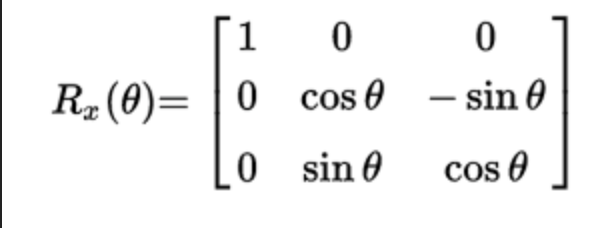

3D Rotations by theta around the x-axis

3D Rotations by theta around the y-axis
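For reference, the three rotation matrices in their common textbook form, assuming a right-handed coordinate system, column vectors, and counter-clockwise rotation when looking down the axis towards the origin. These conventions are an assumption and may differ from the ones used in the images.

```latex
R_z(\theta) =
\begin{pmatrix}
\cos\theta & -\sin\theta & 0 \\
\sin\theta & \cos\theta & 0 \\
0 & 0 & 1
\end{pmatrix},
\quad
R_x(\theta) =
\begin{pmatrix}
1 & 0 & 0 \\
0 & \cos\theta & -\sin\theta \\
0 & \sin\theta & \cos\theta
\end{pmatrix},
\quad
R_y(\theta) =
\begin{pmatrix}
\cos\theta & 0 & \sin\theta \\
0 & 1 & 0 \\
-\sin\theta & 0 & \cos\theta
\end{pmatrix}
```

In each matrix the row and column of the rotation axis are left untouched, which is why points on that axis do not move.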

Start and end of vertex coordinates

local

coords of objects relative to their local origin

world

coords in respect of larger world

relative to some global origin of world

view

coords as seen from camera/viewers POV

clip

processed to the -1 to 1 range; determines which vertices will end up on screen

can add perspective

screen

viewport transformed to screen coords

Aliasing

Is caused by undersampling (not taking enough discrete samples to capture a fast rate of change)

Super-Sampling Anti-Aliasing (SSAA)

Take many point samples per pixel, and assign grey level as the weighted average value. For example, 2x SSAA along each axis renders 4 samples per screen pixel.

Results in smoother, nicer looking results. Ridiculously expensive, can lead to low performance and framerate. Sometimes implemented in hardware.
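A minimal sketch of the averaging step of super-sampling, assuming the scene has already been rendered at a higher resolution into a 2D list of grey levels and that all samples are weighted equally (a box filter). The function name `downsample` is hypothetical.

```python
def downsample(samples, factor):
    """Average each factor x factor block of samples into one screen pixel."""
    height = len(samples) // factor
    width = len(samples[0]) // factor
    out = []
    for py in range(height):
        row = []
        for px in range(width):
            block = [samples[py * factor + dy][px * factor + dx]
                     for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


# A 4x4 super-sampled image of a diagonal edge (0 = black, 1 = white).
hi_res = [[0, 0, 1, 1],
          [0, 1, 1, 1],
          [1, 1, 1, 1],
          [1, 1, 1, 1]]
pixels = downsample(hi_res, 2)
```

The pixel that straddles the edge receives an intermediate grey level instead of a hard black or white value, which is the smoothing effect described above.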

Multisample anti-aliasing (MSAA)

Faster than SSAA: the fragment shader runs only once per pixel, and only the depth and stencil buffers are supersampled.

Fast approximate anti-aliasing (FXAA)

Fast pixel-level algorithm (implemented as a fragment shader). Identifies edges in the rendered image from local contrast and smooths them at the pixel level.

Anti-aliasing and Texture Maps: Compression

From texture space → to screen space. Several texels map to a single pixel. To avoid aliasing errors, the texture image should be sampled many times to calculate each pixel, but this is very expensive.

Anti-aliasing and Texture Maps: Magnification

From texture space → to screen space. Single pixel lies within texel. Higher resolution texture map required.

MIP Mapping

Pre-calculated, optimized sequences of images. Approximate pre-image of pixels in texture space by a square of size $2^n$. Permits pre-calculation for anti-aliasing.

MIP Mapping: Precompute…

Subsampled copies of texture map for sizes $2^n, 2^{n-1}, \dots, 1$.

MIP Mapping: Select…

A texel from the appropriately sampled map. If surface is close to camera, use high resolution map. If surface is distant from camera, use low resolution map.

MIP Mapping: Linear interpolation…

Is done between levels and improves quality

MIP Mapping: Cost…

is constant per pixel
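The precompute and select steps can be sketched as follows. This is a simplified illustration, assuming a square single-channel texture whose side is a power of two, a 2x2 box filter for subsampling, and a pixel footprint given as a side length in texels; the function names are hypothetical.

```python
import math


def build_mipmaps(texture):
    """Return [level 0, level 1, ..., 1x1], halving the side each level."""
    levels = [texture]
    while len(levels[-1]) > 1:
        prev = levels[-1]
        half = len(prev) // 2
        levels.append([[(prev[2 * y][2 * x] + prev[2 * y][2 * x + 1] +
                         prev[2 * y + 1][2 * x] + prev[2 * y + 1][2 * x + 1]) / 4.0
                        for x in range(half)] for y in range(half)])
    return levels


def select_level(texels_per_pixel, num_levels):
    """Pick the level whose texel size roughly matches the pixel footprint."""
    if texels_per_pixel <= 1.0:
        return 0  # surface is close: use the full-resolution map
    return min(num_levels - 1, round(math.log2(texels_per_pixel)))


checker = [[0, 4, 0, 4],
           [4, 0, 4, 0],
           [0, 4, 0, 4],
           [4, 0, 4, 0]]
levels = build_mipmaps(checker)
```

Since the averaging is done once up front, each lookup at render time touches a fixed number of texels regardless of how far away the surface is, which is where the constant per-pixel cost comes from.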

MIP Mapping with OpenGL

MIP mapping is enabled in OpenGL by calling glGenerateMipmap(GL_TEXTURE_2D); and selecting one of the mipmap filtering modes:

GL_NEAREST_MIPMAP_NEAREST (selects the closest mipmap and the closest texel)

GL_LINEAR_MIPMAP_NEAREST (selects the closest mipmap and interpolates between the nearest texels)

GL_NEAREST_MIPMAP_LINEAR (selects the two closest mipmaps, uses GL_NEAREST on each and averages the results)

GL_LINEAR_MIPMAP_LINEAR (selects the two closest mipmaps, uses GL_LINEAR on each and averages the results)

Bilinear filtering, Trilinear filtering

Ways to filter in MIP Mapping

Bilinear filtering

Approximates the value at a sample position by linearly interpolating, in both directions, between the four texels around it
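A minimal sketch of bilinear filtering on a single-channel texture, assuming non-negative continuous texel coordinates `(u, v)` inside the texture and clamping at the right and bottom borders. The function name and coordinate convention are assumptions for illustration.

```python
def bilinear(tex, u, v):
    """Sample tex (indexed tex[y][x]) at continuous texel coordinates (u, v)."""
    x0, y0 = int(u), int(v)
    x1 = min(x0 + 1, len(tex[0]) - 1)  # clamp at the right border
    y1 = min(y0 + 1, len(tex) - 1)     # clamp at the bottom border
    fx, fy = u - x0, v - y0            # fractional position inside the cell
    top = tex[y0][x0] * (1 - fx) + tex[y0][x1] * fx
    bottom = tex[y1][x0] * (1 - fx) + tex[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


tex = [[0, 10],
       [20, 30]]
middle = bilinear(tex, 0.5, 0.5)
```

Trilinear filtering would run this lookup on two adjacent MIP levels and blend the two results linearly.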

Trilinear filtering

Extension of bilinear filtering, which also performs linear interpolation between levels of MIPmaps

Anisotropic filtering

A method of enhancing image quality of textures on surfaces of computer graphics that are at oblique viewing angles with respect to the camera.

A solution to blurring on surfaces at oblique viewing angle.

Anti-aliasing methods in Computer Graphics

Area sampling, super-sampling, MIP mapping

Anti-aliasing filtering

Low-pass filtering, convolution with smoothing filter function: weighted average

FULL Rendering pipeline

Vertex Specification → Vertex Shader → Tessellation → Geometry Shader → Vertex Post-Processing → Primitive Assembly → Rasterization → Fragment Shader → Per-Sample Operations

In OpenGL’s rendering pipeline, what are the programmable stages called?

Shaders, and they can be programmed in a C-like language called the OpenGL Shading Language (GLSL)

What do vertex shaders do?

Perform basic processing of individual vertices in the scene (eg: viewpoint transformation)

Fragment shaders

Process fragments generated by the rasterization

Vertex locations

Where texture coordinate information is stored

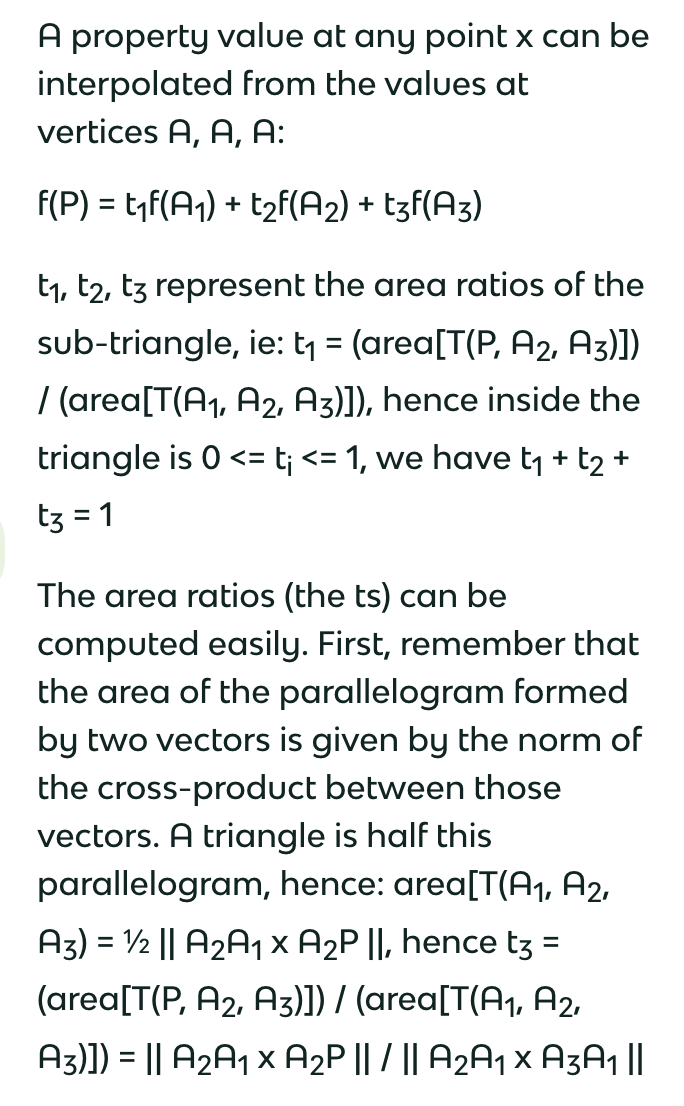

Barycentric Interpolation

A method of linear interpolation for a fragment’s texture coordinates

Linear Interpolation using Barycentric Coordinates

A property value at any point $P$ can be interpolated from the values at the vertices $A_1, A_2, A_3$:

$f(P) = t_1 f(A_1) + t_2 f(A_2) + t_3 f(A_3)$

$t_1, t_2, t_3$ represent the area ratios of the sub-triangles, i.e. $t_1 = \mathrm{area}[T(P, A_2, A_3)] \,/\, \mathrm{area}[T(A_1, A_2, A_3)]$. Hence inside the triangle $0 \le t_i \le 1$, and we have $t_1 + t_2 + t_3 = 1$.

The area ratios (the $t_i$) can be computed easily. First, remember that the area of the parallelogram formed by two vectors is given by the norm of the cross-product between those vectors. A triangle is half this parallelogram, hence $\mathrm{area}[T(P, A_1, A_2)] = \tfrac{1}{2} \lVert \overrightarrow{A_2A_1} \times \overrightarrow{A_2P} \rVert$, and therefore $t_3 = \mathrm{area}[T(P, A_1, A_2)] \,/\, \mathrm{area}[T(A_1, A_2, A_3)] = \lVert \overrightarrow{A_2A_1} \times \overrightarrow{A_2P} \rVert \,/\, \lVert \overrightarrow{A_2A_1} \times \overrightarrow{A_3A_1} \rVert$
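The area-ratio construction can be sketched in a few lines. This is a minimal example assuming 3D points given as tuples and a point P that lies inside the triangle (unsigned areas cannot detect an outside point); all function names are hypothetical.

```python
def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def area(a, b, c):
    """Triangle area: half the norm of the cross product of two edges."""
    n = cross(sub(b, a), sub(c, a))
    return 0.5 * sum(x * x for x in n) ** 0.5


def barycentric(p, a1, a2, a3):
    """Each t_i is the area of the sub-triangle opposite vertex A_i."""
    total = area(a1, a2, a3)
    return (area(p, a2, a3) / total,
            area(p, a1, a3) / total,
            area(p, a1, a2) / total)


def interpolate(p, verts, values):
    """f(P) = t1*f(A1) + t2*f(A2) + t3*f(A3)."""
    return sum(t * f for t, f in zip(barycentric(p, *verts), values))


verts = ((0, 0, 0), (1, 0, 0), (0, 1, 0))
t = barycentric((0.25, 0.25, 0), *verts)
value = interpolate((0.25, 0.25, 0), verts, (0.0, 4.0, 8.0))
```

The same weights can be reused for every per-vertex attribute of the fragment, such as texture coordinates, colors, or normals.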

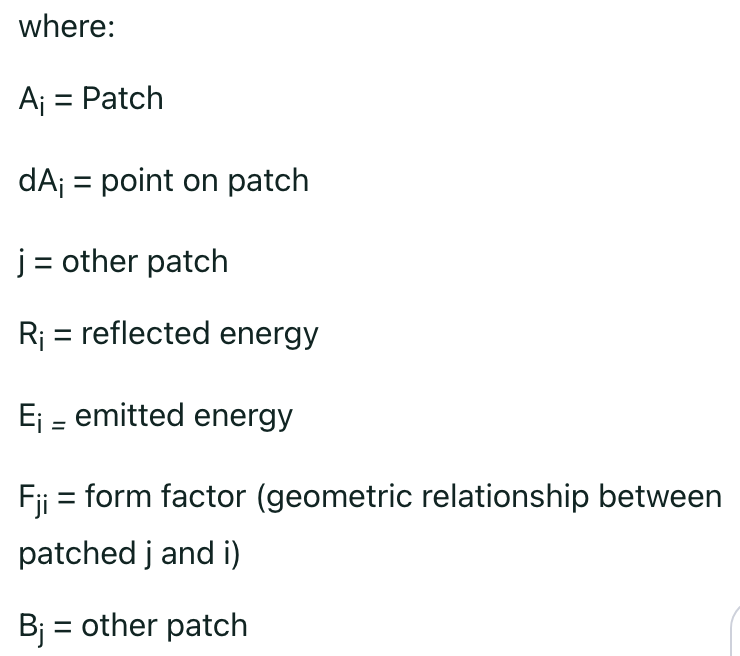

Radiosity

Energy per unit area leaving a patch per unit time. Invariant to viewpoint changes, it is view-independent

Meshing

The process of dividing a scene into patches. Meshing artifacts are scene dependent.

Idea of adaptive meshing

To re-compute the mesh as part of the radiosity calculation

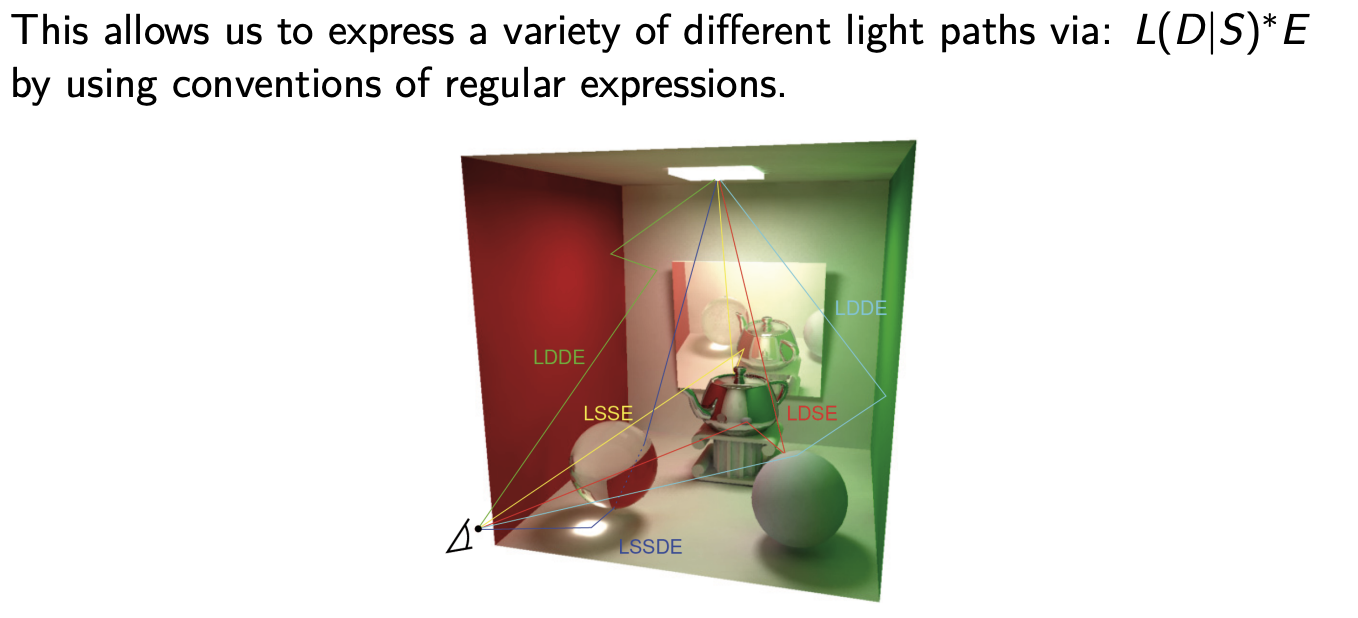

Light paths notation

L: light source

E: eye

D: diffuse reflection

S: specular reflection or refraction

What paths are considered in Whitted Ray Tracing

LS*E and LDS*E

Characteristics of Whitted Ray Tracing

Captures specular-specular interaction

Diffuse reflection only for direct light rays

LSDE, LDDE, LSSDE are not considered

Only perfect specular reflections

Hard shadows

Path tracing

An extension of ray tracing proposed by Kajiya in 1986. Uses multiple rays per pixel instead of a single ray. At each object, makes a “smart” decision about how to continue tracing.

Double buffering

A technique using two frame buffers to prevent screen flickering during rendering.

Similarities between Path Tracing and Ray Tracing

Both use backward tracing and are view-dependent algorithms

Light ray

Aka shadow ray. Used for direct diffuse reflection: a ray cast from the surface point to the light source, to check whether another object blocks the light and leaves the point in shadow.

What step is Vertex Processing in the rendering pipeline

The first step of the rendering pipeline

In the rendering pipeline, what is vertex processing, and what does it entail

This initial stage involves manipulating the vertices of 3D objects to convert them into 2D coordinates on the screen. Tasks such as transformations, lighting calculations, and culling are performed here.

In the rendering pipeline, what is rasterization and what does it entail

It is the second step in the rendering pipeline. After the vertices are processed in vertex processing, the 2D primitives are generated from the processed vertices. These primitives are then broken down into pixels to be colored.

In the rendering pipeline, what is fragment processing and what does it entail

Happens after rasterization, once the pixels are determined. This stage involves determining the final color of each pixel based on factors such as textures, lighting, and shading.

In the rendering pipeline, what is output and what does it entail

Finally, after fragment processing, the processed pixels are sent to the display for rendering. This stage involves blending the pixels together to create the final image that is presented to the user.

What step is Rasterization in the rendering pipeline

The second step of the rendering process

What step is Fragment processing in the rendering pipeline

The third step of the rendering pipeline

What step is Output in the rendering pipeline

The fourth step of the rendering pipeline

What does mocap involve

It involves tracking the movements of actors or performers wearing special markers or sensors (reflective markers), which are then translated into digital data. A marker’s position is estimated by triangulating from all camera views. Finally, the skeleton is animated according to recorded motion.

What does mocap bring and allow

Brings a level of realism and authenticity to animated characters and virtual environments that would be challenging to achieve through traditional animation methods. Allows for more natural and fluid movements in animations.

Radiosity light path

LD*E (models diffuse-to-diffuse interactions)

Radiosity x area should be sum of..

emitted energy and reflected energy

Radiosity formula

$B_i\,dA_i = E_i\,dA_i + R_i \sum_j B_j F_{ji}\,dA_j$
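A small sketch of how the radiosity equation can be solved numerically. It assumes patches with constant radiosity and the reciprocity relation $A_i F_{ij} = A_j F_{ji}$, under which the equation becomes $B_i = E_i + R_i \sum_j F_{ij} B_j$, and it uses a simple Jacobi-style iteration. The two-patch scene and its form factors are made-up illustrative values.

```python
def solve_radiosity(E, R, F, iterations=50):
    """Iterate B_i = E_i + R_i * sum_j F[i][j] * B_j until it settles."""
    n = len(E)
    B = list(E)  # start from the emitted energy only
    for _ in range(iterations):
        B = [E[i] + R[i] * sum(F[i][j] * B[j] for j in range(n))
             for i in range(n)]
    return B


E = [1.0, 0.0]            # patch 0 emits light, patch 1 does not
R = [0.5, 0.5]            # both patches reflect half of the incoming energy
F = [[0.0, 1.0],          # hypothetical form factors: each patch
     [1.0, 0.0]]          # sees only the other one
B = solve_radiosity(E, R, F)
```

Each iteration adds one more bounce of diffuse light, so the non-emitting patch ends up with a non-zero radiosity purely from reflected energy.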

Global illumination

Considers all interactions of light with the scene, lighting directly from the light sources, and indirect lighting. Starts from all points of all light sources, and follows every ray of light as it travels through the scene.

Hemicube

A solution to the problem that evaluating the form factor equation directly is expensive. A hemicube is placed over a patch and divided into small “pixel” areas, and a form factor is computed for each.

How to solve the occlusion problem

Ray tracing (does it neatly)

Convex hull property of Bezier curves

A Bezier curve is always contained inside the convex hull of its control points
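One way to see why the property holds is de Casteljau's algorithm, sketched below for control points given as tuples. Every step is a convex combination of two points, so the result can never leave the convex hull. The function name is hypothetical.

```python
def de_casteljau(points, t):
    """Evaluate a Bezier curve at parameter t in [0, 1]."""
    pts = [tuple(p) for p in points]
    while len(pts) > 1:
        # Replace each neighbouring pair by a point on the segment between them.
        pts = [tuple((1 - t) * a + t * b for a, b in zip(p, q))
               for p, q in zip(pts, pts[1:])]
    return pts[0]


control = [(0.0, 0.0), (1.0, 2.0), (2.0, 0.0)]
mid_point = de_casteljau(control, 0.5)
```

For this quadratic curve the midpoint lies below the middle control point, inside the triangle spanned by the three control points.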

When rendering a scene with transparent objects, which algorithm is a better choice? Painters or Z-buffer?

Painters

Painters algorithm

An algorithm for visible surface determination in 3D computer graphics that works on a polygon-by-polygon basis rather than a pixel-by-pixel, row by row, or area by area basis of other Hidden-Surface Removal algorithms.

(Just like an artist who starts a painting with an empty canvas: the first thing the artist does is create a background layer, and then the other layers of objects are added one by one. The painting is completed by covering the previous layers partially or fully, as the painting requires.)
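The back-to-front ordering can be sketched as a sort on depth. This minimal example assumes that a larger z means farther from the viewer and uses the average vertex depth as the sort key, which is a common simplification that fails for intersecting or cyclically overlapping polygons. The scene data and function names are hypothetical.

```python
def average_depth(polygon):
    zs = [z for (_, _, z) in polygon["vertices"]]
    return sum(zs) / len(zs)


def painters_order(polygons):
    """Return polygons sorted far-to-near, the order in which to draw them."""
    return sorted(polygons, key=average_depth, reverse=True)


scene = [
    {"name": "near", "vertices": [(0, 0, 1), (1, 0, 1), (0, 1, 1)]},
    {"name": "far", "vertices": [(0, 0, 9), (1, 0, 9), (0, 1, 9)]},
    {"name": "middle", "vertices": [(0, 0, 5), (1, 0, 4), (0, 1, 6)]},
]
draw_order = [p["name"] for p in painters_order(scene)]
```

Drawing in this order lets nearer polygons overwrite farther ones, and it is also the order needed to blend transparent surfaces correctly.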

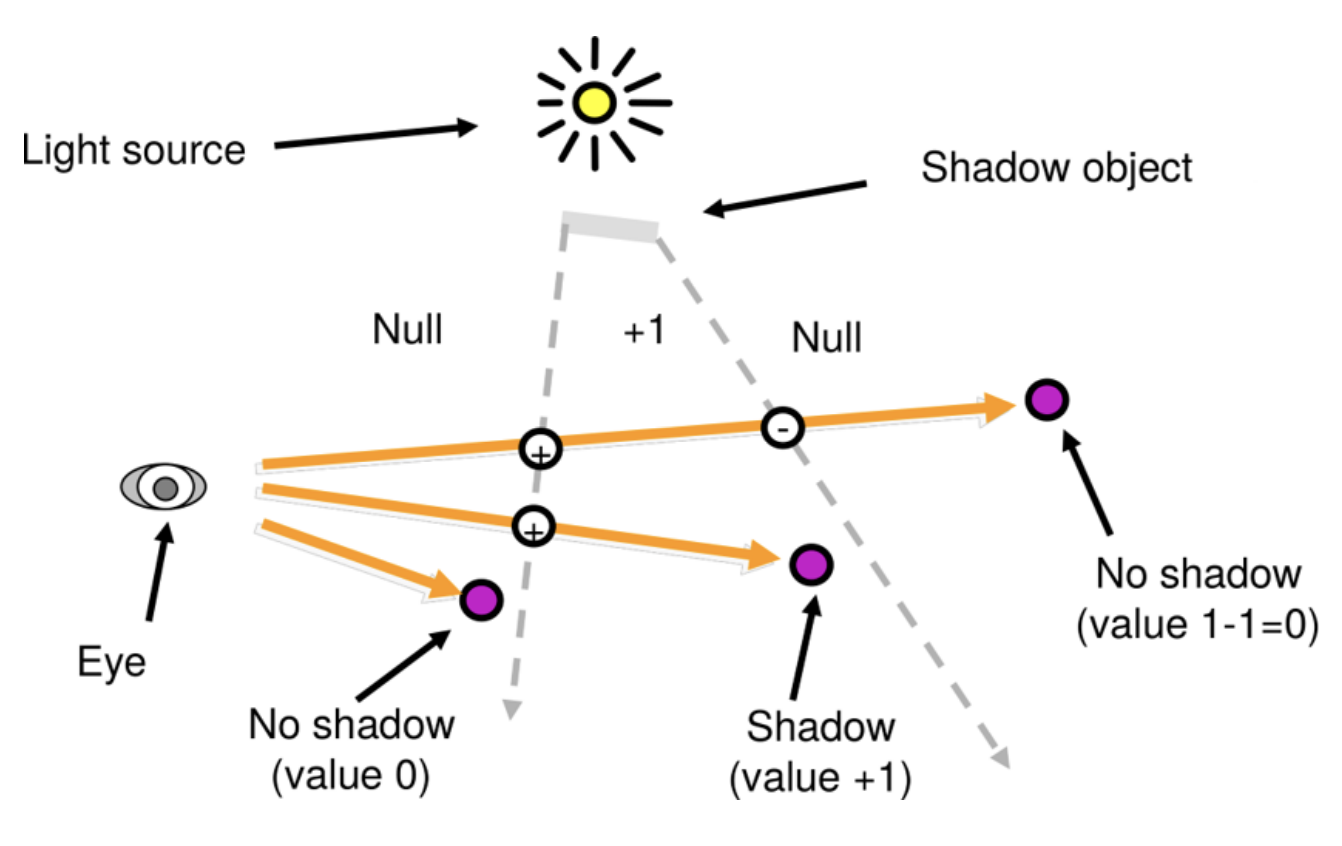

Shadow mapping

Bump mapping

Simulates height variations by adjusting directions of surface normals

Heightmap (elevation map)

A texture provided to the shader that defines, for each pixel on the model’s surface, how far from the polygonal mesh’s surface it should lie.

Steps of bump mapping with a heightmap:

Look up the heightmap for the current surface position (implemented as texture lookup)

Calculate surface normal perturbation at this location from the heightmap

Combine the calculated normal with the original one

Calculate illumination for the new normal using a standard shading
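The lookup and perturbation steps above can be sketched as follows, assuming a flat base surface with normal (0, 0, 1), a heightmap stored as a 2D list, and central differences for the slope. The `strength` parameter and the function name are hypothetical.

```python
def bumped_normal(height, x, y, strength=1.0):
    """Perturb the base normal (0, 0, 1) using the heightmap gradient."""
    rows, cols = len(height), len(height[0])
    # Central differences, clamped at the borders of the heightmap.
    dhdx = (height[y][min(x + 1, cols - 1)] - height[y][max(x - 1, 0)]) / 2.0
    dhdy = (height[min(y + 1, rows - 1)][x] - height[max(y - 1, 0)][x]) / 2.0
    # Tilt the normal against the slope, then renormalise to unit length.
    n = (-strength * dhdx, -strength * dhdy, 1.0)
    length = sum(c * c for c in n) ** 0.5
    return tuple(c / length for c in n)


ramp = [[0, 1, 2],
        [0, 1, 2],
        [0, 1, 2]]
normal = bumped_normal(ramp, 1, 1)
```

The returned normal would then be passed to the usual shading calculation in place of the geometric one; the geometry itself is unchanged, which is why silhouettes are not affected.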

Bump mapping limitation

Silhouettes and shadows remain unaffected

Direct shadow methods

Shadow volumes, shadow maps

Indirect shadow methods

Raytracing, radiosity, photon mapping

Shadow volumes

Determine volumes which are created by shadows. A point is in the shadow if it is in the volume