Perceptual Similarity and distance CGSC433


86 Terms

when speech sounds differ from one another in more than one acoustic dimension

it may be more useful to express confusion rates in terms of perceptual similarity

the perceptual similarity between speech sounds can be

measured

these speech sounds can be put on a

map

sounds closer together are

perceptually more similar

sounds farther apart are

perceptually more distinct

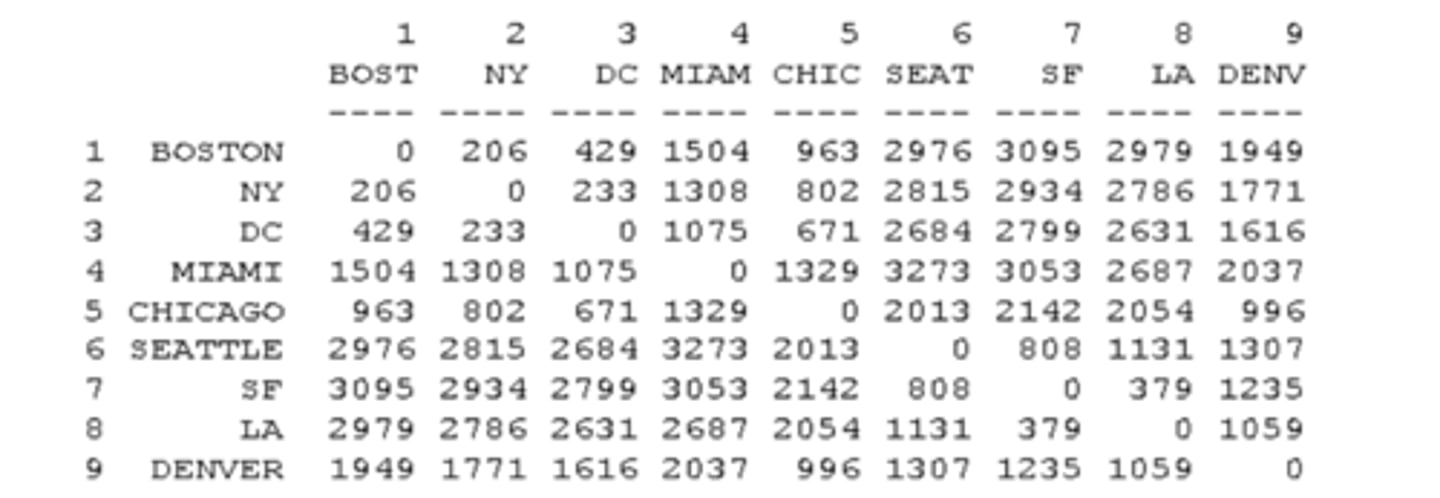

English fricative perception Miller and Nicely 1955

subjects are played [f] and [θ] and asked to identify the sounds as "f" or "th"

![subjects are played [f] and [θ] and asked to identify the sounds as "f" or "th"](https://knowt-user-attachments.s3.amazonaws.com/9dc1c41a-02d8-472e-b5a3-f06080a077b3.jpg)

when investigating speech sounds that differ along multiple (unknown) dimensions

the "confusion scores" give us a way to think about their similarity

when one sound is easily confused with another

we can think of the two sounds as being close together in perceptual space; they are similar

when one sound is not easily confused with another

we can think of the two sounds as being far apart in perceptual space; they are not similar

these ideas can be made precise with statistical techniques called

multidimensional scaling (MDS), which is like triangulation, and clustering analyses

essentially multidimensional scaling allows us to

draw a perceptual map in n dimensions where n is any number

we usually use

two dimensions, so that we can see the map easily and then try to interpret the dimensions

when we make maps, we need to find a way for

all the pairwise distances to fit together consistently in one space; finding that arrangement is what MDS does

represent perceptual differences between

stimuli as ratio/proportion

estimate

perceptual distance from confusability plus Shepard's law

pin down points in the space with

multidimensional scaling (MDS)
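To make the "pin down points in the space" step concrete, here is a minimal sketch of classical (Torgerson) MDS, one common variant of the technique; the course may use a different variant (for example, nonmetric MDS). The function name `classical_mds` and the three-sound distance matrix are hypothetical illustrations, not data from the source.

```python
import numpy as np

def classical_mds(d: np.ndarray, n_dims: int = 2) -> np.ndarray:
    """Place items in n_dims dimensions so that their Euclidean distances
    approximate the distance matrix d (classical/Torgerson MDS)."""
    n = d.shape[0]
    # Double-center the matrix of squared distances
    j = np.eye(n) - np.ones((n, n)) / n
    b = -0.5 * j @ (d ** 2) @ j
    # Keep the eigenvectors with the largest eigenvalues
    eigvals, eigvecs = np.linalg.eigh(b)
    order = np.argsort(eigvals)[::-1][:n_dims]
    vals = np.clip(eigvals[order], 0, None)  # guard against tiny negative values
    return eigvecs[:, order] * np.sqrt(vals)

# Hypothetical perceptual distances among three sounds (not real data)
d = np.array([[0.0, 1.1, 2.0],
              [1.1, 0.0, 1.5],
              [2.0, 1.5, 0.0]])
coords = classical_mds(d)
print(coords)  # one (x, y) point per sound
```

The resulting map can be rotated or reflected without changing any distances, so only the relative positions of the sounds are meaningful; interpreting what each axis means is left to the researcher.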

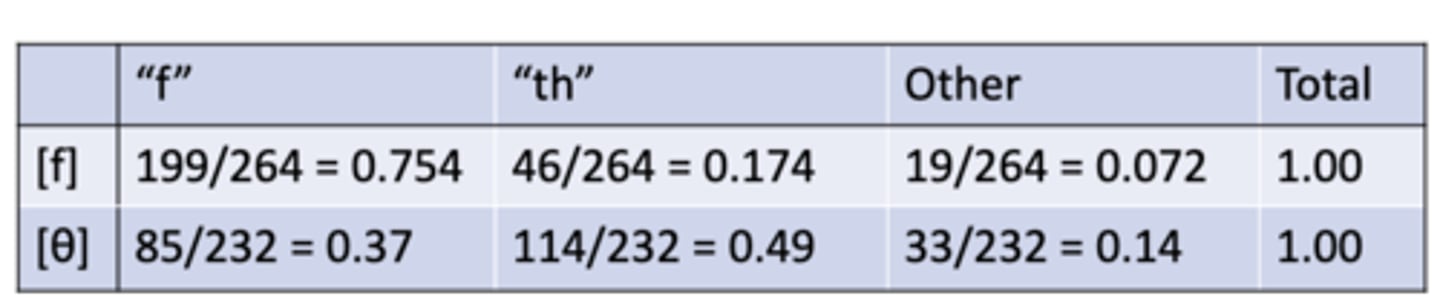

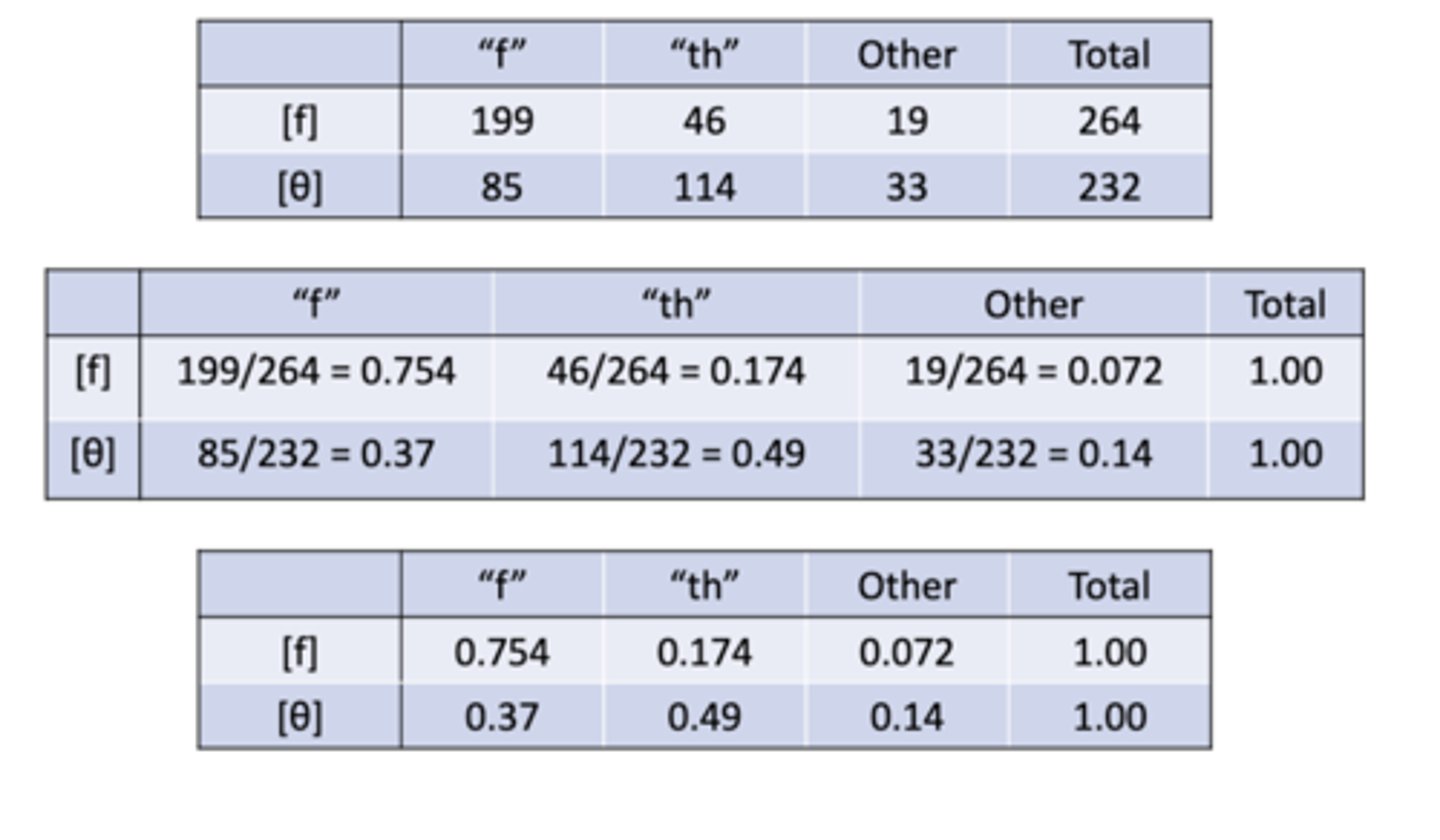

English fricative perception Miller and Nicely data expressed as ratios

calculating proportions from raw data: English fricative perception (Miller and Nicely)

1st step in finding perceptual distance
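As a sketch of this first step, raw identification counts can be turned into proportions by dividing each row (the sound played) by its total. The counts below are invented for illustration and are not Miller and Nicely's data.

```python
import numpy as np

# Hypothetical raw counts: rows = sound played, columns = response given
sounds = ["f", "θ"]
counts = np.array([
    [80, 20],   # [f] played: 80 "f" responses, 20 "th" responses
    [30, 70],   # [θ] played: 30 "f" responses, 70 "th" responses
])

# Divide each row by its total so each row of proportions sums to 1
proportions = counts / counts.sum(axis=1, keepdims=True)
print(proportions)
```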

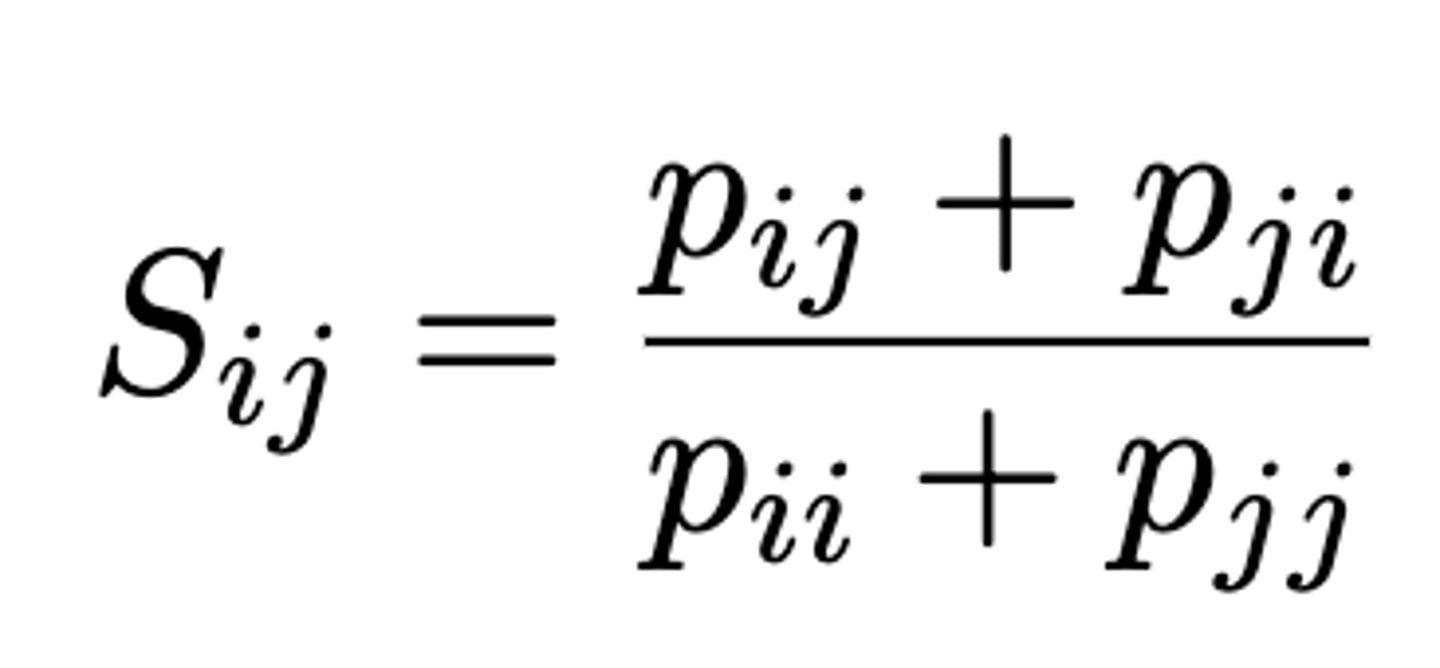

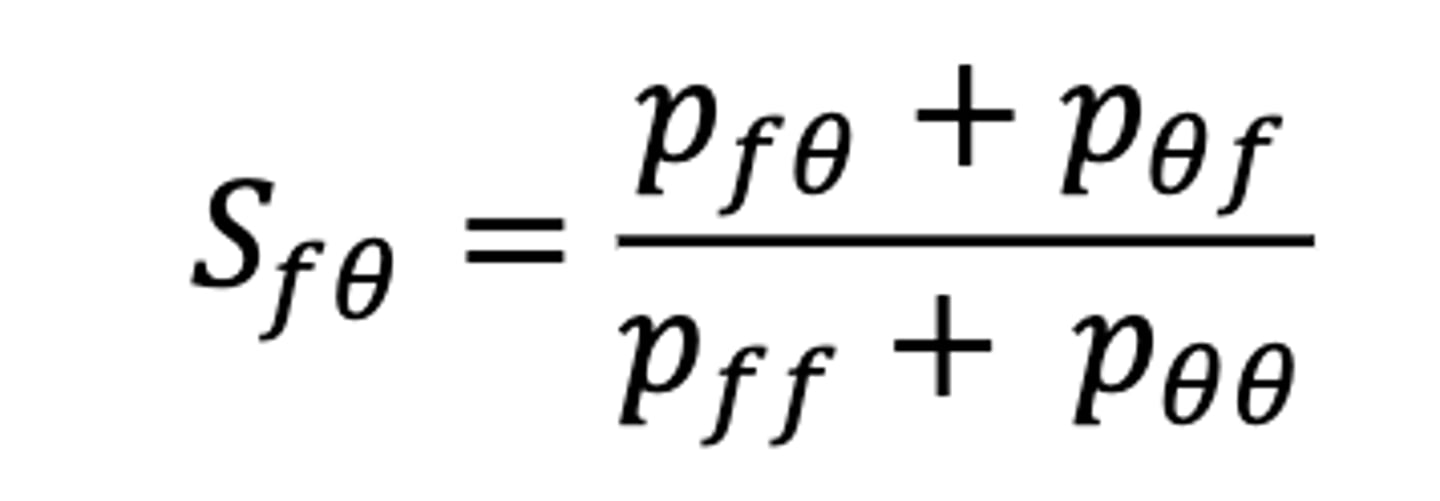

determine similarity of these pairs with this equation

S=

similarity

P=

proportion

i and j are

variables representing two sounds under comparison
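The equation itself appears only as an image. Assuming it is the symmetric similarity measure attributed to Shepard (which fits the definitions of S, p, i and j above and gives S = 1 when two sounds are confused as often as they are correctly identified, and S = 0 when they are never confused), it would read:

```latex
S_{ij} = \frac{p_{ij} + p_{ji}}{p_{ii} + p_{jj}}
```

Here p_ij is the proportion of trials on which sound i was identified as sound j, so the numerator counts confusions in both directions and the denominator counts correct identifications of both sounds.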

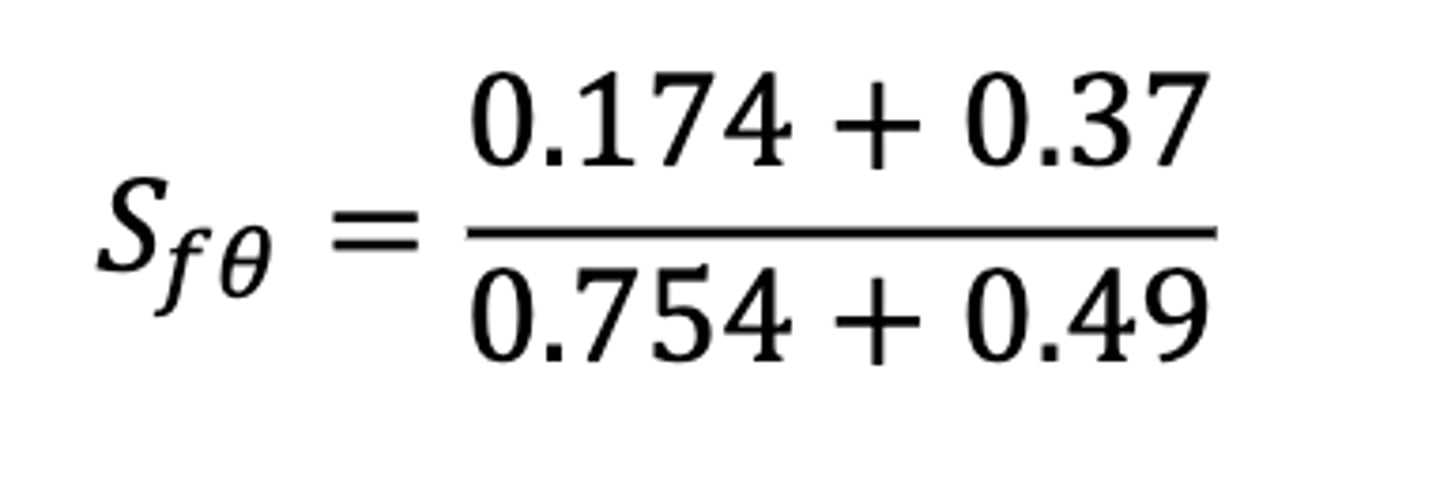

confusability of [f] with [θ]

confusability of [f] and [θ] continued

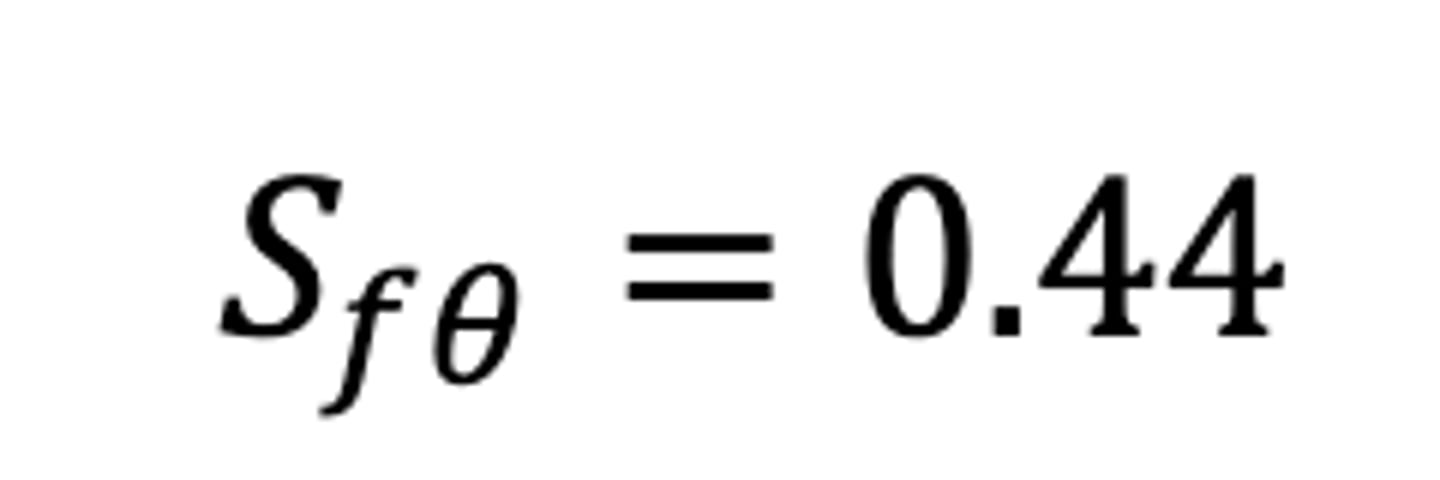

confusability of [f] and [θ] answer

S=1 means

exactly the same

S=0 means

totally different

if two sounds are exactly the same (S = 1)

perceptual distance should be zero (Shepard's law)

if two sounds are completely different (S = 0)

perceptual distance should be very large (Shepard's law)

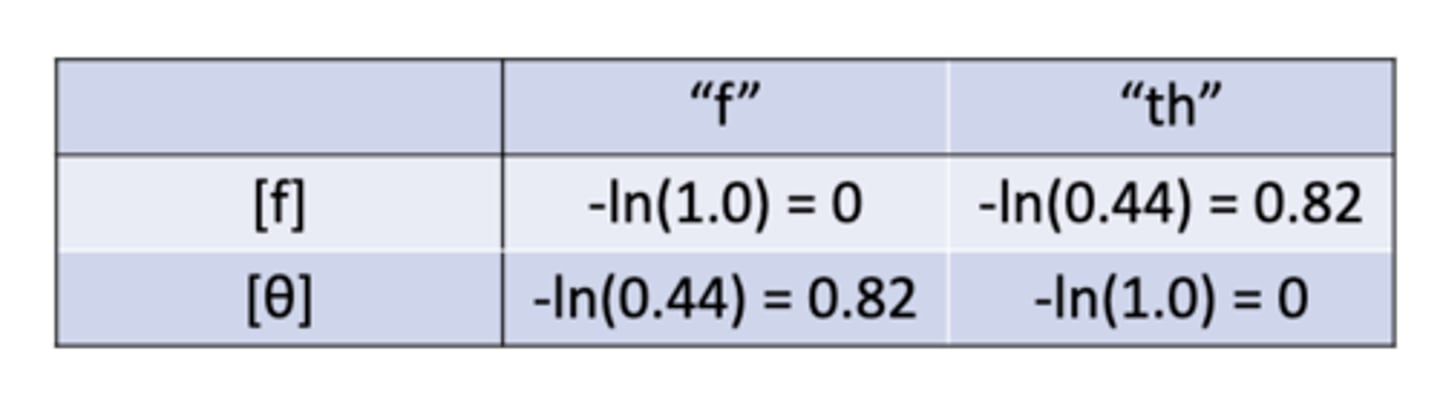

perceptual distance is

negative natural log (ln) of similarity: d=-ln(S)

perceptual distance between [f] and [θ]
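A minimal sketch of the two calculations for a pair like [f] and [θ], assuming the Shepard-style formula S_ij = (p_ij + p_ji) / (p_ii + p_jj) and d = -ln(S). The proportions are made up for illustration, not Miller and Nicely's actual values.

```python
import math

def similarity(p, i, j):
    """Similarity of sounds i and j, assuming S_ij = (p_ij + p_ji) / (p_ii + p_jj).
    p[i][j] is the proportion of trials on which sound i was identified as sound j."""
    return (p[i][j] + p[j][i]) / (p[i][i] + p[j][j])

def distance(s):
    """Perceptual distance d = -ln(S); S = 0 (never confused) gives infinite distance."""
    if s == 0:
        return math.inf
    return -math.log(s)

# Hypothetical proportions: row = sound played, column = response ("f", "th")
p = [[0.8, 0.2],   # [f]
     [0.3, 0.7]]   # [θ]

s = similarity(p, 0, 1)   # (0.2 + 0.3) / (0.8 + 0.7) = 1/3
d = distance(s)           # -ln(1/3) = ln 3, about 1.10
print(f"S = {s:.3f}, d = {d:.3f}")
```

The log transform is what builds in Shepard's law: `distance(1.0)` is 0, and the distance grows without bound as S approaches 0.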

do all humans perceive speech the same way, regardless of their native language?

no, our own language also affects the way we perceive individual speech sounds

speakers of different languages have

different perceptual maps of the same sounds

perceptual vowel space is similar to

acoustic vowel space

pure auditory differences

matter in all languages

perception of speech can be affected by both

auditory ability and our own language experience

phonetic coherence refers to

our ability to fuse incoherent signals into a unified picture; one example is duplex perception

people not only hear the chirp but they also perceive

what is heard in the right ear as [da] or [ga]; the missing piece has been filled in by the listener's perceptual system

people also rely on

visual signals when perceiving speech (example: the McGurk effect)

McGurk effect

an error in perception that occurs when we misperceive sounds because the audio and visual parts of the speech are mismatched.

people may not be sensitive to the McGurk effect for a variety of reasons, including

some people are more sensitive to auditory cues than to visual cues; bilingualism or learning a second language can also change sensory perception

bilinguals experience McGurk effect more than

monolinguals

multisensory speech perception: somatosensation

tactile sensation: in one experiment, people heard a syllable starting with either a voiced or a voiceless stop, and on some trials they also received a puff of air on their hand or neck. Speech was presented in masking noise to reduce overall accuracy. The puff of air made listeners more likely to hear the voiceless (aspirated) stop.

the Ganong effect

an effect in which your lexical knowledge (the list of words you know) affects your speech perception

more people say they hear dog than gog because

dog is a real word and gog isn't

the real word acts as

a perceptual magnet compared to the non-word
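The "perceptual magnet" idea can be pictured as a shift in the category boundary along a sound continuum. This toy logistic model is an illustration only: the 7-step [d]-to-[g] continuum, boundary, slope, and bias values are all hypothetical, and the source does not specify any model.

```python
import math

def p_d_response(step, boundary=4.0, slope=1.5, lexical_bias=0.0):
    """Hypothetical logistic identification function for a 7-step
    [d]-to-[g] continuum (step 1 = clear [d], step 7 = clear [g]).
    Returns the probability of a "d" response. A positive lexical_bias
    moves the category boundary toward the [g] end, so ambiguous steps
    are heard as "d" more often."""
    return 1.0 / (1.0 + math.exp(slope * (step - (boundary + lexical_bias))))

# Same continuum, once neutral and once with a lexical pull toward "dog"
for step in range(1, 8):
    neutral = p_d_response(step)
    with_word = p_d_response(step, lexical_bias=1.0)
    print(f"step {step}: neutral {neutral:.2f}, dog-gog {with_word:.2f}")
```

The clear endpoints barely change; the lexical pull matters most for the ambiguous middle steps, which is where the Ganong effect shows up.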

speech perception is more than

acoustic analysis of the speech signal, matching that analysis with a string of phonemes, putting the phoneme string together, and identifying the word that matches the sound sequence; it also involves top-down processing

lexical knowledge (top-down processing) helps with

phonetic coherence

a sound missing from a speech signal can be

restored by the brain, so that the missing sound is still heard (phonemic restoration)

[s] is perceived even when it's not there due to

knowledge of the word legislation

speech replaced with

3 sine waves that vary in frequency through time
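A minimal sketch of how such a stimulus can be built: each sine wave follows one formant's frequency over time, and the three tones are summed. The formant glides are hypothetical values, not measured speech, and real sine-wave speech usually also follows each formant's amplitude, which this simplified version keeps constant.

```python
import numpy as np

def sine_wave_speech(formant_tracks, sample_rate=16000):
    """Replace speech with one sinusoid per formant track.

    formant_tracks: list of 1-D arrays, each giving a frequency in Hz for
    every output sample (all arrays the same length)."""
    n = len(formant_tracks[0])
    signal = np.zeros(n)
    for freqs in formant_tracks:
        # Integrate instantaneous frequency to get phase, so the tone glides smoothly
        phase = 2 * np.pi * np.cumsum(freqs) / sample_rate
        signal += np.sin(phase)
    # Average the tones so the result stays within [-1, 1]
    return signal / len(formant_tracks)

# Hypothetical formant glides over 0.3 s (illustrative values only)
sr = 16000
n = sr * 3 // 10
tracks = [
    np.linspace(300, 700, n),    # tone following "F1"
    np.linspace(1700, 1200, n),  # tone following "F2"
    np.full(n, 2500.0),          # tone following "F3"
]
y = sine_wave_speech(tracks, sr)
```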

strong evidence that we use knowledge of our own language to

understand speech (top-down processing)

factors affecting speech perception include

auditory ability, phonetic knowledge, our own language experiences, visual/other sensory cues

despite the great variety of different speakers, different conditions, and coarticulation involved in speech (known as the lack of invariance problem)

listeners perceive vowels and consonants as constant categories

two different approaches

motor theory of speech perception (Liberman et al. 1967, Liberman and Mattingly, 1985) and the general auditory approach

motor theory of speech perception basis

the relation of phonemes to their acoustics is context-dependent

lack of invariance problem

variability problem

general audition and learning cannot account for

the uniqueness of the human ability to perceive speech; a speech-specific linguistic module is needed, and the speech motor system could provide it

internal synthesizer

mappings between intended articulations (motor commands) and their acoustic consequences

listeners use the acoustic signal and knowledge of these mappings to

determine intended articulatory gestures of the speaker

speech is understood in terms of

how it is produced

the modified theory hypothesized that

articulatory events recovered by human listeners are neuromotor commands to the articulators, rather than actual articulatory movements or gestures

according to the motor theory of speech perception

human ability to perceive speech sounds is special and can't be attributed to general mechanisms of audition and perceptual learning

perception thought to depend on

a specialized decoder or module that is speech-specific, unique to humans, and innately organized as part of human specialization for language

a major benefit of the motor theory of speech perception

perception and production governed by the same types of units/mechanisms

support for the motor theory of speech perception comes from

the McGurk effect, where visual cues of articulation affect the listener's perception of the sound; duplex perception; and speech shadowing, which was shown to be faster after hearing spoken words

general auditory approaches

acoustic events are the objects of perception

general auditory approaches have

no special speech module: perception uses the same perceptual systems and learning mechanisms that other animals have

general auditory approach is a term coined by

Diehl and colleagues to describe non-motor alternatives

general auditory approaches (GAA) say that context dependence of acoustics is

not a problem: listeners make use of multiple cues and can interpret with acoustic context

support for general auditory approaches

lack of acoustic invariance is not problematic; categorical perception is not speech-specific, since synthetic sounds can be perceived categorically and non-human animals exhibit categorical perception

drawbacks of general auditory approaches

it would be surprising if there were not processes and resources devoted to the perception of speech

research actually supports a special status for speech in auditory perception

evidence suggests that human neonates prefer sounds of speech to non-speech

adults are able to distinguish speech from non-speech based on

visual cues alone

infants 4-6 months can

detect, based on visual cues alone, when a speaker changes from one language to another, though all but those in bilingual households lose that ability by 8 months

perceptual judgements by clinicians and even family members of a client play

a major role in the diagnosis and management of the disorder

it also matters from the client/patient standpoint

what they can perceive when they have particular communication/hearing disorders

ataxic dysarthria

associated with cerebellar disorders; involves an irregular or slow speech rhythm, with pauses and abrupt explosions of sound, and abnormal or excessively equal stress on every syllable

a feature of ataxic dysarthria is

inability to regulate the long-short-long-short patterning of syllable durations in multisyllabic utterances
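One way to quantify this long-short patterning is the normalized pairwise variability index (nPVI), a rhythm metric from the phonetics literature that the source does not mention; it averages the normalized difference between each pair of successive durations. The syllable durations below are invented for illustration.

```python
def npvi(durations):
    """Normalized pairwise variability index of successive durations (e.g., ms).
    0 means every interval has the same duration; larger values mean
    stronger long-short alternation. Needs at least two durations."""
    pairs = zip(durations[:-1], durations[1:])
    terms = [abs(a - b) / ((a + b) / 2) for a, b in pairs]
    return 100 * sum(terms) / len(terms)

# Hypothetical syllable durations in ms
alternating = [200, 100, 200, 100]   # clear long-short patterning
equalized = [150, 150, 150, 150]     # "excessively equal" timing
print(npvi(alternating), npvi(equalized))
```

Under this metric, the equalized timing described for ataxic dysarthria would pull the value toward 0.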

normal listeners will have trouble

identifying word onset locations in the speech of someone with ataxic dysarthria

SLPs' understanding of

the link between neural disease, speech timing problems, and lexical access (an important part of speech perception) is crucial, because it allows more effective intervention on speech timing issues that benefits both speaker and listener

standardized articulation tests evaluate

goodness of consonant production in word-initial, medial, and final positions such as /s/ in sock, messy, and bus

listeners rely mostly on

word-initial consonants for word identification in continuous speech

audiometric testing

identification of the frequencies at which hearing loss occurs

significant hearing loss in the higher frequencies (4,000–8,000 Hz) compared with

the lower frequencies (250–2,000 Hz) will likely affect processing of sounds like /s/, /ʃ/, and /t/
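A small sketch of the comparison described above: average the audiogram thresholds in the low band (250 to 2,000 Hz) and the high band (4,000 to 8,000 Hz). Higher dB HL values mean poorer hearing. The audiogram and the helper name `mean_threshold` are hypothetical.

```python
# Hypothetical audiogram: hearing threshold in dB HL at each test frequency (Hz)
audiogram = {250: 15, 500: 15, 1000: 20, 2000: 25, 4000: 55, 8000: 65}

def mean_threshold(audiogram, low_hz, high_hz):
    """Average threshold across test frequencies between low_hz and high_hz inclusive."""
    values = [db for hz, db in audiogram.items() if low_hz <= hz <= high_hz]
    return sum(values) / len(values)

low = mean_threshold(audiogram, 250, 2000)    # (15 + 15 + 20 + 25) / 4 = 18.75
high = mean_threshold(audiogram, 4000, 8000)  # (55 + 65) / 2 = 60.0
print(f"low-frequency mean: {low} dB HL, high-frequency mean: {high} dB HL")
```

A pattern like this one, with much poorer high-frequency thresholds, is the kind of sloping loss that would put high-frequency sounds such as /s/ at risk.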

knowledge of speech perception is important for

matching the amplification characteristics of hearing aids to hearing loss patterns across the frequency spectrum
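As a rough illustration of matching amplification to a loss pattern, the classic half-gain rule of thumb sets the gain at each frequency to about half the hearing threshold. This rule is not from the source and is only a simplification; modern fittings use prescriptive formulas such as NAL-NL2 or DSL v5.0. The audiogram is hypothetical.

```python
def half_gain_targets(audiogram):
    """Rough gain target per frequency using the half-gain rule of thumb:
    gain (dB) = hearing threshold (dB HL) / 2."""
    return {hz: threshold / 2 for hz, threshold in audiogram.items()}

# Hypothetical sloping high-frequency loss (thresholds in dB HL)
audiogram = {250: 15, 500: 15, 1000: 20, 2000: 25, 4000: 55, 8000: 65}
targets = half_gain_targets(audiogram)
print(targets)
```

Even this crude rule shows why the loss pattern matters: most of the gain goes to the high frequencies where the loss is greatest.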