Operating Systems

33 Terms

What is an OS?

A software program that manages all the hardware and software on a computer

Acting as the bridge between the user and the computer's physical components

Allowing you to interact with the device by managing tasks like file management, memory allocation, and running applications

Coordinating all of the computer's resources (CPU, memory, storage devices, and peripherals such as the keyboard and mouse) to ensure smooth operation

Often providing a visual interface (desktop) for users to interact with the computer and launch applications

Hardware

all the physical electronic and mechanical elements forming part of a computer system

provides basic computing resources (CPU, memory, I/O devices)

Software

the instructions or programs that the hardware needs in order to function

Main Tasks of OS

File management: Creating, deleting, organizing, and accessing files on the computer

Memory management: Allocating memory to different running applications

Process management: Managing the execution of multiple programs simultaneously

Input/Output handling: Managing communication between the computer and external devices like printers and scanners

Security & Access Control: Protects system integrity, user data, and prevents unauthorized access

Multitasking & Scheduling: Manages task execution efficiently using scheduling algorithms

OS Purpose

Manages and allocates resources

Controls the execution of user programs and the operation of I/O devices

Kernel

The core software running the system. It is “the Operating System” essentially

The kernel and your programs run in different modes: kernel mode for the kernel, user mode for your programs

These terms are used to talk about the restrictions and security different types of programs can have

2 common designs of Kernels

Monolithic kernels are single programs that contain all kernel functions. Linux is a well-known example; Windows and macOS are usually described as hybrid kernels, although they also run most core services in kernel mode

Micro-kernels split areas of functionality up into different programs, running a minimal kernel and as much as possible running in user-space instead

How does the Kernel work?

Loads into memory during start-up and remains in memory until the system shuts down

Has unlimited access to the hardware, while user applications have limited access

Intervenes to handle errors (faults) that occur in user mode, for example by terminating the faulting program

Modern operating systems (Windows, Linux, and macOS) use two distinct execution modes: Kernel Mode and User Mode. These modes help ensure system security and stability by separating application-level execution from core system operations

Kernel-Mode Code

Code has unrestricted access to the machine

Do anything, access anything

It can directly access any memory address in the computer

It can access any hardware

Needs to run in this mode so it can control the hardware and provide the process abstraction for user-mode programs

For this to work, the hardware (CPU) needs to have a distinction between privileged and non-privileged modes of operation

In early computers and simpler embedded systems, all the code runs in this mode, completely controlling the hardware with no other programs running. Downside – your program can do anything

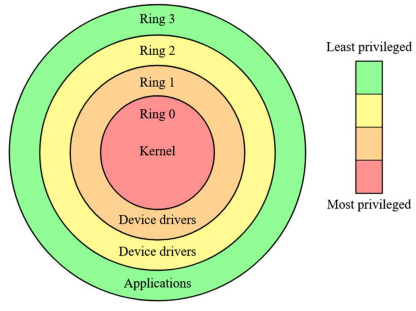

Protection Rings

The ordered protection domains

It is a security architecture used to regulate access to system resources and prevent unauthorized actions

Provide different privilege levels, ensuring that critical system components are safeguarded from user applications

This idea is implemented in different ways on different CPUs

Structure of Protection Rings

The x86 architecture defines a four-ring model, though most modern operating systems (such as Windows and Linux) use only two rings (Ring 0 and Ring 3):

Ring 0 (Kernel Mode): Full access to hardware and system resources (OS kernel and device drivers)

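Ring 1: Intended for system services and privileged drivers; rarely used by modern operating systems

Ring 2: Intended as an additional intermediate level, for example for I/O-related code; rarely used by modern operating systems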

Ring 3 (User Mode): Runs applications with restricted privileges (user programs and software)

Purpose of Protection Rings

Security: Prevents unauthorized code from directly accessing hardware

Stability: Ensures user applications don’t crash the entire system

Efficiency: Controls privilege levels to manage resources effectively

Fault tolerance: Contains failures so that a fault in less-privileged code does not bring down more-privileged components

x86 and ARM CPU Modes

x86 CPUs implement protection rings as part of their real/protected mode distinction:

The CPU starts in real mode and can do anything

Typically, it boots the operating system kernel program, which configures the basics of protection levels and then switches to protected mode

Protected mode hardware-enforces whatever the different protection ring settings are

The kernel will have a ring that allows it to do everything, there will be a ring for “normal” user programs, and there could be more levels of ring in between depending on the operating system design (e.g., a ring for device drivers that can do more than user code but less than the full kernel)

ARM CPUs have three or four privilege levels; ARMv8 and later define four exception levels: EL0 for user programs, EL1 for the kernel, EL2 for a hypervisor, and EL3 for secure firmware

User-Mode Code

Code running in this mode is restricted:

It will only be able to access the memory the kernel has allowed it to

It cannot execute certain privileged opcodes, such as those that change the CPU mode

The concept of a user-space program running under a kernel is abstracted into the idea of a process

In this mode, applications run with limited privileges to prevent direct access to hardware, ensuring system stability
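As a small illustration of user-mode code asking the kernel to do work on its behalf, the sketch below uses Python's `os.pipe`, `os.write`, and `os.read`, which are thin wrappers over system calls. The helper name `roundtrip_through_kernel` is hypothetical and not part of the original notes.

```python
import os

def roundtrip_through_kernel(payload: bytes) -> bytes:
    """Send a small payload through a kernel-managed pipe and read it back."""
    read_fd, write_fd = os.pipe()   # the kernel creates the pipe and returns two file descriptors
    try:
        os.write(write_fd, payload)             # system call: kernel copies bytes into its pipe buffer
        return os.read(read_fd, len(payload))   # system call: kernel copies them back to this process
    finally:
        os.close(read_fd)
        os.close(write_fd)

print(roundtrip_through_kernel(b"hello"))  # b'hello'
```

The program never touches the pipe's buffer directly; it only holds file descriptors and asks the kernel to act on them.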

What happens when User-Mode Code attempts Direct Hardware Access?

The processor will detect the unauthorized operation and generate a trap or fault (e.g., a general protection fault or page fault, which the OS may report to the program as a segmentation fault)

The operating system’s kernel will intervene and either terminate the offending process or handle the situation gracefully (e.g., by returning an error to the application)
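The sequence above can be sketched as a toy simulation. Everything here is hypothetical (the instruction names, `cpu_execute`, `kernel_run`); real CPUs enforce this in hardware, not in software checks like these.

```python
class ProtectionFault(Exception):
    """Raised by the simulated CPU when unprivileged code runs a privileged instruction."""

# Illustrative instruction names only; not a real instruction set listing.
PRIVILEGED = {"out", "hlt", "load_page_table"}

def cpu_execute(instruction: str, ring: int) -> str:
    """Simulated CPU: only ring 0 may run privileged instructions."""
    if instruction in PRIVILEGED and ring != 0:
        raise ProtectionFault(f"{instruction!r} attempted in ring {ring}")
    return f"executed {instruction}"

def kernel_run(process_name: str, instructions: list, ring: int = 3) -> str:
    """Simulated kernel: run a process and terminate it if the CPU raises a fault."""
    for instruction in instructions:
        try:
            cpu_execute(instruction, ring)
        except ProtectionFault as fault:
            return f"{process_name} terminated: {fault}"
    return f"{process_name} finished normally"

print(kernel_run("app", ["add", "out"]))            # app terminated: 'out' attempted in ring 3
print(kernel_run("driver", ["add", "out"], ring=0)) # driver finished normally
```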

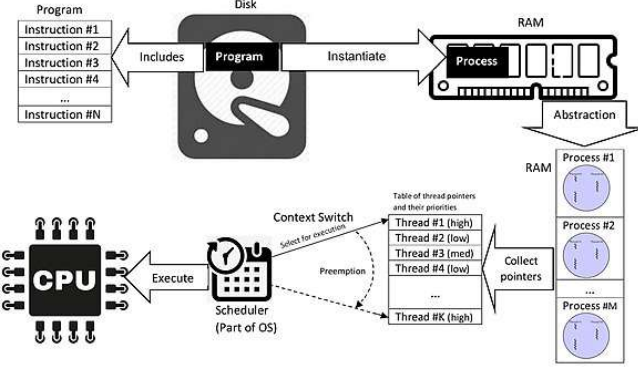

Processes

A program running on the machine, managed by the OS

The OS will keep track of the following for a process:

The code it is executing

Memory that belongs to it, and perhaps shared access to other memory

Any system resources it has - open files, network sockets, locks, etc.

Security permissions – what can the program access, which user is running it

The hardware context

Process/thread/task – caution: terminology differs across operating systems
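One way to picture the bookkeeping listed above is as a per-process record. The sketch below is a simplified, hypothetical structure (the class name `ProcessControlBlock` and all field names are assumptions); real kernels store far more.

```python
import os
from dataclasses import dataclass, field

@dataclass
class ProcessControlBlock:
    """Hypothetical per-process record kept by an OS."""
    pid: int
    owner: str                                          # which user is running it
    program_counter: int = 0                            # where in its code it is executing
    registers: dict = field(default_factory=dict)       # the hardware context
    memory_regions: list = field(default_factory=list)  # (start, end) address ranges it owns
    open_files: list = field(default_factory=list)      # file descriptors and similar resources
    state: str = "ready"

# Describe the current Python process using its real process ID.
pcb = ProcessControlBlock(pid=os.getpid(), owner="alice")
pcb.open_files.extend([0, 1, 2])              # stdin, stdout, stderr
pcb.memory_regions.append((0x1000, 0x2000))   # made-up address range
print(pcb.state, pcb.open_files)              # ready [0, 1, 2]
```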

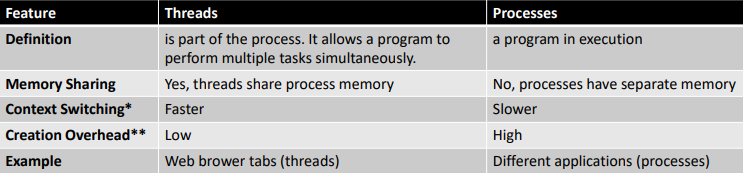

Threads

A process can have one or multiple. Each one shares the same resources as its parent process but executes independently

Lightweight: use fewer system resources compared to full processes

Shared Memory: within the same process share memory and global variables

Fast Context Switching: switch faster than processes, since switching within the same process does not require changing the address space

Parallel Execution: enable multitasking and parallelism within a process
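A minimal sketch of the shared-memory point, using Python's standard `threading` module. The names `counter`, `add_many`, and `run_workers` are illustrative. The lock is needed precisely because every thread sees the same global variable.

```python
import threading

counter = 0                       # global variable shared by every thread in this process
counter_lock = threading.Lock()   # prevents lost updates to the shared counter

def add_many(times: int) -> None:
    global counter
    for _ in range(times):
        with counter_lock:
            counter += 1

def run_workers(num_threads: int = 4, times: int = 10_000) -> int:
    """Start several threads that all increment the same counter, then wait for them."""
    global counter
    counter = 0
    threads = [threading.Thread(target=add_many, args=(times,)) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter

print(run_workers())  # 40000
```

In standard CPython builds the global interpreter lock means these threads interleave rather than execute Python bytecode in parallel, so this shows sharing rather than a speed-up.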

Threads vs Processes

The process (parent) is the main program running

Inside the process, multiple threads (T1, T2, T3) run independently but share the same memory and resources

This allows for faster execution and better efficiency compared to creating multiple processes

Threads improve performance by enabling parallel execution of tasks within a process. They are widely used in web servers, operating systems, and modern applications to make programs more efficient and responsive

Context Switching

the process of storing and restoring the state of a thread or process so that execution can resume from the same point later. It allows multiple tasks to share a CPU efficiently
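The store-and-restore idea can be sketched with a toy CPU that has two registers. `Task`, `SimulatedCPU`, and the register names are hypothetical; a real context switch saves much more state and is done by the kernel with hardware support.

```python
from dataclasses import dataclass, field

@dataclass
class Task:
    name: str
    # Saved register values for when this task is not running.
    saved_context: dict = field(default_factory=lambda: {"pc": 0, "acc": 0})

class SimulatedCPU:
    def __init__(self):
        self.registers = {"pc": 0, "acc": 0}
        self.current = None

    def context_switch(self, next_task: Task) -> None:
        if self.current is not None:
            self.current.saved_context = dict(self.registers)  # store the outgoing task's state
        self.registers = dict(next_task.saved_context)          # restore the incoming task's state
        self.current = next_task

    def step(self, increment: int) -> None:
        """Pretend to execute one instruction that adds to the accumulator."""
        self.registers["acc"] += increment
        self.registers["pc"] += 1

cpu = SimulatedCPU()
a, b = Task("A"), Task("B")
cpu.context_switch(a)
cpu.step(5)
cpu.step(5)             # A now has pc=2, acc=10
cpu.context_switch(b)
cpu.step(1)             # B now has pc=1, acc=1
cpu.context_switch(a)   # A resumes exactly where it stopped
print(cpu.registers)    # {'pc': 2, 'acc': 10}
```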

Creation Overhead

Refers to the time and computational cost required to create a new thread or new process

Threads generally have lower overhead than processes because they share memory and resources, whereas processes require independent memory and resource allocation

Program vs Process vs Thread

Scheduling

The method by which the OS decides which processes or threads will be assigned to the CPU for execution

It ensures efficient CPU utilization and fair process execution by managing multiple processes and threads in a multitasking environment

Multitasking

allows multiple processes or tasks to run simultaneously by sharing CPU time

improves system efficiency by quickly switching between tasks, making it appear as though multiple programs are running at the same time

this may be actual parallel running of processes (e.g., multiple CPUs or cores)

and/or time slicing between processes - quickly and frequently switching between which process is running to give the impression they are all running simultaneously

Types of Multitasking

Pre-emptive Multitasking: OS decides how long each task gets CPU time and forcefully switches between tasks. E.g. Windows, Linux, macOS

Cooperative Multitasking: Each task voluntarily gives up control of the CPU, requiring well-behaved programs. E.g. Older systems like Windows 3.x and classic Mac OS

Types of Time Slicing

Cooperative – the process indicates when it is ok to stop for a moment

Pre-emptive – the OS decides when to switch and it’s done without the process’s involvement
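The cooperative style can be sketched with Python generators, where `yield` plays the role of the task saying it is OK to stop. The function names `task` and `run_cooperative` are illustrative, not taken from any real OS.

```python
from collections import deque

def task(name: str, steps: int, log: list):
    """A cooperative task: does one unit of work, then voluntarily yields the CPU."""
    for i in range(1, steps + 1):
        log.append(f"{name}{i}")
        yield   # the task indicates it is OK to switch away here

def run_cooperative(tasks) -> None:
    """Run tasks in turn; a switch happens only when a task yields."""
    ready = deque(tasks)
    while ready:
        current = ready.popleft()
        try:
            next(current)           # run until the task's next yield
            ready.append(current)   # may have more work: back of the queue
        except StopIteration:
            pass                    # task finished, drop it

log = []
run_cooperative([task("A", 2, log), task("B", 3, log)])
print(log)  # ['A1', 'B1', 'A2', 'B2', 'B3']
```

A task that never reached a `yield` would keep the CPU indefinitely, which is the weakness pre-emptive switching removes.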

Scheduling Algorithms

First-Come, First-Served (FCFS) – Non-pre-emptive, runs in order of arrival

Shortest Job Next (SJN), also called Shortest Job First (SJF) – Runs the process with the shortest expected run time first

Round Robin (RR) – Each process gets a fixed time slice (time quantum)

Priority Scheduling – Higher priority processes execute first

Multilevel Queue Scheduling – Divides processes into separate queues (for example by priority or type), each of which can use its own scheduling algorithm
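To compare some of the algorithms above, the sketch below computes the average waiting time under FCFS and non-pre-emptive SJF, plus a simple priority ordering. It assumes all jobs arrive at time 0 and that a lower number means higher priority; the function names and burst times are illustrative.

```python
def waiting_times(bursts_in_run_order):
    """Waiting time of each job when jobs run back to back in the given order."""
    waits, elapsed = [], 0
    for burst in bursts_in_run_order:
        waits.append(elapsed)   # a job waits for everything that ran before it
        elapsed += burst
    return waits

def average(values):
    return sum(values) / len(values)

def fcfs_average_wait(bursts):
    return average(waiting_times(bursts))           # run in arrival order

def sjf_average_wait(bursts):
    return average(waiting_times(sorted(bursts)))   # run shortest job first

def priority_order(jobs):
    """jobs: list of (name, priority); lower number runs first (an assumption)."""
    return [name for name, _ in sorted(jobs, key=lambda job: job[1])]

bursts = [24, 3, 3]
print(fcfs_average_wait(bursts))  # 17.0
print(sjf_average_wait(bursts))   # 3.0
print(priority_order([("editor", 2), ("backup", 5), ("audio", 1)]))  # ['audio', 'editor', 'backup']
```

With one long job at the front, FCFS makes the short jobs wait behind it, while SJF runs them first and lowers the average.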

Types of Scheduling

Long-Term Scheduling (Job Scheduling)

Short-Term Scheduling (CPU Scheduling)

Medium-Term Scheduling

I/O Scheduling

Long-Term Scheduling (Job Scheduling)

Decides which processes will be admitted into the system for processing

Controls degree of multiprogramming (number of concurrent processes)

Short-Term Scheduling (CPU Scheduling)

Decides which process gets the CPU next

Runs frequently (every few milliseconds)

Uses scheduling algorithms like FCFS, Round Robin, SJF, Priority Scheduling
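As an example of a short-term scheduler, here is a minimal Round Robin simulation. It assumes all processes arrive at time 0 and ignores context-switch cost; the function name `round_robin` and the process names are illustrative.

```python
from collections import deque

def round_robin(bursts: dict, quantum: int) -> dict:
    """Return the completion time of each process under Round Robin."""
    remaining = dict(bursts)
    ready = deque(bursts)       # process names in arrival order
    clock, finished = 0, {}
    while ready:
        name = ready.popleft()
        run = min(quantum, remaining[name])   # run for one time slice or until done
        clock += run
        remaining[name] -= run
        if remaining[name] == 0:
            finished[name] = clock
        else:
            ready.append(name)  # pre-empted: back of the ready queue
    return finished

print(round_robin({"P1": 5, "P2": 3, "P3": 1}, quantum=2))
# {'P3': 5, 'P2': 8, 'P1': 9}
```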

Medium-Term Scheduling

Temporarily removes processes from memory (swapping) to improve performance

I/O Scheduling

Manages I/O requests and determines the order of disk accesses

Disk Scheduling

Needs a scheduling algorithm to know what I/O jobs to dispatch to disks in what order

Different devices will have different needs/algorithms
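To see why dispatch order matters for a disk with a moving head, the sketch below compares total head movement for first-come, first-served dispatch against shortest-seek-time-first (SSTF). SSTF is brought in here as an illustrative algorithm; the notes do not name a specific disk algorithm, and the cylinder numbers are example values.

```python
def fcfs_head_movement(start: int, requests: list) -> int:
    """Total cylinders travelled when requests are served in the given order."""
    total, position = 0, start
    for cylinder in requests:
        total += abs(cylinder - position)
        position = cylinder
    return total

def sstf_order(start: int, requests: list) -> list:
    """Reorder requests so the head always moves to the nearest pending cylinder."""
    pending, position, order = list(requests), start, []
    while pending:
        nearest = min(pending, key=lambda c: abs(c - position))
        pending.remove(nearest)
        order.append(nearest)
        position = nearest
    return order

requests = [98, 183, 37, 122, 14, 124, 65, 67]
print(fcfs_head_movement(53, requests))                   # 640
print(fcfs_head_movement(53, sstf_order(53, requests)))   # 236
```

Devices without a moving head, such as SSDs, do not pay this seek cost, which is one reason different devices call for different algorithms.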

Why is Scheduling Important?

Ensures fairness: No process is left waiting indefinitely

Maximises CPU utilisation: Prevents CPU idleness

Minimises response time: Provides quick interaction in time-sharing systems

Balances system load: Distributes work efficiently among processors