DAT 255 Deep learning - Lecture 16 Transformers for natural language processing

Flashcards about Transformers for NLP

4 Terms

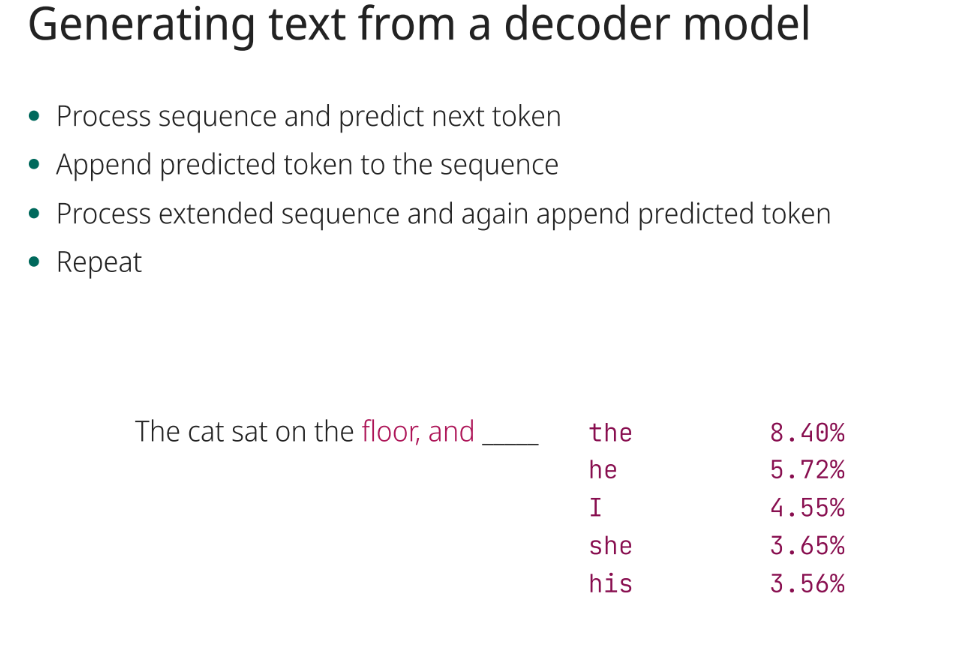

How is text generated from a decoder model?

Process the sequence and predict the next token. Append the predicted token to the sequence. Process the extended sequence and append the next predicted token. Repeat until the model predicts the end-of-sequence token.
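
The loop described in this card can be sketched in a few lines. This is only an illustration under stated assumptions: `generate`, `toy_model`, and the `EOS` token id are hypothetical names, and `toy_model` is a stand-in for a real decoder (it simply counts down from the last token). The `max_new_tokens` cap is an added safety limit, not part of the card.

```python
from typing import Callable, List

EOS = 0  # hypothetical end-of-sequence token id


def generate(next_token: Callable[[List[int]], int],
             prompt: List[int],
             max_new_tokens: int = 20) -> List[int]:
    """Autoregressive generation: predict, append, repeat until EOS."""
    seq = list(prompt)
    for _ in range(max_new_tokens):
        tok = next_token(seq)  # process the whole sequence, predict the next token
        seq.append(tok)        # extend the sequence with the prediction
        if tok == EOS:         # stop once end-of-sequence is predicted
            break
    return seq


def toy_model(seq: List[int]) -> int:
    """Stand-in for a decoder: predicts the last token minus one, floored at EOS."""
    return max(seq[-1] - 1, EOS)


print(generate(toy_model, [3]))  # [3, 2, 1, 0]
```

In a real decoder, `next_token` would run the model on the full sequence and pick a token from the predicted distribution, for example by taking the most likely one.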

How does Beam search work and what are its limitations?

Beam search keeps track of several candidate output sequences (branches) in parallel and selects the one with the highest overall probability. Its limitations are that it is computationally expensive and can get stuck in repetitive loops.
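
A minimal sketch of beam search over a toy next-token table, to make the branching concrete. The names `beam_search`, `toy_log_probs`, `TABLE`, and the `<bos>`/`<eos>` tokens are all hypothetical, and the prompt is assumed to be non-empty. The toy probabilities are chosen so that the most likely first token ("a") does not lead to the most likely full sequence.

```python
import math
from typing import Callable, Dict, List, Tuple

EOS = "<eos>"  # hypothetical end-of-sequence token

# Toy next-token distributions, keyed by the last token of the sequence.
TABLE: Dict[str, Dict[str, float]] = {
    "<bos>": {"a": 0.6, "b": 0.4},
    "a": {"x": 0.5, "y": 0.5},
    "b": {"z": 0.9, "w": 0.1},
    "x": {EOS: 1.0}, "y": {EOS: 1.0}, "z": {EOS: 1.0}, "w": {EOS: 1.0},
}


def toy_log_probs(seq: List[str]) -> Dict[str, float]:
    """Stand-in for a decoder: log-probabilities of each possible next token."""
    return {tok: math.log(p) for tok, p in TABLE[seq[-1]].items()}


def beam_search(log_probs: Callable[[List[str]], Dict[str, float]],
                prompt: List[str],
                beam_width: int = 2,
                max_steps: int = 10) -> Tuple[float, List[str]]:
    """Return (log-probability, sequence) of the best sequence found."""
    beams = [(0.0, list(prompt))]
    for _ in range(max_steps):
        candidates = []
        for score, seq in beams:
            if seq[-1] == EOS:  # finished branches are carried over unchanged
                candidates.append((score, seq))
                continue
            for tok, lp in log_probs(seq).items():
                candidates.append((score + lp, seq + [tok]))
        # Keep only the beam_width highest-scoring branches.
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = candidates[:beam_width]
        if all(seq[-1] == EOS for _, seq in beams):
            break
    return beams[0]


score, best = beam_search(toy_log_probs, ["<bos>"], beam_width=2)
print(best, round(math.exp(score), 2))
```

With `beam_width=1` this reduces to greedy decoding, which commits to "a" and ends with probability 0.3. With two beams, the "b" branch survives the first step and wins with probability 0.36. The cost grows with the beam width, because every surviving branch is expanded at every step.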

How does Top-K sampling work?

Keep only the K tokens with the highest scores, renormalize their probabilities, and sample the next token from that reduced set.
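
As an illustration, Top-K sampling over a list of raw scores (logits) might look like the sketch below. `top_k_sample` is a hypothetical helper written with the standard library only. Token ids are assumed to be list indices.

```python
import math
import random
from typing import List, Optional


def top_k_sample(logits: List[float], k: int,
                 rng: Optional[random.Random] = None) -> int:
    """Sample a token id from the k highest-scoring entries of `logits`."""
    if not 1 <= k <= len(logits):
        raise ValueError("k must be between 1 and len(logits)")
    rng = rng or random.Random()
    # Indices of the k largest logits.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax restricted to the top-k entries (shifted by the max for stability).
    m = max(logits[i] for i in top)
    weights = [math.exp(logits[i] - m) for i in top]
    # random.choices normalizes the weights internally.
    return rng.choices(top, weights=weights, k=1)[0]


logits = [2.0, 1.0, 0.5, -1.0]
print(top_k_sample(logits, k=2, rng=random.Random(0)))  # always token 0 or 1
```

Setting `k=1` recovers greedy decoding, and setting `k` to the vocabulary size samples from the full distribution.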

How does Adjusted Softmax sampling work?

Add a parameter T, called the temperature, to the softmax function by dividing the scores by T before normalizing. A low T concentrates the sampling on the best tokens. A high T spreads it across all tokens, making the output more random.
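
A short sketch of the temperature-adjusted softmax, assuming the common formulation p_i = exp(z_i / T) / sum_j exp(z_j / T), where z are the raw scores. The function name `softmax_with_temperature` is hypothetical.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], T: float = 1.0) -> List[float]:
    """Softmax of logits / T; T must be positive."""
    if T <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / T for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.0]
cold = softmax_with_temperature(logits, T=0.1)   # nearly all mass on the best token
hot = softmax_with_temperature(logits, T=10.0)   # close to uniform
print([round(p, 3) for p in cold])
print([round(p, 3) for p in hot])
```

Sampling from `cold` almost always returns the top token, while sampling from `hot` picks each of the three tokens roughly a third of the time.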