6 DL: Neural Networks

You can explain the difference between AI, ML, and DL (AI > ML > DL)

AI is the umbrella term.

ML is a subfield of AI that learns from data.

DL is a subset of ML that uses deep neural networks to learn complex patterns.

You know what latent means in DL (Latent)

Something hidden

Present, but not directly observable

You understand what latent space means in DL (Latent Space)

A compressed representation of the most important features in the data; not directly visible, but meaningful to the model.

You know what encoding and decoding mean (Encoding/Decoding)

Encoding = compressing data into latent features.

Decoding = generating usable output again from the latent features.

You understand how artificial neurons work (Artificial Neuron)

An artificial neuron receives inputs, weights them, sums them, and uses an activation function to decide whether (and how strongly) it "fires".
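The description above can be sketched in a few lines of plain Python. This is a minimal illustration, not a library implementation: the function names `sigmoid` and `neuron` and the example values are assumptions chosen for this card, and the bias term is borrowed from the hidden-layer description further down.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias, activation=sigmoid):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)


# Hypothetical example values: 1.0 * 0.5 + 2.0 * (-0.25) + 0.0 = 0.0
output = neuron(inputs=[1.0, 2.0], weights=[0.5, -0.25], bias=0.0)
print(output)  # sigmoid(0.0) = 0.5
```

Swapping `activation` for another function (for example ReLU) changes how the neuron responds without changing the weighted-sum step.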

You can describe the architecture of a neural network (Neural Network Layers)

Input Layer

Takes the raw input data (e.g., pixel values, features).

One neuron per input feature.

No computation here — just forwards the data.

Hidden Layers

Perform most of the learning.

Each neuron:

Computes a weighted sum of its inputs

Adds a bias

Applies an activation function (e.g., ReLU, Sigmoid)

There can be one or many hidden layers (hence "deep" learning).

In general, the more layers, the more complex the patterns the network can learn.

Output Layer

Produces the final prediction:

Regression → 1 neuron (e.g., predicted price)

Binary classification → 1 neuron with sigmoid

Multi-class classification → N neurons with softmax
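To make the three layer types concrete, here is a small sketch in plain Python of one input passing through a hidden layer and then through each kind of output layer listed above. All weights, biases, and helper names (`dense`, `relu`, `softmax`) are hypothetical values picked for illustration; a real network would learn the weights during training.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def softmax(scores):
    """Turn N raw scores into N probabilities that sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dense(inputs, weights, biases):
    """Weighted sum plus bias for every neuron; weights[j] belongs to neuron j."""
    return [sum(x * w for x, w in zip(inputs, row)) + b
            for row, b in zip(weights, biases)]


# Input layer: one value per feature, no computation.
features = [0.5, -1.0]

# Hidden layer with 3 neurons and ReLU activation (hypothetical weights).
hidden = [relu(z) for z in dense(features,
                                 [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
                                 [0.0, 0.0, 0.0])]

# Output layer variants.
regression_out = dense(hidden, [[2.0, 1.0, 1.0]], [0.1])[0]       # 1 neuron, no activation
binary_out = sigmoid(dense(hidden, [[2.0, 1.0, 1.0]], [0.1])[0])  # 1 neuron with sigmoid
multi_out = softmax(dense(hidden,
                          [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]],
                          [0.0, 0.0, 0.0]))                        # N = 3 neurons with softmax

print(regression_out, binary_out, multi_out)
```

The regression output is an unbounded number, the sigmoid output can be read as the probability of the positive class, and the softmax outputs can be read as one probability per class.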

You know the difference between theoretical and practical models (Universal Approximator)

Theoretical:

A single (very wide) hidden layer with non-linear activations is enough to approximate any continuous function to arbitrary accuracy.

Practical:

Computationally very hard

Does not generalize well to unseen data

You understand training and inference in the life cycle of a neural network (Training vs Inference)

Training = adjusting the weights using data.

Inference = making predictions with a trained model.

You know the learning cycle of a neural network (Learning Cycle)

1. Forward pass

Run a batch of training samples through the network.

2. Calculate the error (or loss)

In supervised learning we need an error to guide the search for good weight settings.

3. Use the optimizer

Figure out how to adjust the weights a little for the last layer.

4. Go backwards (one layer at a time)

Figure out how to adjust the weights, go back one layer, and repeat all the way back to the first layer.
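The cycle can be sketched with the smallest possible model: one linear neuron trained with plain gradient descent on mean squared error. The data, learning rate, and number of epochs below are hypothetical choices for illustration. With only one layer there is nothing to walk back through, so step 4 collapses into a single gradient computation.

```python
# Hypothetical training data that follows y = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

w, b = 0.0, 0.0        # weights start at an arbitrary value
learning_rate = 0.05   # assumed step size
losses = []

for epoch in range(500):
    # 1. Forward pass: run the samples through the model.
    predictions = [w * x + b for x, _ in data]

    # 2. Calculate the error (mean squared error).
    errors = [p - y for p, (_, y) in zip(predictions, data)]
    loss = sum(e * e for e in errors) / len(data)
    losses.append(loss)

    # 3./4. Work out how each weight influenced the loss (the gradients)...
    grad_w = 2.0 * sum(e * x for e, (x, _) in zip(errors, data)) / len(data)
    grad_b = 2.0 * sum(errors) / len(data)

    # ...and adjust the weights a little in the opposite direction.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(round(w, 3), round(b, 3))
```

After enough repetitions the weights approach the values that generated the data (here 2 and 1), and the recorded loss shrinks from epoch to epoch.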

You can explain the forward and backward pass (Forward & Backpropagation)

Forward pass:

Send the data through the network to get an output.

Calculate the loss.

Backward pass:

Propagate the error back through the network to adjust the weights (backpropagation).
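As an illustration of how the error travels backwards, the sketch below applies the chain rule by hand to a tiny two-layer network (one `tanh` hidden neuron, one linear output neuron, squared-error loss) and compares the result with a numerical estimate. The network shape, the values, and all function names are assumptions made for this example.

```python
import math


def forward(w1, w2, x):
    """Forward pass: input -> hidden neuron (tanh) -> linear output neuron."""
    h = math.tanh(w1 * x)
    y = w2 * h
    return h, y


def loss_fn(w1, w2, x, target):
    _, y = forward(w1, w2, x)
    return (y - target) ** 2


def backward(w1, w2, x, target):
    """Backward pass: apply the chain rule from the loss back to each weight."""
    h, y = forward(w1, w2, x)
    dloss_dy = 2.0 * (y - target)            # how the loss changes with the output
    grad_w2 = dloss_dy * h                   # last layer first
    dloss_dh = dloss_dy * w2                 # pass the error back one layer
    grad_w1 = dloss_dh * (1.0 - h * h) * x   # derivative of tanh is 1 - tanh^2
    return grad_w1, grad_w2


# Hypothetical values.
w1, w2, x, target = 0.5, -0.3, 1.5, 1.0
grad_w1, grad_w2 = backward(w1, w2, x, target)

# Numerical check: nudge each weight slightly and watch the loss change.
eps = 1e-6
numeric_w1 = (loss_fn(w1 + eps, w2, x, target) - loss_fn(w1 - eps, w2, x, target)) / (2 * eps)
numeric_w2 = (loss_fn(w1, w2 + eps, x, target) - loss_fn(w1, w2 - eps, x, target)) / (2 * eps)

print(grad_w1, numeric_w1)
print(grad_w2, numeric_w2)
```

The analytic and numerical gradients should agree to several decimal places, which is a common sanity check for a hand-written backward pass.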

You know what an epoch is (Epoch)

An epoch is one complete pass of the entire training dataset through the model.

You know how training is performed in batches (Batches)

We usually cannot pass the entire dataset through the neural network at once, so we divide the dataset into a number of batches.

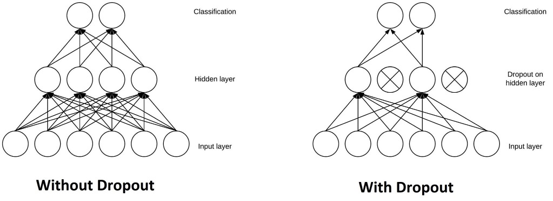
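A minimal sketch of how a dataset is split into batches, and how that relates to an epoch. The helper `make_batches`, the toy dataset, and the batch size are hypothetical; frameworks normally handle this step for you.

```python
import math


def make_batches(samples, batch_size):
    """Split the samples into consecutive batches; the last one may be smaller."""
    return [samples[i:i + batch_size] for i in range(0, len(samples), batch_size)]


dataset = list(range(10))  # 10 hypothetical training samples
batch_size = 4

batches = make_batches(dataset, batch_size)
iterations_per_epoch = math.ceil(len(dataset) / batch_size)

print(batches)               # [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9]]
print(iterations_per_epoch)  # 3
```

One epoch here consists of three weight updates, one per batch, after which every sample has been seen once.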

You understand the use of dropout as regularization (Dropout)

Dropout temporarily switches off random neurons during training to prevent overfitting.
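The idea can be sketched in plain Python as follows. This assumes the common "inverted dropout" variant, in which the surviving activations are scaled up by 1 / (1 - rate) during training so that nothing needs to change at inference time; the function name and values are hypothetical.

```python
import random


def dropout(activations, rate, training, rng=random):
    """Zero each activation with probability `rate` during training only.

    Assumption: inverted dropout, so kept values are scaled by 1 / (1 - rate).
    """
    if not training or rate == 0.0:
        return list(activations)  # inference: all neurons stay active
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)  # fixed seed so the sketch is repeatable
layer_output = [1.0] * 1000

train_out = dropout(layer_output, rate=0.5, training=True, rng=rng)
infer_out = dropout(layer_output, rate=0.5, training=False, rng=rng)

print(train_out.count(0.0), "of", len(train_out), "neurons dropped during training")
print(infer_out == layer_output)  # True: nothing is dropped at inference
```

Because a different random subset is dropped on every training step, the network cannot rely on any single neuron, which is what gives the regularizing effect.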

You can work with tf.keras Sequential and Functional API (Keras APIs)

Sequential API = the model is built layer by layer.

Functional API = more flexible; allows multiple inputs/outputs or complex architectures.
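As a rough comparison, the sketch below builds the same small network twice with `tf.keras`, once per API. It assumes TensorFlow 2.x is installed; the layer sizes, activations, and variable names are arbitrary choices for illustration.

```python
import tensorflow as tf

# Sequential API: a plain stack of layers, one after the other.
sequential_model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),                      # 4 input features (assumed)
    tf.keras.layers.Dense(8, activation="relu"),     # hidden layer
    tf.keras.layers.Dense(1, activation="sigmoid"),  # binary classification output
])

# Functional API: layers are called on tensors, so the graph can branch or merge.
inputs = tf.keras.Input(shape=(4,))
x = tf.keras.layers.Dense(8, activation="relu")(inputs)
outputs = tf.keras.layers.Dense(1, activation="sigmoid")(x)
functional_model = tf.keras.Model(inputs=inputs, outputs=outputs)

print(sequential_model.count_params(), functional_model.count_params())
```

For a simple stack like this the two models are equivalent. The Functional API becomes necessary once a model needs several inputs, several outputs, or connections that skip layers.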