Week 9 Impulsivity & Delayed Discounting

34 Terms

what is intertemporal choice?

what is temporal discounting?

intertemporal choice = decisions where time is a factor in calculating value/utility

temporal discounting = the effect of time on value

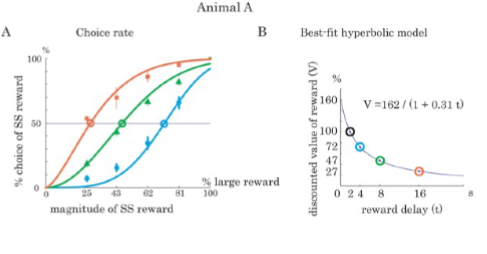

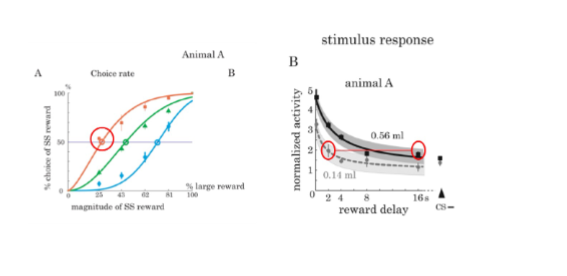

Kobayashi and Schultz (2008) - influence of reward delays on responses of dopamine neurons

experimental design

1) had monkeys fixate on a dot in the center, then presented two choices in the periphery

2) one choice = short delay small reward (SS), other choice = long delay big reward (LL)

3) measured choice preference for different reward volumes (ml) across a range of delays

operant/instrumental conditioning

Kobayashi and Schultz (2008) - influence of reward delays on responses of dopamine neurons

results

when the LL delay is long (16, 8, or 4 s), the animal chooses the SS (2 s) reward more than 50% of the time

when the LL delay is short enough, the animal prefers the LL reward (SS chosen less than 50% of the time)

the point where the probability of choosing SS is 50% = point of subjective equivalence / indifference point

overall, when the delay is long, the monkey prefers SS even if the LL reward is large; but if the large reward is only delayed by a little, the monkey will take LL

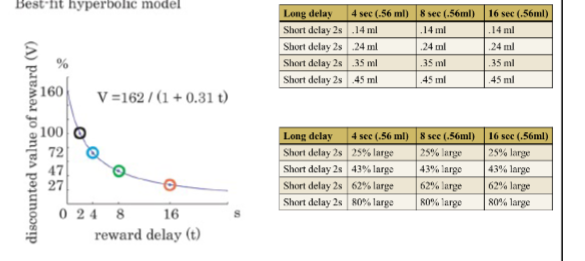

Kobayashi and Schultz (2008)

what is the point of indifference and how does it change with delay?

1) the point of indifference tells us where the small/immediate reward has the same utility as the large/delayed reward (the delay is so long that the animal might as well take the small immediate reward)

2) at the point of indifference, the larger reward has been discounted

3) plotting the point of indifference for each delay gives a hyperbolic function where x-axis = delay, y-axis = discounted value

4) we can quantify how time leads to discounting of the large reward; e.g., the 0.56 ml reward at 16 s has been discounted to 25% of its nominal value (a 75% reduction), so it is valued the same as the 0.14 ml reward at 2 s.

math: 0.56 × 0.25 = 0.14
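The arithmetic above can be sketched in Python. This is only an illustration, assuming the standard hyperbolic form V = A / (1 + kD). The function names are hypothetical, and the implied k treats the 0.14 ml reward as an undiscounted reference, which is a simplification not stated in the notes.

```python
def discount_fraction(ss_amount_ml, ll_amount_ml):
    """Fraction of the large reward's nominal value left at indifference."""
    return ss_amount_ml / ll_amount_ml


def hyperbolic_value(amount, delay_s, k):
    """Hyperbolic discounting: V = A / (1 + k * D)."""
    return amount / (1.0 + k * delay_s)


def implied_k(fraction, delay_s):
    """Solve fraction = 1 / (1 + k * D) for k (assumes the SS reward is undiscounted)."""
    return (1.0 / fraction - 1.0) / delay_s


fraction = discount_fraction(0.14, 0.56)          # about 0.25
k = implied_k(fraction, 16.0)                     # about 0.1875 per second
discounted = hyperbolic_value(0.56, 16.0, k)      # about 0.14 ml
print(round(fraction, 4), round(k, 4), round(discounted, 4))
```

A larger k means steeper discounting, i.e. the same delay removes a larger share of the reward's value.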

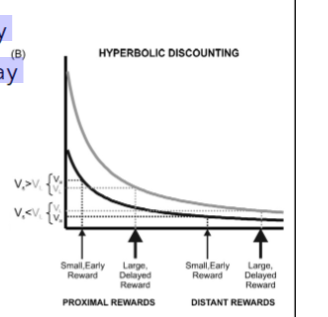

true or false: the hyperbolic function predicts that there will be a preference reversal as the proximity (proximal vs distant) of SS and LL is shifted in time

true or false: it fits behavior better than the exponential function

true

true

Kobayashi and Schultz (2008)

researchers presented the monkey with

SS: 0.28 ml, 1 s delay

LL: 0.56 ml, 5 s delay

what was the monkey's preference?

what about when they added 5 s to both?

what about adding 1 s to the shortest delay?

As delay increases, the perceived value of a reward decreases rapidly at first, then more slowly.

For proximal rewards (short delays), monkeys may prefer the small-sooner (SS) option.

For distant rewards (long delays), the large-later (LL) option starts to seem better, since both are “far away” and discounted similarly.

SS (small, sooner) = 0.28 ml, 1 s delay

LL (large, later) = 0.56 ml, 5 s delay

Monkey A preferred SS

SS = 0.28 ml, 6 s delay (+5 s)

LL = 0.56 ml, 10 s delay (+5 s)

Monkey preferred LL

Adding 1 s to the shortest delay gave approximately indifference

conclusion: the monkey's choice behavior is consistent with a hyperbolic discounting model
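The preference reversal can be sketched numerically. The snippet below compares a hyperbolic rule, V = A / (1 + kD), with an exponential rule, V = A * exp(-kD). The value K = 1.0 is a hypothetical choice made only to reproduce the qualitative pattern in these notes; it is not a fitted parameter from the paper. The third condition assumes that "adding 1 s to the shortest delay" means SS at 2 s versus LL at 5 s.

```python
import math


def hyperbolic(amount, delay_s, k):
    return amount / (1.0 + k * delay_s)


def exponential(amount, delay_s, k):
    return amount * math.exp(-k * delay_s)


def preference(discount, ss, ll, k):
    """Return which option has the higher discounted value; ss and ll are (ml, seconds)."""
    v_ss = discount(ss[0], ss[1], k)
    v_ll = discount(ll[0], ll[1], k)
    if math.isclose(v_ss, v_ll, rel_tol=1e-9):
        return "indifferent"
    return "SS" if v_ss > v_ll else "LL"


K = 1.0  # hypothetical discount rate, for illustration only

conditions = {
    "original": ((0.28, 1.0), (0.56, 5.0)),
    "+5 s to both": ((0.28, 6.0), (0.56, 10.0)),
    "+1 s to SS only": ((0.28, 2.0), (0.56, 5.0)),
}

results = {
    name: (preference(hyperbolic, ss, ll, K), preference(exponential, ss, ll, K))
    for name, (ss, ll) in conditions.items()
}

for name, (hyp, exp) in results.items():
    print(f"{name}: hyperbolic -> {hyp}, exponential -> {exp}")
```

With this K the hyperbolic rule picks SS, then LL, then is indifferent, which matches the pattern described above. The exponential rule picks SS in all three conditions, because adding the same delay to both options scales both values by the same factor and so can never reverse the preference.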

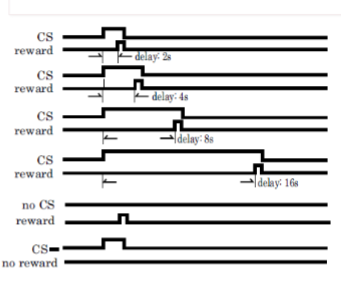

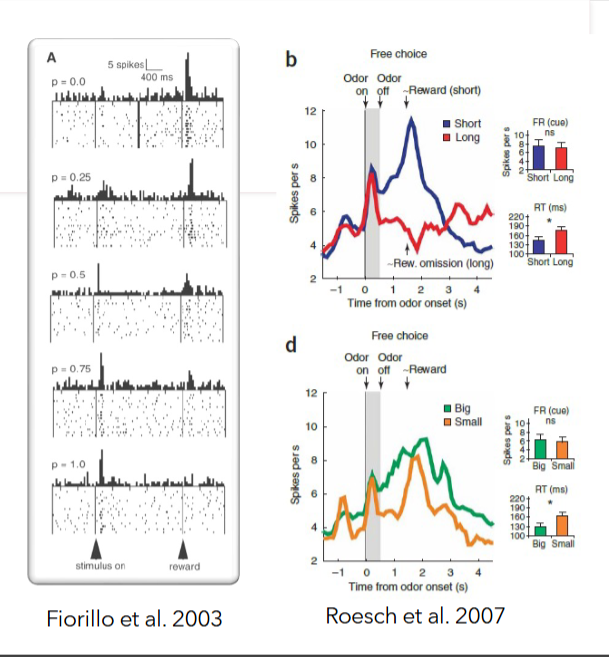

Kobayashi and Schultz (2008)

what kind of conditioning is this?

Why did the authors do this task?

This is classical (Pavlovian) conditioning - a cue is given, followed by reward, with various delays between cue and reward.

To measure how neural activity changes as the delay between cue and reward increases, revealing how the brain encodes temporal discounting.

Kobayashi and Schultz (2008)

What were the results of the Pavlovian delay task?

1) licking data show that the monkey starts licking at the time of reward predicted by the cue

2) as the delay predicted by the cue increases, neuron activity following the cue decreases

conclusion: the value of the reward is being discounted by time. Dopamine neurons encode discounted value, and this decrease in the activity of dopamine neurons follows a hyperbolic function.

Kobayashi and Schultz (2008)

what does the graph show us about neuron data and behavior?

Researchers compared the neuron response when 0.14 ml was given at various delays with the response when 0.56 ml was given at various delays.

1) The dopamine neuron response is discounted by delay and fits a hyperbolic function

2) The 16 s / 0.56 ml outcome and the 2 s / 0.14 ml outcome evoke the same activity = encode the same value = the monkey should be indifferent between the two choice types > this is shown in the behavior data: in the graph on the left, on the orange line, you get indifference at 25% of 0.56 ml, which is 0.14 ml.

Kobayashi and Schultz (2008)

Behavior, dopamine neurons, dopamine & behavior

Behavior: value discounted by delay, fits hyperbolic function

Dopamine neurons: encode value discounted by delay (activity decreases with delay), activity fits hyperbolic function

Dopamine & behavior: relates the dopamine response to the value signal used in the intertemporal choice task

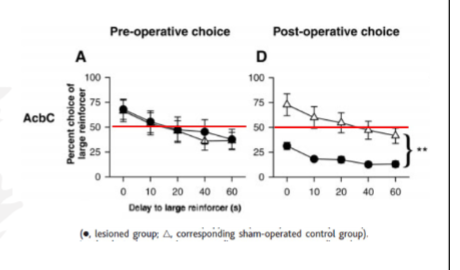

Cardinal et al. (2001)

Experimental Design

rats trained on a choice task:

1) house light comes on at the beginning of the trial, along with the food tray light

2) rat has 10 s to poke the sensor with its nose; then two levers appear and the food tray light turns off

4) rat can choose a lever for 2 pellets immediately or a lever for 4 pellets after various delays

5) rat makes its choice, house light turns off and food tray light turns on

6) rat needs to nose poke a 2nd time and the tray light turns off

**intertrial interval controlled by the researchers so that the length of time between trials is identical for delayed and immediate reward choices

group 1: NA lesion rats

group 2: control group

Cardinal et al. (2001)

NA (nucleus accumbens) lesions: what do the data show?

white triangle = control

black circle = NA lesion

rats with lesions = more impulsive

Summary: NA regulates impulsive behavior; NA appears critical for tolerating delays in appetitive reinforcement.

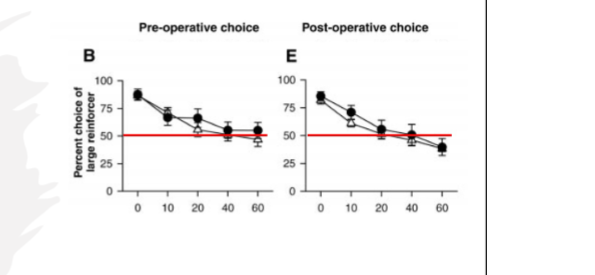

Cardinal et al. (2001)

anterior cingulate lesions: what do the data show?

anterior cingulate lesions had no impact on impulsivity

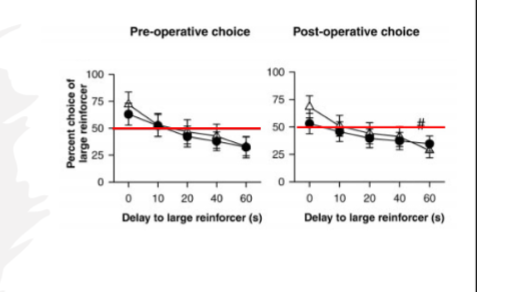

Cardinal et al. (2001)

medial prefrontal lesions: what do the data show?

medial prefrontal lesions had no impact on impulsivity

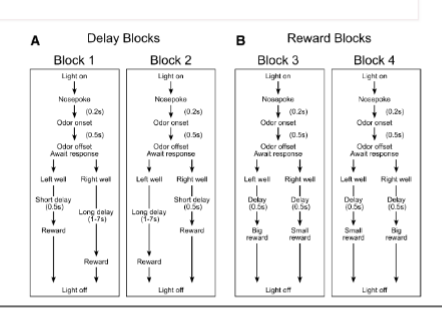

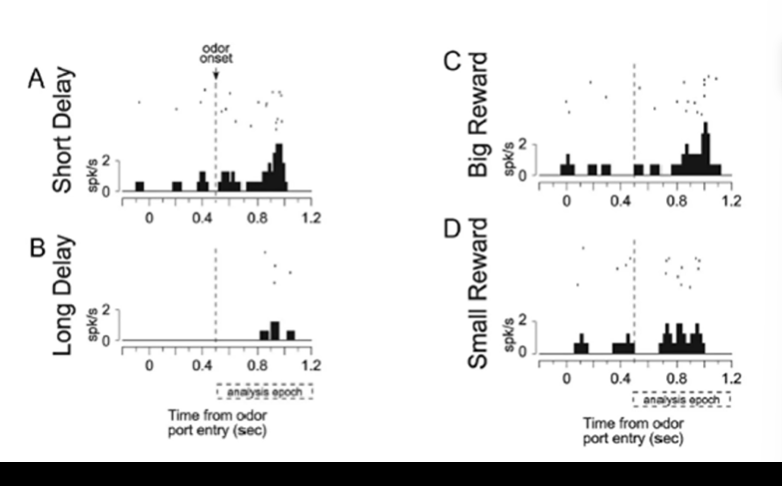

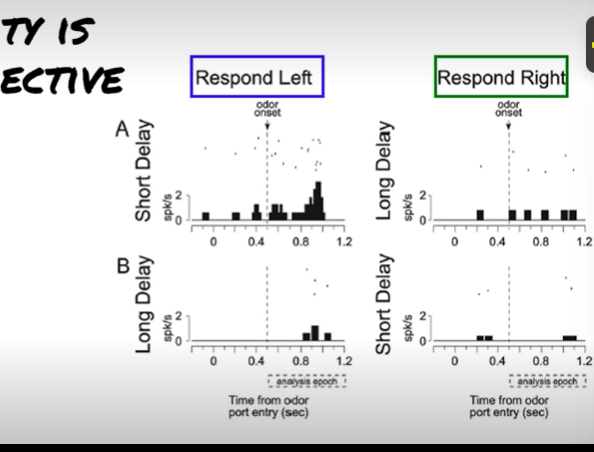

Roesch et al (2006)- encoding of time-discounted rewards in orbitofrontal cortex is independent of value representation

experimental design

odor port with three different odors.

odor 1 = go left

odor 2 = go right

odor 3 = free choice

delay blocks = block 1 & block 2

block 1 = odor 1: go left for a 0.5 s delay, or go right for an unknown delay

block 2 = odor 2: go right for a 0.5 s delay, or go left for an unknown delay

reward blocks = block 3 & block 4

block 3 = odor 3: go to either well for reward, both with a 0.5 s delay

block 4 = go either left or right, both with a 0.5 s delay

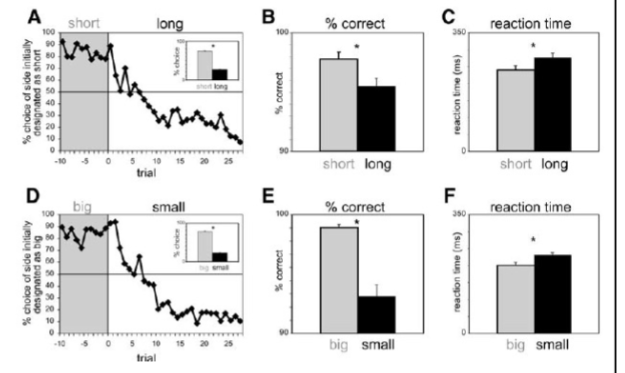

rats are less likely to make mistakes when the odor tells them to go to the short delay reward, so they are more motivated to obtain the short delay rewards. rats are also faster to react to the big reward.

Roesch et al (2006)

what were the results of the wells changing between each block?

1) rats are less likely to make mistakes when the odor tells them to go to the short delay well or the big reward well

2) rats react faster when the odor tells them to go to the short delay reward

Roesch et al (2006)

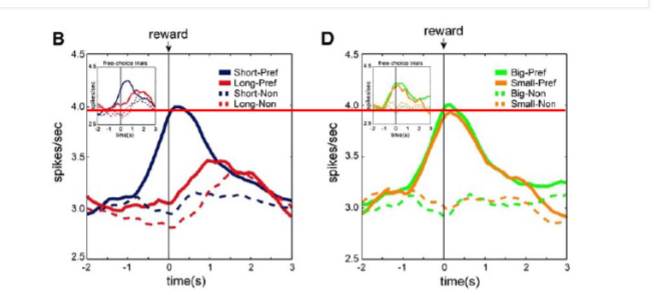

free choice trials data: neural response to short and long delay rewards

activity is the same before the reward, but OFC neurons that prefer the short delay ramp up activity before the reward is delivered. OFC neurons fire more strongly for rewards that come sooner than for delayed ones, even though the reward size is the same. OFC activity is discounted by time delays.

HOWEVER, the OFC neuron response to big and small rewards is the same! OFC neurons are sensitive to timing, not magnitude.

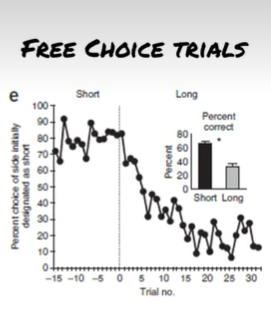

Roesch et al (2006)

what do free choice trials tell us?

as the task transitions from block 1 to block 2, the well that led to the short delay reward switches to lead to the long delay reward

this graph shows the last 15 trials of block 1 and the first 30 trials of block 2, where going left changes from short delay to long delay

when the well begins to deliver the long delay, the rat stops choosing that well > the rat has learning capabilities and a preference for the short delay reward

Roesch et al (2006)

conclusion

OFC neurons do not encode the value of discounted rewards in a common currency; they encode time

Activity may relate to the OFC's role in learning and guiding behavior

Odor task is good for seeing how time and reward magnitude impact choices and reward value; can study prediction errors (PDE) in learning

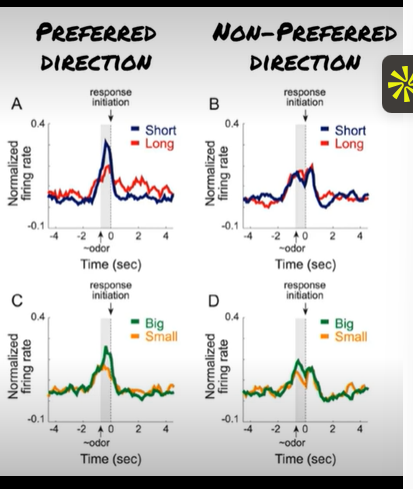

Roesch et al (2009) - ventral striatal neurons

do VS neurons encode info about predicted value?

VS neurons respond more to cues predicting short delay rewards than to cues predicting long delay rewards > so VS neurons encode info about predicted value

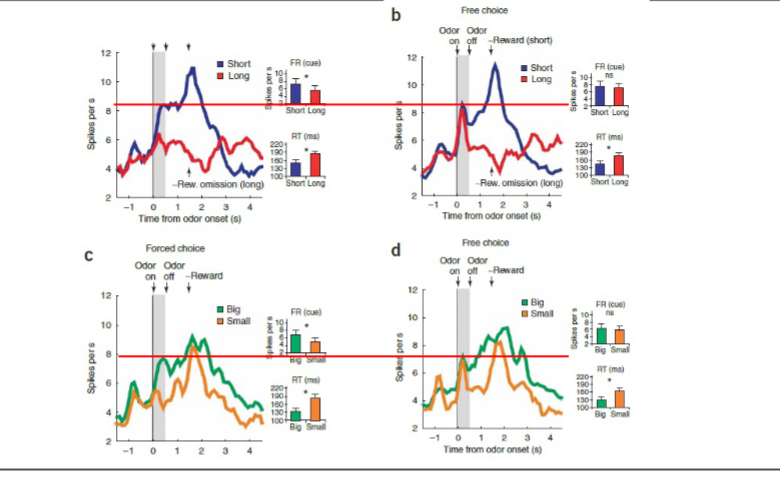

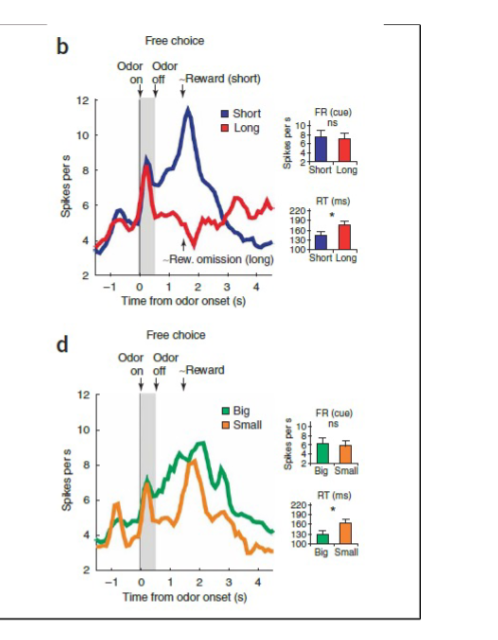

Forced choice task vs free choice task data, Roesch et al (2007)

in the forced choice task, the VTA neurons encode the value of the upcoming outcome. The animal has to do what the cue says to obtain the reward, so there is really only one option available, and the neurons encode the value of that option = a large signal for the preferred outcome and a lower signal for the less preferred outcome

in the free choice task, VTA neurons encode the value of the BEST option; the signal is independent of the animal's choice, and the signal does evolve to represent the value of the outcome. In free choice, animals can sample the cue and are not penalized for choosing either option; there are two options available. So the signal at the time of the cue encodes the value of the best option available, regardless of what the animal chooses to do.

in the free choice task, does the animal appear to use the cue signal to guide behavior?

no, the dopamine cue signal does not force the animals to behave in a way that would achieve the high value reward

conclusions from Roesch et al (2007) paper

animals are NOT FORCED to use the cue signal to make decisions about what action to select

the signal relates to learning which cues predict rewards

Prediction error

Dopamine neurons increased or decreased firing when rewards were better or worse than expected.

Value coding

Stronger cue responses for immediate or larger rewards.

Relative value

Neurons responded to the contextually “better” reward, not absolute size or timing.

Choice encoding

Before decision → signal best option; after decision → reflect chosen option.

Computational model

Matches Q-learning: dopamine encodes the best available reward.
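The Q-learning point can be made concrete with a small sketch. Under Q-learning, the value signal at the time of the cue tracks the maximum over the available action values, whatever the animal then chooses. For contrast, the snippet also shows a rule that tracks the chosen action's value (SARSA-style); that contrast is added here for illustration and is not part of these notes. All function names and numbers are hypothetical.

```python
def cue_signal_q_learning(q_values):
    """Cue-time value signal under Q-learning: value of the best available action."""
    return max(q_values.values())


def cue_signal_chosen_action(q_values, chosen_action):
    """Contrast case (SARSA-style): value of the action that is actually chosen."""
    return q_values[chosen_action]


def q_update(q_values, action, reward, alpha):
    """One-step update toward the received reward; returns the prediction error."""
    delta = reward - q_values[action]
    q_values[action] += alpha * delta
    return delta


# Hypothetical action values: left well = short delay (high value), right well = long delay.
q = {"left": 1.0, "right": 0.4}

best_if_left = cue_signal_q_learning(q)                 # same signal...
best_if_right = cue_signal_q_learning(q)                # ...whatever is chosen
chosen_right = cue_signal_chosen_action(q, "right")     # depends on the choice

# Reward omitted after choosing left: negative prediction error, value moves down.
delta = q_update(q, "left", reward=0.0, alpha=0.2)
print(best_if_left, best_if_right, chosen_right, delta, q["left"])
```

The Q-learning cue signal is identical for both choices, which is the pattern the notes describe for the free choice trials: the cue response reflects the best available option, not the option the animal goes on to select.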

substantia nigra which type of prediction?

ventral tegmental area which type of prediction?

substantia nigra

cue response encodes expected value

one might think this guides behavior

projects to structures that link value and action (action prediction)

ventral tegmental area

cue response encodes best available option

does not determine behavioral outcome

projects to areas involved in learning what cues predict value (value prediction)

did Roesch et al (2009) find that VS activity is action selective?

1) VS neurons encode action and value

2) VS respond more to cues that predict big reward

3) Neurons respond only to cues that direct the rat to the left port

VS neurons encode predictive info about the value of future outcomes > these value representations include temporal discounting of future rewards and outcome magnitude.

the value response is linked to the left response

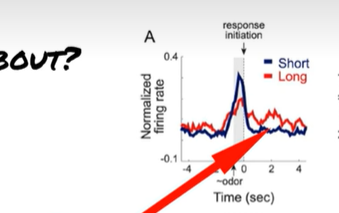

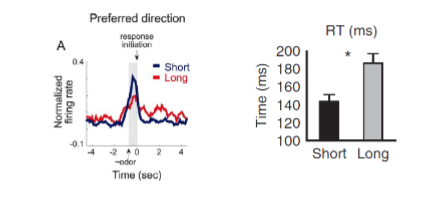

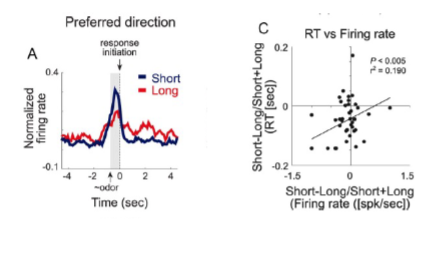

what does the population response tell us? Roesch et al (2009) - ventral striatal neurons

In Roesch et al. (2009), “response initiation” on the graph marks the moment when the rat leaves the odor port and starts moving toward the reward well.

This figure shows that ventral striatal neurons fire more when rats expect a high-value reward (short delay or big size), but only when the rat moves in the neuron’s preferred direction. That means the activity reflects the expected value of a specific action.

The value of the upcoming action is reflected in VS activity

neurons in the VS are direction selective.

what's up with this activity? Roesch et al (2009)

the more motivated the animal was for the short delay trial, the greater the activity associated with the long delay outcome while waiting

one reason is that rats need to exert more effort to stay in the well during delay trials

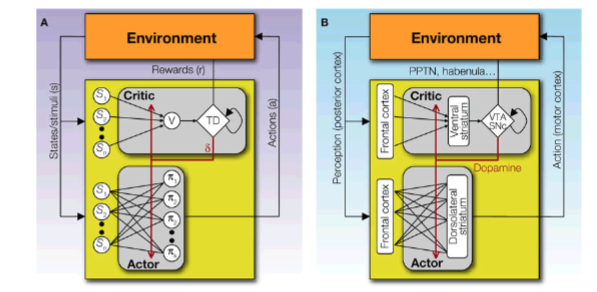

Takahashi et al. 2008

In the actor-critic model PPTN (pedunculopontine nucleus) and the lateral habenula represent what?

cue signals vs environmental signals

who is the actor

The PPTN (pedunculopontine nucleus) and the lateral habenula represent the intrinsic value of rewards (R in TDLR)

Cue signals from the frontal cortex activate value signals in the ventral striatum (V in TDLR)

Environment signals activate prediction error signals in VTA and SNc

Critic = PPTN and lateral habenula represent reward info (intrinsic value of rewards; R of TDLR)

Actor = signals from frontal cortex activate the highest value (policy) assigned to them at a given time. Prediction errors (PDE) update action-value representations based on feedback from the environment
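The roles of R, V, and the prediction error can be sketched with a textbook temporal-difference update. This assumes "TDLR" refers to the temporal-difference learning rule, delta = R + gamma * V(next) - V(current). The state names, learning rate, and discount factor below are hypothetical, and the mapping onto brain regions in the comments simply restates the notes above.

```python
def td_error(reward, value_next, value_current, gamma=0.9):
    """Prediction error (the VTA/SNc signal in the notes): R + gamma * V(next) - V(current)."""
    return reward + gamma * value_next - value_current


def run_trial(values, reward, alpha=0.1, gamma=0.9):
    """One trial through cue -> reward_time -> end, updating V at each step."""
    # No reward arrives at the cue; the error depends only on the change in predicted value.
    d_cue = td_error(0.0, values["reward_time"], values["cue"], gamma)
    values["cue"] += alpha * d_cue
    # Reward (R, PPTN / lateral habenula in the notes) arrives at reward_time.
    d_reward = td_error(reward, values["end"], values["reward_time"], gamma)
    values["reward_time"] += alpha * d_reward
    return d_cue, d_reward


# V starts at zero everywhere (value signals, ventral striatum in the notes).
values = {"cue": 0.0, "reward_time": 0.0, "end": 0.0}

first = run_trial(values, reward=1.0)
for _ in range(499):
    last = run_trial(values, reward=1.0)

print(first, last, values)
```

On the first trial the whole error occurs at reward delivery. After training, the reward is fully predicted, so both errors shrink toward zero and the cue carries the discounted value (about gamma times the reward).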

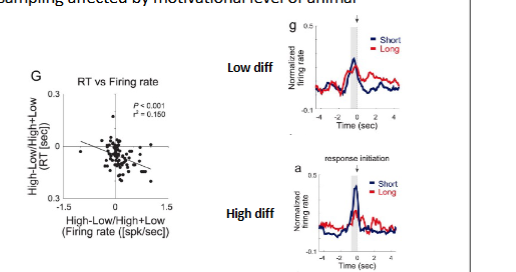

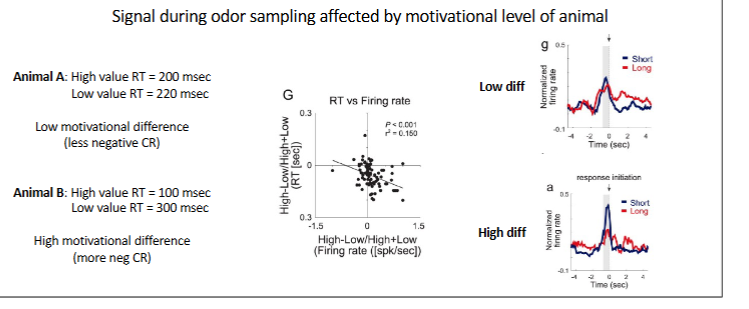

how to quantify motivation through the contrast ratio

CR = (High value - Low value) / (High value + Low value)

where High value = short delay / big reward trials and Low value = long delay / small reward trials

Neuron: (20 Hz – 10 Hz) / (20 Hz + 10 Hz) = .33

Behavior: (140 ms – 190 ms) / (140 ms + 190 ms) = -.15

When neural firing rate is greater for higher value reward the CR will be positive

When the reaction time is faster (more motivation implied) the CR will be negative

Neuron CR can range from -1 to 1; reaction time CR cannot reach 1 or -1 (that would require a reaction time of 0)
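The two worked examples above can be checked with a short Python sketch. The function name is hypothetical; the numbers are the ones given in the notes.

```python
def contrast_ratio(high_value, low_value):
    """CR = (high - low) / (high + low); the sign shows which condition is larger."""
    return (high_value - low_value) / (high_value + low_value)


# Firing rate (Hz): high-value trials vs low-value trials.
neural_cr = contrast_ratio(20.0, 10.0)

# Reaction time (ms): faster on high-value trials, so the CR comes out negative.
rt_cr = contrast_ratio(140.0, 190.0)

print(round(neural_cr, 2), round(rt_cr, 2))  # 0.33 -0.15
```

Because the CR is normalized by the sum, it compares neurons with different baseline firing rates, or animals with different overall reaction times, on the same scale.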

Relationship between neural activity and RT CR?

neural CRs are greater for animals that show a greater difference between short and long delay reaction times,

with the higher value reward leading to faster reaction times. This means the CR for reaction time should move closer to -1

relationship between CR and motivation

what does scatter plot represent?

The scatterplot plots the CR for neural data (x axis) against the CR for RT (y axis) across all big/small and long/short trials; it looks at the CR and its relationship to reaction time and neural activity

Two groups:

1) you see that the activity prior to the response differs between groups.

more motivated animals = more value discrimination in the signal between short and long delay; a clear signal that distinguishes between the high and low value outcome > more negative CR

2) less motivated animals = less value discrimination in the signal between short and long delay and an elevated signal during long waiting period trials > less negative CR

What’s interesting about this is the idea that motivation is affecting the value representation of the upcoming action. Recall that these neurons are direction selective.

Suggests that VS neurons integrate predictive value signals related to the value of the action needed to obtain reward and motivational signals related to task engagement.

what is the relationship between neural activity and CR generally?

what about during waiting period?

more negative RT CR = more positive neural CR

shorter RT on high value trials = higher firing rates on high value trials vs low value trials after the cue and before reward delivery

during the waiting period, there is higher activity for neurons preferring the long delay

when the AcbC part of the VS is lesioned,

AcbC damage reduces willingness to wait for larger rewards, meaning the animals become more impulsive.

true or false

Nucleus accumbens (part of ventral striatum) appears to be important for impulse control

true

• ventral striatum = direction selective

Activity relates to upcoming action or outcome of decision

• ventral striatum signal value of the upcoming action

Signal is present only in the preferred direction of a given neuron

Signal is action dependent

• Neural activity in the ventral striatum is correlated with motivation

Activity prior to initiating action

Activity in the period of time that the animal has to wait for reward

• Prediction: modulating ventral striatum activity during the wait period should systematically bias motivation/impulsivity