research methods (psychology)

what are the ethical guidelines of research?

CARDUD

Consent

Anonymity

Right to withdraw

Deception

Undue stress or harm

Debriefing

what are the ethical guidelines of reporting results?

SCCAR

Social implications

Continued anonymity/confidentiality

Continued protection of participants

Avoidance of deception

Right to withdraw - ppt can withdraw their research results in a window of time

what are the 4 types of research methods/experiments?

lab

field

natural

quasi

define lab experiment

an experiment that takes place in a controlled environment, where the researcher manipulates the IV and records the effect on the DV

pros of lab experiments

- high level of control (easier to control extraneous variables, increases internal validity)

- standardised (easy to keep same procedure, which increases reliability and allows for replication)

- availability of specialist equipment

cons of lab experiments

Artificial environment

findings from the lab study can not be applied to a range of real world situations because they fail to represent everyday behaviours, meaning results might not be generalisable

Aware of study

Demand characteristics: pp's may also alter their behaviour to match the aim of the study

Hawthorne effect: aware of being studied and change their behaviour

Social desirability bias: ppts respond to the task in a way they believe the experimenter expects, or in a way that will make them look good

define field experiment

an experiment that takes place in a natural setting to avoid artificiality. the researcher manipulates the IV and records the effect on the DV. there's much less control over the experiment

pros of field experiments

more generalisable - pp's behave more naturally in their normal environment, making it more likely any behaviour observed can be applied to other natural settings

ecological validity is high, reducing demand characteristics

cons of field experiments

- extraneous variables hard to control

- hard to standardise procedures

- internal validity is low

- reliability is lower, harder to repeat experiment consistently

define natural experiment

a study that uses a naturally occurring IV and the researcher measures the change in the DV

pros of natural experiments (in field settings)

examples of real behaviour occurring in the real world, free of demand characteristics + higher ecological validity

pros of natural experiments (in lab settings)

- can do natural experiment instead if it's impossible/unethical to control IV

- increased confidence that only IV is affecting DV

- increased internal validity

- higher standardisation, can be replicated

- easier to control EV

cons of natural experiments

- lacks replicability

- difficult to control extraneous variables

- expensive/time consuming

- only possible when differences arise naturally

define quasi experiment

Aims to establish a cause-and-effect relationship between an independent and dependent variable. However, the experiment does not rely on random assignment. Instead, participants are assigned to groups based on non-random criteria, e.g. gender or age.

pros of quasi experiments

- realistic, high generalisability as they take place in natural settings

- behave naturally, increasing the validity & replicability of the findings.

- ecological validity is high - findings are more generalisable to real life settings.

cons of quasi experiments

- low internal validity - there may be variables influencing both the dependent variable and independent variable (e.g. difference between groups before experiment even begins) making it difficult to establish cause and effect relationship

- researcher could be biased

- The lack of control over the pre-existing variables means that researchers can't be quite certain in determining cause-and-effect relationships.

- Reliability is lower, harder to repeat the procedure consistently.

define an observation

researcher watches and records the behaviour of pp's

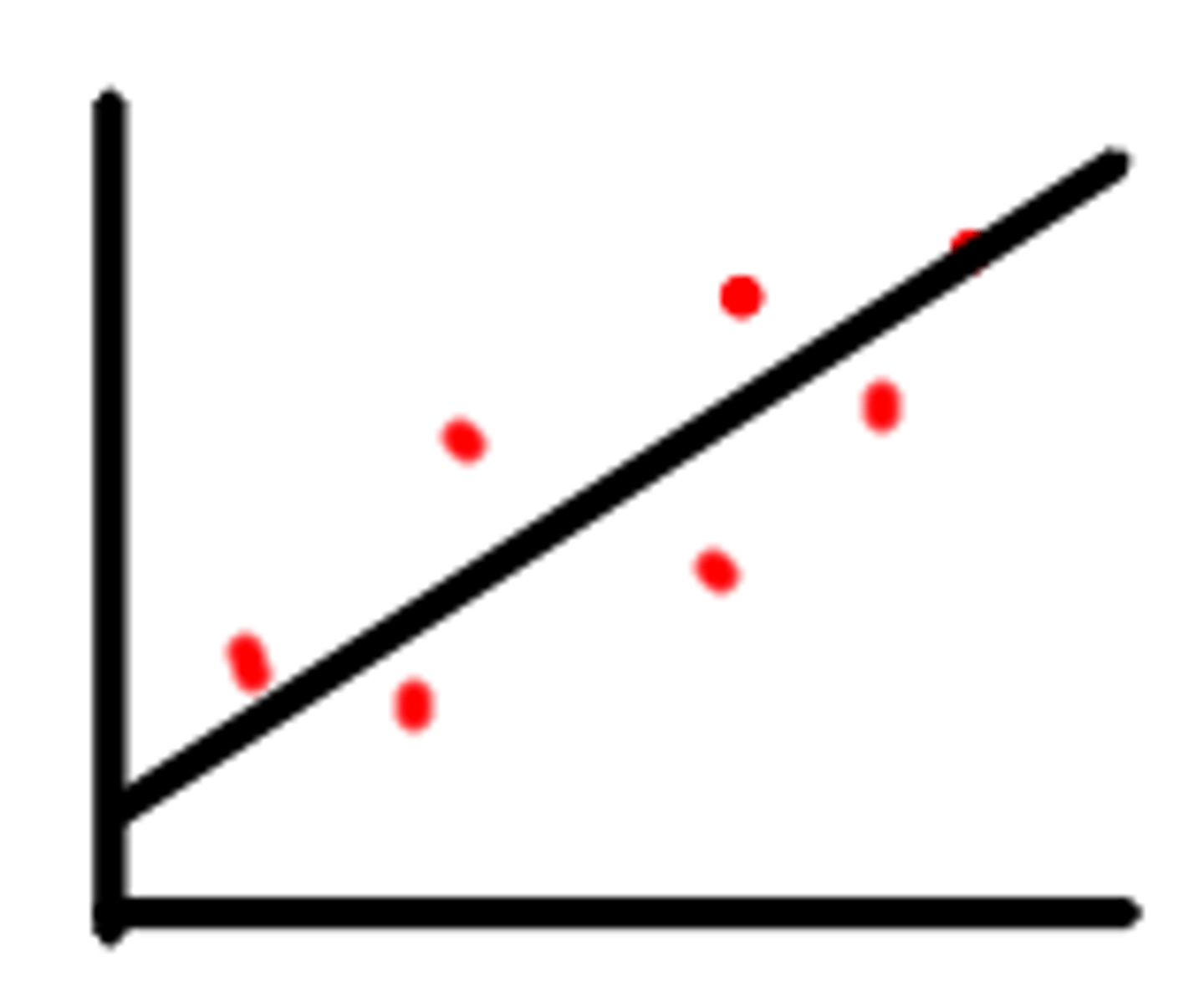

define a correlation

the relationship between two or more variables

explain the difference between a correlation and an experiment

experiments involve the manipulation of an IV and a measurement of the resulting change in the DV, whereas in a correlation no variables are manipulated; they're just measured to look for a relationship. correlations can't establish a cause-and-effect relationship.

criteria for variables when conducting a correlation

variables must:

- exist over a range

- be able to be measured numerically

reliability of correlation depends on whether methods used were reliable. objective measures are more reliable than subjective.

what are the two types of errors

systematic error - happens consistently, probably due to faulty equipment, method etc

random error - unpredictable factors e.g. participant, environment, tools

evaluation of correlations

correlations only highlight the relationship/strength of a relationship between two variables. but they don't explain whether one change in variable is the direct cause of the other variable, they only show there's an ASSOCIATION between the variables.
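To make "strength of a relationship" concrete, here is a minimal sketch that computes a correlation coefficient for two numerically measured variables. It assumes Pearson's r, which the cards do not name, and the variable names and data are made up for illustration.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Return Pearson's r for two equal-length lists of numbers.

    r near +1 or -1 means a strong association; r near 0 means a weak one.
    It says nothing about which variable (if either) causes the other.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of the same length (at least 2 values)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # How the two variables vary together, and how much each varies alone
    co_variation = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variation_x = sum((x - mean_x) ** 2 for x in xs)
    variation_y = sum((y - mean_y) ** 2 for y in ys)
    return co_variation / sqrt(variation_x * variation_y)

# Hypothetical data: hours revised and test score for five ppts
hours_revised = [1, 2, 3, 4, 5]
test_score = [2, 4, 6, 8, 10]
print(pearson_r(hours_revised, test_score))  # prints 1.0 (perfect positive correlation)
```

Even a coefficient of 1.0 only shows an association; it cannot rule out a hidden third variable.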

what does a correlation show?

1 of 4 things:

1) the 1st variable may be affecting the second one

2) the 2nd variable may be affecting the first one

3) the correlation may be random

4) there is a hidden 3rd variable affecting both of the correlated variables (aka ALTERNATIVE EXPLANATION)

define theory

An attempt to explain why something exists or occurs

what are the key components of a theory?

1) summarise different outcomes

2) provide the simplest account of outcomes

3) provide ideas for future research

4) falsifiable

criteria of a theory

T - Testable

E - Evidence based

A - Applicable

C - Construct validity (concepts clearly defined)

U - Unbiased

P - Predict behaviour

define population

a group sharing one or more characteristics, from which a sample is drawn

define target population

group that we want to research and generalise findings for

define sample

a small group of the target population

define an aim

tells the purpose of the research

define a hypothesis

a testable statement predicting how the DV will differ between levels of the IV

define a null hypothesis

predicts that changing the IV will make no difference to the DV

define an alternative hypothesis

predicts that a change in the IV will produce a change in the DV

define a directional / one tailed hypothesis

states there is a difference and says the direction the results will go

define a non directional / two tailed hypothesis

states there is a difference but doesn't say the direction the results will go

define standardisation

keeping the research procedure the same for each ppt to ensure any differences between conditions are due to the variable investigated

how do psychologists achieve standardised procedures?

1) standardised instructions

2) standardised manipulation of IV/controls

3) standardised equipment/tests --> changes in variables are measured the same way each time

list the 5 types of sampling in psychological research

random

opportunity

volunteer

purposive

snowball

define random sampling

each member of the target population has an equal chance of being picked for the sample

explain how to conduct a random sample

researcher needs a full list of the target population

all names are put into a container (or entered into a computer)

names are pulled out at random, by hand or by a computer's random generator

selection stops when the number of names drawn equals the sample size required
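The computer version of this procedure can be sketched as follows. This is only an illustration: the function name, the participant labels, and the use of Python's `random.sample` are assumptions, not part of the cards.

```python
import random

def draw_random_sample(target_population, sample_size, seed=None):
    """Pick sample_size members so that every member has an equal chance.

    target_population must be the full list of names (the sampling frame).
    seed is optional and only makes the draw repeatable for checking.
    """
    rng = random.Random(seed)
    # random.sample draws without replacement, like pulling names from a container
    return rng.sample(target_population, sample_size)

# Hypothetical target population of 100 students
population = [f"student_{i}" for i in range(1, 101)]
sample = draw_random_sample(population, 10, seed=1)
print(sample)
```

Because the draw is random, the sample can still come out unrepresentative by chance, which is the first con listed for this method.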

pros of random sampling

- Removes experimenter bias because the researcher can't choose the ppts they want for the sample, so they can't pick ppts likely to give a desired result (unbiased)

- More likely to be representative - give every member of a population an equal opportunity to be chosen

- Easy(ish)

cons of random sampling

- ppt's could be picked that produce an unrepresentative sample, thus not reflecting the population eg, not enough boys (biased)

- time-consuming to identify all population members

define opportunity sample

researcher asks available members of the target population to take part

pros of opportunity sampling

- Quick and done based on availability, reduce time it takes to select sample

- Convenient for researcher

- Economical (Saves time + money)

cons of opportunity sampling

- Researcher decides who takes part, they may select ppt's who they feel are likely to give the desired result (BIASED)

- Ppt's may feel forced into participation

- Unlikely to be representative as ppt's could have many similarities (e.g. if researcher asks people who come from the same place)

define volunteer sampling

participants are invited to participate in a study, and those who accept select themselves to become the sample

pros of volunteer sampling

- quick and easy as ppt comes to researcher

- as a result there's also no researcher bias

- ethical as ppt want to be part of the study, no pressure

- good for unusual target population

cons of volunteer sampling

- those who respond to the call for volunteers may all display similar characteristics, reducing generalizability (BIAS)

- therefore unlikely to be representative

- expensive to place adverts and pay participants

- only certain people see the advert

define purposive sampling

Participants are chosen because they possess characteristics that are relevant to a given research study

pros of purposive sampling

- sample is filled quickly

- effective method when target population is limited

- highly representative sample of characteristics researcher wants

cons of purposive sampling

- over-represents sub-groups in the population that happen to be more available

- some bias

define snowball sampling

participants who are already in a study help the researcher recruit more participants. Researchers start with just one or two people and then grow their "snowball" sample by adding more and more participants to the initial sampling.

pros of snowball sampling

good for finding populations that are rare to find

cons of snowball sampling

- anonymity is lost (as the invitation is passed on by a friend)

- unlikely to yield a representative sample

- may not be able to recruit participants

what are the 3 types of experimental design

independent groups design

matched pairs design

repeated measures design

define independent groups design

different participants are used in each condition of the experiment. they should be randomly allocated to avoid researcher bias
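Random allocation to conditions can be sketched like this. The condition names and the shuffle-then-deal approach are assumptions chosen for illustration; any method that gives each ppt an equal chance of each condition would do.

```python
import random

def randomly_allocate(participants, conditions=("experimental", "control"), seed=None):
    """Shuffle the ppts, then deal them into the conditions in turn.

    The researcher has no say in who goes where, which avoids researcher bias.
    """
    rng = random.Random(seed)
    shuffled = list(participants)  # copy so the original list is untouched
    rng.shuffle(shuffled)
    groups = {condition: [] for condition in conditions}
    for index, ppt in enumerate(shuffled):
        # Dealing in turn keeps the group sizes as equal as possible
        groups[conditions[index % len(conditions)]].append(ppt)
    return groups

# Hypothetical ppt labels
groups = randomly_allocate([f"ppt_{i}" for i in range(1, 11)], seed=7)
print(groups)
```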

define repeated measures design

the same participants take part in all conditions

define matched pairs design

A research design where participants are paired based on specific characteristics, ensuring each pair has one member assigned to each condition of the experiment, with one person in each pair getting the treatment and the other the control. This aims to control for variables and reduce individual differences.
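One way to picture the matching step is the sketch below. It assumes ppts are matched on a single numeric characteristic (a made-up memory score here) by ranking them and pairing neighbours; real studies may match on several characteristics.

```python
import random

def matched_pairs(participants, seed=None):
    """Pair ppts with similar scores, then split each pair across conditions.

    participants is a list of (name, score) tuples.
    If there is an odd number of ppts, the highest-scoring one is left unpaired.
    """
    rng = random.Random(seed)
    ranked = sorted(participants, key=lambda ppt: ppt[1])
    treatment, control = [], []
    for i in range(0, len(ranked) - 1, 2):
        pair = [ranked[i], ranked[i + 1]]  # neighbours in the ranking are most alike
        rng.shuffle(pair)                  # random choice of who gets the treatment
        treatment.append(pair[0][0])
        control.append(pair[1][0])
    return treatment, control

# Hypothetical ppts with a memory score used for matching
ppts = [("A", 12), ("B", 30), ("C", 13), ("D", 29), ("E", 20), ("F", 21)]
treatment, control = matched_pairs(ppts, seed=3)
print(treatment, control)
```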

define independent variable

variable that is manipulated to test the effect on the DV

define dependent variable

variable that is measured

define extraneous variables

different factors that could affect the DV but are not ones being tested

define confounding variables

undesired variables that influence the relationship between the independent and dependent variables

why must variables be operationalised

being clearly defined allows for manipulation of the independent variable and also means the dependent variable can be precisely measured

define participant variables

individual differences between participants that may affect the DV. eg, age, sex

define situational variables

uncontrolled features of an environment that affect the DV

what are 2 ways to control participant variables

random allocation - removes potential bias and limits the influence of participant variables

matched pairs - reduces participant variability because participants in each condition are matched on key characteristics, so any differences in results are more likely due to the manipulation of the independent variable rather than individual differences between participants.

how to control extraneous variables

counter balancing- half the pp's complete condition a first and b second, the other half does b first and a second. this helps to reduce order effects (e.g. fatigue, boredom).
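Counterbalancing as described above can be sketched as follows. The condition labels "A" and "B" come from the card; the function name and the random split into halves are assumptions for illustration.

```python
import random

def counterbalance(participants, seed=None):
    """Give half the ppts the order A then B, and the other half B then A."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)  # random split so the researcher does not pick the halves
    half = len(shuffled) // 2
    orders = {}
    for ppt in shuffled[:half]:
        orders[ppt] = ("A", "B")
    for ppt in shuffled[half:]:
        orders[ppt] = ("B", "A")
    return orders

# Hypothetical ppt labels
orders = counterbalance([f"ppt_{i}" for i in range(1, 9)], seed=5)
for ppt, order in orders.items():
    print(ppt, order)
```

Order effects such as fatigue still occur, but they now fall equally on both conditions rather than only on the second one.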

how to control situational variables

standardised procedures and instructions are used to ensure that conditions are the same for all participants so all pp's have the same experience

how to remove demand characteristics? (cues in study that make ppt guess the aim and change their behaviour)

1) changing sample - ppt’s with less psychological knowledge may not be able to guess the aim

2) deceiving the ppt, then debriefing them - makes it difficult for ppt to guess the aim altogether

how to remove social desirability bias?

1) getting someone else to do it on that person's behalf - removes the participant’s motivation to present themselves in a socially acceptable way.

2) asking the same question in the opposite way - detects and discourages patterned, socially acceptable responding.

3) observing ppt instead

what are the main ethical considerations in research

informed consent

debriefing

right to withdraw

confidentiality

protection from harm

explain informed consent

participants should have detailed information about the research to be able to make an informed decision about taking part

if participants are below the age of 16, parental consent needs to be gained

explain debriefing

after the study takes place, all relevant information (including the true aim and any deception used) should be explained to participants

explain right to withdraw

participants should be aware they have the right to withdraw from the study at any time, even after it has finished

explain confidentiality

pp's personal data should be kept securely by the researcher. their identities should not be revealed

explain protection from harm

participants must leave the research in the same physical and mental state they started and be protected from both physical and mental harm

how to get around informed consent

prior general consent- pp's agree to potential features of a study

retroactive consent- researcher asks for consent after the study

define reliability

consistency of results. eg, if the researchers replicate the study, they'll get the same results

what are the 2 types of validity

internal validity: the extent to which a test measures or predicts what it is supposed to

external validity: the extent the study can be applied beyond the research settings

methods to test reliability

test-retest - gives the same test to the same participants twice on different occasions. measures external reliability.

inter-observer (type of internal reliability) - when all observers have the same observations and come to the same conclusions
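Inter-observer reliability can be given a number in several ways. The sketch below assumes the simplest one, percentage agreement between two observers who coded the same behaviours; the cards do not specify a measure, and the behaviour categories are made up.

```python
def percent_agreement(observer_a, observer_b):
    """Return the percentage of observations that two observers coded identically."""
    if len(observer_a) != len(observer_b) or not observer_a:
        raise ValueError("both observers must code the same, non-empty set of observations")
    matches = sum(a == b for a, b in zip(observer_a, observer_b))
    return 100 * matches / len(observer_a)

# Hypothetical codings of four observed behaviours
observer_a = ["aggressive", "passive", "aggressive", "passive"]
observer_b = ["aggressive", "passive", "passive", "passive"]
print(percent_agreement(observer_a, observer_b))  # prints 75.0
```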

what are the different types of external validity

Ecological validity: Reflects the behaviour in the real world

Historical validity: Can be applied over different periods of time

Population validity: Can be applied to other people in other places and cultures

what's the difference between the null and alternate hypothesis

the null suggests no causal relationship exists between the IV and DV, whereas the alternate hypothesis suggests a causal relationship