Bitch ass CS

1/78

Earn XP

Name | Mastery | Learn | Test | Matching | Spaced |

|---|

No study sessions yet.

79 Terms

Object-Oriented Programming (OOP)

A programming paradigm based on the concept of "objects," which can contain data and code to manipulate that data.

Encapsulation

implementation details are hidden or encapsulated in objects

Inheritance

child classes can inherit from parent classes and module

Polymorphism

objects and names exist in many forms. So, the same attribute or method can exist in multiple classes and mean different things, etc

Abstraction

handling a concept rather than the implementation details

Interpreters

Analyzes source code, generates byte code, and initializes the Python Virtual Machine (PVM). Ours for python is called CPython

Scope

LEGB Rule

Python Lists

Ordered and changeable collections in Python, written with square brackets, allowing for heterogeneous elements. The list can be homogeneous or heterogeneous, Element-wise operation is not possible on the list, Python list is by default 1-dimensional. But we can create an N-Dimensional list. But then too it will be 1 D list storing another 1D list, Elements of a list need not be contiguous in memory.

Numpy Arrays

Structured lists of numbers: Vectors, Matrices, Images, Tensors, ConvNet. Arrays can have any number of dimensions, including zero (a scalar) Arrays are typed: np.uint8, np.int64, np.float32, np.float64. Arrays are dense. Each element of the array exists and has the same type. Arrays are faster than python lists (consume less memory). Can only combine arrays of the same shape!

Data Types in Python

Various types including str, int, float, list, dict, set, bool, bytes, and NoneType, each serving different purposes.

Classes

contain 3 types: static, class, and instance

Truthiness

The evaluation of values in conditional statements, where certain values equate to true or false.

Central Tendencies

represents the center point of “typical” value of a dataset. As a rule, we replace null values with the mean when the data is normally distributed and replace null values with the median when the data is skewed.

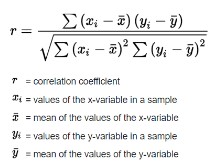

Correlation Coefficient

A statistical measure indicating the strength and direction of a relationship between two variables, ranging from -1 to 1. also it looks like this

Random Variables

x, is a variable where the possible outcomes are a

function of a random phenomena. The probability for any event is between 0 and 1, inclusive. The summation of the probabilities of each outcome equals 1

Random State

A method to generate pseudo-random numbers in computing.

Central Limit Theorem

A statistical principle stating that sample means will be normally distributed regardless of the population's distribution, given a large enough sample size.

Discrete Data

Finite and countable data values.

Continuous Data

Infinite data values that can take any numeric value.

Law of Large Numbers

as the sample size increases the sample mean gets closer to the population mean

Scales of Measurement

Different levels of data categorization including nominal, ordinal, interval, and ratio, each with unique properties.

Stdin and Stdout

Standard input and output streams for data processing in programming.

Data Cleaning

The process of correcting errors and inconsistencies in data to improve quality.

Preprocessing

cleaning up all null values, data cleaning (dashes, odd characters, handle missing and extreme outliers). One hot encoding, convert categorical to numerical sometimes its the same as onehotencod. Standardization/Normalization, deal with multicollinearity which can be cause by 3

Machine Learning

is a branch of artificial intelligence where algorithms use data to improve automatically through experience, without explicit programming. These algorithms identify patterns in large datasets, encompassing numbers, words, images, and more, enabling predictions or decisions. ML allows systems to adapt to new data without human intervention.

Supervised Learning

A type of machine learning using labeled training data to predict outcomes of classification or categorization using discrete values

Unsupervised Learning

A type of machine learning that does not use labeled data, focusing on finding patterns clustering from discrete values

Cross-Validation

testing performance of a machine learning model by training a model using the subset of the data and test the performance using a different subset with or without replacement.

Overfitting

problem that occurs when the model cannot make generalizations and fits too closely to the training data

Underfitting

problem that occurs when the model is overgeneralized.

Feature Selection

The process of selecting a subset of relevant features for model training to improve performance and reduce complexity.

KNN (K-Nearest Neighbors)

predicts the group of a datapoint based on majority “votes” from

nearest neighbors K represents the hyperparameter which

indicates how many data points any new datapoint must listen to in order to decide what class it is in

Linear Regression

is to model and predict the relationship between independent and dependent variables. Univariate linear regression predicts a

dependent variable from ONE independent variable whereas multiple linear regression predicts a dependent variable from MULTIPLE independent variables :y = mx + b

Multiple Regression

predicts a dependent variable from MULTIPLE

independent variables. “Multivariate” means the result is a vector. We look at correlations, we compare the R² values before and after a feature is added, and sklearn.feature_selection has many functions to assist with feature selection. * y = m1x1 + m2x2 + m3x3 + ... + b

Logistic Regression

Used when trying to predict the answer to a yes/no question or any binary question, response follows a S shaped curve.

Confusion Matrix

A table used to evaluate the performance of a classification algorithm by comparing predicted and actual outcomes.

Accuracy

number of correct predictions/ total predictions

Precision

true positive results / total predicted positives, indicating the accuracy of positive predictions.

Recall

The ability of a classifier to identify all relevant instances, measuring the proportion of true positives among actual positives. : tp/tp +fn

Relational Databases

Databases that use tables to store and manage structured data.

Cloud Databases

Databases that reside on cloud computing platforms, allowing for flexible data storage and access.

Distributed Databases

Databases that consist of data stored across multiple locations or sites.

Object-Oriented Databases

Databases designed to handle complex data types and relationships efficiently.

NoSQL Databases

Non-relational databases that allow for stattistacal analysis

What are the 4 scales of measurement

Nominal, Ordinal, Interval, and Ratio

What is Nominal

Categories that do not have a natural order Ex. blood type, zipcode, race

Ordinal

categories where order matters but the difference between them is neither clear nor even. Ex. satisfaction scores, happiness level from 1-10

Interval

There is an order and the difference between two values is meaningful. Ex. Temp(Cand F), credit scores, pH

Ratio

The same as interval except it has a concept of 0. There are no negative numbers Ex. concentration, Kelvin, weight

gitignore files

untracked files that are files that have been created within your repo's working directory but have not yet been added to the repository's tracking index using the git add command. Most files .File are hidden by defalut

Text types

str

Numeric Types

int, float, complex

Sequence

list, tupule, range

Mapping

dict

Set types

set, frozensets

Boolean type

bool

Binary types

bytes, bytearray, memoryview

NoneType

data that does not fit into any of these categories

Supervised and Unsupervised greatest difference

The biggest difference between supervised and unsupervised machine learning is the type of data used. Supervised learning uses labeled training data, and unsupervised learning does not. More simply, supervised learning models have a baseline understanding of what the correct output values should be.

How does KNN evaluate its performance

via accuracy

Multiple regression evaluation of performance

Use mean squared error and R² (R-squared) to validate model performance. *really, this depends on the shape of the data. There are other statistical models and tests like ANOVA that we won't discuss here

How to evaluate performance of Linear regression

a method we can use to understand the relationship between one or more predictor variables (Xi) and a response variable (Y). R Square/Adjusted R Square.:Mean Square Error(MSE)/Root Mean Square Error(RMSE), Mean Absolute Error(MAE), illustrate Residual of model as a normal distribution ( bell shape), By OLS from statemodels.formula.

complete separation

happens when the outcome variable separates a predictor variable or a combination of predictor variables completely.

class method

can modify class state and cant modify object state. Its used for factory functions

Static Method

cant access class state and cant access object state. Used for utility functions.

Instance method

can modify class srtate and can modify object state

What are factory functions

separate the process of creating an object from the code that depends on the interface of the object

Utility functions

handle logic and checks(see if the person on the website is over 18, see if inputs meet particular requirements

Local Scope

only available to other code in this scope. A function, for example, only has access to the names defined in that function or passed into it via arguments.

Eclosing- scope

only exists for nested functions. Inner nests can have access to the names in outer nests

Global- Scope

vailable to all your code and can pass through modules, classes, etc

Built-in*Scope

all names that are created by Python when you run a script

Uniform Distribution

or a random variable, expected value is (a+b)/2, where a is the maximum possible value and b is the minimum

Binomial distribution

mean is the expected value, which is equal to n trials

* p probability

Standard normal distribution

mean is the expected value

Structured Databases

data that has no inherent structure and is usally stored as different types of files. Ex. text docs, Pdfs, images, and videos

Quasi-Structured

Textual data with erratic formats that can be formatted with effort and software tools Ex. clickstream data

Semi-structured

textual data files with an apparent patern enabling analysis Ex. Spreadsheets and XML files

Structured

Data having defined data model, format, and structure. Ex. database