Cognitive and Affective


Multi-store architecture of memory

The multi-store model of memory, also called the modal model, was introduced by Richard Atkinson and Richard Shiffrin in 1968. This influential framework conceptualizes memory as a system composed of three distinct structural components: sensory memory, short-term memory (STM), and long-term memory (LTM). These components are connected through a series of control processes that actively manipulate and transfer information through the system.

Sensory memory is the initial stage in the memory system. It holds all incoming sensory information for a very brief period, typically a fraction of a second. This allows us to briefly retain an impression of a stimulus even after it has disappeared, a phenomenon known as the persistence of vision. A key demonstration of sensory memory comes from Sperling’s (1960) experiments, which showed that:

People could briefly take in a large amount of visual information (iconic memory), but the memory faded rapidly, usually within one second.

When an auditory cue was given immediately (partial report), participants could recall a much larger proportion of the display than when asked to recall all items (whole report) or when the cue was delayed.

There are different types of sensory memory:

Iconic memory (visual): e.g., briefly seeing letters on a screen.

Echoic memory (auditory): e.g., realizing what someone said just after saying "What?"

Short-term memory holds a limited amount of information for a short duration, typically 15–20 seconds, unless the information is actively maintained through rehearsal (repeating it over and over). It is the site of conscious processing and immediate awareness. STM has a limited capacity, estimated at between 4 and 9 items, though this can be extended through chunking: grouping individual items into meaningful units (Miller, 1956). For example, a phone number is easier to remember as "555-1996" than as "5-5-5-1-9-9-6." There is also an ongoing debate about whether STM capacity should be measured by the number of items or by the amount of detail stored. Over time, the concept of STM evolved into working memory, proposed by Baddeley and Hitch (1974). Working memory expands on STM by emphasizing its role in manipulating information during complex tasks (e.g., remembering numbers while reading a paragraph), in addition to temporary storage. It consists of three main components:

Phonological loop: Holds verbal and auditory information (e.g., repeating a name in your head).

Includes the phonological store (holds sound-based information briefly) and the articulatory rehearsal process (keeps items in the phonological store from decaying).

Visuospatial sketchpad: Holds visual and spatial information (e.g., picturing a map).

Central executive: The “manager” that coordinates attention, pulls information from LTM, and directs the phonological loop and sketchpad. It manages competing tasks by focusing attention on relevant information and deciding how to divide attention between tasks.

Long-term memory is the system responsible for storing information over extended periods, from about 30 seconds to a lifetime. Unlike STM, which is limited in both duration and capacity, LTM can hold a vast amount of information, potentially for a lifetime. Information is transferred from STM into LTM through a process called encoding, and it is brought back into awareness when needed through a process called retrieval. LTM includes different types of memories: Episodic memory: personal events and experiences. Semantic memory: facts and knowledge. Procedural memory: skills and actions (e.g., riding a bike).

Coding refers to the form in which stimuli are represented in the mind, and there are different ways to code them: visual coding (in the form of a visual image, e.g., remembering your fifth-grade teacher’s face), auditory coding (in the form of a sound, e.g., when you “play” a song in your head), and semantic coding (in terms of meaning, e.g., recalling the general plot of a novel you read last week).

Control processes are strategies used by individuals to regulate the flow and transformation of information between memory stores. These include:

Rehearsal: Keeping information active in STM through repetition.

Maintenance rehearsal: Repetition without attaching meaning — less effective for LTM storage.

Elaborative rehearsal: Repetition that links new information to prior knowledge or meaning; much more effective for encoding into LTM.

Selective attention: Focusing on relevant incoming stimuli to allow entry into STM.

Mnemonics or association strategies: Making a stimulus more meaningful to enhance encoding.

Although the model divides memory into separate components, these components actually interact and share mechanisms. For example:

A phone number is first registered in sensory memory.

If attention is directed to it, it enters STM, where you might repeat it to keep it active.

If you use elaborative rehearsal (e.g., associating the number with a birth year), it becomes encoded into LTM.

Later, when you want to recall the number, it is retrieved from LTM back into STM so that you can use it again.
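The phone-number walk-through above can be expressed as a toy model of information moving between stores. This is a minimal Python sketch for illustration, not part of the Atkinson and Shiffrin model itself: the class and method names are hypothetical, the STM capacity of 7 is an assumption picked from the 4 to 9 item estimate, and displacing the oldest item when STM overflows is a simplifying assumption.

```python
from dataclasses import dataclass, field


@dataclass
class MemorySystem:
    """Toy sketch of the multi-store flow; all names here are hypothetical."""

    stm_capacity: int = 7  # assumed capacity, within the 4-9 item estimate
    sensory: list[str] = field(default_factory=list)
    stm: list[str] = field(default_factory=list)
    ltm: set[str] = field(default_factory=set)

    def _hold_in_stm(self, item: str) -> None:
        # Assumption: when STM is over capacity, the oldest item is displaced.
        if item not in self.stm:
            self.stm.append(item)
        if len(self.stm) > self.stm_capacity:
            self.stm.pop(0)

    def register(self, stimulus: str) -> None:
        # Every incoming stimulus is briefly registered in sensory memory.
        self.sensory.append(stimulus)

    def attend(self, stimulus: str) -> None:
        # Selective attention moves an item from sensory memory into STM.
        if stimulus in self.sensory:
            self.sensory.remove(stimulus)
            self._hold_in_stm(stimulus)

    def elaborate(self, item: str) -> None:
        # Elaborative rehearsal encodes an item currently in STM into LTM.
        if item in self.stm:
            self.ltm.add(item)

    def retrieve(self, item: str) -> bool:
        # Retrieval copies an item from LTM back into STM so it can be used.
        if item in self.ltm:
            self._hold_in_stm(item)
            return True
        return False


mem = MemorySystem()
mem.register("555-1996")
mem.attend("555-1996")
mem.elaborate("555-1996")
mem.stm.clear()  # attention moves on and the STM copy is lost
print(mem.retrieve("555-1996"), mem.stm)  # True ['555-1996']
```

Without the `elaborate` step, clearing STM would leave nothing in LTM and `retrieve` would return `False`, which mirrors the claim that maintenance alone does not reliably get an item into LTM.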

Levels or Depths of Processing

The Levels of Processing Theory proposed by Craik and Lockhart (1972) holds that retrieval from LTM is affected by how items are encoded. According to this theory, memory depends on the depth of processing that an item receives, which ranges from shallow processing to deep processing.

Shallow processing involves little attention to meaning (e.g., when a phone number is repeated over and over). In contrast, deep processing involves close attention and elaborative rehearsal that focuses on an item’s meaning and its relationship to something else. Craik and Endel Tulving (1975) presented words to participants and asked them three different types of questions to create different levels of processing: (1) questions about physical features (shallow processing); (2) questions about rhyming (deeper processing); and (3) fill-in-the-blank questions about whether the word fits a sentence (deepest processing). After participants answered these questions, they were given a memory test, which showed that deep processing results in better memory than shallow processing.

Paired-associate learning: Bower and Winzenz (1970) used a procedure called paired-associate learning, in which a list of word pairs is presented (e.g., boat-tree) and found that using visual imagery—generating images in your head to connect words visually—enhanced memory in comparison to just repeating the word pairs.

Self-reference effect: Memory is better if you are asked to relate a word to yourself.

Generation effect: Participants either read pairs of words (read group) or generated the second word of each pair from the first word and the first two letters of the second word (generate group). Participants who had generated the second word in each pair reproduced 28% more word pairs than participants who had just read them.

Presenting material in an organized way: Participants spontaneously organize items as they recall them; for example, apple, plum, and cherry are recalled together, as are shoe, coat, and pants. Remembering words in a particular category may serve as a retrieval cue: a word or other stimulus that helps a person remember information stored in memory.

The Retrieval Practice Effect: Testing memory, that is, practicing retrieval, leads to better memory. When studying, use techniques that result in elaborative processing, and keep testing yourself even after the material is “learned,” because testing provides a way of elaborating the material (the testing effect).

Elaborative rehearsal: Repetition that links new information to prior knowledge or meaning; that is, thinking deeply about the meaning of the term to be memorized rather than simply repeating the word to yourself.

Chunking: the process of organizing information into a smaller number of meaningful units, or "chunks," to improve understanding, memory, and processing. For example, a phone number (555-123-4567) is easier to remember when broken into chunks (555, 123, 4567) than when recalled as a single string of ten digits.
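The regrouping in the phone-number example can be shown mechanically. In this short Python sketch, the helper name `chunk` is hypothetical and the default 3-3-4 grouping is an assumption matching the example number's format. It only shows how ten separate digits become three units; the memory benefit comes from holding fewer units, which fits more comfortably within the 4 to 9 item capacity estimate.

```python
def chunk(digits: str, sizes: tuple[int, ...] = (3, 3, 4)) -> list[str]:
    """Regroup a digit string into consecutive chunks of the given sizes."""
    if sum(sizes) != len(digits):
        raise ValueError("chunk sizes must add up to the number of digits")
    chunks, start = [], 0
    for size in sizes:
        chunks.append(digits[start:start + size])
        start += size
    return chunks


number = "5551234567"
print(len(number), "separate digits")  # 10 separate digits
print(chunk(number))                   # ['555', '123', '4567']
print(chunk("5551996", (3, 4)))        # ['555', '1996']
```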

Episodic versus semantic memory

Episodic memory (memory for experiences) and semantic memory (memory for facts) are considered two different types of memory because of (1) the type of experience associated with episodic and semantic memories, (2) how brain damage affects each one, and (3) the fMRI responses to each one.

Differences in Experience: Episodic memory involves mental time travel, the experience of traveling back in time to reconnect with events that happened in the past. It often includes remembering the emotions involved and reliving the event. Tulving described the experience of episodic memory as “self-knowing” or “remembering.” In contrast, semantic memory involves accessing knowledge about the world that does NOT have to be tied to a personal experience. When we use semantic memory, we are not traveling back to a specific event from our past; we are accessing things we are familiar with and know about (e.g., facts, vocabulary, numbers, and concepts). Tulving referred to this as “knowing.”

Neuropsychological Evidence: Neuropsychological case studies support the idea that episodic and semantic memory are distinct systems in the brain. KC, a man who suffered brain damage, lost his episodic memory (he cannot recall personal experiences or relive past events), but his semantic memory is intact. He knows factual information, such as the layout of his kitchen and definitions of terms, but cannot recall how or when he learned those facts. In contrast, LP, an Italian woman who had encephalitis, experienced the opposite pattern: she lost semantic memory, struggling to recognize words, famous people, or historical facts, but retained episodic memory, recalling daily events and personal experiences. These cases demonstrate a double dissociation: damage can impair one type of memory while sparing the other, suggesting separate neural mechanisms for episodic and semantic memory.

fMRI/brain imaging studies: Interpreting neuropsychological cases is complex because of individual differences in brain damage and testing methods. Findings from brain-damaged patients are therefore often supplemented with brain imaging studies using fMRI to further understand how these memory systems function. fMRI results indicate major differences in brain activation between episodic and semantic memories; however, there is also considerable overlap, reflecting how the two systems interact.

Implicit Learning

Long-term memory can be divided into explicit memory and implicit memory. Explicit memories are memories we are consciously aware of, and there are two types: episodic (personal events) and semantic (facts, knowledge). Implicit memory occurs when learning from experience is not accompanied by conscious remembering. Procedural memory, priming, and classical conditioning involve implicit memory.

Procedural memory is memory for physical actions. It is also called skill memory because it is memory for doing things that usually involve learned skills. It has been studied in patients with amnesia, who can learn new skills even though they don’t remember any of the practice that led to the learning. The main benefit of procedural memories is that they enable us to carry out skilled acts without thinking about what we are doing. Expert-induced amnesia happens when someone is so skilled at a task that they perform it automatically, without consciously thinking about each step. Because the task doesn’t require focused attention, experts often can’t explain exactly what they did afterward; it’s as if they don’t remember doing it. Testing of a brain-damaged woman provides evidence of a connection between procedural memory and semantic memories related to motor skills: although she had lost most of her knowledge of the world, she could still answer questions about things that involved procedural memory.

Priming occurs when the presentation of one stimulus (the priming stimulus) changes the way a person responds to another stimulus (the test stimulus). One type of priming, repetition priming, occurs when the test stimulus is the same as or resembles the priming stimulus. For example, seeing the word bird may cause you to respond more quickly to a later presentation of the word bird than to a word you have not seen, even though you may not remember seeing bird earlier. Repetition priming is considered implicit memory because the priming effect can occur even when participants do not remember the original presentation of the priming stimuli. Priming is not just a laboratory phenomenon; it also occurs in real life, as in the propaganda effect, in which people are more likely to rate statements they have read or heard before as true simply because they have been exposed to them before. The propaganda effect involves implicit memory because it can operate even when people are not aware that they have heard or seen a statement before, and even when they thought it was false when they first heard it. This is related to the illusory truth effect.

Classical conditioning occurs when a neutral stimulus is paired with a stimulus that elicits a response, so that the neutral stimulus comes to elicit the response. Classically conditioned emotions occur in everyday experience, but they illustrate implicit memory only when a person is unaware of what is causing the conditioned response. For example, you meet someone who seems familiar, but you can’t remember how you know him or her. Have you ever had this experience and also felt positively or negatively about the person without knowing why? If so, your emotional reaction was an example of implicit memory.

What are major sources of forgetting from long-term memory?

Ebbinghaus was interested in determining the nature of memory and forgetting from LTM, specifically how rapidly learned information is lost over time. He studied how quickly we forget by memorizing lists of nonsense syllables and measuring how long it took to relearn them after a delay. He calculated “savings” as the difference between the original learning time and the relearning time. The decrease in savings (remembering) with increasing delays, shown visually by the savings curve, indicates that forgetting occurs rapidly over the first 2 days and more slowly after that. Forgetting from LTM can also be useful: it reduces access to painful memories, gets rid of outdated information to improve decision-making, and allows us to understand the whole picture rather than very specific details. Theories of forgetting include: Dude I Remember My Cute Clinician (Decay, Interference, Repression, Motivated forgetting, Cue-dependent forgetting, Consolidation and reconsolidation).
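The savings measure is simple arithmetic. The Python sketch below computes it both as the raw difference described above and as a percentage of the original learning time, a common way of expressing savings so that lists of different difficulty can be compared. The function names and the timings are invented for illustration and are not Ebbinghaus's data.

```python
def savings(original_time: float, relearning_time: float) -> float:
    """Savings: how much less time relearning takes than original learning."""
    return original_time - relearning_time


def savings_percent(original_time: float, relearning_time: float) -> float:
    """Savings as a percentage of the original learning time."""
    return 100 * savings(original_time, relearning_time) / original_time


# Hypothetical timings in seconds, not Ebbinghaus's data.
original, relearn = 1000, 400
print(f"Savings: {savings(original, relearn)} s "
      f"({savings_percent(original, relearn):.1f}%)")  # Savings: 600 s (60.0%)
```

A longer delay would mean a longer relearning time and therefore a smaller savings value; plotting savings against delay gives the savings curve.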

Decay. Forgetting occurs because the physical or chemical traces of a memory in the brain naturally deteriorate over time if they are not accessed or reinforced. Decay, which is thought to occur through processes within the memory traces themselves, is often ignored by theorists. Example: You forget the details of a dream you had last week because you never thought about it again after waking up.

Interference.

Proactive: forgetting that results from the disruption of memory by previous learning. This occurs when there is competition between the correct response and one or more incorrect ones, and competition is greater when the incorrect responses are associated with the same stimulus as the correct response. A major cause of proactive interference is that it is hard to exclude incorrect responses from the retrieval process. Example: Difficulty remembering a friend's new phone number after having previously learned the old number.

Retroactive: forgetting that involves disruption of memory for what was previously learned (old memory) by NEW learning or processing that resembles the previous learning. Example: After learning the names of your new coworkers, you struggle to remember the names of coworkers from your previous job.

Motivated forgetting.

Repression: Originally proposed by Freud, repression is the idea that threatening or traumatic memories often cannot gain access to conscious awareness. Such memories can be kept hidden both through active, intentional processes and automatically. Repressing memories reduces conflict within the individual.

Directed forgetting: a phenomenon involving impaired long-term memory triggered by instructions to forget information that has been presented for learning. Typically, several items are presented, each with an instruction to forget or remember it. For example, in an experiment you are told to remember a list of words but are then told to forget some of them; you are more likely to recall the to-be-remembered words than the to-be-forgotten words.

Think/No-Think suppression: intentional attempts to suppress specific memories by avoiding thinking about them, often using substitution or direct suppression. Substitution involves associating a different, non-target word with each cue word, whereas direct suppression involves focusing on the cue word while blocking out the associated target word. Suppression instructions trigger inhibitory processes, mental mechanisms that help keep unwanted thoughts out of awareness and restrict the formation of new long-term memories. Example: When shown the word “pain” and trying not to think about a past injury, you instead think about “sunshine” to block the painful memory.

Cue-dependent forgetting: forgetting is due to the weak overlap between relevant memory traces and information available at retrieval. Forgetting occurs because the cues present at the time of learning are not available at retrieval. When we store information about an event, we also store information about the context of the event. According to the encoding specificity principle, memory is better when the retrieval context (internal or external) is the same as that at learning. Example: You study for an exam in a quiet room, but during the noisy exam, you struggle to recall the information.

Consolidation and reconsolidation.

Consolidation. This long-lasting physiological process fixes information in long-term memory; recently formed memories that are still being consolidated are especially vulnerable to interference and forgetting. This theory aims to explain why the rate of forgetting decreases over time. The hippocampus is thought to play a key role in consolidating memories, which are ultimately stored in the neocortex. Evidence comes from people with retrograde amnesia, in whom the most impaired memories are those formed closest to the event that caused the amnesia, with less forgetting for memories formed further back in time.

Reconsolidation. Reactivating a previously consolidated memory trace puts it back into a fragile state, and this fragility allows the trace to be updated and altered. Example: You recall a story you heard last year, but after someone adds new (inaccurate) details, your memory updates to include them, even if they’re wrong.

Classical Conditioning vs Operant Conditioning

Classical Conditioning: Classical conditioning was described by Pavlov as a learning process involving reflexes mediated by the nervous system (e.g., falling down and stopping oneself). In classical conditioning, an unconditioned stimulus (US), a stimulus that automatically elicits a response (e.g., food), elicits an unconditioned response (UR; e.g., salivating). When a neutral stimulus (NS) is paired with the US, it becomes a conditioned stimulus (CS) once it comes to elicit a response similar to the UR; this learned response is termed the conditioned response (CR). The CS must occur before the US, because the CS provides information about the upcoming availability of the US. The CR often mimics the UR, but the two may differ.

The Little Albert experiment was conducted by behaviorist John B. Watson, with Rosalie Rayner, to demonstrate how emotions like fear can be learned through classical conditioning. They started with a baby named Albert, who initially showed no fear of a white rat. They then paired the rat (a neutral stimulus) with a loud, frightening noise (an unconditioned stimulus) that naturally made Albert cry (the unconditioned response). After several pairings, Albert began to cry at the mere sight of the rat, even when no noise was made. At that point, the white rat had become a conditioned stimulus, and the crying a conditioned response. Eventually, Albert also showed fear toward other white, fluffy objects; this is called stimulus generalization, in which the learned response spreads to things that resemble the original stimulus. Generalization can be reduced through discrimination training, which involves presenting the similar stimuli (like a different bell or a white toy) without the unconditioned stimulus (no loud noise or no food). Over time, the person or animal learns that these similar stimuli do not predict the frightening or rewarding outcome, and the response to them fades.

Operant Conditioning: Operant conditioning is a learning process named and extensively studied by B. F. Skinner. It refers to how the consequences of a behavior influence the likelihood of that behavior occurring again in the future. Unlike classical conditioning, where a stimulus automatically elicits a response, operant conditioning involves non-reflexive behaviors that are emitted by an organism and have an effect on the environment.

In operant conditioning, behaviors followed by favorable consequences (such as social approval) are more likely to increase in frequency, while those followed by unfavorable consequences (such as social rejection) tend to decrease. These consequences are known as reinforcers or punishers. A reinforcer is any stimulus that increases the frequency of a behavior, and reinforcement can be positive or negative. Positive reinforcement means adding a pleasant or rewarding stimulus after a behavior, such as food or social approval, while negative reinforcement means removing an unpleasant stimulus, such as a shock. A punisher is a stimulus that follows a response and decreases the subsequent frequency of that response. Reinforcers can be categorized as primary reinforcers, which fulfill biological needs (e.g., food, water), or secondary reinforcers, which gain their value through association with primary reinforcers (e.g., money used to buy food or water).

Skinner argued that much of human behavior—whether conscious or unconscious—is shaped by past reinforcement. For example, tipping a waitress to receive better service is a conscious operant response, while gravitating toward sugary foods because of the pleasurable sugar high may be more automatic.

Operant responses can also be shaped through a process called successive approximation. This involves reinforcing closer and closer versions of the desired behavior until the full behavior is achieved—commonly seen in animal training.

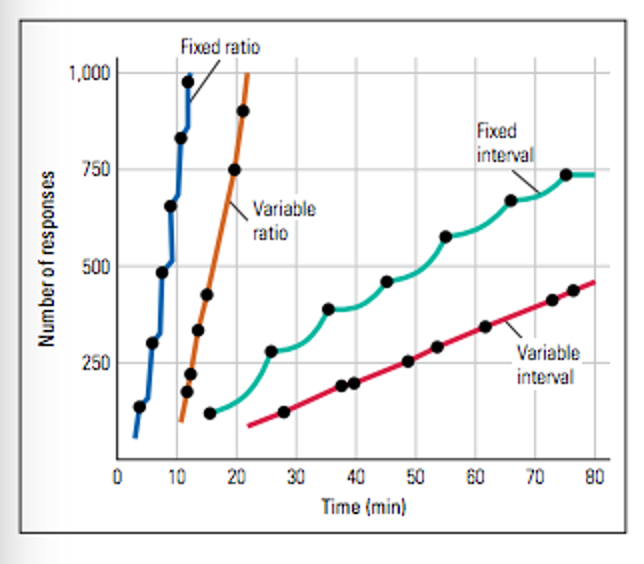

Reinforcement can be delivered in different ways:

Continuous reinforcement: the behavior is reinforced every time it occurs.

Partial reinforcement: the behavior is reinforced only some of the time, based on specific schedules:

Fixed ratio: reinforcement after a set number of responses.

Variable ratio: reinforcement after a variable number of responses.

Fixed interval: reinforcement after a fixed amount of time.

Variable interval: reinforcement after varying amounts of time.

Differences: Operant and classical conditioning are both forms of associative learning, but they differ in the way associations are established. In classical conditioning, learning occurs passively as a neutral stimulus becomes linked with an automatic, reflexive response—the stimulus triggers the behavior without conscious effort. In contrast, operant conditioning involves active learning, where voluntary behaviors are shaped by their consequences; the organism performs a behavior, and the environment responds with reinforcement or punishment.

Similarities: In operant conditioning, extinction occurs when reinforcement is absent and response rates decline. As in classical conditioning, the response is not truly unlearned but suppressed, and it can return through spontaneous recovery; even a single reinforcer can quickly restore responding.

Extinction/Fear Extinction

Fear extinction is defined as the decline in conditioned fear responses (CRs) following non-reinforced exposure to a feared conditioned stimulus (CS). Behavioral evidence suggests that extinction is best understood as a form of inhibitory learning. Rather than erasing the original fear memory, extinction involves learning a new association that suppresses or inhibits the expression of fear. This is evident in phenomena such as spontaneous recovery, where extinguished fear responses can resurface over time. The original learning remains intact; what changes is the addition of new learning that signals safety. Thus, extinction reflects not the loss of old learning, but the formation of a competing memory that can override, but not eliminate, the initial fear response.

Gray and Bjorklund (2014) describe this process as a part of exposure treatment, typically used with specific phobias. This treatment is utilized for fears that are conditioned (e.g., learned experience that led to the fear) or unconditioned (e.g., no experience with the stimulus).

Example:

Imagine a child gets bitten by a dog and develops a strong fear of dogs. Every time they see a dog, their heart races and they feel anxious. This is classical conditioning:

Dog → Bite → Fear

Later, the child begins exposure therapy, where they are gradually exposed to friendly dogs in safe, controlled situations—without being bitten. Over time, their fear decreases. This is extinction.

But weeks or months later, the child sees a dog in a different context (like at a park), and the fear returns. This return of fear shows that the original fear memory still exists: a return after time has passed is called spontaneous recovery, and a return triggered by a change of context is called renewal. The therapy didn’t erase the fear; it taught the brain something new:

Dog → No bite → Safety

This new learning inhibits the fear response but doesn’t remove the original memory. In this way, fear extinction is a form of inhibitory learning: the brain forms a new “safe” memory that competes with the old “danger” memory, helping to regulate fear responses over time.

In classical conditioning, extinguishing the CR requires presenting the CS repeatedly without the US (e.g., presenting the bell without the food until the bell no longer elicits salivation). Once the CS no longer signals the US, the CR ceases. Research suggests that the CS-US association is not lost during extinction but rather inhibited: the CR can reappear when the CS is presented again after time has passed (spontaneous recovery). In operant conditioning, an operant response declines in rate and eventually disappears if it is no longer reinforced; extinction refers both to this withholding of reinforcement and to the resulting decline in response rate. However, just as in classical conditioning, spontaneous recovery can happen, and a single reinforced response following extinction can lead the individual to respond again at a rapid rate.

Different schedules of reinforcement

Schedules of reinforcement apply to operant conditioning, in which an organism learns that the consequence of a response influences the future production of that response. Reinforcement schedules are the different patterns in which reinforcement is given to an organism. Ratio schedules give reinforcement after a certain number of responses, and interval schedules give reinforcement after a certain amount of time has passed. Behavior trained on a variable-ratio or variable-interval schedule is much harder to extinguish than behavior trained on continuous reinforcement, because the organism has learned to keep responding through long stretches without reinforcement. For example, if a rat trained on a continuous reinforcement schedule is moved to an extinction condition, it will press the lever a few times and then quit. But if the rat is shifted gradually from continuous reinforcement to a variable schedule and finally to extinction, it will make hundreds of unreinforced responses before quitting. Gambling relies on hooking people with a variable-ratio schedule of payoff.

Fixed-ratio -> a reinforcer occurs after every nth response, where n is some whole number greater than 1. (E.g., in a fixed-ratio 5 schedule every fifth response is reinforced)

Variable-ratio -> A reinforcer occurs after an unpredictable number of responses that varies around some average. E.g., in a variable-ratio 5 schedule, reinforcement might come after 7 responses on one trial, after 3 on another, and so on, in a random manner, but the average number of responses required for reinforcement would be 5.

Fixed-interval -> A fixed period of time must elapse between one reinforced response and the next. Any response occurring before that time elapses is not reinforced (e.g., in a fixed-interval 30-second schedule, only the first response that occurs at least 30 seconds after the last reinforcement is rewarded).

Variable-interval -> As in a fixed-interval schedule, the first response after an interval has elapsed is reinforced, but the length of the interval varies unpredictably around an average. E.g., in a variable-interval 30-second schedule, the average period that must elapse before the next response is reinforced is 30 seconds, but the exact time varies from trial to trial.
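The four schedule definitions above are decision rules: given a response, should it be reinforced? The Python sketch below encodes each rule as a small class with a `respond(t)` method, where `t` is the time of the response in seconds. The class names are hypothetical, the session clock is assumed to start at 0, and drawing the variable requirements uniformly so that they average the nominal value is an assumption; real experiments may use other distributions.

```python
import random


class FixedRatio:
    """FR n: every n-th response is reinforced."""

    def __init__(self, n: int):
        self.n, self.count = n, 0

    def respond(self, t: float) -> bool:
        # t is unused; ratio schedules only count responses.
        self.count += 1
        if self.count == self.n:
            self.count = 0
            return True
        return False


class VariableRatio:
    """VR n: reinforced after an unpredictable number of responses averaging n."""

    def __init__(self, n: int, rng: random.Random):
        self.n, self.rng, self.count = n, rng, 0
        self.required = self._draw()

    def _draw(self) -> int:
        # Assumption: requirement drawn uniformly from 1..2n-1, so it averages n.
        return self.rng.randint(1, 2 * self.n - 1)

    def respond(self, t: float) -> bool:
        self.count += 1
        if self.count >= self.required:
            self.count, self.required = 0, self._draw()
            return True
        return False


class FixedInterval:
    """FI: the first response at least `interval` s after the last reinforcer."""

    def __init__(self, interval: float):
        self.interval, self.last = interval, 0.0  # assumed clock starts at 0

    def respond(self, t: float) -> bool:
        if t - self.last >= self.interval:
            self.last = t
            return True
        return False  # responses before the interval elapses go unreinforced


class VariableInterval:
    """VI: like FI, but each required wait varies around an average."""

    def __init__(self, mean: float, rng: random.Random):
        self.mean, self.rng, self.last = mean, rng, 0.0
        self.wait = self._draw()

    def _draw(self) -> float:
        # Assumption: waits drawn uniformly from 0..2*mean, so they average `mean`.
        return self.rng.uniform(0, 2 * self.mean)

    def respond(self, t: float) -> bool:
        if t - self.last >= self.wait:
            self.last, self.wait = t, self._draw()
            return True
        return False


rng = random.Random(0)
schedules = {"FR 5": FixedRatio(5), "VR 5": VariableRatio(5, rng),
             "FI 30 s": FixedInterval(30), "VI 30 s": VariableInterval(30, rng)}
for name, schedule in schedules.items():
    # A hypothetical subject that responds once per second for two minutes.
    reinforcers = sum(schedule.respond(float(t)) for t in range(1, 121))
    print(f"{name}: {reinforcers} reinforcers for 120 responses")
```

Note that on the ratio schedules, responding faster earns more reinforcers, while on the interval schedules extra responses before the interval elapses earn nothing, which is the key practical difference between the two families.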

Evidence that classical conditioning depends on predictive value of CS (Conditioned Stimulus)

While Pavlov posited a behaviorist theory of classical conditioning, some researchers argue for a stimulus-stimulus theory that is more cognitive in nature. More specifically, expectancy theory explains why a conditioned response can look very different from an unconditioned response. For example, in addition to salivating, a conditioned dog may wag its tail and beg for food, likely because it expects the food; organisms naturally seek relationships between events and stimuli. These additional responses were not originally elicited by the unconditioned stimulus, so some other explanation is needed. This theory is supported in several ways.

Conditioning is most effective when the conditioned stimulus (bell) comes immediately before the unconditioned stimulus (food); conditioning does not occur, or is very weak, if the two are presented simultaneously or if the CS follows the US. This is likely because the organism is trying to predict events, and a stimulus that does not precede the unconditioned stimulus (e.g., food) is useless for predicting it, so the association is not made.

The conditioned stimulus (bell) must signal a heightened probability that the unconditioned stimulus (food) will occur. This means conditioning depends both on the number of CS-US pairings and on the number of times the stimuli occur without being paired: pairings strengthen conditioning, and unpaired occurrences weaken it. These probabilities appear to be weighed against each other, and a conditioned response develops only if the US is more likely to occur after the CS than in its absence.
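The probability comparison above can be written as a difference between two conditional probabilities: P(US | CS) minus P(US | no CS). The Python sketch below is a simplified illustration under assumptions: the session is split into trials with and without the bell, the function names are hypothetical, and treating any positive difference as sufficient for conditioning simplifies the idea in the notes rather than modeling how strong the conditioning becomes. Exact fractions are used so the printed values are not affected by floating-point rounding.

```python
from fractions import Fraction


def contingency(us_with_cs: int, cs_trials: int,
                us_without_cs: int, no_cs_trials: int) -> Fraction:
    """P(US | CS) minus P(US | no CS), computed from trial counts."""
    p_us_given_cs = Fraction(us_with_cs, cs_trials)
    p_us_given_no_cs = Fraction(us_without_cs, no_cs_trials)
    return p_us_given_cs - p_us_given_no_cs


def predicts_us(*counts: int) -> bool:
    # Conditioning is expected only if the US is more likely after the CS.
    return contingency(*counts) > 0


# Both cases have the same 8 bell-food pairings in 10 bell trials.
print(contingency(8, 10, 1, 10), predicts_us(8, 10, 1, 10))  # 7/10 True
print(contingency(8, 10, 8, 10), predicts_us(8, 10, 8, 10))  # 0 False
```

The two cases have identical numbers of pairings; only the frequency of food without the bell differs, which is why counting pairings alone cannot predict whether conditioning occurs.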

Finally, conditioning is ineffective when the animal already has a good predictor. In other words, if one conditioned stimulus already reliably comes before an unconditioned stimulus, a new stimulus presented simultaneously with the original conditioned stimulus generally does not become a conditioned stimulus. Even if presented multiple times, the new stimulus will not produce a conditioned response on its own; this is called the blocking effect. This is likely because the organism can already predict the unconditioned stimulus and has no need for a new predictor. Blocking can be overcome only when the unconditioned stimulus is larger in magnitude or different from the original (e.g., much larger and fresher dog food), so that the new stimulus provides new information for prediction.
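The notes explain blocking in terms of prediction. One standard formalization of that idea is the Rescorla-Wagner model, which these notes do not discuss; it is swapped in here purely as an illustration. In the model, each stimulus present on a trial gains associative strength in proportion to the prediction error, the gap between the US that actually occurs and the US already predicted by all stimuli present. The Python sketch below assumes a learning rate of 0.3, 20 trials per phase, and hypothetical stimulus names ("light" as the established CS, "tone" as the new one).

```python
def rescorla_wagner(trials: list[tuple[list[str], float]],
                    rate: float = 0.3) -> dict[str, float]:
    """Associative strength V of each CS after a sequence of trials.

    Each trial is (CSs present, US magnitude). On every trial, each present
    CS changes by rate * (US magnitude - summed V of all present CSs).
    """
    v: dict[str, float] = {}
    for present, us_magnitude in trials:
        prediction_error = us_magnitude - sum(v.get(cs, 0.0) for cs in present)
        for cs in present:
            v[cs] = v.get(cs, 0.0) + rate * prediction_error
    return v


pretrain = [(["light"], 1.0)] * 20  # light alone reliably predicts food
blocked = rescorla_wagner(pretrain + [(["light", "tone"], 1.0)] * 20)
unblocked = rescorla_wagner(pretrain + [(["light", "tone"], 2.0)] * 20)
control = rescorla_wagner([(["light", "tone"], 1.0)] * 20)
for name, v in [("blocked", blocked), ("larger US", unblocked),
                ("no pretraining", control)]:
    print(f"{name}: V(tone) = {v['tone']:.2f}")
```

In this sketch, the tone gains almost no strength after the light has been pretrained, because the light already predicts the food and the prediction error is near zero. It gains substantial strength when the compound is paired with a larger US, matching the point that a bigger or different US overcomes blocking, and it gains strength normally when there is no pretraining.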

Discriminant stimulus

In operant conditioning (OC), a discriminative stimulus (SD) is an antecedent stimulus that signals that a specific response will be reinforced (or punished). It works this way because, in the past, that response was consistently reinforced (or punished) in the presence of the stimulus and not in its absence (e.g., during extinction). In other words, it tells the organism that a reinforcer is available and that a response should occur to obtain it. For example: Tone -> pigeon presses lever -> seeds. Here, the tone is the discriminative stimulus, signaling that a particular response is likely to be reinforced.

Subjects may have difficulty discriminating between closely related stimuli, which is the flip side of generalization. This is what makes discriminative stimuli useful for studying the sensory and conceptual abilities of animals and humans. For example, research with infants has shown that they respond differently, by increasing or decreasing their sucking behavior, to stories they heard in utero than to unfamiliar ones. This suggests that even before acquiring language, infants can detect differences between auditory stimuli. Pigeons, on the other hand, have been shown to respond equally to similar discriminative stimuli, such as pictures of slightly different trees. This shows that pigeons can generalize a concept (in this case, "tree") across diverse images. Such behavior indicates more complex cognitive processing than simple stimulus-response learning.

Preparedness in classical conditioning

Preparedness is the notion in classical conditioning that, for biological reasons, certain species more easily associate certain stimuli, responses, and reinforcers. It stems from biological preparedness: innate structures that ensure organisms are physically able to perform species-typical behaviors and are motivated to learn what they must do (e.g., babies' innate ability to suckle). For instance, preparedness holds that phobias related to survival threats are easier to induce than other kinds of fears because of humans' evolutionary past. Organisms learn quickly to fear environmental threats, which helps them survive and reproduce. Certain CS/US pairings are more adaptive for survival and are therefore formed more easily. For example, an aversion to something poisonous is very easily conditioned because poisoning threatens survival. If a person eats berries (initially a neutral stimulus) and then becomes sick (the unconditioned stimulus), they will likely develop a lasting avoidance of those berries. After this experience, the berries become a conditioned stimulus that triggers the conditioned response of avoidance, even though the person does not get sick again. The person may never eat those berries again.

In contrast to preparedness, the principle of equipotentiality in classical conditioning posits that the laws of learning remain the same regardless of the stimuli, reinforcers, or responses used. Equipotentiality proposes that all forms of associative learning, classical or operant, share the same underlying principle: the rate of learning does not depend on the particular combination of stimuli, reinforcers, or responses involved. In classical conditioning, any conditioned stimulus has equal potential to become associated with any unconditioned stimulus. In operant conditioning, any response can be strengthened by any reinforcer. Equipotentiality was important to behavior theorists because their goal was a general-process learning theory that applied equally across all conditions.

Punishment versus negative reinforcement

Punishment is the opposite of reinforcement. In punishment, the consequence of a behavior decreases the likelihood that the behavior will happen again. Punishment can be either positive or negative. Positive punishment adds an aversive stimulus to reduce a behavior. For example, a mild shock delivered to a rat after it presses a lever decreases lever pressing. Negative punishment removes a pleasurable stimulus to reduce a behavior, such as taking money away from an employee who is frequently late to discourage tardiness.

Reinforcement, on the other hand, is any consequence that increases the likelihood that a behavior will recur. Like punishment, reinforcement can be positive or negative. Positive reinforcement occurs when a desirable stimulus is added after a behavior, making that behavior more likely in the future. For instance, giving verbal praise to encourage good behavior is positive reinforcement. Negative reinforcement occurs when an aversive stimulus is removed following a behavior, which also increases the likelihood of that behavior. An example is putting on a seatbelt to stop the annoying dinging sound in a car. The removal of the dinging noise (an aversive stimulus) reinforces the behavior of buckling up.

In summary, the key difference between punishment and negative reinforcement lies in their effects on behavior. Punishment always decreases the target behavior, either by adding something unpleasant (positive punishment) or by removing something pleasant (negative punishment). Negative reinforcement always increases the target behavior by removing an unpleasant stimulus, such as putting on a seatbelt to stop the dinging sound.
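The two-way classification above can be summarized as a small lookup. Below is a minimal sketch in Python; the names `Stimulus`, `Effect`, and `classify` are hypothetical and chosen only for illustration. The label depends on just two facts: whether a stimulus is added or removed, and whether the behavior becomes more or less likely afterwards.

```python
from enum import Enum


class Stimulus(Enum):
    ADDED = "added"
    REMOVED = "removed"


class Effect(Enum):
    INCREASES = "increases"
    DECREASES = "decreases"


def classify(stimulus: Stimulus, effect: Effect) -> str:
    """Name the operant procedure from what happens to the stimulus
    and what happens to the behavior afterwards."""
    # "Positive" means something is added; "negative" means something is removed.
    sign = "positive" if stimulus is Stimulus.ADDED else "negative"
    # Reinforcement increases a behavior; punishment decreases it.
    kind = "reinforcement" if effect is Effect.INCREASES else "punishment"
    return f"{sign} {kind}"


# The card's examples:
print(classify(Stimulus.ADDED, Effect.DECREASES))    # → positive punishment (shock after lever press)
print(classify(Stimulus.REMOVED, Effect.DECREASES))  # → negative punishment (docking a late employee's pay)
print(classify(Stimulus.ADDED, Effect.INCREASES))    # → positive reinforcement (verbal praise)
print(classify(Stimulus.REMOVED, Effect.INCREASES))  # → negative reinforcement (seatbelt stops the dinging)
```

Keeping "added vs. removed" separate from "increases vs. decreases" mirrors the summary's point. "Negative" refers only to removal, so negative reinforcement and punishment never share an effect on behavior.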

Describe the Law of Effect

Thorndike’s Law of Effect states that behaviors followed by satisfying consequences are more likely to occur again in the same situation, while behaviors followed by discomforting consequences are less likely to be repeated. This principle was an early foundation for understanding how consequences influence behavior.

Thorndike's law of effect differs from classical conditioning in that his animals had some control over their environment and actions. Pavlov's dogs, by contrast, could only rely on stimuli to signal when food would come. Thorndike's cats obtained food through their own effort. He placed hungry cats inside puzzle boxes with food visible just outside. At first, the cats engaged in random behaviors (e.g., scratching at bars, pushing at the ceiling, digging at the floor, howling). Eventually, often by accident, they pressed the lever that opened the door to food and freedom. Thorndike repeated this process many times. In early trials, the cats made many useless movements before happening upon the one that released them from the puzzle box. On average, however, they escaped more quickly with each successive trial. This demonstrated that the behavior leading to a satisfying result (escaping the box and reaching the food) was strengthened.

Thorndike emphasized the importance of the situation in which the behavior occurred. This included all environmental cues, such as sights, sounds, and smells, and even the animal's internal state. These situational elements did not reflexively trigger the correct response; instead, they set the stage for many possible behaviors. Only the behavior that successfully opened the latch and led to food and freedom was reinforced. As a result, the next time the cat was placed in the same situation, it was more likely to perform the effective behavior again.

In summary, the Law of Effect explains how consequences shape behavior over time, and it laid the groundwork for later theories of learning, including operant conditioning.

Flashbulb Memory

Flashbulb memory refers to a person's memory for the circumstances surrounding shocking, emotionally charged events. Specifically, it is memory for how the person heard about the event, not memory for the event itself. For example, a flashbulb memory for 9/11 would be a memory of where a person was and what they were doing when they learned of the terrorist attack. Flashbulb memories thus give importance to moments that would otherwise be unexceptional and go unremembered. Compare an ordinary daily conversation with a receptionist to the conversation you were having with her at the moment you heard the news.

Brown and Kulik originally proposed that flashbulb memories form under highly emotional circumstances, are remembered for long periods of time, and are especially vivid and detailed. Their idea that flashbulb memories are like photographs was based on their finding that people could describe in some detail what they were doing when they heard about highly emotional events, such as the assassination of President John F. Kennedy. The problem with this procedure is that there was no way to determine whether the reported memories were accurate. The only way to check accuracy is to compare the person's memory with what actually happened, or with memory reports collected immediately after the event. This technique is called repeated recall. Repeated-recall research has found that people report memories of flashbulb events as especially vivid, yet these memories are often inaccurate or lacking in detail.

The idea that memory can be affected by what happens after an event is the basis of Ulric Neisser and coworkers' (1996) narrative rehearsal hypothesis. According to this hypothesis, we may remember events like those of 9/11 not because of a special mechanism but because we rehearse them after they occur (e.g., seeing pictures of the planes on TV, reading newspaper articles about 9/11).

Memories that are supposed to be flashbulb memories decay just like regular memories. In fact, many flashbulb memory researchers doubt that flashbulb memories differ much from regular memories. However, people's memories for flashbulb events remain more vivid than everyday memories, and people believe that flashbulb memories stay accurate while everyday memories do not. Thus, flashbulb memories are both special (vivid; likely to be remembered) and ordinary (may not be accurate) at the same time. People do remember them, even if inaccurately, whereas less noteworthy events are less likely to be remembered at all.

Schema