Chapter 9: Memory Management in Operating Systems

What is required for a program to run in memory?

A program must be brought from disk into memory and placed within a process.

What are the only storage units that the CPU can access directly?

Main memory and registers.

What does the memory unit see during operations?

Only a stream of addresses with read requests, or addresses plus data with write requests.

How does register access compare to main memory access in terms of speed?

Register access takes one CPU clock cycle or less, while a main-memory access can take many cycles, causing the processor to stall.

What component sits between main memory and CPU registers?

Cache.

Why is memory protection necessary?

To ensure that a process can access only those addresses in its address space.

How can memory protection be provided?

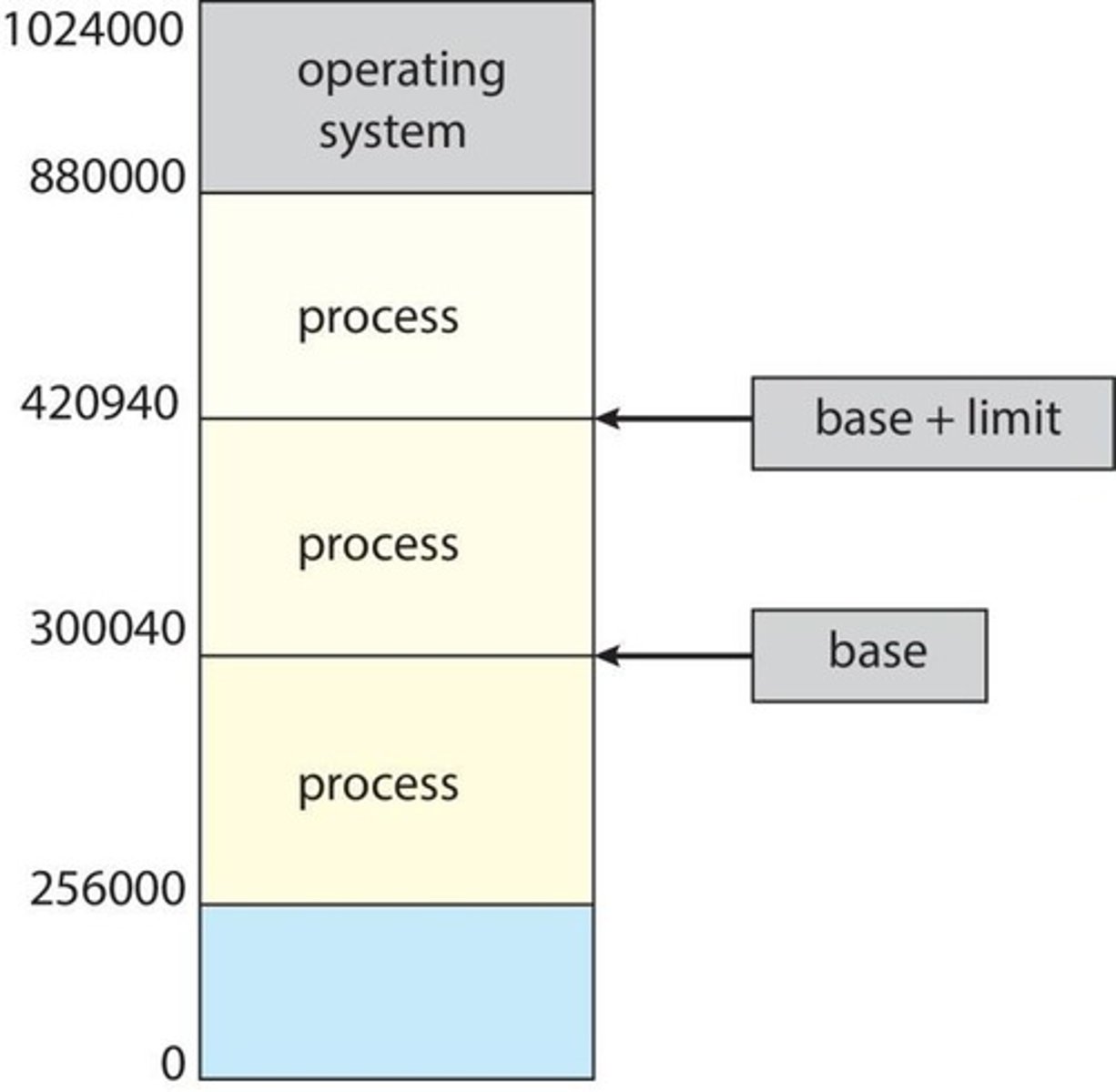

By using a pair of base and limit registers that define the logical address space of a process.

What must the CPU check for every memory access in user mode?

The CPU must check that every address generated in user mode is at or above the base and below base + limit for that user; any other access traps to the operating system.
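The check described above can be sketched in a few lines. This is only an illustration, assuming the limit register holds the size of the range, so that legal addresses run from `base` up to but not including `base + limit`. The function name and the register values are hypothetical.

```python
def is_legal_access(base: int, limit: int, address: int) -> bool:
    """Return True if `address` lies inside [base, base + limit)."""
    return base <= address < base + limit


# Hypothetical register values chosen for illustration.
BASE, LIMIT = 300040, 120900

print(is_legal_access(BASE, LIMIT, 300040))  # first legal address
print(is_legal_access(BASE, LIMIT, 420939))  # last legal address
print(is_legal_access(BASE, LIMIT, 420940))  # one past the end: hardware would trap
```

In real hardware this comparison is done on every user-mode access, which is why it has to be a register check rather than software.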

What type of instructions are privileged regarding memory protection?

Instructions for loading the base and limit registers.

What is the input queue in the context of address binding?

Programs on disk, ready to be brought into memory to execute.

What is a disadvantage of loading the first user process at physical address 0000?

Without relocation support, a program must be loaded at address 0000, and it is inconvenient for the first user process to always start at that physical address.

What are the different representations of addresses at various stages of a program's life?

Source code addresses are usually symbolic, compiled code addresses bind to relocatable addresses, and linkers or loaders bind relocatable addresses to absolute addresses.

What are the three stages of address binding for instructions and data?

Compile time, load time, and execution time.

What happens at compile time in address binding?

If the memory location is known a priori, absolute code can be generated; recompilation is necessary if the starting location changes.

What is generated at load time if the memory location is not known at compile time?

Relocatable code.

What occurs during execution time in address binding?

Binding is delayed until run time if the process can be moved during execution from one memory segment to another.

What hardware support is needed for address maps?

Base and limit registers.

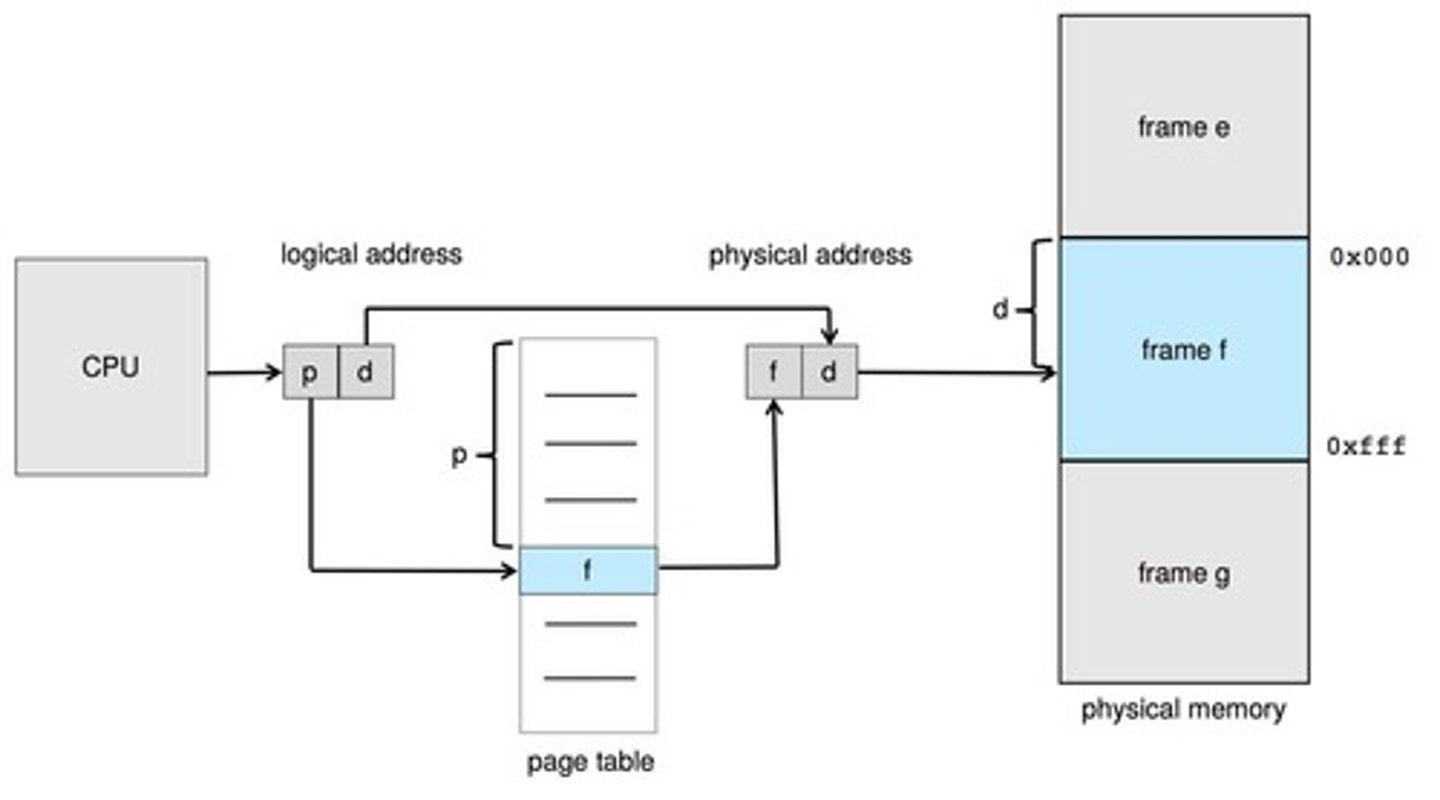

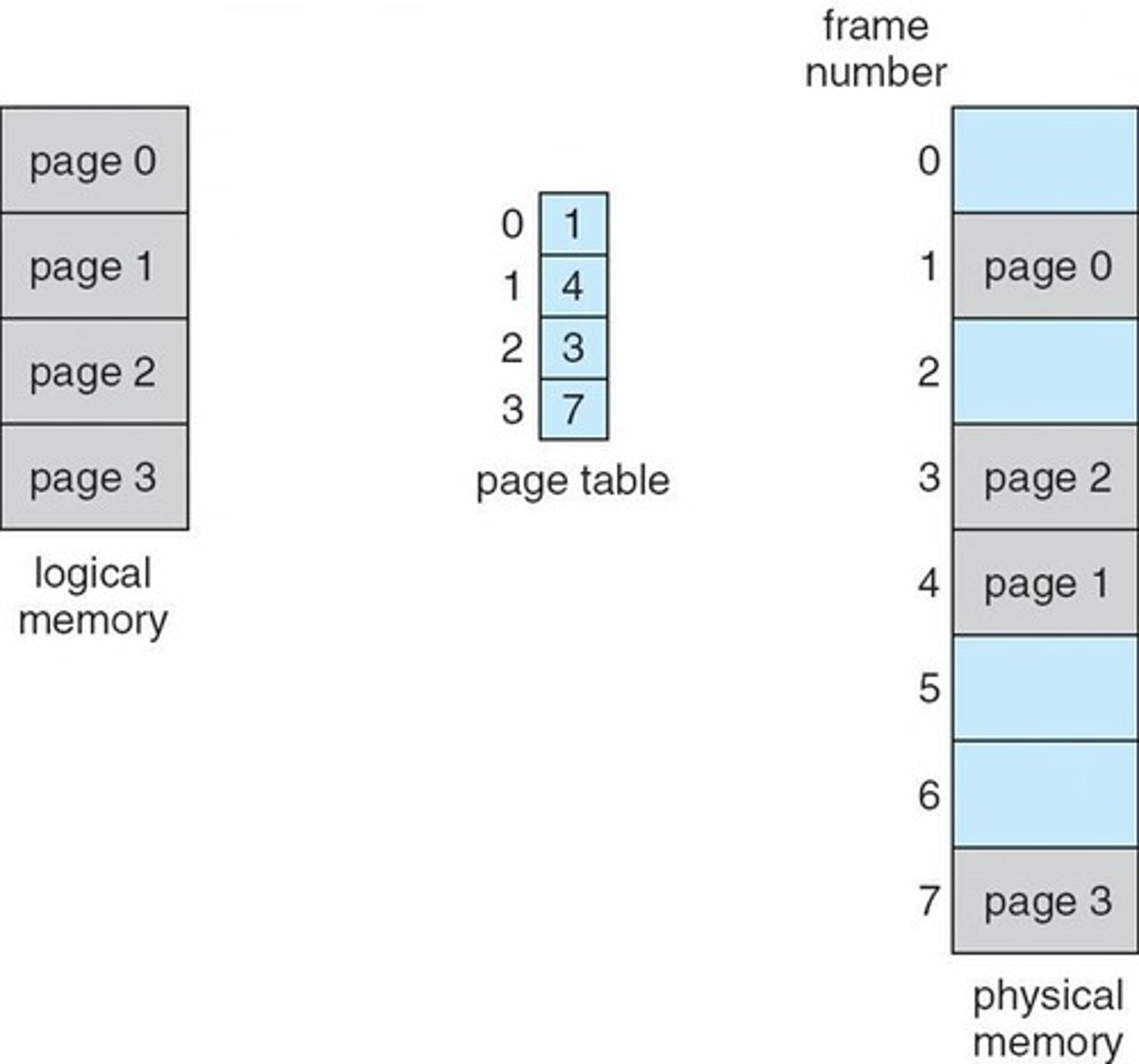

What is the purpose of the page table in memory management?

To manage the mapping of virtual addresses to physical addresses.

What are the two types of memory allocation discussed?

Contiguous Memory Allocation and Paging.

What architectures are used as examples in memory management?

Intel 32 and 64-bit Architectures and ARMv8 Architecture.

What is the main objective of Chapter 9 in the Operating System Concepts book?

To provide a detailed description of various ways of organizing memory hardware and discuss memory-management techniques.

What is the central concept of memory management regarding address spaces?

The concept of a logical address space that is bound to a separate physical address space.

What is a logical address?

An address generated by the CPU, also referred to as a virtual address.

What is a physical address?

The address seen by the memory unit.

When are logical and physical addresses the same?

They are the same in compile-time and load-time address-binding schemes.

How do logical and physical addresses differ in execution-time address-binding schemes?

In execution-time binding the address the CPU generates (logical, or virtual) is not the address the memory unit sees (physical); the MMU translates between them at run time.

What is the logical address space?

The set of all logical addresses generated by a program.

What is the physical address space?

The set of all physical addresses corresponding to the logical addresses generated by a program.

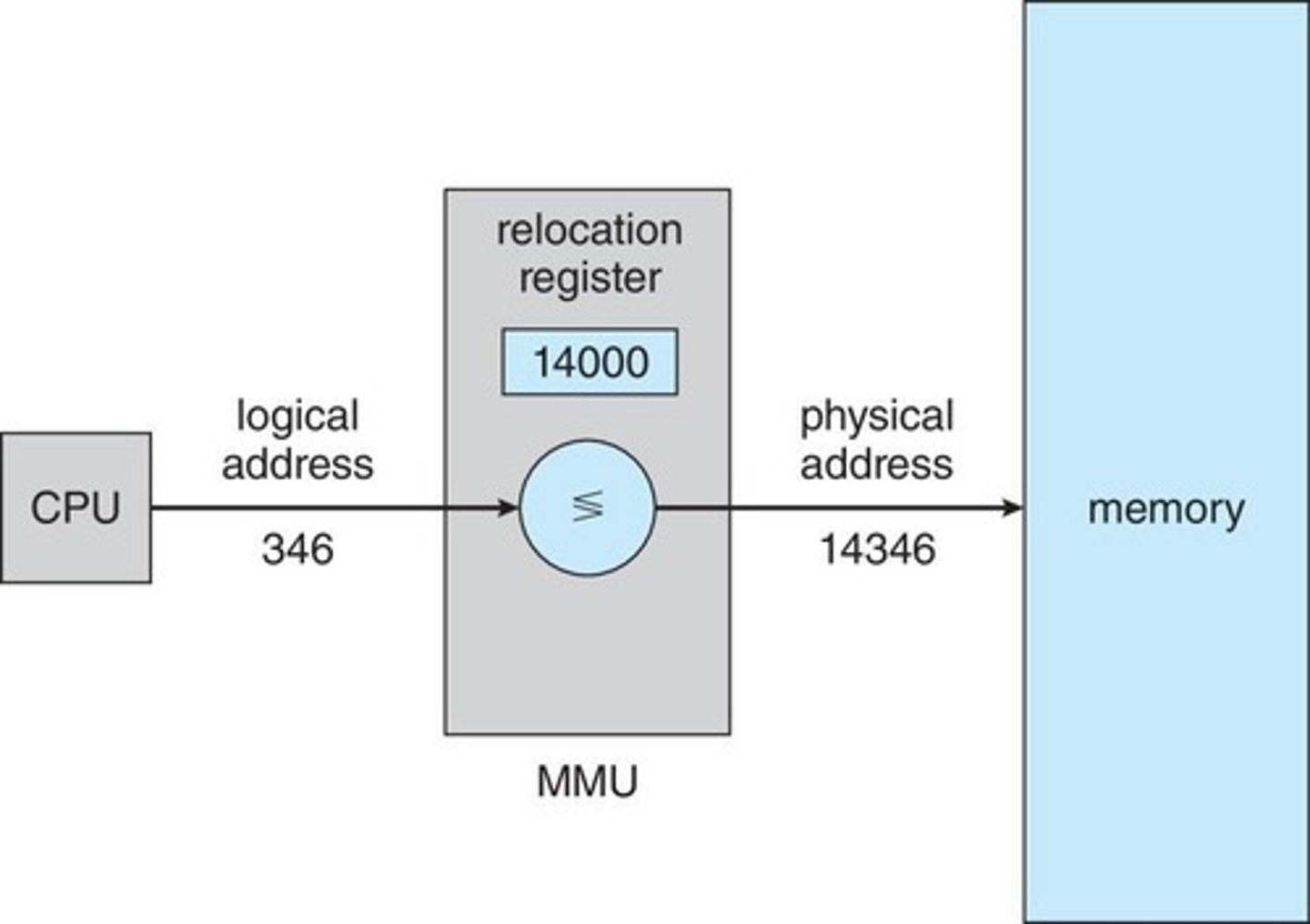

What does the Memory-Management Unit (MMU) do?

It is a hardware device that maps virtual addresses to physical addresses at run time.

What is the role of the relocation register in memory management?

The value in the relocation register is added to every address generated by a user process at the time it is sent to memory.

What type of addresses does a user program deal with?

A user program deals with logical addresses and never sees the real physical addresses.

When does execution-time binding occur?

Execution-time binding occurs when a reference is made to a location in memory.

What happens to logical addresses during execution-time binding?

Logical addresses are bound to physical addresses.

What simple scheme is used to introduce the MMU?

A generalization of the base-register scheme, in which the base register is called the relocation register.

What is dynamic loading in operating systems?

Dynamic loading allows a program to execute without needing the entire program in memory; routines are loaded only when called.

What are the benefits of dynamic loading?

Better memory-space utilization, because an unused routine is never loaded. All routines are kept on disk in a relocatable load format until they are called.

What is dynamic linking?

Dynamic linking postpones linking until execution time, using a stub to locate the appropriate memory-resident library routine.

What happens if a routine is not in a process's address space during dynamic linking?

The operating system adds the routine to the address space if it is not present.

What is the difference between static linking and dynamic linking?

Static linking combines system libraries and program code into the binary program image, while dynamic linking occurs at execution time.

What is contiguous allocation in memory management?

Each process is contained in a single contiguous section of memory. Main memory is usually divided into two partitions: one for the resident operating system and one for user processes.

How does contiguous allocation protect user processes?

Relocation registers are used to protect user processes from each other and from changing operating-system code and data.

What do the base and limit registers do in contiguous allocation?

The base (relocation) register contains the smallest physical address, and the limit register contains the range of logical addresses; each logical address must be less than the limit register.
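A minimal sketch of how the two registers could work together, assuming the logical address is first compared against the limit and only then added to the relocation value. The names `translate` and `AddressError` and the register values are hypothetical, chosen for illustration.

```python
class AddressError(Exception):
    """Stands in for the trap that real hardware would raise."""


def translate(logical: int, relocation: int, limit: int) -> int:
    """Map a logical address to a physical one using relocation and limit registers."""
    if not 0 <= logical < limit:
        raise AddressError(f"logical address {logical} is outside the limit {limit}")
    return logical + relocation


# Hypothetical values: relocation register 14000, limit register 1000.
print(translate(346, 14000, 1000))
```

Because the user program only ever produces the logical value, the operating system can move the process by changing the relocation register alone.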

What is the purpose of the MMU in memory management?

The Memory Management Unit (MMU) maps logical addresses dynamically.

What is variable partition allocation?

Partitions are sized to each process's needs for efficiency, which leaves holes of various sizes scattered throughout memory.

What is a 'hole' in the context of variable partition allocation?

A hole is a block of available memory that can accommodate a process when it arrives.

What happens when a process exits in variable partition allocation?

The partition is freed, and adjacent free partitions are combined.
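The merging of adjacent free partitions can be sketched as follows. This is an illustration only, assuming the free list is kept as `(start, size)` pairs; the function name `release` and the sample addresses are hypothetical.

```python
from typing import List, Tuple

Hole = Tuple[int, int]  # (start address, size)


def release(holes: List[Hole], start: int, size: int) -> List[Hole]:
    """Return a new free list with the freed block added and neighbours merged."""
    merged: List[Hole] = []
    for s, z in sorted(holes + [(start, size)]):
        if merged and merged[-1][0] + merged[-1][1] == s:
            # The previous hole ends exactly where this one begins: combine them.
            prev_start, prev_size = merged[-1]
            merged[-1] = (prev_start, prev_size + z)
        else:
            merged.append((s, z))
    return merged


# Freeing the block at 100..299 joins the holes on both sides into one.
print(release([(0, 100), (300, 50)], start=100, size=200))
```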

What information does the operating system maintain regarding memory partitions?

The operating system maintains information about allocated partitions and free partitions (holes).

What is the dynamic storage-allocation problem?

The problem of how to satisfy a request of size n from a list of free holes.

What is the significance of dynamic loading for large code bases?

It is useful when large amounts of code are needed to handle infrequently occurring cases.

What is the role of the operating system in dynamic loading?

The OS can provide libraries to implement dynamic loading.

What is a stub in the context of dynamic linking?

A small piece of code used to locate the appropriate memory-resident library routine.

Why might versioning be needed in dynamic linking?

Versioning may be needed to manage updates and compatibility of system libraries.

How does contiguous allocation affect the degree of multiprogramming?

The degree of multiprogramming is limited by the number of partitions available.

What is the main memory structure in contiguous allocation?

Main memory is usually divided into a low memory area for the operating system and a high memory area for user processes.

What is the first-fit memory allocation strategy?

Allocate the first hole that is big enough.

What is the best-fit memory allocation strategy?

Allocate the smallest hole that is big enough; must search the entire list unless ordered by size.

What is the worst-fit memory allocation strategy?

Allocate the largest hole; must also search the entire list unless it is ordered by size. This produces the largest leftover hole.

Which memory allocation strategies are better in terms of speed and storage utilization?

First-fit and best-fit are better than worst-fit.
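The three strategies can be compared side by side in a short sketch. It assumes the free list is a plain sequence of hole sizes and returns the index of the chosen hole, or `None` when no hole is large enough; the function names and hole sizes are hypothetical.

```python
from typing import Optional, Sequence


def first_fit(holes: Sequence[int], n: int) -> Optional[int]:
    """Index of the first hole that is big enough."""
    for i, size in enumerate(holes):
        if size >= n:
            return i
    return None


def best_fit(holes: Sequence[int], n: int) -> Optional[int]:
    """Index of the smallest hole that is big enough."""
    candidates = [(size, i) for i, size in enumerate(holes) if size >= n]
    return min(candidates)[1] if candidates else None


def worst_fit(holes: Sequence[int], n: int) -> Optional[int]:
    """Index of the largest hole, provided it is big enough."""
    candidates = [(size, i) for i, size in enumerate(holes) if size >= n]
    return max(candidates, key=lambda c: c[0])[1] if candidates else None


holes = [100, 500, 200, 300, 600]  # hypothetical hole sizes
for strategy in (first_fit, best_fit, worst_fit):
    print(strategy.__name__, strategy(holes, 212))
```

Only `first_fit` can stop early; the other two scan every hole here because the list is not ordered by size.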

What is external fragmentation?

Total memory space exists to satisfy a request, but it is not contiguous.

What is internal fragmentation?

Allocated memory may be slightly larger than requested memory; this size difference is memory internal to a partition but not being used.

What does the first fit analysis reveal about fragmentation?

Given N allocated blocks, another 0.5 N blocks are lost to fragmentation, so one-third of memory may be unusable (the 50-percent rule).

How can external fragmentation be reduced?

By compaction, which involves shuffling memory contents to place all free memory together in one large block.
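A rough sketch of compaction, assuming allocated blocks are described as `(name, start, size)` and are simply slid toward low memory in address order. The function name `compact` and the sample layout are hypothetical, and a real system would also have to update each process's relocation information.

```python
from typing import List, Tuple

Block = Tuple[str, int, int]  # (process name, start address, size)


def compact(blocks: List[Block], memory_size: int) -> Tuple[List[Block], Tuple[int, int]]:
    """Pack blocks toward address 0 and return them with the single remaining hole."""
    next_free = 0
    moved: List[Block] = []
    for name, _start, size in sorted(blocks, key=lambda b: b[1]):
        moved.append((name, next_free, size))
        next_free += size
    hole = (next_free, memory_size - next_free)  # (start, size) of all free memory
    return moved, hole


layout = [("P1", 0, 100), ("P2", 300, 200), ("P3", 700, 100)]
print(compact(layout, memory_size=1000))
```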

What is required for compaction to be possible?

Relocation must be dynamic and done at execution time.

What is the I/O problem related to compaction, and how can it be handled?

A job that is involved in I/O cannot safely be moved. Either latch the job in memory while its I/O is pending, or do I/O only into OS buffers.

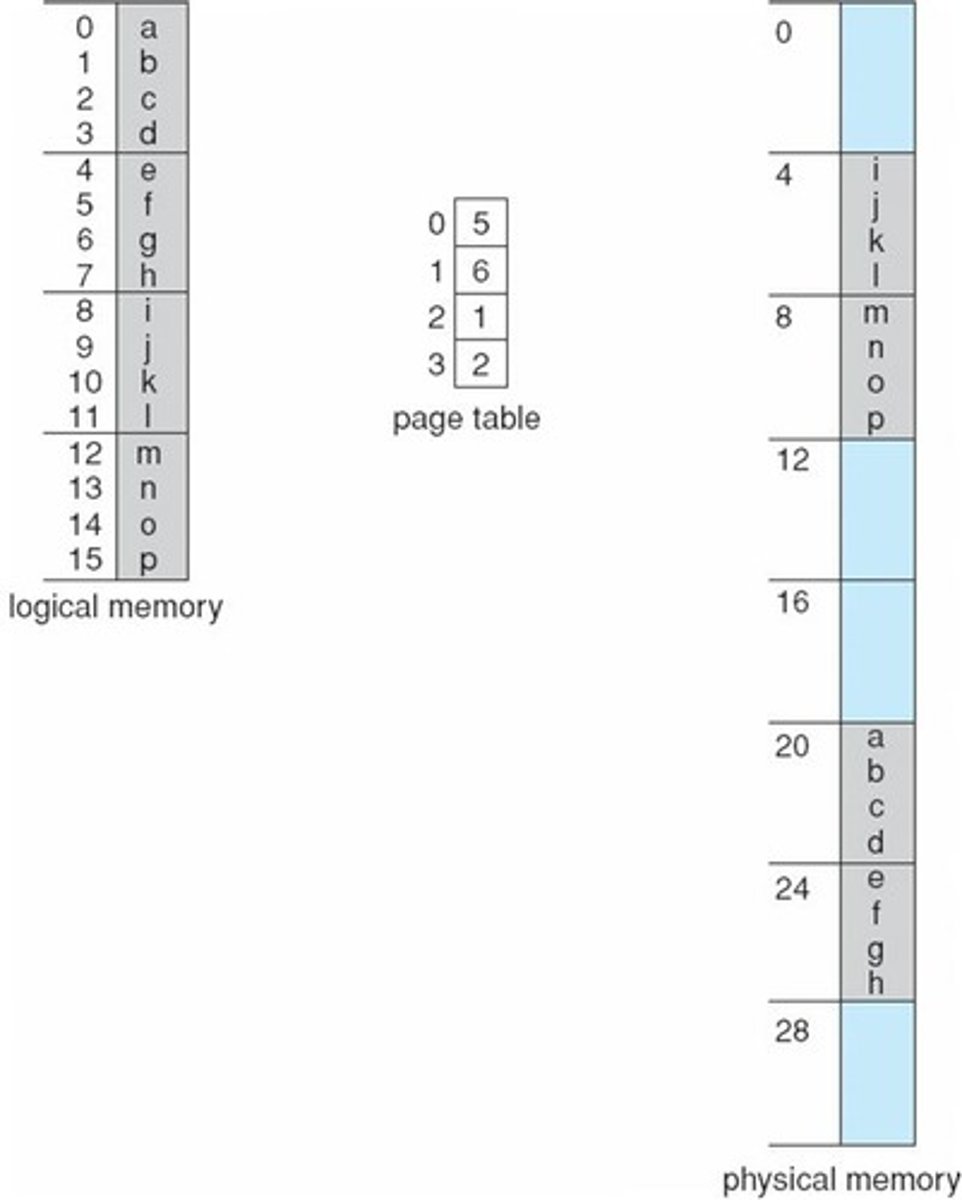

What is paging in memory management?

A method where the physical address space of a process can be noncontiguous, avoiding external fragmentation and the problem of varying sized memory chunks.

How is physical memory divided in paging?

Into fixed-sized blocks called frames, with sizes that are powers of 2, between 512 bytes and 16 Mbytes.

How is logical memory divided in paging?

Into blocks of the same size called pages.

What is the purpose of a page table in paging?

To translate logical addresses to physical addresses.

What is the address translation scheme in paging?

The address generated by the CPU is divided into a page number (used as an index into a page table) and a page offset (combined with the base address to define the physical memory address).
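The translation scheme can be sketched with a small page table. The 4-byte page size and the table contents below are hypothetical values picked to keep the arithmetic easy to follow.

```python
from typing import Sequence

PAGE_SIZE = 4               # bytes per page and per frame (hypothetical)
PAGE_TABLE = [5, 6, 1, 2]   # PAGE_TABLE[page number] = frame number (hypothetical)


def to_physical(logical: int, page_table: Sequence[int] = PAGE_TABLE,
                page_size: int = PAGE_SIZE) -> int:
    """Translate a logical address using a single-level page table."""
    page, offset = divmod(logical, page_size)  # page number and page offset
    frame = page_table[page]                   # index into the page table
    return frame * page_size + offset          # frame base address plus offset


for address in (0, 3, 4, 13):
    print(address, "->", to_physical(address))
```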

What are the components of a logical address in paging?

With a logical address space of size 2^m and a page size of 2^n, the high-order m - n bits are the page number (p) and the low-order n bits are the page offset (d).
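Because the page size is a power of 2, the split into p and d needs only a shift and a mask. The sketch below assumes the usual layout, with the offset in the low-order n bits; the function name and the values m = 4, n = 2 are hypothetical.

```python
from typing import Tuple


def split_address(logical: int, m: int, n: int) -> Tuple[int, int]:
    """Split an m-bit logical address into (page number p, page offset d)."""
    if not 0 <= logical < (1 << m):
        raise ValueError("address does not fit in the logical address space")
    p = logical >> n               # high-order m - n bits
    d = logical & ((1 << n) - 1)   # low-order n bits
    return p, d


# 16-byte logical address space (m = 4) with 4-byte pages (n = 2).
print(split_address(13, m=4, n=2))
```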

What is the internal fragmentation in paging?

Unused space inside the last frame allocated to a process, which arises because memory is allocated in whole frames and the process size is rarely an exact multiple of the frame size.

How is internal fragmentation calculated in a paging example?

For a page size of 2,048 bytes and a process size of 72,766 bytes, the process needs 35 pages plus 1,086 bytes, so it is given 36 frames and the internal fragmentation is 2,048 - 1,086 = 962 bytes.
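The same arithmetic, written as a small helper so other sizes can be tried. The function name is hypothetical; it assumes a process is always given a whole number of frames.

```python
from typing import Tuple


def internal_fragmentation(process_size: int, page_size: int) -> Tuple[int, int]:
    """Return (frames needed, unused bytes in the last frame)."""
    frames = -(-process_size // page_size)         # ceiling division
    wasted = frames * page_size - process_size
    return frames, wasted


print(internal_fragmentation(72_766, 2_048))  # the example above
print(internal_fragmentation(2_049, 2_048))   # one byte spills into a second frame
```

The second call shows the worst case: a single extra byte costs almost a whole frame.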

What is the worst-case fragmentation scenario in paging?

One frame minus 1 byte.

What is the average fragmentation in paging?

1/2 of the frame size.

Are small frame sizes desirable in paging?

Not necessarily. Small frames reduce internal fragmentation, but they mean more pages per process, and each page-table entry takes memory to track.

What is the purpose of a page table entry in memory management?

To record which frame holds one page of a process; each entry itself consumes memory.

What are the two page sizes supported by Solaris?

8 KB and 4 MB.

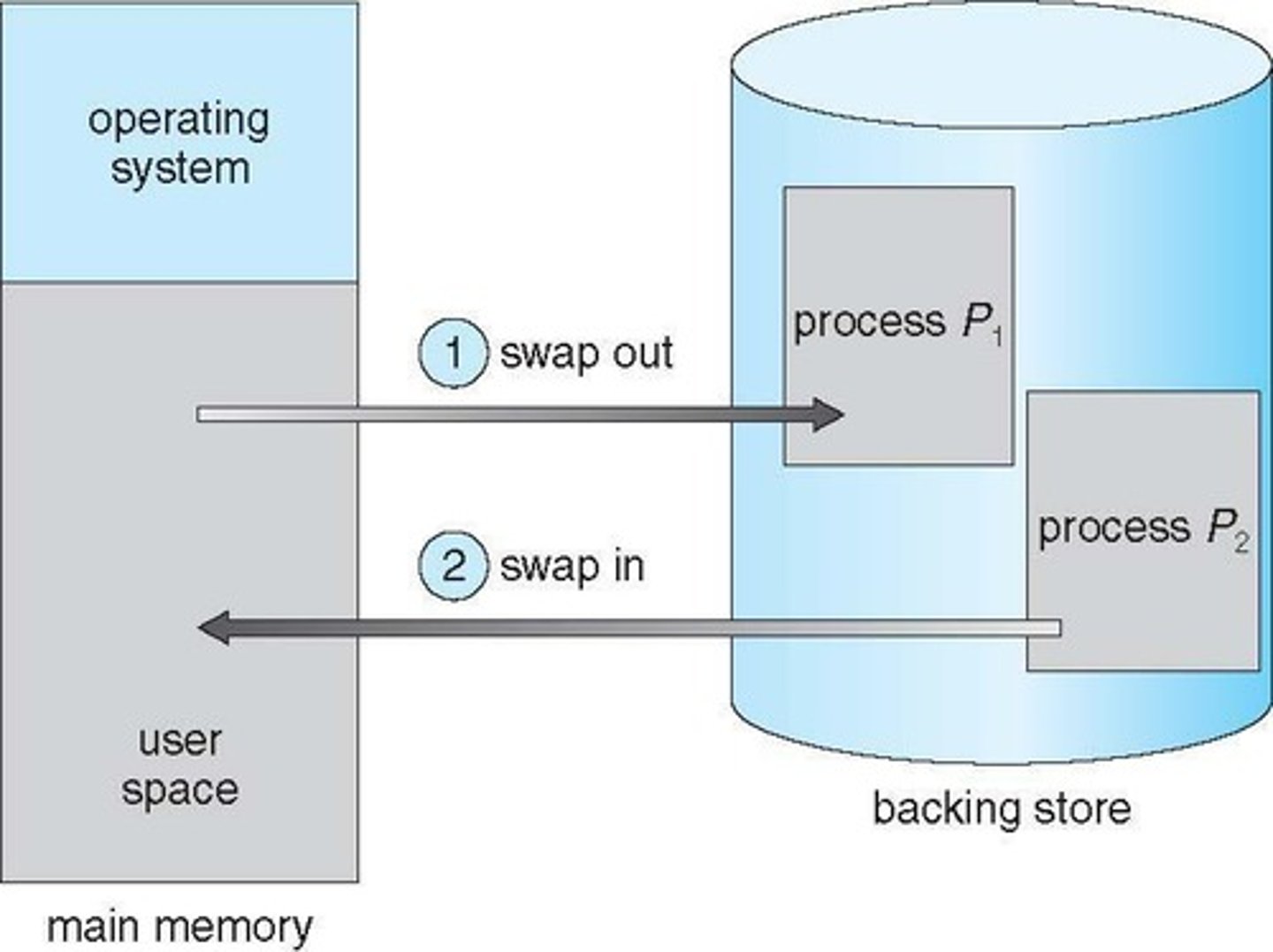

What is the process of swapping in operating systems?

Temporarily moving a process out of memory to a backing store and bringing it back for execution.

What is a backing store in the context of swapping?

A fast disk large enough to accommodate copies of all memory images for users, providing direct access to these images.

What is the 'roll out, roll in' swapping variant?

A method used in priority-based scheduling where lower-priority processes are swapped out to allow higher-priority processes to execute.

What is the relationship between swap time and memory size?

Total transfer time is directly proportional to the amount of memory swapped.

What does the system maintain to manage processes in memory?

A ready queue of processes that are ready to run and have their memory images on disk.

Does a swapped-out process need to return to the same physical address?

It depends on the address binding method used.

What happens to pending I/O operations during swapping?

A process with pending I/O cannot be swapped out, because the I/O would then occur to the wrong process.

What is double buffering in the context of swapping?

Transferring I/O to kernel space before sending it to the I/O device, which adds overhead.

When is standard swapping typically not used in modern operating systems?

It is not used unless free memory is extremely low.

What is the context switch time when swapping is involved?

It can be very high if the next process is not in memory, requiring swapping of processes.

How long can a context switch take when swapping a 100MB process at a transfer rate of 50MB/sec?

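Swapping out takes 100 MB / 50 MB/sec = 2,000 ms, and swapping in a process of the same size takes another 2,000 ms, so the total context switch time is about 4,000 ms (4 seconds).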
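The figure follows from simple arithmetic, sketched here under the assumption that transfer time is the only cost (no seek or latency) and that the process swapped in is the same size as the one swapped out. The function name is hypothetical.

```python
def swap_time_ms(size_mb: float, rate_mb_per_s: float) -> float:
    """Time to transfer one memory image, in milliseconds."""
    return size_mb / rate_mb_per_s * 1000


swap_out = swap_time_ms(100, 50)   # moving the current process to the backing store
swap_in = swap_time_ms(100, 50)    # bringing the next process back in
print(swap_out, swap_in, swap_out + swap_in)
```

Halving the amount of memory actually in use halves both terms, which is why it helps for a process to tell the OS how much memory it really needs.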

What system calls can reduce the size of memory swapped?

request_memory() and release_memory() to inform the OS of memory usage.

Why is swapping not typically supported on mobile systems?

Due to limited flash memory space, limited write cycles, and poor throughput between flash memory and CPU.

How does iOS manage low memory conditions?

It asks apps to voluntarily relinquish allocated memory, allowing read-only data to be reloaded from flash if needed.

What does Android do when free memory is low?

It terminates apps but first writes the application state to flash for fast restart.

What memory management method is supported by both iOS and Android?

Paging.

What is a major drawback of swapping in modern operating systems?

It can significantly increase context switch times and overall system latency.

What is the impact of swapping on system performance?

Swapping can degrade performance due to high context switch times and the overhead of managing memory.

What is the significance of a threshold in memory allocation?

Swapping is started if memory allocation exceeds a certain threshold and disabled when below it.

What is the effect of modified versions of swapping found in systems like UNIX and Windows?

They optimize memory management by only swapping when free memory is critically low.