L12: Syntax

What are the rules that govern sentence form?

Moving from fixed forms (e.g., ‘apple’) to forms built by combining smaller units:

play → play, played, playing

I, you, admire → “I admire you”

Morphology and syntax

• In all languages, the formation of words and sentences follows highly regular patterns

• How are the regularities and exceptions represented?

The study and analysis of language production in children reveals common and persistent patterns

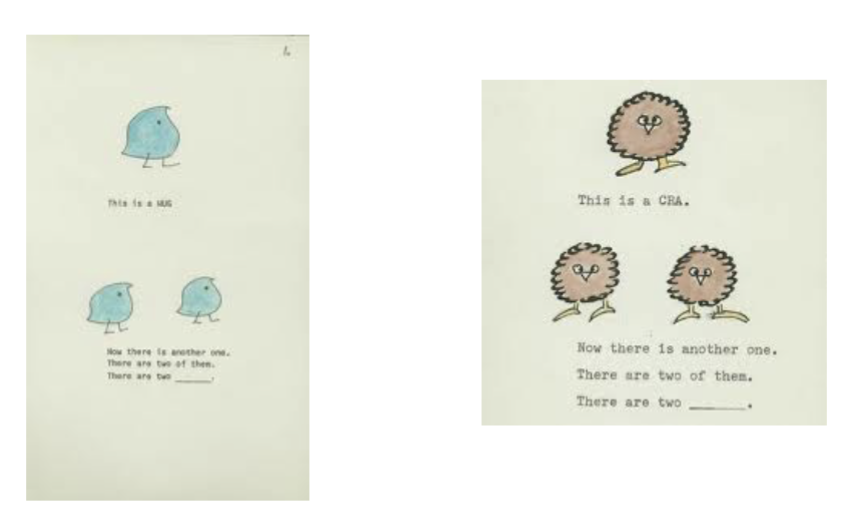

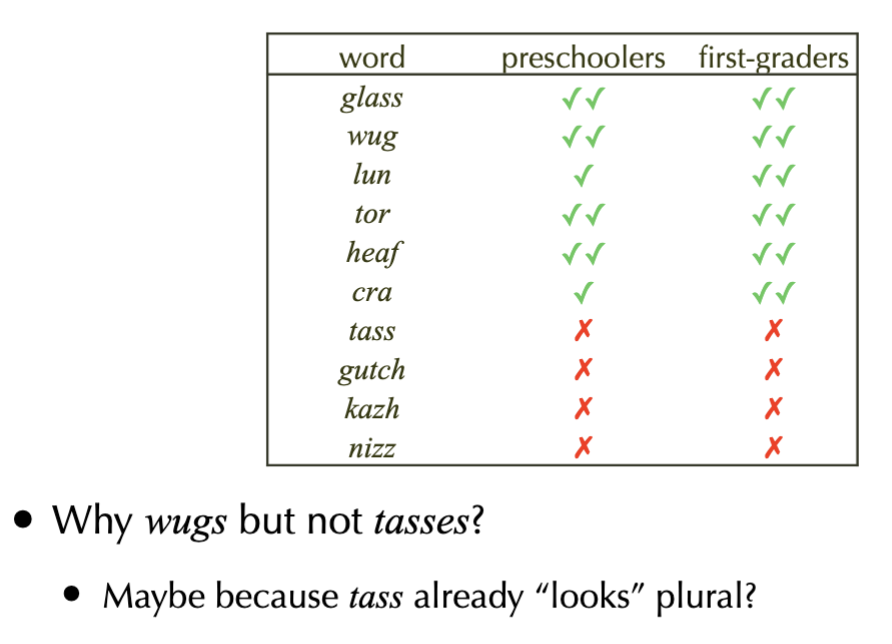

What is the Wug Test (Berko, 1958)?

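An experiment on children’s morphology: children are shown a novel creature called a “wug” and asked to complete “Now there are two ___”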

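How did children perform on the Wug Test?

Young children correctly inflected words they had never heard (e.g., wug → wugs), showing that they have abstract morphological knowledge rather than only memorized forms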

Describe the two aspects of the case study: Learning English Past Tense

The problem of English past tense formation:

Regular formation: stem + ‘ed’

Irregulars do show some patterns

No-change: hit → hit

Vowel-change: ring → rang, sing → sang

Over-regularizations are common: e.g., goed instead of went

These errors often occur after the child has already produced the correct irregular form: went

Describe the learning curve of children learning morphology and its 4 phases

Observed U-shaped learning curve:

Imitation: an early phase of conservative language use

Generalization: general regularities are applied to new forms

Overgeneralization: occasional misapplication of general patterns

Recovery: over time, overgeneralization errors cease to happen

Lack of Negative Evidence

Children do not receive reliable corrective feedback from

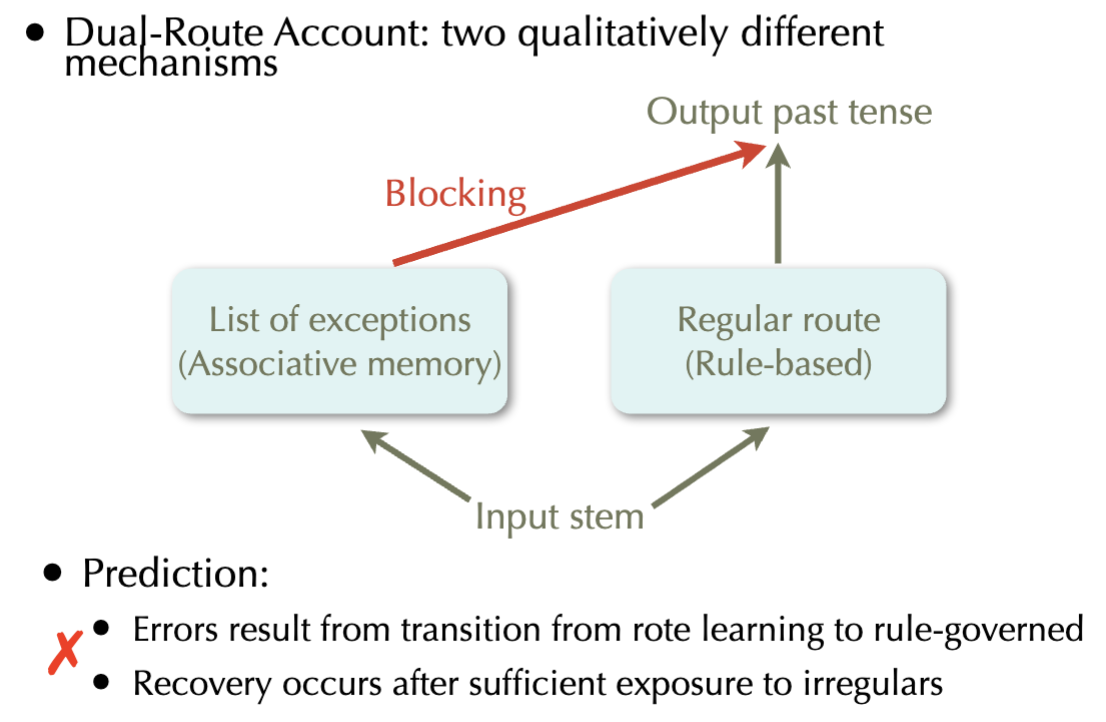

A Symbolic Account of English Past Tense - Model

Describe 2 Predictions of the Dual-Route Account model

Predictions:

Errors result from the transition from rote learning to rule-governed production

Recovery occurs after sufficient exposure to irregulars
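To make the dual-route predictions concrete, here is a minimal Python sketch assuming a blocking mechanism: a stored irregular form blocks the regular rule, and the rule applies whenever retrieval of an irregular fails. The memory contents, the `weak_memory` stage, and the function names are hypothetical illustrations, not part of the original model description.

```python
# Hypothetical rote memory of irregular past-tense forms (illustrative entries).
IRREGULARS = {"go": "went", "hit": "hit", "ring": "rang", "sing": "sang"}


def add_ed(stem):
    """Regular route: stem + 'ed' (only the silent-e spelling rule is handled)."""
    return stem + "d" if stem.endswith("e") else stem + "ed"


def past_tense(verb, memory):
    """Dual-route sketch: a stored irregular blocks the rule; otherwise the rule applies."""
    if verb in memory:      # route 1: rote memory lookup
        return memory[verb]
    return add_ed(verb)     # route 2: general rule


# Hypothetical stage in which the memory trace for "go" is not yet reliable.
weak_memory = {v: p for v, p in IRREGULARS.items() if v != "go"}

print(past_tense("go", IRREGULARS))    # went
print(past_tense("go", weak_memory))   # goed (over-regularization)
print(past_tense("rick", IRREGULARS))  # ricked (novel verb, rule generalizes)
```

In this sketch, over-regularization falls out when an irregular is not yet stored reliably, and recovery corresponds to the stored form becoming available as exposure to irregulars accumulates.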

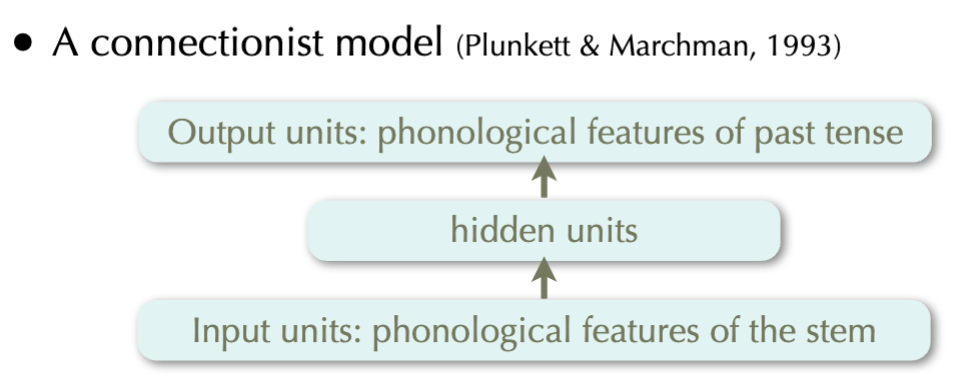

A Connectionist Account of Learning English Past Tense - Model

Describe 2 properties of the connectionist model

Properties:

Early in training, the model shows a tendency to overgeneralize; by the end of training, it exhibits near-perfect performance

U-shaped performance is achieved using a single learning mechanism, but depends on a sudden change in the size of the training set

Describe the innateness of Language - Syntax

Central claim: humans have innate knowledge of language

Assumption: all languages have a common structural basis

Argument from the Poverty of the Stimulus (Chomsky 1965)

Linguistic experience of children is not sufficiently rich for learning the grammar of the language, hence they must have some innate specification of grammar

Assumption: knowing a language involves knowing a grammar

Universal Grammar (UG)

A set of rules which organize language in the human brain

What are the 3 components of the principles and parameters (P&P) view of universal grammar?

A finite set of fundamental principles that are common to all languages

E.g., “a sentence must have a subject”

A finite set of parameters that determine syntactic variability amongst languages

E.g., a binary parameter that determines whether the subject of a sentence must be overtly pronounced

Learning involves identifying the correct grammar

I.e., setting UG parameters to proper values for the current language

Describe the general approach to the computational implementation of the principles and parameters of universal grammar

Formal parameter setting models for a small set of grammars

Clark 1992, Gibson & Wexler 1994, Niyogi & Berwick 1996, Briscoe 2000

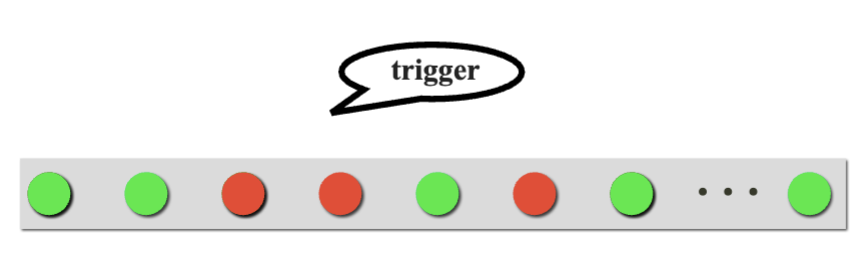

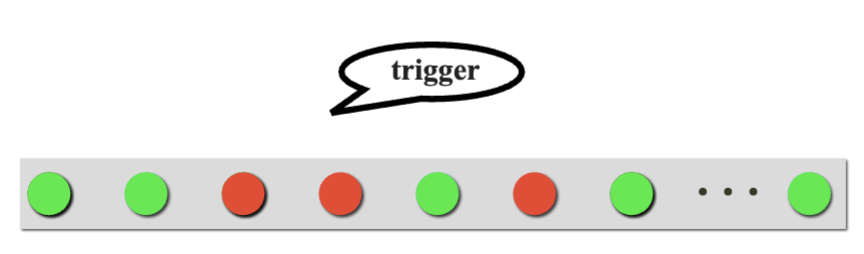

General approach:

Analyze the current input string and set the parameters accordingly

Set a parameter when receiving evidence from an example which exhibits that parameter (trigger)

Representative models:

Triggering Learning Algorithm (TLA)

Describe the 3 representative models for the computational implementation of the principles and parameters of universal grammar

TLA: randomly modifies a parameter value if it cannot parse the input

STL (Structural Triggers Learner): learns sub-trees (treelets) as parameter values

VL (Variational Learner): assigns a weight to each parameter, and rewards or penalizes these weights depending on parsing success
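The TLA line above can be sketched in Python on a hypothetical two-parameter toy grammar (OV vs. VO order, and whether the subject may be omitted). The sketch assumes the usual TLA constraints: the learner changes its grammar only when parsing fails, flips a single randomly chosen parameter, and keeps the flip only if the new grammar parses the current input. Membership in a small generated language stands in for a real parser; all names are illustrative.

```python
import random


def language(grammar):
    """Hypothetical toy grammar: p0 = OV (1) vs VO (0), p1 = null subject allowed (1)."""
    ov, null_subject = grammar
    base = ("S", "O", "V") if ov else ("S", "V", "O")
    sentences = {base}
    if null_subject:
        sentences.add(base[1:])  # the subject may be left unpronounced
    return sentences


def parses(grammar, sentence):
    return sentence in language(grammar)


def tla_step(grammar, sentence, rng):
    if parses(grammar, sentence):      # error-driven: no change when parsing succeeds
        return grammar
    i = rng.randrange(len(grammar))    # flip exactly one randomly chosen parameter
    candidate = grammar[:i] + (1 - grammar[i],) + grammar[i + 1:]
    # keep the new setting only if it parses the current input
    return candidate if parses(candidate, sentence) else grammar


def run_tla(target, start, n_inputs, seed=0):
    rng = random.Random(seed)
    inputs = sorted(language(target))  # input sentences come from the target language
    grammar = start
    for _ in range(n_inputs):
        grammar = tla_step(grammar, rng.choice(inputs), rng)
    return grammar


print(run_tla(target=(1, 1), start=(0, 0), n_inputs=200))  # typically reaches the target
```

In this tiny space every non-target grammar has a single-flip path toward the target; in larger spaces such paths may not exist, which is one way a TLA learner can get stuck.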

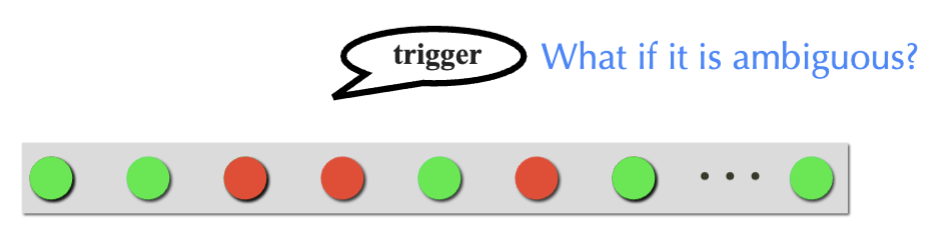

Describe how the 3 representative models for the computational implementation of the principles and parameters of universal grammar handle an ambiguous trigger

TLA: chooses one of the possible interpretations of the ambiguous trigger

STL: ignores ambiguous triggers and waits for unambiguous ones

VL: each interpretation is parsed and the parameter weights
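VL's weight-based update can be sketched with a linear reward-penalty scheme. In this minimal Python sketch (assumptions: a hypothetical two-parameter toy grammar, a learning rate `gamma`, uniformly sampled input sentences), the learner samples a whole grammar from its current weights for each input and rewards or penalizes every parameter in that grammar. It therefore never has to commit to one interpretation of a trigger; credit for the correct settings accumulates over many inputs.

```python
import random


def language(grammar):
    """Hypothetical toy grammar: p0 = OV (1) vs VO (0), p1 = subject-final (1) vs subject-initial (0)."""
    ov, subject_final = grammar
    vp = ("O", "V") if ov else ("V", "O")
    return {vp + ("S",)} if subject_final else {("S",) + vp}


def nudge(weight, value, gamma):
    """Linear reward-penalty step: move P(parameter = 1) toward `value` by a fraction gamma."""
    if value == 1:
        return weight + gamma * (1 - weight)
    return (1 - gamma) * weight


def variational_learner(target, n_inputs, gamma=0.05, seed=0):
    rng = random.Random(seed)
    inputs = sorted(language(target))
    weights = [0.5] * len(target)  # P(value = 1) for each parameter; start undecided
    for _ in range(n_inputs):
        sentence = rng.choice(inputs)
        # sample one grammar from the current weights
        grammar = tuple(int(rng.random() < w) for w in weights)
        success = sentence in language(grammar)
        for i, value in enumerate(grammar):
            # success: reward the sampled value; failure: penalize it (favor the other value)
            weights[i] = nudge(weights[i], value if success else 1 - value, gamma)
    return weights


weights = variational_learner(target=(1, 1), n_inputs=5000)
print([round(w, 3) for w in weights])  # both weights are expected to end close to 1.0
```

Because blame for a failed parse is shared by all parameters of the sampled grammar, a correct value is sometimes penalized; the sketch relies on repeated exposure to average that noise out.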

What are the 4 computational challenges of the principles and parameters framework of universal grammar?

Practical limitations:

Formalizing a UG that covers existing languages is a challenge

Learning relies on well-formed sentences as input

The P&P framework predicts a huge space of possible grammars

20 binary parameters lead to more than 1 million grammars (2^20 = 1,048,576)

Search spaces for a grammar contain local maxima

I.e., the learner may converge to an incorrect grammar

Most of the P&P models are psychologically implausible

They predict that a child may repeatedly revisit the same
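The “more than 1 million grammars” figure is simply 2^20. A short Python sketch, using a hypothetical `grammar_space` helper, enumerates parameter settings to make the growth concrete; each tuple stands for one candidate grammar a learner would have to distinguish.

```python
from itertools import product


def grammar_space(n_params):
    """Lazily enumerate every setting of n binary parameters (one tuple per grammar)."""
    return product((0, 1), repeat=n_params)


small = list(grammar_space(3))
print(len(small))                         # 8
print(sum(1 for _ in grammar_space(20)))  # 1048576
```

Each added binary parameter doubles the space, which is why exhaustively testing every grammar against the input is impractical and why incremental search strategies are used instead.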

Describe Usage-based Accounts of Language Acquisition

Main claims:

Children learn language regularities from input alone, without guidance from innate principles

Mechanisms of language learning are not domain-specific

Verb Island Hypothesis (Tomasello, 1992)

Children build their linguistic knowledge around individual items rather than adjusting general grammar rules they already possess

Children use cognitive processes to gradually categorize the syntactic structure of their item-based constructions

Distributional Representation of Grammar

Knowing a language is not equated with knowing a grammar

Knowledge of language is developed to perform communicative tasks of comprehension and production

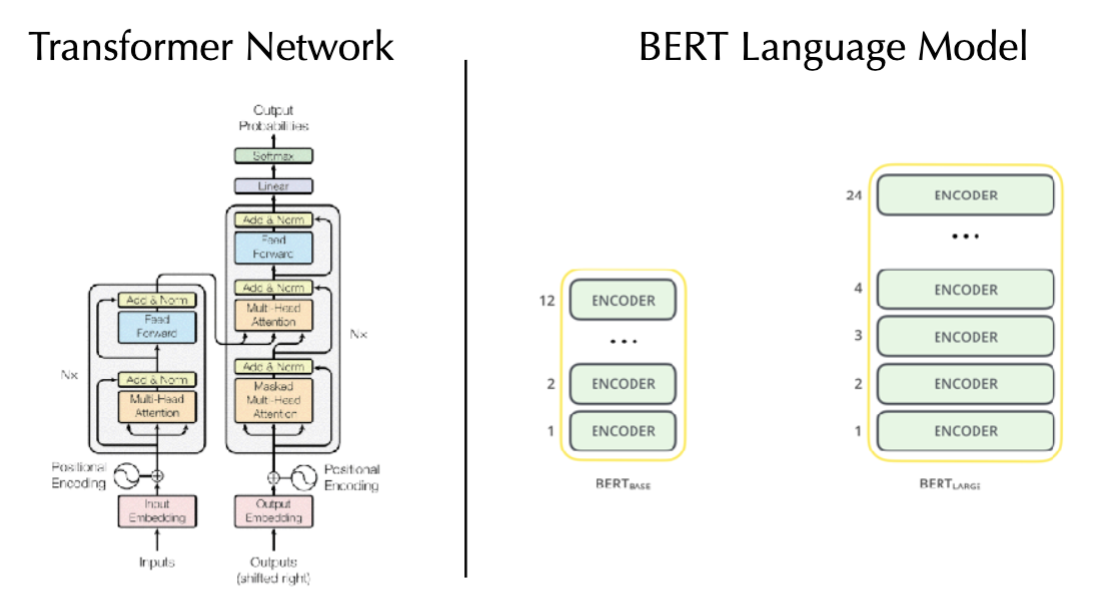

Neural networks for language representation and acquisition

Different levels of linguistic representation are emergent structures that a network develops in the course of learning

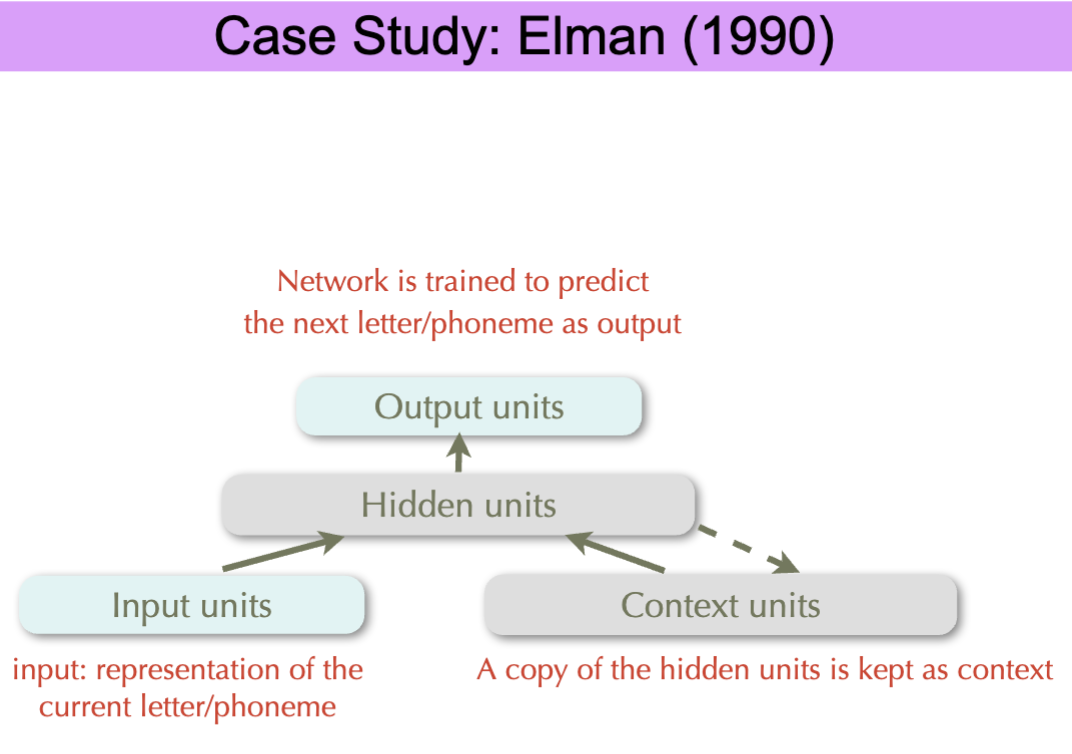

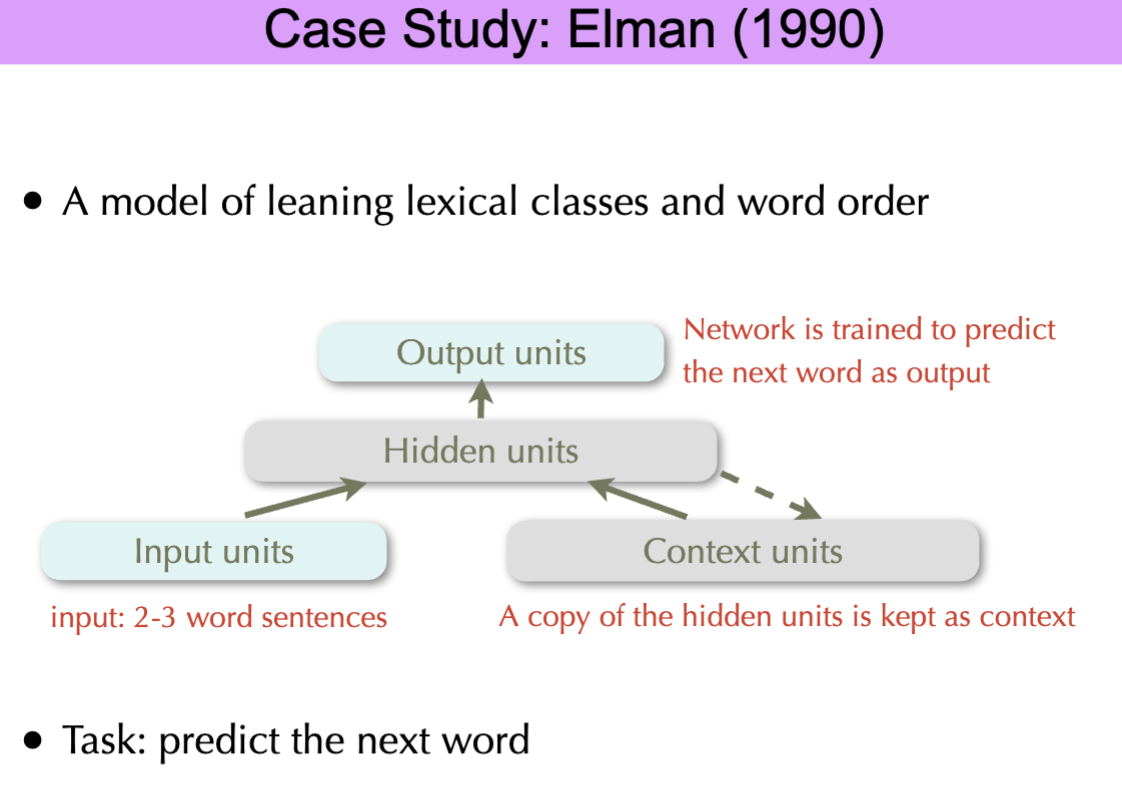

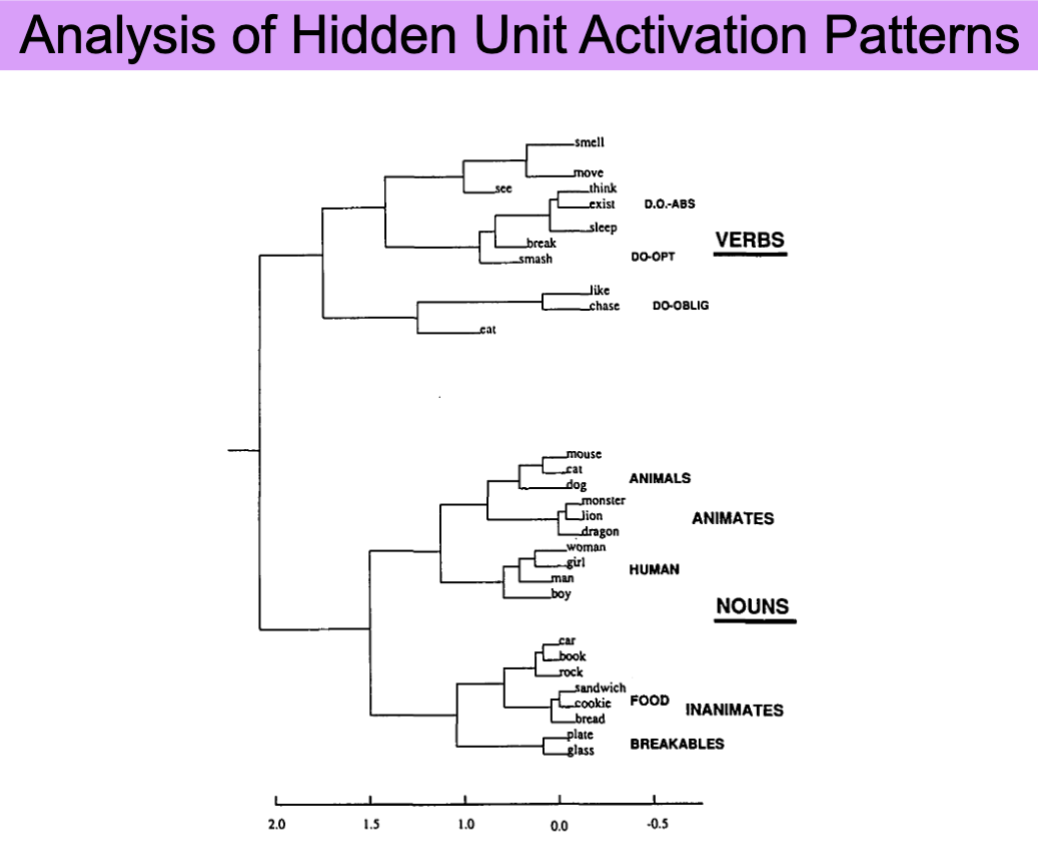

Case study: Elman (1990)

The model (a simple recurrent network) learns to categorize words into appropriate classes and to expect the correct order of words in a sentence
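The architecture behind the Elman (1990) result can be sketched as the forward pass of a simple recurrent network: at each word, the hidden layer combines the current input with a copy of its own previous state held in context units, and the output layer predicts the next word. This minimal Python sketch is untrained (random weights, no biases); the vocabulary, layer sizes, and softmax output are illustrative assumptions, and the learning step (backpropagation on next-word prediction) is omitted.

```python
import math
import random


def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def softmax(z):
    top = max(z)
    exps = [math.exp(v - top) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def random_matrix(rng, rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class SimpleRecurrentNetwork:
    """Untrained Elman-style SRN: forward pass only, no biases, arbitrary sizes."""

    def __init__(self, vocab, hidden_size=8, seed=0):
        rng = random.Random(seed)
        self.vocab = vocab
        self.w_in = random_matrix(rng, hidden_size, len(vocab))    # input -> hidden
        self.w_ctx = random_matrix(rng, hidden_size, hidden_size)  # context -> hidden
        self.w_out = random_matrix(rng, len(vocab), hidden_size)   # hidden -> output
        self.context = [0.0] * hidden_size                         # context units start at 0

    def step(self, word):
        """Read one word; return a probability for each vocabulary item being the next word."""
        x = [1.0 if w == word else 0.0 for w in self.vocab]        # one-hot input
        net = [a + b for a, b in zip(matvec(self.w_in, x), matvec(self.w_ctx, self.context))]
        hidden = [1.0 / (1.0 + math.exp(-h)) for h in net]         # sigmoid hidden units
        self.context = hidden                                      # copy hidden -> context
        return dict(zip(self.vocab, softmax(matvec(self.w_out, hidden))))


vocab = ["boy", "girl", "dog", "sees", "chases", "."]
srn = SimpleRecurrentNetwork(vocab)
for word in ["boy", "chases"]:
    prediction = srn.step(word)
print(max(prediction, key=prediction.get))  # untrained, so this guess is arbitrary
```

After training on next-word prediction, words that occur in similar contexts tend to produce similar hidden states, which is how word classes can emerge without being given to the network.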

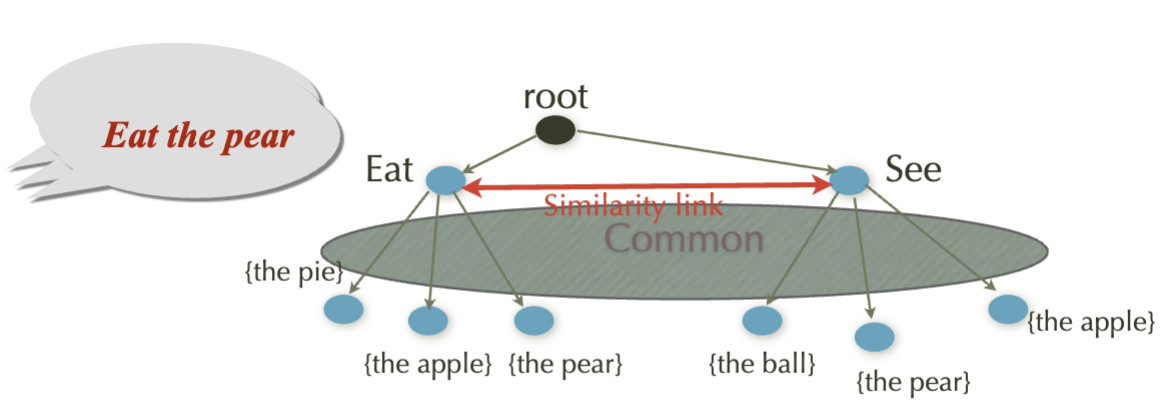

Describe the Case Study: MOSAIC

MOSAIC (Model Of Syntax Acquisition In Children)

Uses a discrimination network to learn from raw text and to produce utterances similar to those children produce

Underlying mechanisms

Learning: expand the network based on input data

Production: traverse the network and output the contents of the nodes
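The learning and production mechanisms above can be illustrated with a toy discrimination network in Python. This is a simplified sketch, not the published MOSAIC model: it assumes the network is a tree of word nodes, that each exposure to an utterance adds at most one new node (so longer sequences need repeated input), and that production outputs the word sequence along every path from the root. All class and method names are hypothetical.

```python
class Node:
    def __init__(self, word=None):
        self.word = word
        self.children = {}


class DiscriminationNet:
    """Toy discrimination network in the spirit of MOSAIC (simplified assumptions)."""

    def __init__(self):
        self.root = Node()

    def learn(self, utterance):
        # Assumption: at most one new node is added per exposure to an utterance.
        node = self.root
        for word in utterance.split():
            if word not in node.children:
                node.children[word] = Node(word)
                return
            node = node.children[word]

    def produce(self):
        # Traverse the network and output the word sequence along every root-to-node path.
        outputs = []

        def walk(node, path):
            for child in node.children.values():
                outputs.append(" ".join(path + [child.word]))
                walk(child, path + [child.word])

        walk(self.root, [])
        return outputs


net = DiscriminationNet()
for _ in range(2):
    net.learn("want more juice")
print(net.produce())  # ['want', 'want more']
```

After two exposures to “want more juice”, this toy network can only produce the fragments “want” and “want more”; the full utterance becomes available after a third exposure.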

Generalization

Similarity links allow limited generalization abilities

Lack of semantic knowledge prevents meaningful generalization

Generalized sentences are limited to high-frequency terms

Evaluation

The model was trained on a subset of CHILDES

It was used to simulate the verb-island phenomenon, optional infinitives in English, and subject omission

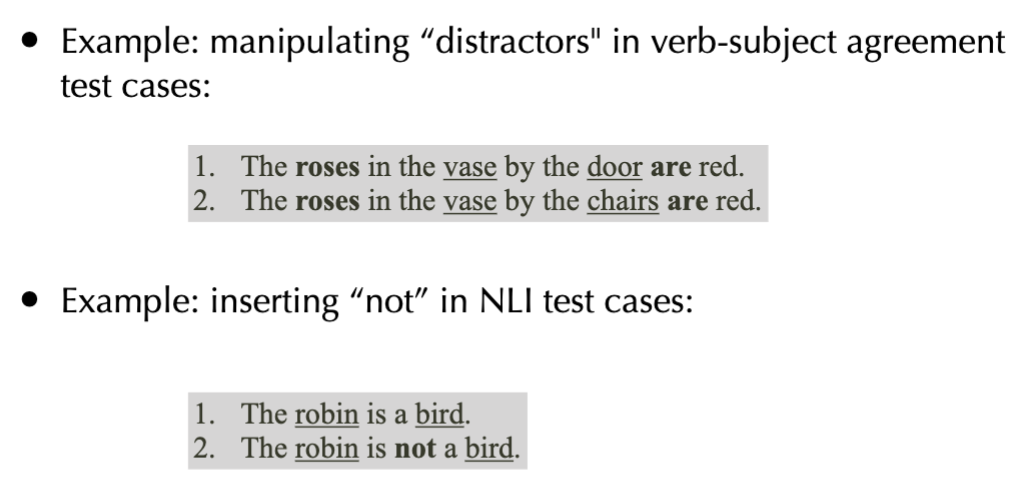

Describe 2 examples of Treating LLMs as Human Subjects

Systematically manipulate aspects of input and monitor model output

Describe the process of Treating LLMs as Human Subjects

Use a small-scale, controlled test set

... often borrowed from a published psycholinguistic study

Subject an LLM to these tests

Draw conclusions about

the nature of linguistic knowledge learned by the LLM

the processing mechanisms employed by the LLM

Compare the LLM’s performance to that of human subjects
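The testing procedure above can be sketched with a minimal-pair comparison: the model passes an item if it assigns higher probability to the grammatical member of the pair. In this Python sketch an add-one smoothed bigram model stands in for an LLM, and the corpus and sentence pairs are invented for illustration; with a real LLM, `log_prob` would be replaced by the sum of the model’s token log-probabilities.

```python
import math
from collections import Counter


def train_bigram(corpus):
    bigrams, contexts, vocab = Counter(), Counter(), set()
    for sentence in corpus:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        vocab.update(tokens[1:])
        for prev, word in zip(tokens, tokens[1:]):
            bigrams[(prev, word)] += 1
            contexts[prev] += 1
    return bigrams, contexts, vocab


def log_prob(model, sentence):
    bigrams, contexts, vocab = model
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    # add-one (Laplace) smoothed bigram log-probability of the whole sentence
    return sum(math.log((bigrams[(p, w)] + 1) / (contexts[p] + len(vocab)))
               for p, w in zip(tokens, tokens[1:]))


def minimal_pair_accuracy(model, pairs):
    """Fraction of (grammatical, ungrammatical) pairs where the grammatical one scores higher."""
    wins = sum(log_prob(model, good) > log_prob(model, bad) for good, bad in pairs)
    return wins / len(pairs)


corpus = ["the dog runs", "the dogs run", "a cat sleeps", "the cats sleep"]
pairs = [("the dog runs", "the dog run"),
         ("the cats sleep", "the cats sleeps"),
         ("the dog sleeps", "the dog sleep")]  # subject-verb combination unseen in training
model = train_bigram(corpus)
print(minimal_pair_accuracy(model, pairs))
```

In the third pair, the bigram model never saw either verb after “dog”, so both sentences receive the same score and the item counts as a failure; comparing such item-level results with human judgments is the final step of the procedure.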

Open questions

How do various aspects of language acquisition interact with each other?

Various learning procedures are most probably interleaved (e.g., word learning and syntax acquisition)

Most of the existing models of language acquisition focus on one aspect, and simplify the problem

How to evaluate the models on realistic data?

Large collections of child-directed utterances/speech are available, but there is no comparable collection of semantic input

A widely adopted evaluation approach is lacking in the community