L9 Detectors


40 Terms

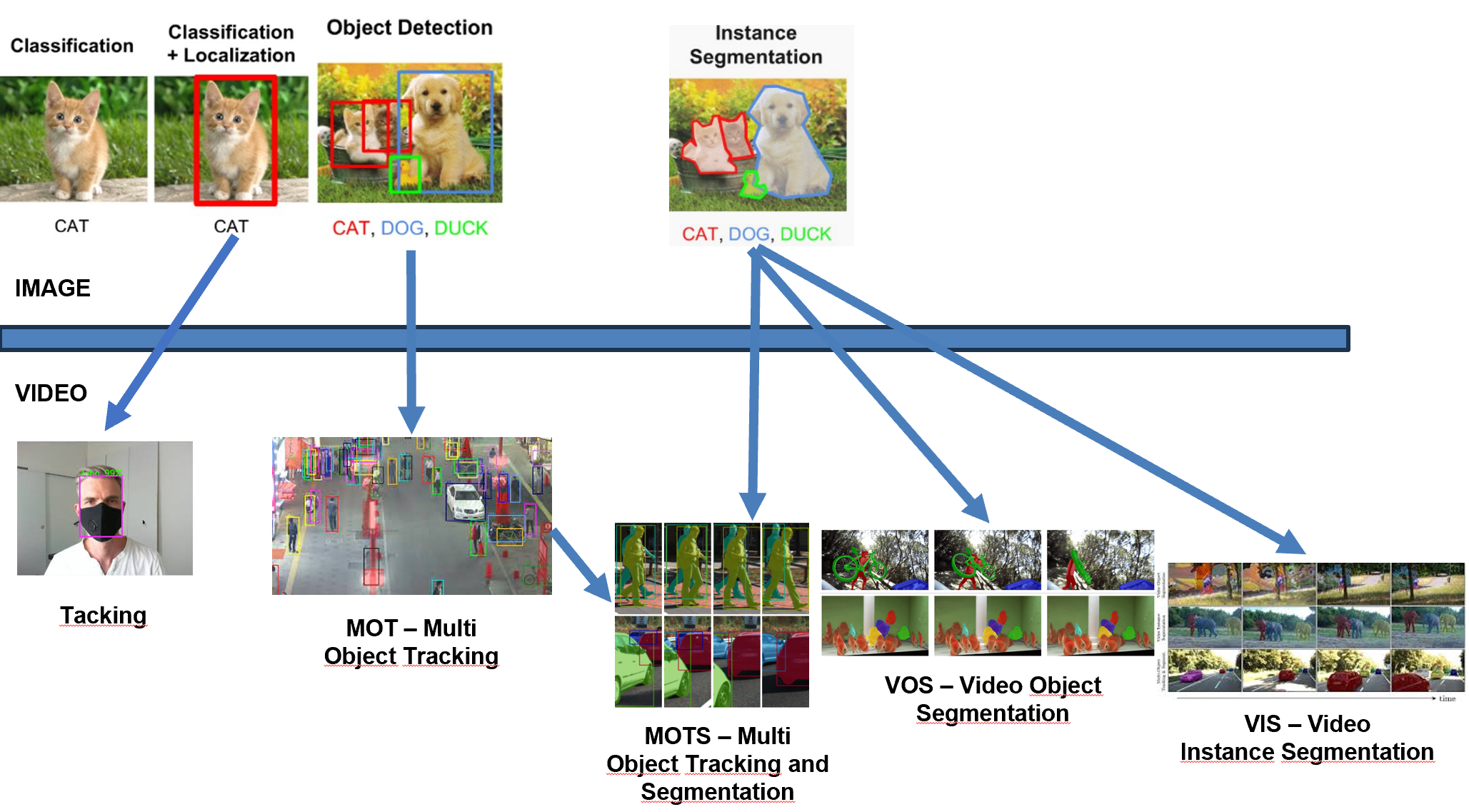

Machine vision tasks on images

classification

localization

object detection

segmentation

Image classification

Assigns a label to the entire image.

Single object task

Example datasets: ImageNet

Localization

Identifies the location of an object from a predefined list of classes using bounding boxes.

Example: Locate a car in an image.

The training data contains not just the one-hot class labels, but also bx, by, bw and bh defining the bounding box (center x, center y, width, height) and a binary value pc, which signals whether there is an object in the image at all.
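A minimal sketch of how one such target vector could be assembled. The ordering [pc, bx, by, bw, bh, one-hot classes], the normalization of the box to [0, 1], and the class list are assumptions chosen for illustration, not a fixed standard.

```python
import numpy as np

def localization_target(class_names, label=None, box=None):
    """Build [pc, bx, by, bw, bh, c_1, ..., c_K] for one training image.

    box = (bx, by, bw, bh): box center, width and height, assumed to be
    normalized to [0, 1] by the image size. With label=None (no object),
    pc = 0 and the other entries are "don't care" (left at 0 here).
    """
    y = np.zeros(5 + len(class_names), dtype=np.float32)
    if label is None:
        return y
    y[0] = 1.0                                   # pc: an object is present
    y[1:5] = box                                 # bounding box parameters
    y[5 + class_names.index(label)] = 1.0        # one-hot class label
    return y

classes = ["pedestrian", "car", "motorcycle"]      # hypothetical class list
print(localization_target(classes, "car", (0.5, 0.7, 0.3, 0.4)))
print(localization_target(classes))                # image without an object
```

When pc = 0, a typical loss only penalizes the pc entry and ignores the rest.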

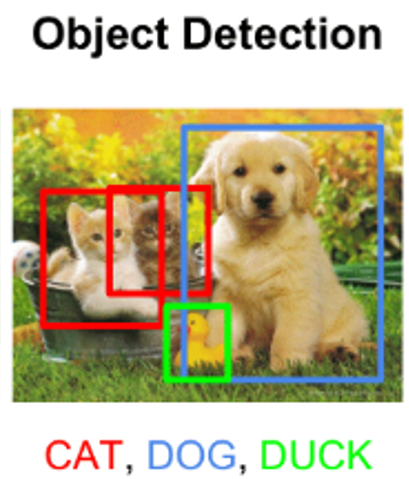

Object detection

Detects and localizes multiple objects from certain classes within an image.

Combines classification and localization.

Either slide a window across the whole image and have a convolutional network predict class probabilities for each crop, OR pass the whole image through a fully convolutional network once: each position of the resulting output map then holds the prediction for one sliding-window position (much cheaper, since overlapping windows share computation).

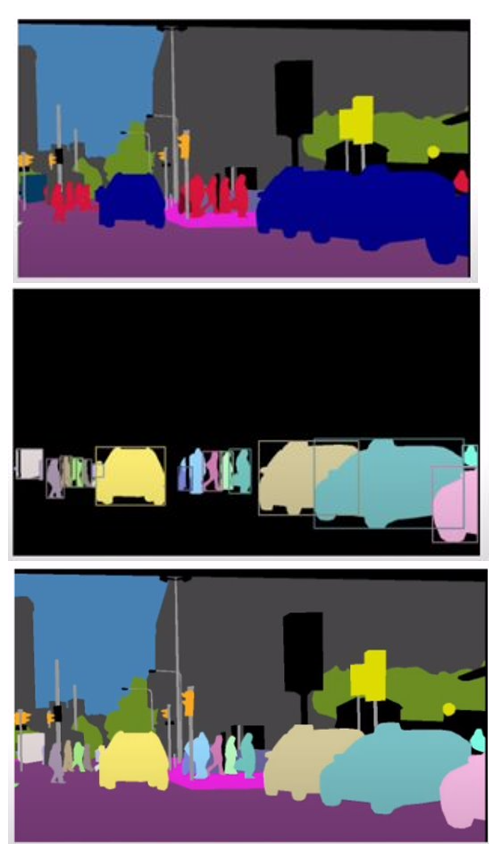

Segmentation tasks

Semantic Segmentation: Groups pixels by category (e.g., sky, road).

Instance Segmentation: Groups pixels by the individual object instance they belong to (e.g., car #1 vs. car #2), for objects of certain classes

Panoptic Segmentation: Combines semantic and instance segmentation for a comprehensive output. → assigns a class to each pixel, but differentiates instances too

Segmentation datasets

Pascal VOC (20 object categories for instance segmentation)

MS COCO (80 thing categories, 91 stuff categories)

Cityscapes (semantic segmentation of street scenes from 50 different cities)

Two main object types in CV tasks

Things: objects that have a well-defined geometry and are countable, like people, cars, animals

Stuff: amorphous regions without a well-defined geometry, identified mainly by their texture and material, like the sky, road, water bodies, etc.

Machine vision tasks on video

Tracking: Monitoring object movement across frames, using bounding boxes

MOT (Multi Object Tracking): Tracks multiple objects simultaneously.

MOTS (Multi Object Tracking and Segmentation): Combines MOT with instance segmentation

VOS (Video Object Segmentation): Separates moving objects from the background.

VIS (Video Instance Segmentation): Detects, segments, classifies, and tracks object instances.

MOTS

Combines tracking (following objects through frames) and instance segmentation (differentiating individual objects in a category).

Outputs instance masks for each tracked object in each frame

Use case: autonomous driving, where each car or pedestrian is uniquely identified over time.

VOS

Segments moving objects in a video without focusing on identifying or tracking individual instances.

Emphasis on object-background separation.

Doesn’t do any tracking

Use case: video editing, where a moving object (e.g., a person or animal) needs to be isolated for further processing.

VIS

Combines tracking, segmenting, and classifying instances.

VIS focuses on classification (e.g., identifying a "cat" or "dog"), while MOTS may not always require classifying objects but focuses on tracking and segmentation.

Example datasets: YouTube VIS, OVIS (occluded)

IoU

Intersection over union

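=> the measure of the overlap between two bounding boxes: the area of their intersection divided by the area of their union (1 for identical boxes, 0 for disjoint ones)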
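A short sketch of IoU for axis-aligned boxes, assuming the (x1, y1, x2, y2) corner format (other code bases use center/width/height instead).

```python
def iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    # Intersection rectangle (clamped to zero size if the boxes do not overlap)
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # intersection 1, union 7 -> 1/7
```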

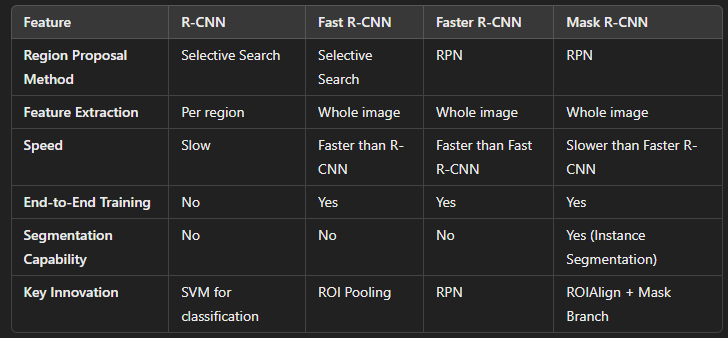

R-CNN family

R-CNN

Uses ImageNet-pretrained backbone.

Employs Non-Max Suppression (NMS) and Hard Negative Mining.

Fast R-CNN

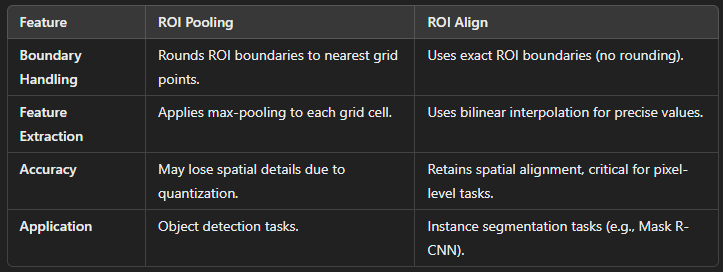

Introduces ROI Pooling for efficient region extraction.

Runs the CNN only once on the whole image to extract features

Faster R-CNN

End-to-end training with Region Proposal Networks (RPN).

Utilizes anchors for predefined bounding boxes.

Mask R-CNN

Extends Faster R-CNN to instance segmentation tasks.

Uses ROI Align instead of ROI Pooling

Adds FPN to get multi-scale features

Selective search

Purpose:

Generates region proposals for object detection by grouping similar pixels in an image.

How It Works:

Initial Segmentation:

Divides the image into small initial regions using a segmentation algorithm (Felzenszwalb and Huttenlocher's graph-based segmentation)

Region Similarity Calculation:

Measures similarity between adjacent regions using color histograms, texture patterns, size similarity, shape compatibility

Hierarchical Grouping:

Iteratively merges the most similar pair of adjacent regions into a larger region, until the whole image is a single region.

Produces a hierarchy of region proposals at multiple scales.

Region Proposal Output:

Outputs ~2000 candidate regions with bounding boxes.
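The hierarchical grouping loop can be sketched as below. This is a toy version under strong simplifications: the initial over-segmentation is passed in, and similarity uses only mean intensity plus a small-region bonus instead of the full color/texture/size/fill measures. For real use, OpenCV's contrib module ships an implementation (`cv2.ximgproc.segmentation.createSelectiveSearchSegmentation`).

```python
import numpy as np

def selective_search_sketch(image, labels):
    """Toy hierarchical grouping in the spirit of Selective Search.

    image:  (H, W) grayscale array.
    labels: (H, W) int array, an initial over-segmentation (assumed given).
    Returns the bounding box (x1, y1, x2, y2) of every region in the hierarchy.
    """
    regions = {}
    for r in np.unique(labels):
        ys, xs = np.nonzero(labels == r)
        regions[int(r)] = {"mean": float(image[ys, xs].mean()), "size": len(ys),
                           "box": (int(xs.min()), int(ys.min()),
                                   int(xs.max()) + 1, int(ys.max()) + 1)}
    # Pairs of regions that touch horizontally or vertically
    adj = set()
    for left, right in [(labels[:, :-1], labels[:, 1:]), (labels[:-1, :], labels[1:, :])]:
        for p, q in zip(left.ravel(), right.ravel()):
            if p != q:
                adj.add((int(min(p, q)), int(max(p, q))))
    total = labels.size

    def sim(a, b):
        ra, rb = regions[a], regions[b]
        color = 1.0 - abs(ra["mean"] - rb["mean"]) / 255.0   # similar intensity
        size = 1.0 - (ra["size"] + rb["size"]) / total        # merge small regions first
        return color + size

    proposals = [r["box"] for r in regions.values()]
    next_id = max(regions) + 1
    while adj:
        a, b = max(adj, key=lambda pair: sim(*pair))   # most similar neighbors
        ra, rb = regions.pop(a), regions.pop(b)
        size = ra["size"] + rb["size"]
        box = (min(ra["box"][0], rb["box"][0]), min(ra["box"][1], rb["box"][1]),
               max(ra["box"][2], rb["box"][2]), max(ra["box"][3], rb["box"][3]))
        regions[next_id] = {"mean": (ra["mean"] * ra["size"] + rb["mean"] * rb["size"]) / size,
                            "size": size, "box": box}
        # Neighbors of a or b become neighbors of the merged region
        new_adj = set()
        for p, q in adj:
            p = next_id if p in (a, b) else p
            q = next_id if q in (a, b) else q
            if p != q:
                new_adj.add((min(p, q), max(p, q)))
        adj = new_adj
        proposals.append(box)              # every level of the hierarchy is a proposal
        next_id += 1
    return proposals

img = np.zeros((4, 4)); img[:, 2:] = 200           # dark left half, bright right half
lab = np.array([[0, 0, 1, 1], [0, 0, 1, 1], [2, 2, 3, 3], [2, 2, 3, 3]])
print(selective_search_sketch(img, lab))
```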

Advantages:

Simple and effective for generating object proposals.

Class-agnostic and doesn’t require training.

Drawbacks:

Computationally expensive and slow.

Not optimized for specific datasets or tasks.

Applications:

Used in early object detection models like R-CNN and Fast R-CNN.

Non-Max Suppression (NMS)

Purpose: select the best bounding box from overlapping detections to avoid duplicate predictions.

Process:

Calculate a confidence score for each detected object (e.g., from the object classification network).

Sort all boxes by confidence scores in descending order.

For the box with the highest score:

Retain it as a final detection.

Compare it to all remaining boxes and remove those whose Intersection over Union (IoU) with it is above a certain threshold.

Repeat with the highest-scoring remaining box until no boxes are left.
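A NumPy sketch of this greedy procedure, assuming (x1, y1, x2, y2) boxes; the default thresholds are illustrative. Production code typically uses an optimized version such as `torchvision.ops.nms`.

```python
import numpy as np

def nms(boxes, scores, iou_threshold=0.5, score_threshold=0.0):
    """Greedy NMS. boxes: (N, 4) as (x1, y1, x2, y2); returns indices of kept boxes."""
    boxes = np.asarray(boxes, dtype=float)
    scores = np.asarray(scores, dtype=float)
    # Confidence threshold first, then sort survivors by descending score
    candidates = np.nonzero(scores >= score_threshold)[0]
    order = candidates[np.argsort(-scores[candidates])]
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(int(i))                      # best remaining box is a final detection
        rest = order[1:]
        # IoU of box i with every remaining box (vectorized)
        ix1 = np.maximum(boxes[i, 0], boxes[rest, 0])
        iy1 = np.maximum(boxes[i, 1], boxes[rest, 1])
        ix2 = np.minimum(boxes[i, 2], boxes[rest, 2])
        iy2 = np.minimum(boxes[i, 3], boxes[rest, 3])
        inter = np.clip(ix2 - ix1, 0, None) * np.clip(iy2 - iy1, 0, None)
        ious = inter / (areas[i] + areas[rest] - inter)
        order = rest[ious <= iou_threshold]      # suppress heavy overlaps
    return keep

boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores, iou_threshold=0.5))   # → [0, 2]
```

Box 1 overlaps box 0 with IoU 81/119 ≈ 0.68, so it is suppressed at a 0.5 threshold but would survive at 0.7: the IoU threshold directly controls how close two detections may be.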

Key Parameters:

Confidence score threshold: Filters low-confidence boxes.

IoU threshold: Determines the overlap level allowed between boxes.

Applications:

Used in object detection models like R-CNN, YOLO, and Faster R-CNN.

Challenges:

Can suppress valid detections in cases of high overlap.

Hard Negative Mining

Purpose:

Improves model performance by focusing training on difficult examples, mainly hard negatives: background regions that the model confidently misclassifies as objects (false positives).

Process:

During training, identify background examples that the model incorrectly classifies as objects.

Add these hard negatives to the training data with higher weights.

Retrain the model to better differentiate between background and objects.
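The selection step can be sketched as follows. The score threshold and the cap of `ratio` negatives per positive are hypothetical parameters introduced for illustration; actual detectors choose these differently.

```python
import numpy as np

def mine_hard_negatives(neg_scores, num_positives, ratio=3, score_threshold=0.5):
    """Return indices of the hardest negatives for the next training round.

    neg_scores: object-confidence scores the current model assigns to samples
    whose true label is background. Hard negatives are those scored above
    score_threshold (false positives), hardest first, capped at
    ratio * num_positives to keep the classes roughly balanced.
    """
    neg_scores = np.asarray(neg_scores, dtype=float)
    hard = np.nonzero(neg_scores > score_threshold)[0]            # false positives
    hard = hard[np.argsort(-neg_scores[hard], kind="stable")]     # hardest first
    return hard[: ratio * num_positives]

scores = [0.1, 0.9, 0.6, 0.4, 0.95, 0.7]
print(mine_hard_negatives(scores, num_positives=1))   # → [4 1 5]
```

The returned indices would then be added to (or up-weighted in) the training set before retraining.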

Applications:

Commonly used in object detection to reduce false detections.

Challenges:

Computationally expensive as it requires analyzing the entire dataset for hard negatives.

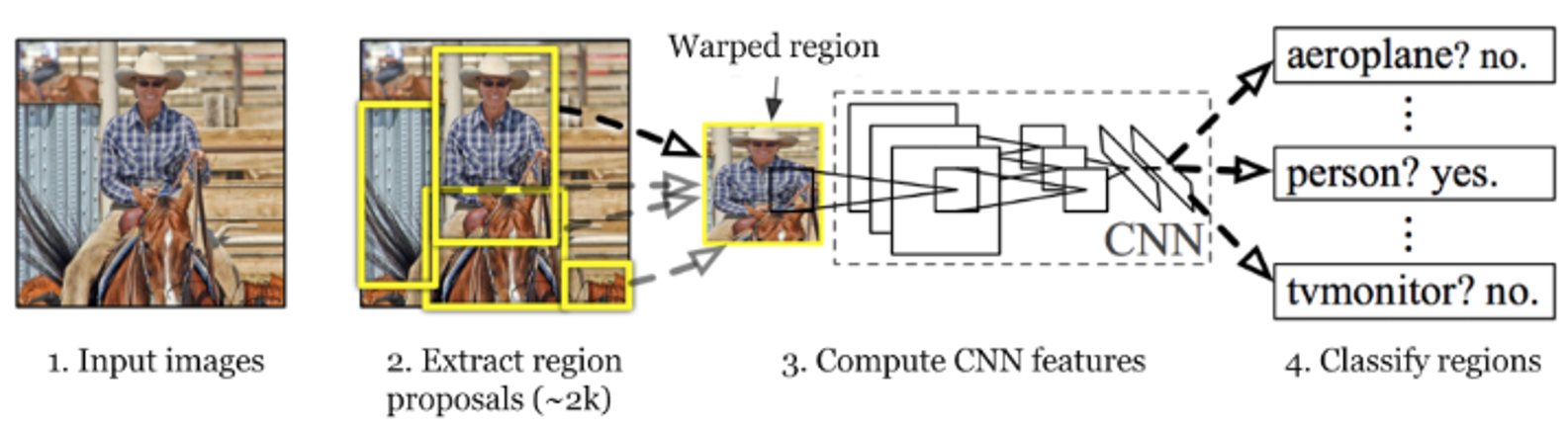

R-CNN

How It Works:

Region Proposals:

Uses an external algorithm (Selective Search) to generate ~2000 region proposals.

Feature Extraction:

Applies a convolutional neural network (CNN) pretrained on ImageNet (e.g., AlexNet) to each region proposal to extract a fixed-length feature vector.

Classification:

One linear Support Vector Machine (SVM) per class scores each region as that object class or background.

Bounding Box Regression:

Refines the bounding box coordinates.

NMS:

Selects the best bounding box from overlapping detections

Hard Negative Mining:

After initial training, run the classifiers over the dataset, collect the hard negatives, and retrain the SVMs with them for better performance.
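The bounding box regression step refines a proposal P toward a ground-truth box G by predicting scale-invariant offsets: t_x = (G_x − P_x)/P_w, t_y = (G_y − P_y)/P_h, t_w = log(G_w/P_w), t_h = log(G_h/P_h), with (x, y) the box center. The sketch below encodes and decodes these targets; the helper names are illustrative.

```python
import numpy as np

def to_center(box):
    """(x1, y1, x2, y2) -> (center x, center y, width, height)."""
    x1, y1, x2, y2 = box
    return (x1 + x2) / 2, (y1 + y2) / 2, x2 - x1, y2 - y1

def encode(proposal, gt):
    """Targets (t_x, t_y, t_w, t_h) that move proposal P onto ground truth G."""
    px, py, pw, ph = to_center(proposal)
    gx, gy, gw, gh = to_center(gt)
    return np.array([(gx - px) / pw, (gy - py) / ph, np.log(gw / pw), np.log(gh / ph)])

def decode(proposal, t):
    """Apply predicted offsets t to proposal P; returns (x1, y1, x2, y2)."""
    px, py, pw, ph = to_center(proposal)
    cx, cy = px + t[0] * pw, py + t[1] * ph          # shift the center
    w, h = pw * np.exp(t[2]), ph * np.exp(t[3])      # rescale width/height
    return np.array([cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2])

proposal, gt = (10, 10, 50, 30), (12, 8, 60, 36)
t = encode(proposal, gt)
print(t)
print(decode(proposal, t))   # recovers gt up to floating-point error
```

The same parameterization reappears later for the anchor "deltas" predicted by RPNs.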

Advantages:

Significant improvement over traditional object detection methods.

Drawbacks:

Computationally expensive due to:

Extracting features for every region.

Using separate pipelines for feature extraction, classification, and bounding box regression.

The ROIs get warped to the same size before being fed to the CNN → can distort information

Region of Interest (ROI) Pooling

Purpose:

Extracts fixed-size feature maps for regions of interest (ROIs) in an image.

Process:

Input:

Feature map (from a convolutional layer).

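ROIs (bounding boxes from a region proposal method, e.g., Selective Search or a region proposal network).

Project each ROI onto the feature map (rounding its coordinates to integers) and divide it into a grid of fixed dimensions (e.g., 7x7).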

Apply max-pooling within each grid cell to reduce the region to a fixed size.
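A single-channel sketch of ROI pooling. The backbone stride of 16 (`spatial_scale=1/16`) and the floor/ceil bin boundaries are assumptions; real implementations such as `torchvision.ops.roi_pool` operate on batched multi-channel tensors.

```python
import numpy as np

def roi_pool(feature_map, roi, output_size=(7, 7), spatial_scale=1 / 16):
    """Max-pool one ROI (x1, y1, x2, y2 in image pixels) into a fixed grid."""
    h, w = feature_map.shape
    # Project onto the feature map and round: this quantization is what ROI Align removes
    x1, y1, x2, y2 = [int(round(c * spatial_scale)) for c in roi]
    x1, y1 = min(max(x1, 0), w - 1), min(max(y1, 0), h - 1)
    x2, y2 = min(max(x2, x1 + 1), w), min(max(y2, y1 + 1), h)
    region = feature_map[y1:y2, x1:x2]
    out_h, out_w = output_size
    out = np.zeros(output_size, dtype=feature_map.dtype)
    # Bin edges splitting the region into out_h x out_w cells
    ys = np.linspace(0, region.shape[0], out_h + 1)
    xs = np.linspace(0, region.shape[1], out_w + 1)
    for i in range(out_h):
        for j in range(out_w):
            y_lo, y_hi = int(np.floor(ys[i])), int(np.ceil(ys[i + 1]))
            x_lo, x_hi = int(np.floor(xs[j])), int(np.ceil(xs[j + 1]))
            cell = region[y_lo:max(y_hi, y_lo + 1), x_lo:max(x_hi, x_lo + 1)]
            out[i, j] = cell.max()                   # max-pool within the cell
    return out

fm = np.arange(64, dtype=float).reshape(8, 8)      # toy 8x8 feature map
print(roi_pool(fm, (0, 0, 128, 128), output_size=(2, 2)))
```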

Advantages:

Enables downstream fully connected layers to process regions of different sizes.

Reduces computation while retaining important features.

Applications:

Integral to Fast R-CNN and Faster R-CNN architectures.

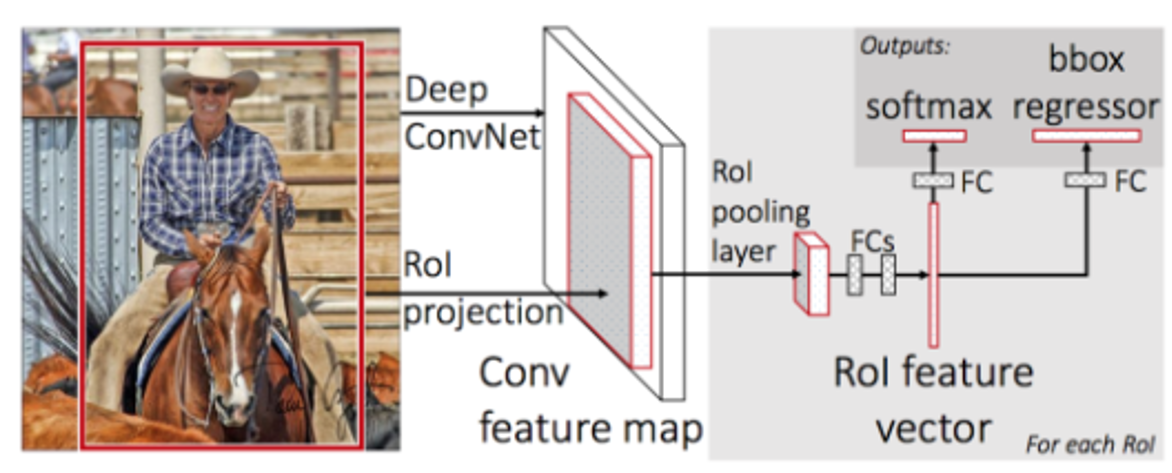

Fast R-CNN

How It Works:

Single Forward Pass:

Applies a CNN (e.g., VGG16) to the entire image to generate a feature map.

ROI Projection:

Maps ROIs from the original image space onto the feature map

ROI coordinates are scaled by the backbone’s total stride (e.g., divided by 16 for VGG16) to match the feature map’s dimensions

ROI Pooling:

Extracts fixed-size feature maps for region proposals (from Selective Search) using ROI pooling.

Unified Network:

Shared fully connected layers feed two sibling heads that perform classification (softmax) and bounding box regression simultaneously.

Advantages:

Faster than R-CNN:

Feature extraction is done once for the entire image.

Combines classification and bounding box regression into one step.

Trainable end-to-end in a single stage (apart from the external region proposals).

Drawbacks:

Still relies on Selective Search for region proposals, which is computationally expensive.

Anchor boxes

Purpose:

Predefined bounding boxes used in RPNs to detect objects of different sizes and aspect ratios.

Characteristics:

Anchors are centered at specific points on the feature map.

Each anchor has a predefined scale (e.g., small, medium, large) and aspect ratio (e.g., 1:1, 1:2, 2:1).

Process:

RPNs predict adjustments (deltas) to the anchors to refine the bounding boxes.
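A sketch of anchor generation over a feature map. The defaults (stride 16, scales 128/256/512, aspect ratios 0.5/1/2, giving k = 9) follow the setting commonly cited for Faster R-CNN with VGG16, but treat them as assumptions; `ratio` here means height/width.

```python
import numpy as np

def generate_anchors(feat_h, feat_w, stride=16, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return (feat_h * feat_w * k, 4) anchors as (x1, y1, x2, y2), k = len(scales) * len(ratios)."""
    base = []
    for s in scales:
        for r in ratios:
            w, h = s / np.sqrt(r), s * np.sqrt(r)       # area stays s**2, h / w = r
            base.append([-w / 2, -h / 2, w / 2, h / 2])  # centered at the origin
    base = np.array(base)                                # (k, 4)
    # Anchor centers: middle of each feature-map cell, in image pixels
    cx = (np.arange(feat_w) + 0.5) * stride
    cy = (np.arange(feat_h) + 0.5) * stride
    cx, cy = np.meshgrid(cx, cy)                         # (feat_h, feat_w)
    shifts = np.stack([cx, cy, cx, cy], axis=-1).reshape(-1, 1, 4)
    return (shifts + base[None]).reshape(-1, 4)

anchors = generate_anchors(2, 3)
print(anchors.shape)   # (54, 4): 6 positions x 9 anchors
```

The RPN then predicts, for every one of these anchors, an objectness score and the (t_x, t_y, t_w, t_h) deltas that refine it.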

Advantages:

Enables detection of objects with varying scales and shapes.

Challenges:

Requires careful tuning of anchor sizes and aspect ratios to match the dataset.

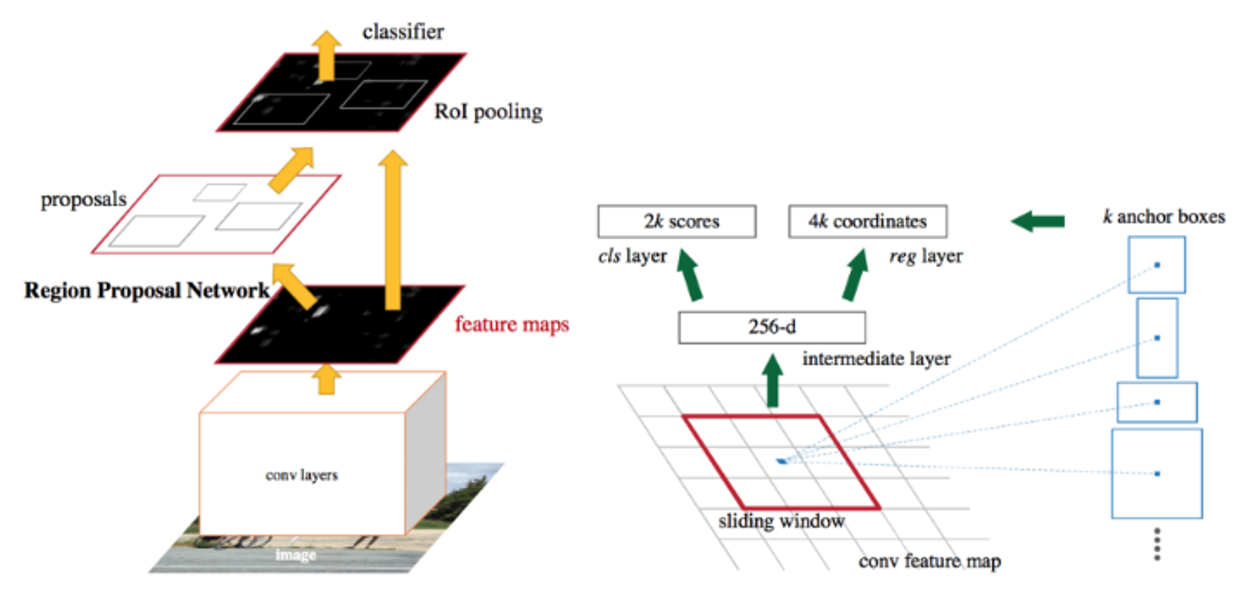

Region Proposal Network (RPN)

Purpose:

Generates candidate object bounding boxes (region proposals) for object detection.

Process:

Places anchor points at regular intervals: one at every position of the feature map

For each anchor point, we have k predefined anchor boxes of different sizes and aspect ratios

For each anchor box:

Predicts objectness scores (whether the region contains an object).

Regresses bounding box coordinates.

Applies NMS to filter overlapping proposals.

Advantages:

End-to-end trainable with the detection network.

Faster and more efficient than traditional methods like Selective Search.

Faster R-CNN

How It Works:

Feature Extraction:

A CNN generates a feature map for the entire image.

Region Proposal Network (RPN):

Replaces Selective Search with an RPN that generates region proposals directly on the feature map.

The RPN shares the backbone features with the detection head and is trained together with it, so proposals are almost free to compute.

ROI Pooling:

Extracts fixed-size feature maps for the proposed regions.

Classification and Bounding Box Regression:

Performed on the ROI feature maps.

Advantages:

Faster than Fast R-CNN due to the RPN.

Fully end-to-end trainable.

Drawbacks:

Outputs only boxes, not pixel-level masks, so it cannot handle segmentation tasks on its own.

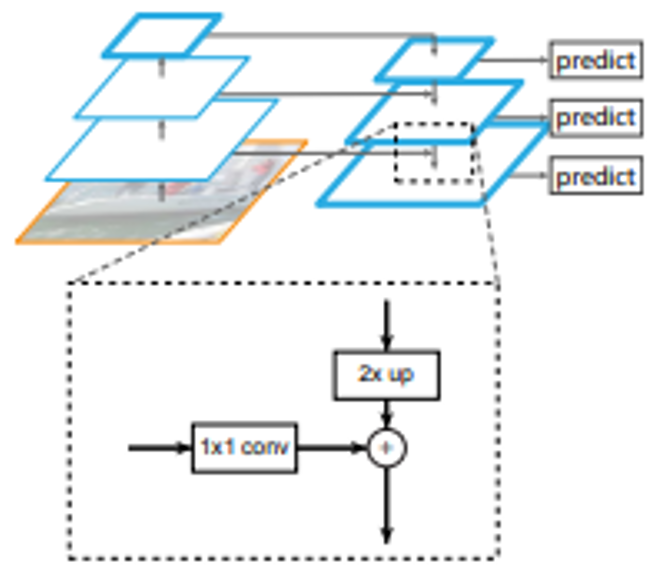

Feature Pyramid Network (FPN)

Purpose:

Enhance object detection by effectively utilizing multi-scale features for detecting objects of various sizes.

Let high-level semantic features “flow back down” to the lower-level feature maps, which have the highest spatial resolution

How It Works:

Feature Extraction:

Extracts feature maps from different levels of the CNN backbone (e.g., ResNet), corresponding to different spatial resolutions.

Top-Down Pathway:

High-level semantic features (e.g., from deeper layers, with smaller spatial dimensions but richer in object context) are upsampled progressively.

Lateral Connections:

Combines upsampled features with lower-level features (higher spatial resolution) through lateral connections to retain spatial details

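→ merged by element-wise addition (the upper map is upsampled 2x; the lower-level map first passes through a 1x1 convolution to match the channel count)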

Pyramid Outputs:

Produces a pyramid of feature maps at multiple scales, each containing both spatial details and semantic context.

Usage in Detection:

Each scale in the pyramid is used for detecting objects of corresponding sizes.
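A NumPy sketch of the top-down pathway with lateral connections. The 1x1 lateral convolutions use random weights as stand-ins for learned ones, upsampling is 2x nearest-neighbor, and the 3x3 smoothing convolution FPN applies after each merge is omitted.

```python
import numpy as np

def fpn_top_down(features, out_channels=4, seed=0):
    """Merge backbone maps [C2, C3, ...] (fine to coarse, each (C_i, H_i, W_i),
    each level half the size of the previous) into pyramid maps [P2, P3, ...]."""
    rng = np.random.default_rng(seed)
    # Lateral 1x1 convolution == per-pixel linear map over channels
    laterals = []
    for f in features:
        w = rng.standard_normal((out_channels, f.shape[0]))
        laterals.append(np.einsum("oc,chw->ohw", w, f))
    outputs = [laterals[-1]]                                  # coarsest level starts the pathway
    for lat in reversed(laterals[:-1]):
        up = outputs[0].repeat(2, axis=1).repeat(2, axis=2)   # 2x nearest upsampling
        outputs.insert(0, lat + up[:, : lat.shape[1], : lat.shape[2]])  # merge by addition
    return outputs

c2, c3, c4 = np.ones((8, 16, 16)), np.ones((16, 8, 8)), np.ones((32, 4, 4))
pyramid = fpn_top_down([c2, c3, c4])
print([p.shape for p in pyramid])   # [(4, 16, 16), (4, 8, 8), (4, 4, 4)]
```

Because P2 contains the upsampled P3 and P4 terms, the semantics of the deepest level reach the finest map.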

Advantages:

Improves detection of small objects (e.g., detecting small pedestrians or distant cars).

Efficient multi-scale feature representation.

ROI Align

Purpose:

To map bounding boxes (Region Proposals) from the original image space to the feature map with high precision.

To avoid the spatial misalignment caused by the rounding operations in ROI Pooling.

Essential for tasks like instance segmentation, where pixel-level accuracy is required.

How it works:

Each ROI (bounding box) is mapped to the continuous coordinates on the feature map based on the downsampling ratio of the CNN backbone.

Instead of rounding ROI boundaries to the nearest grid point (as in ROI Pooling), ROI Align uses the exact floating-point coordinates.

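Within each output grid cell, samples a few regularly spaced points (e.g., 4) and calculates the feature value at each using bilinear interpolation:

Interpolates the values of the four neighboring feature map points to compute each sampled value; the samples in a cell are then aggregated (max or average) into the cell’s output value.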

Output:

Produces a fixed-size feature map for each ROI, preserving spatial details.
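A single-channel sketch of ROI Align, assuming 2x2 sampling points per bin aggregated by averaging and the convention that feature value `fm[y, x]` sits exactly at coordinate (y, x). Library versions (e.g., `torchvision.ops.roi_align` with its `aligned` flag) differ in such half-pixel details.

```python
import numpy as np

def bilinear(fm, y, x):
    """Bilinearly interpolate a single-channel map at continuous (y, x), clamped to the map."""
    h, w = fm.shape
    y, x = min(max(y, 0.0), h - 1.0), min(max(x, 0.0), w - 1.0)
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    return (fm[y0, x0] * (1 - dy) * (1 - dx) + fm[y0, x1] * (1 - dy) * dx
            + fm[y1, x0] * dy * (1 - dx) + fm[y1, x1] * dy * dx)

def roi_align(fm, roi, output_size=(7, 7), spatial_scale=1 / 16, samples=2):
    """ROI Align for one ROI: no rounding anywhere; each bin averages
    samples x samples bilinearly interpolated points."""
    x1, y1, x2, y2 = [c * spatial_scale for c in roi]     # continuous coordinates
    out_h, out_w = output_size
    bin_h, bin_w = (y2 - y1) / out_h, (x2 - x1) / out_w
    out = np.zeros(output_size)
    for i in range(out_h):
        for j in range(out_w):
            vals = [bilinear(fm,
                             y1 + (i + (sy + 0.5) / samples) * bin_h,
                             x1 + (j + (sx + 0.5) / samples) * bin_w)
                    for sy in range(samples) for sx in range(samples)]
            out[i, j] = np.mean(vals)                      # average the sampled points
    return out

fm = np.tile(np.arange(8.0), (8, 1))                       # fm[y, x] = x, a horizontal ramp
print(roi_align(fm, (1.3, 0.0, 2.3, 1.0), output_size=(1, 1), spatial_scale=1.0))
```

On the ramp, the fractional ROI starting at x = 1.3 yields 1.8 (the mean of samples at 1.55 and 2.05); ROI Pooling would first round 1.3 to 1 and lose that offset.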

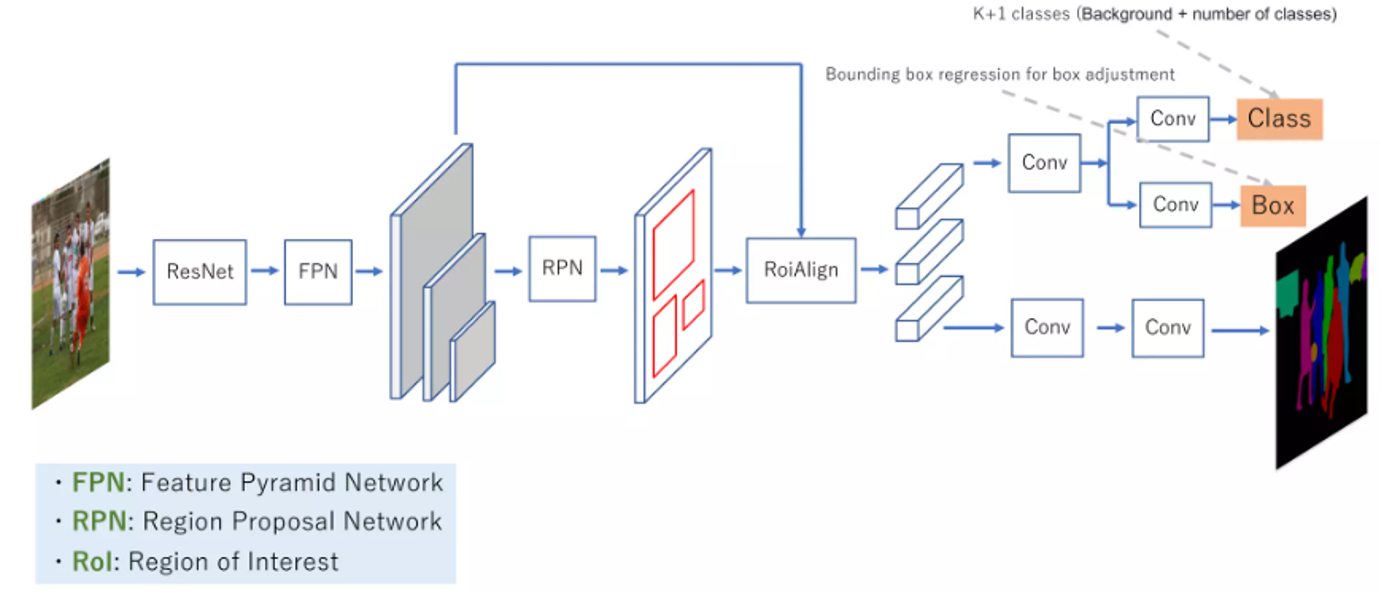

Mask R-CNN

How It Works:

Builds on Faster R-CNN:

Adds a branch to predict segmentation masks for each region.

Uses FPN to get multi-scale features

Mask Branch:

For each ROI, predicts a binary mask (pixel-wise classification) for the object.

ROIAlign:

Improves ROI pooling by avoiding quantization errors, leading to better mask accuracy.

Multi-task Learning:

Simultaneously performs:

Object classification.

Bounding box regression.

Instance segmentation.

Advantages:

Handles pixel-level segmentation (instance segmentation).

ROIAlign improves spatial precision compared to ROI Pooling.

Drawbacks:

More computationally expensive than Faster R-CNN.

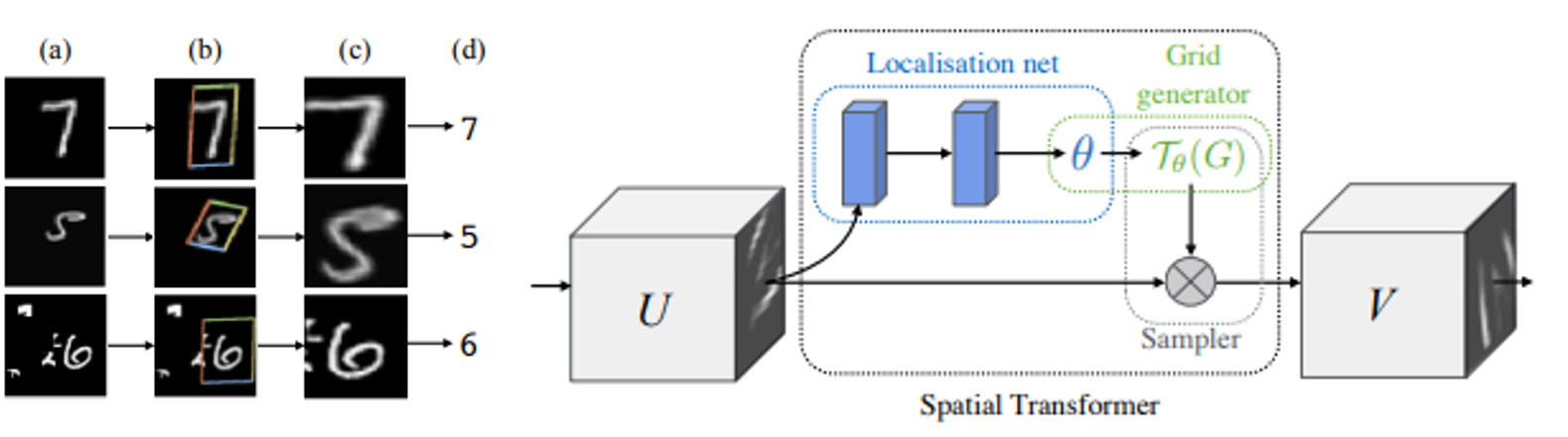

Spatial Transformer Networks (STN)

Purpose:

To make neural networks more spatially invariant by dynamically transforming input feature maps, improving robustness to variations like rotation, scaling, and translation.

How It Works:

Input:

A feature map or image that may have spatial distortions.

Three Components:

Localization Network:

Predicts transformation parameters (e.g., rotation, scaling, translation).

Typically implemented as a small CNN.

Grid Generator:

Creates a sampling grid based on the transformation parameters.

Sampler:

Samples the input at the locations defined by the grid (typically with bilinear interpolation) to produce the output.

Output:

A transformed feature map that is easier for the network to process.
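A sketch of the grid generator and sampler for a 2D affine transform, using normalized coordinates in [-1, 1]. In a real STN the 2x3 matrix `theta` is the output of the localization network; here it is fixed by hand. PyTorch exposes the same two steps as `torch.nn.functional.affine_grid` and `grid_sample`.

```python
import numpy as np

def affine_grid(theta, out_h, out_w):
    """Grid generator: send each output pixel's normalized (x, y) in [-1, 1]
    through the 2x3 affine matrix theta to a source location in the input."""
    ys, xs = np.meshgrid(np.linspace(-1, 1, out_h), np.linspace(-1, 1, out_w),
                         indexing="ij")
    coords = np.stack([xs, ys, np.ones_like(xs)], axis=-1)   # (H, W, 3), homogeneous
    return coords @ theta.T                                  # (H, W, 2) source (x, y)

def bilinear_sample(img, grid):
    """Sampler: read img at the normalized grid locations with bilinear weights
    (locations outside the image are clamped to the border)."""
    h, w = img.shape
    x = np.clip((grid[..., 0] + 1) * (w - 1) / 2, 0, w - 1)  # to pixel coordinates
    y = np.clip((grid[..., 1] + 1) * (h - 1) / 2, 0, h - 1)
    x0, y0 = np.floor(x).astype(int), np.floor(y).astype(int)
    x1, y1 = np.minimum(x0 + 1, w - 1), np.minimum(y0 + 1, h - 1)
    dx, dy = x - x0, y - y0
    return (img[y0, x0] * (1 - dx) * (1 - dy) + img[y0, x1] * dx * (1 - dy)
            + img[y1, x0] * (1 - dx) * dy + img[y1, x1] * dx * dy)

img = np.arange(20, dtype=float).reshape(4, 5)
identity = np.array([[1.0, 0, 0], [0, 1.0, 0]])
flip_x = np.array([[-1.0, 0, 0], [0, 1.0, 0]])   # stand-in for a predicted theta
print(bilinear_sample(img, affine_grid(flip_x, 4, 5)))
```

Since both steps are differentiable with respect to `theta`, the localization network can be trained by backpropagation without extra supervision.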

Advantages:

Reduces the need for extensive data augmentation.

Improves model performance on tasks involving spatial variations (e.g., rotated text recognition or detecting objects in tilted images).

Can be added to existing architectures without significant modifications.

Limitations:

Additional computational overhead due to transformation steps.

Requires careful tuning of the localization network

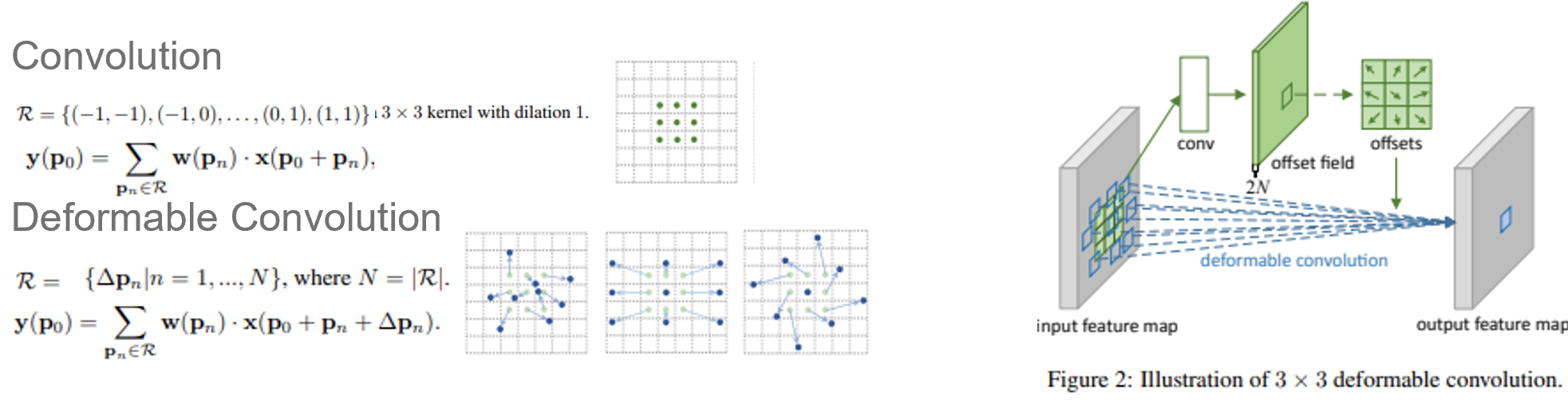

Deformable convolution

Main idea:

Adds learnable offsets Δpn to the standard fixed grid sampling locations in convolution

Sample the input feature map X at the shifted positions, using bilinear interpolation where needed (the offsets are fractional)

The offsets are predicted by an auxiliary convolutional layer applied to the same input feature map
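The output at location p0 is y(p0) = Σ_n w(p_n) · X(p0 + p_n + Δp_n), where p_n runs over the regular 3x3 grid. The sketch below evaluates this for one location of a single-channel input; the offsets are passed in directly instead of being predicted, and zero padding outside the input is an assumption.

```python
import numpy as np

def bilinear_zero_pad(x, sy, sx):
    """Bilinear interpolation of x at fractional (sy, sx); outside pixels count as 0."""
    h, w = x.shape
    y0, x0 = int(np.floor(sy)), int(np.floor(sx))
    val = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                val += x[yy, xx] * (1 - abs(sy - yy)) * (1 - abs(sx - xx))
    return val

def deformable_conv_at(x, weight, p0, offsets):
    """3x3 deformable convolution output at p0 = (y, x).

    weight: (3, 3) kernel; offsets: (9, 2) array of (Δy, Δx), one per kernel tap.
    """
    grid = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]   # regular grid p_n
    out = 0.0
    for n, (gy, gx) in enumerate(grid):
        sy = p0[0] + gy + offsets[n, 0]          # shifted sampling position
        sx = p0[1] + gx + offsets[n, 1]
        out += weight.ravel()[n] * bilinear_zero_pad(x, sy, sx)
    return out

x = np.arange(25.0).reshape(5, 5)
kernel = np.arange(9.0).reshape(3, 3)
print(deformable_conv_at(x, kernel, (2, 2), np.zeros((9, 2))))   # same as a normal 3x3 conv
```

With all offsets at zero this reduces to an ordinary convolution; non-zero offsets let each kernel tap read from a different, learned position.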

Purpose:

Allow the kernel to adapt to the geometric structure of the input.

Improve the model's ability to handle spatial variations, such as object deformation, rotation, and scaling, which traditional convolution struggles with due to its rigid sampling grid.

Deformable Convolutional Networks

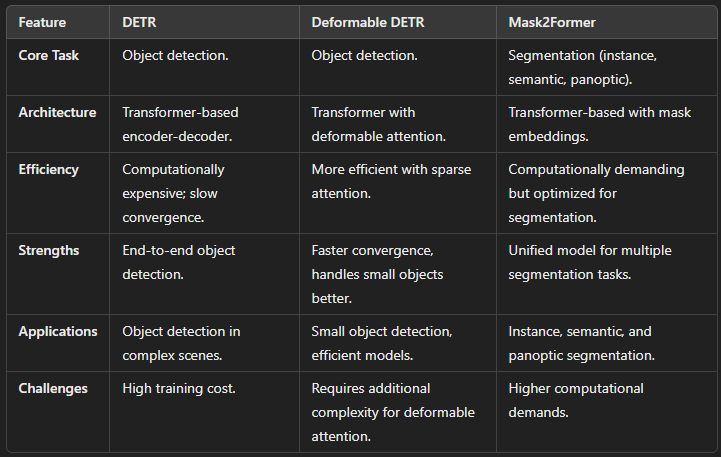

DETR (Detection Transformer)

End-to-end object detection using transformers.

Components:

Positional Encoding: Adds spatial information to the transformer.

Encoder-Decoder Architecture: Processes image features for object detection.

Drawbacks:

Computationally expensive for high-resolution images.

Slow convergence (long training schedules), and attention cost that grows quadratically with the number of feature-map positions.

Deformable DETR

Improves on DETR by:

Using sparse attention mechanisms for efficiency.

Handling complex scenes with better accuracy.

Mask2Former

Universal architecture for segmentation tasks (semantic, instance, and panoptic).

Also extends to video instance segmentation.

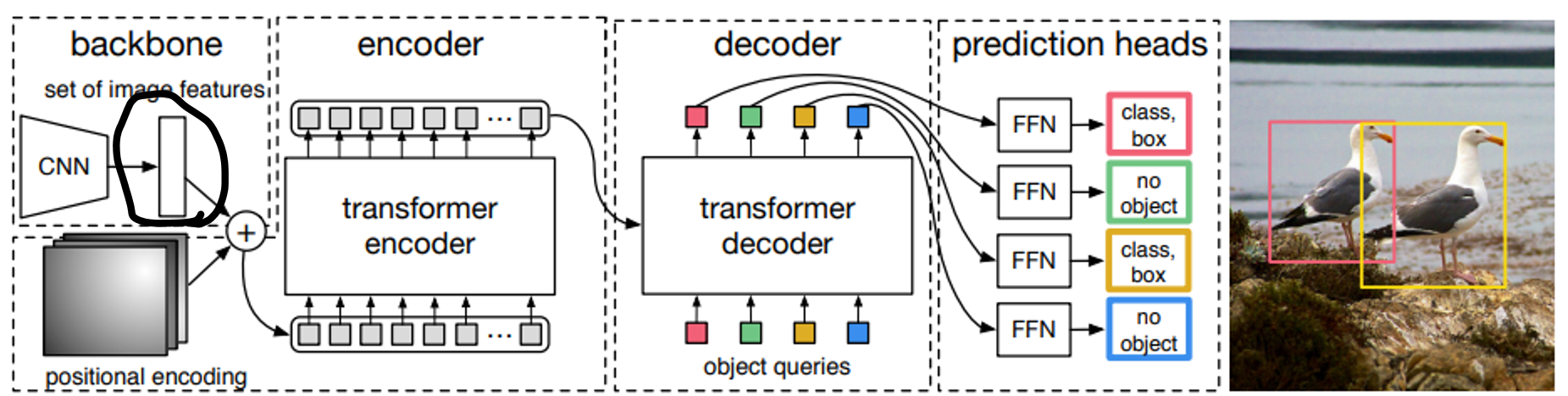

DEtection TRansformer (DETR)

Purpose:

Detect objects in an image end-to-end using a transformer architecture

Rethink object detection as a set prediction problem instead of using traditional methods like region proposals or anchors

How It Works:

Feature Extraction:

Uses a CNN backbone (e.g., ResNet) to extract feature maps from the input image.

Transformer Encoder-Decoder:

Encoder: Processes the feature map, capturing global context via multi-head self-attention

→ role: separate object instances

Decoder: Uses learnable generalized object queries which interact with the encoded image features to collect information about object classes and locations in the current image.

Prediction Heads:

Simple feed-forward (FC) layers convert the updated queries into concrete outputs

Separate heads for classification and bounding box prediction

Prediction Matching:

For each pair of a predicted query i and a ground truth object j, a matching cost is computed as a weighted sum of classification and bounding box terms; together these costs form the cost matrix.

The Hungarian algorithm finds the optimal one-to-one assignment between predictions and ground truth objects that minimizes the total matching cost.

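Outputs a fixed-size set of predictions (one bounding box and class distribution per query, including a "no object" class); queries not matched to any ground truth object are trained to predict "no object".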
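A small sketch of the matching step. The cost uses negative class probability plus a weighted L1 box distance; the weights are illustrative, and DETR's generalized-IoU cost term is omitted. To stay dependency-free it searches assignments exhaustively, which only works for tiny inputs; the Hungarian algorithm (e.g., `scipy.optimize.linear_sum_assignment`) finds the same optimum in polynomial time.

```python
import itertools
import numpy as np

def matching_cost(pred_probs, pred_boxes, gt_labels, gt_boxes, w_class=1.0, w_box=5.0):
    """cost[i, j] for pairing prediction i with ground-truth object j."""
    cls = -pred_probs[:, gt_labels]                                        # (N, M)
    l1 = np.abs(pred_boxes[:, None, :] - gt_boxes[None, :, :]).sum(-1)     # (N, M)
    return w_class * cls + w_box * l1

def brute_force_match(cost):
    """Optimal one-to-one assignment of M ground truths to N >= M predictions."""
    n, m = cost.shape
    best, best_perm = np.inf, None
    for perm in itertools.permutations(range(n), m):    # perm[j] = prediction for gt j
        total = sum(cost[perm[j], j] for j in range(m))
        if total < best:
            best, best_perm = total, perm
    return [(best_perm[j], j) for j in range(m)]        # (prediction, ground truth) pairs

pred_probs = np.array([[0.1, 0.8, 0.1], [0.7, 0.2, 0.1], [0.3, 0.3, 0.4]])
pred_boxes = np.array([[0.5, 0.5, 0.2, 0.2], [0.1, 0.1, 0.1, 0.1], [0.9, 0.9, 0.1, 0.1]])
gt_labels = np.array([0, 1])
gt_boxes = np.array([[0.1, 0.1, 0.1, 0.1], [0.5, 0.5, 0.2, 0.2]])
cost = matching_cost(pred_probs, pred_boxes, gt_labels, gt_boxes)
print(brute_force_match(cost))   # → [(1, 0), (0, 1)]; query 2 becomes "no object"
```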

Advantages and disadvantages of DETR

Advantages:

End-to-end training: directly predicts the bounding boxes and class labels without the need for complex post-processing steps like NMS

Unified architecture: uses a single architecture (a Transformer) for both the detection and classification tasks, no need for separate RPN and classifiers

Handles complex scenes with many objects.

Simple and general: fewer task-specific components (like anchor boxes)

Drawbacks:

Computationally expensive (transformer encoder has quadratic cost), especially for high-resolution images → issues with small object detection

Longer training time: slow convergence, because the attention weights are initially spread almost uniformly over the whole image ←→ CNNs have an inductive bias toward local neighborhoods

Needs large datasets

Positional encoding in DETR

Purpose of Positional Encoding:

Since the Transformer’s attention is permutation-invariant and has no built-in notion of sequential or spatial position, positional encoding is introduced to give the model a sense of where each feature lies in the image.

Helps the model differentiate between objects that are in the same class but in different spatial locations.

How Positional Encoding Works:

2D Positional Encoding: The original DETR uses a 2D grid-based positional encoding generated using sine and cosine functions of different wavelengths to represent both the horizontal and vertical positions of feature-map locations.

These encodings are added to the queries and keys of the attention layers (in every layer, not only once at the input), combining spatial position information with learned visual features.
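A sketch of a fixed 2D sine/cosine encoding: half of the channels encode the row, half the column, each using the 1D Transformer formula. DETR's reference code additionally normalizes coordinates to [0, 2π], so treat the exact scaling here as an assumption.

```python
import numpy as np

def sine_pos_encoding_2d(h, w, d_model=256, temperature=10000.0):
    """Fixed 2D sine/cosine encoding of shape (h, w, d_model)."""
    assert d_model % 4 == 0
    d = d_model // 2                                   # channels per axis
    scales = temperature ** (np.arange(0, d, 2) / d)   # (d/2,) geometric wavelengths

    def encode(pos):                                   # (n,) -> (n, d)
        angles = pos[:, None] / scales[None, :]
        enc = np.zeros((len(pos), d))
        enc[:, 0::2] = np.sin(angles)
        enc[:, 1::2] = np.cos(angles)
        return enc

    ey = encode(np.arange(h, dtype=float))             # (h, d): row encoding
    ex = encode(np.arange(w, dtype=float))             # (w, d): column encoding
    return np.concatenate([np.broadcast_to(ey[:, None, :], (h, w, d)),
                           np.broadcast_to(ex[None, :, :], (h, w, d))], axis=-1)

pos = sine_pos_encoding_2d(25, 34)        # e.g. a 25x34 backbone feature map
pos_flat = pos.reshape(-1, 256)           # one vector per feature-map position
print(pos_flat.shape)                     # (850, 256)
```

The flattened vectors are what gets added to the queries and keys of each attention layer.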

Queries in DETR

Definition:

Queries in DETR are learnable embeddings (vectors) that serve as placeholders for potential objects in an image.

Purpose:

To interact with the encoded image features via the transformer decoder and produce predictions for object detection (bounding boxes and class labels).

Key Characteristics

Learnable:

Queries are initialized as random embeddings and are optimized during training via backpropagation to generalize better across images.

Content-Independent (Initially):

At the start of decoding, queries are not tied to any specific image or object. They are generalized placeholders.

Content-Specific (During Decoding):

Queries interact with the encoded image features through cross-attention in the decoder, becoming specific to the objects in the image → the updated query features are used for this image’s predictions, while the learned query embeddings themselves stay unchanged

Key Benefits

Eliminates the need for predefined anchors or region proposals.

Queries dynamically adapt to the image content during decoding.

Supports end-to-end training and simplifies the detection pipeline.

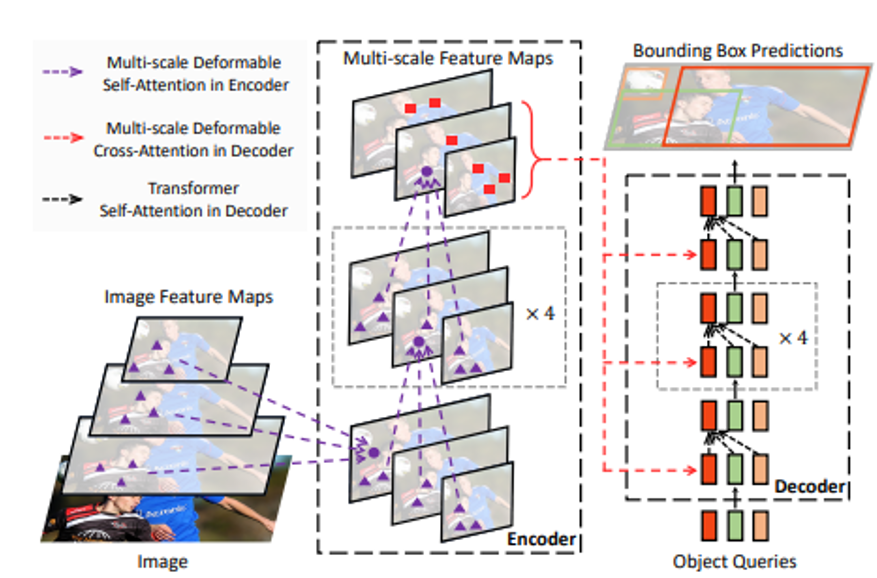

Deformable DETR

How It Works:

Builds on DETR but addresses its inefficiencies:

Sparse Attention:

Focuses attention on a small, learnable set of key points instead of all locations.

Deformable Attention Module:

Samples relevant regions dynamically for each object based on predicted offsets, adapting to their shape, position, and size.

Multi-scale feature maps:

Combines feature maps from different resolutions (like Feature Pyramid Networks) to detect objects of various sizes.

Advantages:

Faster convergence compared to DETR.

Better performance for complex scenes with occlusions or small objects.

Efficient computation → linear in the number of feature-map positions

Drawbacks:

Slightly more complex than DETR due to deformable attention mechanisms.

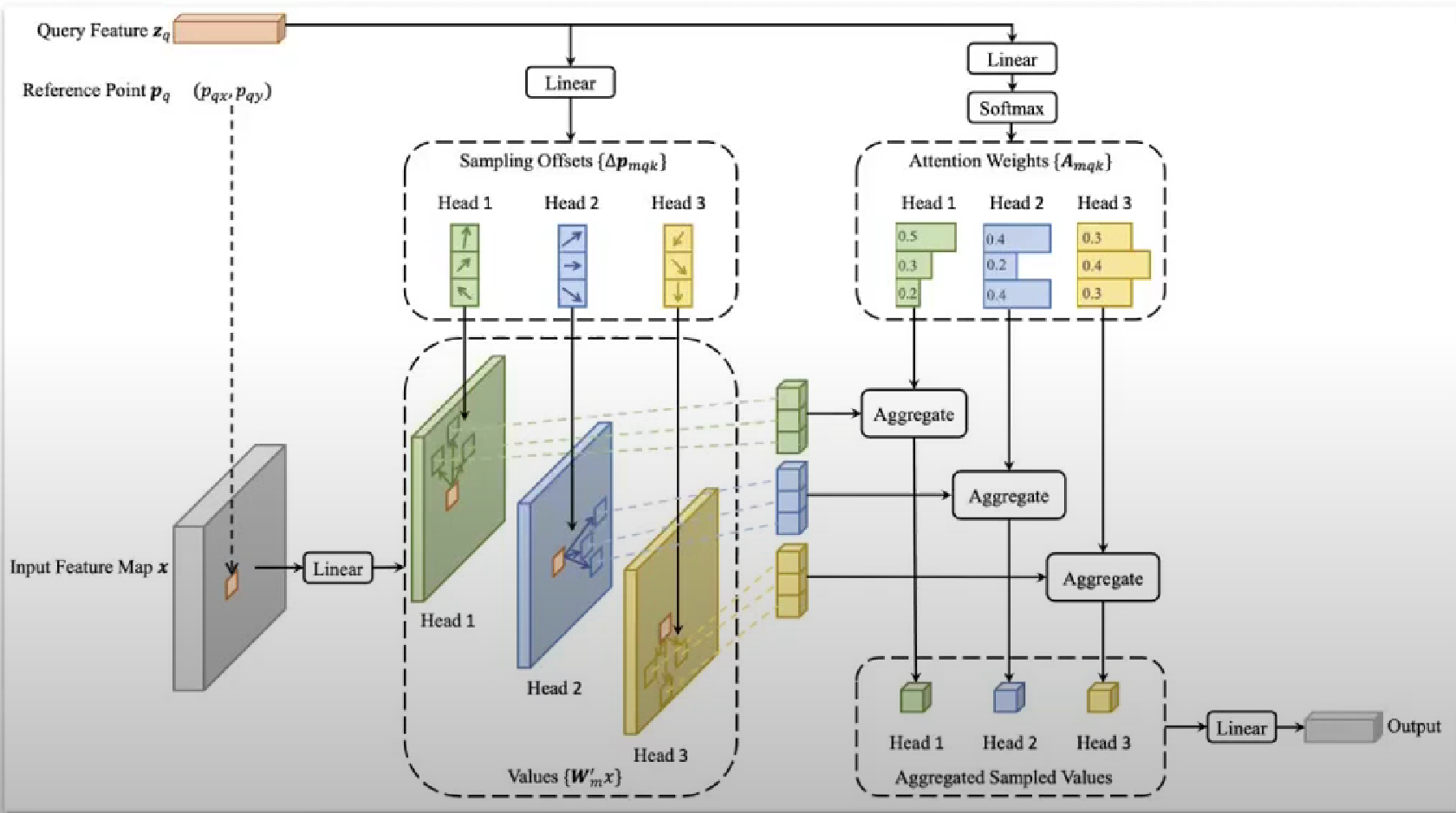

Deformable attention in Deformable DETR

Instead of attending to all positions, each query samples only K keys from the feature map per attention head

→ the sampling positions are obtained by predicting offsets from the query’s reference point

→ the sampled values get aggregated by weighted summation based on learnt attention weights

Number of queries:

In the encoder, there is a query for each position of the input feature map produced by a CNN backbone

In the decoder, the number of queries equals the number of outputs, and each query has a learned reference point

=> the cost is linear, O(N·K), instead of quadratic, O(N²), where N is the number of feature-map positions
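A sketch of deformable attention for one query, one head, and one feature level. The linear layers `w_offset` and `w_attn` are hypothetical stand-ins for learned weights; the real module also uses multiple heads and levels plus value and output projections.

```python
import numpy as np

def bilinear(fm, y, x):
    """Bilinear sample of an (H, W, C) map at fractional (y, x); zero outside."""
    h, w, c = fm.shape
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    out = np.zeros(c)
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                out += fm[yy, xx] * (1 - abs(y - yy)) * (1 - abs(x - xx))
    return out

def deformable_attention(query, ref_point, value_map, w_offset, w_attn):
    """query: (d,), ref_point: (y, x) in feature-map pixels, value_map: (H, W, C),
    w_offset: (2K, d) predicts K offsets, w_attn: (K, d) predicts K attention logits."""
    k = w_attn.shape[0]
    offsets = (w_offset @ query).reshape(k, 2)     # (K, 2) as (dy, dx)
    logits = w_attn @ query
    attn = np.exp(logits - logits.max())
    attn /= attn.sum()                             # softmax over the K samples only
    out = np.zeros(value_map.shape[-1])
    for (dy, dx), a in zip(offsets, attn):
        out += a * bilinear(value_map, ref_point[0] + dy, ref_point[1] + dx)
    return out                                     # K samples per query, not all H*W keys

rng = np.random.default_rng(0)
d, K = 8, 4
value_map = rng.standard_normal((10, 12, 16))
q = rng.standard_normal(d)
out = deformable_attention(q, (4.5, 6.0), value_map,
                           0.1 * rng.standard_normal((2 * K, d)), rng.standard_normal((K, d)))
print(out.shape)   # (16,)
```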

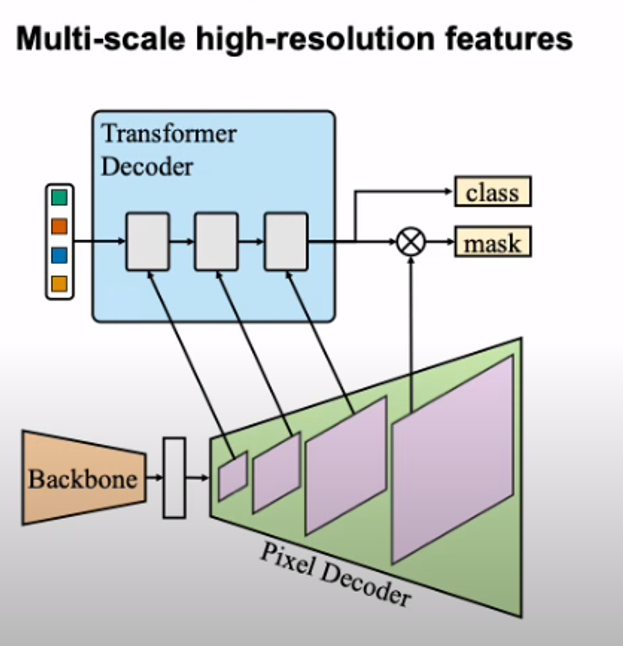

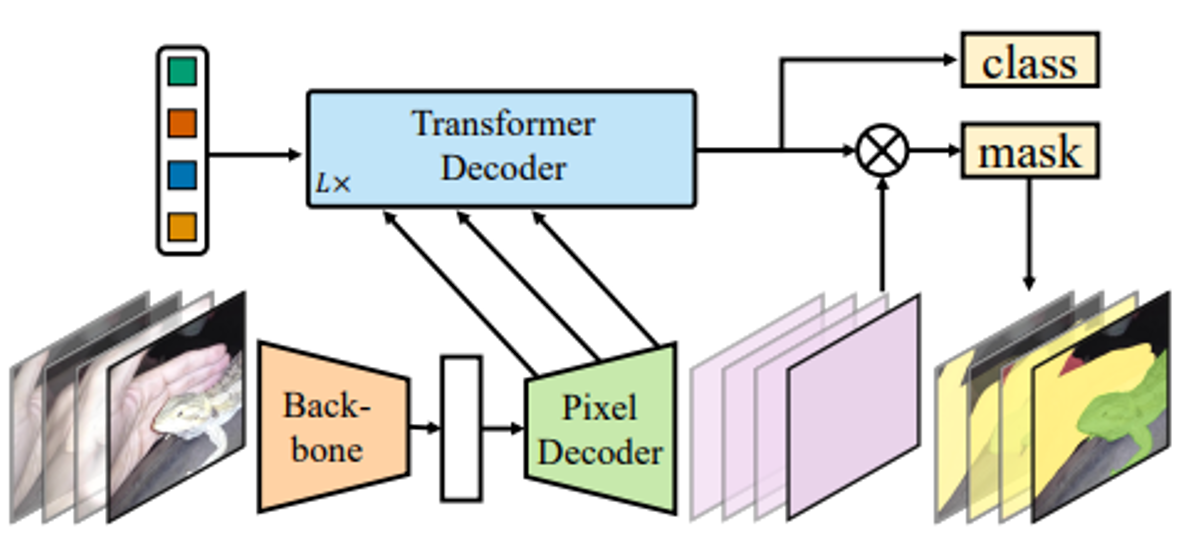

Mask2Former

How It Works:

Builds on the architecture of DETR, but it replaces bounding box prediction with dynamic mask prediction and incorporates a pixel decoder for fine-grained feature processing

Backbone:

A backbone (e.g., a ResNet CNN or a Swin Transformer) extracts multi-scale feature maps from the input image.

Pixel Decoder:

Aggregates the multi-scale features into a dense pixel-level high-resolution feature map.

These features retain fine-grained spatial information crucial for segmentation tasks.

Transformer Decoder:

Uses query-based attention to predict object masks and their corresponding classes.

Queries represent potential objects or regions in the image.

Mask Prediction Head:

Combines the output of the transformer decoder with the pixel decoder features to produce binary masks for each query.
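A sketch of the mask prediction step: each query's embedding is dotted with every per-pixel embedding from the pixel decoder, and a sigmoid yields an independent binary mask per query. The 0.5 threshold and the toy embeddings are assumptions for illustration.

```python
import numpy as np

def predict_masks(query_embed, pixel_embed, threshold=0.5):
    """query_embed: (Q, C) decoder outputs (after an MLP); pixel_embed: (C, H, W)
    per-pixel features from the pixel decoder. Mask logits = dot products."""
    logits = np.einsum("qc,chw->qhw", query_embed, pixel_embed)
    probs = 1.0 / (1.0 + np.exp(-logits))      # sigmoid: one binary mask per query
    return probs, probs > threshold

pixel_embed = np.zeros((2, 2, 2))
pixel_embed[0, 0, :], pixel_embed[0, 1, :] = 5.0, -5.0   # channel 0: "top row" feature
pixel_embed[1] = -pixel_embed[0]                          # channel 1: "bottom row" feature
query_embed = np.array([[1.0, 0.0], [0.0, 1.0]])
probs, masks = predict_masks(query_embed, pixel_embed)
print(masks)
```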

Advantages:

Generalizes instance, semantic, and panoptic segmentation into a single framework.

Achieved state-of-the-art results on segmentation benchmarks at the time of publication.

Drawbacks:

Computationally demanding for high-resolution images.

Mask2Former for video segmentation

Frame-Level Features:

Each frame is processed individually through the backbone and pixel decoder.

Query Propagation:

Queries are carried across frames to maintain consistency for objects appearing in multiple frames.

This allows the model to track objects over time.

Temporal Attention:

In addition to spatial cross-attention (within each frame), queries also attend to features from previous frames.

Output:

For each frame, the model produces segmentation masks and tracks object instances over time.

Masked attention in Mask2Former

Restricts attention to a specific subset of features or regions, defined by a mask

During the computation of the attention scores, invalid positions are masked (set to a large negative value, effectively ignored).

Where Masked Attention Appears in Mask2Former

During Mask Refinement:

Mask2Former uses dynamic masks predicted by the transformer decoder to guide attention.

For each query, the mask predicted by the previous decoder layer (binarized at 0.5) defines the relevant regions in the pixel decoder's feature map.

In Cross-Attention:

The mask restricts the query’s attention to regions it is responsible for, improving segmentation accuracy and efficiency.
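A sketch of masked cross-attention for a batch of queries over flattened pixel features. The fallback that lets a query with an empty mask attend everywhere is a guard added here so no row becomes all-masked; the 0.5 threshold follows the description above.

```python
import numpy as np

def masked_cross_attention(q, k, v, prev_mask_probs, threshold=0.5):
    """q: (Q, d) queries; k, v: (HW, d) flattened pixel features;
    prev_mask_probs: (Q, HW) mask probabilities from the previous decoder layer."""
    scores = q @ k.T / np.sqrt(q.shape[1])                 # (Q, HW)
    allowed = prev_mask_probs > threshold
    allowed[~allowed.any(axis=1)] = True                   # guard: empty mask -> attend everywhere
    scores = np.where(allowed, scores, -1e9)               # large negative -> ignored
    w = np.exp(scores - scores.max(axis=1, keepdims=True))
    w /= w.sum(axis=1, keepdims=True)                      # softmax over allowed pixels
    return w @ v, w

rng = np.random.default_rng(0)
q, k, v = rng.standard_normal((2, 4)), rng.standard_normal((6, 4)), rng.standard_normal((6, 4))
prev = np.array([[0.9, 0.8, 0.7, 0.1, 0.2, 0.0],           # query 0: foreground = pixels 0-2
                 [0.1, 0.1, 0.1, 0.1, 0.1, 0.1]])          # query 1: empty mask
out, weights = masked_cross_attention(q, k, v, prev)
print(weights.round(3))
```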

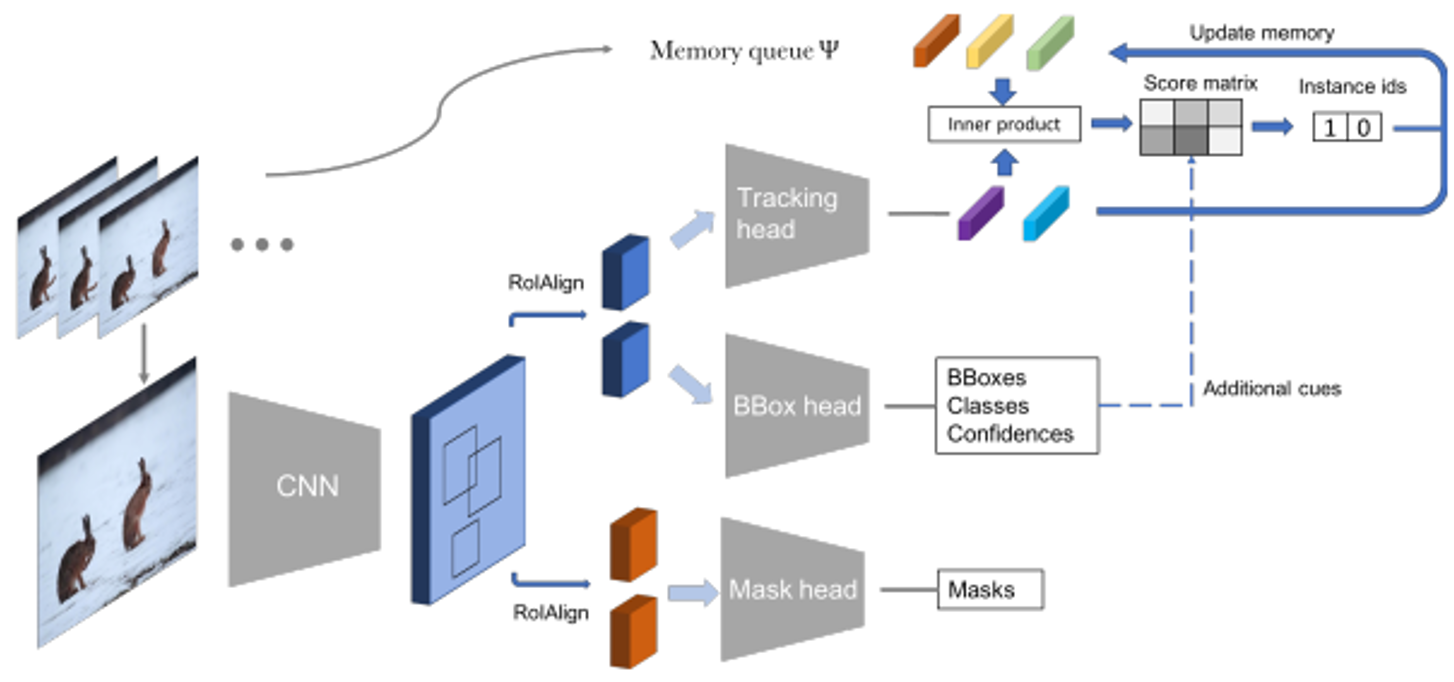

MaskTrackRCNN

A framework designed specifically for video instance segmentation (VIS). It extends Mask R-CNN by adding a mechanism to track instances across frames.

How MaskTrackRCNN Works

Backbone and Mask R-CNN Framework:

Processes each video frame using a standard Mask R-CNN pipeline:

Extracts feature maps.

Generates region proposals (RPN).

Predicts bounding boxes, classes, and instance masks.

Instance Association Across Frames:

Adds a tracking head to associate instances across frames:

Computes instance embeddings for detected objects.

Matches embeddings from one frame to the next using similarity metrics (e.g., cosine similarity).

Output:

For each video, it produces per-frame instance masks and a tracking ID for each object.
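A sketch of the association step using cosine similarity between instance embeddings. Greedy matching, the `min_sim` threshold, and starting a new track for every unmatched detection are simplifying assumptions, not the exact MaskTrack R-CNN procedure.

```python
import numpy as np

def associate(prev_embeds, prev_ids, curr_embeds, next_id, min_sim=0.5):
    """Assign track IDs to current detections; returns (ids, updated next_id)."""
    def normalize(e):
        e = np.asarray(e, dtype=float)
        return e / np.linalg.norm(e, axis=1, keepdims=True)

    ids = [None] * len(curr_embeds)
    if len(prev_embeds) and len(curr_embeds):
        sim = normalize(curr_embeds) @ normalize(prev_embeds).T     # (curr, prev) cosine sims
        used = set()
        for flat in np.argsort(-sim, axis=None):                    # most similar pairs first
            c, p = np.unravel_index(flat, sim.shape)
            if sim[c, p] < min_sim:
                break
            if ids[c] is None and p not in used:
                ids[c] = prev_ids[p]
                used.add(p)
    for c in range(len(ids)):
        if ids[c] is None:                                          # unmatched: new track
            ids[c] = next_id
            next_id += 1
    return ids, next_id

prev = [[1.0, 0.0], [0.0, 1.0]]
curr = [[0.0, 2.0], [1.0, 0.1], [-1.0, 0.0]]
print(associate(prev, [7, 9], curr, next_id=10))   # → ([9, 7, 10], 11)
```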

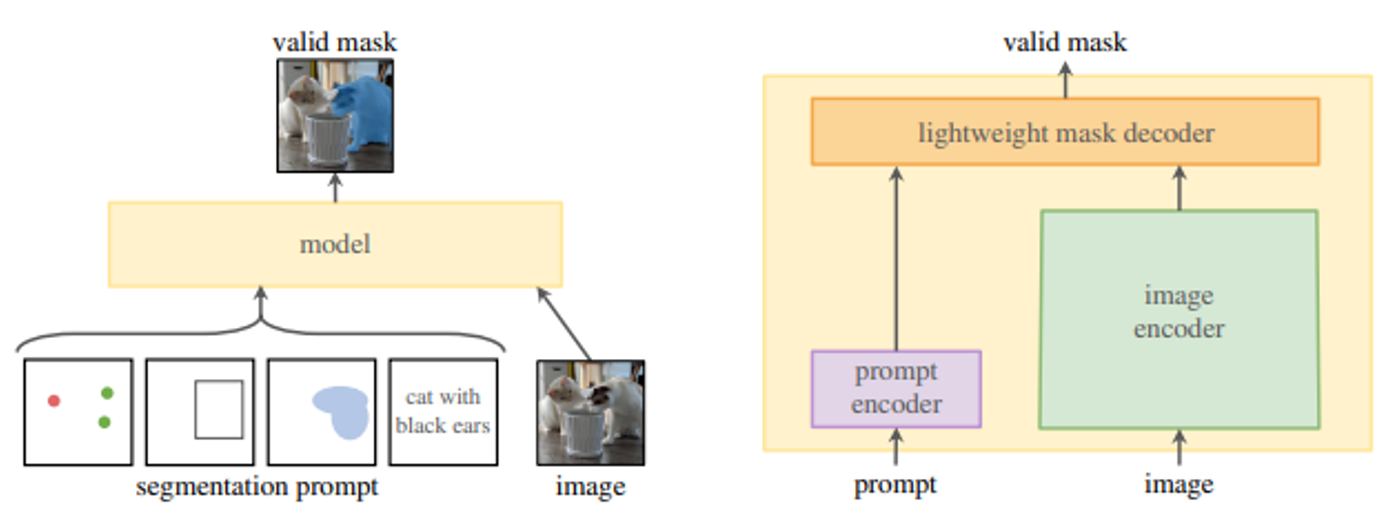

Segment Anything Model (SAM)

A universal segmentation model designed to segment any object in an image automatically or interactively through prompts, even without fine-tuning on specific datasets.

SAM Architecture

Image Encoder Backbone (Vision Transformer):

A modified ViT extracts image features.

The backbone processes the entire image in a single forward pass, producing an image embedding (64x64 for a 1024x1024 input) that can be reused for many prompts.

Prompt Encoder:

Encodes user-provided prompts such as:

Points: Positive or negative points to indicate object presence or absence.

Boxes: Bounding boxes around objects of interest.

Masks: Rough or partial masks for the object.

Text: free-form text (explored as a proof of concept in the original paper)

Outputs embeddings that guide the segmentation process.

Mask Decoder:

Combines features from the image encoder and prompt embeddings to predict segmentation masks.

Produces high-quality masks that align accurately with object boundaries.

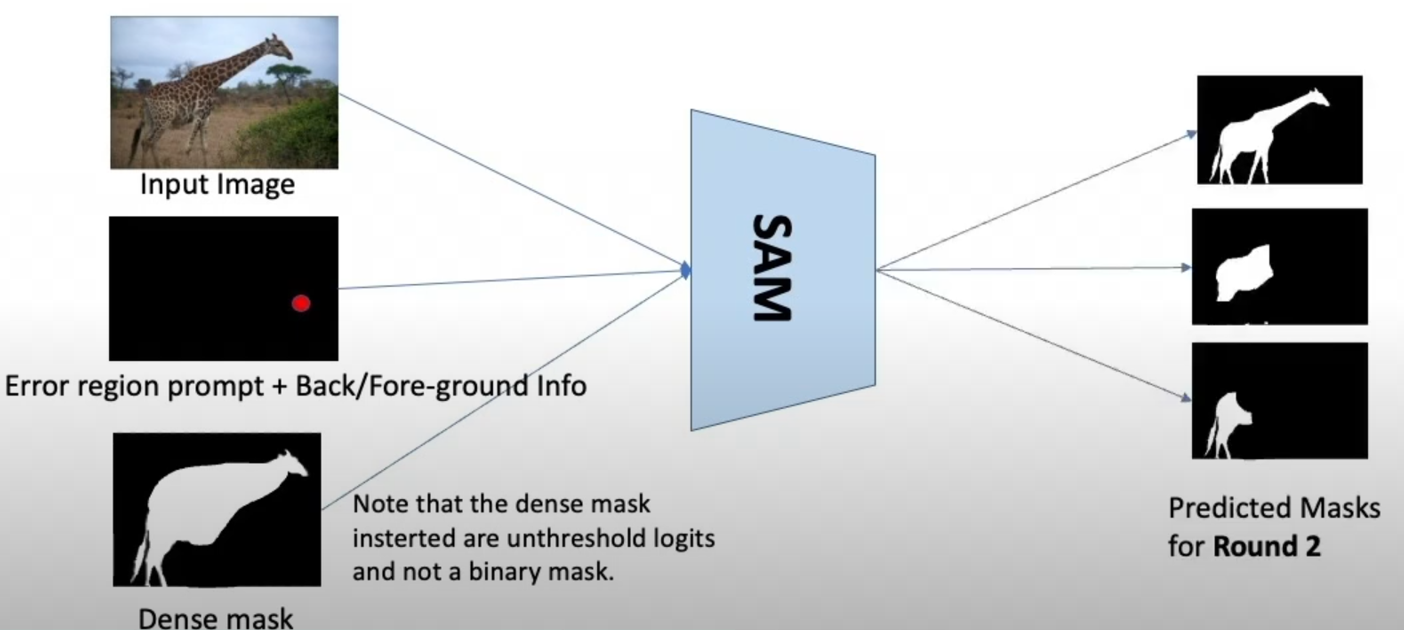

Iterative training of SAM

Training starts with simpler tasks or annotations, and these annotations are iteratively refined to improve mask quality and diversity.

The model’s predictions are progressively used to generate better training data for itself.

1. Base Model Training: Pretrain a simple segmentation model on existing datasets.

2. Mask Generation: Generate initial masks for images using the base model.

3. Error region: the error region is computed as the difference between the predicted mask and the human-annotated mask

4. Expanded input: in the next training round, the input also includes the model’s previous mask prediction and a prompt sampled from the error region
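A sketch of steps 3 and 4: compute the error region and sample a corrective point prompt from it. Sampling uniformly from the error region, with missed object pixels giving a positive point and wrongly included pixels a negative one, matches the description of SAM's training simulation, but the function and its signature are hypothetical.

```python
import numpy as np

def error_region_prompt(pred_mask, gt_mask, rng=None):
    """Return ((y, x), label) sampled from the error region, or None if there is no error.
    label 1 = positive point (missed object pixel), 0 = negative point (wrongly included)."""
    rng = np.random.default_rng(0) if rng is None else rng
    false_neg = gt_mask & ~pred_mask          # object pixels the prediction missed
    false_pos = pred_mask & ~gt_mask          # background pixels the prediction included
    error = false_neg | false_pos
    if not error.any():
        return None
    ys, xs = np.nonzero(error)
    i = rng.integers(len(ys))                 # uniform point inside the error region
    y, x = int(ys[i]), int(xs[i])
    return (y, x), int(false_neg[y, x])

gt = np.zeros((4, 4), dtype=bool); gt[:2, :] = True      # object covers the top two rows
pred = np.zeros((4, 4), dtype=bool); pred[0, :] = True   # prediction misses row 1
print(error_region_prompt(pred, gt))   # a positive point somewhere in row 1
```

In the next round, this point is fed to the prompt encoder together with the previous mask prediction.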