Cognitive Week 5


Auditory perception 2: Making sense of what we hear


What is sound localisation and the cues for localising sounds?

Visual space: Information is represented in spatial maps ("retinotopic maps"), so we know where things are.

Auditory space: Information is represented in tonotopic (frequency/pitch) maps rather than spatial maps, so location has to be inferred from cues (sounds are mostly processed in the contralateral auditory cortex).

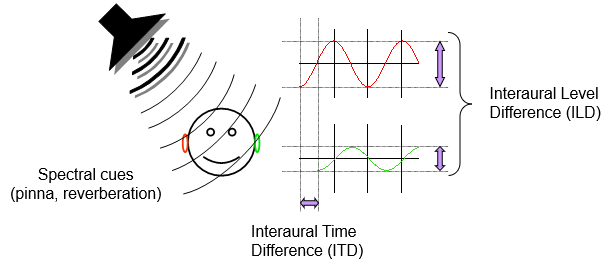

Interaural timing differences (ITD)

Sound takes ~600 microseconds to travel across the head from one ear to the other

ITDs are useful for azimuth (left/right), but not so useful for judging elevation and distance
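The ~600 microsecond figure can be checked with a rough calculation: the maximum ITD is approximately the extra path length between the ears divided by the speed of sound. The sketch below assumes a 0.20 m path between the ears and a speed of sound of 343 m/s; both values, and the simple sine model of azimuth, are illustrative assumptions rather than figures from these notes.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air (assumed, about 20 degrees C)
EAR_SEPARATION = 0.20   # m, assumed path length between the two ears

def itd_seconds(azimuth_deg, ear_separation=EAR_SEPARATION, c=SPEED_OF_SOUND):
    """Approximate ITD for a distant source: extra path length / speed of sound.

    azimuth_deg = 0 is straight ahead, 90 is directly to one side.
    """
    return ear_separation * math.sin(math.radians(azimuth_deg)) / c

for azimuth in (0, 30, 60, 90):
    print(f"azimuth {azimuth:>2} deg: ITD is about {itd_seconds(azimuth) * 1e6:.0f} microseconds")
```

Under these assumptions a source directly to one side gives an ITD just under 600 microseconds, and a source straight ahead gives an ITD of zero.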

Interaural level differences (ILD)

The head casts an ‘acoustic shadow’, reducing the intensity of sounds reaching the far ear.

The effect is bigger for high-frequency sounds

ILDs are useful for azimuth
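One way to see why the shadow is bigger for high frequencies is to compare each sound's wavelength with the size of the head: long wavelengths bend around the head, short ones are blocked. The sketch below is a crude rule of thumb, assuming a head diameter of 0.18 m and a speed of sound of 343 m/s (both assumptions); the real transition is gradual, not a sharp cutoff.

```python
SPEED_OF_SOUND = 343.0  # m/s (assumed)
HEAD_DIAMETER = 0.18    # m (assumed, illustrative)

def wavelength_m(frequency_hz):
    """Wavelength of a sound in air: speed of sound / frequency."""
    return SPEED_OF_SOUND / frequency_hz

def head_casts_shadow(frequency_hz):
    """Rule of thumb: a clear shadow forms when the wavelength is shorter than the head."""
    return wavelength_m(frequency_hz) < HEAD_DIAMETER

for f in (200, 500, 2000, 6000):
    print(f"{f} Hz: wavelength {wavelength_m(f):.3f} m, shadow: {head_casts_shadow(f)}")
```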

Cone of confusion: Any two points on the surface of the cone produce the same ITD and ILD, so listeners cannot reliably judge the elevation of a sound source from these two cues alone.
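The geometry behind the cone of confusion can be sketched by treating the ears as two points and ignoring the head itself (a deliberate simplification). Rotating a source around the axis that runs through both ears leaves its distance to each ear unchanged, so the ITD stays the same whether the source is in front, above, or behind. The ear spacing, coordinate layout, and function names below are assumptions made for illustration.

```python
import math

SPEED_OF_SOUND = 343.0   # m/s (assumed)
EAR_HALF_SPACING = 0.09  # m, assumed distance from head centre to each ear
LEFT_EAR = (-EAR_HALF_SPACING, 0.0, 0.0)
RIGHT_EAR = (EAR_HALF_SPACING, 0.0, 0.0)

def itd_seconds(source):
    """ITD from straight-line path lengths to two point 'ears' (no head modelled)."""
    return (math.dist(source, LEFT_EAR) - math.dist(source, RIGHT_EAR)) / SPEED_OF_SOUND

def point_on_cone(distance, cone_angle_deg, rotation_deg):
    """Point at `distance` from the head centre, `cone_angle_deg` away from the
    interaural (x) axis, rotated `rotation_deg` about that axis.
    Rotation 0 = in front (y axis), 90 = above (z axis), 180 = behind.
    """
    angle = math.radians(cone_angle_deg)
    rotation = math.radians(rotation_deg)
    radius = distance * math.sin(angle)
    return (distance * math.cos(angle),
            radius * math.cos(rotation),
            radius * math.sin(rotation))

front = point_on_cone(2.0, 60, 0)
above = point_on_cone(2.0, 60, 90)
behind = point_on_cone(2.0, 60, 180)

for name, source in (("front", front), ("above", above), ("behind", behind)):
    print(f"{name:>6}: ITD is about {itd_seconds(source) * 1e6:.1f} microseconds")
```

All three positions print the same ITD. Because the distances to each ear are also identical, a distance-based level difference would match too, which is why a further cue is needed to resolve elevation.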

Spectral cues

Spectral cues are introduced by the head and external ears (pinnae)

They change the intensity (volume) of different frequencies

The resulting change in the frequency spectrum gives cues to location (including elevation)

What is ‘Changing ears’?

Normal sound localisation (a) was initially disrupted when listeners were given new ears (b)

Over time, listeners learned to localise sounds with their new ears (c, d)

But they still remembered how to use their old ears (e)

What is perceptual organisation in auditory scene analysis?

All sounds enter the ear together, and the auditory system has to make sense of the mix of frequencies coded at the cochlea. → This process of making sense of sounds is called auditory scene analysis.

This is possible because the auditory system segregates the complex sound field into separate auditory objects.

What is the ‘cocktail party effect’ and the 2 processes of auditory scene analysis used?

The cocktail party effect: following one voice despite distracting sounds.

Two processes of auditory scene analysis:

Grouping and segregation (from other sounds)

Simultaneous sounds are mixed before entering the ear. → The basilar membrane filters the mixture according to frequency.

The auditory system has to assign the right frequency components to the right source. → This is extremely difficult if two sounds are played at exactly the same time and with the same fundamental frequency.

Two main cues to help:

Different sounds tend to start and stop at different times

Different sounds tend to have different pitches
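The pitch cue can be illustrated with a toy grouping rule: assign each frequency component to a source if it lies close to a whole-number multiple of that source's fundamental frequency. This is only a sketch; the candidate fundamentals (200 Hz and 310 Hz), the 2% tolerance, and the function names are assumptions chosen for illustration, and the auditory system is not claimed to work this way.

```python
def is_harmonic_of(frequency, f0, tolerance=0.02):
    """True if `frequency` is within `tolerance * f0` of a whole-number multiple of f0."""
    n = round(frequency / f0)
    return n >= 1 and abs(frequency - n * f0) <= tolerance * f0

def group_by_f0(components, candidate_f0s):
    """Assign each component to every candidate fundamental it is a harmonic of."""
    groups = {f0: [] for f0 in candidate_f0s}
    for frequency in components:
        for f0 in candidate_f0s:
            if is_harmonic_of(frequency, f0):
                groups[f0].append(frequency)
    return groups

# Two sources mixed together: harmonics 1-4 of 200 Hz and of 310 Hz.
mixture = sorted([200 * k for k in range(1, 5)] + [310 * k for k in range(1, 5)])
print(group_by_f0(mixture, [200, 310]))

# If both sources share the same fundamental, every component lands in one group,
# so this cue can no longer separate them.
same_pitch = sorted([200 * k for k in range(1, 5)] * 2)
print(group_by_f0(same_pitch, [200]))
```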

Streaming (over time)

Examples: listening to the same person speaking, following a melody, linking separate footsteps.

A sequence of tones can be perceived as one stream or as two simultaneous streams.

Rhythm depends on whether the notes are grouped together or segregated.
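The link between grouping and rhythm can be sketched with a repeating ABA_ tone sequence (a low tone, a high tone, the low tone again, then a silent gap), which is a common streaming demonstration. The tone frequencies, the 0.125 s slot length, and the function names below are assumptions for illustration. Heard as one stream, the onset gaps alternate short-short-long, giving a galloping rhythm; heard as two streams, each stream is perfectly regular.

```python
TONE_SLOT = 0.125  # s per tone slot (assumed)

def aba_sequence(n_triplets, freq_a=500.0, freq_b=1000.0, slot=TONE_SLOT):
    """Return (onset_time, label, frequency) events for repeating ABA_ triplets.

    Each triplet fills four slots: A, B, A, then one silent slot.
    """
    events = []
    for i in range(n_triplets):
        start = i * 4 * slot
        events.append((start, "A", freq_a))
        events.append((start + slot, "B", freq_b))
        events.append((start + 2 * slot, "A", freq_a))
    return events

def onset_gaps(events):
    """Time between successive tone onsets, which defines the perceived rhythm."""
    times = [time for time, _, _ in events]
    return [round(later - earlier, 3) for earlier, later in zip(times, times[1:])]

events = aba_sequence(3)
one_stream = onset_gaps(events)
a_stream = onset_gaps([e for e in events if e[1] == "A"])
b_stream = onset_gaps([e for e in events if e[1] == "B"])

print("one stream:", one_stream)
print("A stream:  ", a_stream)
print("B stream:  ", b_stream)
```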

How were streaming and delayed attention studied?

Carlyon et al. (2001) tried a dual-task approach

Presented participants with the horse/morse stimuli

For the first 10 seconds participants also heard noise bursts

Asked to pay attention to the noise bursts or ignore them

Directing attention to the noise bursts delayed streaming. → If attention were NOT needed for streaming, then at the 10 s mark the Circle should be near the Triangle and the Square.