Comp 250 Midterm Review

1/167

There's no tags or description

Looks like no tags are added yet.

Name | Mastery | Learn | Test | Matching | Spaced | Call with Kai |

|---|

No analytics yet

Send a link to your students to track their progress

168 Terms

Algorithm

A systemic and unambiguous procedure that produces (in a finite number of steps) the answer to a problem

-Understandable to both humans and computers

-Must have a clear input that satisfies specific conditions

What do algorithms produce?

Output- solution to the problem

-Also have clear conditions

What do good algorithms have?

1. Correctness- returns the right solution

2. Speed- time it takes to solve a problem- cannot be too long

3. Space- amount of memory used- cannot take up too much memory

4. Simplicity- easy to understand and analyze, easy to implement, easy to debug, modify, implement

Running time

Speed of an algorithm/program

What does running time depend on?

1. Size of input- longer when input is larger

2. Content of input- instance of the problem

Running time n

n = set of numbers at the number n- n numbers

Cases of running time

1. Best case- easiest input (like an in-order list) so the time is shortest- meaningless since this rarely happens

2. Worst case- hardest input (out-of-order list) so the time is longest- means the most because this is what the Big O is judged on and what you calculate to make the overall algorithm shorter

3. Average case- time of average case, hard to find

Languages for describing algorithms

1. English prose- paragraph- human readable but too vague/verbose

2. Binary language- precise but not human readable

3. Programming language- precise, human readable, needs knowledge of language and implementation

Pseudo-code

Universal language to describe algorithms to humans- not any specific language like programming language

Assignments

x

Conditions

If (x = 0) then...

Loops

For i

Objects/function calls

triangle.getArea()

Mathematical notiation

x

Blocks

Indentation

Sorting

Getting numbers in a random order and sorting them in increasing or decreasing order

Runtime of bubble sort, selection sort, insertion sort

O(n^2)

Runtime of merge sort, heap sort, quick sort

O(nlogn)

Bubble sort

Loop/iterate through the list many times; for each iteration through the list, if 2 neighboring elements are in the wrong order, swap them

Swapping variables

Use a temporary variable:

tmp = y;

y = x;

x = tmp;

Pseudocode for bubble sort

for ct = 0 to n - 1{

for i = 0 to n - 2 - ct{

if list[i] > list[i + 1]

swap(list[i], list[i + 1])

}

}

ct

Counter- makes bubble sort go through the list of numbers n-1 times and sort it each time

n

Number of elements in the list

n - 1

Number of elements in array because array starts at 0

n - 2 - ct

Removing the numbers you just did when you go through the list- since the last element will be in place at the end of the list after each time you do it, so you can ignore doing the last space

-n - 2 because you don't have to do the last place in the first place, as well as that the array starts at 0

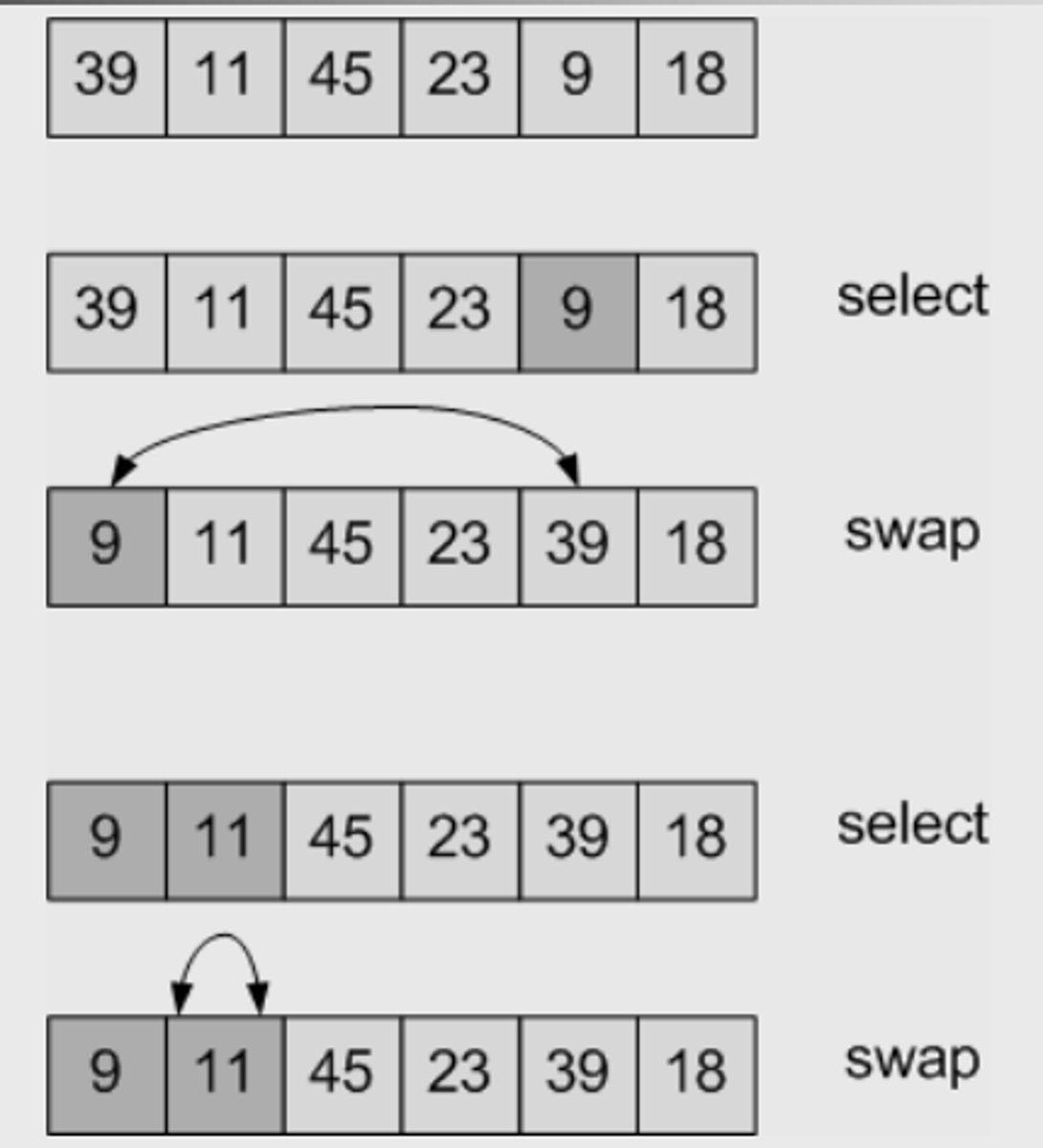

Selection sort

List is divided into parts- the sorted list and the rest of the elements

The sorted list is empty and all the elements are in the second list

Repeated n times: find the smallest number in the list and add it to the empty sorted list

Add numbers to the sorted list, which is just the beginning of the old list, by swapping the smallest elements with the elements whose places you're replacing

Selection sort image

Selection sort pseudo code

for i to n - 2{

tmpIndex = i //first element of the list

tmpMinValue = list[i] //makes the i (first) element of the list the minimum value

for k = i + 1 to n - 1{

if (list[k] < tmpMinValue){ //if each element after i is smaller than the tmpMin make it the new tmpMin

tmpIndex = k

tmpMinValue = list[k]

}

}

if (tmpIndex != i){ //if tmpIndex is smaller, swap

swap(list[i], list[tmpIndex])

}

}

for i to n-2

repeat n times

Selection sort passes

for i to n - 2

for k = i + 1 to n - 1

2 for loops

How many passes are there for the inner for loop?

n(n - 1)/2

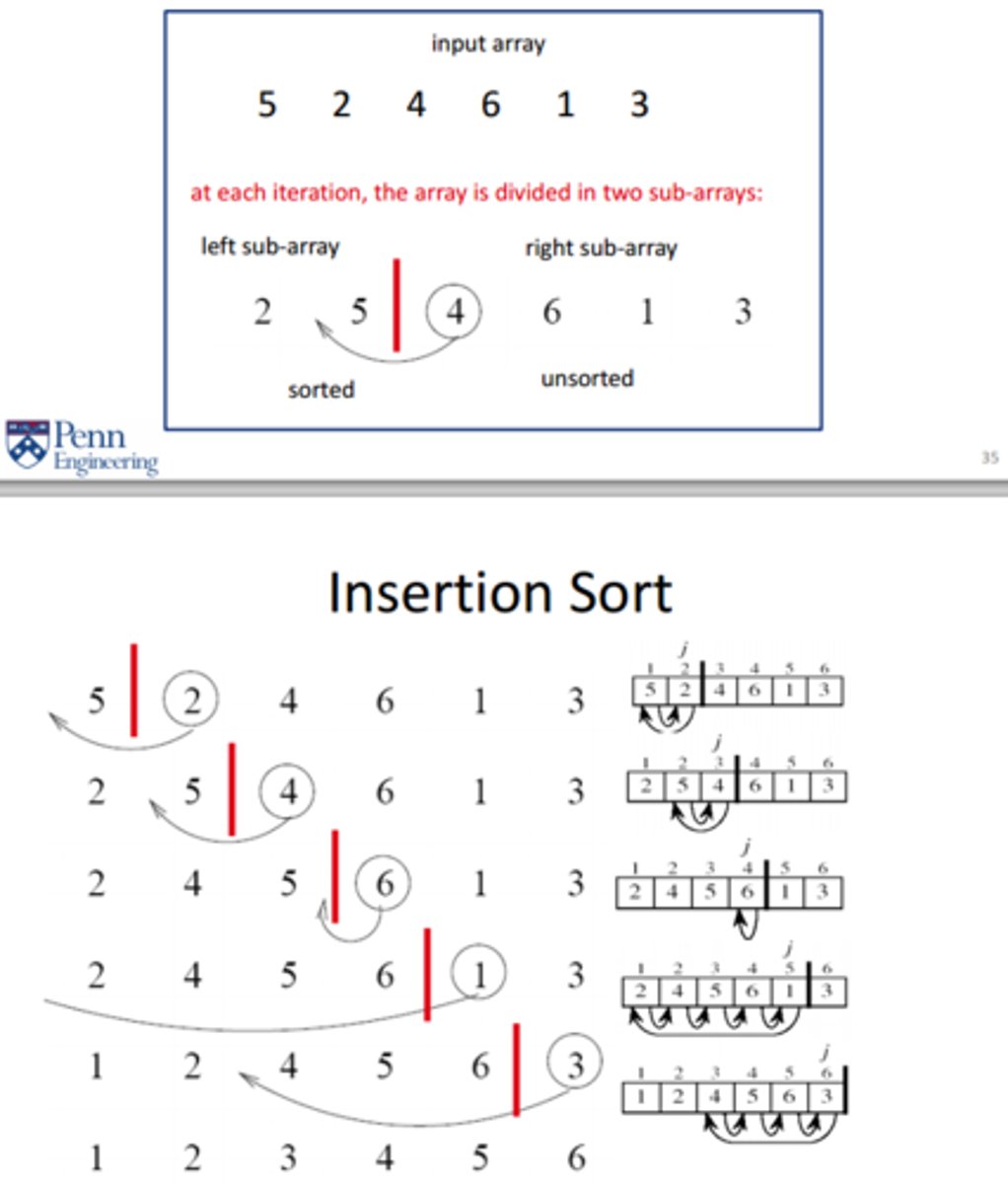

Insertion sort

For k = 1 to n - 1; insert list element at index k into its correct position with respect to the elements at indices 0 to k - 1

Essentially, there is a sublist in the beginning that is sorted. As we go through every element, the current element we have is checked against every element in the sorted sublist and shift the greater numbers to the right. When it is compared to a smaller number, then the current element is inserted in the blank space

Insertion sort image

Insertion sort explaination

Essentially, for every pass through, it sorts the n number of elements. ex. if its the third (n = 3), then the first 3 elements of the list will be sorted with each other and the rest of the list will be untouched

Insertion sort pseudo code

for k = 1 to n - 1{

elementk = list[k]

i = k

while (i > 0) and list([i - 1] > elementK){

list[i] = list[i - 1] //copy to next

i = i - 1 //move over

}

list[i] = elementK //past in space

}

Best case of insertion sort

O(n) if list is sorted- terminates immediately after 1 pass

Worst case for insertion sort

O(n^2) if list is sorted backwards

1 + 2 + 3 + ... + n - 1 = n(n - 1)/2

Comparison of runtime of three methods

Depends on data and implementation (how swap is done, what type of lists are used, etc)

Iterative algorithm

Algorithm where a problem is solved by iterating (going step-by-step) through a set of commands, often using loops

Power algorithm

product = 1

for i = 1 to n do

product = product * a

Multiplies a to itself for n times to create a^n

Recursive algorithm

Algorithm is recursive if in the process it calls itself one or more times

Power algorithm using recursion

power(a, n)

if n = 0 return 1 //base case

else{

previous = power(a, n-1) //keeps calling itself until n = 1

return previous * a //saves previous every time power is called and adds it to itself

}

Example: 7^4

power(7,4)

power(7,3)

power(7,2)

power(7,1)

n = 1

previous1 = 1*7 = 7

previous2 = 7*7 = 49

previous3 = 49*7 = 343

previous4 = 343*7 = 2401

Base case

Case that is easiest to solve, the "base" of a problem

-Write recursive algorithms to get to the base case and then work backwards

Recursive binary search

Returns 'key' if it is in the list using an array, a start/stop for a region to search, and the key to be found

Narrow the start/stop until you find the key

-Base case- once start/stop, start = key or if key is not there, return -1

-Recursion: create a mid in the start and stop- if the key = mid, return that

If the mid > key, key = binarySearch() using the mid as the stop

If the mid < key, key = binarySearch() using the mid as the start

Fibonacci sequence

Sequence of numbers where each number is the sum of the previous 2 that precede it

0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55, 89

Fibonacci algorithm

fib(n)

n is the input of a normal number, fib(n) is the Fibonacci number at the nth position

Base cases- if n = 0 or 1, return 0 or 1

previous = 0 //store previous number

current = 1 //store current number

fori = 2 to n do{

tmpCurrent = current

current = current + previous

previous = tmpCurrent

}

return current

Recursive algorithm framework

1. Divide problem into subproblems

2. Conquer the subproofs by solving them recursively

3. Combine the solution of each subproblem into the solution of the original problem

Merge sort

Sort elements of an array of n numbers

1. Divide array into left and half halves

2. Conquer each side by recursively sorting them

3. Combine sorted left and right halves into a full sorted array

Merge sort solution

Create a new array the same size as the original

Compare 1st two numbers of each list

Put larger number in array, leaving the other one

Keep doing it until the array is empty/ filled

Copy array back into the original array

Merge sort explaination

Essentially, merge sort keeps splitting the lists until what remains is a list the size of 1. Then it adds all those lists back together while ordering them

Merge sort pseudo code

if(left < right){

mid = left + right / 2

mergeSort(A, left, mid) // sorts left side

mergeSort(A, mid, right) // sorts right side

merge(A, left, mid, right)

}

Induction proofs

Prove that for all n ≥ n0, P(n)

n = just variable n, standing for number of times that the code runs through

n0 = any arbitrary number

P(n) = proposition

How to prove P(n)?

Use induction starting at a base case P(n0) aka prove n0 for P(n), then prove P(n0 + 1) as once you prove n + 1, it is logical that the rest should follow

Induction proof steps

1. Find the base case by making the equation = to given number

2. Get equations k and k + 1

3. Solve k + 1 by referring back to k equation

4. Make it equal to the equation given

Loop invariants

Prove that an algorithm will always work

What is an algorithm described by?

1. Input data

2. Output data

Preconditions

Specifies what restrictions to put on input data- parameters of what we put in

Postconditions

Specifies what is the result- parameters of what is put out

When is an algorithm correct?

1. Correct input data stops and produces correct output

2. Correct input and output data satisfies precondition and postcondition

Loop invariant

Loop property that holds before and after each iteration of a loop

-properties of loop hold before and after each iteration

-Variable must be true before and after loop

Proof using loop invariants: show

1. Initialization: it is true before initialization of loop

2. Maintenance: if it was true before, it must be true for the next iteration

3. Termination: if it stops and shows if algorithm is correct

Base case of loop invariant

Invariant holds before first loop

Inductive step of loop invariant

Invariant holds after first loop

Termination of a loop invariant

Stop induction when loop terminates instead aof going to infinity

Runtime

Total amount of time an algorithm takes to run- the sum of operation times as different operations have different times

Primitive operation times

Time is always the same, independent of the size of bigger problems solved

-Run in constant time

-Summation of all total time

Primitive operations

1. Tassign- assign a value to a variable; x

For/while loops

Multiply by number of iterations- n

Best case

Input that makes it go as fast as possible

Worst case

Input that makes it go as slow as possible

Primitive run time assumptions

Assume that each operation is compatible- all primitive operations is 1 unit

n(n + 1) / 2

i = 0 ∑ n - 1 > i

Selection sort worst case

1 + 15n + 5n^2

Big O notation

Describes how running time of algorithm increases as a function n (n size of problem) when n is large

What does Big O notation do?

1. Simplifies running times

2. Gets rid of terms that become insignificant when n is large

f(n)

Complicated equation

g(n)

Simpler equation you want to reduce f(n) to

When is f(n) Big O of g(n)

When f(n) < c*g(n) when c is a constant for all n0 → n

-Prove that f(n) will be smaller

How to prove that f(n) is not Big O of g(n)

f(n) > c*g(n) for n0 and c

Hierarchy of Big O classes

Smallest to largest

1. O(1)

2. O(logn)

3. O(√n)

4. O(n) → constant

5. O(n^k) if k > 1

6. O(a^n) exponential

O(1) ⊂ O(log n) ⊂ O(√n) ⊂ O(n) ⊂ O(n^k) ⊂ O(2^n)

O(1)

sin(n) + 10, 100 → O(1)

Primitive operations

O(n)

Linear functions

Shortcuts for Big O

1. Sum rule

2. Constant factors rule

3. Product rule

4. Exponential

5. Log I

6. Log II

Sum rule

If f(n) is O(g(n)) and h(n) is O(g(n)) then f(n) + h(n) is O(g(n)) as

ex. f(log(n)) → O(n) and h(n) → O(n) then f(log(n)) + h(n) → O(n)

When each term is Big O then the sum is Big O

Constant factors rule

If f(n) is Big O of g(n) then kf(n) is big O of g(n) for any factor k

Any polynomial less or equal to biggest polynomial n^k is O(n^k)

ex. 10n^3 is Big O of (n^3)

n^3 + 10n^2 + log(n) is O(n^3)

Product rule

If f(n) is O(g(n)) and h(n) is O(e(n)) then f(n)*g(n) is O(f(n)*e(n)) because...

Exponential

n^x is O(a^n) for any x > 0 and a > 1

BUT a^n is not O(n^x)

ex. n^10000 is O(1.0001^n) yet the c and n0 will be large and 1.0001^n is not O(n^100000)

Log I

Since log(n^x) = xlog(n) then log(n^x) is O(log(n)) because you isolate the log(n) since constants don't matter

Log II

Loga(n) is O(logb(n))

Limit shortcuts

1. If lim n→∞ f(n) / g(n) = 0 then f(n) is O(g(n)) but g(n) is not O(f(n))

2. If lim n→∞ f(n) / g(n) = something that is not 0 then f(n) is O(g(n)) and g(n) is O(f(n))

3. If lim n→∞ f(n) / g(n) = +∞ then g(n) is O(f(n)) and but f(n) is not O(g(n))

4. If the limit doesn't exist, then we can't say anything

l'Hopital rule

limn→+∞ f(n)/g(n) = limn→+∞

df(n)/dn

dg(n)/dn

Find runtime of recursive code

1. Establish a base case where you prove that t(1) is true

2. Use substitution approach starting with t(n), finding t(n - 1), t(n - 2), etc

3. Find the pattern between the t(n)s and establish a case with constant k

4. Plug in base case 1 using n - k = 1 therefore k = n - 1 with k as 1

5. Solve after plugging in t(1) for k

Quick sort

Divide: Choses a pivot- an element of the array- and the pivot divides into 2 groups: the smaller than pivot and smaller or equal of pivot

Conquer: recursively sorts each group

Combines: puts together both groups

Quick sort pseudo code

if (start < stop) then

pivot ← partition(A, start, stop)

quickSort(A, start, pivot-1)

quickSort(A, pivot+1, stop)

Runtime of quick sort

Faster than other algorithms- O(n^2) worst case, O(nlogn) average case when for other sorts the O(nlogn) is worst

Worst case of quick sort

When the pivot is the largest number- the pivot stays where it is because everything is to the left and choses the one right next to it on the left

What is the advantage it has over mergeSort

Constant in O(nlogn) is smaller than for constant of mergeSort O(nlogn) making it faster

In-place algorithm

Algorithm that uses a constant amount of memory in addition to what is used to store the input

Importance: if the algorithm itself uses a lot of memory, it doesn't want to grow that memory when it uses more input

In-place algorithms

Selection Sort, Intersection Sort- both just moving elements around