DP-203 Questions

1/57

Earn XP

Description and Tags

Name | Mastery | Learn | Test | Matching | Spaced |

|---|

No study sessions yet.

58 Terms

Clustered ColumnStore Index

The physical storage for the entire table and the standard for storing and querying large data warehousing fact tables. 10x better performance than row oriented storage. 10times the data compression over the uncompressed data size

Distribution: Hash([ProductKey])

A distribution type, distributes rows based on the distribution value. Designed to achieve high performance for queries on large tables. Choose a column with data that distributes evenly.

Distribution: REPLICATE

A replicated table has a full copy of the table available on ever compute node. Queries run fast on replicated tables since joins on replicated tables don’t require data movement. Replication requires extra storage and isn’t practical for large tables. Best when the table size is less that 2GB compressed.

Distribution: Round Robin

Distributes table rows evenly across all distributions. Rows are distributed randomly. Loading data into a round-robin table is fast. Keep in mind that queries can require more data movement than other distribution methods

TLDR: Maximizes loading speeds

You have an Azure Synapse Analytics dedicated SQL pool that contains a table named Table1. Table1 contains the following:

✑ One billion rows

✑ A clustered columnstore index

✑ A hash-distributed column named Product Key

✑ A column named Sales Date that is of the date data type and cannot be null

Thirty million rows will be added to Table1 each month.

You need to partition Table1 based on the Sales Date column. The solution must optimize query performance and data loading.

How often should you create a partition?

A. once per month

B. once per year

C. once per day

D. once per week

B: When creating a partition in a clustered columnstore index, Consider how many rows per partitions. For optimate compression and performance of clustered columnstore tables, a min of 1mil rows per partition are needed. Dedicated SQL Pool divides each table into 60 distribution by default. So you need at least 2 months to get at least 1mil rows per distribution in a new partition. So nothing besides B is correct.

Additionally, a partition range holds those 60 partitions with 60million rows. So if a question asks how many partition ranges the table would need you would divide the amount of records by 60million rows.

Slowly Changing Data (SCD) Type 2

Records all the changes made to each key in the table. These operations require updating the existing rows to mark the previous values of the keys as old and then inserting new rows as the latest values. it’s primarily used when you need to keep track of historical versions or changes to dimension data. SCD Type 2 is one of several techniques for handling slowly changing dimension and is specifically designed to track historical changes by creating new records for each version of the dimension as it evolves.

TLDR: Shows all row changes, make sure it has an effective end date, date modified, and some sort of PK for the row

Apache Parquet

open-sourcefile format for Hadoop, stores nested data structure in a flat columnar format. Compared to a traditional approach where data is stored in a row-oriented approach, is more efficient in terms of storage and performance. Especially good for queries that read particular columns from a wide table since only needed columns are read and IO is minimized.

-Doesn’t support timestamps

-Column oriented data stores are optimized for read heavy analytical workloads

-Supports Schema Property

Apache Avro

Ideal candidate for storing data in a data lake landing zone because:

-data is usually read as a whole for further processing by downstream systems (this is where row format is more efficient)

-Downstream systems can easily retrieve table schemas (no need to store separately)

-Source schema changes are easily handled (schema evolution)

-JSON Formatted

-Supports Timestamps

-Row oriented data stores are optimized for write heavy transactional workloads

Polybase Load

The basic steps for implementing a ___ ELT for dedicated SQL pool are:

Extract the source data into text files.

Land the data into Azure Blob storage or Azure Data Lake Store.

Prepare the data for loading.

Load the data into dedicated SQL pool staging tables using PolyBase.

Transform the data.

Insert the data into production tables.

Identity Column

Used to create surrogate key (unique identifier) within each row within a table.

ALTER INDEX ALL on table1 REBUILD

Alter the indexes (all indexes) on table1 and rebuild them all. Rebuilding ensures that the column-store compression in maximized for the data within the table.

How many distributions are in a Azure Synapse dedicated SQL Pool Table

60

DBCC INDEXDEFRAG (pool1, table1)

Improves index scanning performance by defragmenting the leaf level of an index so that the order of the pages matches the left-to-right logical order of leaf nodes

DBCC DBREINDEX (table1)

Rebuilds index for a table or all indexes for a table by allowing an index to be rebuilt dynamically, enforcing either PK or UNIQUE constraints can be rebuilt without having to make separate drop/re-create statements

ALTER INDEX ALL on table1 REORGANIZE

Improves index performance without completely rebuilding the index. Typically used for online index maintenance and optimization, faster and requires fewer resources than a typical rebuild.

File Paths and Partitioning by time periods

It’s important to put time period partitions at the end of file paths. If you put them first you would have to allow permissions to users based on every hour/week/month of the year. Permissioning does not make sense in this situation

Preserve Hierarchy (ADF Copy Activity)

Compared to the flat namespace on Blob storage, the hierarchical namespace (ADLS Gen2) greatly improves the performance of directory management operations, which improves overall job performance.

Managed Identity vs Service Principal

The managed identity grants permissions to the dedicated SQL pools in the workspace.

Managed Identity is when you want azure to manage the login details automatically. (MSRP issues a certificate internally to that identity)

A Service Principal is when you want to allow access to an app for specific access or control

Masking Function: Default()

Full masking according to the data types of the designated fields

✑ Use a zero value for numeric data types (bigint, bit, decimal, int, money, numeric, smallint, smallmoney, tinyint, float, real).

TLDR: Query w/out access you’ll get ‘0’

External Tables:

Only these Data Definition Language (DDL) statements are allowed on external tables:

✑ CREATE TABLE and DROP TABLE

✑ CREATE STATISTICS and DROP STATISTICS

✑ CREATE VIEW and DROP VIEW

Geo-Redundant Storage

Store copy of data in diff geo location: x

Doesn’t automatically initiate a failover from the primary to secondary location unless read-access is enabled

Zone-Redundant Storage

Store copy of data in each of the 3 azure zones:

Doesn’t automatically initiate a failover from the primary to secondary location unless read-access is enabled

Locally_Redundant Storage

Store 3 copies of data within same location

geo-zone-redundant storage

mix of geo and zone: two geo locations each with 3 zones replicated. very safe

Data Partitioning Strategies

Hash partitioning optimizes querying only (large fact tables).

Round robin is optimized for loading data (evenly disbursed).

Replicated tables provides optimized query performance for small tables (small dimensions).

Polybase Sharding

A. Scale the target database out. (Increases DWU, providing more compute resources, used for optimizing query performance)

B. Scale the target database up. (Increasing DWU performance level, used for optimizing query performance)

C. Shard the source data across multiple files. (maximizes network throughput)

D. Shard the source data across multiple storage accounts. (Maximizes parallelism and load balances across multiple storage accounts, used for very large datasets)

Incremental Load

Process of only loading new or updated data from a source. You need a column of type date or int to act as the filter column during an incremental load

Azure Synapese Analytics tables

An Azure Synapse Analytics SQL pool uses the sys.dm_pdw_exec_requests, sys.dm_pdw_request_steps, sys.dm_pdw_sql_requests

views to monitor SQL pool activity.

The sys.dm_exec_cached_plans view stores execution plans that do not show current activity.

The sys.dm_pdw_errors view stores errors, not current activity.

Azure Stream Analytics SUs to Nodes

There are 6 SUs per 1 Stream Analytics Nodes. has 3 nodes per job. 18 SUs to have min parallelism.

Databricks Watermark

Watermarks interact with output modes to control when data is written to the sink. Because watermarks reduce the total amount of state information to be processed, effective use of watermarks is essential for efficient stateful streaming throughput

Event Hubs Partitions

Event Hubs separates events into 4 partitions by default. Each published event will go into only 1 partition

External Table Columns

You can’t proactively add columns to an external table once it is created. You have to drop the table and recreate it to add additional columns.

Sensitivity Classification

Sensitivity tags that can allow different access to data based on the user. Are persistent no matter where the data is stored. Labels aren’t shown in apps for users outside your organization or to guests.

Personally Identifiable Information (PII) vs GDPR

PII is just name, address, birthdate, SS, banking info. While GDPR extends to photos of you, location, etc.

Slowly Changing Dimensions (All Types)

Type 0: Fixed, never changes

Type 1: Updated, no historical data

Type 2: Row Versioning, insert new data with date timestamps to be able to see versions and updates over time

Type 3: Previous Value Column, have a column that shows the old value of a column before it was updated. Constantly updates with updates

Type 4: History Table, shows current value in dimension table but tracks all changes in a separate table.

Type 6: Hybrid of SCD types 1, 2, and 3

Heap Indexing

Heap means no indexing. It is best used for staging tables as it is fast at loading due to its non-ordered nature. A clustered index would be slower as it organizes the data in order. Without order, heap data can insert rows in parallel.

Native and Hadoop External Table Return Policies

Location = ‘folder_or_filepath’ specifies the folder or file path with data. Files with names starting with ‘_’ or ‘.’ will be ignored

Native External Tables don’t return subfolders unless you specify the ‘/**’ characters at the end of the filepath

Hadoop External Tables return all subfolders by default

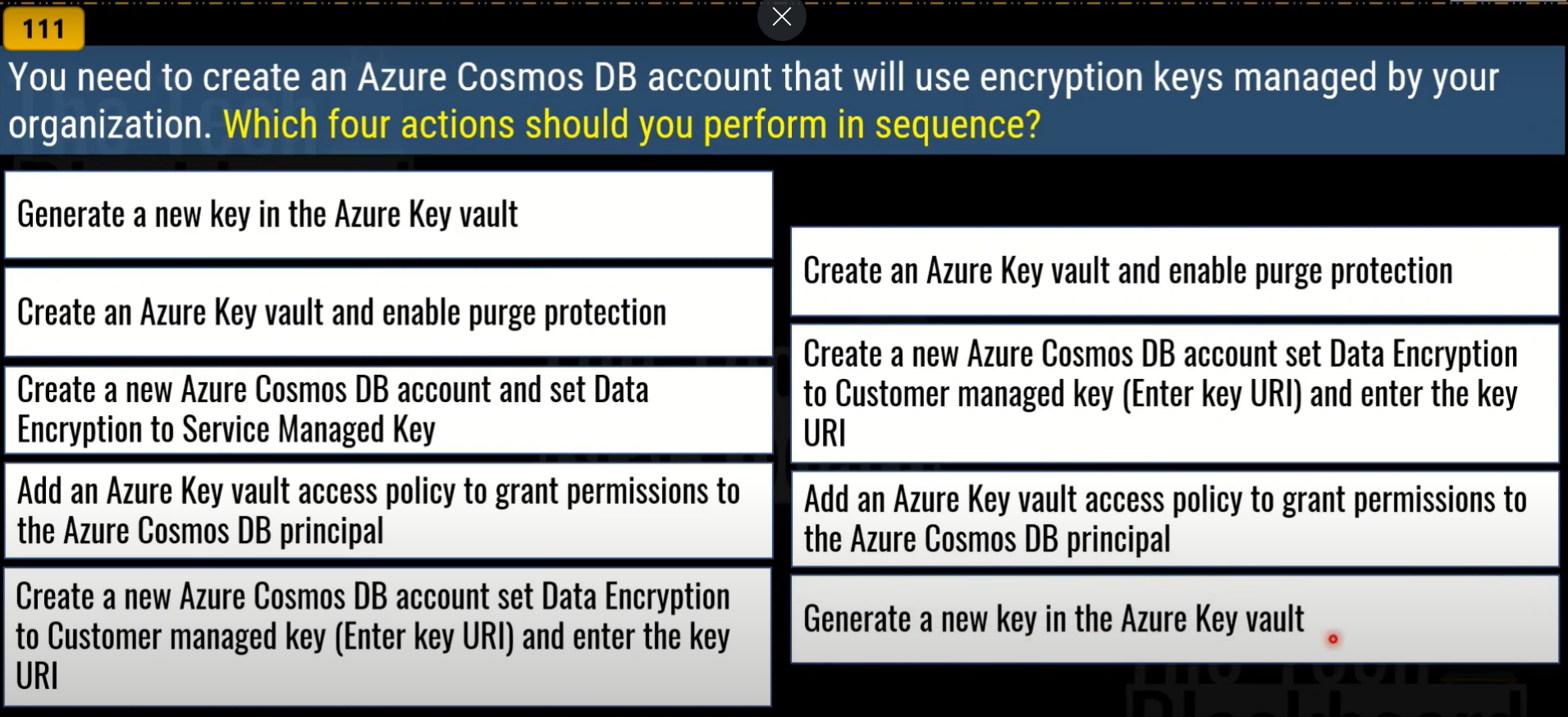

Create new CosmosDB with Key Vault

Create a Key Vault First

Create a new Azure Cosmos DB and set Data Encryption to Customer managed key (Enter Key URI) and enter the URI (Key vault is the customer managed key0

Add an Azure Key Vault Access Policy to grant permissions to the Azure Cosmos DB principal

Generate a new key in Azure Key Vault

Access and THEN generate a new key

Recovery Point Objective (RPO) and Recovery Time Objective (RTO)

RPO is the max duration of acceptable data loss

(8 hour default in Azure Synapse Analytics. You can also manually take a snapshot, 24 hour geo-restore time)

RTO is the max duration of acceptable downtime. Defined by your specification.

Monitor DW in Azure Synapse Analytics to know whether to scale up to a higher service level. Best Metric to monitor is?

DWU (Data Warhousing Units) percentage

Dedicated SQL Pool metric in Azure Monitor: CPU Percentage

CPU Utilization across all nodes for the DW

Dedicated SQL Pool metric in Azure Monitor: Data IO Percentage

IO (Input/ Output) Utilization across all nodes for the DW

Reaching the limit will cause queries to take a lot longer. How much disc activity your account is using (similar to CPU)

Dedicated SQL Pool metric in Azure Monitor: Memory Percentage

Memory Utilization (sql server) across all nodes for the DW

Dedicated SQL Pool metric in Azure Monitor: Active Queries and Queued Queries

# of active queries executing on the system and # of queued queries waiting to start executing

Dedicated SQL Pool metric in Azure Monitor: Successful Connections and Failed Connections: User Errors

# of successful user logins and number of failed user logins against the DB

Dedicated SQL Pool metric in Azure Monitor: Failed Connections: System Errors

Number of system failed logins (system NOT User logins)

Dedicated SQL Pool metric in Azure Monitor: Blocked by Firewall

# of logins blocked by firewall

Dedicated SQL Pool metric in Azure Monitor: DWU Limit

Service level objective of the data warehouse

Dedicated SQL Pool metric in Azure Monitor: DWU Percentage and DWU used

Max btwn CPU percentage and Data IO percentage. Usage is the DWU percentage times the DWU limit.

Dynamic Management Views (Syn DW) : Monitor Connection

sys.dm_pdw_exec_sessions

Dynamic Management Views (Syn DW) : Monitor Query Execution

sys.dm_pdw_exec_requests

Dynamic Management Views (Syn DW) : Monitor tempdb with views

sys.dm_pdw_nodes_db_session_space_usage

table joined with other stuff where DB_Name(ssu..database_id)= ‘tempdb’

Dynamic Management Views (Syn DW) : Monitor Memory

sys.dm_pdw_nodes_os_performance_counters.[Total Server Memory (KB)’ #Current memory

sys.dm_pdw_nodes_os_performance_counters.[Target Server Memory (KB)’ #Total memory allowed for this sql instance

Dynamic Management Views (Syn DW) : Monitor Transaction Log Size

sys.dm_pdw_nodes_os_performance_counters

Instance_name like ‘Distribution_%’

and counter_name = ‘Log File(s) Used Size (KB)’

Dynamic Management Views (Syn DW) : Monitor Transaction Log Rollback

sys.dm_pdw_nodes_tran_database_transactions

database_transaction_next_under_lsn

Dynamic Management Views (Syn DW) : Monitor PolyBase Load

sys.dm_pdw_exec_requests

inner join sys.dm_pdw_dms_external_work

Dynamic Management Views (Syn DW) : Monitor Query Blockings

sys.dm_pdw_waits

where waiting.state = ‘Queued’ and blocking.state = ‘Granted’