Ch. 6: Operant Conditioning – Introduction

Thorndike’s Law of Effect

Behaviors leading to a satisfying state of affairs are strengthened (“stamped in”), while behaviors leading to an unsatisfying or annoying state of affairs are weakened (“stamped out”)

The extent to which the consequences of a behavior are satisfying or annoying determines whether the behavior will be repeated

Skinner’s Selection by Consequences

“Free operant” procedure → the rat freely responds w/ a particular behavior (like pressing a lever) for food and it may do so at any rate

2 categories of behavior:

Involuntary, reflexive-type (Pavlov) → can be classically conditioned

Voluntary and controlled by their consequences rather than by the stimuli that precede them → operant behavior

Response = behavior

Shaping

Gradual creation of new behavior through reinforcement of successive approximations

Operant behavior (operants)

Behaviors influenced by consequences

Ex: a rat can press a lever for food hard or softly, quickly or slowly

These consequences then affect the future frequency (probability) of those responses

Operant conditioning

Effects of those consequences upon behavior

Basically Thorndike’s Law of Effect but emphasizes the effect of the consequence rather than satisfaction/annoyance

Instrumental conditioning → response is instrumental in producing the consequence

Operant consequence

Refers to the process/procedure in which a certain consequence changes the strength of a behavior

Reinforcers + punishers

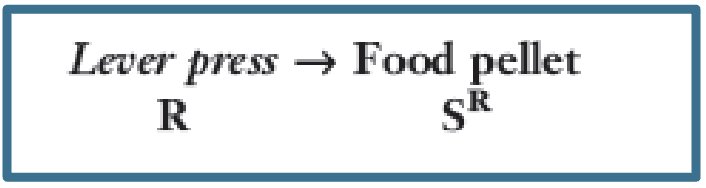

Reinforcer (SR)

Strengthen a behavior

Refer to the specific consequence used to strengthen behavior

Increases the future frequency of that behavior

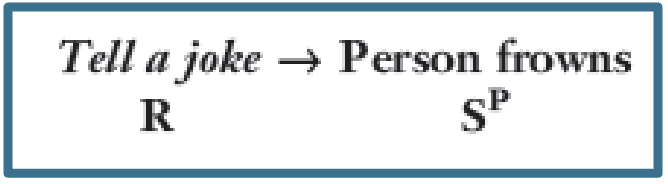

Punishers (SP)

Weaken a behavior

Refer to the specific consequence used to weaken behavior

Decreases the future frequency of that behavior

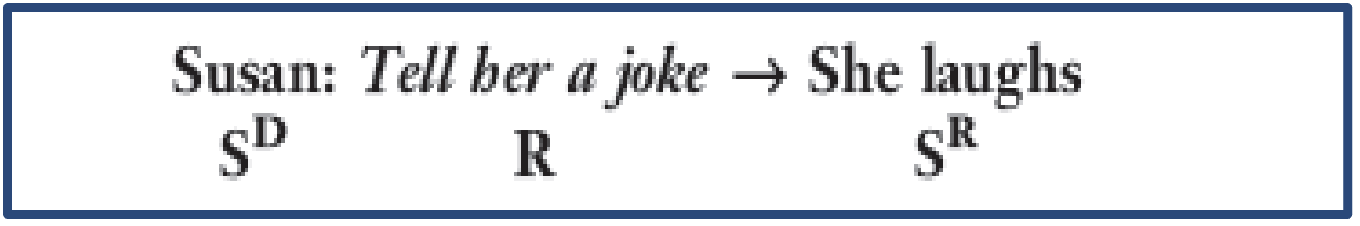

Discriminative stimulus (SD)

Stimulus in the presence of which responses are reinforced and in the absence of which they are not reinforced

A signal that indicates that a response will be followed by a reinforcer

The behavior is more likely to occur in the presence of those stimuli → increasing the probability of the behavior happening

“Set the occasion for”

4 types of contingencies

Positive reinforcement

Negative reinforcement

Positive punishment

Negative punishment

Positive reinforcement

Present pleasant/rewarding stimulus following response

Increase in the future strength of that response

Ex: study diligently for a quiz (R) → obtain an excellent mark (SR)

Negative reinforcement

Remove unpleasant/aversive stimulus following response

Increase in the future strength of that response

Ex: take aspirin (R) → eliminate headache (SR)

Positive punishment

Present unpleasant/aversive stimulus following response

Decrease in the future strength of that response

Ex: talk back to the boss (R) → get reprimanded (SP)

Negative punishment

Remove pleasant/rewarding stimulus following response

Decrease in the future strength of that response

Ex: argue w/ boss (R) → lose job (SP)
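
The four contingencies above come down to two questions: is a stimulus presented or removed after the response, and does the response become more or less likely in the future? A minimal Python sketch of that lookup, for self-testing only. The function name `classify_contingency` and its argument values are illustrative assumptions, not terms from the source.

```python
def classify_contingency(stimulus_change: str, behavior_change: str) -> str:
    """Name the contingency from what happens to the stimulus and the behavior.

    stimulus_change: "presented" or "removed" (what follows the response)
    behavior_change: "increases" or "decreases" (future strength of the response)
    """
    sign = {"presented": "positive", "removed": "negative"}[stimulus_change]
    kind = {"increases": "reinforcement", "decreases": "punishment"}[behavior_change]
    return f"{sign} {kind}"


# The four examples from the cards above
print(classify_contingency("presented", "increases"))  # excellent mark after studying: positive reinforcement
print(classify_contingency("removed", "increases"))    # headache gone after aspirin: negative reinforcement
print(classify_contingency("presented", "decreases"))  # reprimand after talking back: positive punishment
print(classify_contingency("removed", "decreases"))    # job lost after arguing: negative punishment
```

Note that "positive" and "negative" only describe whether something is added or taken away, not whether the outcome is pleasant.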

Positive reinforcement: more immediate reinforcer

The more immediate the reinforcer, the stronger its effect on the behavior

Positive reinforcement: primary reinforcer/unconditioned reinforcer

Innately reinforcing (e.g. food, water, a comfortable temperature, sexual contact, stimulation)

Positive reinforcement: secondary reinforcer/conditioned reinforcer

Reinforcing because it has been associated w/ another reinforcer (e.g. nice clothes, good grades, certain music)

Positive reinforcement: generalized reinforcer/generalized secondary reinforcer

Reinforcing because it has been associated w/ several other reinforcers (e.g. money); the basis of token economies

Intrinsic reinforcement

Provided by the mere act of performing the behavior (e.g. reading books for the love of learning)

Extrinsic reinforcement

Provided by some consequence that is external to the behavior (e.g. reading a textbook to get an A on the exam)

Rewards can undermine intrinsic motivation when:

Reward is expected (i.e. person is instructed beforehand that she will receive a reward)

Reward is tangible (e.g. consists of money rather than praise)

Reward is given for simply performing the activity and not for how well it is performed (e.g. participation trophies)

Natural reinforcers

Typically provided for a certain behavior

Expected consequence of the behavior within that setting (e.g. money is a natural consequence of selling merchandise)

More efficient → use whenever possible

Contrived (artificial) reinforcers

Reinforcers that have been deliberately arranged to modify a behavior

Not a typical consequence of the behavior within that setting

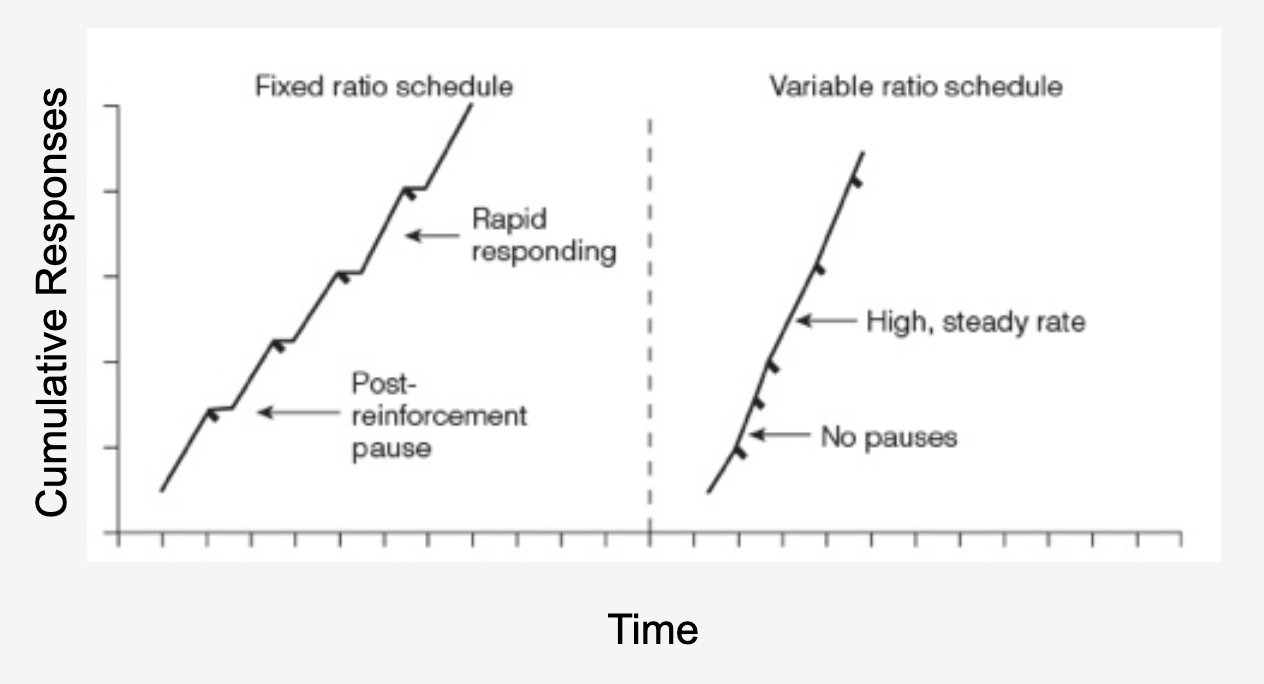

Fixed ratio schedule (FR)

Reinforcement is contingent upon a fixed/predictable # of responses

Ex: giving a pet a treat every 3 times it rolls over (FR 3)

Variable ratio schedule (VR)

Reinforcement is contingent upon a varying/unpredictable # of responses

VR examples: playing golf, a cheetah chasing prey, gambling

Ex: on average, there will be an acknowledgement every 2 times you hold open a door
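
The difference between FR and VR can be sketched as two small counters. This is a rough Python illustration, not a standard implementation: the class names are made up here, and the VR requirement is assumed to be drawn uniformly from 1 to 2n - 1 (which averages to n); real experiments may use other distributions.

```python
import random


class FixedRatio:
    """FR n: every n-th response is reinforced."""

    def __init__(self, n: int):
        self.n = n
        self.count = 0

    def respond(self) -> bool:
        self.count += 1
        if self.count == self.n:
            self.count = 0  # start counting toward the next reinforcer
            return True
        return False


class VariableRatio:
    """VR n: the required number of responses varies around a mean of n.

    Assumption: each requirement is drawn uniformly from 1..(2n - 1).
    """

    def __init__(self, mean: int, rng: random.Random):
        self.mean = mean
        self.rng = rng
        self.count = 0
        self.required = self._draw()

    def _draw(self) -> int:
        return self.rng.randint(1, 2 * self.mean - 1)

    def respond(self) -> bool:
        self.count += 1
        if self.count >= self.required:
            self.count = 0
            self.required = self._draw()  # next requirement is unpredictable
            return True
        return False


# FR 3: the pet gets a treat on every 3rd roll
fr3 = FixedRatio(3)
print([fr3.respond() for _ in range(6)])
# [False, False, True, False, False, True]

# VR 2: acknowledgements arrive on average every 2nd door-hold
vr2 = VariableRatio(2, random.Random(0))
acknowledged = sum(vr2.respond() for _ in range(1000))
print(acknowledged)  # close to 500, but which door-holds pay off is unpredictable
```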

Ratio strain

A disruption in responding due to an overly demanding response requirement

Prevention: gradually increase requirements

Ex: low workload in high school (FR-Low) → high workload in college (FR-High) = burnout

High schedule rate

Dense reinforcement

Reinforcement is delivered frequently → requires fewer steps

Faster learning + high motivation

Encourages consistent responding

Low schedule rate

Lean reinforcement

Reinforcement requires many responses before being given

Can lead to ratio strain if too demanding

Response rate may slow or stop if reward feels unattainable

Balance is key → too frequent reinforcement can reduce value, while too sparse can lead to extinction of behavior

Characteristics of FR schedules

High response rate

People + animals quickly learn that more responses lead to more rewards

Break-and-run pattern

Rapid responding until reinforcement, then a pause

Post-reinforcement pause

A short break after reinforcement before responding resumes

Higher FR schedules

Longer pauses

Characteristics of VR schedules

High, steady response rate

Uncertainty keeps behavior persistent

No/minimal post-reinforcement pause

The next reward could always be close

More resistant to extinction than FR schedules

Earning a dollar for every carburetor assembled is what kind of schedule?

FR 1 schedule (continuous reinforcement, CRF)

Earning a dollar for every 10 carburetors assembled is what kind of schedule?

FR 10 schedule

Response patterns (FR vs. VR schedules)

Both have high response rates

Post reinforcement pause present w/ FR

High ratio is like a long assignment

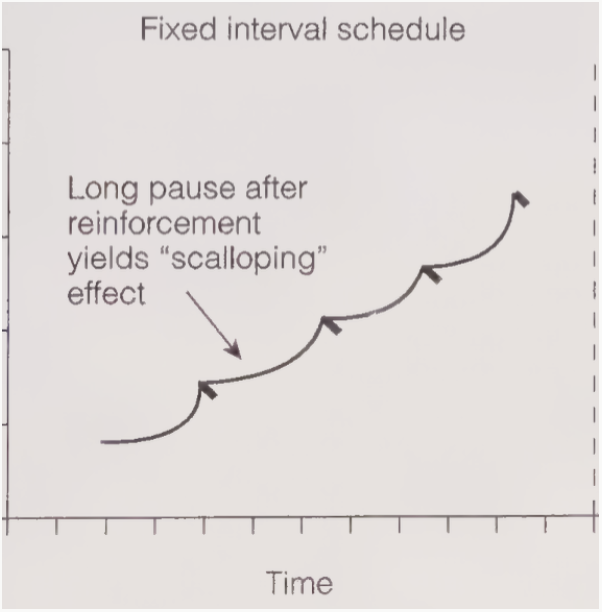

Fixed interval schedules

Reinforcement is dependent upon the first response after a fixed, predictable period of time

Ex: on an FI 30-sec schedule, the first lever press made after 30 secs have passed earns a mouse a food pellet → it learns to press more frequently as the end of each 30-sec interval approaches

Real world example: the mail is now delivered at 3 pm every day → over the next several days, you learn to check the mail close to 3 pm

The “scalloped” effect

Long post-reinforcement pause:

No response at the beginning of the interval

Gradual increases in responses as the interval progresses

High response rate just before reinforcement is available

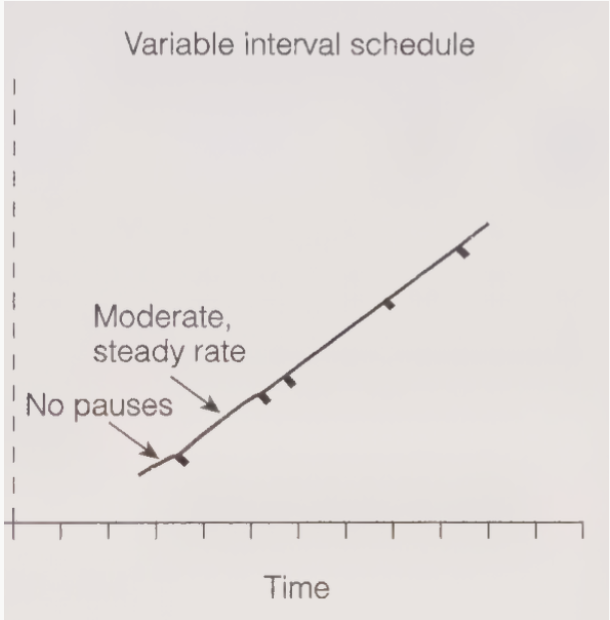

Variable interval schedules

Reinforcement is dependent on the first response after an unpredictable passage of time

Produces a moderate, steady rate of response

Usually little to no post-reinforcement pause

Ex: the first lever press after an average interval of 30 secs. will result in food for a rat (VI 30 schedule)

Real world example: a pop quiz could be given after a week, month, semester, etc. and is unpredictable → you should study at a steady rate
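
FI and VI share one rule: only the first response after the interval has elapsed is reinforced. A minimal Python sketch under stated assumptions: the class name `IntervalSchedule` is hypothetical, each interval is timed from the previous reinforcer, and the VI intervals are drawn uniformly from 0 to 60 secs (mean 30).

```python
import random
from typing import Callable


class IntervalSchedule:
    """Reinforce the first response made after the current interval has elapsed.

    next_interval returns the length (secs) of the next interval:
    a constant for FI, a random draw for VI.
    """

    def __init__(self, next_interval: Callable[[], float]):
        self.next_interval = next_interval
        self.available_at = next_interval()  # first interval is timed from t = 0

    def respond(self, t: float) -> bool:
        if t >= self.available_at:
            # Assumption: the next interval starts when this reinforcer is delivered
            self.available_at = t + self.next_interval()
            return True
        return False


def count_reinforcers(schedule: IntervalSchedule, response_times) -> int:
    return sum(schedule.respond(t) for t in response_times)


# FI 30-sec: lever presses at these times (secs)
fi30 = IntervalSchedule(lambda: 30)
print([fi30.respond(t) for t in (10, 29, 31, 40, 61, 95)])
# [False, False, True, False, True, True]

# Pressing 5x as often over 300 secs earns nothing extra on an FI schedule
every_second = count_reinforcers(IntervalSchedule(lambda: 30), range(1, 301))
every_5_seconds = count_reinforcers(IntervalSchedule(lambda: 30), range(5, 301, 5))
print(every_second, every_5_seconds)  # 10 10

# VI 30-sec: same rule, but the interval length is unpredictable
rng = random.Random(0)
vi30 = IntervalSchedule(lambda: rng.uniform(0, 60))
vi_count = count_reinforcers(vi30, range(1, 3001))
```

Because extra responses early in the interval are wasted, the sketch is consistent with the scalloped FI pattern and the moderate, steady VI pattern described in the cards.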

Duration schedules

Reinforcement is dependent on the continuous performance of a behavior throughout a period of time

Fixed duration schedule (FD)

Behavior must be performed continuously for a fixed, predictable period of time

Ex: must run on the treadmill for 15 mins before taking a water break

Variable duration schedule (VD)

Behavior is performed continuously over a varying, unpredictable period of time

Ex: at random times that average out to around 45 min, a treat is given to a child as the child practices the piano

FD and VD are imprecise

What constitutes a continuous behavior during the interval can vary widely

Response-rate schedules

Reinforcement is directly contingent upon the organism’s rate of response

Differential reinforcement of high rates (DRH)

Reinforcement contingent on emitting at least a certain # of responses in a certain period of time

Ex: a student earns points if they answer at least 5 questions correctly in a 10-minute discussion

Differential reinforcement of low rates (DRL)

Reinforcement contingent on a minimum amount of time passing between responses (a response made too soon is not reinforced)

Ex: a teacher reinforces a student only if they wait at least 5 minutes between questions, reducing interruptions

Differential reinforcement of paced responding (DRP)

Reinforcement contingent upon emitting a series of responses at a set rate

Ex: a teacher encourages students to participate every few minutes, rather than dominating the discussion or staying silent.
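
The three response-rate rules can be compared side by side as simple checks on a list of response times. This is a hedged Python sketch: the function names, the choice to measure the first DRL gap from session start, and the min/max gap band used for DRP are all assumptions made for illustration.

```python
def drh_met(response_times, window, min_responses):
    """DRH: at least min_responses responses fall within the first `window` time units."""
    return sum(1 for t in response_times if 0 <= t <= window) >= min_responses


def drl_reinforced(response_times, min_gap):
    """DRL: a response is reinforced only if at least min_gap has passed
    since the previous response (or since session start for the first one)."""
    results = []
    previous = 0
    for t in response_times:
        results.append(t - previous >= min_gap)
        previous = t  # every response, reinforced or not, restarts the clock
    return results


def drp_met(response_times, min_gap, max_gap):
    """DRP: every gap between successive responses stays within a set band."""
    gaps = [b - a for a, b in zip(response_times, response_times[1:])]
    return bool(gaps) and all(min_gap <= g <= max_gap for g in gaps)


# DRH: 5 correct answers (at these minutes) in a 10-minute discussion
print(drh_met([1, 2, 4, 7, 9], window=10, min_responses=5))  # True

# DRL: questions asked at these minutes, at least 5 minutes apart required
print(drl_reinforced([5, 7, 13, 20], min_gap=5))  # [True, False, True, True]

# DRP: contributions every 2 to 4 minutes
print(drp_met([2, 5, 8, 11], min_gap=2, max_gap=4))  # True
print(drp_met([2, 3, 10], min_gap=2, max_gap=4))     # False
```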

Noncontingent schedules

The reinforcer is delivered independently of what the organism may be doing

Fixed time (FT) schedule

Variable time (VT) schedule

Fixed time (FT) schedule

Reinforcer is delivered after a fixed amount of time, regardless of organism behavior

Ex: an employee receives a paycheck every hour, regardless of their productivity

Variable time (VT) schedule

Reinforcer is delivered after a variable amount of time, regardless of organism behavior

Ex: a manager randomly checks in on employees approximately every 10 minutes but not at set intervals

Why are fixed and variable time schedules not the same as fixed and variable interval schedules?

Interval rewards require some sort of behavior to occur after a certain time span

FT + VT are NOT contingent on any specific behavior
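
The contrast can be made concrete by counting reinforcers under each rule. A small Python sketch; the function names are hypothetical and the FI interval is assumed to restart at each reinforcer.

```python
def fixed_interval_reinforcers(response_times, interval):
    """FI: each reinforcer requires a response after the interval has elapsed."""
    earned = 0
    available_at = interval
    for t in sorted(response_times):
        if t >= available_at:
            earned += 1
            available_at = t + interval  # next interval starts at this reinforcer
    return earned


def fixed_time_reinforcers(session_length, interval):
    """FT: reinforcers arrive on the clock; responses are not even consulted."""
    return session_length // interval


# 60-sec session, 15-sec schedule, organism never responds
print(fixed_interval_reinforcers([], 15))  # 0
print(fixed_time_reinforcers(60, 15))      # 4

# Same session, responses at 20 and 50 secs
print(fixed_interval_reinforcers([20, 50], 15))  # 2
print(fixed_time_reinforcers(60, 15))            # still 4
```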

How are noncontingent schedules and superstitious behaviors connected?

Skinner presented pigeons w/ food every 15 secs. (FT 15-sec) regardless of their behavior

6 of the 8 pigeons began to display ritualistic behaviors

These behaviors were accidentally reinforced: food happened to arrive while the pigeon was performing them

Ex: professional athletes + gamblers are prone to developing superstitions

Superstitious behaviors

Superstitious behaviors can sometimes develop as by-products of contingent reinforcement for some other behaviors

Ex: a businessman mistakenly believes a firm handshake is impressive but is not received that way in the Asian market

Can be seen as an attempt to make unpredictable situations more predictable

More likely to develop under VT-like conditions (e.g. games of chance, fishing, gambling)

What happens when noncontingent reinforcement is added to a contingent reinforcement schedule?

A pigeon receiving free food reinforcement in addition to a VI schedule

Its response rate will decrease

Professional athletes w/ guaranteed contracts often perform worse

Reinforcement is no longer contingent on performance

Noncontingent reinforcement as a benefit

Can reduce maladaptive behaviors

Children who act out for attention stop misbehaving when given attention non-contingently

Unconditional positive regard

The love that one receives from others, regardless of one’s behavior

Form of noncontingent social reinforcement

Encourages healthy personality development

Can reduce motivation in some cases but enhance wellbeing in others

Important in behavior management + child development

The right balance between contingent + noncontingent reinforcement is crucial for optimal outcomes

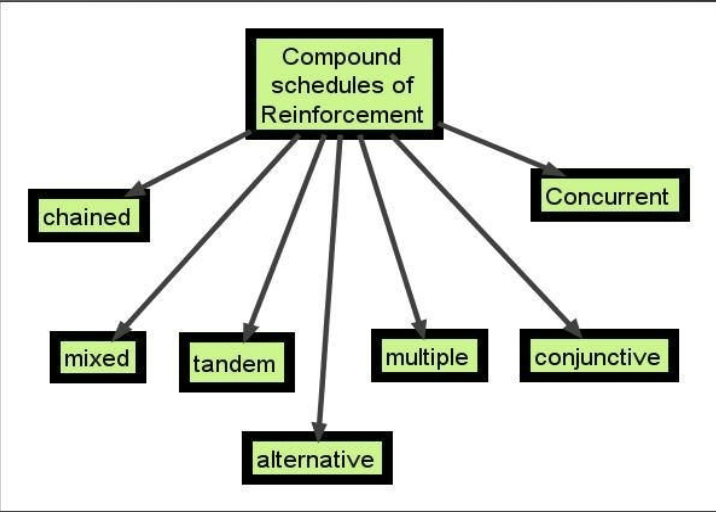

Complex schedule

A combo of 2 or more basic schedules

3 types:

Conjunctive schedule

Adjusting schedule

Chained schedule

Conjunctive schedules

Combine 2 simple schedules

Requirements for both must be met before the reinforcer is delivered

Ex: FI 5-min FR 100 schedule → 100 lever presses AND 5-min interval since the last reinforcer

Real world examples:

Earning wages contingent on a set number of hours worked + a set amount of work completed

Marathon qualifiers contingent on completing 26.2 mile race + being under cutoff time
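
The "both requirements" rule for the FI 5-min FR 100 example can be sketched as a single check. The class name `ConjunctiveFIFR` and its parameters are illustrative assumptions; times are in secs.

```python
class ConjunctiveFIFR:
    """Conjunctive FI/FR: a response is reinforced only when BOTH the ratio
    and the interval requirement have been met since the last reinforcer."""

    def __init__(self, interval_s, ratio):
        self.interval_s = interval_s
        self.ratio = ratio
        self.last_reinforcer_t = 0
        self.count = 0

    def respond(self, t):
        self.count += 1
        ratio_met = self.count >= self.ratio
        interval_met = t - self.last_reinforcer_t >= self.interval_s
        if ratio_met and interval_met:
            self.count = 0
            self.last_reinforcer_t = t
            return True
        return False


# Fast responder: 100 presses in the first 100 secs, then one press at 300 secs
fast = ConjunctiveFIFR(interval_s=300, ratio=100)
print(any(fast.respond(t) for t in range(1, 101)))  # False: ratio met, interval not yet
print(fast.respond(300))                            # True: both requirements now met
```

A slow responder hits the opposite limit: the 5 minutes pass first, and reinforcement waits for the 100th press.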

Adjusting schedules

The response requirement for reinforcement changes as a result of the organism's previous performance

Gradually increases or decreases the response required to earn reinforcement

Adapts to the organism’s performance to tailor the reinforcement to their needs

THINK SHAPING

Ex: when a piano student begins to learn pieces very quickly and perform excellently, the teacher decides to lengthen the lessons

Benefits of adjusting schedules

Maintains the behavior over time

Ensures the behavior is not reliant on constant rewards

Drawbacks of adjusting schedules

May lower the standards of what the organism is capable of achieving

Chained schedules

A sequence of 2 or more reinforcement schedules that must be completed in a specific order before receiving a final reward (terminal reinforcer)

How do chained schedules work?

The subject completes the first task (following the first reinforcement schedule)

A pigeon in an operant conditioning chamber pecks a green key (VR 20 schedule) → changes key color to red

This leads into the next task (following a different reinforcement schedule)

Pecks a red key (FI 60 seconds) → earns food as the final reward

Completing all required steps in the correct order results in the final reward

Food is the terminal reinforcer that maintains the entire chain
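
The pigeon example can be sketched as a two-state machine: the green key runs the VR link, the red key runs the FI link, and only the last link delivers food. This is a rough Python illustration, not a model of any real apparatus. Assumptions: the class name `Chain` is made up, the VR requirement is drawn uniformly from 1 to 2n - 1, and the FI clock starts when the key turns red.

```python
import random


class Chain:
    """Two-link chained schedule: VR on the green key, then FI on the red key."""

    def __init__(self, vr_mean, fi_seconds, rng):
        self.vr_mean = vr_mean
        self.fi_seconds = fi_seconds
        self.rng = rng
        self.red_since = None
        self._start_green()

    def _start_green(self):
        self.key = "green"
        # Assumption: uniform draw that averages to vr_mean
        self.pecks_left = self.rng.randint(1, 2 * self.vr_mean - 1)

    def peck(self, t):
        """Register a peck at time t (secs); return True if food is delivered."""
        if self.key == "green":
            self.pecks_left -= 1
            if self.pecks_left == 0:
                self.key = "red"      # color change signals the second link
                self.red_since = t
            return False              # the first link never delivers food itself
        # Red key: only the first peck after the interval has elapsed earns food
        if t - self.red_since >= self.fi_seconds:
            self._start_green()       # chain restarts after the terminal reinforcer
            return True
        return False


# One peck per second for 10 minutes on a chain VR 20 → FI 60-sec schedule
chain = Chain(vr_mean=20, fi_seconds=60, rng=random.Random(0))
food_times = [t for t in range(1, 601) if chain.peck(t)]
print(len(food_times), "food deliveries, at secs:", food_times)
```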

Why are chained schedules beneficial?

Helps us understand how complex behaviors are built step by step

Useful in training animals, teaching new skills, and developing structured learning processes

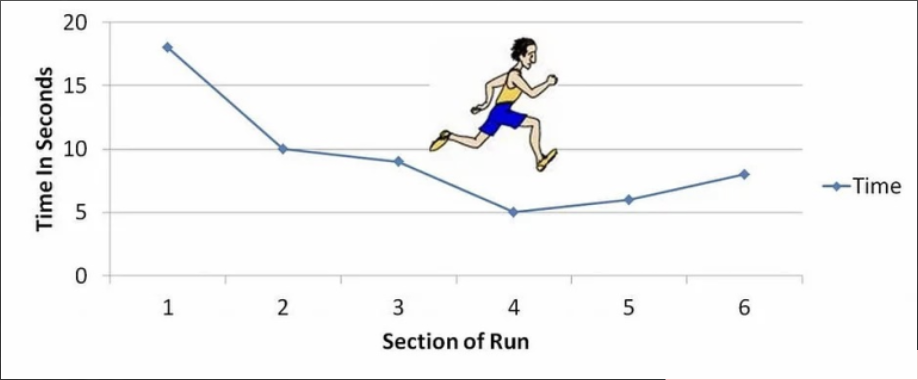

Goal gradient effect

Coined by behaviorist Clark Hull

Increase in the strength or efficiency of responding as one draws nearer to the goal

People are motivated by how much is left to reach their target, not how far they’ve come

Ex: a student writing an essay will tend to take fewer breaks and write quickly/more intensely as they approach the end