Big Data Test 1


Big Data

data whose scale, distribution, diversity and timeliness

require new data architectures

new analytical methodologies

integrating multiple skills

4 V’s of Big Data

Volume: high volume

Variety: diverse sources

Structured (table), semi-structured (XML), unstructured

Velocity: changing rapidly

Value: has some positive value (utility)

knowledge

information understanding that is gained from experience or education

cloud computing, mobile computing, knowledge management, ontology

intelligence

mental capability involved in the ability to acquire and apply knowledge and skills

Agents, etc.

Data Processing Capabilities

skill, rule, knowledge triangle (knowledge bottom up)

Embedded Intelligence

every device will have processing power and storage

Ex:

Smart phones generate half of data traffic

Possible Issue: communication bottleneck

Being able to track everything anywhere anytime

Possible Issue: processing bottleneck

Big Data tasks

problem solving

learning

decision making

planning

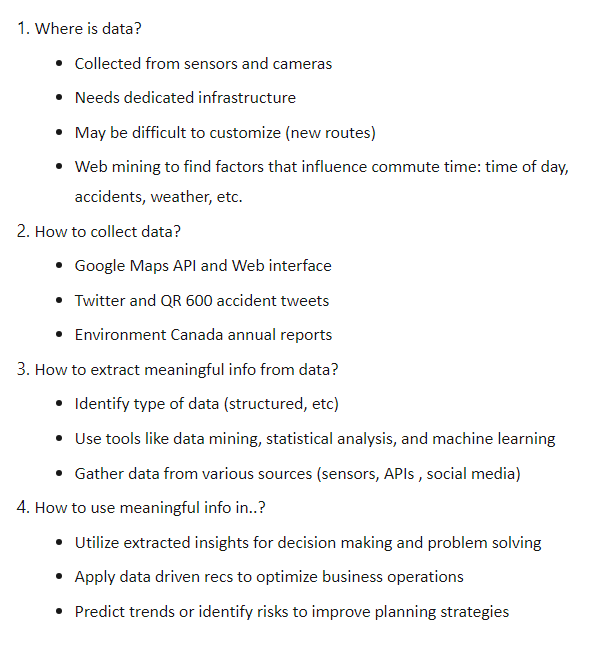

Vehicle Commute Time Prediction

big data structures

structured

semi-structured

quasi structured

unstructured

structured

data contains defined data type

semi-structured

textual data files with a discernible pattern that enables parsing

XML

Quasi Structured

textual data with erratic data formats, can be formatted with effort

Unstructured

no coherent structure and is typically stored as different types of files

Business Risk

customer churn, regulatory requirements

Data product

any application that combines data with statistical algorithms for inference or prediction (i.e., systems that learn from data)

data products redefined

derive value from data and generate more data by influencing human behaviour or making inferences or predictions upon new data

why can't you use a traditional file system

no fault tolerance, small block size, large seek time, only one copy of the data

hadoop

open-source implementation of two Google papers (the Google File System and MapReduce), created by Doug Cutting, creator of Apache Lucene

HDFS properties

fault tolerance

reliable, scalable platform for storage/analysis

fault tolerance

common way of avoiding data loss through replication, redundant copies kept by system

why hadoop better than databases

Disk Seek Time: Faster by moving code to data, minimizing latency from disk seek times.

Flexible Schema: Less rigid, supports unstructured and semi-structured data with schema-on-read.

Handles Large Datasets: Optimized for big data processing with efficient streaming reads/writes.

No Costly Data Loading: Avoids extensive data loading phases typical of databases.

Flat Data Structure: Works well with denormalized data, avoiding latency from normalized (nonlocal) data

Disk Seek time

Databases move data to code, which incurs many disk seeks.

High seek times add latency, slowing down large-scale analysis.

Hadoop moves code to data, reducing the need for disk seeking and latency

normal forms

Databases normalize data to maintain integrity and reduce redundancy.

Normalization causes data to be nonlocal, increasing latency for data retrieval.

Hadoop optimizes for contiguous, local data for high-speed streaming reads/writes.

Flat, denormalized data structures are more efficient for Hadoop processing

rigid schema

Databases require a predefined schema (schema-on-write), limiting flexibility.

Structured data must follow rigid formats (e.g., tables with set columns).

Hadoop uses schema-on-read, working well with unstructured/semi-structured data.

No strict schema requirements in Hadoop, allowing for faster data loading.
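
A minimal sketch of schema-on-read, assuming raw records are stored as JSON lines. The sample data and the helper name `read_with_schema` are hypothetical. Nothing is validated when the data is written; a schema is applied only when a reader asks for specific fields.

```python
import json

# Raw records are stored exactly as they arrived; nothing is checked on write.
RAW_LINES = [
    '{"user": "ana", "clicks": "3"}',
    '{"user": "bo", "clicks": "7", "country": "CA"}',  # extra field is fine
    'not json at all',                                   # malformed record
]

def read_with_schema(lines, schema):
    """Apply a schema at read time: parse, cast, and skip records that do not fit."""
    for line in lines:
        try:
            record = json.loads(line)
            yield {field: cast(record[field]) for field, cast in schema.items()}
        except (ValueError, KeyError, TypeError):
            continue  # this record does not match the schema being read

# Two different readers can impose two different schemas on the same raw data.
clicks_schema = {"user": str, "clicks": int}
rows = list(read_with_schema(RAW_LINES, clicks_schema))
```

The same stored lines can later be read with a different schema (for example `{"country": str}`) without reloading anything, which is the flexibility the cards above describe.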

Data Locality and HDFS

Why isn’t a database with lots of disks enough for large-scale analysis?

Database access patterns are dominated by disk seeks, which add latency. Hadoop instead moves code to data, reducing latency

How does Hadoop handle data structure differently from traditional databases?

Hadoop uses schema-on-read, which works well with unstructured or semi-structured data, unlike databases that need rigid schemas

What challenges does data normalization pose for Hadoop?

Normalization creates nonlocal data, increasing latency for Hadoop's high-speed streaming reads, which work best with local, contiguous data.

What is data locality in Hadoop?

Data locality means Hadoop co-locates data with compute nodes, so data access is faster because it’s local
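
A toy sketch of the data locality idea: when a task needs a block, prefer a free node that already stores it. The function `pick_node` and the node and block names are hypothetical; a real scheduler also weighs rack distance and load.

```python
def pick_node(block_id, block_locations, free_nodes):
    """Prefer a free node that already stores the block; otherwise fall back."""
    if not free_nodes:
        raise ValueError("no free nodes available")
    local = [n for n in block_locations.get(block_id, []) if n in free_nodes]
    if local:
        return local[0], "local"           # code moves to the data
    return sorted(free_nodes)[0], "remote"  # data must cross the network

block_locations = {"blk_1": ["node-a", "node-b"], "blk_2": ["node-c"]}
free_nodes = {"node-b", "node-d"}
```

Here `blk_1` can be processed locally on `node-b`, while `blk_2` has no free local node and would have to be read over the network.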

How does Hadoop conserve network bandwidth?

By using data locality and modeling network topology, Hadoop reduces the need to move data, which conserves bandwidth. Bandwidth is precious because it can easily be saturated by data transfers, impacting performance

distributed system requirements:

fault tolerance

recoverability

consistency

scalability

how hadoop addresses requirements

data distributed immediately when added to cluster and stored on multiple nodes

nodes prefer to process data stored locally, to minimize traffic across the network

data stored in fixed size blocks and each is duplicated multiple times across system to provide redundancy
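
A small sketch of fixed-size blocks with replication, under simplifying assumptions: round-robin placement and tiny blocks. Real HDFS uses much larger blocks and rack-aware placement; all function names here are hypothetical.

```python
def split_into_blocks(data: bytes, block_size: int):
    """Cut data into fixed-size blocks; the last block may be shorter."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]

def place_replicas(num_blocks, nodes, replication):
    """Round-robin placement: each block goes to `replication` distinct nodes."""
    if replication > len(nodes):
        raise ValueError("not enough nodes for the requested replication")
    return {
        b: [nodes[(b + r) % len(nodes)] for r in range(replication)]
        for b in range(num_blocks)
    }

def readable_after_failure(placement, failed_node):
    """True if every block still has at least one replica on a surviving node."""
    return all(
        any(node != failed_node for node in replicas)
        for replicas in placement.values()
    )

blocks = split_into_blocks(b"abcdefghij", block_size=4)
nodes = ["n1", "n2", "n3", "n4"]
placement = place_replicas(len(blocks), nodes, replication=3)
```

With three replicas, losing any single node leaves every block readable; with one replica, losing the node that holds a block loses that block.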

computation

jobs are broken into tasks, where each node performs a task on a single block

jobs are written at a high level, without concern for network programming, timing, or low-level infrastructure, allowing developers to focus on data rather than details

How does Hadoop handle large computations?

Hadoop breaks computations into jobs, which are further broken into tasks. Each node performs a task on a single block of data

Why don’t Hadoop jobs require concern for network programming?

Jobs are written at a high level, allowing developers to focus on data and computation rather than network or low-level distributed programming

How does Hadoop minimize network traffic between nodes?

Tasks are independent with no inter-process dependencies, reducing the need for nodes to communicate during processing and avoiding potential deadlocks

How is Hadoop fault-tolerant?

Hadoop uses task redundancy, so if a node or task fails, another node can complete the task, ensuring the final computation is correct

How does Hadoop utilize worker nodes?

The master program allocates work to nodes, allowing parallel processing across many nodes on different portions of the dataset

What makes Hadoop different from traditional data warehouses?

Hadoop can run on economical, commercial off-the-shelf hardware, unlike traditional data warehouses that often require specialized hardware

What are the two primary components of Hadoop's architecture?

Hadoop's architecture is composed of HDFS (Hadoop Distributed File System) and YARN (Yet Another Resource Negotiator).

What is the role of HDFS in Hadoop?

HDFS manages data storage across disks in the cluster, providing a distributed file system for big data

What does YARN do in the Hadoop architecture?

YARN acts as a cluster resource manager, allocating computational resources (like CPU and memory) to applications for distributed computation.

How do HDFS and YARN work together to optimize network traffic?

HDFS and YARN minimize network traffic by ensuring that data is local to the required computation, reducing the need to transfer data across the network

How does Hadoop ensure fault tolerance and consistency?

Hadoop duplicates both data and tasks across nodes, enabling fault tolerance, recoverability, and consistency within the cluster

What feature of Hadoop’s architecture allows for scalability?

The cluster is centrally managed to provide scalability and to abstract low-level details of clustering programming

How do HDFS and YARN function as more than just a platform?

Together, HDFS and YARN provide an operating system for big data applications, supporting distributed storage and computation

Is Hadoop a type of hardware?

No, Hadoop is not hardware; it is software, a distributed file system (HDFS) and a resource manager (YARN), that runs on a cluster of machines

What is YARN in the Hadoop cluster?

YARN is a cluster resource manager that coordinates background services on a group of machines, typically using commodity hardware.

What is a Hadoop cluster?

A Hadoop cluster is a set of machines running HDFS and YARN, where each machine is known as a node

How do Hadoop clusters scale?

Hadoop clusters scale horizontally, meaning that as more nodes are added, the cluster’s capacity and performance increase roughly linearly

What are daemon processes in Hadoop?

Daemon processes are background services in YARN and HDFS that do not require user input and run continuously

How do Hadoop processes operate within a cluster?

Hadoop processes run as services on each cluster node, accepting input and delivering output through the network.

How are Hadoop daemon processes managed in terms of resources?

Each process runs in its own Java Virtual Machine (JVM) with dedicated system resource allocation, managed independently by the operating system

What role do master nodes play in a Hadoop cluster?

Master nodes coordinate services for Hadoop workers and serve as entry points for user access. They are essential for maintaining cluster coordination

What is the primary function of worker nodes in a Hadoop cluster?

Worker nodes perform tasks assigned by master nodes, such as storing or retrieving data and executing distributed computations in parallel

Why are master processes usually run on their own nodes?

Running master processes on separate nodes prevents them from competing for resources with worker nodes, avoiding potential bottlenecks

Can master daemons run on a single node in a Hadoop cluster?

Yes, in smaller clusters, master daemons can run on a single node if necessary

What is the role of the NameNode in Hadoop?

The NameNode (Master) stores the directory tree of the file system, file metadata, and the locations of each file block in the cluster. It directs clients to the appropriate DataNodes for data access.

What does the Secondary NameNode do?

The Secondary NameNode performs housekeeping tasks and check-pointing for the NameNode but is not a backup NameNode

What is the function of a DataNode in Hadoop?

A DataNode (Worker) stores HDFS blocks on local disks and reports health and data status back to the NameNode

Describe the workflow between a client, NameNode, and DataNode in Hadoop.

The client requests data from the NameNode, which provides a list of DataNodes storing the data. The client then retrieves data directly from those DataNodes, with the NameNode directing traffic like a “traffic cop.”
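
The read workflow above can be sketched as a toy model. The class and method names (`NameNode.get_block_locations`, `DataNode.read_block`, `client_read`) are illustrative, not the Hadoop API. The point is that the NameNode hands out metadata only, and the file bytes flow directly between the client and the DataNodes.

```python
class NameNode:
    """Holds metadata only: which blocks make up a file and where they live."""
    def __init__(self):
        self.file_blocks = {}      # path -> ordered list of block ids
        self.block_locations = {}  # block id -> list of DataNode names

    def get_block_locations(self, path):
        return [(b, self.block_locations[b]) for b in self.file_blocks[path]]

class DataNode:
    """Stores the actual block contents on its local disk (a dict here)."""
    def __init__(self):
        self.blocks = {}

    def read_block(self, block_id):
        return self.blocks[block_id]

def client_read(path, namenode, datanodes):
    """Ask the NameNode where the blocks are, then read them from DataNodes."""
    data = b""
    for block_id, locations in namenode.get_block_locations(path):
        data += datanodes[locations[0]].read_block(block_id)  # first replica
    return data

# Tiny hypothetical cluster: one file split into two blocks on two DataNodes.
nn = NameNode()
dns = {"dn1": DataNode(), "dn2": DataNode()}
nn.file_blocks["/logs/a.txt"] = ["blk_1", "blk_2"]
nn.block_locations = {"blk_1": ["dn1", "dn2"], "blk_2": ["dn2"]}
dns["dn1"].blocks["blk_1"] = b"hello "
dns["dn2"].blocks["blk_1"] = b"hello "
dns["dn2"].blocks["blk_2"] = b"world"
```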

What is the role of the ResourceManager in YARN?

The ResourceManager (Master) allocates and monitors cluster resources like memory and CPU, managing job scheduling for applications

What is the purpose of the ApplicationMaster in YARN?

The ApplicationMaster coordinates the execution of a specific application on the cluster as scheduled by the ResourceManager.

What does the NodeManager do in YARN?

The NodeManager (Worker) runs tasks on individual nodes, manages resource allocation, and reports the health and status of tasks

Explain the YARN workflow for executing a job.

A client requests resources from the ResourceManager, which assigns an ApplicationMaster to handle the job. The ApplicationMaster coordinates the job, while the ResourceManager tracks status, and NodeManagers execute tasks on the nodes
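
A toy model of the YARN workflow above, assuming memory is the only resource and the scheduler uses first-fit. All class names, method names, and memory sizes are illustrative assumptions. In real YARN, a request that cannot be satisfied waits in a queue rather than returning `None`.

```python
class NodeManager:
    """Worker: tracks free memory and the containers it has launched."""
    def __init__(self, name, memory_mb):
        self.name = name
        self.free_mb = memory_mb
        self.containers = []

    def launch(self, container_id, memory_mb):
        self.free_mb -= memory_mb
        self.containers.append(container_id)

class ResourceManager:
    """Master: hands out containers on the first node with enough memory."""
    def __init__(self, node_managers):
        self.node_managers = node_managers
        self.next_id = 0

    def allocate(self, memory_mb):
        for nm in self.node_managers:
            if nm.free_mb >= memory_mb:
                self.next_id += 1
                container_id = f"container_{self.next_id}"
                nm.launch(container_id, memory_mb)
                return container_id, nm.name
        return None  # no node has room (a real scheduler would queue this)

def run_job(rm, num_tasks, am_memory_mb=512, task_memory_mb=1024):
    """First allocate the ApplicationMaster container, then one per task."""
    am = rm.allocate(am_memory_mb)
    if am is None:
        return None
    tasks = [rm.allocate(task_memory_mb) for _ in range(num_tasks)]
    return {"application_master": am, "tasks": tasks}

rm = ResourceManager([NodeManager("nm1", 2048), NodeManager("nm2", 1024)])
result = run_job(rm, num_tasks=3)
```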

MapReduce

programming model for data processing

MapReduce functions

map function, shuffle and sort, reduce

map function

takes a large dataset and transforms it into key-value pairs.

shuffle and sort

once map phase complete, framework groups output by keys and organizes it for further processing by reduce

reduce function

processes sorted key value pairs typically aggregating or summarizing data to produce final result
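
The three phases can be sketched in plain Python on a single machine, using word count as a stand-in problem. This is an illustration of the programming model, not Hadoop code; the function names are hypothetical.

```python
from collections import defaultdict

def map_fn(line):
    """Map: turn one input line into (key, value) pairs."""
    for word in line.lower().split():
        yield word, 1

def shuffle_and_sort(pairs):
    """Shuffle and sort: group all values by key, ordered by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return sorted(groups.items())

def reduce_fn(key, values):
    """Reduce: summarize all values for one key."""
    return key, sum(values)

def run_mapreduce(lines):
    mapped = [pair for line in lines for pair in map_fn(line)]
    return [reduce_fn(key, values) for key, values in shuffle_and_sort(mapped)]

counts = run_mapreduce(["big data", "Big clusters big"])
```

In a cluster, many copies of `map_fn` and `reduce_fn` run on different nodes, and the framework performs the shuffle between them.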

What Unix tool is commonly used for processing line-oriented data in data analysis?

awk is the classic tool for processing line-oriented data

What is the purpose of the Unix script shown for analyzing temperature data?

The script loops through compressed year files, extracts air temperature and quality code fields with awk, checks data validity, and finds the maximum temperature for each year

How does the awk script validate air temperature data?

It ensures the temperature is not a missing value (9999) and checks the quality code to confirm the reading is not erroneous
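
A Python sketch of the same validation logic on a single machine. The input format (one "temperature quality" pair per line) and the set of accepted quality codes are assumptions made for illustration; only the missing-value marker 9999 comes from the card above.

```python
MISSING = 9999
GOOD_QUALITY = {"0", "1", "4", "5", "9"}  # assumed set of acceptable codes

def is_valid(temp, quality):
    """A reading counts only if it is present and its quality code is accepted."""
    return temp != MISSING and quality in GOOD_QUALITY

def max_temperature(lines):
    """Scan every line and keep the highest valid temperature (None if none)."""
    best = None
    for line in lines:
        temp_str, quality = line.split()
        temp = int(temp_str)
        if is_valid(temp, quality) and (best is None or temp > best):
            best = temp
    return best

sample = ["111 1", "9999 1", "300 2", "-11 0"]
```

In `sample`, the reading 9999 is dropped as missing and 300 is dropped because its quality code is not in the accepted set.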

What are the limitations of using Unix tools like awk for large-scale data analysis?

Limitations include difficulty dividing the work into equal-size pieces, the need for complex result combination, and being limited to the capacity of a single machine

Why is it challenging to divide data into equal-size pieces in Unix-based data analysis?

File sizes can vary by year, so some processes finish faster, with the longest file determining the total runtime. Dividing into fixed-size chunks is one solution
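
A small worked example of why the largest file dominates. It assumes processing time is proportional to data size and uses made-up file sizes; the function names are hypothetical.

```python
def runtime_one_process_per_file(file_sizes):
    """All files run in parallel, so the job ends when the largest one does."""
    return max(file_sizes)

def runtime_fixed_chunks(file_sizes, chunk_size, workers):
    """Cut files into chunks, then give each chunk to the least-loaded worker."""
    chunks = []
    for size in file_sizes:
        full, rest = divmod(size, chunk_size)
        chunks += [chunk_size] * full + ([rest] if rest else [])
    loads = [0] * workers
    for chunk in sorted(chunks, reverse=True):
        loads[loads.index(min(loads))] += chunk
    return max(loads)

file_sizes = [10, 20, 90]  # hypothetical sizes, e.g. one file per year
per_file = runtime_one_process_per_file(file_sizes)
chunked = runtime_fixed_chunks(file_sizes, chunk_size=10, workers=3)
```

With one process per file, two of the three workers sit idle while the 90-unit file finishes. With fixed-size chunks, the same 120 units of work spread evenly across the three workers.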

What are the challenges in combining results from independent processes in Unix-based analysis?

Results may need further processing, such as sorting and concatenating, which can be delicate when using fixed-size chunks

What is the limitation of single-machine processing in Unix-based data analysis?

Some datasets exceed the capacity of one machine, necessitating multiple machines and coordination for distributed processing

How does MapReduce differ from Unix tools in handling large datasets?

MapReduce splits tasks into map and reduce phases, allowing parallel processing across multiple machines, unlike Unix tools which are limited to a single machine

What is the purpose of the map function in Hadoop’s MapReduce?

The map function extracts relevant fields (e.g., year and temperature) from raw data and filters out bad records, preparing data for the reduce phase

How does the reduce function work in Hadoop’s MapReduce?

The reduce function aggregates data from the map phase, such as finding the maximum temperature for each year.
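
The map and reduce functions for the maximum-temperature example might look like the sketch below. The comma-separated record format and the accepted quality codes are assumptions, and the line index stands in for the byte offset that Hadoop would pass as the map key.

```python
from collections import defaultdict

MISSING = 9999
GOOD_QUALITY = {"0", "1", "4", "5", "9"}  # assumed set of acceptable codes

def map_fn(offset, line):
    """Extract (year, temperature) and drop missing or suspect readings."""
    year, temp, quality = line.split(",")
    if int(temp) != MISSING and quality in GOOD_QUALITY:
        yield year, int(temp)

def reduce_fn(year, temps):
    """Aggregate all temperatures for one year into its maximum."""
    return year, max(temps)

def run(lines):
    groups = defaultdict(list)  # stands in for the framework's shuffle
    for offset, line in enumerate(lines):
        for year, temp in map_fn(offset, line):
            groups[year].append(temp)
    return [reduce_fn(year, groups[year]) for year in sorted(groups)]

records = ["1949,111,1", "1949,78,1", "1950,9999,1",
           "1950,22,1", "1950,-11,1", "1950,500,2"]
```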

Why is the key-value structure important in Hadoop’s MapReduce?

The key-value pairs allow MapReduce to process and organize data across multiple nodes; for map input, the key is often the line's offset in the file and the value is the line itself

What role does the map function play in handling erroneous data in Hadoop?

The map function filters out missing, suspect, or erroneous data during its processing phase

What is the role of the END block in the awk script?

The END block in awk executes after all lines in a file are processed, printing the maximum temperature value found

What is the benefit of MapReduce’s two-phase processing approach?

By separating processing into the map and reduce phases, MapReduce enables efficient parallel processing, improving scalability and speed

Why is network efficiency critical in Hadoop’s MapReduce?

Network efficiency is important because moving data across nodes is costly. MapReduce minimizes this by processing data locally in the map phase and only transmitting necessary results for the reduce phase
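
One way to picture "only transmitting necessary results" is local pre-aggregation on each node before anything crosses the network (Hadoop calls this optional step a combiner). The sketch below is a toy with made-up readings, and it works because taking a maximum can be done in stages; an average could not be combined this way directly.

```python
def local_max(pairs):
    """Keep only the highest temperature per year from (year, temp) pairs."""
    best = {}
    for year, temp in pairs:
        if year not in best or temp > best[year]:
            best[year] = temp
    return sorted(best.items())

def global_max(partials):
    """Combine the per-node results into the final answer."""
    return dict(local_max(pair for part in partials for pair in part))

# Hypothetical map output held on two different nodes.
node_a = [("1950", 0), ("1950", 20), ("1950", 10)]
node_b = [("1950", 25), ("1950", 15)]

sent_without = len(node_a) + len(node_b)                    # every pair is sent
sent_with = len(local_max(node_a)) + len(local_max(node_b))  # one pair per node
final = global_max([local_max(node_a), local_max(node_b)])
```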

Why doesn’t the awk script in Unix handle large datasets as efficiently as MapReduce?

The awk script is limited by the processing capacity of a single machine, whereas MapReduce can distribute and process data across multiple nodes, allowing for handling of larger datasets

How is a MapReduce job executed in Hadoop?

A MapReduce job is executed by calling submit() on a Job object or using waitForCompletion(), which submits the job if not already submitted and waits for it to finish
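
The relationship between the two calls can be mimicked with a toy Python class. This is not the Hadoop API (which is Java); `ToyJob`, its state names, and the `work` callable are illustrative assumptions that only mirror the behaviour described above: waiting submits the job first if that has not happened yet.

```python
class ToyJob:
    def __init__(self, work):
        self.work = work        # zero-argument callable standing in for the job
        self.state = "DEFINE"   # not yet submitted
        self.result = None
        self.submissions = 0

    def submit(self):
        """Hand the job to the cluster; allowed only once."""
        if self.state != "DEFINE":
            raise RuntimeError("job already submitted")
        self.submissions += 1
        self.state = "RUNNING"

    def wait_for_completion(self):
        """Submit if needed, block until done, and report success as a bool."""
        if self.state == "DEFINE":
            self.submit()
        try:
            self.result = self.work()
            self.state = "SUCCEEDED"
        except Exception:
            self.state = "FAILED"
        return self.state == "SUCCEEDED"

job = ToyJob(lambda: 42)
ok = job.wait_for_completion()  # submits implicitly, then waits
```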

What are the five main entities involved in a MapReduce job process?

The five entities are: the client, YARN Resource Manager, YARN Node Managers, MapReduce Application Master, and the distributed filesystem

What is the role of the YARN Resource Manager in a MapReduce job?

The Resource Manager allocates and coordinates computing resources across the cluster

Describe the role of the YARN Node Manager in MapReduce.

Node Managers launch and monitor computing containers on each node in the cluster

What does the MapReduce Application Master do?

It coordinates the execution of tasks within the MapReduce job, running tasks in containers allocated by the Resource Manager

What are the steps for job submission in a MapReduce process?

Step 1: The submit() method requests a new application ID from the Resource Manager.

Step 2: JobSubmitter calculates input splits and checks output specifications.

Step 3: Job resources (JAR file, configuration, input splits) are copied to the shared filesystem.

Step 4: JobSubmitter calls submitApplication() to officially submit the job

What are the steps in job initialization for MapReduce?

Step 5a: YARN scheduler allocates a container.

Step 5b: Resource Manager launches the Application Master's process in the allocated container under the Node Manager.

Step 6: Application Master (MRAppMaster) starts, responsible for managing the job's map and reduce tasks

What are the steps in task initialization for MapReduce?

Step 7: MRAppMaster retrieves input splits, creates map and reduce task objects, and assigns IDs.

Step 8: Application Master requests containers from the Resource Manager for each map and reduce task

What are the steps involved in task execution in a MapReduce job?

Step 9a: Resource Manager assigns containers, and the Node Manager starts tasks as YarnChild processes.

Step 9b: YarnChild retrieves configuration, JAR file, and necessary resources.

Step 10: YarnChild executes the map or reduce task, processing data as instructed

What are the five main entities in a MapReduce job, and what are their roles?

Client: Submits the job.

YARN Resource Manager: Allocates cluster resources and coordinates task scheduling.

YARN Node Manager: Launches and monitors containers on each node.

MapReduce Application Master: Manages and tracks tasks, requesting resources from the Resource Manager.

Distributed Filesystem (e.g., HDFS): Stores job files, input splits, and configurations for access by all components

major benefit of using hadoop

ability to handle failures and allowing job to complete successfully.

need to consider:

task

application master

node manager

resource manager

What is a common cause of task failure in MapReduce?

Task failure often occurs when user code in the map or reduce task throws a runtime exception, which is reported to the Application Master
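
A toy sketch of how a failed task attempt can be recorded and retried so the job still completes. The function name and the attempt limit of 4 are assumptions chosen for illustration; in a real cluster the Application Master would reschedule the attempt, preferably on a different node.

```python
def run_with_retries(task, max_attempts=4):
    """Run `task(attempt)`; on an exception, record the failure and try again."""
    failures = []
    for attempt in range(1, max_attempts + 1):
        try:
            result = task(attempt)
            return {"status": "SUCCEEDED", "result": result, "failures": failures}
        except Exception as exc:  # stands in for the report to the Application Master
            failures.append(f"attempt {attempt}: {exc!r}")
    return {"status": "FAILED", "result": None, "failures": failures}

def flaky_task(attempt):
    """Hypothetical user code that throws on its first two attempts."""
    if attempt < 3:
        raise RuntimeError("bad record")
    return "ok"

outcome = run_with_retries(flaky_task)
```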