CS3003 Software Engineering


89 Terms

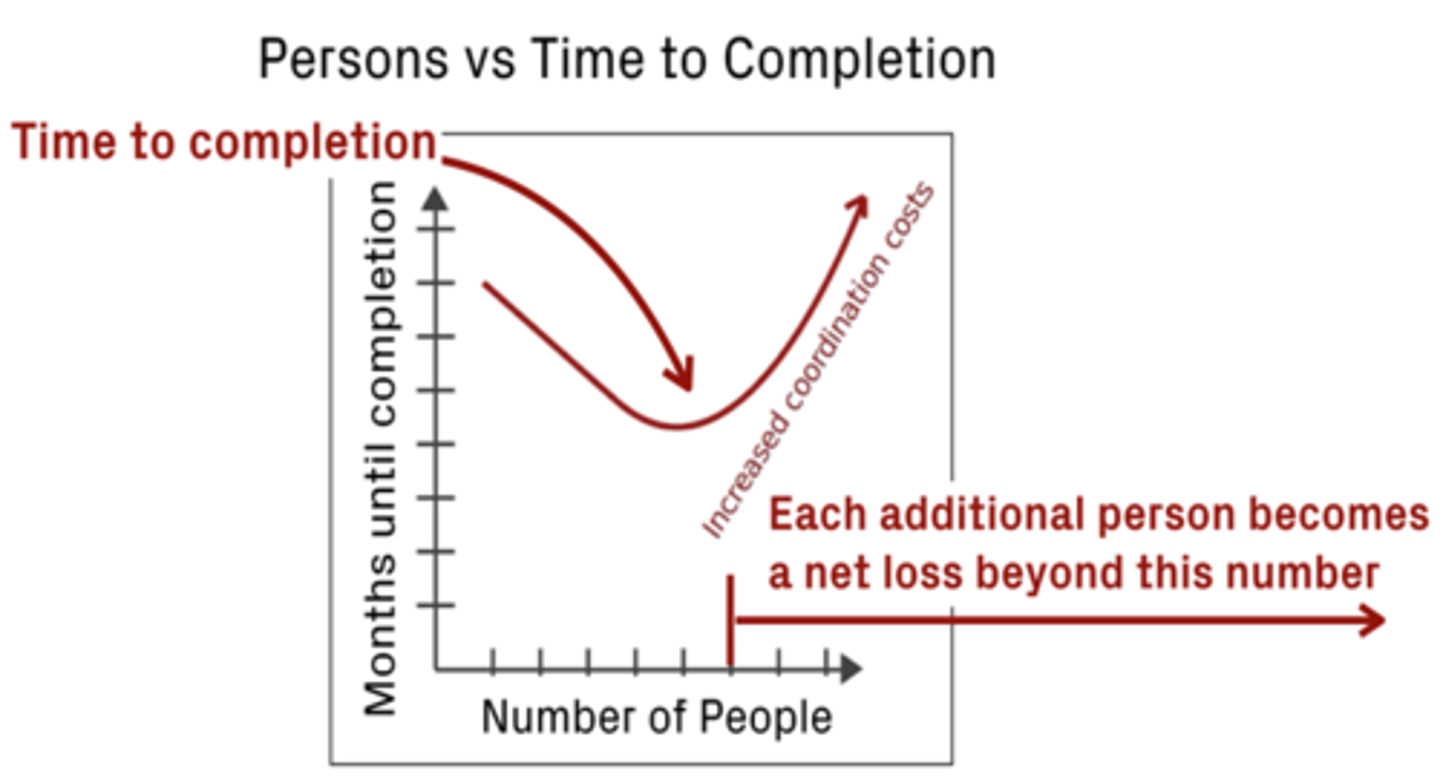

Brooks' Law

Adding manpower to an already late software project makes it later. This is due to:

• ramp-up time for the new staff

• the increased complexity of communication

Brooks' Law shines a light on the "Software Crisis", which reflects the IT industry's chronic inability to develop systems on time and on budget.

1. It highlights the need for proper training before staff are allocated to projects.

2. It emphasises the need to communicate properly in all forms (written and verbal) during a project.

3. Acknowledging Brooks' Law can lead to more efficient management of scarce "people" resources, which may save money.
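The "complexity of communication" point is often illustrated by counting pairwise communication channels in a team, n(n-1)/2 for n people. That formula is not stated in these notes; the sketch below assumes it, and the function name is hypothetical.

```python
def communication_channels(team_size: int) -> int:
    """Distinct pairwise communication paths in a team of `team_size` people."""
    if team_size < 0:
        raise ValueError("team_size must be non-negative")
    return team_size * (team_size - 1) // 2


# Channels grow much faster than headcount.
for size in (3, 5, 10):
    print(size, "people ->", communication_channels(size), "channels")
```

Going from 5 to 10 people doubles the headcount but more than quadruples the channels (10 to 45), which is one reason late additions slow a project down.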

Software Maintenance

The process of modifying a software system or component to correct bugs, improve performance or other attributes OR adapt to a changed environment.

What is the idea of "design for maintenance"? Why is maintenance during development important?

When you design software, you take into account what may lie in the future → ease of maintenance.

• higher quality code means less corrective maintenance

• anticipating changes means less adaptive maintenance

• better development practices mean less perfective maintenance

• better tuning to users' needs means less maintenance overall (avoids code bloat and code smells)

What is code bloat?

The production of program code that is perceived as unnecessarily long, slow or otherwise wasteful of resources. It can cause code smells to emerge.

What factors affect maintenance and evolution?

1) team stability

2) poor development practices (quality)

3) staff skills

4) program age + structure

5) amount of technical debt

What factors help manage software evolution?

1) change management : ensures change requests are implemented rationally - they are analysed and prioritised by considering the urgency, value, impact, benefit and cost. XP

2) version control: a repository of all versions of the system and the subsequent changes to them. This makes it possible to track and control changes, which is a major issue as many change requests are generated. Version control is important to prevent changes being made to the wrong version, prevent the wrong version being delivered to the user, roll back a change, help fault identification, control concurrent changes made by developers and track the evolution of the system.

What is version control? and why is version control important?

A repository of all versions of the system and the subsequent changes to them. This makes it possible to track and control changes, which is a major issue as many change requests are generated. Version control is important to prevent changes being made to the wrong version, prevent the wrong version being delivered to the user, roll back a change, help fault identification, control concurrent changes made by developers and track the evolution of the system.

What is technical debt?

The result of not doing maintenance when you should: the work comes back to "haunt" you later, when it is more expensive and difficult. For example, an incorrect requirement found at the testing stage rather than at the requirements stage can be up to 1000x more expensive to fix.

What is evolution of software?

The process of developing software and then maintaining and updating it after release by fixing bugs, improving performance or adapting it to a changed environment.

What are the different types of maintenance?

1) adaptive → adapting to changes in the environment

2) corrective → fixing errors (human), bugs, faults and failures

3) perfective → refactoring

4) preventative → "future proofing", preventing problems from arising

Why is maintenance difficult?

People's styles of writing code are different and thus difficult to follow. Comments help in understanding code; however, they can indicate a bad smell! Maintenance staff are rarely the development staff. People move jobs, so knowledge is lost. Our code memory is short-lived: we believe we will remember a certain thing but don't! Coders hate doing documentation.

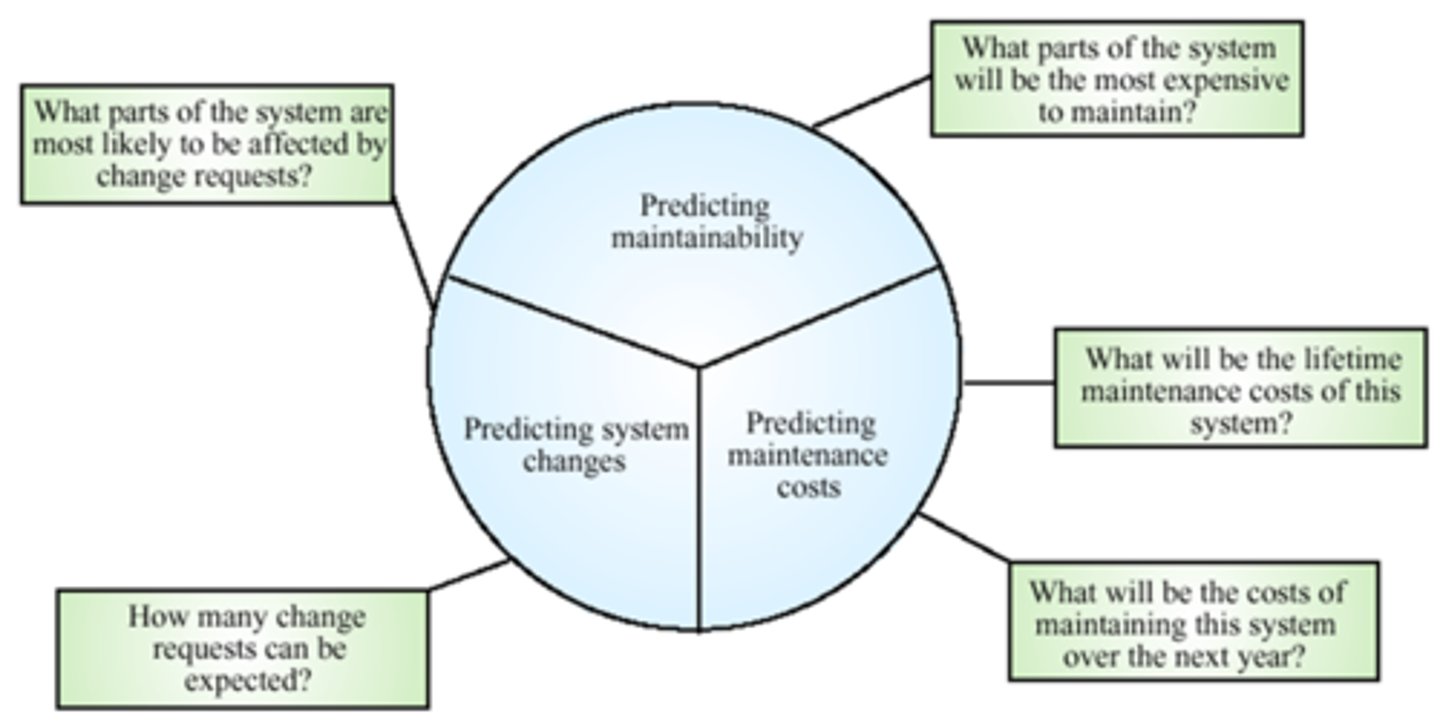

Maintenance prediction

APR

Automatic program repair.

Software evolution theory: Lehman's laws

1) continuing change

2) continuing growth

3) declining quality

4) increasing complexity

5) system feedback - multi-level, multi-loop, multi-agent

Mob Programming

A software development approach where the whole team works on the same thing, at the same time, in the same space, and at the same computer. This builds on the principles of lean manufacturing, lean software development and Extreme Programming (XP).

Covers:

-definition of requirements

-designing

-coding

-testing

-deploying software

-working with customer + business experts

pros: overhead removed, individual weaknesses overcome, stress levels dropped, increased knowledge sharing and continuous learning, higher code quality, continuous work (even if 1 person is sick or laid off etc), shared responsibility for decision making, the promotion of team bonding through the joint solution of a task.

cons: high effort to solve a task together; possibly less efficient, as people may let others take over and slack off themselves (i.e. hiding or deferring); louder voices can drown out quieter people such as introverts; time lag, technical difficulties or time differences when done remotely. Close proximity means that if one person is sick, all can get sick.

Defensive programming

a technique where you assume the worst for all inputs. A form of preventative maintenance.

1) never assume anything about input

2) use proper coding standards

3) keep code simple + reuse wherever possible
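A minimal sketch of "never assume anything about input", assuming a hypothetical helper `parse_age` that receives untrusted text from a form. The name and the 0-150 range are illustrative choices, not taken from the notes.

```python
MAX_AGE = 150  # illustrative upper bound, an assumption


def parse_age(raw: object) -> int:
    """Convert untrusted input into an age in years, or raise ValueError."""
    # Assume the worst: wrong type, stray whitespace, non-digits, absurd values.
    if not isinstance(raw, str):
        raise ValueError("age must be supplied as text")
    text = raw.strip()
    if not (text.isascii() and text.isdigit()):
        raise ValueError(f"age is not a whole number: {raw!r}")
    age = int(text)
    if age > MAX_AGE:
        raise ValueError(f"age out of range: {age}")
    return age


print(parse_age(" 42 "))  # 42
```

Every rejection path raises the same exception type, so callers only need one handler for bad input.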

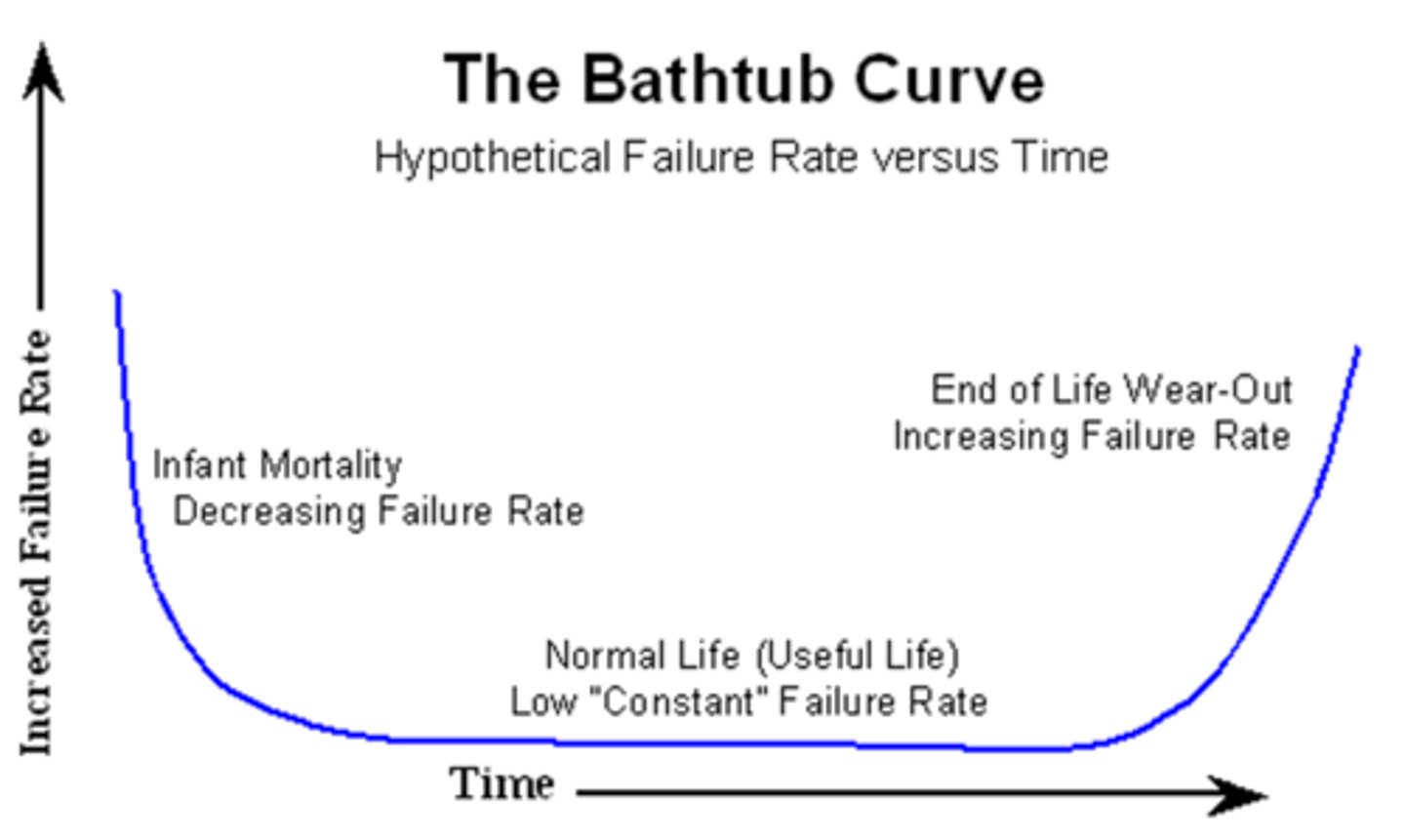

Bathtub curve

the typical failure rate of a product over time.

infant mortality → constant failure rate → wear-out failures

Death march

A project which is believed by its participants to be destined for failure or that requires a stretch of unsustainable overwork → the project marches to its death as its members are forced by superiors to continue against their better judgement.

Software Crisis

• Projects run over budget

• Projects not delivered on time

• Software ran inefficiently

• Low quality software

• Software didn't meet requirements

• Never delivered

• Unmanageable, code difficult to maintain

Scope/Feature Creep

1) when the boundaries of a project, i.e. what a system is supposed to do, are changed after launch. This can be due to poor design and miscommunication during software design + development.

2) when new features are continually asked for after launch

C&K Metrics

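1) WMC - weighted methods per class

2) DIT - depth of inheritance tree

3) NOC - number of children

4) CBO - coupling between objects

5) LCOM - lack of cohesion of methods: how well intra-connected the fields and methods of a class are.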

Which software metric measures software size, and why is it important?

LOC - lines of code.

It is useful for understanding the cost + effort required to maintain etc.

However, this measure varies depending on how comment lines, blank lines and lone "{" / "}" lines are counted, on people's coding style (compact + efficient vs longer but more understandable) and on the language, as different languages vary in size.

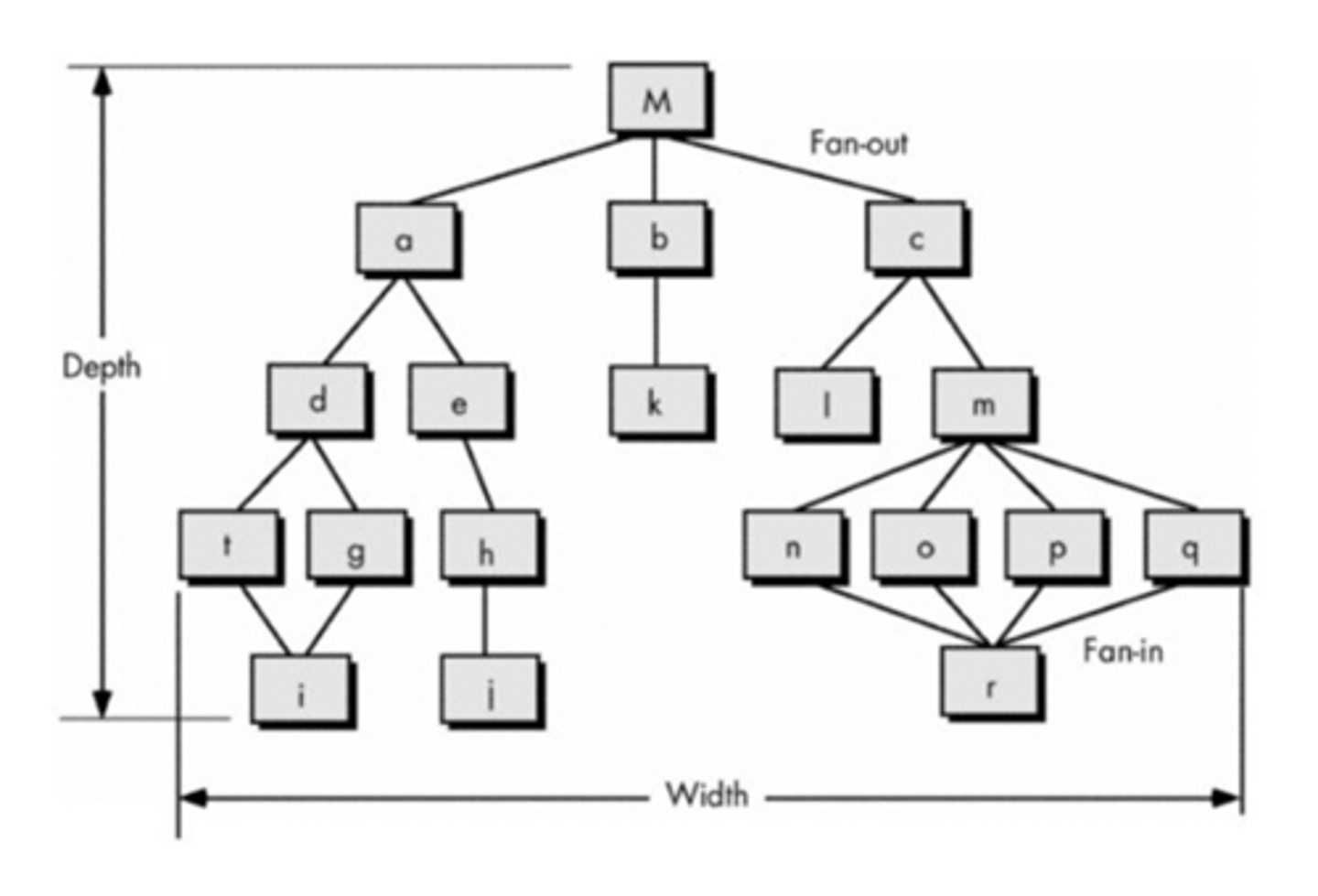

What are the software metrics used to understand software structure?

Fan-in = number of modules calling to a module.

Fan-out = number of modules called by a module.

FI(a) = 1

FO(a) = 2

These represent information flow and are an indicator of coupling and maintainability. They help identify critical stress points of the system as well as design problems.
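A small sketch of how fan-in and fan-out could be counted from a call graph. The graph below is hypothetical (the diagram on the card is not reproduced here); it is chosen only so that module `a` gives FI(a) = 1 and FO(a) = 2 as on the card.

```python
# Hypothetical call graph: each module maps to the modules it calls.
calls = {
    "a": ["b", "c"],
    "b": ["c"],
    "c": [],
    "d": ["a"],
}


def fan_out(graph: dict, module: str) -> int:
    """Number of distinct modules called by `module`."""
    return len(set(graph.get(module, [])))


def fan_in(graph: dict, module: str) -> int:
    """Number of distinct other modules that call `module`."""
    return sum(
        1
        for caller, callees in graph.items()
        if caller != module and module in callees
    )


print("FI(a) =", fan_in(calls, "a"))   # 1 (only d calls a)
print("FO(a) =", fan_out(calls, "a"))  # 2 (a calls b and c)
```

In this toy graph `c` has the highest fan-in, so a change to `c` would ripple to the most callers.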

Why are software metrics important? What are they used for ?

What are the problems with metrics?

As systems evolve, they decay/ erode/ degrade. Therefore, a method of capturing/ quantifying the extent of the erosion and to single out what/where the problem lies is by measuring software.

By measuring software we can 'understand' the data and establish quality targets and guidelines. This allows us to control and predict outcomes and thus change processes, and it encourages 'improvement'.

Furthermore, they can help understand complexity, structure, size which can therefore identify:

• candidate modules for inspection

• areas where redesign may be appropriate

• areas where additional documentation is required

• areas where retesting may be required

• areas for refactoring

However, there are multiple issues such as

• tendency for professionals to display over-optimism and over-confidence, whereas the metrics are only indicators

• can have a negative impact on developers' wellbeing and productivity

• academic/ industry divide: each can be interested in different aspect

• Never use metrics to threaten individuals or teams nor to appraise individuals

Why are complexity metrics useful?

They can help identify:

• candidate modules for inspection

• areas where redesign may be appropriate

• areas where additional documentation is required

• areas where retesting may be required

• areas for refactoring

Henry & Kafura's complexity

LOC × (fan-in × fan-out)^2
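Reading the formula as length multiplied by the square of (fan-in × fan-out), a worked example might look like this. The function name and the sample numbers are hypothetical.

```python
def henry_kafura(loc: int, fan_in: int, fan_out: int) -> int:
    """Henry & Kafura information-flow complexity: LOC * (fan_in * fan_out)^2."""
    return loc * (fan_in * fan_out) ** 2


# A 100-line module with fan-in 1 and fan-out 2:
print(henry_kafura(100, 1, 2))  # 100 * (1 * 2)^2 = 400

# Doubling fan-out quadruples the score, because the flow term is squared:
print(henry_kafura(100, 1, 4))  # 100 * (1 * 4)^2 = 1600
```

A module with fan-in or fan-out of zero scores 0 under this formula, however long it is.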

McCabe's CC

Based on control flow graph and is useful for identifying white box test cases.

CC = (#edges - #nodes) + 2, for a control flow graph with a single entry and a single exit
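As a worked example, the control flow graph of a single if/else has four nodes and four edges, giving a complexity of 2, i.e. two independent paths to cover with white box tests. The node names below are hypothetical labels.

```python
def cyclomatic_complexity(edges: list) -> int:
    """McCabe's CC = E - N + 2 for one connected control flow graph."""
    nodes = {node for edge in edges for node in edge}
    return len(edges) - len(nodes) + 2


# Control flow graph of: if cond: then_branch else: else_branch
if_else_cfg = [
    ("cond", "then"),
    ("cond", "else"),
    ("then", "exit"),
    ("else", "exit"),
]
print(cyclomatic_complexity(if_else_cfg))  # 4 - 4 + 2 = 2

# Straight-line code has exactly one path.
straight_line_cfg = [("start", "middle"), ("middle", "end")]
print(cyclomatic_complexity(straight_line_cfg))  # 2 - 3 + 2 = 1
```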

LCOM

Cohesiveness of methods within a class is desirable, since it promotes encapsulation. Lack of cohesion implies classes should probably be split into two or more subclasses. Low cohesion increases complexity, thereby increasing the likelihood of errors during the development process.
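One way to put a number on this is Chidamber and Kemerer's original count: take every pair of methods, let P be the pairs that share no fields and Q the pairs that share at least one, and report P - Q (or 0 if that is negative). The sketch below assumes that definition, which the notes do not spell out, and the class being measured is hypothetical.

```python
from itertools import combinations


def lcom(method_fields: dict) -> int:
    """C&K LCOM: pairs sharing no fields minus pairs sharing a field, floored at 0."""
    disjoint_pairs = 0
    sharing_pairs = 0
    for fields_a, fields_b in combinations(method_fields.values(), 2):
        if set(fields_a) & set(fields_b):
            sharing_pairs += 1
        else:
            disjoint_pairs += 1
    return max(disjoint_pairs - sharing_pairs, 0)


# Hypothetical class: which fields each method touches.
account = {
    "deposit": {"balance"},
    "withdraw": {"balance"},
    "print_address": {"address"},
}
print(lcom(account))  # 2 disjoint pairs - 1 sharing pair = 1
```

Here `print_address` shares nothing with the other two methods, which hints that address handling belongs in a class of its own.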

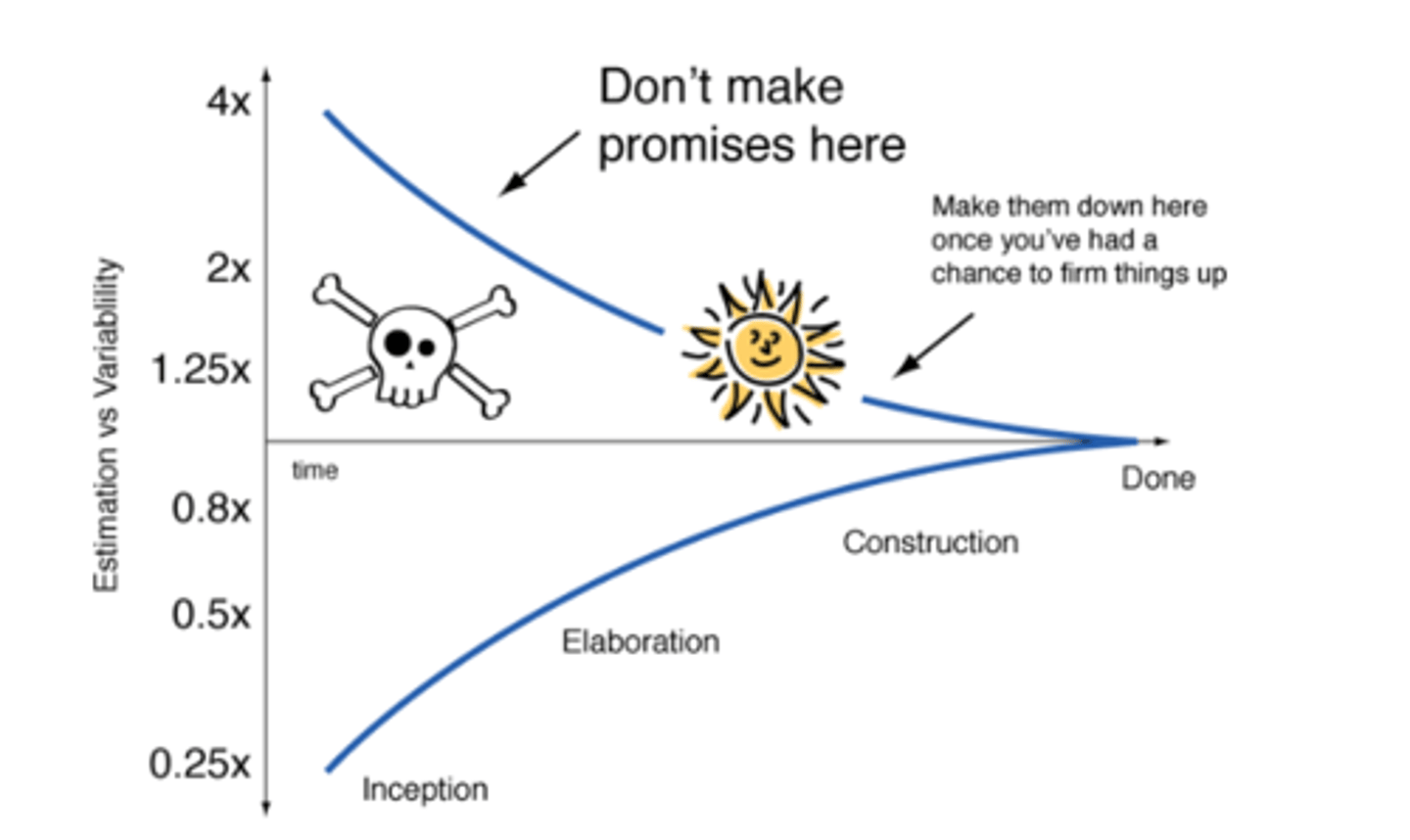

McConnell cone of uncertainty

software estimates vs time

Business quality vs system quality of a system

Assess quality using metrics, then place the system in one of 4 corners and evaluate: scrap, fix (i.e. refactor), keep, or improve value/scrap.

What are the test based metrics?

1) how many tests per requirement

2) how many test cases still to be designed

3) how many test cases executed

4) how many passed/failed

5) how many bugs identified and what is their severity

Why does software degrade? and what can be done?

• bugs lead to improper maintenance and rushed jobs

• as code size grows, coupling tends to grow, thus complexity increases

• as system ages, technical debt rises

Bathtub curve!

Refactoring helps maintain the constant-failure plateau and prolongs it by preventing the wear-out failure rate from rising quickly and steeply.

Why do developers write bad code?

• changing requirements, which increase the difficulty of updating code and lead to less optimal designs due to misunderstood goals/needs

• new requirements, which cause disruption and possibly shortcuts taken to meet the deadline

•time & money

•learning on the job i.e. learning a better way after doing it once before

What is refactoring?

When should it be done?

When should it not be done?

What does it do?

Why refactoring isn't done when it should be?

The process of improving the internal structure of an existing software system without altering its external behaviour. Thus, constant regression testing is vital to ensure external behaviour is not altered. It is a form of preventative and perfective maintenance.

This is important in XP and TDD. It should be done if a bad smell is found, when a bug is fixed or during code review, and to ensure software does not degrade quickly, i.e. end up at the wear-out stage of the bathtub curve. The amount of overhead/communication depends on the size of the refactoring. Communication avoids two people simultaneously refactoring the same code.

One should not refactor archived stable code, nor someone else's code unless permission has been granted or the code has come to belong to you.

PROS: reduces duplicate code, coupling, complexity, technical debt and bugs, and improves cohesion, maintainability and understandability. It can be done at any time.

CONS: cost; complex, as regression testing is required; may break the code; there is no evidence or guarantee that it will improve the code; and it may cause developers to go down a rabbit hole.

Often not done due to time or cost, because new features are more important, because it might break something, or because developers want to get promoted and think refactoring is not praised, so they leave it to be someone else's problem.

What are the refactoring methods?

1) extract method → when repeated code blocks should be made into their own method

2) move method to another class

3) replace magic number

4) extract class → when a class is doing work that should be done by 2; however, this is more difficult and time consuming than extract method, and harder to spot

5) remove dead code

6) encapsulate field → a public declaration is changed to private

7) consolidate duplicate conditional code fragments
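A before/after sketch of two of these refactorings, replace magic number and extract method, applied to a hypothetical VAT calculation (all names and the 20% rate are illustrative). The final checks act as a tiny regression test: external behaviour must be identical before and after.

```python
# Before: the magic number 0.2 and the VAT calculation are duplicated.
def invoice_total_before(net: float) -> float:
    return net + net * 0.2


def quote_total_before(net: float, discount: float) -> float:
    discounted = net - discount
    return discounted + discounted * 0.2


# After: the magic number is a named constant (replace magic number)
# and the repeated calculation lives in one place (extract method).
VAT_RATE = 0.2


def add_vat(amount: float) -> float:
    return amount + amount * VAT_RATE


def invoice_total(net: float) -> float:
    return add_vat(net)


def quote_total(net: float, discount: float) -> float:
    return add_vat(net - discount)


# Regression check: same inputs, same outputs.
assert invoice_total(100.0) == invoice_total_before(100.0)
assert quote_total(100.0, 10.0) == quote_total_before(100.0, 10.0)
```

If the VAT rate changes, the refactored version needs one edit instead of two.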

What is a code smell?

1) large class

2) long parameter list

3) lazy class (no longer 'pays' its way, i.e. either was downsized after refactoring or represented planned functionality that did not pan out)

4) data class (only gets & sets)

5) solution sprawl (quickly adding a feature to a system without adjusting or considering the ripple and knock on effect of local changes)

6) refused bequest (inheritance not used as it should)

7) comments (often hide complicated code that needs restructuring, comments should explain why something is there as opposed to what it does...)

8) conditional complexity

If not addressed (i.e. left as technical debt!), a code smell will turn into a code stench, and this is how software decays quickly.

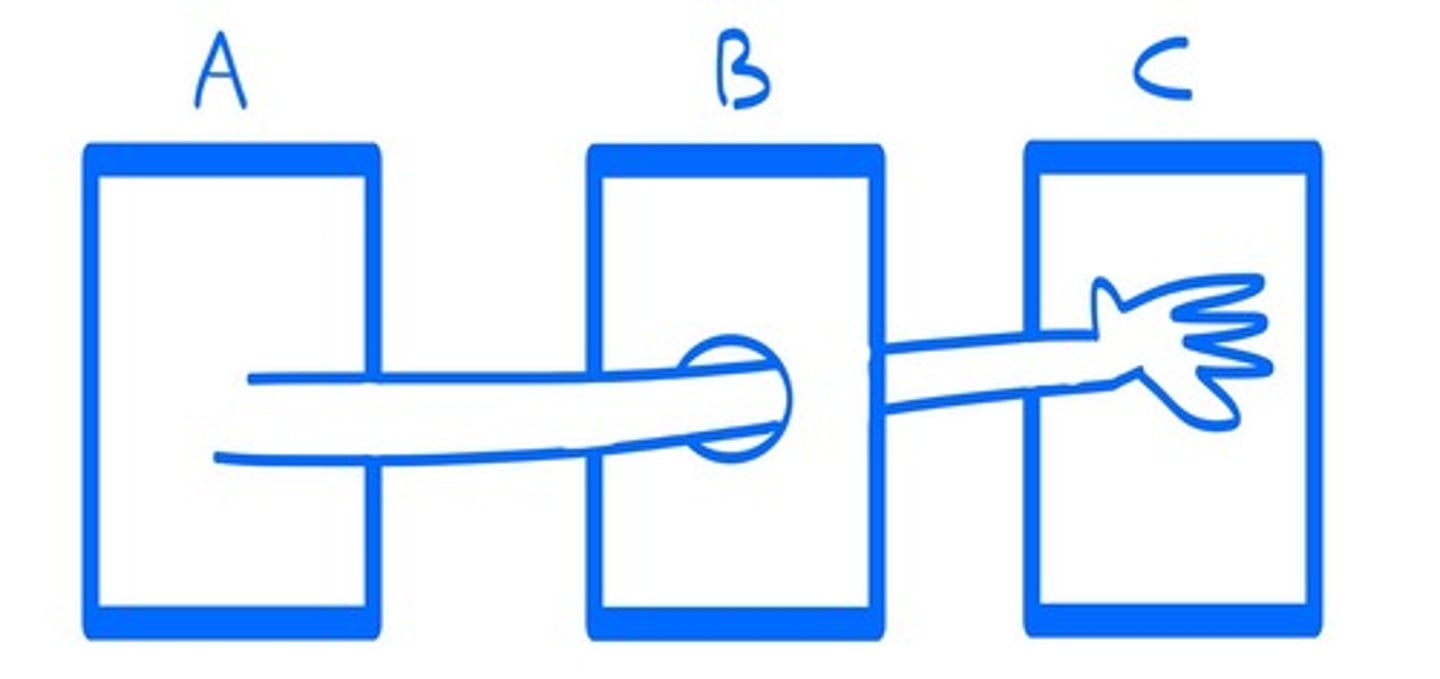

What is the Law of Demeter (LoD)? And what does it help with?

A design guideline for developing software that helps prevent coupling - the principle of least knowledge.

Each class should have limited knowledge about other classes, only classes closely related.

A and C should not talk.

It helps ensure locality of change, prevents chain of responsibility, reduces dependencies and helps build components that are loosely coupled.
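A small sketch of "A and C should not talk", with hypothetical classes standing in for A (`Driver`), B (`Car`) and C (`Engine`). The driver only knows about the car; the car hides the engine behind its own methods.

```python
class Engine:  # C
    def __init__(self) -> None:
        self.running = False

    def start(self) -> None:
        self.running = True


class Car:  # B: the only class that knows an Engine exists
    def __init__(self) -> None:
        self._engine = Engine()

    def start(self) -> None:
        self._engine.start()

    def is_running(self) -> bool:
        return self._engine.running


class Driver:  # A
    def __init__(self, car: Car) -> None:
        self._car = car

    def drive(self) -> None:
        # A violation would reach through B into C:
        #     self._car._engine.start()
        # Following the guideline, A talks only to its direct collaborator.
        self._car.start()


car = Car()
Driver(car).drive()
print(car.is_running())  # True
```

If `Engine` is later replaced, only `Car` has to change, which is the locality of change the card describes.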

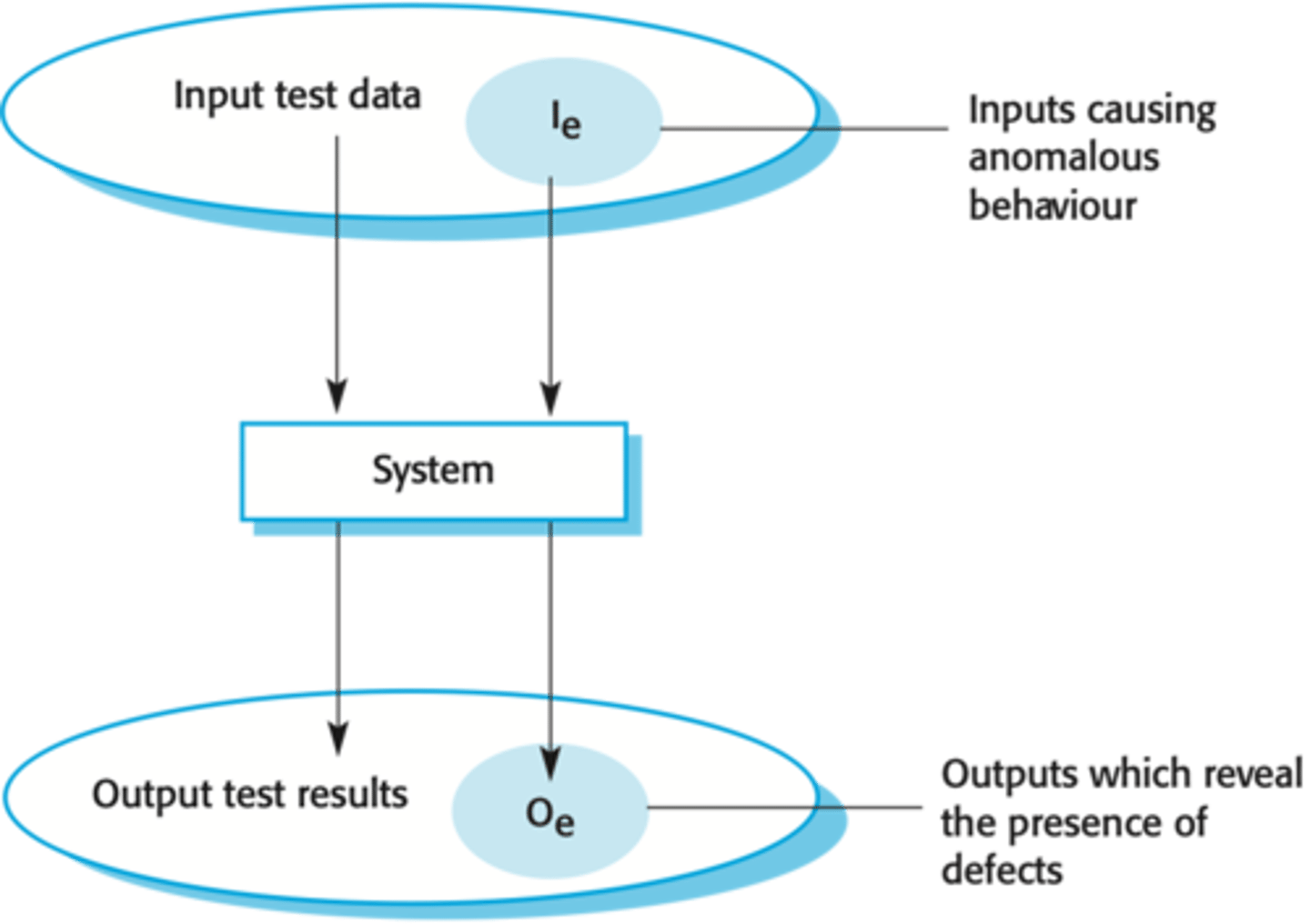

Validation testing

You expect the system to perform correctly using a given set of test cases that reflect the system's expected use. Demonstrates that software meets its requirements.

Successful tests show that system operates as it should.

Defect testing

Test cases are designed to expose defects and are therefore deliberately obscure and don't reflect the system's normal use. Discovers where the software behaviour is incorrect, undesirable or doesn't conform to the spec, e.g. system crash, unwanted interaction with other systems.

Successful tests make the system behave incorrectly and thus expose defects.

Program testing model

What is program testing?

This includes validation testing and defect testing.

-expose presence of errors, program defects, anomalies or info about the program's non-functional attributes

-uses artificial data

-v&v process

What is the difference between verification vs validation? and what is their aim?

Verification = are we building the product right? i.e. does it conform to the spec?

Validation = are we building the right product? i.e. does it do what the user actually needs?

The aim of V&V is to establish confidence that the system is 'fit for purpose'.

This depends on system's purpose (how critical the software is), user expectation (high/low user expectation of the software - who is the user?!) and marketing environment (may be more important to get the software to market than finding defects in the program).

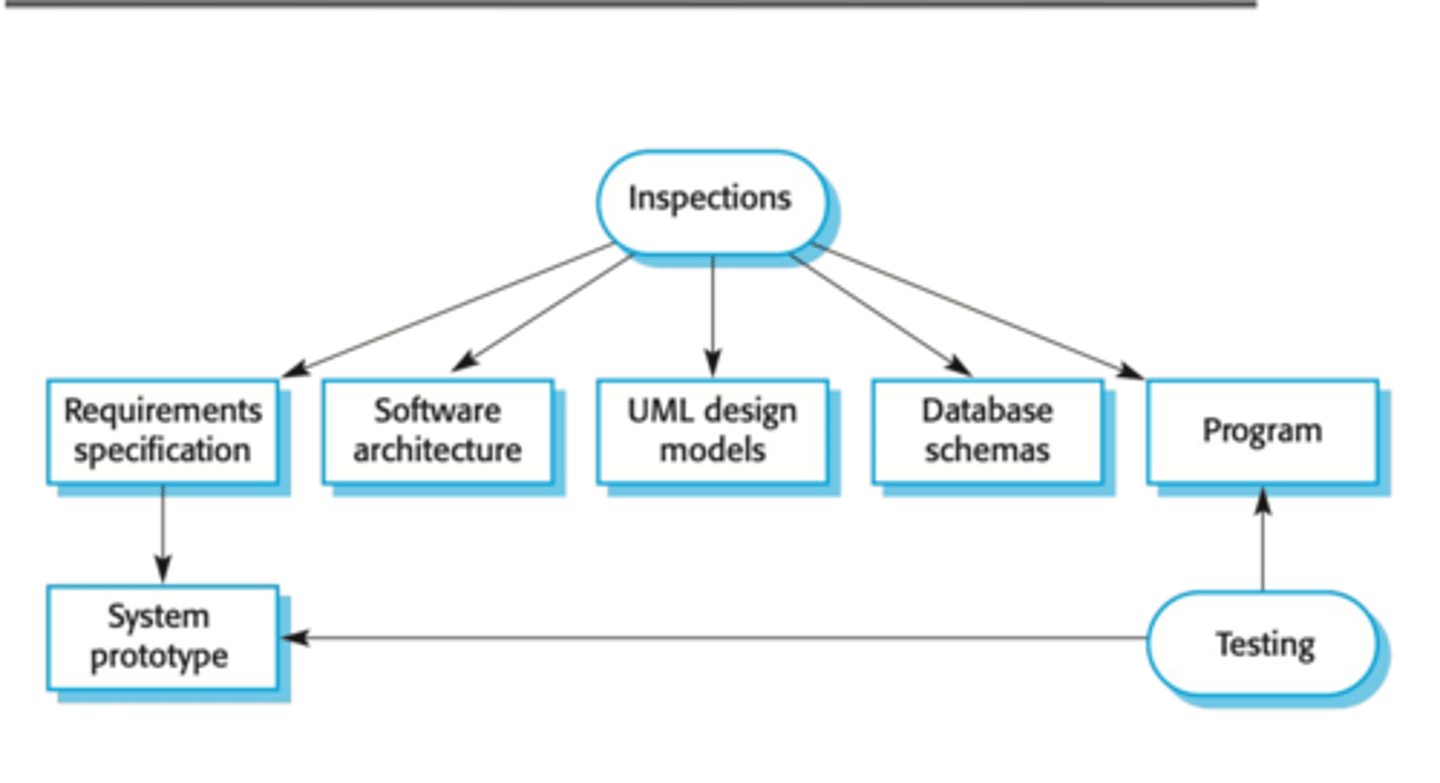

Software inspection and its pros? cons?

The analysis of static system representations to discover problems. It doesn't require execution of the system, so it cannot check non-functional characteristics.

e.g. examining source code or any representation of the system, i.e. documents and deliverables (reqs, design, data, etc)

Pros: incomplete versions can be inspected at no extra cost, whilst testing an incomplete version requires the creation of specialised tests. Other quality attributes can be evaluated, such as portability, maintainability, standards, etc. In testing, errors can mask other errors; however, as inspection is a static verification process, there's no worry of errors interacting with other errors.

Cons: checks conformance with the spec, not with the customer's real requirements

Software testing

Exercising and observing product behaviour (dynamic verification)

What is the difference between inspection and testing?

One is a static process where the system isn't executed and instead source code and other system representations in the form of documents and deliverables such as design, reqs, data are examined.

The other is a dynamic verification process where the system's behaviour is exercised and observed.

inspection vs testing diagram

What are the 3 stages of testing?

1) development (tests during development to discover bugs and defects)

2) release (testing complete version before release by a separate team)

3) user (users test software in their environment)

What are the 3 types of testing within development?

1) unit (methods or object classes - defect testing process)

2) component (several individual units i.e. multiple classes)

3) system (some or all components are integrated and system is tested as a whole)

What does complete test coverage of an object class involve?

• testing all operations associated with an object

• setting and interrogating all object attributes

• exercising the object in all possible states

Inheritance makes it more difficult to design object class tests as the info to be tested isn't localised.

What are the 2 types of unit test case?

•The first of these should reflect normal operation of a program and should show that the component works as expected i.e. validation testing

•The other kind of test case should be based on testing experience of where common problems arise. It should use abnormal inputs to check that these are properly processed and do not crash the component i.e. defect testing

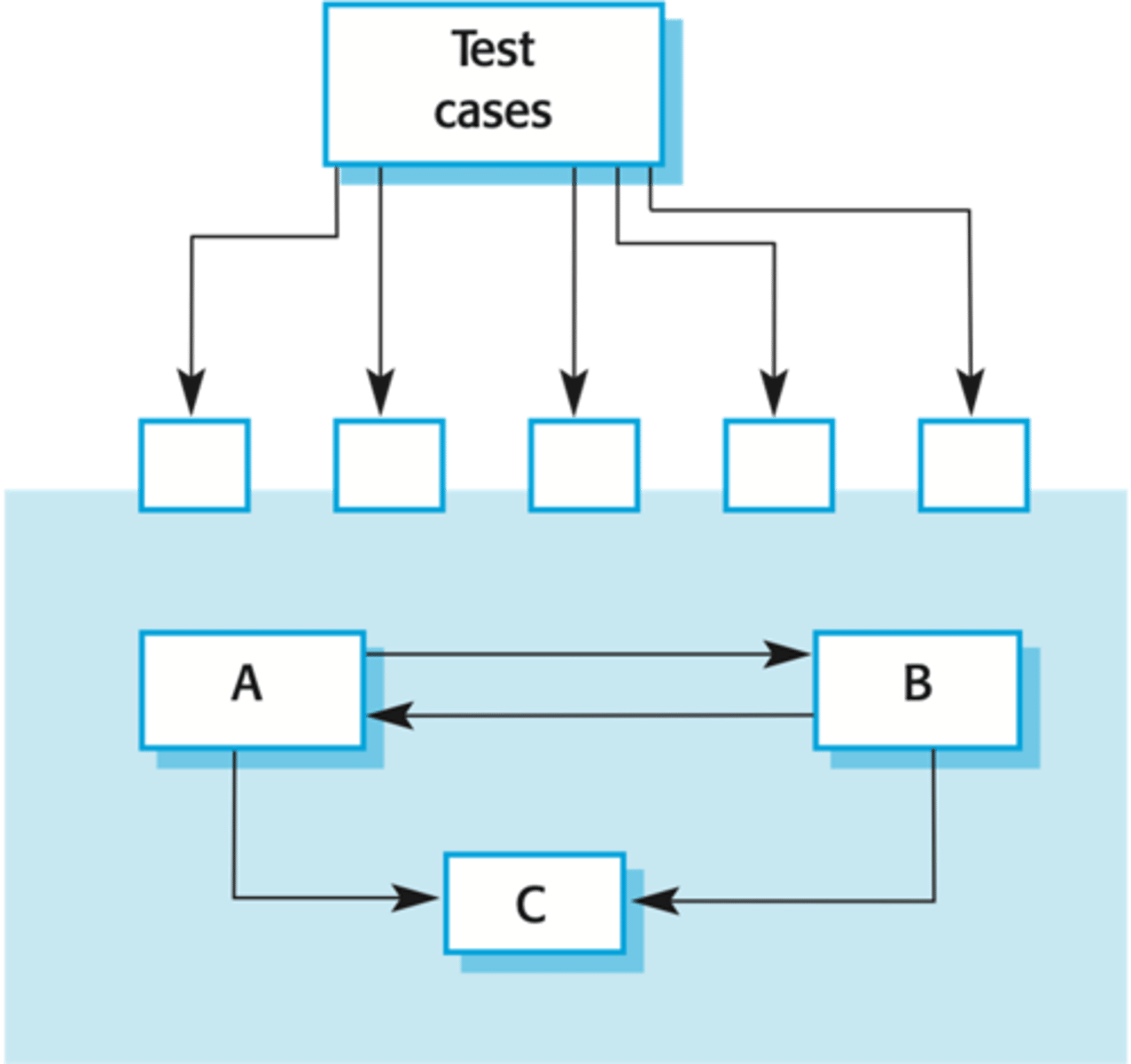

What is interface testing? What are the types?

Testing the interfaces of components (which are integrated units) in order to assess functionality and detect faults due to interface errors, invalid assumptions about interfaces or timing errors (different speeds or out-of-date information)

1) parameter = data passed from one method to another (test with parameters set to the extreme ends of their ranges)

2) shared memory = memory simultaneously accessed by multiple programs (vary order of components being activated)

3) procedural = encapsulates a set of procedures

4) message passing= requesting services (stress testing)

testing guidelines

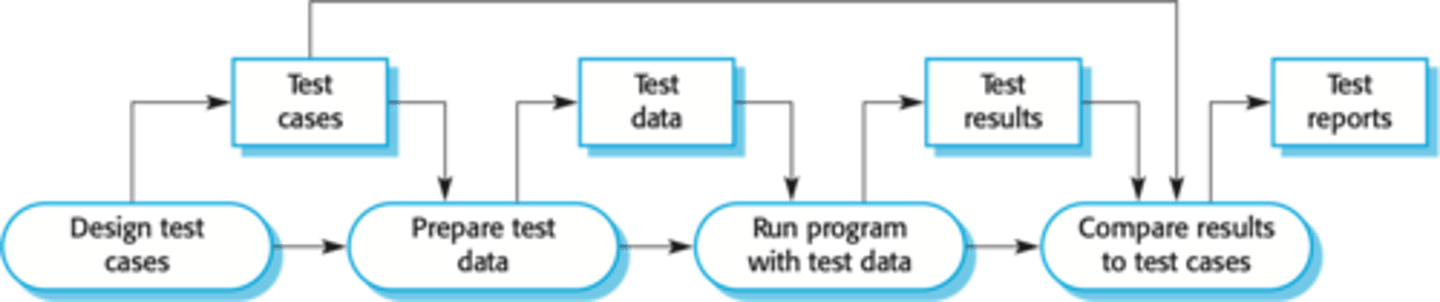

What are the components of an automated test?

1) set-up: initialise the system with the test case, i.e. the input and expected output

2) call: call the object or method to be tested

3) assertion: compare the result of the call with the expected result
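The three parts can be seen in a small test written with Python's standard `unittest` module. The function under test, `clamp`, is a hypothetical example; the first test uses normal input and the second uses abnormal input in the spirit of defect testing.

```python
import unittest


def clamp(value: int, low: int, high: int) -> int:
    """Hypothetical unit under test: restrict `value` to the range [low, high]."""
    if low > high:
        raise ValueError("low must not exceed high")
    return max(low, min(value, high))


class ClampTest(unittest.TestCase):
    def test_value_above_range_is_clamped(self):
        # 1) set-up: the input and the expected output
        value, low, high = 15, 0, 10
        expected = 10
        # 2) call: invoke the method being tested
        actual = clamp(value, low, high)
        # 3) assertion: compare the result with the expected result
        self.assertEqual(expected, actual)

    def test_inverted_bounds_are_rejected(self):
        # Abnormal input should be rejected cleanly rather than mishandled.
        with self.assertRaises(ValueError):
            clamp(5, 10, 0)


# Run the tests explicitly so the outcome is available as a value.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ClampTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print("all passed:", result.wasSuccessful())
```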

Interface testing diagram

What are the 3 interface errors?

1) misuse

2) misunderstanding (incorrect assumption about behaviour)

3) timing

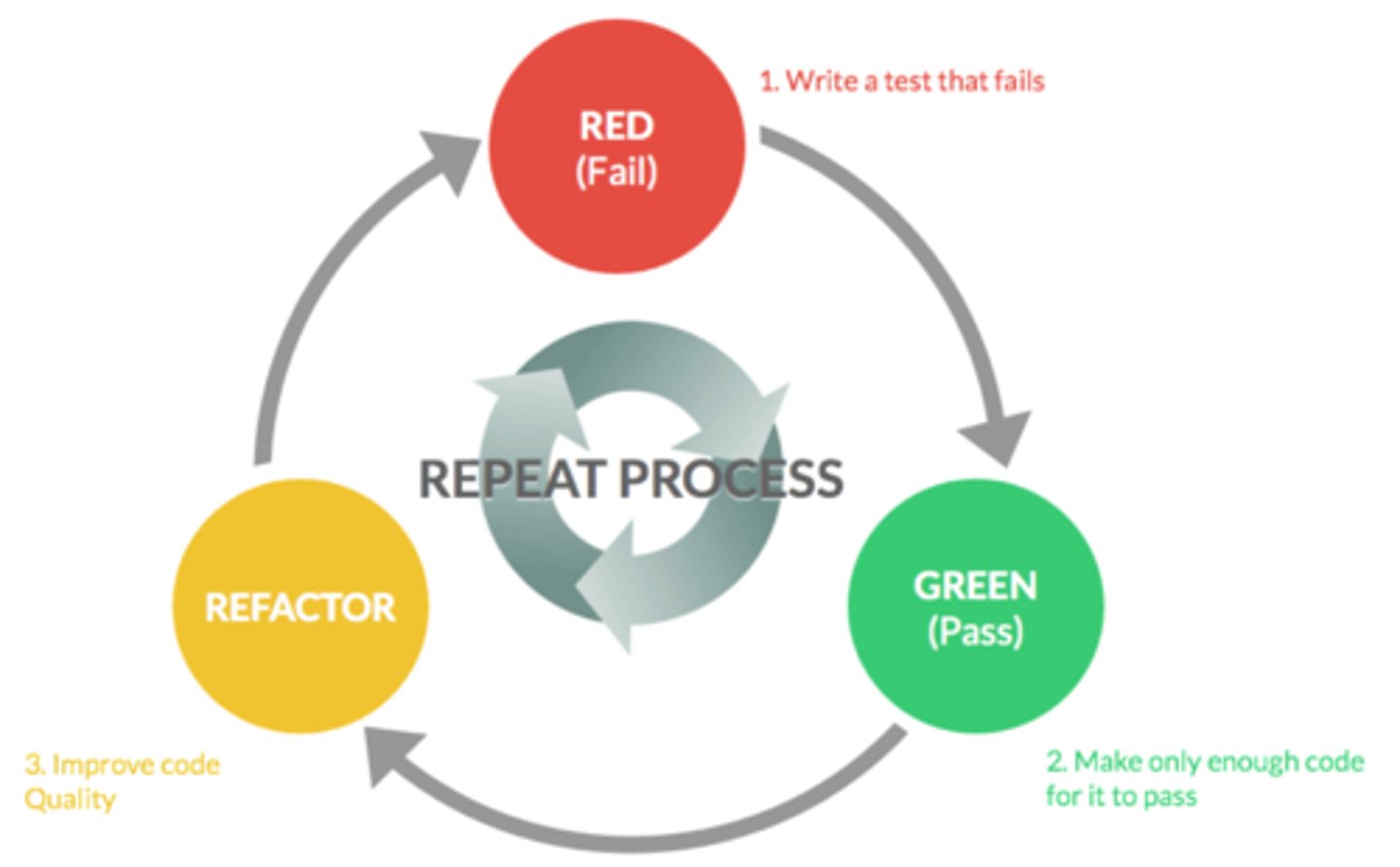

What is Test Driven Development (TDD)?

•An approach to program development in which you interleave testing and code development.

•Tests are written before code and 'passing' the tests is the critical driver of development.

•Code is developed incrementally, along with a test. You don't move onto next increment until the code that you have developed passes its test.

•Introduced as part of agile methods such as Extreme Programming (XP), but it can also be used in plan-driven development.

- Start by identifying the increment of functionality that is required. This should normally be small and implementable in a few lines of code.

- Write a test for this functionality and implement this as an automated test.

- Run the test, along with all other tests that have been implemented. Initially, you have not implemented the functionality so the new test will fail.

- Implement the functionality and re-run the test.

- Once all tests run successfully, you move on to implementing the next chunk of functionality.
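The cycle above can be sketched in a few lines. The increment chosen here, a hypothetical `is_leap_year` function, is only an illustration: the test is written first, fails because nothing is implemented yet, and passes once the smallest sufficient implementation is added.

```python
# Write the test before the functionality exists.
def test_is_leap_year():
    assert is_leap_year(2024)
    assert not is_leap_year(2023)
    assert not is_leap_year(1900)  # century years are not leap years...
    assert is_leap_year(2000)      # ...unless divisible by 400


# Run it: it fails, because is_leap_year has not been written yet.
try:
    test_is_leap_year()
except NameError:
    print("red: test fails, functionality not implemented")


# Implement just enough functionality to make the test pass.
def is_leap_year(year: int) -> bool:
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


# Re-run the test; only when it passes do we move to the next increment.
test_is_leap_year()
print("green: test passes")
```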

TDD diagram

TDD benefits

• code coverage (each code segment has at least one test associated with it)

• regression testing is developed incrementally as a program is developed.

• simplified debugging (obvious where problem lies - in the new code)

• system documentation (the tests are a form of documentation that describes what the code is doing)

3 laws of TDD

1) You may not write production code unless you've first written a failing unit test

2) Do not write more of a unit test than is sufficient to fail

3) Do not write more production code than is sufficient to make the failing test pass

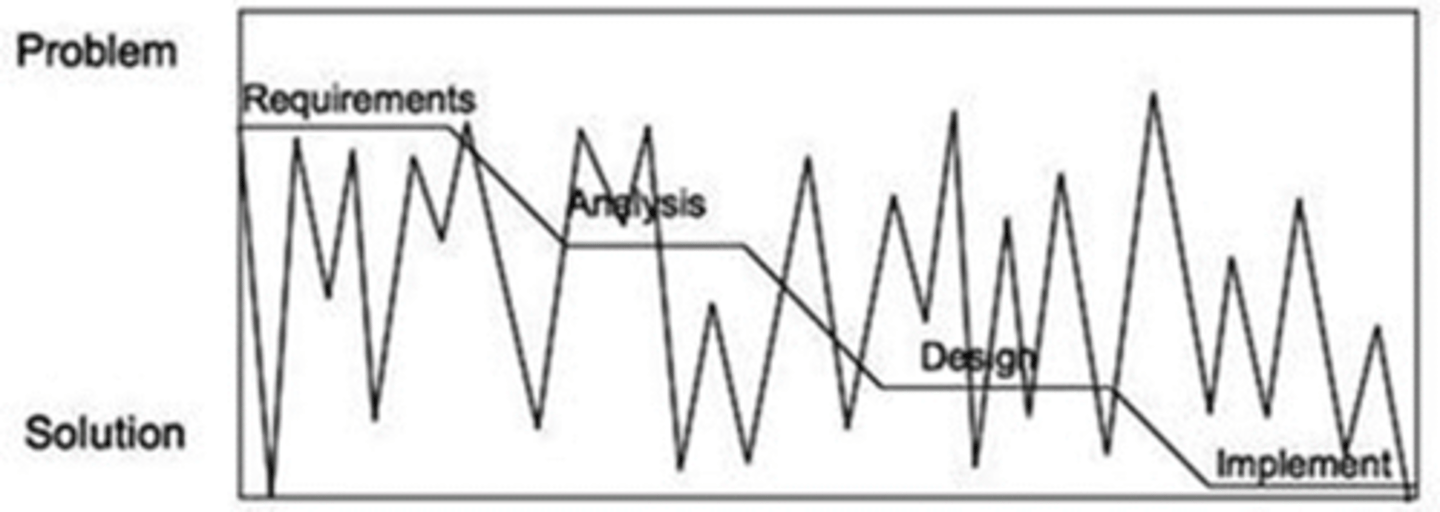

What is a wicked problem?

A problem that is difficult or impossible to solve because of incomplete, contradictory and changing requirements that are often difficult to recognise. Such problems are often not understood until a solution has been formulated.

•one-shot operation thus no opportunity for trial & error

•unique

•no right or wrong, just better or worse

What are characteristics of good design?

•class independence, i.e. low coupling and high cohesion

•fault prevention & tolerance (APR)

•design for change

•shallow & long bathtub

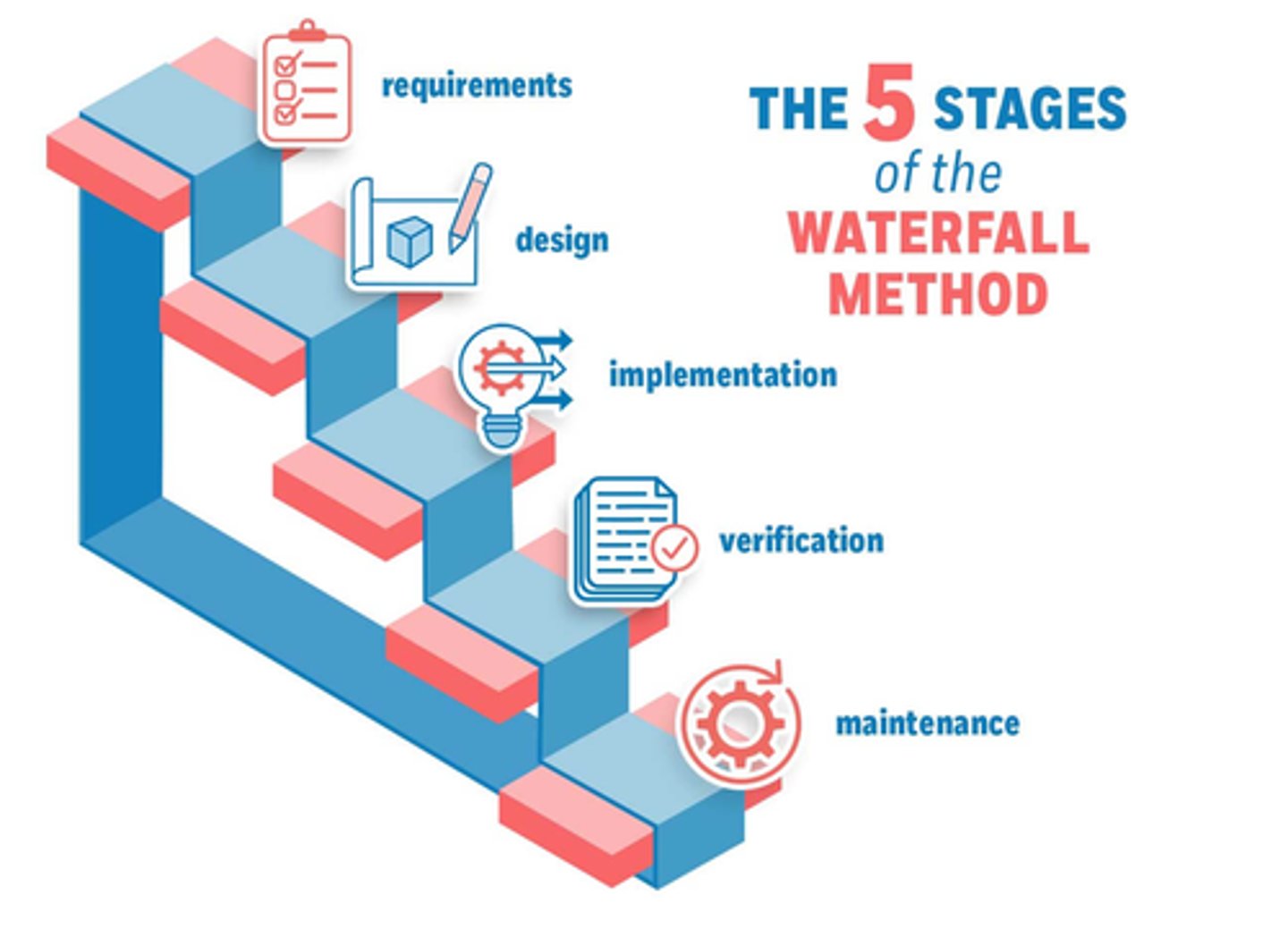

Waterfall methodology

What is coupling? What are the 4 grades? consequence of high/low?

The degree of dependence between classes i.e. the amount of interactions.

content (H) = one class modifies parts of another class thereby violating encapsulation!

common = more than 1 class shares data such as global data structures. The cause of this is poor design choice because of a lack of responsibility for data.

stamp = a class passes a data structure to another class but does not authorise full access, so the second class must know how to manipulate the data structure.

uncoupled (L) = completely uncoupled classes are not systems, since systems are made up of interacting classes.

H means it is difficult to understand the class in isolation, changes will ripple to other classes, difficult to re-use.

L means poor cohesion and poor code understandability.

What is cohesion? What are the 4 grades? consequence of high?

The degree to which all methods of a class are directed towards a single task, i.e. how related the responsibilities of a class are: they should be directed towards, and essential for, performing the same task.

Functional (H) = every essential method required for a computation is contained within the class (and only that!), e.g. a class that assigns seats to airline passengers

Sequential = the output of one class is the input to another (data flows between them), e.g. class 1 reads data from a file and passes it to class 2, which formats the data and passes it to class 3, which processes the data.

Temporal = methods which are related by timing as opposed to order, e.g. an error logger that closes all open files, creates a log file, then notifies the user (no data flow).

Coincidental (L) = methods which are unrelated and thus are only related by their location in the source code. Accidental, the worst form, a bad smell: a large bloated class of unrelated methods.

H means reduced class complexity, increased system maintainability and understandability. Locality of change, thus no ripple effect. Increased usability.

What is a fault?

An error is a human mistake which can lead to a fault (= bug = defect), which can (but doesn't always) lead to failure of the system. Faults can occur in any software artefact, and many are domain specific. There are 30-85 faults per KLOC before testing and about 0.3-0.5 faults per KLOC after the software is delivered to the customer.

Many reported faults are due to interface errors.

What are the causes of faults?

1) requirements are wrong i.e. incorrect/ incomplete/ missing definitions (companies are interested in these the most as this feeds throughout entire system)

2) deliberate deviation from requirements by developers either by reusing previous similar work or by omitting functionality due to time/ budget pressure

3) logical errors, e.g. division by zero, infinite loop, buffer overflow or interface errors

4) inadequate testing: incomplete test plan, testing cut short.

Why is it important to look for faults?

So that they can be sorted quickly as some faults may result in failures and some failures are worse than others. Furthermore, some faults result in security vulnerability. Thus, accurate prediction of fault prone code can reduce cost and effort whilst improving quality.

What is the metric for faults?

fault count / size of release (LOCs)
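A quick worked example of the ratio, expressed per thousand lines (KLOC) so the result is comparable with the faults-per-KLOC figures quoted elsewhere in these notes. The per-KLOC scaling, the function name and the sample numbers are assumptions for illustration.

```python
def fault_density(fault_count: int, loc: int) -> float:
    """Faults per thousand lines of code (KLOC) for a release."""
    if loc <= 0:
        raise ValueError("loc must be positive")
    return fault_count / (loc / 1000)


# Hypothetical release: 12 faults reported against 24,000 lines of code.
print(fault_density(12, 24_000))  # 0.5 faults per KLOC
```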

Does low fault density suggest high quality code?

No, the fault density does not take into account

•the skill of the tester (a low-skilled tester won't uncover as many faults as a highly skilled tester)

•time spent testing (more time spent, more faults uncovered)

•fault type i.e. the severity of the fault (minor, major, critical)

What is an anti-pattern? List any 5 out of the 10 and explain them.

Design patterns are common, reusable solutions to recurring requirement, design or implementation problems. They have been formalised and are considered good development practice: well designed, well documented, easy to maintain and easy to extend, i.e. do not reinvent the wheel.

As opposed to anti-patterns which are the opposite and are undesirable. They are certain patterns in software development that are considered bad programming practices.

1) blob class (one class monopolises too many responsibilities and processing thus breaks SRP and suggests low cohesion and high coupling)

2) broken window (a small problem left uncorrected, which signals a lack of care or responsibility and invites further decay. The counter to this is the boy scout rule: leave things better than you found them.)

3) analysis paralysis (aiming for perfection i.e. constant perfective maintenance which leads to project gridlock as no progress is being made i.e. too much refactoring which ends up in over engineering)

4) walking in a minefield (releasing software before it is ready, so it has many bugs and users are used as guinea pigs. This can lead to users becoming fed up with the constantly shifting system and demanding a different system)

5) poltergeist (a class with limited responsibilities and no justified use, which therefore doesn't "pay its way")

6) "a big ball of mud" (a system which lacks a perceivable architecture. This is due to high business pressure (money, time and resources), constantly changing environments and requirements, or poor team stability/developer turnover.)

Debugging principles/techniques

1) fix 1 thing at a time, create a test hypothesis

2) question assumptions, don't assume anything

3) check the most recently changed code, as it is the likely location of the bug

4) use debugger (breakpoints, memory watch?)

5) break complex calculations

6) check boundary conditions (classic off by 1 for loops, <=, >=, etc)

7) minimise randomness, the more random the input, the more opportunity for things to go wrong

8) take a break

9) explain bug to somebody else (helps retrace steps, get fresh eyes and provides alternative hypothesis)

10) pair programming/ mob programming

11) get outside help i.e. stack overflow

Rubber Duck Debugging

A form of debugging where the programmer carefully examines each line of code and explains its logic, goals and operations to an inanimate listener (a rubber duck) as if it were a real person with no programming knowledge. Therefore, the programmer must communicate properly, explaining how the code works rather than assuming that it works.

Wolf Fence Debugging

One wolf in Alaska: fence Alaska in half, wait for the wolf to howl, discard the half where you know the wolf isn't, and repeat until the wolf is found. Similar concept to binary search.
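The same halving idea can be sketched as a search for the first version of a program in which a bug appears. This assumes the bug, once introduced, is present in every later version and that the last version is known to be bad; `find_first_bad` and the sample data are hypothetical.

```python
def find_first_bad(versions: list, is_bad) -> int:
    """Index of the first version for which is_bad(version) is True.

    Assumes every version after the first bad one is also bad,
    and that the last version is bad.
    """
    low, high = 0, len(versions) - 1
    while low < high:
        mid = (low + high) // 2      # put up the fence
        if is_bad(versions[mid]):    # the wolf howls in the first half
            high = mid
        else:                        # the wolf must be in the second half
            low = mid + 1
    return low


# Hypothetical history: builds 1..100, bug introduced in build 37.
builds = list(range(1, 101))
first_bad = builds[find_first_bad(builds, lambda build: build >= 37)]
print(first_bad)  # 37
```

Each check discards half of the remaining candidates, so 100 builds need at most 7 checks rather than up to 100.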

Code reviews? Pros? Cons?

Where the developer presents their code in front of 2-3 people who critique it to find bugs and errors.

PROS:

•more eyes

•knowing you will be reviewed, you tend to raise quality.

•force author to justify their decisions in their code

•novice coders can learn

•improves overall understanding of the system as it involves team members from different parts of the system

A study showed that, of 11 programs developed by the same group, the 5 that had no reviews had 4.5 faults per 100 LOC, whilst the 6 that had reviews had only 0.62 faults per 100 LOC.

When AT&T introduced code reviews, productivity levels increased by 14% whilst faults decreased by 90%.

Inspection vs code review vs walkthrough vs code reading

Single Responsibility Principle

"every class should have only one reason to change"

This principle promotes H cohesion and L coupling by ensuring each class has a single responsibility. In turn, this reduces complexity and increases maintainability, understandability, etc. It keeps future changes local and so prevents a ripple effect.

How to avoid faults?

1) avoid writing complex code, write clean code!

2) re-use code i.e. libraries, don't reinvent the wheel

3) review code, inspections

4) refactor to reduce complexity + coupling, improve cohesion

5) measure code to understand fault-prone/ complex/ critical stress areas

6) avoid code smells and antipatterns

What factors affect the life-time of a system?

1) bugs i.e. bathtub curve

2) business value

3) return on investment

4) system dependencies i.e. coupling between systems

5) replacement cost

What is clean code?

• elegant, pleasing to read

• focused, H cohesive, L coupling

• looked after i.e. no technical debt

• DRY (no duplicate code)

What are the rules for clean code?

1) use meaningful names

2) use intention revealing names

3) use pronounceable names

4) use searchable names, avoid single-letter names

5) use appropriate length for variable names

6) use accepted conventions

7) method names should contain a verb ("doing" something)

8) minimise parameters

9) maintain consistency in naming i.e. use the same concept

10) use opposites properly

11) comments

12) keep code short

13) use appropriate indentation

14) declare variables close to where they are used

15) use vertical space sensibly & horizontal space consistently

PoLS!

What is dirty code?

1) fragile

2) rigid, brittle

3) immobile, thus non-reusable

What is the role for legacy system in clean code?

A legacy system is one which was developed for an organisation and has had a very long lifetime. It is usually out of date, with bad or non-existent documentation, an old language, inconsistent programming style, expertise that no longer exists and a corrupted structure. However, such systems are expensive and risky to replace; therefore a business must analyse cost, value, risk and benefit to decide whether to extend the system's lifetime through refactoring/re-engineering or to scrap and replace it. An audit grid can be used!

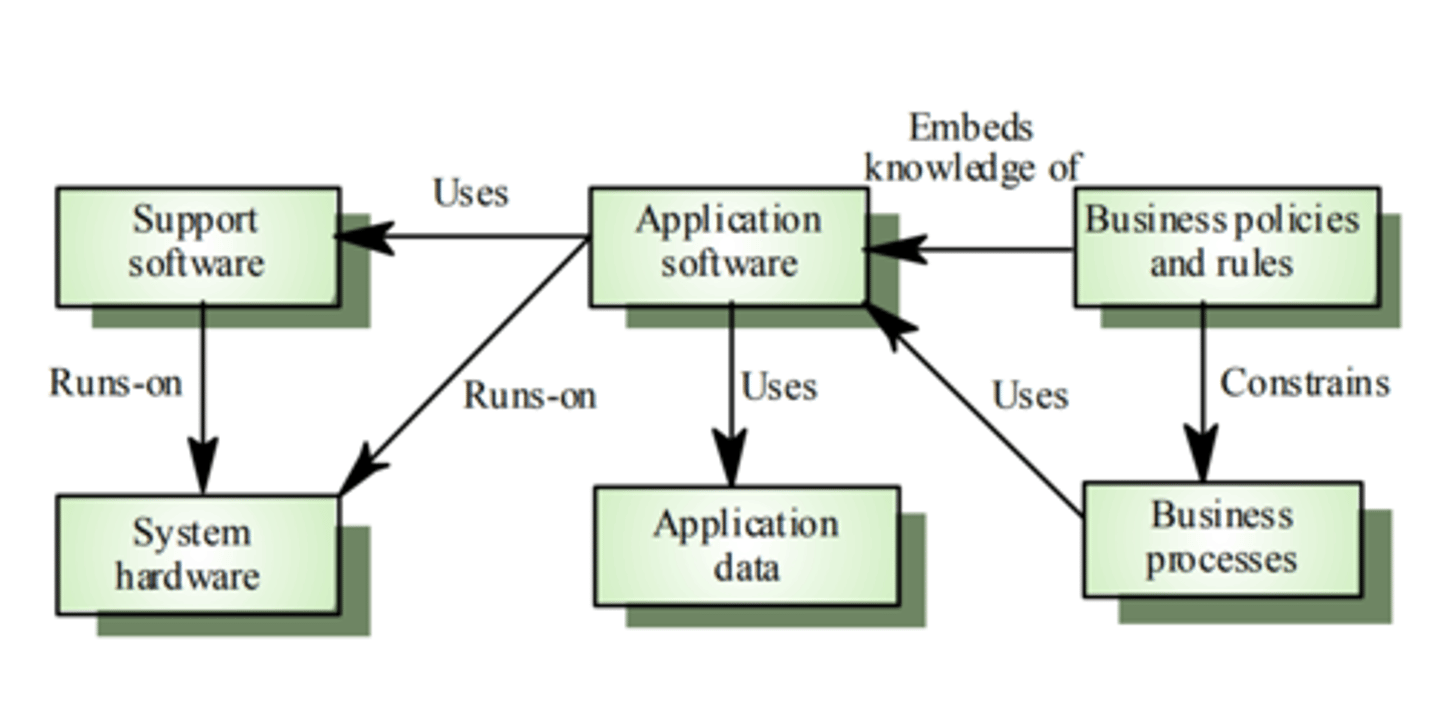

Legacy system components

All components interact, so one cannot be extracted or removed without affecting the others - there is major coupling in a legacy system.

Sentiment analysis

The process of computationally identifying and categorising opinions expressed in a piece of text, especially in order to determine whether the writer's attitude towards a specific topic is positive, negative or neutral.

Software estimate techniques

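1. Algorithmic cost modelling = formulaic approach based on historic cost information, generally based on the size of the software. Cost is estimated as a function of product, project and process attributes (e.g. code review). Effort = A × Size^B × M, where A is an organisation-dependent constant, B reflects the disproportionate effort needed for large projects and M is a multiplier for product, process and people attributes.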

2. Expert judgement = one or more experts in both software and application domain predict software costs using their experience. Process iterates until a consensus is reached. Cheap estimation method and can be very accurate if experts have direct experience of similar systems but if no experts then very inaccurate.

3. Estimation by analogy = the cost of a project is computed by comparing it to a similar project in the same application domain. Accurate if project data is available, but impossible if no comparable project has been tackled, and it needs systematically maintained cost data (records) - NASA use this a lot as their systems are similar, so it is a good guide to future projects.

4. Parkinson's law states that work expands to fill the time available, i.e. whatever budget you set will always be used up, so you will either meet or exceed it. To combat this, the cost is set by the resources available, and that is all the project gets, rather than by an objective assessment. This results in no overspend; however, the system is usually unfinished.

5. Top down, bottom up = top-down starts at the top level and assesses the overall system functionality and the effort required for high-level components, whereas bottom-up starts with the low-level classes and adds their efforts together for the final estimate.

6. 3-point estimation: (1) optimistic estimate i.e. best case (2) pessimistic estimate i.e. worst case (3) best guess. PERT = (optimistic + 4 × best guess + pessimistic) / 6
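A worked example of the 3-point (PERT) estimate, which weights the best guess four times as heavily as the two extremes. The function name and the sample figures (in days) are hypothetical.

```python
def pert_estimate(optimistic: float, best_guess: float, pessimistic: float) -> float:
    """Weighted 3-point estimate: (optimistic + 4 * best guess + pessimistic) / 6."""
    return (optimistic + 4 * best_guess + pessimistic) / 6


# Best case 4 days, best guess 6 days, worst case 14 days.
print(pert_estimate(4, 6, 14))  # (4 + 24 + 14) / 6 = 7.0
```

The result (7 days) sits above the best guess because the worst case is further from it than the best case is.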

Factors affecting developer productivity

1) Application domain experience i.e. knowledge

2) Process qualities e.g. mob programming, pair programming

3) Project size - the larger the project, the more time required for communication

4) Technology support - can improve productivity

5) Working environment like large office spaces or private work areas

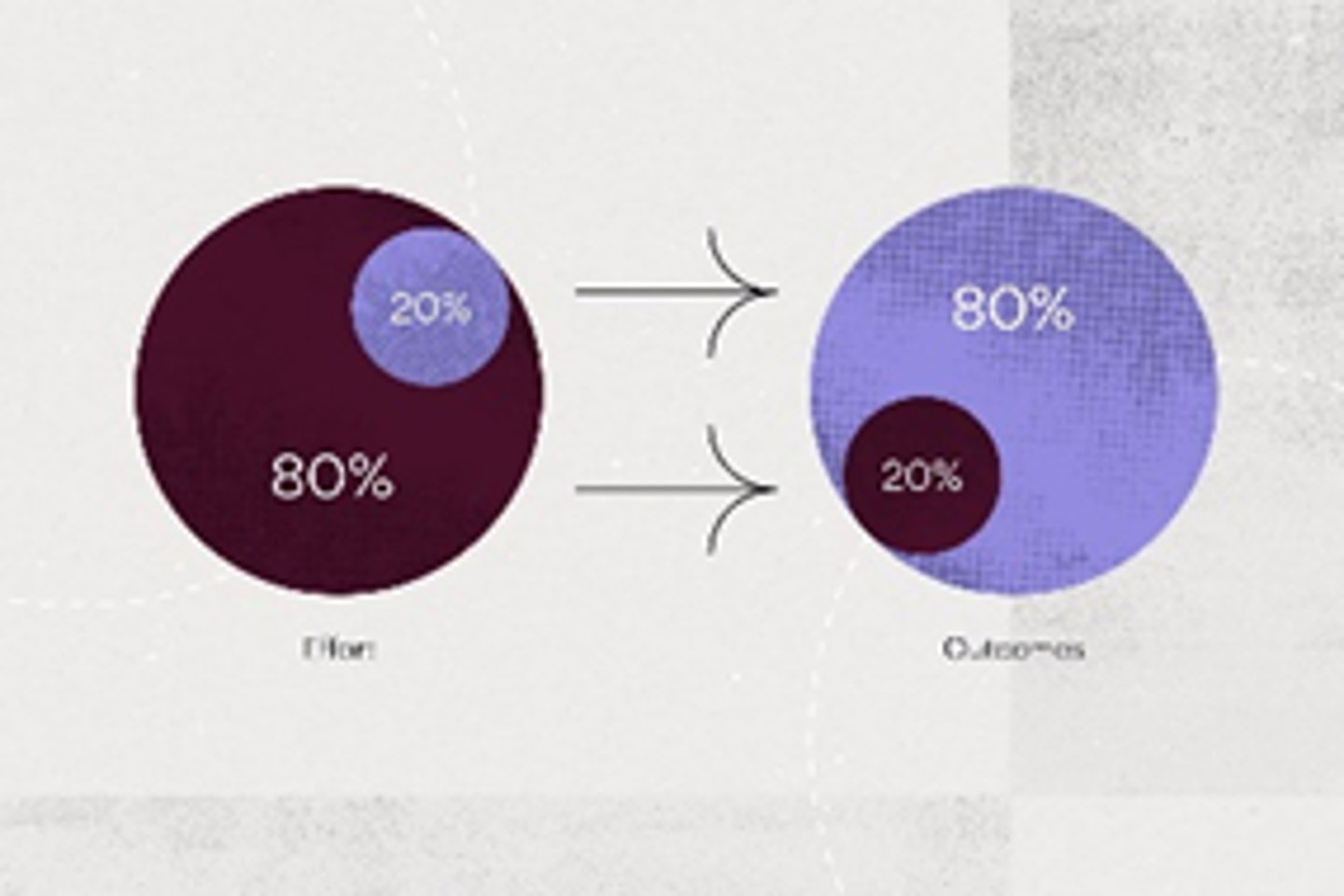

80/20 rule aka Pareto Analysis

Pareto established that 20% of the population in Italy owned 80% of the land. This rule seems to apply to many other areas.

e.g. 80% of coupling comes from 20% of classes; the same pattern is seen for bugs.

IBM found that 80% of a computer's time was spent executing 20% of instructions.

Therefore, it helps direct code reviews and refactoring at the portion of the code where they matter most.
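To check whether a code base follows this pattern, one could rank classes by fault count and see what share the top 20% account for. The sketch below uses made-up fault counts for ten hypothetical classes; the function name is also an assumption.

```python
def share_held_by_top(counts: list, fraction: float = 0.2) -> float:
    """Share of the total accounted for by the top `fraction` of items."""
    if not counts or sum(counts) == 0:
        raise ValueError("need at least one non-zero count")
    ranked = sorted(counts, reverse=True)
    top_n = max(1, round(len(ranked) * fraction))
    return sum(ranked[:top_n]) / sum(ranked)


# Made-up fault counts for ten classes (100 faults in total).
faults_per_class = [40, 40, 5, 4, 3, 3, 2, 1, 1, 1]
print(share_held_by_top(faults_per_class))  # 0.8: 2 of 10 classes hold 80 faults
```

In this toy data, reviewing or refactoring just the two worst classes would cover 80% of the known faults.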

Re-engineering

This does not preserve what the program does. Reorganising and modifying existing software to make it more maintainable. The examination of a subject system in order to reconstitute it in a new form.

Code Restructuring

Source code is analysed using a restructuring tool so that poorly designed code segments can be redesigned. Violations of structured programming constructs are noted and the code is then restructured. The resulting code is reviewed and tested to ensure no anomalies have been introduced, and internal code documentation is updated.

Developer motivation

intrinsic = internal desire

extrinsic = external compulsion

people, success, pay, status, recognition