L8 Language and modeling

What are 2 aspects of human cognition?

study how information is acquired, represented and processed in the human brain

identify processes and mechanisms involved in learning and processing

What are 2 aspects of computational modeling?

simulate a cognitive process via computational tools and techniques

use the model to explain the observed human behavior

What are models of natural language?

Probabilistic machines trained to predict the most likely next word; humans are not, since humans know what they want to convey

→ example: ChatGPT
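A minimal sketch of "predict the most likely next word", assuming a toy bigram model (counts of which word follows which) rather than the large neural networks behind ChatGPT. The corpus and the function names are hypothetical, chosen only to illustrate the idea.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(corpus):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev: str) -> Dict[str, float]:
    """Relative-frequency estimate of P(next word | previous word)."""
    followers = counts.get(prev.lower(), Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}


def predict_next(counts, prev: str) -> Optional[str]:
    """Return the single most likely next word, or None for an unseen word."""
    probs = next_word_probs(counts, prev)
    return max(probs, key=probs.get) if probs else None


corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigrams(corpus)
print(predict_next(model, "the"))     # cat  (follows "the" 2 times out of 6)
print(next_word_probs(model, "sat"))  # {'on': 1.0}
```

The model has no message it wants to deliver: it only picks whatever continuation was most frequent in its training data.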

What is fast mapping?

Children are shown two objects, one they already know and one that is new, and asked to give the “dax” (a made-up word) to the researcher; they connect the new word to the unfamiliar object

young children easily map novel words to novel objects in a familiar context
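A minimal sketch of the dax task, assuming a mutual-exclusivity heuristic (a new word names the object that has no known name yet). The heuristic is an assumption for illustration, not necessarily the account given in the lecture; `fast_map` and the object labels are hypothetical.

```python
def fast_map(novel_word, objects_in_view, lexicon):
    """Hypothetical mutual-exclusivity heuristic: assume a new word names
    the one object in view that does not already have a known name."""
    named_objects = set(lexicon.values())
    candidates = [obj for obj in objects_in_view if obj not in named_objects]
    if len(candidates) == 1:
        lexicon[novel_word] = candidates[0]  # store the new word-object link
        return candidates[0]
    return None  # zero or several unnamed objects: the heuristic cannot decide


lexicon = {"ball": "BALL_OBJECT"}  # the child already knows "ball"
choice = fast_map("dax", ["BALL_OBJECT", "NOVEL_OBJECT"], lexicon)
print(choice)  # NOVEL_OBJECT
```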

How does research work? (3)

you have a cognitive process → ex. learning new words

you have empirical findings → ex. how do children behave in specific situations

ultimate goal is to build a theoretical account to explain the empirical findings

→ ex. what type of information do children use for learning words?

*different paradigm approaches

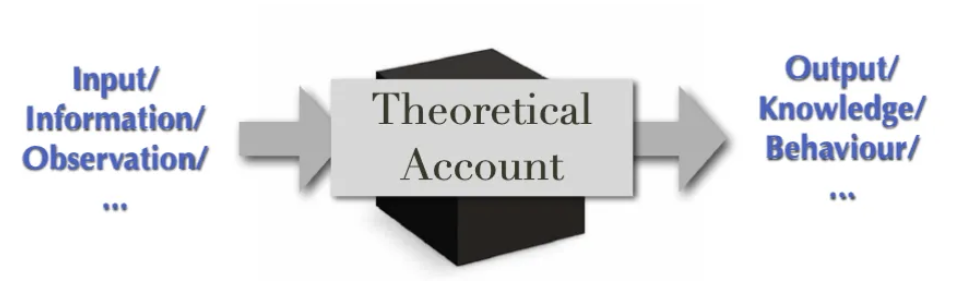

How does input map to output?

input/info/observation → BLACK BOX → output/knowledge/behavior

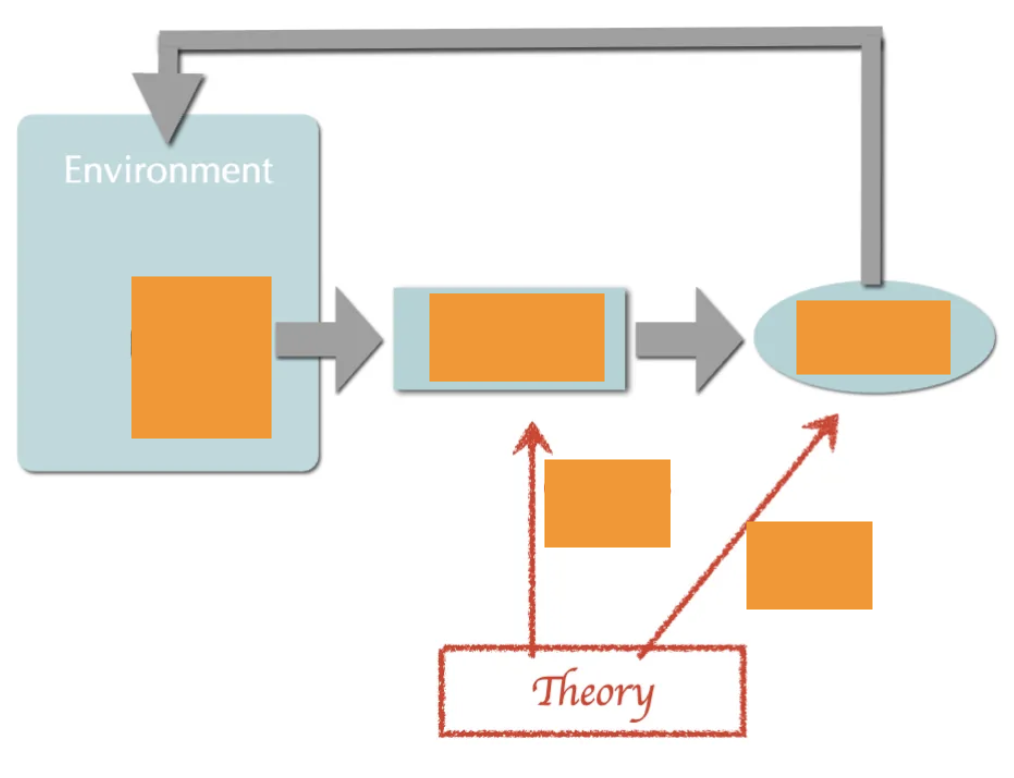

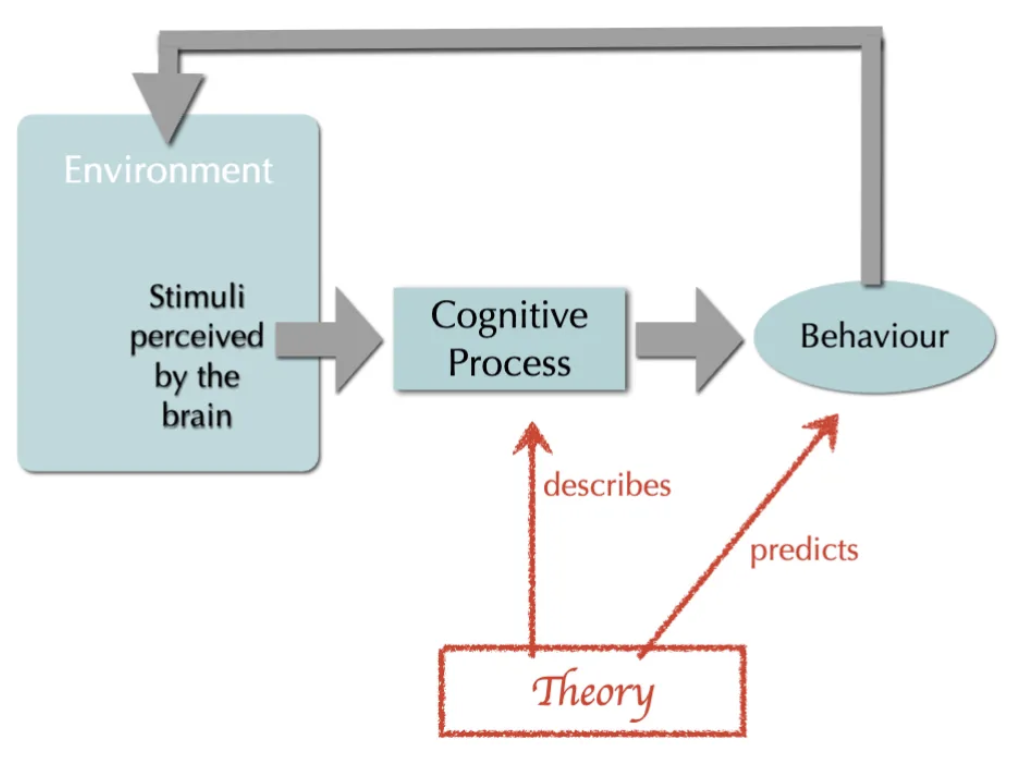

Theory model

environment (stimuli perceived by brain) → cognitive process → behavior (sometimes behavior leads back to environment and affects/changes it)

*the theory is interested in describing the cognitive process and predicting the behavior, not really interested in manipulating the environment

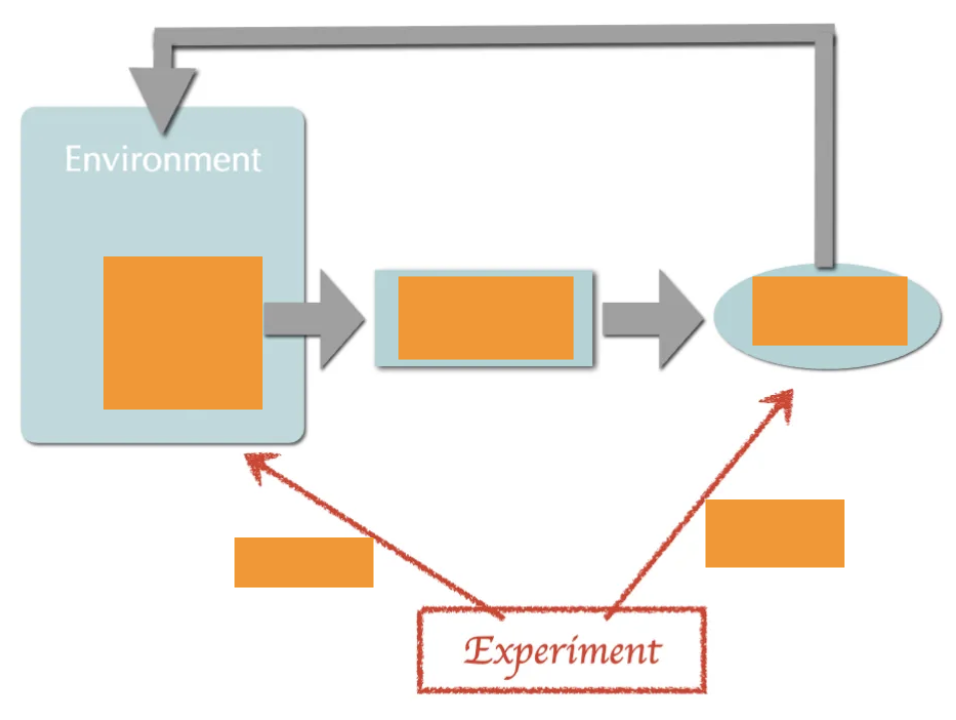

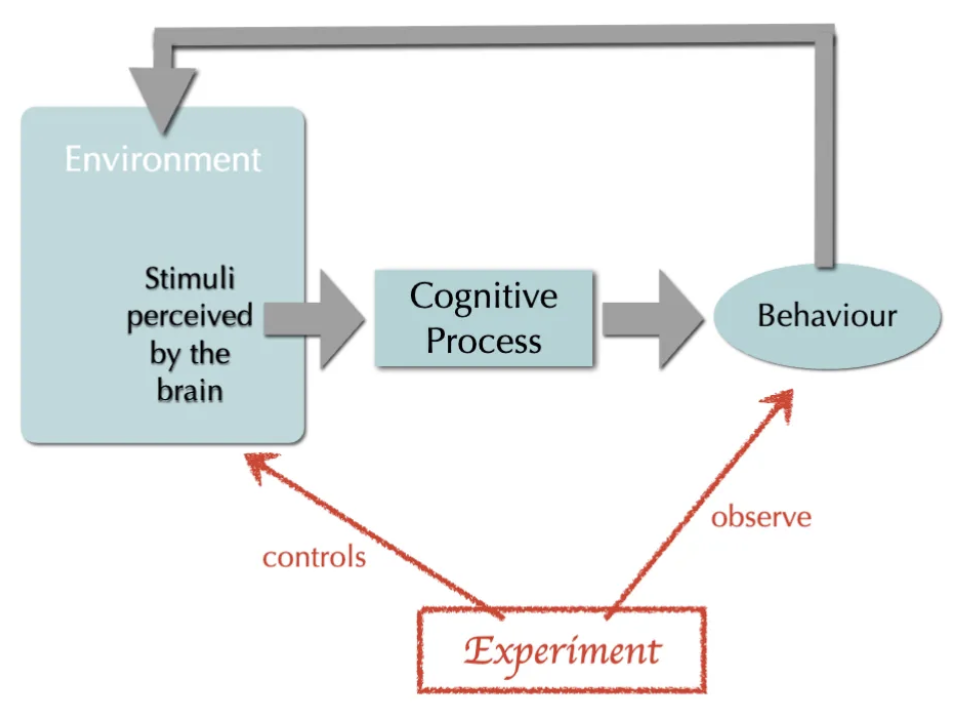

Running an experiment diagram

control the stimuli (environment) and observe the behavior

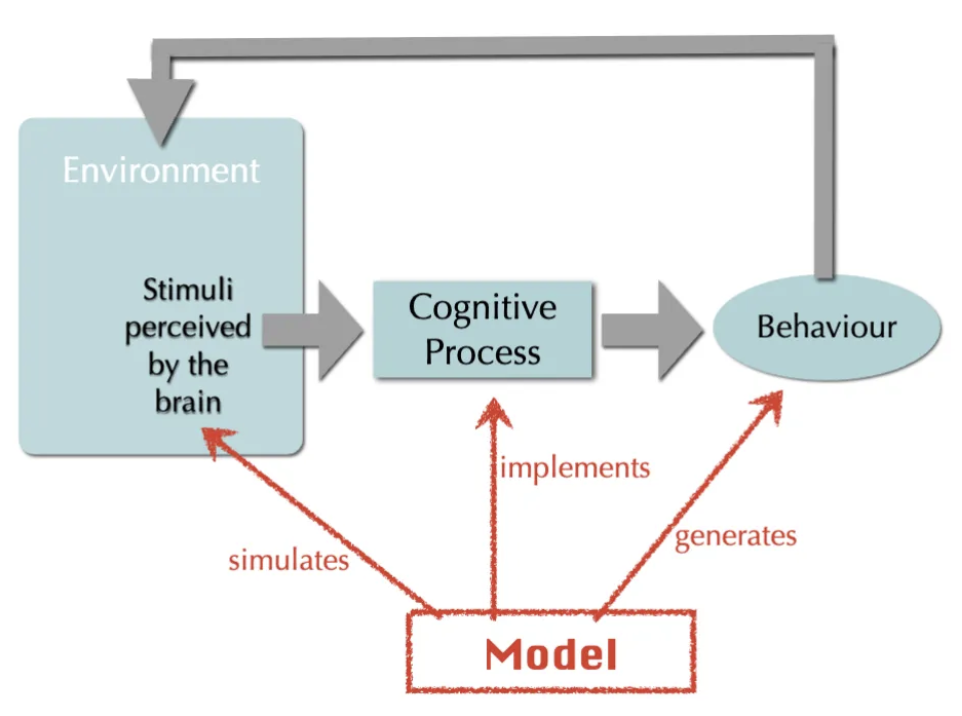

Building a computational model diagram

simulates the stimuli, implements the cognitive process, and generates behavior → ex. I think humans rely on associations, so I build…

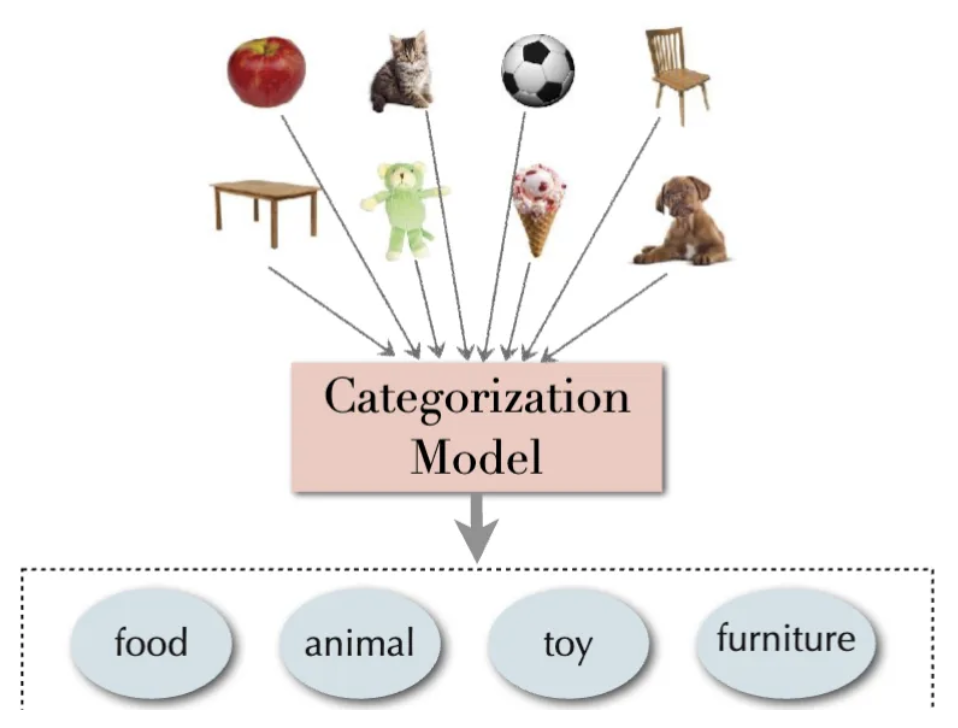
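A minimal sketch of the three parts of a computational model (simulated stimuli, implemented process, generated behavior), assuming the "humans rely on associations" idea is implemented as a simple cross-situational associative learner. The actual model in the example is elided above; the data and function names here are hypothetical.

```python
from collections import defaultdict


def simulate_stimuli():
    """Stimuli: each situation pairs the words heard with the objects in view (toy data)."""
    return [
        (["ball", "dog"], ["BALL", "DOG"]),
        (["ball", "cup"], ["BALL", "CUP"]),
        (["dog", "cup"], ["DOG", "CUP"]),
    ]


def learn_associations(situations):
    """Cognitive process (assumed): strengthen every word-object pair that co-occurs."""
    strength = defaultdict(lambda: defaultdict(float))
    for words, objects in situations:
        for word in words:
            for obj in objects:
                strength[word][obj] += 1.0
    return strength


def generate_behavior(strength, word):
    """Behavior: point to the object most strongly associated with the word."""
    assoc = strength.get(word)
    if not assoc:
        return None
    return max(assoc, key=assoc.get)


strength = learn_associations(simulate_stimuli())
for word in ["ball", "dog", "cup"]:
    print(word, "->", generate_behavior(strength, word))
# ball -> BALL, dog -> DOG, cup -> CUP
```

Each single situation is ambiguous (two words, two objects), but the correct pairing is the only one that co-occurs in two situations, so it ends up with the strongest association. The model's output can then be compared with children's behavior in a matching experiment.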

How do humans categorize objects?

categorization model:

features, shape, color…

the model also has to explain how it gives priority to one feature over another and what happens when new objects are introduced: are new categories created?…
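A minimal sketch of how a categorization model could answer those questions, assuming a prototype model (each category is the average of its members), feature weights for priority, and a distance threshold for creating new categories. The class, weights, threshold and feature values are all hypothetical.

```python
import math


def weighted_distance(a, b, weights):
    """Distance between feature vectors; a larger weight makes that feature matter more."""
    return math.sqrt(sum(w * (x - y) ** 2 for x, y, w in zip(a, b, weights)))


class PrototypeCategorizer:
    """Hypothetical prototype model: a category is the running mean of its members.
    An object farther than `threshold` from every prototype starts a new category."""

    def __init__(self, weights, threshold):
        self.weights = weights
        self.threshold = threshold
        self.prototypes = {}  # category name -> prototype feature vector
        self.counts = {}      # category name -> number of members seen

    def categorize(self, features):
        best, best_dist = None, math.inf
        for name, proto in self.prototypes.items():
            d = weighted_distance(features, proto, self.weights)
            if d < best_dist:
                best, best_dist = name, d
        if best is None or best_dist > self.threshold:
            # Nothing is close enough: create a new category for this object.
            best = f"category_{len(self.prototypes) + 1}"
            self.prototypes[best] = list(features)
            self.counts[best] = 1
            return best
        # Move the winning prototype toward the new member (running mean).
        n = self.counts[best] + 1
        self.prototypes[best] = [p + (x - p) / n for p, x in zip(self.prototypes[best], features)]
        self.counts[best] = n
        return best


# Features: [roundness, redness] on a 0-1 scale (made-up values).
objects = {"red apple": [0.9, 0.9], "green apple": [0.9, 0.1], "banana": [0.1, 0.2]}


def run(weights):
    model = PrototypeCategorizer(weights=weights, threshold=0.6)
    return [model.categorize(feats) for feats in objects.values()]


print(run([2.0, 0.5]))  # shape first: ['category_1', 'category_1', 'category_2']
print(run([0.5, 2.0]))  # color first: ['category_1', 'category_2', 'category_2']
```

Changing only the feature weights changes which objects are grouped together: with shape prioritized the two apples share a category, with color prioritized the green apple is grouped with the banana.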

What are 4 common modeling frameworks?

Symbolic: first generation of models influenced by early AI

shared the same underlying basis: symbols and logical rules

Connectionist: inspired by the architecture of human brain

early neural network models; limited and hard to train, with only small datasets available

Probabilistic: following the success of statistical machine learning techniques

statistical models, machine learning, dominant models until neural models came back

Neural: a resurrection of old connectionist models

deep learning

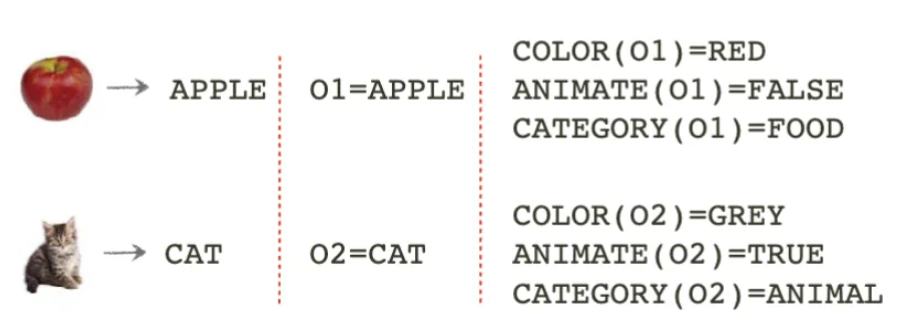

Describe 2 aspects of symbolic modeling

explicit formalization of the representation and processing of knowledge through a symbol processing system

representation of knowledge: a set of symbols and their propositional relations

symbol of apple = APPLE (01)

symbol of cat = CAT (02)

symbols are arbitrary, so we have to specify what properties each symbol has; propositions attach these properties to the symbols (01: color = red, animate = False, category = food)

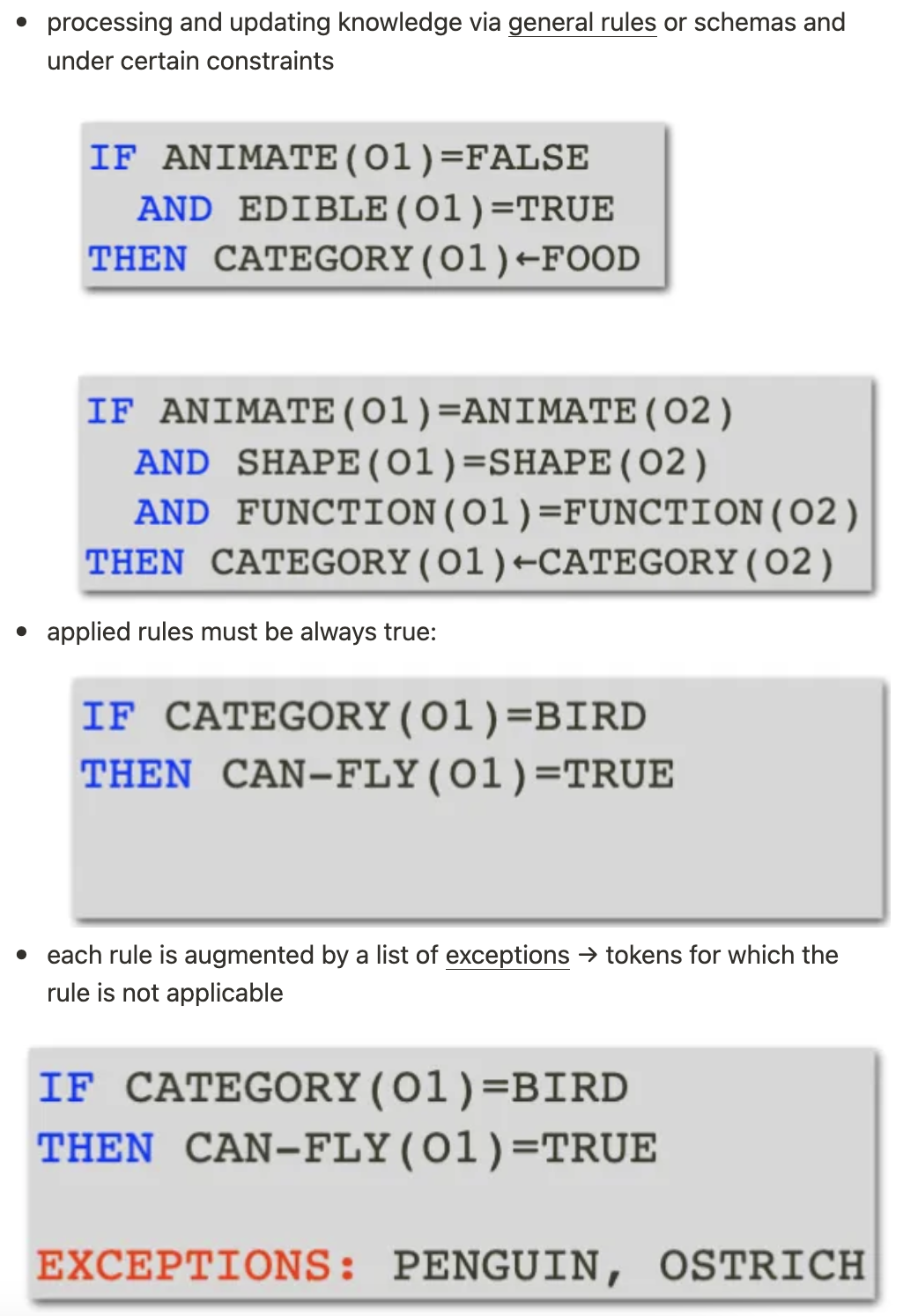
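A minimal sketch of the APPLE/CAT example as a symbol-processing system, assuming knowledge is stored as (symbol, property, value) propositions. The `Symbol` class, the `query` helper and the CAT properties are illustrative additions, not from the lecture.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Symbol:
    """An arbitrary token: the name and code carry no meaning by themselves."""
    name: str
    code: str


APPLE = Symbol("APPLE", "01")
CAT = Symbol("CAT", "02")

# Propositions give the arbitrary symbols their content (CAT values are illustrative).
propositions = {
    (APPLE, "color", "red"),
    (APPLE, "animate", False),
    (APPLE, "category", "food"),
    (CAT, "animate", True),
    (CAT, "category", "animal"),
}


def query(symbol, prop):
    """Retrieve a property by matching stored propositions; None if nothing is known."""
    for sym, p, value in propositions:
        if sym == symbol and p == prop:
            return value
    return None


print(query(APPLE, "color"))   # red
print(query(CAT, "animate"))   # True
```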

Describe 3 aspects of the learning and processing mechanism → a symbolic approach

Processing and updating knowledge via general rules or schemas and under certain constraints

Applied rules must always be true

Each rule is augmented by a list of exceptions → tokens for which the rule is not applicable

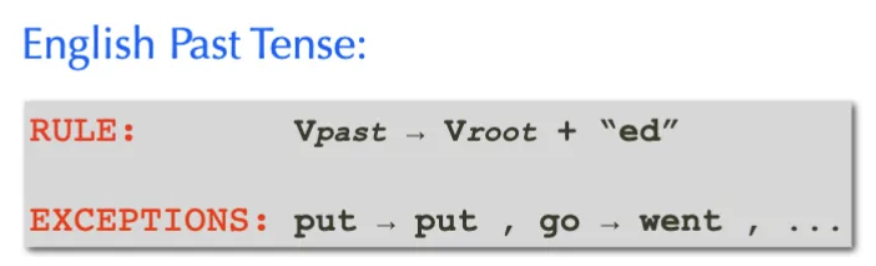
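A minimal sketch of a general rule augmented by a list of exceptions, using English past-tense formation. The verb lists and function names are hypothetical, and the rule only handles spelling (no consonant doubling).

```python
# Hypothetical exception list: tokens for which the general rule is not applicable.
PAST_TENSE_EXCEPTIONS = {
    "go": "went",
    "bring": "brought",
    "sing": "sang",
    "eat": "ate",
}


def regular_past(verb):
    """General rule: add -ed (just -d after a final 'e'); spelling only."""
    return verb + "d" if verb.endswith("e") else verb + "ed"


def past_tense(verb):
    """Check the exception list first; otherwise the general rule applies."""
    if verb in PAST_TENSE_EXCEPTIONS:
        return PAST_TENSE_EXCEPTIONS[verb]
    return regular_past(verb)


for verb in ["walk", "bake", "bring", "blick"]:
    print(verb, "->", past_tense(verb))
# walk -> walked, bake -> baked, bring -> brought, blick -> blicked
```

The rule generalizes to the made-up verb "blick" because it applies to any token not on the exception list.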

Describe symbolic modeling using the English Past-Tense example from language

context-free grammars (CFGs)

a symbolic formalism for representing grammatical knowledge of language
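A minimal sketch of a CFG as grammatical knowledge, assuming a tiny hypothetical grammar with a past-tense verb category. Listing every sentence only works because this grammar is non-recursive; real parsers use algorithms such as CYK or Earley instead.

```python
from itertools import product

# Hypothetical toy grammar: each nonterminal maps to its alternative right-hand sides.
GRAMMAR = {
    "S": [["NP", "VP"]],
    "NP": [["Det", "N"]],
    "VP": [["V_past", "NP"], ["V_past"]],
    "Det": [["the"]],
    "N": [["dog"], ["cat"]],
    "V_past": [["chased"], ["saw"]],
}


def expand(symbol):
    """Return every word sequence a symbol can derive (grammar must be non-recursive)."""
    if symbol not in GRAMMAR:  # terminal symbol: an actual word
        return [[symbol]]
    results = []
    for rhs in GRAMMAR[symbol]:
        # Combine the expansions of the right-hand-side symbols in order.
        for parts in product(*(expand(s) for s in rhs)):
            results.append([word for part in parts for word in part])
    return results


sentences = {" ".join(words) for words in expand("S")}
print(len(sentences))                          # 12
print("the dog chased the cat" in sentences)   # True
print("dog the chased" in sentences)           # False
```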

How do you deal with uncertainty? → Probabilistic Modeling

uncertainty:

two tables and two chairs give us categories for table and chair, but an object with the shape of a stool doesn't really fit either of the already created categories

the stool shape might be judged 65% chair and 35% table → it only partially matches each template

to deal with uncertainty, apply probability theory on previous exposure to data:

Representation of knowledge

Weighted information units that reflect bias or confidence based on previous observations

Learning mechanism

Principled algorithms for weighting and combining evidence to form hypotheses that explain data best

*Often in combination with techniques and formalisms from other frameworks

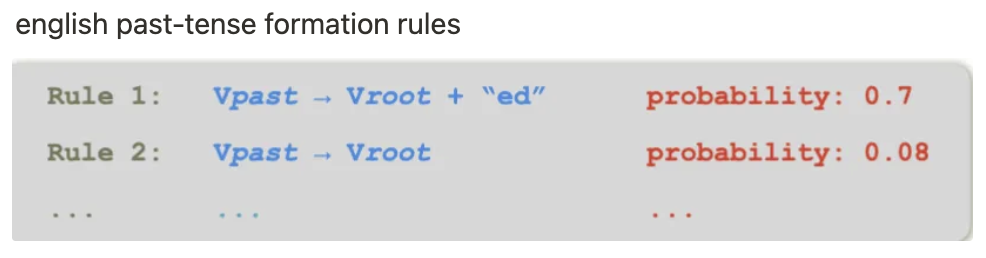
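A minimal sketch of weighting and combining evidence with Bayes' rule for the stool example, assuming a naive-Bayes model (features treated as independent). The priors stand for previous exposure; the feature names and likelihood values are made up for illustration.

```python
def posterior(priors, likelihoods, observed):
    """Bayes' rule: P(category | features) is proportional to
    P(category) * product of P(feature | category), assuming independent features."""
    unnormalized = {}
    for category, prior in priors.items():
        p = prior
        for feature in observed:
            p *= likelihoods[category][feature]
        unnormalized[category] = p
    total = sum(unnormalized.values())
    return {category: p / total for category, p in unnormalized.items()}


priors = {"chair": 0.5, "table": 0.5}  # equal previous exposure (2 chairs, 2 tables)
likelihoods = {                        # hypothetical P(feature | category)
    "chair": {"no_backrest": 0.2, "sitting_height": 0.8},
    "table": {"no_backrest": 0.9, "sitting_height": 0.1},
}
post = posterior(priors, likelihoods, ["no_backrest", "sitting_height"])
print({k: round(v, 2) for k, v in post.items()})  # {'chair': 0.64, 'table': 0.36}
```

Instead of forcing the stool into one category, the model returns graded confidence over both.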

How does adding probabilities to symbolic models work?

a symbolic rule-based representation

each rule is augmented with a probability value indicating its applicability

English past-tense formation rules

*estimating probabilities comes from analyzing datasets

*how do we use the probabilities → we have to know how to combine the pieces of evidence, which are relevant…

overgeneralizing; Bayes' rule (how to combine different pieces of info)

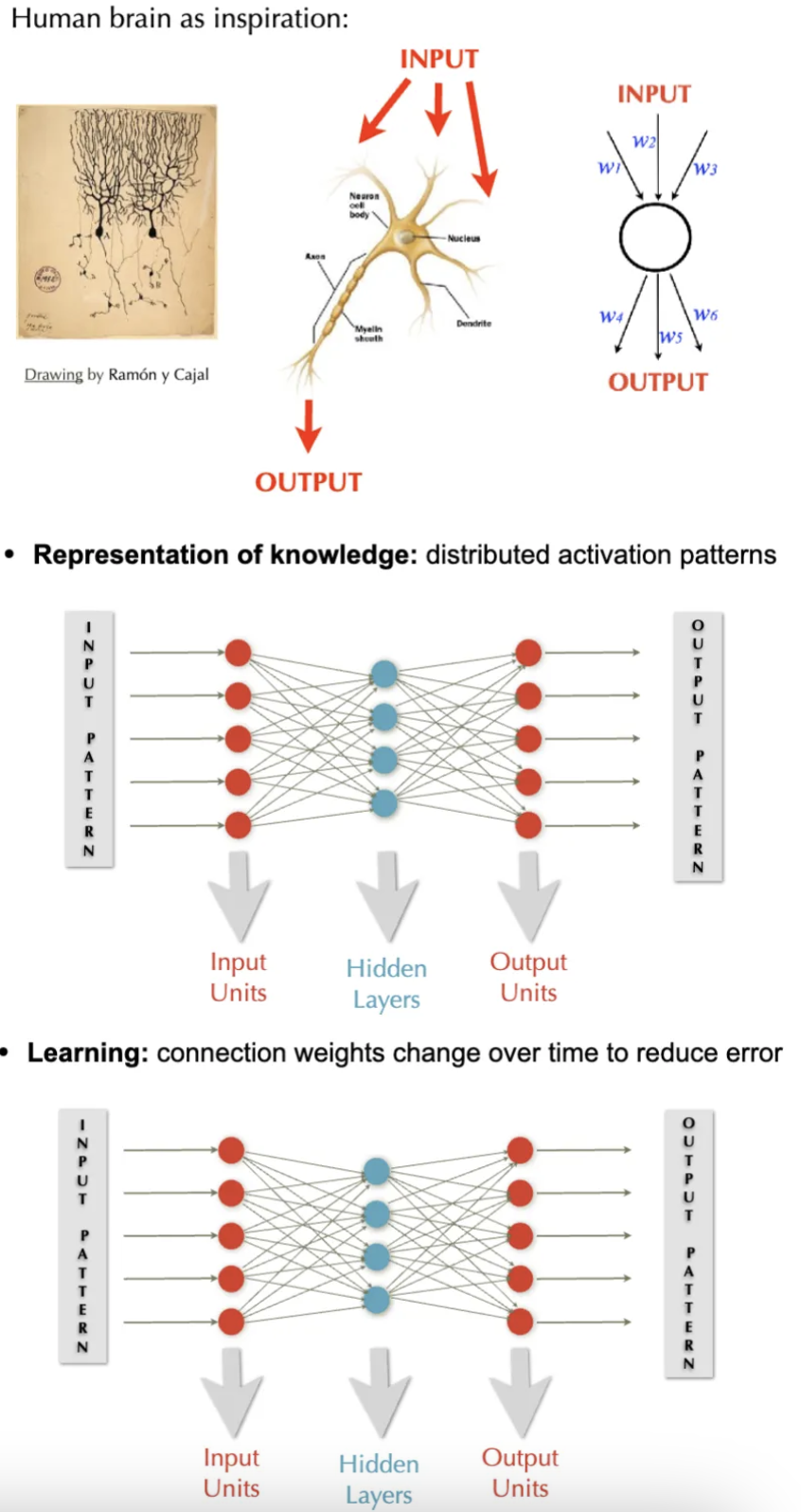
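A minimal sketch of symbolic past-tense rules augmented with probabilities estimated from data. The two rules, the tiny training set and the counting scheme (how often each rule produced the correct form) are hypothetical simplifications.

```python
from fractions import Fraction

# Each rule: (applicability test, transformation). Both rules are illustrative.
RULES = {
    "add -ed": (lambda v: True, lambda v: v + "ed"),
    "ing -> ang": (lambda v: v.endswith("ing"), lambda v: v[:-3] + "ang"),
}


def estimate_rule_probs(training_pairs):
    """Estimate each rule's probability from how often it yields the observed form."""
    counts = {name: 0 for name in RULES}
    for verb, past in training_pairs:
        for name, (applies, transform) in RULES.items():
            if applies(verb) and transform(verb) == past:
                counts[name] += 1
    total = sum(counts.values())
    return {name: Fraction(c, total) for name, c in counts.items()}


def predict(verb, rule_probs):
    """Distribution over past-tense forms from the applicable rules, renormalized."""
    scores = {}
    for name, (applies, transform) in RULES.items():
        if applies(verb):
            form = transform(verb)
            scores[form] = scores.get(form, 0) + rule_probs[name]
    total = sum(scores.values())
    return {form: p / total for form, p in scores.items()}


training = [("walk", "walked"), ("jump", "jumped"), ("play", "played"),
            ("talk", "talked"), ("sing", "sang"), ("ring", "rang")]
rule_probs = estimate_rule_probs(training)
print(rule_probs)                     # add -ed: 2/3, ing -> ang: 1/3
print(predict("spling", rule_probs))  # splinged: 2/3, splang: 1/3
print(predict("bring", rule_probs))   # bringed or brang: both overgeneralizations
```

Because the model only knows its rules, it overgeneralizes on "bring" and never produces "brought", which mirrors the kind of error children make.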

What are 4 aspects of connectionist modeling?

human brain as inspiration → neuron (nucleus, input-output signal channels)

strength of neural network models → combining different elements

representation of knowledge: distributed activation patterns

input pattern → hidden layers → output pattern (behavior)

how is the symbol “apple” turned into a distributed representation in a computational model?

learning happens by connection weights changing over time to reduce error

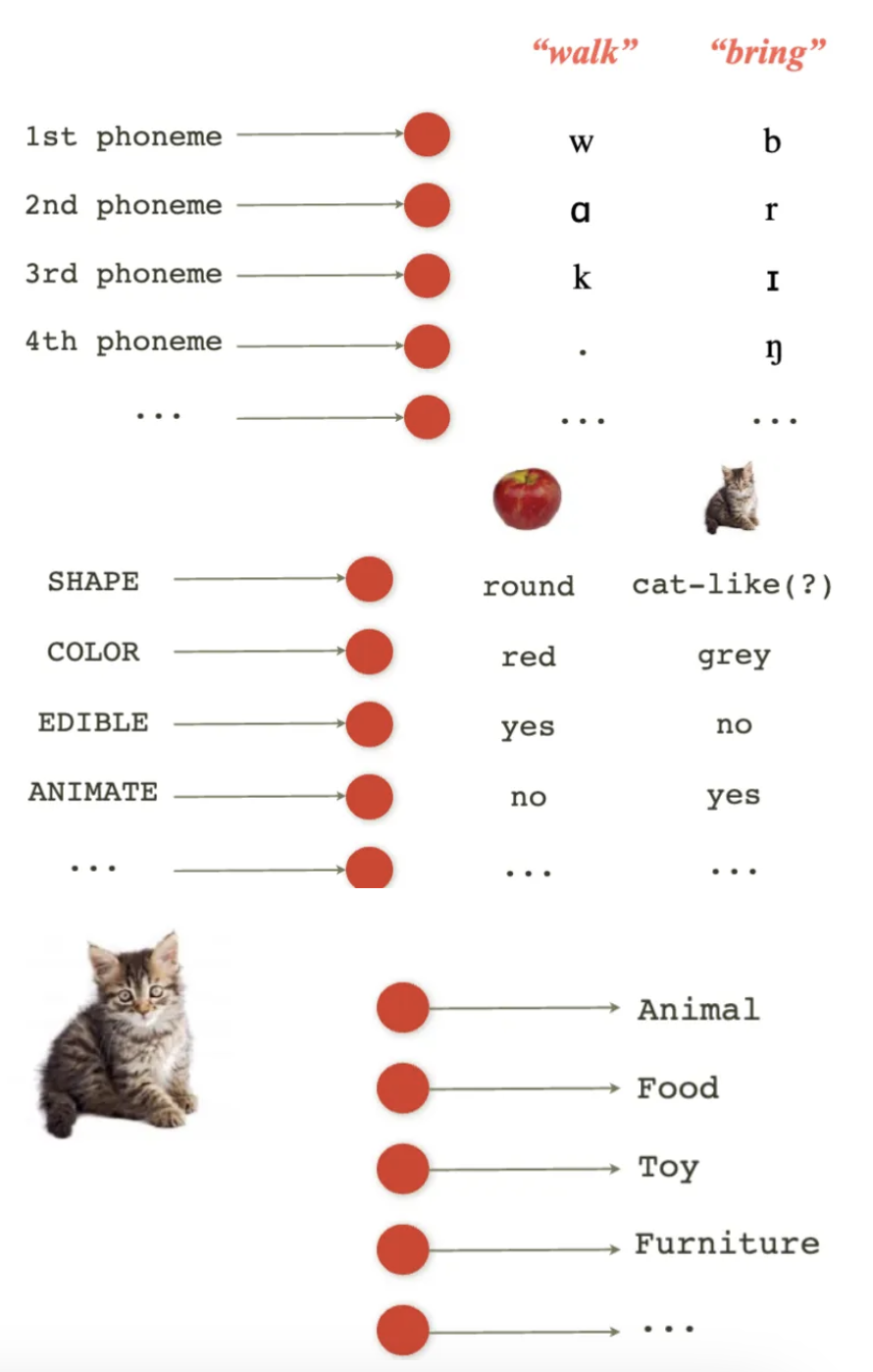
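A minimal sketch of learning by changing connection weights to reduce error, assuming a single sigmoid output unit trained with a gradient-descent (delta-rule-style) update. The feature encoding, the objects and the "is food" target are hypothetical; real connectionist models add hidden layers.

```python
import math

# Distributed input over shared feature nodes: [round, red, edible, animate] (hypothetical).
DATA = [
    ([1, 1, 1, 0], 1),  # apple    -> food
    ([1, 0, 1, 0], 1),  # orange   -> food
    ([0, 0, 0, 1], 0),  # cat      -> not food
    ([0, 1, 0, 1], 0),  # cardinal -> not food
]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def predict(weights, bias, x):
    """Output activation: weighted sum of the input pattern passed through a sigmoid."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train(data, lr=0.5, epochs=200):
    weights, bias = [0.0] * len(data[0][0]), 0.0
    errors = []  # total squared error per epoch
    for _ in range(epochs):
        total_error = 0.0
        for x, target in data:
            out = predict(weights, bias, x)
            err = target - out
            total_error += err ** 2
            # Gradient of squared error through the sigmoid: nudge weights to reduce error.
            delta = err * out * (1 - out)
            weights = [w + lr * delta * xi for w, xi in zip(weights, x)]
            bias += lr * delta
        errors.append(total_error)
    return weights, bias, errors


weights, bias, errors = train(DATA)
print(round(errors[0], 3), round(errors[-1], 3))  # error shrinks over training
```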

Explain distributed input representation (5)

Distributed Features: Features (shape, color, edible, animate) are spread across multiple nodes in a model, forming a distributed representation. These features work together to represent input data.

Input to Model: For tasks like predicting the English past tense, input words (e.g., "walk", "bring") are processed into distributed features, represented as nodes (circles in diagrams). Each node encodes one feature of the word.

Model Processing: The model uses phonemes as input (e.g., /w/, /ɔ/, /k/ for "walk") and generates outputs like past-tense forms by processing these features through its nodes. The result is a set of output features that together encode the word's past-tense form.

Feedback from Weights: As the model processes data, it adjusts weights based on feedback (errors), improving predictions over time.

New Word Generation: By distributing features and adjusting weights, the model generates new words or modifies existing ones based on input and learned patterns.

Key Points about the Concept:

Distributed Representations: These models rely on the idea that information, whether it's input or output, is not isolated but represented across multiple factors or features. For instance, when categorizing an object, the shape, color, and other attributes come together to form a holistic understanding of what the object is.

Neural Networks for Predicting Language Patterns: These models are widely used in language processing tasks such as predicting verb tenses based on phonemes.

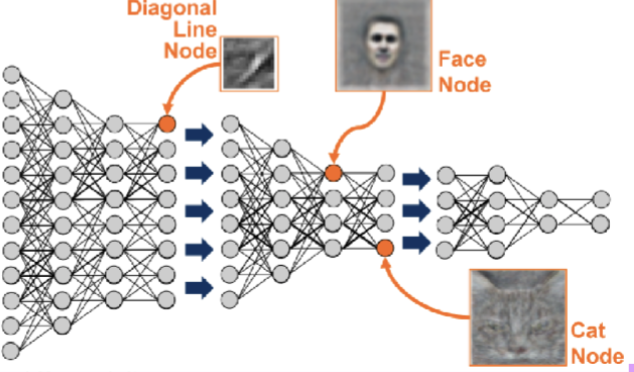
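A minimal sketch contrasting a distributed representation with a localist, symbol-like one-hot code, assuming hypothetical feature values and cosine similarity as the measure. Concepts that share features get similar patterns only in the distributed case.

```python
import math

FEATURES = ["round", "red", "edible", "animate", "has_fur", "small"]

# Distributed: each concept is a pattern of activation over shared feature nodes.
distributed = {
    "apple":  [1, 1, 1, 0, 0, 0],
    "cherry": [1, 1, 1, 0, 0, 1],
    "cat":    [0, 0, 0, 1, 1, 0],
}

# Localist (symbol-like): one dedicated node per concept, no shared features.
one_hot = {
    "apple":  [1, 0, 0],
    "cherry": [0, 1, 0],
    "cat":    [0, 0, 1],
}


def cosine(a, b):
    """Cosine similarity between two activation patterns."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


print(round(cosine(distributed["apple"], distributed["cherry"]), 3))  # 0.866
print(cosine(distributed["apple"], distributed["cat"]))               # 0.0
print(cosine(one_hot["apple"], one_hot["cherry"]))                    # 0.0
```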

What is deep learning (2)

a resurrection of the connectionist models due to higher computational power and better training techniques

adding more hidden layers increases model’s power in learning abstract complex structures, hence “deep” learning

ex. image processing models

certain parts of the model become specialized for certain features (shape, human vs cat face)

model recognizes which features are important
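A minimal sketch of what "adding more hidden layers" means structurally, assuming fully connected ReLU layers with random, untrained weights. It only shows the forward pass through a shallow versus a deep stack; the layer sizes are arbitrary and no training is performed.

```python
import random


def relu(values):
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """Fully connected layer: each unit takes a weighted sum of all inputs."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def make_network(layer_sizes, seed=0):
    """Random weights for each consecutive pair of layer sizes (untrained)."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers


def forward(network, x):
    """Pass the input through every layer; hidden layers apply ReLU."""
    for i, (weights, biases) in enumerate(network):
        x = dense(x, weights, biases)
        if i < len(network) - 1:
            x = relu(x)
    return x


shallow = make_network([4, 8, 2])       # 1 hidden layer
deep = make_network([4, 8, 8, 8, 2])    # 3 hidden layers: a "deeper" network
x = [1.0, 0.0, 1.0, 0.5]
print(len(shallow), len(deep))  # 2 4  (weight layers)
print(len(forward(deep, x)))    # 2    (output units)
```

Each extra hidden layer re-represents the previous layer's output, which is what lets trained deep networks build up increasingly abstract features.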

What are 3 common modeling frameworks?

Symbolic: first generation of models influenced by early AI

Symbolic systems of knowledge representation

Logic-based inference techniques

Probabilistic: following the success of statistical machine learning techniques

Combining descriptive power of symbolic models with the flexibility of the connectionist models

Neural: inspired by the architecture of the human brain

Distributed representation of knowledge

parallel processing of data

What are 4 things using computational methods to model cognitive processes enable us to do?

Using computational methods for modeling cognitive processes enables us to

study these processes through simulation

evaluate the plausibility of existing theories of cognition

explain the observed human behavior during a specific process

predict behavioral patterns that have not yet been experimentally investigated