Concurrent Systems Parallelism

1/40

There's no tags or description

Looks like no tags are added yet.

Name | Mastery | Learn | Test | Matching | Spaced |

|---|

No study sessions yet.

41 Terms

Sequential Processing

They have one “thread” of execution

One step follows another in sequence

One processor is all that is needed to run the algorithm

Burglar Alarm System

The system continually monitors:

The front door

The back door

The sliding glass door

he door to the deck

The kitchen windows

The living room windows

The bedroom windows

Non-sequential Examples

a house with a burglar alarm system.

car that has an onboard digital dashboard

Digital Dashboard

Calculates how fast you’re going and displays it on the speedometer

Checks your oil level

Checks your fuel level and calculates consumption

Monitors the heat of the engine and turns on a light if it is too hot

Monitors your alternator to make sure it is charging your battery

Concurrent Systems

Multiple tasks can be executed at the same time

The tasks may be duplicates of each other, or distinct tasks

The overall time to perform the series of tasks is reduced

Advantages of Concurrency

Concurrent processes can reduce duplication in code.

The overall runtime of the algorithm can be significantly reduced.

More real-world problems can be solved than with sequential algorithms alone.

Redundancy can make systems more reliable.

Disadvantages of Concurrency

Runtime is not always reduced, so careful planning is required

Concurrent algorithms can be more complex than sequential algorithms

Shared data can be corrupted •

Communications between tasks is needed

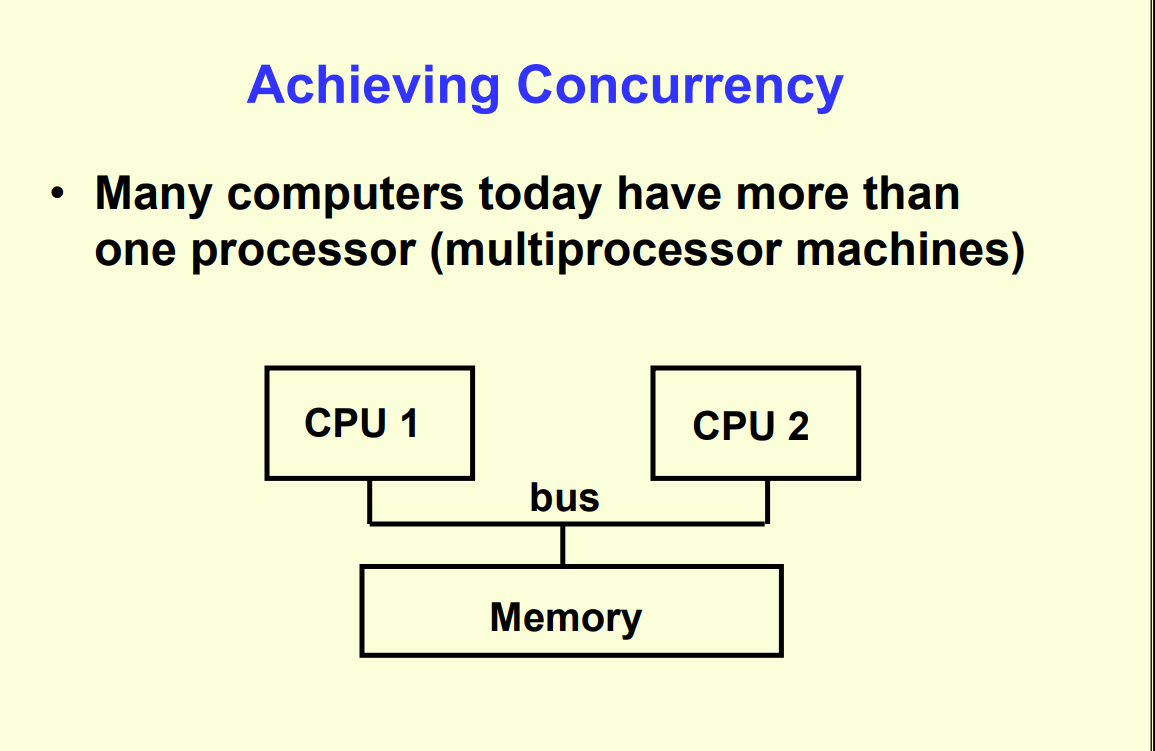

By having more than one processor (multiprocessor machines)

How does computers achieve concurrency?

Multiprocessor

What do you call this processor machine?

Concurrency with only one processor

The computer “juggles” jobs, swapping its attention to each in turn

“Time slicing” allows many users to get CPU resources

Tasks may be suspended while they wait for something, such as device I/O

Concurrency

is the execution of multiple tasks at the same time, regardless of the number of processors.

Parallelism

is the execution of multiple processors on the same task

Types of Concurrent Systems

Multiprogramming

Multiprocessing

Multitasking

Distributed Systems

Multiprogramming

Share a single CPU among many users or tasks.

May have a time-shared algorithm or a priority algorithm for determining which task to run next

Give the illusion of simultaneous processing through rapid swapping of tasks (interleaving)

Multiprocessing

Executes multiple tasks at the same time

Uses multiple processors to accomplish the tasks

Each processor may also timeshare among several tasks

Has a shared memory that is used by all the tasks

Multitasking

A single user can have multiple tasks running at the same time.

Can be done with one or more processors.

Used to be rare and for only expensive multiprocessing systems, but now most modern operating systems can do it.

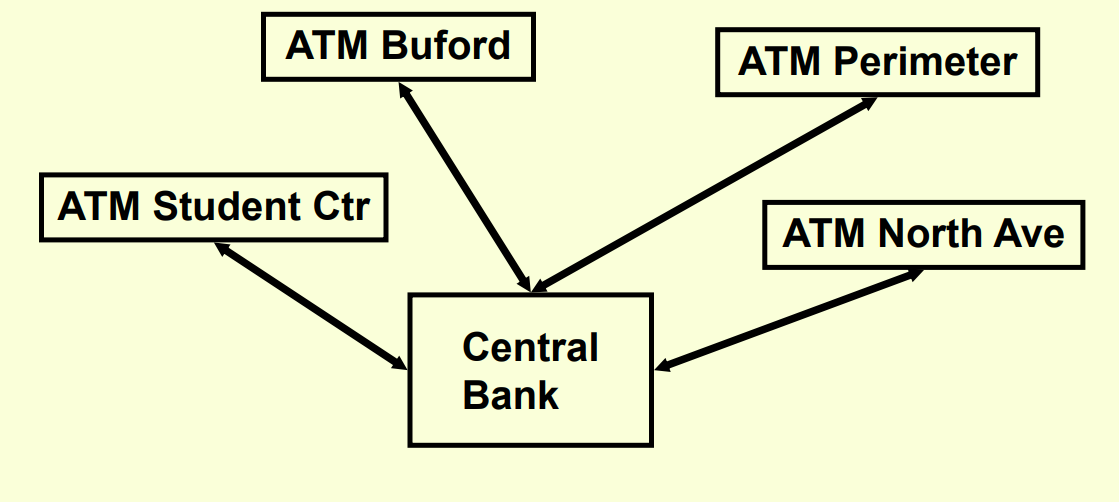

Distributed Systems

Multiple computers working together with no central program “in charge.”

Advantages

No bottlenecks from sharing processors

No central point of failure

Processing can be localized for efficiency

Disadvantages:

Complexity

Communication overhead

Distributed control

Parallelism

Using multiple processors to solve a single task.

Breaking the task into meaningful pieces

Doing the work on many processor

Coordinating and putting the pieces back together

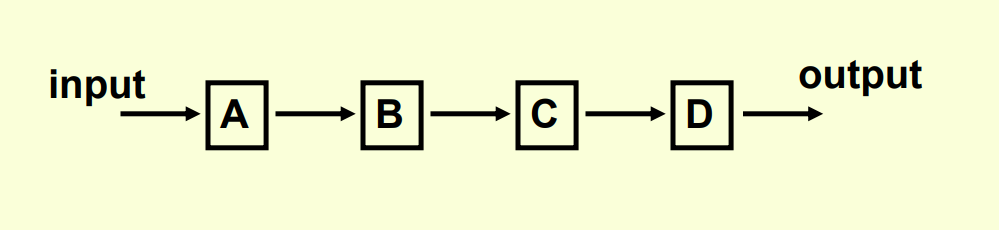

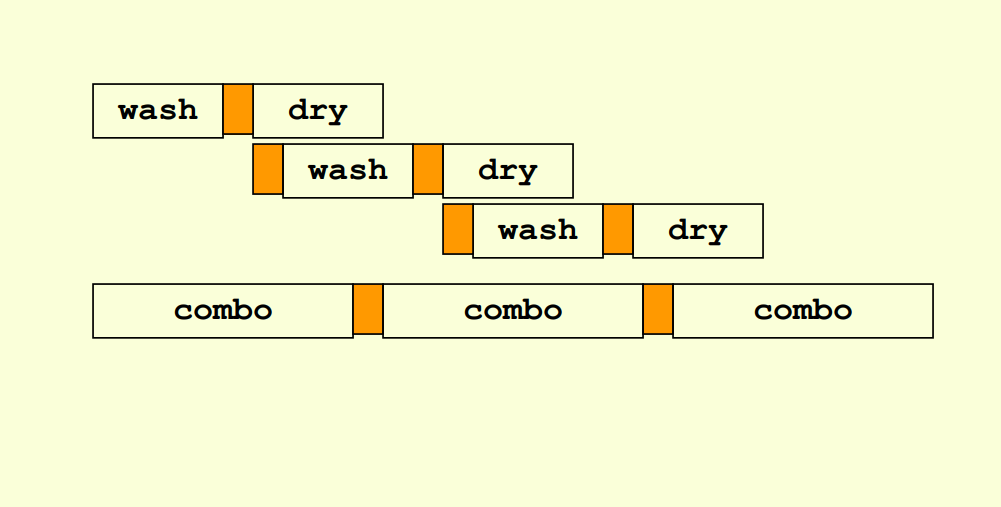

Pipeline Processing

Repeating a sequence of operations or pieces of a task.

Allocating each piece to a separate processor and chaining them together produces a pipeline, completing tasks faster.

One Load

Three Loads

Examples of Pipelined Tasks

Automobile manufacturing

Instruction processing within a computer

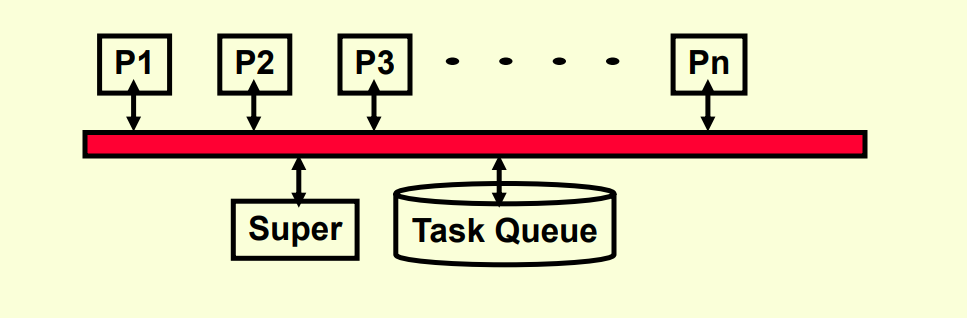

Task Queues

A supervisor processor maintains a queue of tasks to be performed in shared memory.

Each processor queries the queue, dequeues the next task and performs it.

Task execution may involve adding more tasks to the task queue.

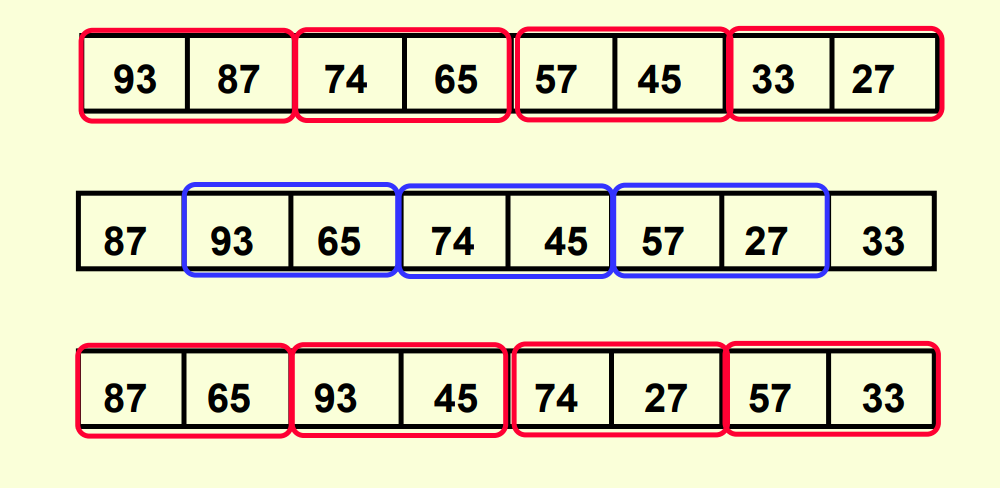

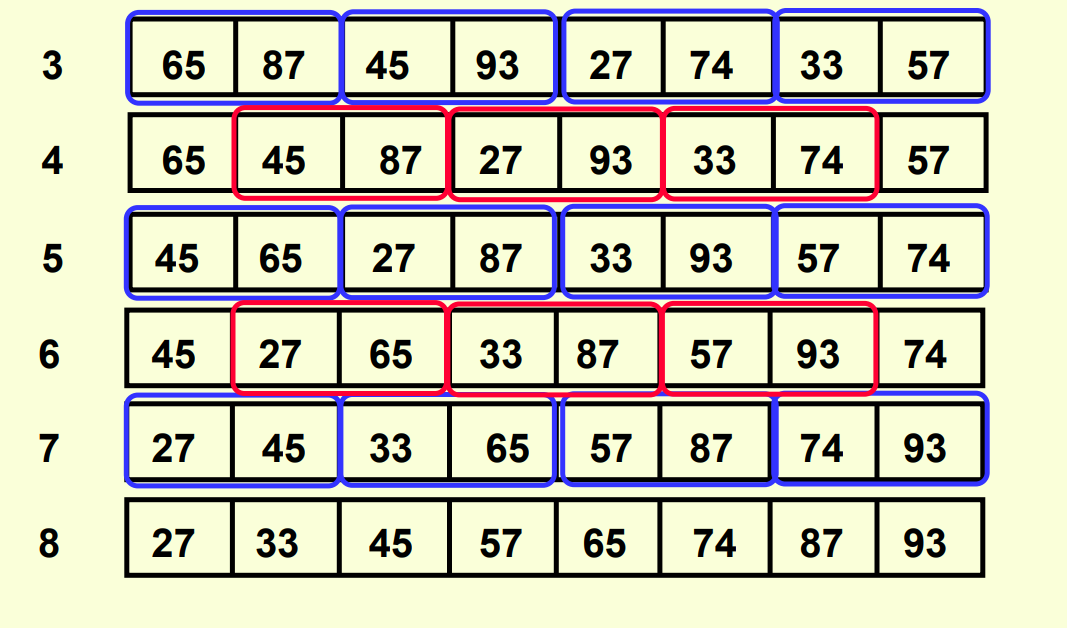

Parallel Bubblesort

We can use N/2 processors to do all the comparisons at once, “flopping” the pair-wise comparisons.

Runtime of Parallel Bubblesort

N2 time

Sequential bubblesort finishes in what time?

N time

Parallel bubblesort in what time?

Product Complexity

is the amount of work per “time chunk” multiplied by the number of “time chunks” – the total work done.

Parallelization

can reduce time, but it cannot reduce work. The product complexity cannot change or improve.

Improvement can parallelization provide

Given an O(NLogN) algorithm and Log N processors, the algorithm will take at least O(?) time.

Given an O(N3 ) algorithm and N processors, the algorithm will take at least O(?) time. O(N) time. O(N2 ) time.

Number of Processors

Processors are limited by hardware.

Typically, the number of processors is a power of 2

Usually: The number of processors is a constant factor, 2K

Conceivably: Networked computers joined as needed (ala Borg?).

Processors

are limited by hardware

power of 2

Typically, the number of processors is?

A program on one processor

Runs in X time

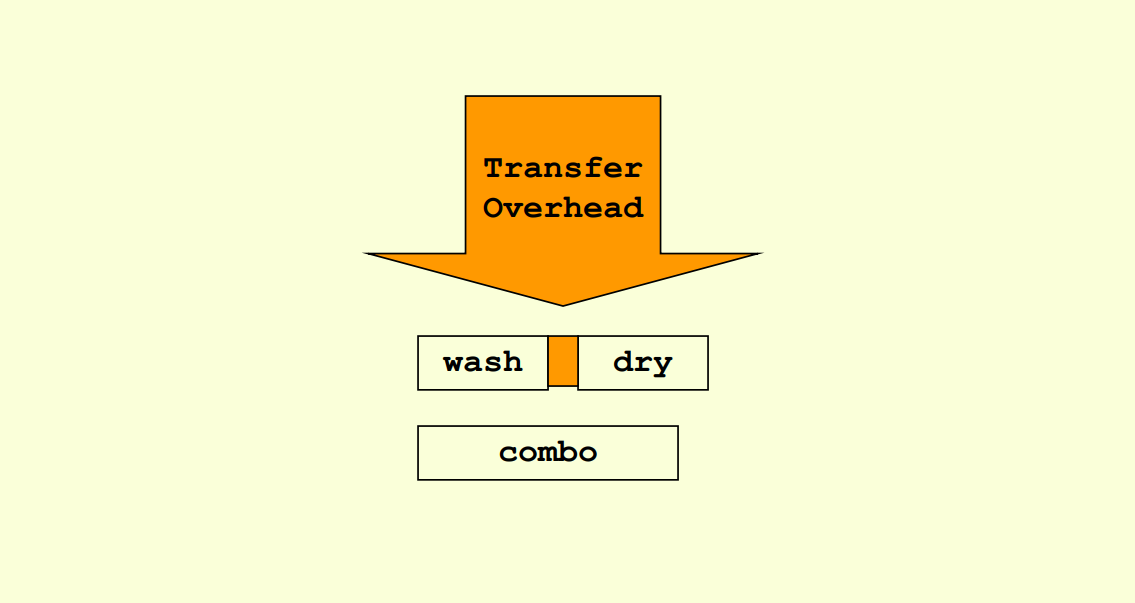

Adding another processor

Runs in no more than X/2 time

Realistically, it will run in X/2 + ε time because of overhead

Parallelization

is not free

controlled and coordinated

Processors must be?

special programming language

What type of programming language must be written for parallel systems?

What We Know about Tasks

Relatively isolated units of computation

Should be roughly equal in duration

Duration of the unit of work must be much greater than overhead time

Policy decisions and coordination required for shared data

Simpler algorithm are the easiest to parallelize