AWS Certified Machine Learning Engineer Associate

1/107

Earn XP

Description and Tags

Updated Mar 2025 | Missing qns - 29

Name | Mastery | Learn | Test | Matching | Spaced |

|---|

No study sessions yet.

108 Terms

A company is building a web-based AI application by using Amazon SageMaker. The application will provide the following capabilities and features: ML experimentation, training, a central model registry, model deployment, and model monitoring.

The application must ensure secure and isolated use of training data during the ML lifecycle. The training data is stored in Amazon S3.

The company needs to use the central model registry to manage different versions of models in the application.

Which action will meet this requirement with the LEAST operational overhead?

A. Create a separate Amazon Elastic Container Registry (Amazon ECR) repository for each model.

B. Use Amazon Elastic Container Registry (Amazon ECR) and unique tags for each model version.

C. Use the SageMaker Model Registry and model groups to catalog the models.

D. Use the SageMaker Model Registry and unique tags for each model version.

Answer:

C. Use the SageMaker Model Registry and model groups to catalog the models.

---

### Detailed Explanation

#### Requirements Summary

1. Central Model Registry: Manage multiple versions of models.

2. Secure & Isolated Training Data: Ensure data in S3 remains protected during the ML lifecycle.

3. Least Operational Overhead: Minimize manual processes, custom code, or complex setups.

---

### Analysis of the Correct Answer (Option C)

##### What It Does

- Uses SageMaker’s Model Registry to catalog models in model groups, where each group represents a logical model (e.g., "FraudDetectionModel") with versions (v1, v2, etc.).

##### Why It Meets the Requirements

1. Central Model Registry:

- Model Groups: Each group acts as a container for all versions of a model (e.g., "CustomerChurnModel-v1", "v2").

- Native Versioning: Automatically tracks versions, lineage, and metadata (training metrics, datasets, hyperparameters).

2. Security & Isolation:

- IAM Integration: Controls access to the Model Registry and S3 data via AWS IAM policies.

- S3 Isolation: Training data in S3 is secured using bucket policies and encryption, ensuring isolation during training and deployment.

3. Least Operational Overhead:

- No Custom Tooling: Fully managed by SageMaker, eliminating the need for manual tagging, ECR repository management, or external scripts.

- Automated Workflows: Direct integration with SageMaker Pipelines, training jobs, and deployment tools (e.g., one-click deployment to endpoints).

---

### Key Differentiators for Option C

1. Purpose-Built for Model Management:

- The Model Registry is explicitly designed for tracking model versions, approvals, and lifecycle stages (e.g., "Staging" vs. "Production").

2. End-to-End SageMaker Integration:

- Models trained in SageMaker can be registered automatically.

- Deployment is streamlined through built-in tools like SageMaker Endpoints or Batch Transform.

3. Governance and Compliance:

- Approval workflows ensure only validated models are deployed.

- Audit trails for model lineage (e.g., which data trained which version).

4. Scalability:

- Supports large-scale ML operations with minimal configuration.

---

### Analysis of Incorrect Options

##### Option A: Separate Amazon ECR Repositories for Each Model

- Problem:

- Misaligned Tool: ECR is designed for Docker containers, not model artifacts. Managing models here requires custom workflows to link containers to model versions.

- High Overhead: Creating and managing isolated ECR repositories for each model introduces complexity in permissions, cleanup, and tracking.

- No Native Versioning: Versioning would rely on manual tagging or external tools.

##### Option B: Amazon ECR + Unique Tags for Model Versions

- Problem:

- Manual Effort: Requires strict tagging discipline, which can lead to errors (e.g., inconsistent tags).

- No Lifecycle Management: ECR lacks native approval workflows, rollback capabilities, or staging environments for models.

- Operational Friction: Combines the overhead of ECR with manual tagging, doubling maintenance effort.

##### Option D: SageMaker Model Registry + Unique Tags

- Problem:

- Redundancy: The Model Registry already tracks versions via model groups. Adding tags duplicates functionality.

- Risk of Inconsistency: Tags could diverge from model group versions if not meticulously synchronized.

- Unnecessary Complexity: Enforcing tagging policies alongside model groups increases maintenance.

---

### Conclusion

Option C is the only solution that natively supports model versioning, governance, and security while minimizing operational overhead. The SageMaker Model Registry is purpose-built for managing ML models, whereas ECR-based solutions (A, B) are workarounds requiring custom tooling, and tagging (D) adds redundant effort. By using model groups, the company ensures a scalable, secure, and centralized model management system.

A company is building a web-based AI application by using Amazon SageMaker. The application will provide the following capabilities and features: ML experimentation, training, a central model registry, model deployment, and model monitoring.

The application must ensure secure and isolated use of training data during the ML lifecycle. The training data is stored in Amazon S3.

The company is experimenting with consecutive training jobs.

How can the company MINIMIZE infrastructure startup times for these jobs?

A. Use Managed Spot Training.

B. Use SageMaker managed warm pools.

C. Use SageMaker Training Compiler.

D. Use the SageMaker distributed data parallelism (SMDDP) library.

Answer:

B. Use SageMaker managed warm pools.

---

### Detailed Explanation

#### Requirements Summary

1. Minimize Infrastructure Startup Times: Reduce delays when launching consecutive training jobs.

2. Secure & Isolated Training Data: Ensure S3 data remains protected during the ML lifecycle.

---

### Analysis of the Correct Answer (Option B)

##### What It Does

- SageMaker Managed Warm Pools keep training instances (e.g., containers, ML compute resources) in a "warm" state after a training job completes. Subsequent jobs reuse these pre-initialized resources, avoiding cold starts.

##### Why It Meets the Requirements

1. Reduced Startup Time:

- Avoids Cold Starts: Traditional training jobs require provisioning new instances, installing dependencies, and loading containers, which can take several minutes. Warm pools skip this initialization phase.

- Reusable Resources: Consecutive jobs reuse the same warm pool, slashing startup latency to seconds instead of minutes.

2. Security & Isolation:

- Warm pools operate within the same SageMaker environment, inheriting IAM roles, VPC configurations, and S3 data access policies.

3. Cost Efficiency:

- While warm pools incur costs for idle instances, they are cheaper than repeated cold starts for frequent, consecutive jobs.

---

### Key Differentiators for Option B

1. Direct Impact on Startup Latency:

- Warm pools explicitly target infrastructure initialization delays, unlike other options that optimize training runtime.

2. Seamless Integration:

- Managed by SageMaker—no custom code or complex configurations required.

3. Scalability:

- Supports high-frequency experimentation by maintaining ready-to-use resources.

---

### Analysis of Incorrect Options

##### Option A: Managed Spot Training

- Problem:

- Focus on Cost, Not Speed: Managed Spot Training uses EC2 Spot Instances to reduce costs but does not reduce startup times.

- Increased Interruptions: Spot Instances can be reclaimed by AWS, causing job restarts and unpredictable delays.

##### Option C: SageMaker Training Compiler

- Problem:

- Optimizes Training Runtime, Not Startup: The Training Compiler speeds up model training by optimizing compute operations (e.g., GPU utilization). It has no impact on infrastructure provisioning times.

##### Option D: SageMaker Distributed Data Parallelism (SMDDP)

- Problem:

- Improves Training Efficiency, Not Startup: SMDDP accelerates distributed training across multiple GPUs/nodes but does not address the time required to provision resources.

---

### Conclusion

Option B is the only solution that directly minimizes infrastructure startup times by reusing pre-initialized resources via SageMaker managed warm pools. Other options focus on cost (A), training speed (C, D), or parallelism (D), which do not address the core requirement of reducing delays between consecutive job launches. Warm pools ensure rapid iteration during experimentation while maintaining security and isolation.

A company is building a web-based AI application by using Amazon SageMaker. The application will provide the following capabilities and features: ML experimentation, training, a central model registry, model deployment, and model monitoring.

The application must ensure secure and isolated use of training data during the ML lifecycle. The training data is stored in Amazon S3.

The company must implement a manual approval-based workflow to ensure that only approved models can be deployed to production endpoints.

Which solution will meet this requirement?

A. Use SageMaker Experiments to facilitate the approval process during model registration.

B. Use SageMaker ML Lineage Tracking on the central model registry. Create tracking entities for the approval process.

C. Use SageMaker Model Monitor to evaluate the performance of the model and to manage the approval.

D. Use SageMaker Pipelines. When a model version is registered, use the AWS SDK to change the approval status to "Approved."

Answer:

D. Use SageMaker Pipelines. When a model version is registered, use the AWS SDK to change the approval status to "Approved."

---

### Detailed Explanation

#### Requirements Summary

1. Manual Approval Workflow: Ensure only approved models are deployed to production.

2. Integration with Central Model Registry: Models must be registered and tracked.

---

### Analysis of the Correct Answer (Option D)

##### What It Does

- SageMaker Pipelines automates the ML workflow, including training, validation, and model registration.

- After a model version is registered in the SageMaker Model Registry, the AWS SDK (e.g., Boto3) is used to manually update the model’s approval status to "Approved."

##### Why It Meets the Requirements

1. Manual Approval:

- A human operator uses the AWS SDK (via scripts, CLI, or custom tools) to explicitly set the model version’s status to "Approved" in the Model Registry.

- This enforces a manual "gate" before deployment.

2. Central Model Registry Integration:

- The Model Registry natively supports approval statuses (e.g., "PendingManualApproval," "Approved," "Rejected").

- Deployment tools (e.g., SageMaker Endpoints) can be configured to only deploy "Approved" models.

3. Security:

- IAM policies restrict who can modify approval statuses, ensuring only authorized users approve models.

---

### Key Differentiators for Option D

1. Native Support for Approval Status:

- SageMaker Model Registry includes built-in fields for tracking approval, eliminating the need for custom tagging or external systems.

2. Flexibility in Workflow Design:

- Pipelines automate model registration, while the SDK allows manual approval outside the pipeline (e.g., via human review).

3. Auditability:

- Approval actions via the SDK are logged in AWS CloudTrail, providing an audit trail for compliance.

---

### Analysis of Incorrect Options

##### Option A: SageMaker Experiments

- Problem:

- Focus on Experiment Tracking: Experiments tracks training runs, parameters, and metrics but lacks native approval workflows.

- No Enforcement: Cannot prevent unapproved models from being deployed.

##### Option B: ML Lineage Tracking

- Problem:

- Audit Tool, Not Approval System: Lineage Tracking records model artifacts, datasets, and processes for compliance but does not enforce approval gates.

- Manual Effort: Creating "tracking entities" for approvals would require custom code and lacks integration with deployment controls.

##### Option C: SageMaker Model Monitor

- Problem:

- Post-Deployment Monitoring: Model Monitor evaluates live model performance (e.g., data drift) but does not govern pre-deployment approvals.

- Misaligned Purpose: Approval occurs after deployment, violating the requirement.

---

### Conclusion

Option D is the only solution that directly implements a manual approval workflow using SageMaker’s native capabilities. By combining Pipelines (for automated model registration) and the AWS SDK (for manual status updates), the company ensures that only approved models are deployed. Other options lack enforcement mechanisms or focus on unrelated stages of the ML lifecycle (tracking, monitoring).

A company is building a web-based AI application by using Amazon SageMaker. The application will provide the following capabilities and features: ML experimentation, training, a central model registry, model deployment, and model monitoring.

The application must ensure secure and isolated use of training data during the ML lifecycle. The training data is stored in Amazon S3.

The company needs to run an on-demand workflow to monitor bias drift for models that are deployed to real-time endpoints from the application.

Which action will meet this requirement?

A. Configure the application to invoke an AWS Lambda function that runs a SageMaker Clarify job.

B. Invoke an AWS Lambda function to pull the sagemaker-model-monitor-analyzer built-in SageMaker image.

C. Use AWS Glue Data Quality to monitor bias.

D. Use SageMaker notebooks to compare the bias.

Answer:

A. Configure the application to invoke an AWS Lambda function that runs a SageMaker Clarify job.

---

### Detailed Explanation

#### Requirements Summary

1. On-Demand Bias Drift Monitoring: Detect bias drift in models deployed to real-time endpoints.

2. Automated Workflow: Trigger monitoring programmatically.

3. Integration with SageMaker: Ensure compatibility with deployed endpoints.

---

### Analysis of the Correct Answer (Option A)

##### What It Does

- AWS Lambda invokes a SageMaker Clarify job on demand (e.g., via API Gateway, scheduled events, or application triggers).

- Clarify analyzes live endpoint data for bias metrics (e.g., disparate impact, class imbalance) and compares them to baseline training data.

##### Why It Meets the Requirements

1. Bias-Specific Analysis:

- SageMaker Clarify is purpose-built to measure bias in datasets and model predictions, including pre-training and post-deployment drift.

- Metrics like Class Imbalance (CI), Difference in Positive Proportions (DPP), and Conditional Demographic Disparity (CDD) are tracked.

2. On-Demand Workflow:

- Lambda enables event-driven execution (e.g., triggered by user requests, time intervals, or new data batches).

- Results are stored in S3 or visualized in SageMaker Studio for review.

3. Security & Isolation:

- Clarify jobs run in SageMaker-managed environments with IAM roles, ensuring secure access to S3 data and endpoints.

---

### Key Differentiators for Option A

1. Native Bias Monitoring:

- Clarify directly addresses the requirement to measure bias drift, unlike generic monitoring tools.

2. Automation:

- Lambda + Clarify creates a serverless, scalable workflow without manual intervention.

3. Real-Time Endpoint Integration:

- Clarify can ingest live endpoint data captured via SageMaker’s Data Capture feature.

---

### Analysis of Incorrect Options

##### Option B: SageMaker Model Monitor Built-In Analyzer

- Problem:

- Focuses on Data/Model Quality: The sagemaker-model-monitor-analyzer image checks for data drift (e.g., feature distributions) and model performance (e.g., accuracy), but not bias metrics.

- No Native Bias Tracking: Requires custom code to replicate Clarify’s bias analysis.

##### Option C: AWS Glue Data Quality

- Problem:

- Data Profiling, Not Bias: Glue Data Quality validates datasets for completeness, uniqueness, and schema compliance but lacks model bias evaluation.

##### Option D: SageMaker Notebooks

- Problem:

- Manual Process: Notebooks require human intervention to run bias comparisons, violating the "on-demand workflow" requirement.

- No Integration with Endpoints: Pulling live endpoint data and analyzing bias would require custom scripting.

---

### Conclusion

Option A is the only solution that combines automated, on-demand execution (via Lambda) with specialized bias drift monitoring (via SageMaker Clarify). Other options either lack bias-specific capabilities (B, C) or rely on manual processes (D). By using Clarify, the company ensures compliance with fairness standards while maintaining a secure, serverless workflow.

A company stores historical data in .csv files in Amazon S3. Only some of the rows and columns in the .csv files are populated. The columns are not labeled. An ML engineer needs to prepare and store the data so that the company can use the data to train ML models.

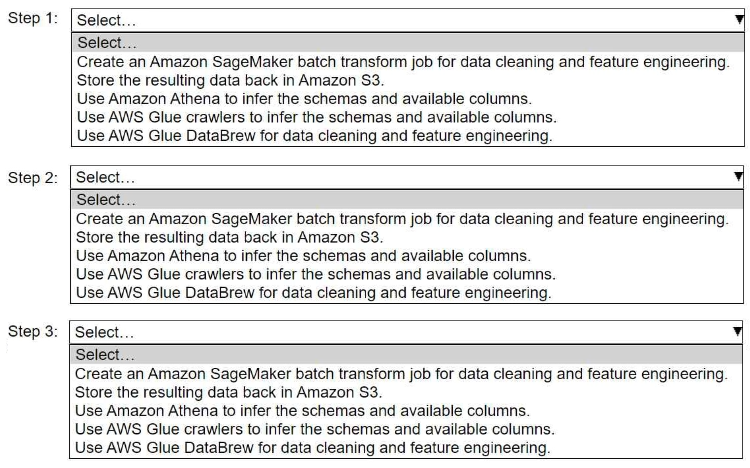

Select and order the correct steps from the following list to perform this task. Each step should be selected one time or not at all. (Select and order three.)

• Create an Amazon SageMaker batch transform job for data cleaning and feature engineering.

• Store the resulting data back in Amazon S3.

• Use Amazon Athena to infer the schemas and available columns.

• Use AWS Glue crawlers to infer the schemas and available columns.

• Use AWS Glue DataBrew for data cleaning and feature engineering.

Answer

Use AWS Glue crawlers to infer the schemas and available columns.

Use AWS Glue DataBrew for data cleaning and feature engineering.

Store the resulting data back in Amazon S3.

Detailed Explanation - Requirements Summary

The company has historical .csv files stored in Amazon S3 that contain incomplete data (some rows and columns are empty) and lack labeled columns. The goal is to prepare this raw data so it can be used for training ML models. The data preparation steps should:

Identify and label the data schema (column names, types, and structures).

Clean the data (handling missing values, normalizing formats, and applying transformations).

Store the processed data back in S3 for ML model training.

AWS provides multiple services for data preparation, but selecting the right ones requires understanding their specific roles and capabilities.

Analysis of the Correct Answer - What It Does/Why It Meets the Requirements

Use AWS Glue crawlers to infer the schemas and available columns:

AWS Glue is a fully managed ETL service that can automatically infer the schema of structured and semi-structured data.

AWS Glue Crawlers scan files in Amazon S3, detect column structures, and generate a schema in the AWS Glue Data Catalog.

Since the

.csvfiles lack labeled columns, this step is critical for structuring the data correctly before cleaning.

Use AWS Glue DataBrew for data cleaning and feature engineering:

AWS Glue DataBrew is a visual data preparation tool that allows users to apply transformations like:

Handling missing values.

Standardizing formats (e.g., converting dates to a common format).

Removing duplicates and normalizing column names.

This step ensures that the dataset is clean and usable for machine learning.

Store the resulting data back in Amazon S3:

The processed data needs to be stored in Amazon S3, where it can be accessed for training ML models using Amazon SageMaker or other ML services.

AWS services such as SageMaker and Athena can work with structured data stored in S3.

Key Differentiators for Option

AWS Glue Crawlers vs. Amazon Athena for Schema Inference:

Glue Crawlers automate schema discovery and create metadata catalogs.

Athena is primarily a SQL query engine and does not automatically generate schemas.

AWS Glue DataBrew vs. SageMaker Batch Transform for Data Cleaning:

DataBrew is designed for data preparation, including transformations and cleaning.

SageMaker Batch Transform is used for batch inference, not data cleaning.

Amazon S3 as a Storage Layer:

Amazon S3 is the most suitable choice for storing cleaned data, as it integrates with AWS ML services.

Analysis of Incorrect Options

Use Amazon Athena to infer the schemas and available columns:

Athena is an interactive query service that allows running SQL queries on data stored in S3.

However, Athena does not infer schema automatically—schema must be defined manually or through AWS Glue Crawlers.

Create an Amazon SageMaker batch transform job for data cleaning and feature engineering:

SageMaker Batch Transform is used to apply ML models for inference, not for data preprocessing.

It assumes that the data is already cleaned and structured, making it unsuitable for this task.

Conclusion

To prepare raw .csv data for ML training, the best approach is to first infer the schema using AWS Glue Crawlers, clean and transform the data with AWS Glue DataBrew, and finally store the processed data back in Amazon S3. This workflow ensures that the data is structured, clean, and ready for ML training while leveraging AWS-native tools optimized for large-scale data processing.

An ML engineer needs to use Amazon SageMaker Feature Store to create and manage features to train a model.

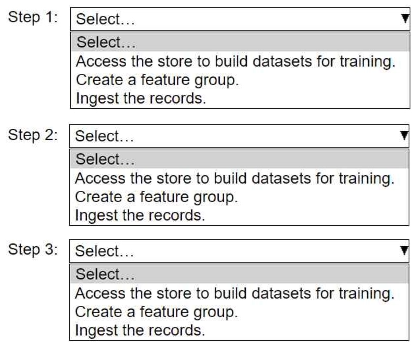

Select and order the steps from the following list to create and use the features in Feature Store. Each step should be selected one time. (Select and order three.)

• Access the store to build datasets for training.

• Create a feature group.

• Ingest the records.

Answer

Create a feature group.

Ingest the records.

Access the store to build datasets for training.

Detailed Explanation - Requirements Summary

Amazon SageMaker Feature Store is a purpose-built repository to manage, store, and retrieve features for machine learning (ML) models. The goal is to create a structured and reusable feature store that can be accessed efficiently during both training and inference. The key steps involved in setting up and using SageMaker Feature Store are:

Defining a feature group to organize and manage related features.

Ingesting data into the feature store.

Accessing the stored features to build datasets for model training.

These steps ensure that ML models can utilize well-defined and versioned feature sets during training and inference.

Analysis of the Correct Answer - What It Does/Why It Meets the Requirements

Create a feature group:

A Feature Group is a logical grouping of features in SageMaker Feature Store, similar to a table in a database.

It defines the schema, including feature names, data types, and identifiers.

Feature groups can be stored in two modes:

Online store: For real-time low-latency access.

Offline store: For batch processing and training.

This step is fundamental because it establishes the structure in which feature data will be stored.

Ingest the records:

Once the feature group is created, feature data must be ingested into the store.

Features can be updated in real-time (online store) or in batches (offline store).

Ingestion ensures that feature values are available for training and inference.

This step includes timestamping, ensuring that the latest feature values are always accessible for training.

Access the store to build datasets for training:

After features are stored, they need to be retrieved for model training.

This is done by querying the offline store, which maintains historical feature versions.

The accessed feature dataset can be used to train models in Amazon SageMaker or other ML frameworks.

Key Differentiators for Option

Creating a Feature Group First

A feature group provides the structure for feature data.

Without defining it, there is no organized place to ingest or retrieve features.

Ingesting Records Second

Data must be ingested before it can be accessed for training.

This step ensures that SageMaker Feature Store has relevant data to use.

Accessing the Store Last

Once features are ingested, they can be queried from the offline store for training datasets.

Analysis of Incorrect Options

Access the store to build datasets for training (as the first step):

Incorrect because there are no features stored at this stage.

The feature group must be created and populated before accessing it.

Ingest the records (before creating a feature group):

Incorrect because there is no defined structure to store the features.

Without a feature group, SageMaker Feature Store does not know how to handle ingested data.

Conclusion

The correct order for using Amazon SageMaker Feature Store is:

Create a feature group to define the schema and structure.

Ingest the records to populate the feature store with data.

Access the store to build datasets for training by retrieving features from the offline store.

This workflow ensures an organized, efficient, and scalable feature management process, enabling high-quality ML model training.

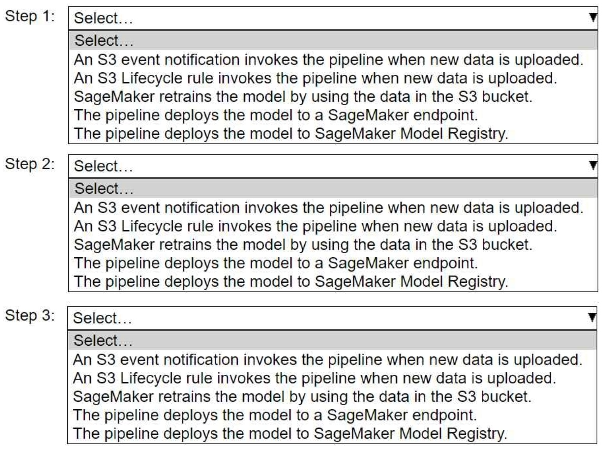

A company wants to host an ML model on Amazon SageMaker. An ML engineer is configuring a continuous integration and continuous delivery (Cl/CD) pipeline in AWS CodePipeline to deploy the model. The pipeline must run automatically when new training data for the model is uploaded to an Amazon S3 bucket.

Select and order the pipeline's correct steps from the following list. Each step should be selected one time or not at all. (Select and order three.)

• An S3 event notification invokes the pipeline when new data is uploaded.

• S3 Lifecycle rule invokes the pipeline when new data is uploaded.

• SageMaker retrains the model by using the data in the S3 bucket.

• The pipeline deploys the model to a SageMaker endpoint.

• The pipeline deploys the model to SageMaker Model Registry.

Answer

An S3 event notification invokes the pipeline when new data is uploaded.

SageMaker retrains the model by using the data in the S3 bucket.

The pipeline deploys the model to SageMaker Model Registry.

Detailed Explanation - Requirements Summary

The company is setting up a CI/CD pipeline in AWS CodePipeline to automate ML model training and deployment. The goal is to trigger the pipeline when new training data is uploaded, retrain the model with this data, and then manage the model lifecycle efficiently.

The required steps must ensure:

Automatic pipeline execution when new data arrives

Model retraining using the latest dataset

Model versioning and storage for controlled deployments

This setup enables continuous model training and deployment with minimal manual intervention.

Analysis of the Correct Answer - What It Does/Why It Meets the Requirements

An S3 event notification invokes the pipeline when new data is uploaded.

Why? The CI/CD pipeline should start automatically when new training data is added.

How? Amazon S3 event notifications can send triggers to AWS services like AWS Lambda, Amazon SNS, or AWS CodePipeline.

Alternative? AWS Step Functions could also orchestrate this workflow, but S3 event notifications are a standard trigger mechanism for ML pipelines.

SageMaker retrains the model by using the data in the S3 bucket.

Why? The model needs to be retrained with the new dataset before deployment.

How? SageMaker Training Jobs can be triggered by CodePipeline to use the updated dataset from S3.

Alternative? AWS Step Functions could manage this step, but CodePipeline is commonly used for CI/CD automation.

The pipeline deploys the model to SageMaker Model Registry.

Why? Model Registry is essential for model versioning, tracking, and governance before deployment.

How? SageMaker Model Registry stores different versions of the model, enabling model selection for production deployment.

Alternative? Direct deployment to a SageMaker endpoint is an option, but Model Registry allows better control over model promotions and rollback strategies.

Key Differentiators for Option

S3 Event Notification vs. S3 Lifecycle Rule

S3 Event Notification triggers pipelines instantly.

S3 Lifecycle Rules are used for managing storage (e.g., moving old data to Glacier) and cannot invoke pipelines.

Deploying to SageMaker Model Registry vs. SageMaker Endpoint

SageMaker Model Registry is used for storing, versioning, and managing models.

Deploying to a SageMaker Endpoint is done only when a model is approved for production use.

The CI/CD pipeline should store the retrained model first, and a separate process can decide when to deploy.

Analysis of Incorrect Options

S3 Lifecycle rule invokes the pipeline when new data is uploaded.

Incorrect because:

S3 Lifecycle rules are used to move, archive, or delete objects, not trigger events.

Lifecycle rules cannot start a CodePipeline or SageMaker job.

The pipeline deploys the model to a SageMaker endpoint.

Incorrect because:

CI/CD pipelines typically first store models in SageMaker Model Registry to manage versions.

Deployment to an endpoint should be a controlled process, often requiring approval or testing before production deployment.

Conclusion

The best approach for automating model retraining and management using AWS CodePipeline and SageMaker is:

Trigger the pipeline using S3 event notifications.

Retrain the model using updated data from S3.

Store the model in SageMaker Model Registry for versioning and controlled deployment.

This workflow ensures continuous and automated model training while maintaining proper governance over deployed models.

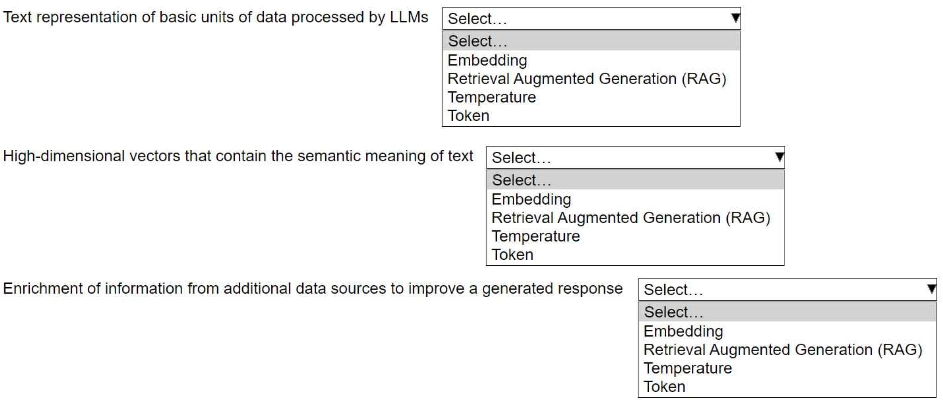

An ML engineer is building a generative AI application on Amazon Bedrock by using large language models (LLMs).

Select the correct generative AI term from the following list for each description. Each term should be selected one time or not at all. (Select three.)

• Embedding

• Retrieval Augmented Generation (RAG)

• Temperature

• Token

Answer

Text representation of basic units of data processed by LLMs → Token

High-dimensional vectors that contain the semantic meaning of text → Embedding

Enrichment of information from additional data sources to improve a generated response → Retrieval Augmented Generation (RAG)

Detailed Explanation - Requirements Summary

The ML engineer is working with Amazon Bedrock and LLMs for a generative AI application. The task is to correctly match key generative AI terms with their respective definitions.

LLMs process text data in the form of tokens.

Semantic representations of text are stored as embeddings.

Retrieval Augmented Generation (RAG) enhances responses by incorporating external knowledge sources.

These concepts are critical for improving text generation quality, retrieval efficiency, and model interpretability in AI applications.

Analysis of the Correct Answer - What It Does/Why It Meets the Requirements

Token - Text representation of basic units of data processed by LLMs

What it does:

A token is a basic unit of text used by LLMs.

It can be a word, subword, or character, depending on the tokenizer.

Why it fits:

LLMs do not process entire sentences at once; they convert text into a sequence of tokens before processing.

Embedding - High-dimensional vectors that contain the semantic meaning of text

What it does:

Embeddings are numerical representations of text that capture meaning and relationships.

They map words or sentences to high-dimensional vector spaces.

Why it fits:

LLMs and search engines use embeddings for semantic search, clustering, and recommendation systems.

Retrieval Augmented Generation (RAG) - Enrichment of information from additional data sources to improve a generated response

What it does:

RAG improves LLM outputs by retrieving relevant information from external sources (e.g., databases, documents, APIs).

It combines retrieval-based search with generative AI for more accurate and contextually rich responses.

Why it fits:

Many enterprise LLM applications use RAG to ensure up-to-date responses instead of relying only on pretrained data.

Key Differentiators for Option

Token vs. Embedding:

A token is a unit of text (e.g., "dog" → token).

An embedding is a vector representing meaning (e.g., "dog" → [1.2, 0.5, -0.7]).

RAG vs. Temperature:

RAG improves model output by fetching relevant information from external knowledge sources.

Temperature controls randomness in text generation (lower = more deterministic, higher = more creative).

Analysis of Incorrect Options

Temperature (Incorrect)

Why it doesn’t fit?

Temperature controls randomness in generated text but is not related to retrieving external data.

Where is it used?

A lower temperature (e.g., 0.2) makes responses more predictable.

A higher temperature (e.g., 1.0) adds diversity and randomness.

Token for High-dimensional vectors (Incorrect)

Why it doesn’t fit?

Tokens represent textual units, but do not capture relationships or semantic meaning.

Correct term: Embeddings are used for this purpose.

Conclusion

The correct matches for each description are:

Token → Smallest unit of text processed by LLMs.

Embedding → High-dimensional vector representation of text meaning.

Retrieval Augmented Generation (RAG) → Enhancing responses with additional retrieved data.

This mapping ensures an accurate conceptual understanding of LLM processing, vectorization, and retrieval techniques in Amazon Bedrock.

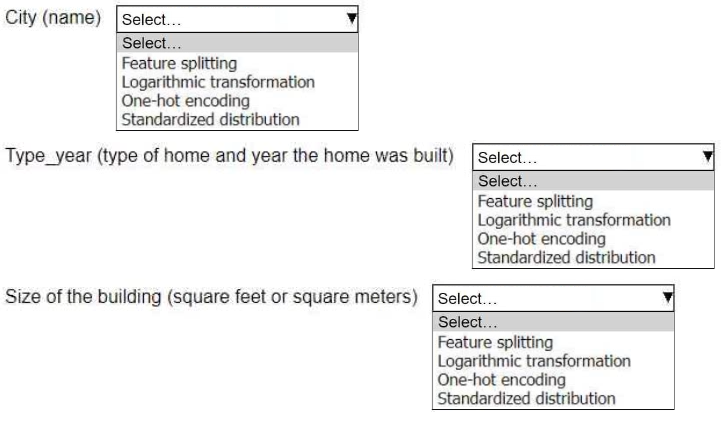

An ML engineer is working on an ML model to predict the prices of similarly sized homes. The model will base predictions on several features The ML engineer will use the following feature engineering techniques to estimate the prices of the homes:

• Feature splitting

• Logarithmic transformation

• One-hot encoding

• Standardized distribution

Select the correct feature engineering techniques for the following list of features. Each feature engineering technique should be selected one time or not at all (Select three.)

Answer

City (name) → One-hot encoding

Type_year (type of home and year the home was built) → Feature splitting

Size of the building (square feet or square meters) → Logarithmic transformation

Detailed Explanation - Requirements Summary

The ML engineer is working on a home price prediction model, requiring proper feature engineering techniques to handle different types of data:

Categorical data (City name) needs encoding for machine learning models.

Composite features (Type and year built) should be split into separate features.

Numeric features with large ranges (Size of the building) benefit from transformations like log scaling to improve model stability.

Each feature requires a specific transformation to ensure optimal ML model performance.

Analysis of the Correct Answer - What It Does/Why It Meets the Requirements

City (name) → One-hot encoding

What it does:

Converts categorical variables (e.g., "New York", "Los Angeles") into binary vectors.

Why it fits:

City names are nominal categories with no inherent order, so one-hot encoding is the best choice.

Example Transformation:

Original:

"New York", "Los Angeles", "Chicago"One-hot encoded:

[1,0,0],[0,1,0],[0,0,1]

Type_year (type of home and year the home was built) → Feature splitting

What it does:

Splits a composite feature (e.g., "Single Family, 1995") into two distinct features: home type and year built.

Why it fits:

Separating categorical and numerical values improves model interpretability.

Example Transformation:

Original:

"Apartment, 2010"Split into:

"Apartment"(categorical) and2010(numerical)

Size of the building (square feet or square meters) → Logarithmic transformation

What it does:

Applies a log transformation to reduce skewness in numeric data.

Why it fits:

Home sizes vary widely (e.g., 500 sq ft vs. 5000 sq ft), and a log transformation helps normalize the distribution.

Example Transformation:

Original:

500, 1000, 5000Log Transformed:

log(500) ≈ 6.2, log(1000) ≈ 6.9, log(5000) ≈ 8.5

Key Differentiators for Option

One-hot encoding vs. Feature splitting:

One-hot encoding is for pure categorical data.

Feature splitting is for composite features containing multiple attributes.

Logarithmic transformation vs. Standardized distribution:

Log transformation reduces the impact of outliers in skewed numerical data.

Standardized distribution scales numerical features to a mean of 0 and standard deviation of 1, but doesn’t address skewness.

Analysis of Incorrect Options

Feature splitting for City (Incorrect)

Why it doesn’t fit?

Cities are not composite attributes needing splitting.

Correct choice: One-hot encoding because "New York" vs. "Los Angeles" is a categorical distinction.

Standardized distribution for Size of the building (Incorrect)

Why it doesn’t fit?

Standardization adjusts the scale but does not correct data skew (large homes vs. small homes).

Correct choice: Logarithmic transformation better handles extreme size differences.

One-hot encoding for Type_year (Incorrect)

Why it doesn’t fit?

The year built is numerical and should not be encoded as a category.

Correct choice: Feature splitting separates "Type" (categorical) from "Year" (numerical).

Conclusion

The correct feature engineering techniques for the dataset are:

One-hot encoding for city names (categorical variable).

Feature splitting for type and year built (composite feature).

Logarithmic transformation for building size (numeric variable with large variance).

Using these methods ensures better model interpretability, reduced skewness, and improved prediction accuracy for the home pricing model.

An ML engineer is developing a fraud detection model on AWS. The training dataset includes transaction logs, customer profiles, and tables from an on-premises MySQL database. The transaction logs and customer profiles are stored in Amazon S3.

The dataset has a class imbalance that affects the learning of the model's algorithm. Additionally, many of the features have interdependencies. The algorithm is not capturing all the desired underlying patterns in the data.

Which AWS service or feature can aggregate the data from the various data sources?

A. Amazon EMR Spark jobs

B. Amazon Kinesis Data Streams

C. Amazon DynamoDB

D. AWS Lake Formation

Answer:

D. AWS Lake Formation

---

### Detailed Explanation

#### Requirements Summary

1. Aggregate Data from Multiple Sources: Combine transaction logs (Amazon S3), customer profiles (Amazon S3), and on-premises MySQL tables into a unified dataset.

2. Class Imbalance & Feature Engineering: While not directly addressed by aggregation, centralized data is critical for preprocessing.

---

### Analysis of the Correct Answer (Option D)

##### What It Does

- AWS Lake Formation simplifies building and managing a data lake by aggregating structured/unstructured data from diverse sources (S3, databases, on-premises systems) into a centralized repository (e.g., Amazon S3).

- Key Features:

- Crawlers: Automatically discover and catalog data from S3 and MySQL (via AWS Glue connectors).

- ETL Jobs: Transform and merge data using AWS Glue.

- Centralized Permissions: Securely manage access across datasets.

##### Why It Meets the Requirements

1. Multi-Source Aggregation:

- S3 Integration: Directly ingest transaction logs and customer profiles.

- On-Premises MySQL: Use AWS Glue JDBC connectors to pull data into the lake.

2. Unified Data Preparation:

- Clean, deduplicate, and merge datasets in a single location for ML training.

3. Security:

- Lake Formation enforces fine-grained access controls (e.g., column-level permissions) on aggregated data.

---

### Key Differentiators for Option D

1. Purpose-Built for Data Lakes:

- Lake Formation is designed to unify disparate data sources into a governed, queryable repository.

2. Automation:

- Glue crawlers auto-detect schemas, reducing manual effort to structure data.

3. Scalability:

- Handles large volumes of data across hybrid (cloud + on-premises) environments.

---

### Analysis of Incorrect Options

##### Option A: Amazon EMR Spark Jobs

- Problem:

- Manual Coding Required: While EMR can process data from S3 and MySQL via Spark, it requires writing custom jobs to extract, transform, and join datasets.

- Operational Overhead: Managing clusters and ETL pipelines adds complexity compared to Lake Formation’s managed workflows.

##### Option B: Amazon Kinesis Data Streams

- Problem:

- Real-Time Focus: Kinesis streams data in real time (e.g., clickstreams), which is irrelevant for batch aggregation of existing S3 files and MySQL tables.

##### Option C: Amazon DynamoDB

- Problem:

- NoSQL Database Use Case: DynamoDB is optimized for low-latency transactional workloads, not batch data aggregation or large-scale ML datasets.

---

### Conclusion

AWS Lake Formation (D) is the optimal choice for aggregating data from S3 and on-premises MySQL into a centralized, governed data lake. It automates schema discovery, ETL, and access controls, enabling the ML engineer to focus on resolving class imbalance and feature interdependencies with a unified dataset. Other options either require manual coding (A), address unrelated use cases (B, C), or lack native hybrid data integration.

An ML engineer is developing a fraud detection model on AWS. The training dataset includes transaction logs, customer profiles, and tables from an on-premises MySQL database. The transaction logs and customer profiles are stored in Amazon S3.

The dataset has a class imbalance that affects the learning of the model's algorithm. Additionally, many of the features have interdependencies. The algorithm is not capturing all the desired underlying patterns in the data.

After the data is aggregated, the ML engineer must implement a solution to automatically detect anomalies in the data and to visualize the result.

Which solution will meet these requirements?

A. Use Amazon Athena to automatically detect the anomalies and to visualize the result.

B. Use Amazon Redshift Spectrum to automatically detect the anomalies. Use Amazon QuickSight to visualize the result.

C. Use Amazon SageMaker Data Wrangler to automatically detect the anomalies and to visualize the result.

D. Use AWS Batch to automatically detect the anomalies. Use Amazon QuickSight to visualize the result.

Answer:

C. Use Amazon SageMaker Data Wrangler to automatically detect the anomalies and to visualize the result.

---

### Detailed Explanation

#### Requirements Summary

1. Automated Anomaly Detection: Identify outliers or anomalies in the aggregated dataset.

2. Visualization: Generate insights to understand anomalies.

3. Integration with Aggregated Data: Work with data already unified from S3 and MySQL.

---

### Analysis of the Correct Answer (Option C)

##### What It Does

- Amazon SageMaker Data Wrangler provides a low-code interface for data preparation, including built-in anomaly detection and visualization.

- Key Features:

- Outlier Detection: Uses statistical methods (e.g., Interquartile Range, Z-score) to flag anomalies.

- Visual Analysis: Generates histograms, scatter plots, and heatmaps to highlight anomalies.

- Integration: Directly processes data from S3 or Lake Formation.

##### Why It Meets the Requirements

1. Automated Anomaly Detection:

- Prebuilt transforms like Find Outliers automatically identify anomalies in features.

- Supports custom thresholds and rules for fraud-specific patterns.

2. Visualization:

- In-app visualizations (e.g., box plots, distribution charts) show detected anomalies.

- Exports cleaned data for downstream ML training.

3. End-to-End Workflow:

- Integrates with SageMaker Pipelines for automated retraining and deployment.

---

### Key Differentiators for Option C

1. Purpose-Built for ML Data Prep:

- Combines anomaly detection and visualization in a single tool, tailored for ML workflows.

2. Low-Code Automation:

- No need to write custom scripts or manage infrastructure (unlike AWS Batch).

3. SageMaker Ecosystem Integration:

- Seamlessly connects to SageMaker training jobs and model registry.

---

### Analysis of Incorrect Options

##### Option A: Amazon Athena

- Problem:

- Ad-Hoc Query Tool: Athena requires manual SQL queries to detect anomalies, lacking automation.

- No Native Visualization: Athena outputs tabular results; visualization would require separate tools.

##### Option B: Amazon Redshift Spectrum + QuickSight

- Problem:

- Complex Setup: Redshift Spectrum requires defining external tables and writing SQL/ML queries for anomaly detection.

- No Native Anomaly Detection: QuickSight visualizes results but doesn’t automate detection.

##### Option D: AWS Batch + QuickSight

- Problem:

- Manual Coding: Requires writing custom anomaly detection logic (e.g., Python scripts) and managing Batch jobs.

- Disconnected Workflow: Anomaly detection and visualization are separate steps, increasing operational overhead.

---

### Conclusion

Option C is the only solution that automates anomaly detection and visualization within a unified, ML-focused tool. SageMaker Data Wrangler’s built-in transforms and visualizations eliminate manual coding, enabling the engineer to quickly address data quality issues and improve model performance. Other options lack integration, automation, or require fragmented workflows.

An ML engineer is developing a fraud detection model on AWS. The training dataset includes transaction logs, customer profiles, and tables from an on-premises MySQL database. The transaction logs and customer profiles are stored in Amazon S3.

The dataset has a class imbalance that affects the learning of the model's algorithm. Additionally, many of the features have interdependencies. The algorithm is not capturing all the desired underlying patterns in the data.

The training dataset includes categorical data and numerical data. The ML engineer must prepare the training dataset to maximize the accuracy of the model.

Which action will meet this requirement with the LEAST operational overhead?

A. Use AWS Glue to transform the categorical data into numerical data.

B. Use AWS Glue to transform the numerical data into categorical data.

C. Use Amazon SageMaker Data Wrangler to transform the categorical data into numerical data.

D. Use Amazon SageMaker Data Wrangler to transform the numerical data into categorical data.

Answer:

C. Use Amazon SageMaker Data Wrangler to transform the categorical data into numerical data.

---

### Detailed Explanation

#### Requirements Summary

1. Prepare Training Data: Convert categorical data to numerical format for ML algorithms.

2. Maximize Model Accuracy: Address class imbalance and feature interdependencies.

3. Minimize Operational Overhead: Avoid complex scripting or infrastructure management.

---

### Analysis of the Correct Answer (Option C)

##### What It Does

- Amazon SageMaker Data Wrangler provides a low-code/no-code interface to automatically encode categorical data (e.g., one-hot encoding, ordinal encoding) into numerical features.

- Key Features:

- Built-in Transforms: Preconfigured recipes for categorical encoding (e.g., one-hot, frequency, target encoding).

- Visual Workflow: Drag-and-drop transformations with real-time visual feedback.

- Integration: Directly processes data from S3 or Lake Formation and exports to SageMaker training jobs.

##### Why It Meets the Requirements

1. Categorical-to-Numerical Conversion:

- ML algorithms (e.g., XGBoost, neural networks) require numerical input to capture patterns effectively.

- Techniques like one-hot encoding resolve interdependencies between categorical features.

2. Operational Efficiency:

- No need to write custom ETL code (unlike AWS Glue). Prebuilt transforms reduce setup time.

3. Accuracy Improvements:

- Proper encoding helps models leverage feature relationships, mitigating class imbalance effects.

---

### Key Differentiators for Option C

1. ML-Focused Tool:

- Data Wrangler is purpose-built for ML data prep, with optimizations for feature engineering.

2. Low Overhead:

- Managed service with no infrastructure to provision. Integrates seamlessly with SageMaker.

3. Automation:

- Reusable workflows for consistent preprocessing across experiments.

---

### Analysis of Incorrect Options

##### Option A: AWS Glue for Categorical-to-Numerical

- Problem:

- Manual Scripting: Requires writing PySpark/Scala code to implement encoders, increasing development time.

- No ML-Specific Optimizations: Glue is a general-purpose ETL tool, lacking built-in ML feature engineering.

##### Option B/D: Converting Numerical to Categorical

- Problem:

- Counterproductive: Most algorithms perform better with numerical data. Discretizing numerical features (e.g., binning) risks losing critical patterns.

##### Option D: AWS Glue for Numerical-to-Categorical

- Same Issues as B: Converts data in the wrong direction, harming model accuracy.

---

### Conclusion

Option C is the only solution that efficiently converts categorical data to numerical while minimizing operational overhead. SageMaker Data Wrangler’s prebuilt transforms and visual interface enable rapid, accurate feature engineering, directly addressing the model’s inability to capture underlying patterns. AWS Glue (A) requires manual coding, and converting numerical to categorical (B/D) degrades model performance.

An ML engineer is developing a fraud detection model on AWS. The training dataset includes transaction logs, customer profiles, and tables from an on-premises MySQL database. The transaction logs and customer profiles are stored in Amazon S3.

The dataset has a class imbalance that affects the learning of the model's algorithm. Additionally, many of the features have interdependencies. The algorithm is not capturing all the desired underlying patterns in the data.

Before the ML engineer trains the model, the ML engineer must resolve the issue of the imbalanced data.

Which solution will meet this requirement with the LEAST operational effort?

A. Use Amazon Athena to identify patterns that contribute to the imbalance. Adjust the dataset accordingly.

B. Use Amazon SageMaker Studio Classic built-in algorithms to process the imbalanced dataset.

C. Use AWS Glue DataBrew built-in features to oversample the minority class.

D. Use the Amazon SageMaker Data Wrangler balance data operation to oversample the minority class.

Answer:

D. Use the Amazon SageMaker Data Wrangler balance data operation to oversample the minority class.

---

### Detailed Explanation

#### Requirements Summary

1. Resolve Class Imbalance: Address the skewed distribution of fraud (minority) vs. non-fraud (majority) classes.

2. Minimal Operational Effort: Avoid manual scripting or complex configurations.

---

### Analysis of the Correct Answer (Option D)

##### What It Does

- Amazon SageMaker Data Wrangler includes a Balance Data operation that automatically oversamples the minority class (e.g., fraud cases) using techniques like Synthetic Minority Oversampling Technique (SMOTE) or random duplication.

##### Why It Meets the Requirements

1. Automated Class Balancing:

- A single click/configuration applies oversampling, ensuring the model trains on a balanced dataset.

- No need to manually code resampling logic or manage data pipelines.

2. Integration with SageMaker:

- Directly processes data from S3 or Lake Formation and exports to SageMaker training jobs.

3. Operational Simplicity:

- Managed UI-driven workflow eliminates coding, infrastructure setup, or dependency management.

---

### Key Differentiators for Option D

1. Built-In, ML-Optimized Solution:

- Data Wrangler is purpose-built for ML data preparation, with balancing tailored to improve model performance.

2. Low-Code Efficiency:

- Preconfigured balancing methods reduce the risk of errors compared to manual scripting (e.g., AWS Glue).

---

### Analysis of Incorrect Options

##### Option A: Amazon Athena

- Problem:

- Manual Analysis Only: Athena identifies imbalance via SQL queries but requires custom code to resample data (e.g., rewriting datasets in S3).

- No Automation: Operational effort increases due to manual dataset adjustments.

##### Option B: SageMaker Built-In Algorithms

- Problem:

- No Preprocessing: Built-in algorithms (e.g., XGBoost) do not automatically balance data. Some support class weights, but this only adjusts loss functions—it does not fix imbalanced input data.

- Partial Solution: Class weighting requires manual hyperparameter tuning and is less effective than oversampling.

##### Option C: AWS Glue DataBrew

- Problem:

- No Native Balancing: DataBrew focuses on cleaning (e.g., missing values, outliers) but lacks built-in oversampling/undersampling features.

- Custom Effort: Resampling would require writing custom transforms, increasing operational overhead.

---

### Conclusion

Option D is the only solution that automates class balancing with minimal effort. SageMaker Data Wrangler’s "Balance Data" operation directly addresses the root cause of class imbalance, enabling the model to learn fraud patterns effectively. Other options either lack automation (A, C) or fail to resolve the data imbalance (B).

An ML engineer is developing a fraud detection model on AWS. The training dataset includes transaction logs, customer profiles, and tables from an on-premises MySQL database. The transaction logs and customer profiles are stored in Amazon S3.

The dataset has a class imbalance that affects the learning of the model's algorithm. Additionally, many of the features have interdependencies. The algorithm is not capturing all the desired underlying patterns in the data.

The ML engineer needs to use an Amazon SageMaker built-in algorithm to train the model.

Which algorithm should the ML engineer use to meet this requirement?

A. LightGBM

B. Linear learner

C. К-means clustering

D. Neural Topic Model (NTM)

Answer:

A. LightGBM

---

### Detailed Explanation

#### Requirements Summary

1. Class Imbalance: The fraud detection dataset has a skewed distribution (e.g., few fraud cases vs. many non-fraud).

2. Feature Interdependencies: Complex relationships between features that the model must capture.

3. Use SageMaker Built-in Algorithm: Leverage AWS-native solutions to minimize custom code.

---

### Analysis of the Correct Answer (Option A)

##### What It Does

- LightGBM (Gradient Boosting Machine) is a SageMaker built-in algorithm optimized for classification tasks.

- Key Features:

- Handles Class Imbalance: Automatically adjusts weights for minority classes (e.g., fraud) via parameters like scale_pos_weight.

- Captures Feature Interactions: Uses gradient-boosted trees to model non-linear relationships and feature interdependencies.

- Efficiency: Faster training and lower memory usage compared to traditional GBM frameworks.

##### Why It Meets the Requirements

1. Class Imbalance Mitigation:

- LightGBM penalizes misclassifications of the minority class more heavily, improving fraud detection recall.

2. Complex Pattern Recognition:

- Decision trees inherently model feature interactions, capturing interdependencies (e.g., transaction amount vs. customer location).

3. SageMaker Integration:

- Preconfigured hyperparameters and one-click deployment reduce operational effort.

---

### Key Differentiators for Option A

1. Optimized for Imbalanced Data:

- LightGBM supports is_unbalance and scale_pos_weight parameters to prioritize minority class accuracy.

2. Tree-Based Architecture:

- Splits data hierarchically, naturally identifying interactions between features (e.g., "high-value transactions from new accounts").

3. Built-In Algorithm:

- No need to install custom libraries or manage dependencies.

---

### Analysis of Incorrect Options

##### Option B: Linear Learner

- Problem:

- Linear Models Struggle with Complexity: Assumes linear relationships between features, failing to capture interdependencies.

- Manual Class Weighting: Requires explicit hyperparameter tuning to address imbalance, increasing effort.

##### Option C: K-Means Clustering

- Problem:

- Unsupervised Use Case: K-means groups unlabeled data, but fraud detection requires supervised learning (labeled fraud/non-fraud).

- Ignores Class Labels: Cannot directly optimize for fraud detection accuracy.

##### Option D: Neural Topic Model (NTM)

- Problem:

- Text/Topic Modeling Focus: NTM analyzes document topics (e.g., LDA alternative), irrelevant for tabular transaction data.

---

### Conclusion

LightGBM (A) is the only SageMaker built-in algorithm that directly addresses class imbalance and feature interdependencies for fraud detection. Its tree-based architecture and built-in imbalance handling outperform linear models (B) and are irrelevant to unsupervised (C) or NLP-focused (D) algorithms. By using LightGBM, the engineer maximizes model accuracy with minimal operational overhead.

A company has deployed an XGBoost prediction model in production to predict if a customer is likely to cancel a subscription. The company uses Amazon SageMaker Model Monitor to detect deviations in the F1 score.

During a baseline analysis of model quality, the company recorded a threshold for the F1 score. After several months of no change, the model's F1 score decreases significantly.

What could be the reason for the reduced F1 score?

A. Concept drift occurred in the underlying customer data that was used for predictions.

B. The model was not sufficiently complex to capture all the patterns in the original baseline data.

C. The original baseline data had a data quality issue of missing values.

D. Incorrect ground truth labels were provided to Model Monitor during the calculation of the baseline.

Answer:

A. Concept drift occurred in the underlying customer data that was used for predictions.

---

### Detailed Explanation

#### Key Requirements & Context

- Problem: The F1 score of an XGBoost model (monitored via SageMaker Model Monitor) dropped significantly after months of stability.

- F1 Score: Measures the balance between precision and recall, critical for imbalanced tasks like subscription churn prediction.

- Baseline Analysis: Model Monitor compares live performance to an initial baseline. A sustained drop suggests a systemic issue post-deployment.

---

### Analysis of the Correct Answer (Option A)

##### What is Concept Drift?

- Definition: Changes in the statistical properties of input data or relationships between features and the target variable over time.

- Example: Customer behavior evolves (e.g., new cancellation reasons emerge, economic shifts alter spending patterns), making the model’s original assumptions outdated.

##### Why It Explains the F1 Score Drop

1. Delayed Impact:

- The model performed well initially because training data matched live data. Over months, gradual drift degraded predictions.

2. F1 Sensitivity:

- F1 depends on both precision and recall. Concept drift can disproportionately affect minority classes (e.g., cancellations), reducing recall or precision.

3. Model Monitor Detection:

- Model Monitor flags deviations from the baseline F1 threshold, aligning with the observed drop.

---

### Key Differentiators for Option A

- Temporal Pattern: The F1 score was stable initially but declined later, matching the gradual onset of concept drift.

- Real-World Dynamics: Subscription behaviors naturally evolve due to external factors (e.g., market trends, competitor actions).

---

### Analysis of Incorrect Options

##### Option B: Model Complexity

- Issue:

- If the model was insufficiently complex, poor performance (low F1) would appear immediately, not after months of stability.

- XGBoost is inherently capable of capturing complex patterns via boosted trees.

##### Option C: Missing Values in Baseline Data

- Issue:

- Baseline data quality issues (e.g., missing values) would skew the initial F1 threshold. However, Model Monitor would detect deviations from the start, not after months.

- The problem is a recent decline, not a flawed baseline.

##### Option D: Incorrect Ground Truth Labels in Baseline

- Issue:

- Wrong labels during baseline creation would invalidate the F1 threshold. However, this would cause mismatched monitoring from day one, not a delayed drop.

- Example: If baseline F1 was artificially high due to mislabeled data, live performance would immediately appear worse, not degrade later.

---

### Conclusion

Concept drift (A) is the most plausible explanation. The model’s performance decayed because the relationships between input features (e.g., customer behavior) and the target variable (subscription cancellation) shifted over time. Other options fail to explain the delayed decline in F1 or conflict with the problem’s timeline. To resolve this, the company should retrain the model with recent data and implement continuous monitoring for drift detection.

A company has a team of data scientists who use Amazon SageMaker notebook instances to test ML models. When the data scientists need new permissions, the company attaches the permissions to each individual role that was created during the creation of the SageMaker notebook instance.

The company needs to centralize management of the team's permissions.

Which solution will meet this requirement?

A. Create a single IAM role that has the necessary permissions. Attach the role to each notebook instance that the team uses.

B. Create a single IAM group. Add the data scientists to the group. Associate the group with each notebook instance that the team uses.

C. Create a single IAM user. Attach the AdministratorAccess AWS managed IAM policy to the user. Configure each notebook instance to use the IAM user.

D. Create a single IAM group. Add the data scientists to the group. Create an IAM role. Attach the AdministratorAccess AWS managed IAM policy to the role. Associate the role with the group. Associate the group with each notebook instance that the team uses.

Answer:

A. Create a single IAM role that has the necessary permissions. Attach the role to each notebook instance that the team uses.

---

### Detailed Explanation

#### Requirements Summary

1. Centralize Permissions: Manage permissions for all data scientists’ SageMaker notebook instances from a single location.

2. Minimize Redundancy: Eliminate per-notebook role updates.

---

### Analysis of the Correct Answer (Option A)

##### What It Does

- Single IAM Role: A unified role is created with all required permissions (e.g., S3 access, SageMaker API permissions).

- Role Attachment: This role is assigned to every SageMaker notebook instance used by the data science team.

##### Why It Meets the Requirements

1. Centralized Management:

- Permissions are defined once in the role’s policy. Changes to the role (e.g., adding S3 write access) automatically apply to all notebook instances using it.

2. Scalability:

- New notebook instances inherit the role without requiring individual permission updates.

3. Security:

- Follows AWS best practices by using roles (not users/groups) for resource permissions.

---

### Key Differentiators for Option A

1. Role-Based Access Control (RBAC):

- IAM roles are designed to grant permissions to AWS resources (e.g., SageMaker notebooks), unlike groups/users, which manage human/application access.

2. Operational Simplicity:

- No need to manage users, groups, or credentials—only one role is maintained.

---

### Analysis of Incorrect Options

##### Option B: IAM Group Associated with Notebook Instances

- Problem:

- Groups Manage Users, Not Resources: IAM groups organize users (e.g., data scientists) but cannot be directly attached to SageMaker notebook instances.

- No Permission Inheritance: Associating a group with a notebook instance is not a valid AWS configuration.

##### Option C: Shared IAM User with AdministratorAccess

- Problem:

- Security Risk: Sharing a single user with broad permissions violates the principle of least privilege and complicates auditing.

- Not Resource-Focused: Users are for human/application identities, not resources like notebooks.

##### Option D: Group + Role with AdministratorAccess

- Problem:

- Overly Broad Permissions: The AdministratorAccess policy grants excessive privileges, increasing security risks.

- Mismatched Components: Groups manage user permissions, not resource permissions. Associating a role with a group does not automatically grant the role to notebook instances.

---

### Conclusion

Option A is the only solution that centralizes permissions for SageMaker notebook instances using AWS’s native role-based model. By assigning a single IAM role to all notebooks, the company ensures consistent, scalable, and secure permission management. Other options misuse IAM components (B, D) or introduce security risks (C).

An ML engineer needs to use an ML model to predict the price of apartments in a specific location.

Which metric should the ML engineer use to evaluate the model's performance?

A. Accuracy

B. Area Under the ROC Curve (AUC)

C. F1 score

D. Mean absolute error (MAE)

Answer:

D. Mean absolute error (MAE)

---

### Detailed Explanation

#### Requirements Summary

1. Regression Task: Predicting apartment prices (continuous numerical output).

2. Performance Metric: Measure how closely predicted prices align with actual prices.

---

### Analysis of the Correct Answer (Option D)

##### What It Does

- Mean Absolute Error (MAE) calculates the average absolute difference between predicted and actual values.

- Formula:

MAE=n1i=1∑n∣yi−y^i∣

where y i y i = actual price, y ^ i y ^ i = predicted price, and n n = number of samples.

##### Why It Meets the Requirements

1. Regression-Specific:

- MAE is designed for regression tasks (continuous outputs like prices).

2. Interpretability:

- Represents the average error in price units (e.g., "average prediction is off by \$5,000").

3. Robustness:

- Less sensitive to outliers compared to Mean Squared Error (MSE).

---

### Key Differentiators for Option D

- Appropriate for Regression: Unlike classification metrics (A, B, C), MAE directly quantifies prediction errors for numerical targets.

- Alignment with Business Goals: Real estate pricing requires understanding average error magnitude, which MAE provides.

---

### Analysis of Incorrect Options

##### Option A: Accuracy

- Problem:

- Classification Metric: Accuracy measures the percentage of correct class predictions (e.g., spam vs. not spam). Irrelevant for numerical predictions.

##### Option B: AUC-ROC

- Problem:

- Binary Classification Metric: AUC evaluates how well a model distinguishes between two classes (e.g., fraud vs. non-fraud). Does not apply to regression.

##### Option C: F1 Score

- Problem:

- Classification Metric: Combines precision and recall for imbalanced classification tasks. Not applicable to numerical outputs.

---

### Conclusion

MAE (D) is the only metric tailored for regression tasks like apartment price prediction. It quantifies prediction errors in a meaningful, interpretable way, unlike classification metrics (A, B, C). For regression, alternatives like MSE or R² could also be valid, but MAE is the best choice among the given options.

An ML engineer has trained a neural network by using stochastic gradient descent (SGD). The neural network performs poorly on the test set. The values for training loss and validation loss remain high and show an oscillating pattern. The values decrease for a few epochs and then increase for a few epochs before repeating the same cycle.

What should the ML engineer do to improve the training process?

A. Introduce early stopping.

B. Increase the size of the test set.

C. Increase the learning rate.

D. Decrease the learning rate.

Answer:

D. Decrease the learning rate.

---

### Detailed Explanation

#### Problem Analysis

- Symptoms: High and oscillating training/validation losses indicate instability in the training process.

- Root Cause: A learning rate that is too high causes the optimizer to overshoot the optimal solution, leading to erratic updates and unstable convergence.

---

### Why Decreasing the Learning Rate Helps

1. Smoother Convergence:

- A smaller learning rate reduces the step size during gradient descent, allowing the optimizer to approach the loss minimum more carefully.

2. Reduced Oscillations:

- Prevents large parameter updates that cause the loss to "bounce" around instead of steadily decreasing.

3. Mitigates Underfitting:

- While high loss suggests underfitting, oscillations are a stronger indicator of unstable training dynamics (fixed by tuning the learning rate).

---

### Key Differentiators for Option D

- Directly Addresses Oscillations: The cyclical loss pattern is a hallmark of an excessively high learning rate. Decreasing it stabilizes updates.

- Improves Model Fit: A stable learning process allows the model to better minimize training and validation losses.

---

### Analysis of Incorrect Options

##### Option A: Early Stopping

- Issue:

- Early stopping halts training when validation loss increases to prevent overfitting. However, both training and validation losses are high, indicating underfitting or unstable training—not overfitting.

##### Option B: Increase Test Set Size

- Issue:

- A larger test set improves evaluation reliability but does nothing to fix the training process or reduce loss values.

##### Option C: Increase Learning Rate

- Issue:

- A higher learning rate would amplify oscillations, worsening instability and preventing convergence.

---

### Conclusion

Decreasing the learning rate (D) is the most effective action to stabilize training and reduce oscillating losses. This adjustment allows the optimizer to navigate the loss landscape more effectively, improving model performance on both training and test data.

An ML engineer needs to process thousands of existing CSV objects and new CSV objects that are uploaded. The CSV objects are stored in a central Amazon S3 bucket and have the same number of columns. One of the columns is a transaction date. The ML engineer must query the data based on the transaction date.

Which solution will meet these requirements with the LEAST operational overhead?

A. Use an Amazon Athena CREATE TABLE AS SELECT (CTAS) statement to create a table based on the transaction date from data in the central S3 bucket. Query the objects from the table.

B. Create a new S3 bucket for processed data. Set up S3 replication from the central S3 bucket to the new S3 bucket. Use S3 Object Lambda to query the objects based on transaction date.

C. Create a new S3 bucket for processed data. Use AWS Glue for Apache Spark to create a job to query the CSV objects based on transaction date. Configure the job to store the results in the new S3 bucket. Query the objects from the new S3 bucket.

D. Create a new S3 bucket for processed data. Use Amazon Data Firehose to transfer the data from the central S3 bucket to the new S3 bucket. Configure Firehose to run an AWS Lambda function to query the data based on transaction date.

Answer:

A. Use an Amazon Athena CREATE TABLE AS SELECT (CTAS) statement to create a table based on the transaction date from data in the central S3 bucket. Query the objects from the table.

---

### Detailed Explanation

#### Requirements Summary

1. Query CSV Data by Transaction Date: Filter existing and new CSV files stored in S3 using the transaction date column.

2. Minimal Operational Overhead: Avoid complex ETL pipelines, recurring jobs, or manual intervention.

---

### Analysis of the Correct Answer (Option A)

##### What It Does

- Athena CTAS creates a new table in AWS Glue Data Catalog, partitioning the data by transaction date (if specified).

- Query Efficiency: Partitions allow Athena to scan only relevant data during queries, improving performance and cost.

- Serverless & Automated: Athena requires no infrastructure management. New CSV files added to the S3 bucket are automatically included in subsequent queries.

##### Why It Meets the Requirements

1. Centralized Data Access:

- The CTAS table references all CSV files in the central S3 bucket. New files are automatically detected and queried without manual updates.

2. No Ongoing Maintenance:

- Athena’s serverless architecture eliminates the need for job scheduling, cluster management, or code maintenance.

3. Partitioning (Optional):

- If the CTAS statement partitions data by transaction_date, queries filter data at the partition level, reducing scan volume and cost.

---

### Key Differentiators for Option A

- Zero ETL Pipeline: Athena directly queries raw CSV files in S3 without requiring data movement or preprocessing.

- Immediate Query Capability: Once the table is created, ad-hoc or programmatic queries can filter by transaction date using SQL.

---

### Analysis of Incorrect Options

##### Option B: S3 Replication + S3 Object Lambda

- Problem:

- Inefficient for Bulk Queries: Object Lambda transforms data per request, which is impractical for querying thousands of CSV objects.

- Operational Complexity: Replicating data and configuring Lambda functions adds unnecessary steps.

##### Option C: AWS Glue Job + New S3 Bucket

- Problem:

- ETL Overhead: Requires writing and maintaining a Spark job, scheduling it for new data, and managing output buckets.

- Delayed Processing: New data isn’t queryable until the Glue job runs, introducing latency.

##### Option D: Amazon Kinesis Data Firehose + Lambda

- Problem:

- Designed for Streaming: Firehose is unsuitable for batch processing existing S3 data.

- Redundant Data Movement: Copying data to a new bucket adds cost and complexity.

---

### Conclusion

Option A provides the least operational overhead by leveraging Athena’s serverless SQL engine to query CSV data directly in S3. The CTAS statement creates a reusable table that automatically includes new files, enabling efficient filtering by transaction date without ETL pipelines or infrastructure management. Other options introduce unnecessary complexity, latency, or costs.

A company has a large, unstructured dataset. The dataset includes many duplicate records across several key attributes.

Which solution on AWS will detect duplicates in the dataset with the LEAST code development?

A. Use Amazon Mechanical Turk jobs to detect duplicates.

B. Use Amazon QuickSight ML Insights to build a custom deduplication model.

C. Use Amazon SageMaker Data Wrangler to pre-process and detect duplicates.

D. Use the AWS Glue FindMatches transform to detect duplicates.

Answer:

C. Use Amazon SageMaker Data Wrangler to pre-process and detect duplicates.

---

### Detailed Explanation

#### Requirements Summary

1. Detect Duplicates: Identify records with duplicate values across key attributes in a large unstructured dataset.