Intelligent Agents and their Environments



Description and Tags

To understand what an agent is and how it can be modelled, the environment it operates in and the assumptions that need to be made, how to make the right design decisions and choose the right tools, and how to manage complexity.


Define an ‘agent’

An entity that perceives its environment through its sensors and achieves its goals by acting on that environment through its actuators.

What are actuators?

The components through which an agent acts on its environment, e.g. motors, wheels, grippers or a display.

How do we categorise agents?

Environment, e.g. a conveyor belt of letters

Goals, e.g. route each letter into the correct bin

Percepts, e.g. an array of pixel intensities

Actions, e.g. route a letter into a bin.

What are the different types of Intelligent Agents?

Simple reflex agents

Model-based reflex agents

Goal-based agents

Utility-based agents

Learning agents

What is a simple reflex agent?

The action of the agent depends only on immediate percepts.

Implemented by condition-action rules

Stateless: it has no memory of previous percepts or actions, so it cannot anticipate events or take preventive measures; it can only react to the current percept.
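As a minimal sketch, assume the classic two-square vacuum world: squares `A` and `B`, and percepts of the form `(location, status)`. The function name and percept format are illustrative assumptions. Each `if` is a condition-action rule, and because nothing is stored between calls, the same percept always produces the same action.

```python
# Simple reflex agent for a hypothetical two-square vacuum world.
# The percept is a (location, status) pair; the agent keeps no memory.

def simple_reflex_vacuum_agent(percept):
    """Choose an action using only the current percept (condition-action rules)."""
    location, status = percept
    if status == "Dirty":      # rule: if the current square is dirty, clean it
        return "Suck"
    if location == "A":        # rule: if in A and it is clean, move right
        return "Right"
    return "Left"              # rule: if in B and it is clean, move left


for percept in [("A", "Dirty"), ("A", "Clean"), ("B", "Clean")]:
    print(percept, "->", simple_reflex_vacuum_agent(percept))
```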

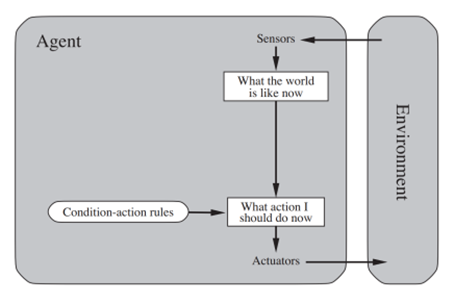

What does the simple reflex agent diagram look like?

What is a model-based reflex agent?

Action may depend on history or unperceived aspects of the world.

Internal world model needs to be maintained.
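A minimal sketch, assuming a hypothetical two-square vacuum world (squares `A` and `B`, percepts of the form `(location, status)`) in which sucking always cleans the current square. The class name and the `NoOp` action are illustrative assumptions. Because the agent remembers what it has seen, it can stop once its internal model says both squares are clean, which a stateless reflex agent cannot do.

```python
class ModelBasedVacuumAgent:
    """Reflex agent that keeps an internal model of a hypothetical two-square world."""

    def __init__(self):
        # Internal world model: last known status of each square (None = unknown).
        self.model = {"A": None, "B": None}

    def __call__(self, percept):
        location, status = percept
        self.model[location] = status          # update the model from the percept
        if status == "Dirty":
            self.model[location] = "Clean"     # assumed effect of sucking
            return "Suck"
        if all(s == "Clean" for s in self.model.values()):
            return "NoOp"                      # model says nothing is left to clean
        return "Right" if location == "A" else "Left"


agent = ModelBasedVacuumAgent()
actions = [agent(p) for p in [("A", "Dirty"), ("A", "Clean"), ("B", "Clean")]]
print(actions)  # ['Suck', 'Right', 'NoOp']
```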

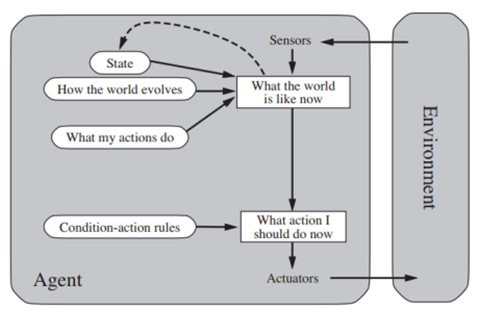

What does the Model-Based reflex agent diagram look like?

What is a Goal-Based agent?

Action is chosen to achieve a single goal, using information from its environment.

Stateful
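A goal-based agent uses a model of what its actions do, plus a goal test, to choose actions that lead to the goal. The sketch below assumes a hypothetical map of rooms in which the action "go to X" is simply the name of an adjacent room; it plans a shortest route with breadth-first search and returns only the first step, replanning on each call.

```python
from collections import deque

# Hypothetical world: rooms connected by doors; an action names an adjacent room.
ROOMS = {
    "Hall": ["Kitchen", "Study"],
    "Kitchen": ["Hall", "Pantry"],
    "Study": ["Hall"],
    "Pantry": ["Kitchen"],
}


def result(state, action):
    """Transition model: moving to an adjacent room puts the agent in that room."""
    return action


def goal_based_action(state, goal_test):
    """Return the first action of a shortest plan that reaches a goal state."""
    frontier = deque([(state, [])])
    reached = {state}
    while frontier:
        s, plan = frontier.popleft()
        if goal_test(s):
            return plan[0] if plan else "NoOp"   # already at the goal
        for action in ROOMS[s]:
            s2 = result(s, action)
            if s2 not in reached:
                reached.add(s2)
                frontier.append((s2, plan + [action]))
    return None  # goal unreachable


state, path = "Study", ["Study"]
while state != "Pantry":
    state = result(state, goal_based_action(state, lambda s: s == "Pantry"))
    path.append(state)
print(path)  # ['Study', 'Hall', 'Kitchen', 'Pantry']
```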

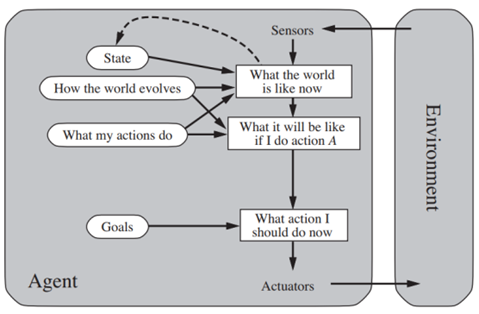

What does the Goal-Based agent diagram look like?

What is a Utility-Based agent?

Action depends on trading off conflicting goals.

Used to optimise utility over a range of goals, such as profit or energy efficiency.

Utility: a measure of how good a state is, expressed as a real number.

Combining utility with the probability of success gives the expected utility.
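Expected utility weights the utility of each possible outcome by its probability: EU(a) = Σ P(outcome | a) · U(outcome). The actions, probabilities and utilities below are hypothetical numbers chosen only to show the calculation; a utility-based agent then picks the action with the highest expected utility.

```python
# Hypothetical actions, each with a list of (probability, utility) outcome pairs.
OUTCOMES = {
    "fast_route": [(0.7, 10.0), (0.3, -5.0)],   # quick, but may hit traffic
    "safe_route": [(1.0, 6.0)],                 # slower, but certain
}


def expected_utility(outcomes):
    """Sum of probability-weighted utilities over an action's possible outcomes."""
    return sum(p * u for p, u in outcomes)


def best_action(outcome_table):
    """Pick the action with the highest expected utility."""
    return max(outcome_table, key=lambda a: expected_utility(outcome_table[a]))


for action, outcomes in OUTCOMES.items():
    print(action, expected_utility(outcomes))
print("chosen:", best_action(OUTCOMES))
```

Here the fast route has the higher best-case utility, but its expected utility (about 5.5) is lower than the certain 6.0 of the safe route.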

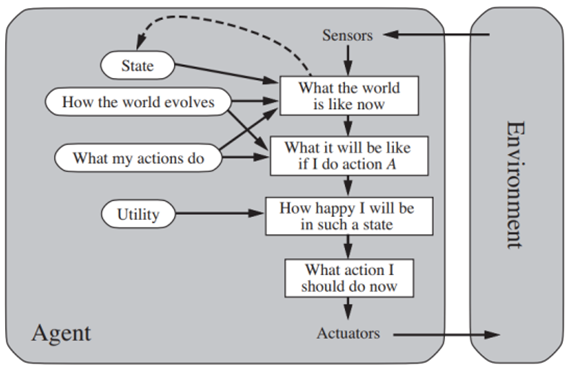

What does the Utility-Based agent diagram look like?

What is a learning agent?

Uses machine learning and data mining to improve its performance over time.

Learns from its experience and makes decisions based on that knowledge.
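Learning agents cover many techniques. As one simple, swapped-in illustration (not the only approach), the sketch below uses epsilon-greedy action-value learning: the agent keeps a running average of the reward each action has produced, occasionally explores at random, and increasingly favours the best action. The class, the reward table and `epsilon=0.1` are hypothetical.

```python
import random


class LearningAgent:
    """Epsilon-greedy learner that improves its action-value estimates from experience."""

    def __init__(self, actions, epsilon=0.1, seed=0):
        self.values = {a: 0.0 for a in actions}   # estimated reward per action
        self.counts = {a: 0 for a in actions}     # times each action was tried
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def greedy_action(self):
        return max(self.values, key=self.values.get)

    def act(self):
        # Explore with probability epsilon; otherwise exploit current knowledge.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.values))
        return self.greedy_action()

    def learn(self, action, reward):
        # Incremental mean: new_estimate = old + (reward - old) / n
        self.counts[action] += 1
        self.values[action] += (reward - self.values[action]) / self.counts[action]


# Hypothetical environment: each action always returns the same reward.
REWARDS = {"a": 1.0, "b": 2.0}
agent = LearningAgent(REWARDS)
for _ in range(500):
    action = agent.act()
    agent.learn(action, REWARDS[action])
print(agent.greedy_action(), agent.values)
```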

What do we mean if an environment is benign?

Fully observable, deterministic, episodic, static, discrete and single agent. E.g. the environment of a mail-sorting robot.

What do we mean if an environment is chaotic?

Partially observable, stochastic, sequential, dynamic, continuous and multi-agent. E.g. weather, smoke, ocean turbulence.
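The two extremes can be made concrete by recording each environment property as a flag, where `True` means the "easy" value. The class and field names are hypothetical; most real environments mix easy and hard properties and are neither fully benign nor fully chaotic.

```python
from dataclasses import dataclass, astuple


@dataclass(frozen=True)
class EnvironmentProperties:
    # True means the "easy" value of each property (hypothetical field names).
    fully_observable: bool
    deterministic: bool
    episodic: bool
    static: bool
    discrete: bool
    single_agent: bool

    def is_benign(self):
        """Benign: every property takes its easy value."""
        return all(astuple(self))

    def is_chaotic(self):
        """Chaotic: every property takes its hard value."""
        return not any(astuple(self))


mail_sorting = EnvironmentProperties(True, True, True, True, True, True)
weather = EnvironmentProperties(False, False, False, False, False, False)
print(mail_sorting.is_benign(), weather.is_chaotic())  # True True
```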

What is a Problem-Solving agent?

A type of goal-based agent that considers future actions and the desirability of their outcomes.

Uses an atomic representation: states of the world are considered as wholes, with no internal structure visible to the problem-solving algorithms.

E.g. a computer playing chess.

How are goals formulated in a problem-solving agent?

Based on the current situation and the agent’s performance measure. Goal formulation is the first step in problem solving.

A goal is considered to be a set of world states: exactly those states in which the goal is satisfied (this relates to finite-state machines, FSMs).

How are problems formulated in a problem-solving agent?

The process of deciding which actions and states to consider, given a goal.
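A minimal sketch of a problem formulation for the Romania route-finding example, assuming the usual components: an initial state, the actions available in a state, a transition model, a goal test over a set of goal states, and a step cost. Only a fragment of the map is included, using the road distances commonly given in the AIMA textbook (they agree with the 140 km and 297 km figures in the redundant-paths card); the class and method names are illustrative.

```python
# Fragment of the Romania road map (km); every road can be driven in both directions.
ROADS = {
    ("Arad", "Zerind"): 75, ("Arad", "Sibiu"): 140, ("Arad", "Timisoara"): 118,
    ("Zerind", "Oradea"): 71, ("Oradea", "Sibiu"): 151,
    ("Sibiu", "Fagaras"): 99, ("Sibiu", "Rimnicu Vilcea"): 80,
    ("Fagaras", "Bucharest"): 211, ("Rimnicu Vilcea", "Pitesti"): 97,
    ("Pitesti", "Bucharest"): 101,
}


class RouteProblem:
    """Hypothetical problem formulation for route finding on a road map."""

    def __init__(self, initial, goal_states, roads):
        self.initial = initial
        self.goal_states = set(goal_states)   # a goal is a set of world states
        self.graph = {}
        for (a, b), km in roads.items():      # store each road in both directions
            self.graph.setdefault(a, {})[b] = km
            self.graph.setdefault(b, {})[a] = km

    def actions(self, state):
        """Actions available in a city: drive to any neighbouring city."""
        return sorted(self.graph[state])

    def result(self, state, action):
        """Transition model: driving to a city puts the agent in that city."""
        return action

    def is_goal(self, state):
        return state in self.goal_states

    def step_cost(self, state, action):
        """Cost of one action: the length of the road in km."""
        return self.graph[state][action]


problem = RouteProblem("Bucharest", {"Arad"}, ROADS)
print(problem.actions(problem.initial))  # ['Fagaras', 'Pitesti']
```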

How does an agent determine the best possible actions to solve a problem?

It requires additional information about the environment, e.g. familiarity with the geography of Romania to achieve the goal of driving from Bucharest to Arad.

What happens if the environment is unknown to the agent but it must determine the best possible actions?

Then it has no choice but to try one of the actions at random. In general, an agent with several immediate options of unknown value can decide what to do by first examining future actions that eventually lead to states of known value.

What do we mean by ‘search’?

The process of looking for a sequence of actions that reaches the goal.

What does a search algorithm do?

Takes a problem as input and returns a solution in the form of an action sequence.
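A search algorithm in its simplest form, assuming breadth-first search as the strategy and an adjacency list for a fragment of the Romania map (both are illustrative choices). It takes the problem's initial state, goal test and actions, and returns the solution as a list of actions, or `None` if no solution exists.

```python
from collections import deque

# Hypothetical problem: a fragment of the Romania road map as an adjacency list.
NEIGHBOURS = {
    "Arad": ["Sibiu", "Timisoara", "Zerind"],
    "Zerind": ["Arad", "Oradea"],
    "Oradea": ["Sibiu", "Zerind"],
    "Timisoara": ["Arad"],
    "Sibiu": ["Arad", "Fagaras", "Oradea", "Rimnicu Vilcea"],
    "Fagaras": ["Bucharest", "Sibiu"],
    "Rimnicu Vilcea": ["Pitesti", "Sibiu"],
    "Pitesti": ["Bucharest", "Rimnicu Vilcea"],
    "Bucharest": ["Fagaras", "Pitesti"],
}


def breadth_first_search(initial, is_goal, neighbours):
    """Take a problem (initial state, goal test, actions) and return an action sequence."""
    frontier = deque([initial])
    parent = {initial: None}   # state -> (previous state, action); None for the start
    while frontier:
        state = frontier.popleft()
        if is_goal(state):
            actions = []
            while parent[state] is not None:   # walk back to the initial state
                prev, action = parent[state]
                actions.append(action)
                state = prev
            return actions[::-1]
        for nxt in neighbours[state]:
            if nxt not in parent:
                parent[nxt] = (state, "go " + nxt)
                frontier.append(nxt)
    return None  # no solution exists


print(breadth_first_search("Bucharest", lambda s: s == "Arad", NEIGHBOURS))
```

Breadth-first search returns the plan with the fewest actions, which is not necessarily the shortest route in kilometres.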

What is the execution phase?

Once a solution is found, the actions recommended by the search algorithm can be carried out.

What happens once the solution has been executed?

The agent will formulate a new goal.

Why does an agent ignore its percepts during the execution phase?

It knows in advance what the actions will be, because it simply follows the action sequence returned by the search.
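The formulate, search, execute cycle can be sketched as an agent object, loosely following the simple problem-solving agent described in AIMA. The state space, the goal-formulation function and the action names are hypothetical. The percept is only consulted when the agent has no plan left; while a plan is being executed, it is ignored.

```python
from collections import deque


class SimpleProblemSolvingAgent:
    """Formulate a goal and problem, search once, then execute the plan open-loop."""

    def __init__(self, neighbours, formulate_goal):
        self.neighbours = neighbours          # hypothetical map: state -> {action: next_state}
        self.formulate_goal = formulate_goal  # hypothetical goal-formulation function
        self.plan = []

    def search(self, start, goal):
        """Breadth-first search returning a list of actions, or None on failure."""
        frontier, parent = deque([start]), {start: None}
        while frontier:
            s = frontier.popleft()
            if s == goal:
                plan = []
                while parent[s] is not None:
                    s, a = parent[s]
                    plan.append(a)
                return plan[::-1]
            for a, s2 in self.neighbours[s].items():
                if s2 not in parent:
                    parent[s2] = (s, a)
                    frontier.append(s2)
        return None

    def __call__(self, percept):
        if not self.plan:                       # percept is used only when planning
            goal = self.formulate_goal(percept)
            self.plan = self.search(percept, goal) or []
            if not self.plan:
                return "NoOp"                   # already at the goal, or no solution
        return self.plan.pop(0)                 # execution: the percept is ignored


WORLD = {0: {"right": 1}, 1: {"left": 0, "right": 2}, 2: {"left": 1}}
agent = SimpleProblemSolvingAgent(WORLD, formulate_goal=lambda state: 2)
print(agent(0), agent("percept ignored"), agent(2))  # right right NoOp
```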

Define ‘percept’

The input that an intelligent agent is perceiving at any given moment.

What is an ‘open loop system’?

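A system in which the agent carries out its plan with its eyes closed, so to speak, ignoring its percepts. Such an agent must be quite certain of what is going on, because ignoring the percepts breaks the feedback loop between agent and environment.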

Define ‘abstraction’

The process of removing unnecessary detail from a representation.

When is abstraction useful?

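When carrying out each action in the abstract solution is easier than solving the original problem. A good abstraction removes as much unnecessary detail as possible while retaining validity (every abstract solution can be expanded into a solution in the more detailed world) and ensuring that the abstract actions are easy to carry out.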

What is a ‘toy problem’?

Intended to illustrate or exercise various problem-solving methods. It can be given a concise, exact description and hence is usable by different researchers to compare the performance of algorithms.

What is a ‘real-world problem’?

One whose solutions people actually care about. Such problems tend not to have a single agreed-upon description, but we can give the general flavour of their formulations.

What are the two main kinds of formulation?

Incremental

Complete-state

For problems such as 8-queens, the path cost is of no interest in either case, because only the final state counts.

What is ‘incremental formulation’?

Involves operators that augment the state description, starting with an empty state; for the 8-queens problem, this means that each action adds a queen to the state.

What is ‘complete-state formulation’?

Does not start with an empty state; instead it starts with all 8 queens on the board and moves them around.
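Both formulations for 8-queens, as a sketch. A state is a tuple of row indices, one per column. In the incremental version, an action adds a queen to the next empty column, and (as a common refinement) only rows not attacked by existing queens are offered; depth-first search then builds a full solution. In the complete-state version, all queens are already placed and an action moves one queen within its column, with the number of attacking pairs measuring how far a state is from a solution. Function names are illustrative.

```python
def attacks(r1, c1, r2, c2):
    """Queens in different columns attack if they share a row or a diagonal."""
    return r1 == r2 or abs(r1 - r2) == abs(c1 - c2)


# Incremental formulation: state = rows of the queens placed so far, left to right.
def incremental_actions(state, n=8):
    """Rows in the next column that no existing queen attacks."""
    col = len(state)
    return [row for row in range(n)
            if not any(attacks(r, c, row, col) for c, r in enumerate(state))]


def solve_incremental(state=(), n=8):
    """Depth-first search over the incremental formulation, starting from an empty board."""
    if len(state) == n:
        return state
    for row in incremental_actions(state, n):
        solution = solve_incremental(state + (row,), n)
        if solution is not None:
            return solution
    return None


# Complete-state formulation: all queens on the board, one per column.
def attacking_pairs(state):
    """Number of queen pairs attacking each other (0 means a solution)."""
    return sum(attacks(state[i], i, state[j], j)
               for i in range(len(state)) for j in range(i + 1, len(state)))


def complete_state_actions(state):
    """Move the queen in some column to a different row: (column, new_row) pairs."""
    return [(col, row) for col in range(len(state))
            for row in range(len(state)) if row != state[col]]


print(solve_incremental())
```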

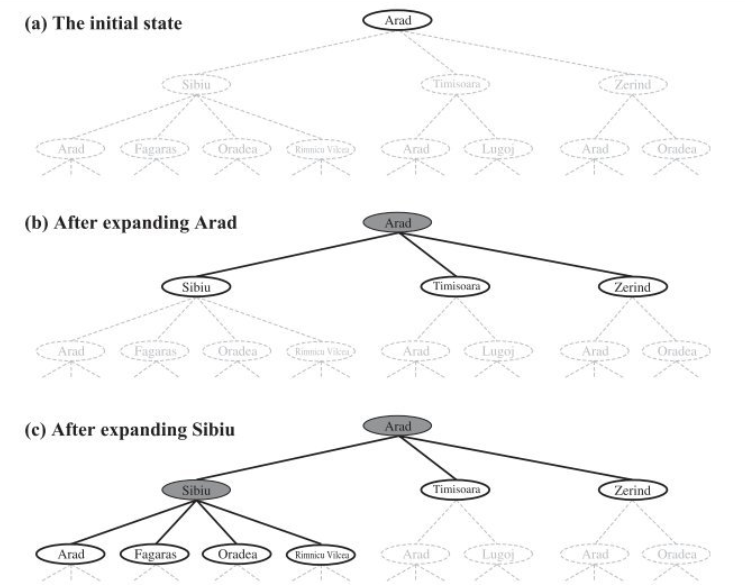

How do we search for solutions?

Searching algorithms work by considering various possible action sequences.

The possible action sequences starting at the initial state form a search tree, with the initial state at the root; the branches are actions and the nodes correspond to states in the state space of the problem.

We then consider taking various actions by expanding the current state: applying each legal action to the current state, thereby generating a new set of states.
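A sketch of node expansion, assuming each search-tree node stores its state, parent, the action that produced it and the path cost so far; the transition table is a hypothetical fragment of the Romania map with road distances. Expanding the root generates one child per legal action. Expanding the `Sibiu` child again yields `Arad`, showing how the same state can appear at several nodes of the tree.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    state: str
    parent: Optional["Node"] = None
    action: Optional[str] = None
    path_cost: float = 0.0


# Hypothetical transition model: state -> {action: (next_state, step_cost)}.
TRANSITIONS = {
    "Arad": {"go Sibiu": ("Sibiu", 140), "go Timisoara": ("Timisoara", 118),
             "go Zerind": ("Zerind", 75)},
    "Sibiu": {"go Arad": ("Arad", 140), "go Fagaras": ("Fagaras", 99)},
}


def expand(node):
    """Apply each legal action to the node's state, generating one child node per action."""
    children = []
    for action, (next_state, cost) in TRANSITIONS.get(node.state, {}).items():
        children.append(Node(next_state, node, action, node.path_cost + cost))
    return children


root = Node("Arad")
for child in expand(root):
    print(child.action, child.state, child.path_cost)
```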

What does a partial search tree look like?

What is a search strategy?

The rule that decides which state to expand next. Search algorithms all share the same basic structure and vary primarily according to their search strategy.
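To show that only the choice of node to expand changes, the skeleton below runs on a small hypothetical tree and switches between a FIFO frontier (breadth-first) and a LIFO frontier (depth-first). The function name and the strategy strings are illustrative.

```python
from collections import deque

# Hypothetical tree-shaped state space: state -> successor states.
SUCCESSORS = {"S": ["A", "B"], "A": ["C", "D"], "B": ["E"], "C": [], "D": [], "E": []}


def expansion_order(start, strategy):
    """Generic search skeleton; only the choice of which node to expand next varies."""
    frontier = deque([start])
    order = []
    while frontier:
        # The search strategy: FIFO (breadth-first) or LIFO (depth-first).
        state = frontier.popleft() if strategy == "breadth-first" else frontier.pop()
        order.append(state)
        frontier.extend(SUCCESSORS[state])
    return order


print(expansion_order("S", "breadth-first"))
print(expansion_order("S", "depth-first"))
```

With the LIFO frontier, the most recently added child (the right-most successor) is expanded first, hence the depth-first order S, B, E, A, D, C.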

What are redundant paths?

A redundant path exists whenever there is more than one way to get from one state to another. E.g. consider the paths Arad-Sibiu (140 km) and Arad-Zerind-Oradea-Sibiu (297 km): the second path is redundant because it is just a worse way to reach the same state.
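One common way to avoid redundant paths is to remember the cheapest known cost to each state and discard any new path that is no cheaper. The sketch below does this inside a uniform-cost search (a swapped-in choice of algorithm) on the four-city fragment from the example; the individual leg distances are taken from the AIMA Romania map and sum to the 297 km quoted above, and the function name is illustrative.

```python
import heapq

# Four-city fragment of the Romania map (km).
ROADS = {
    "Arad": {"Sibiu": 140, "Zerind": 75},
    "Zerind": {"Arad": 75, "Oradea": 71},
    "Oradea": {"Zerind": 71, "Sibiu": 151},
    "Sibiu": {"Arad": 140, "Oradea": 151},
}


def cheapest_costs(start, roads):
    """Uniform-cost search that discards redundant (no cheaper) paths to known states."""
    best = {start: 0}
    pruned = []                      # (state, cost) of redundant paths that were dropped
    frontier = [(0, start)]
    while frontier:
        cost, state = heapq.heappop(frontier)
        if cost > best[state]:
            continue                 # stale entry: a cheaper path was found later
        for nxt, km in roads[state].items():
            new_cost = cost + km
            if nxt in best and new_cost >= best[nxt]:
                pruned.append((nxt, new_cost))   # a path at least this good is known
                continue
            best[nxt] = new_cost
            heapq.heappush(frontier, (new_cost, nxt))
    return best, pruned


best, pruned = cheapest_costs("Arad", ROADS)
print(best)
print(pruned)
```

The 297 km route to Sibiu ends up in `pruned` because the 140 km route is already known.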

What is a queue?

A FIFO (first-in, first-out) data structure, which pops the oldest element off the queue.

What is a stack?

A LIFO (last-in, first-out) data structure, which pops the newest element off the stack.
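In Python, `collections.deque` gives an efficient queue (`popleft()` runs in constant time, unlike `list.pop(0)`), and a plain list works as a stack.

```python
from collections import deque

queue = deque()
for item in ["first", "second", "third"]:
    queue.append(item)          # enqueue at the back
oldest = queue.popleft()        # FIFO: removes "first"

stack = []
for item in ["first", "second", "third"]:
    stack.append(item)          # push onto the top
newest = stack.pop()            # LIFO: removes "third"

print(oldest, newest)           # first third
```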

How do we measure problem-solving performance of an algorithm?

By evaluating:

Completeness: Is the algorithm guaranteed to find a solution when there is one?

Optimality: Does the strategy find the optimal solution?

Time complexity: How long does it take to find a solution?

Space complexity: How much memory is needed to perform the search?
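Time and space costs can also be estimated empirically by instrumenting a search. This sketch counts how many nodes breadth-first search goal-tests (a proxy for time) and the largest number of nodes held in its frontier (a proxy for space) on a hypothetical infinite binary tree; it is a rough measurement, not a formal complexity analysis.

```python
from collections import deque


def bfs_with_stats(start, is_goal, successors):
    """Breadth-first search that also records simple cost measures."""
    frontier = deque([start])
    stats = {"nodes_tested": 0, "max_frontier": 1}
    while frontier:
        state = frontier.popleft()
        stats["nodes_tested"] += 1                       # proxy for time
        if is_goal(state):
            return state, stats
        frontier.extend(successors(state))
        stats["max_frontier"] = max(stats["max_frontier"], len(frontier))  # proxy for space
    return None, stats


# Hypothetical state space: an infinite binary tree where node n has children 2n+1 and 2n+2.
found, stats = bfs_with_stats(0, lambda n: n == 14, lambda n: [2 * n + 1, 2 * n + 2])
print(found, stats)
```

With branching factor 2 and the goal as the last node at depth 3, the search tests 15 nodes and its frontier peaks at 15 entries; both grow exponentially with the depth of the goal.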

What is a single-agent environment?

Only one agent interacts with the environment. There are no other agents to compete with or collaborate with.

What is a multi-agent environment?

Multiple agents interact with each other. These agents can either cooperate or compete, making the environment more complex.

What is a discrete environment?

The number of possible states and actions is finite (or at least countable). The agent makes decisions in clear, distinct steps.

What is a continuous environment?

States and actions range over continuous values, so there are infinitely many of them. The agent must handle a range of values and movements, which can vary smoothly.

What is a deterministic environment?

The outcome of every action is certain. The agent can predict the exact result of any action.

What is a stochastic environment?

The outcome of actions is uncertain and can vary. This randomness means the agent cannot predict with full certainty what will happen after taking an action.
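A small sketch contrasting the two kinds of transition model on a hypothetical one-dimensional track: the deterministic version always moves the agent, while the stochastic version fails with an assumed slip probability of 0.2, leaving the agent where it was. The function names and the `slip` parameter are illustrative.

```python
import random


def deterministic_result(position, action):
    """Deterministic: the same action in the same state always gives the same next state."""
    return position + (1 if action == "right" else -1)


def stochastic_result(position, action, rng, slip=0.2):
    """Stochastic: with probability `slip` the move fails and the agent stays put."""
    if rng.random() < slip:
        return position
    return deterministic_result(position, action)


rng = random.Random(42)
outcomes = [stochastic_result(0, "right", rng) for _ in range(1000)]
print(deterministic_result(0, "right"), set(outcomes), outcomes.count(1))
```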

What is an episodic environment?

The agent’s actions are divided into separate, self-contained episodes. Each episode is independent of the others, meaning the agent’s actions in one episode do not affect future episodes.

What is a sequential environment?

The agent’s actions influence future decisions. The current state of the environment depends on previous actions, requiring the agent to think ahead.

What is a fully observable environment?

The agent has complete and accurate knowledge of the environment’s state at any given time. It can make decisions without needing to predict or infer hidden information.

What is a partially observable environment?

The agent only has access to partial information about the environment. Some aspects of the environment may be hidden or unknown, forcing the agent to make decisions with uncertainty.

What is a static environment?

The state of the environment remains unchanged unless the agent takes an action. The environment is predictable because it does not evolve on its own.

What is a dynamic environment?

The state of the environment can change independently of the agent’s actions. This requires the agent to adapt to changes beyond its control.