PSYC2050

Final exam

habituation

A decrease in responding to a stimulus after repeated exposure ("getting used to it")

what is Watson's Methodological Behaviourism

Measure behaviour to infer learning

Limited to observable effects

what is Skinner's Radical Behaviourism

Measure behaviour to infer learning

Limited to observable effects

Complex processes can be broken into small behavioural stimulus and response units

what is US: unconditioned stimulus

A stimulus that naturally and automatically triggers a response without prior learning.

UR: unconditioned response

The natural reaction to an unconditioned stimulus that occurs without prior conditioning.

CS: conditioned stimulus

A previously neutral stimulus that comes to trigger a response after being paired with the US.

CR: conditioned response

The learned response to the CS.

INNATE vs learned (conditioned or unconditioned)

unconditioned vs conditioned

Phases of a typical conditioning experiment

habituation

acquisition

extinction

what happens during Habituation

CS presented alone

what happens during Acquisition

Present CS + US

what happens during Extinction

CS presented alone

Learning processes during extinction

Spontaneous recovery + Renewal effect + Reinstatement

Spontaneous recovery VS Renewal effect VS Reinstatement

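Spontaneous recovery: the CS is reintroduced after a break and the CR reappears. VS Renewal effect: extinction is context specific, so the CR returns in a different context. VS Reinstatement: the US is presented alone after extinction and the CR returns.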

Factors influencing acquisition curve

Intensity of US (more intense, more rapid learning)

Order and timing (CS coming before US is better)

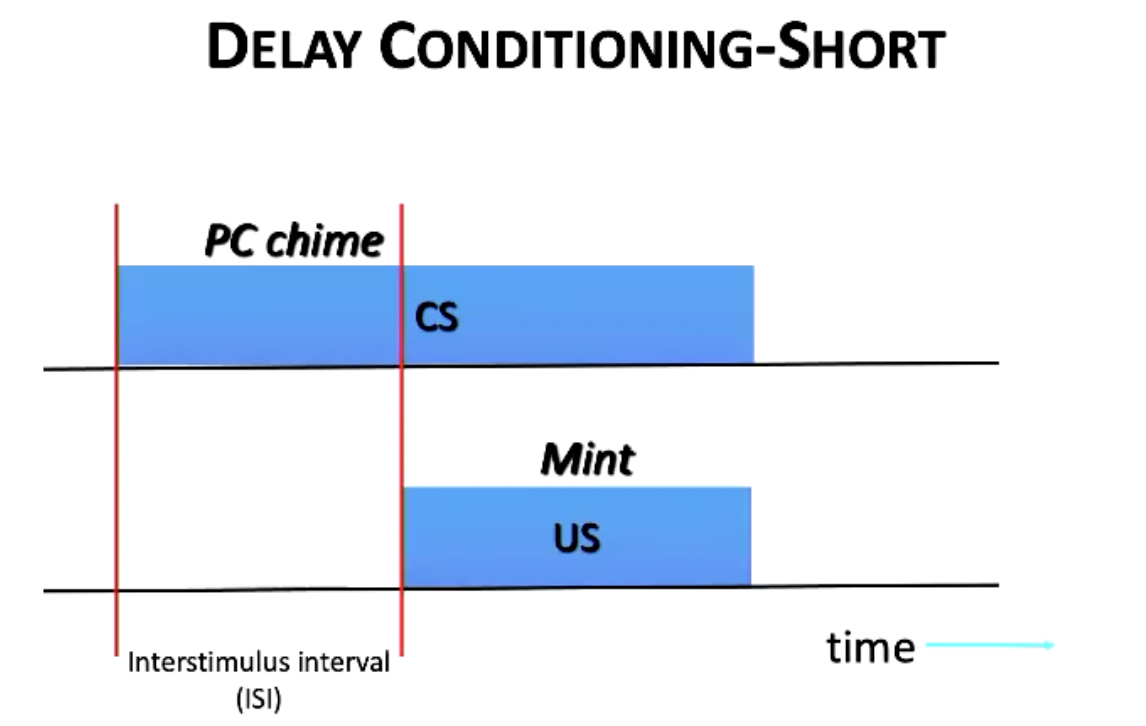

describe delay conditioning short

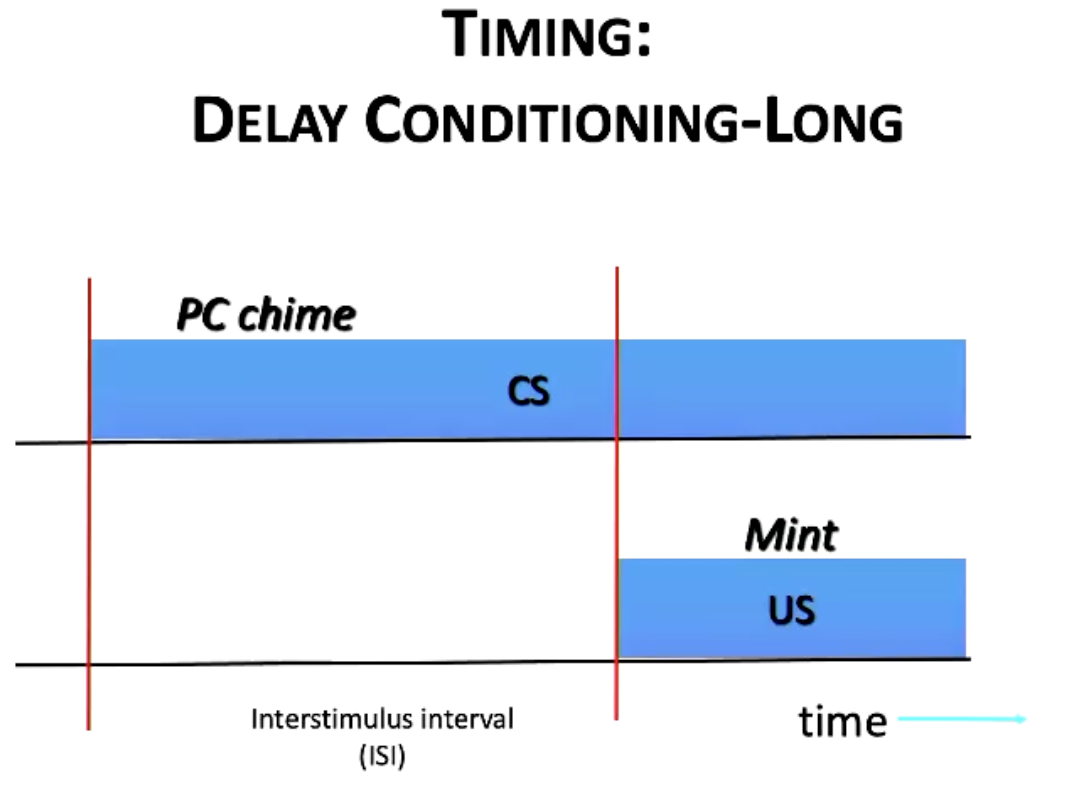

describe delay conditioning long

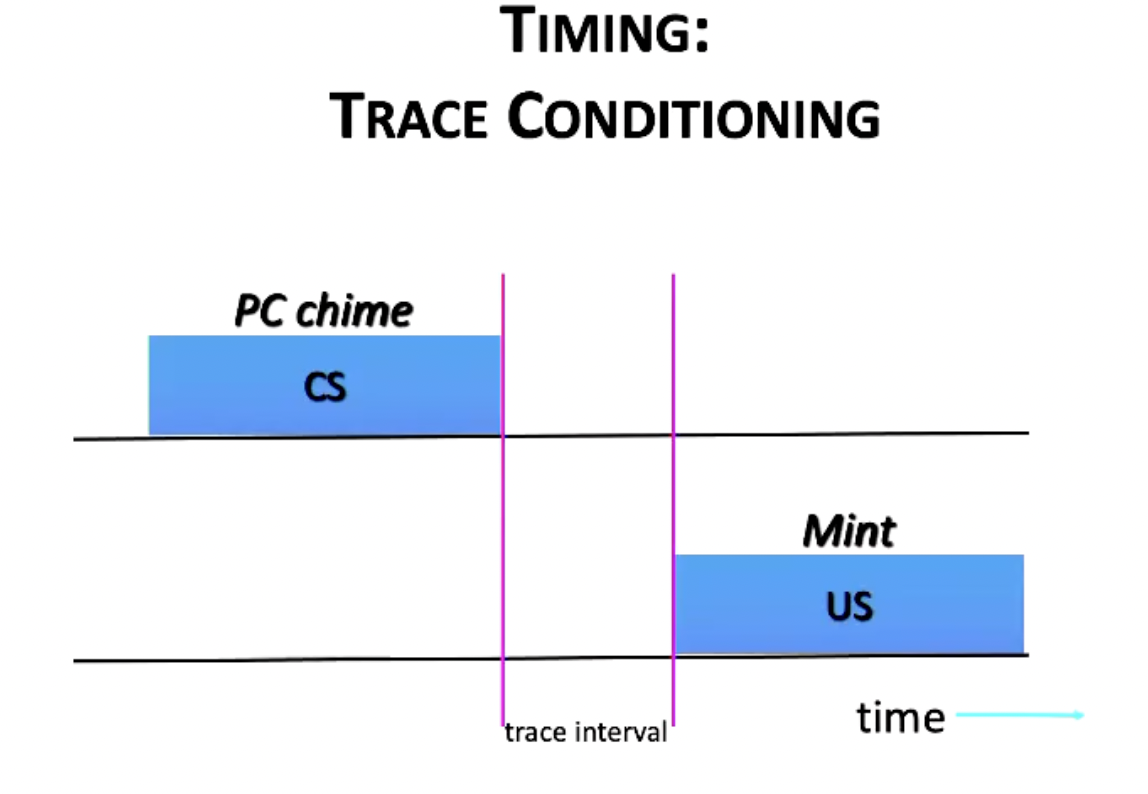

describe trace conditioning

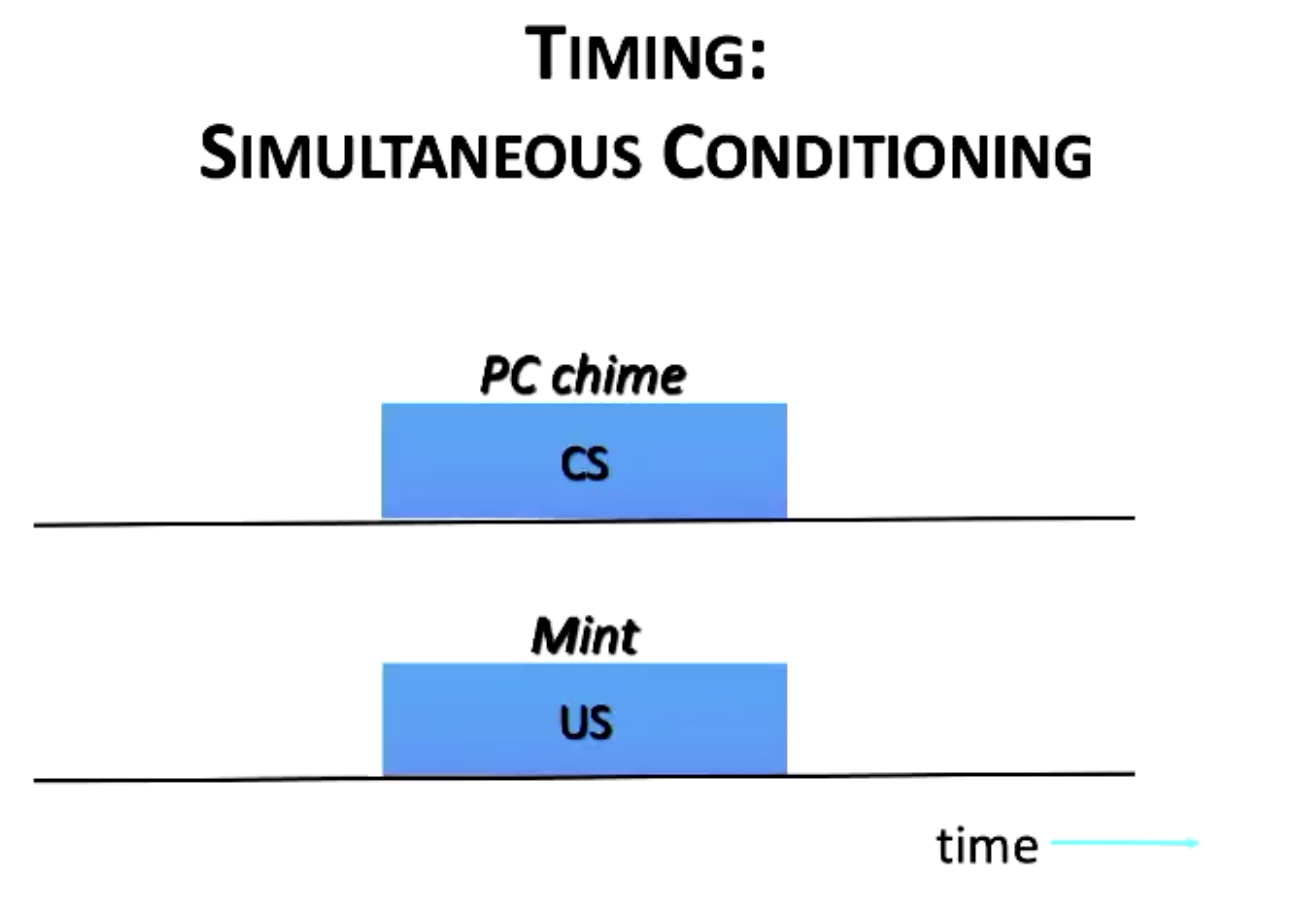

describe simultaneous conditioning

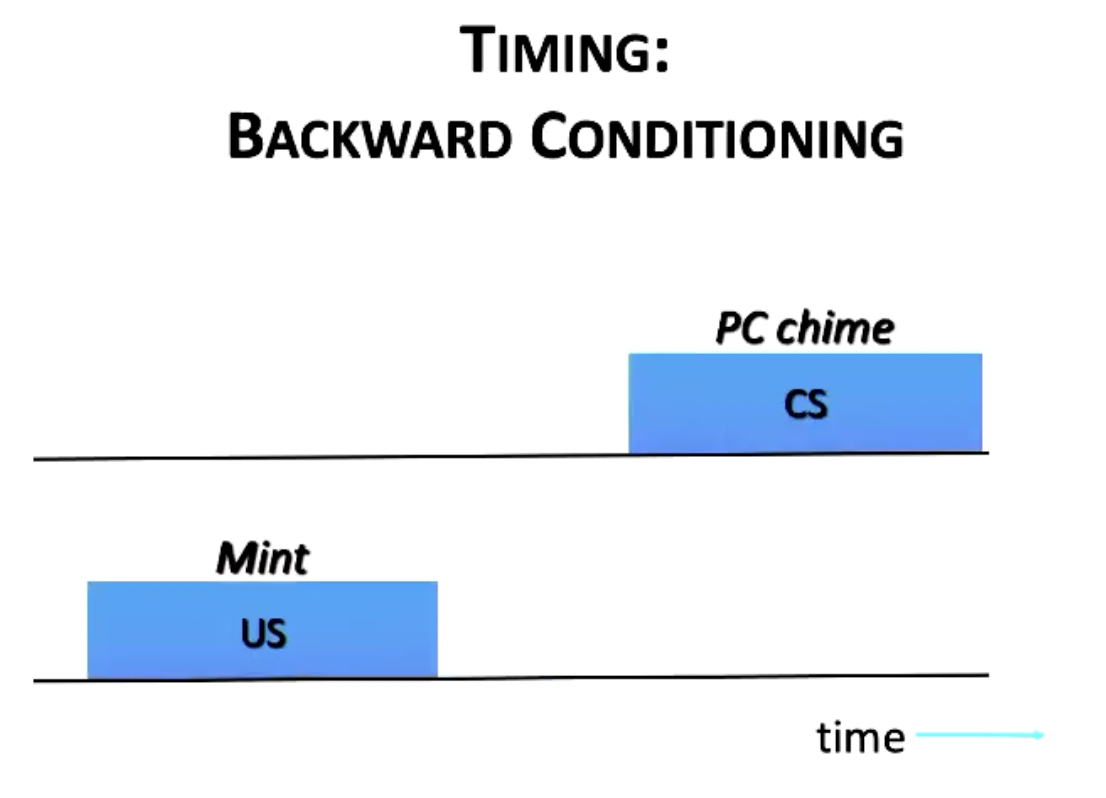

describe backward conditioning

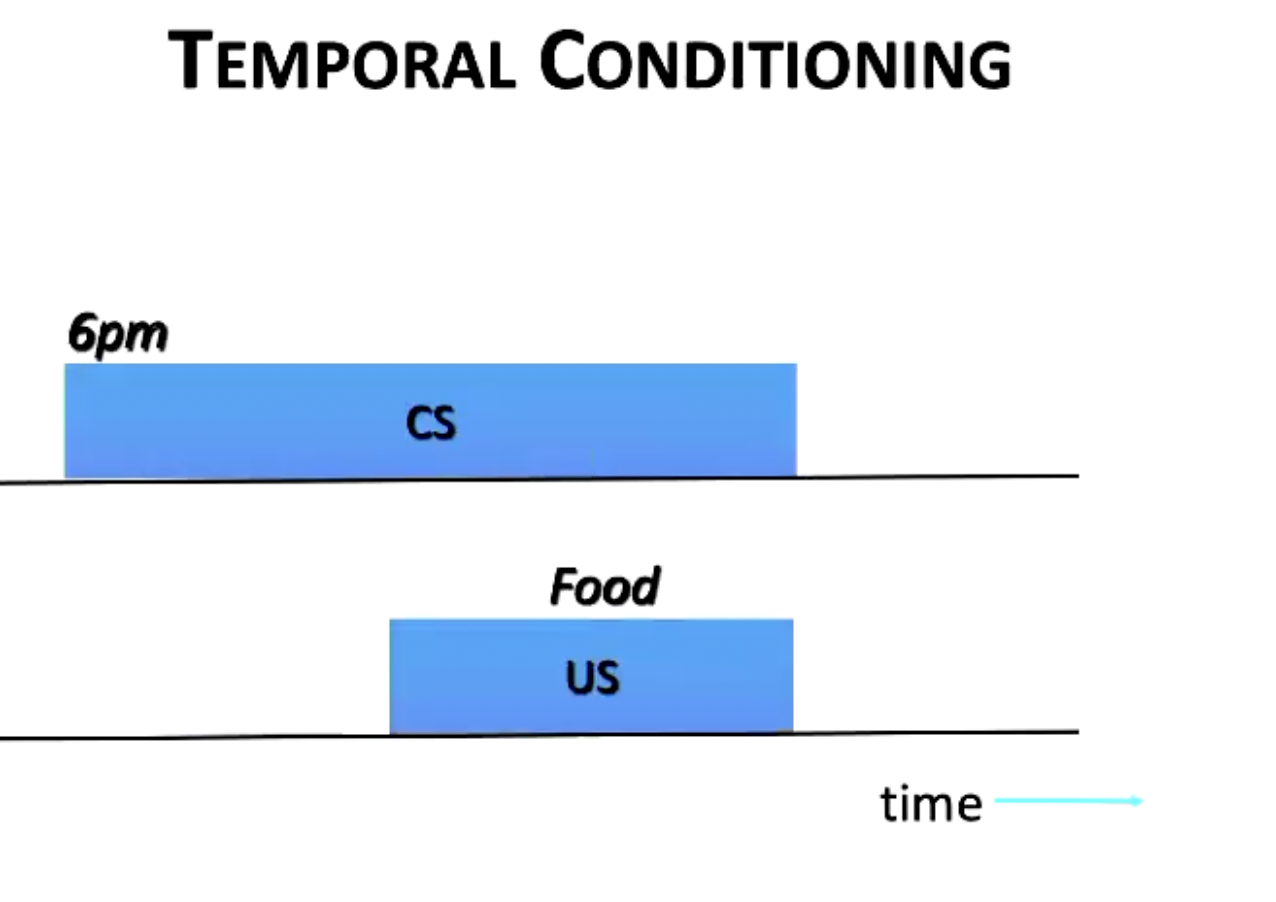

describe temporal conditioning

Excitatory

CS predicts occurrence of US

example of excitatory

E.g. if 'A' is a bell: A-US, A-US, A-US -> A alone leads to CR

Inhibitory

CS predicts absence of US or different US

example of Inhibitory

E.g. if 'B' is a light that signals no US: A-US, A-US, AB, A-US, AB

Animal learns that B predicts absence of US, so no CR

How do we know if an animal has learned something about an inhibitory stimulus?

summation and retardation tests

Retardation test (what takes place, how to test)

Inhibitory conditioning takes place

A-US, A-US, AB, A-US, AB

B becomes inhibitor 'I'

To test: train 'I' and a neutral stimulus 'N' as excitors

I-US, I-US, I-US

N-US, N-US, N-US

Slower learning to the inhibitor: I < N

Learning about I is slower because it was originally an inhibitory stimulus

summation test (what takes place, how to test)

Inhibitory conditioning takes place

A-US, A-US, AB, A-US, AB

To test:

New excitatory CS alone: N

New excitatory CS + inhibitor: N + I

CR is faster (stronger) for N alone than for N + I, because the inhibitor reduces responding

retardation vs summation

Retardation test

What it is: A test to see if a stimulus takes longer to become excitatory.

How it works: After a stimulus has been trained as a conditioned inhibitor (meaning it signals the absence of an outcome), it is then paired with an outcome to become a conditioned excitor. If it functions as a true inhibitor, it will take more trials for the organism to learn the excitatory association compared to a novel stimulus.

Purpose: To determine if the prior inhibitory training has retarded the acquisition of an excitatory response.

VS

Summation test: the inhibitor is presented WITH a new excitatory stimulus to see whether it reduces the CR.
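A minimal sketch of both tests, borrowing the Rescorla-Wagner update rule that appears later in this set. The learning rate `alpha`, the trial counts, and the helper name `train` are all assumptions chosen for illustration, not values from the course. It trains A-US and AB (no US) trials, then checks that B behaves like an inhibitor on a summation test and a retardation test.

```python
def train(strengths, trials, alpha=0.2):
    """Rescorla-Wagner update, in place. Each trial is (cues, us_present)."""
    for cues, us_present in trials:
        target = 1.0 if us_present else 0.0
        # Prediction error: actual US minus the summed prediction of all cues.
        error = target - sum(strengths.get(c, 0.0) for c in cues)
        for c in cues:
            strengths[c] = strengths.get(c, 0.0) + alpha * error
    return strengths


# Inhibitory conditioning: A-US trials mixed with AB (no US) trials.
v = train({}, [(("A",), True), (("A", "B"), False)] * 50)

# Summation test: train a new excitor N, then compare N alone with N + B.
train(v, [(("N",), True)] * 30)
n_alone = v["N"]
n_plus_inhibitor = v["N"] + v["B"]

# Retardation test: pair the inhibitor B and a fresh neutral cue with the US.
inhibitor = train(dict(v), [(("B",), True)] * 5)["B"]
neutral = train({}, [(("N",), True)] * 5)["N"]

print(v["B"] < 0, n_plus_inhibitor < n_alone, inhibitor < neutral)
```

Under these assumptions B ends up with negative associative strength, so it lowers the prediction when combined with N (summation) and needs more trials than a fresh cue to become excitatory (retardation).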

Equipotentiality

any two stimuli can be paired together

Contiguity

stimuli that occur close together in time become associated; the more often two stimuli are paired, the stronger the association

Contingency

the CS must reliably predict the US; conditioning changes from trial to trial in a regular way as that prediction is learned

Blocking

when a neutral stimulus and an excitatory stimulus are paired together with the US

little or no learning about the neutral stimulus with the US

superconditioning

when a neutral stimulus and an inhibitory stimulus are paired together with the US

strong association of the neutral stimulus with the US

example of blocking

E.g. rats in either a 'blocking' or a 'control' group

Control: light + noise -> shock, repeat till CR

Test with light alone -> avoidance

Blocking: noise -> shock, repeat till CR, THEN light + noise -> shock

Test with light alone -> no avoidance

Blocking effect: prior learning about one stimulus blocks learning about a new stimulus

example of superconditioning

Noise -> no shock, so safe (inhibitor)

Noise + light -> shock

Rats showed stronger conditioning to the light than the control group

CS pre-exposure (latent inhibition): does it pass the retardation and summation tests?

The longer you are presented with the CS before the learning protocol, the less likely you are to learn about it

Context specific

Passes retardation test

Does not pass summation test

testing Generalisation

Test:

CS1 (e.g. tone)- US

Test different groups with CS1, CS2, CS3 … (e.g. tones with different frequencies)

-> generalisation

E.G. Rabbit eye blink response

US: mild electric shock

UR: eye blink

CS+: 1200 Hz tone

-> Tone-shock; Tone-shock; Tone-shock

-> response

-> playing other frequencies (e.g. 400 Hz, 800 Hz) -> a CR still occurs, but it is weaker than at 1200 Hz

*other similar stimuli may produce CR

More similar to CS, more likely to elicit CR

* as learning continues, organism learns the best CS associated with US (discriminate)

Garcia effect (preparedness)

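Some associations are learnt faster than others

US sickness: taste-sickness is learnt faster than noise + light-sickness

US shock: noise + light-shock is learnt faster than taste-shock

Because taste naturally goes with sickness, and noise + light naturally go with external pain (like thunder and lightning), animals are biologically prepared to learn these pairings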

Rescorla-Wagner model

Explains how an organism learns to predict the US

Expected VS actual strength of US

Big difference-> surprise

Expectation is based on prior experience with US

Strength of US is fixed
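A minimal sketch of the idea in code, assuming the standard form of the update where each cue present on a trial changes by `alpha * (lam - total_prediction)`. The names `rescorla_wagner`, `alpha`, and `lam_max`, plus the trial counts, are illustrative assumptions. "Surprise" is the difference between the actual US strength (fixed at `lam_max`) and the summed expectation from all cues present.

```python
def rescorla_wagner(trials, alpha=0.3, lam_max=1.0):
    """Return associative strengths after a list of (cues, us_present) trials."""
    strengths = {}
    for cues, us_present in trials:
        lam = lam_max if us_present else 0.0           # actual strength of the US
        expected = sum(strengths.get(c, 0.0) for c in cues)
        surprise = lam - expected                      # big difference -> big change
        for c in cues:
            strengths[c] = strengths.get(c, 0.0) + alpha * surprise
    return strengths


# Acquisition: noise -> shock, repeated.
acq = rescorla_wagner([({"noise"}, True)] * 20)

# Blocking group: noise -> shock first, then noise + light -> shock.
blocking = rescorla_wagner(
    [({"noise"}, True)] * 20 + [({"noise", "light"}, True)] * 20
)

# Control group: noise + light -> shock from the start.
control = rescorla_wagner([({"noise", "light"}, True)] * 20)

print(round(acq["noise"], 3), round(blocking["light"], 3), round(control["light"], 3))
```

In the blocking group the noise already predicts the shock, so there is almost no surprise left for the light to absorb. In the control group the two cues share the available strength.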

Describe a new fear conditioning experiment and use it to illustrate the renewal effect

CS: whistle

US: angry dog

UR: flinching

CR: flinching

Renewal effect: the CR is extinguished in one context, but in another context it comes back

E.g. present the whistle alone (no angry dog) in context A till extinction -> test in context B, CR comes back

Classical conditioning vs Operant conditioning

| Classical conditioning | Operant conditioning |
|---|---|
| Ivan Pavlov | B.F. Skinner |
| Learning via association | Learning via reinforcement |
| Relies on reflexive associations between stimuli | Relies on consequences of past actions influencing behaviour |
| Involuntary responses | Voluntary behaviours |

Chaining (teaching new behaviour)

To build up a new behaviour, it is best to start with the last behaviour in the chain and work backwards (learning backwards)

Positive reinforcement

adds something to increase behaviour (e.g. star for good behaviour)

Negative reinforcement

takes something away to increase behaviour (e.g. removing discomfort)

Positive punishment

adds something to decrease behaviour (e.g. getting told off)

Negative punishment

takes something away to decrease behaviour (e.g. losing licence)

Order from strongest to weakest rate of learning: VI, FR, VR, FI

Strongest rate of learning: VR, FR, VI, FI

Schedules of reinforcements

Continuous (CRF)

Every response is reinforced; more effective for initial learning

Partial, Intermittent (ratio- instances of behaviour & interval- time of behaviour; fixed & variable)

Fixed ratio: every nth action is reinforced (e.g. every 10th visit get free coffee)

Variable ratio: on average, every nth action (e.g. gambling 'wins' after placing bets)

Most resistant to extinction

Fixed interval: first behaviour after n seconds (e.g. pocket money every week for cleaning room)

Variable interval: on average, first behaviour after n seconds (e.g. checking messages)

Partial, Intermittent (Schedules of reinforcements)

ratio- instances of behaviour & interval- time of behaviour; fixed & variable

Fixed ratio

every nth action is reinforced (e.g. every 10th visit get free coffee)

Variable ratio

on average, every nth action (e.g. gambling 'wins' after placing bets)

Most resistant to extinction

Fixed interval

first behaviour after n seconds (e.g. pocket money every week for cleaning room)

Variable interval

on average, first behaviour after n seconds (e.g. checking messages)
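The four partial schedules can be sketched as a small simulation. Everything here is a hypothetical illustration: the function names `make_schedule` and `count_rewards`, the way variable requirements are drawn (uniformly around a mean of `n`), and the response patterns are assumptions, not part of the course material.

```python
import random


def make_schedule(kind, n, rng):
    """Return respond(t): call it once per response at time t (seconds).

    kind is "FR", "VR", "FI" or "VI"; n is the ratio or interval size.
    """
    ratio = kind in ("FR", "VR")
    variable = kind in ("VR", "VI")

    def draw_requirement():
        if not variable:
            return n
        # Variable schedules: the requirement changes but averages n.
        return rng.randint(1, 2 * n - 1) if ratio else rng.uniform(0, 2 * n)

    state = {"responses": 0, "last_reward": 0.0, "need": draw_requirement()}

    def respond(t):
        state["responses"] += 1
        # Ratio schedules count responses; interval schedules count elapsed time.
        progress = state["responses"] if ratio else t - state["last_reward"]
        if progress >= state["need"]:
            state.update(responses=0, last_reward=t, need=draw_requirement())
            return True   # this response is reinforced
        return False

    return respond


def count_rewards(kind, n, response_times, seed=0):
    respond = make_schedule(kind, n, random.Random(seed))
    return sum(respond(t) for t in response_times)


fast = range(1, 101)      # one response every second for 100 s
slow = range(5, 101, 5)   # one response every 5 seconds for 100 s
for kind in ("FR", "VR", "FI", "VI"):
    print(kind, count_rewards(kind, 10, fast), count_rewards(kind, 10, slow))
```

With these settings the fixed-ratio schedule pays 10 rewards to the fast responder and 2 to the slow one, while the fixed-interval schedule pays 10 to both. That is one way to see why ratio schedules tend to produce higher response rates than interval schedules.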

Successful punishment:

• understand the various reward variables that affect reinforcement

Drive: Reinforcement depends how much the subject wants it (motivation)

Magnitude/size: size matters (e.g. amount of food given)

Delay: delayed reinforcement reduces learning

Reinforcers work better when:

– Drive/desire is higher

– Reinforcer is larger (but this tapers off)

– Reinforcer is given right away

Three term contingency

1. The discriminative stimulus - Sets the occasion

2. The operant response - The behaviour

3. The outcome (reinforcer/punisher) that follows - The consequence

• understand the mechanism of stimulus control

Shaping (successive approximations)

Gradually develop new behaviour

Reinforce behaviour that leads to desired behaviour

Operant conditioning:

You don't need to experience responses or consequences for learning to occur

Behaviour regulation theory (Premack)

What if stimuli aren't the reinforcers, but behaviours are?

E.g. not the water itself -> drinking water is the reinforcer

Not the TV itself -> watching TV is the reinforcer

Escape learning

emit a response that terminates an aversive consequence (negative reinforcement)

Avoidance learning:

after escape learning has been learnt enough, emit a response that prevents an aversive consequence altogether

Avoidance learning- anxiety example

E.g. fear of snakes

Avoid going on bushwalks where snake could appear

Avoid the aversive feeling of snakes being around

Flooding method

Flooding (e.g. exposure to snakes with no negative consequence)

escape can't take place

Learned helplessness

Effect of unavoidable aversive experience

Functional analysis

What is the problem behaviour?

When does it occur?

Identify reinforcers

Stimuli, behaviour, consequence

Positive parenting program

Using operant conditioning

Using chaining and reinforcement

E.g. sending kids to school

Teach kids behaviour to perform

Set a routine

Reward for desired behaviour

Avoid nagging or hassling

Turn it into a game

Selective attention

Ignoring irrelevant stimuli

More difficult the more similar the two things are

Endogenous/goal-directed (top-down) control

E.g. tuning out of dull convo and tuning into another

Exogenous/stimulus-driven (bottom-up) control

E.g. attention captured by shattered glass

Endogenous VS Exogenous

Endogenous/goal-directed (top-down) control VS Exogenous/stimulus-driven (bottom-up) control

Inattentional blindness

When you focus your attention, you miss other changes in plain sight

Change blindness

Changes are missed because they occur alongside brief visual disruption (e.g. blinks, windshield wipers)

Balint's syndrome simultanagnosia

Bilateral occipital/parietal lobe damage prevents patients from perceiving more than one stimulus at once, unless the stimuli are linked together into a single object.

Describe evidence that attention spreads across objects

Balint's syndrome (simultanagnosia):

Red dots only: participant identifies red

Green dots only: participant identifies green

Red and green dots: participant only identifies red

Red and green dots linked together: participant identifies red and green

Overt

Move eyes to the location of attention

Covert

Eyes stay fixed on one location while attention is directed elsewhere

Late selection

Distractor influences target processing

Something I didn't pay attention to, but I still extract meaning from it

Early selection

Distractor doesn't influence target processing

Only basic physical features of the unattended item (e.g. its colour) are processed, not its meaning

You select the target with something in mind beforehand

Perceptual load

Low load: distractor interference scores are higher (slower responses)

High load: distractor interference scores are lower

Describe attention and the early vs. late selection issue

Whether selection is early or late depends on perceptual load.

Under what condition do you get late selection under the load theory

Conditions of Low perceptual load

High working memory load

Late selection

Much easier to be distracted

Low perceptual load =

VS

High working memory load =

Low perceptual load = late selection

VS

High working memory load = late selection

Low working memory load task

Number (e.g. 7)

Reaction time task

Was the number a 7?

High working memory load task

Numbers (e.g. 197365)

Reaction time task

Was 7 one of the numbers?

Broadbent's filter theory

Structural

Predicts early selection

Attentional limitations

Kahneman

Attention -> resources

Number of concurrent tasks that can be performed depends on their difficulty (resource demands)

Available resources increase under arousal/motivation

Automaticity -> training reduces the resources a task needs

Feature vs conjunction search

| Feature (disjunctive) | Conjunction |
|---|---|
| Parallel | Serial |
| Pop-out (doesn't matter how many distractors) | More distractors, longer search time |
| E.g. red X amongst black Xs | E.g. red X amongst black Xs and red Os |
| Unaffected by search set size | Affected by search set size |
| Pre-attentive | Attentive |
| Efficient | Inefficient |

Target absent, conjunction search - takes longest time
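A rough sketch of the set-size pattern above. It assumes a serial, self-terminating conjunction search (on average half the items are checked when the target is present, all of them when it is absent) and a flat pop-out for feature search. The function name `predicted_rt` and the millisecond values are made-up illustrative parameters, not data.

```python
def predicted_rt(search, set_size, target_present=True,
                 base_ms=400.0, per_item_ms=50.0):
    """Predicted reaction time in ms under a simple serial/parallel account."""
    if search == "feature":
        # Parallel, pre-attentive: the target pops out regardless of set size.
        return base_ms
    if search == "conjunction":
        # Serial: items are checked one by one until the target is found.
        items_checked = (set_size + 1) / 2 if target_present else set_size
        return base_ms + per_item_ms * items_checked
    raise ValueError(f"unknown search type: {search}")


for n in (5, 15, 30):
    print(n,
          predicted_rt("feature", n),
          predicted_rt("conjunction", n, target_present=True),
          predicted_rt("conjunction", n, target_present=False))
```

Under these assumptions feature search stays flat as distractors are added, conjunction search slows with every extra item, and target-absent conjunction trials are slowest because every item has to be checked.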

Feature (disjunctive)

Parallel

Pop-out (doesn't matter how many distractors)

E.g. red X amongst black Xs

Unaffected by search set size

Pre-attentive

Efficient

Conjunction search

Serial

More distractors, longer search time

E.g. red X amongst black Xs and red Os

Affected by search set size

Attentive

Inefficient

Feature integration theory (FIT)

Rapid serial visual presentation (RSVP)

Limitations:

Two target RSVP- attentional blink (AB)

Task: report the 2 letters amongst numbers

What varies is the number of distractors between the two targets (e.g. 3 distractors in between -> lag 3)

People are good at lag 1

An easier-to-identify T1 reduces the AB, as it takes less time to process

AB: caused by rejecting distractors (if you remove the distractors, there is no AB)

Task switching

E.g. T1: letter? T2: colour?

Task set: rules to do a task

Cost: disabling one task set/disengaging from the prior task for another

Moving assembly line: focus on one task per person

The more similar two tasks are, the more difficult it is to switch (and vice versa)

Practice reduces the cost

Switching to an EASIER task is MORE DIFFICULT -> hard to disengage from the hard task

Theories of switch cost

The theories differ in:

Automaticity

The more you practise a task, the fewer resources it consumes

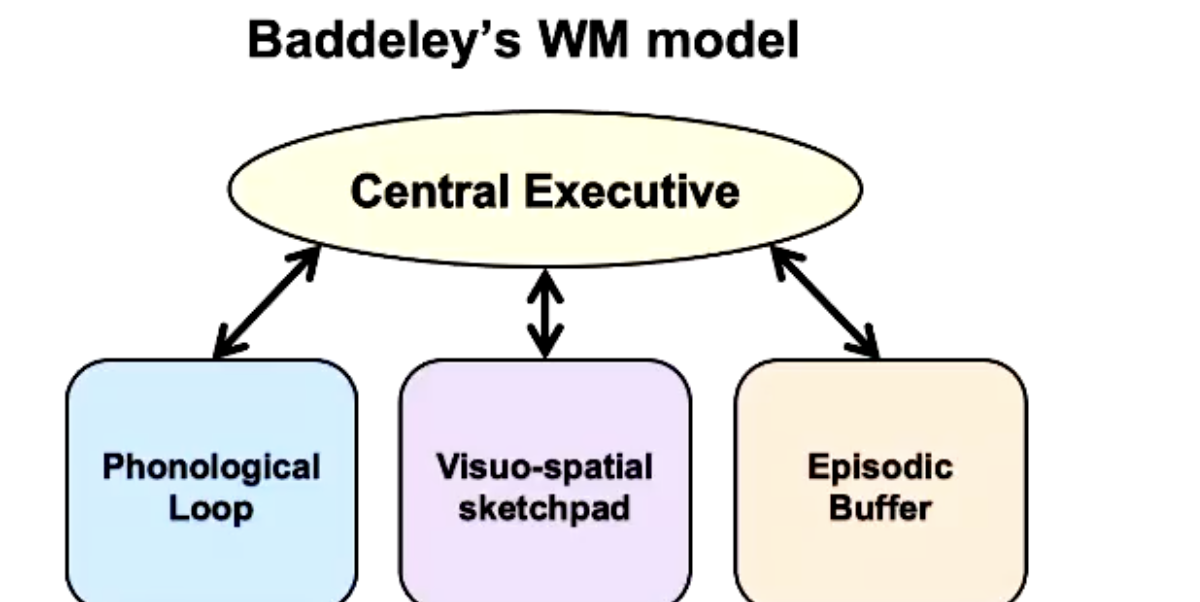

Baddeley's Working memory (short term memory) model

CENTRAL EXECUTIVE:

Attentional controller

Links working memory and long term memory

Switching attention

Mental manipulation of attention

No storage capacity