Psychology (Dr. C) Unit 2 Test

149 Terms

learning

the process of acquiring, through experience, new and relatively enduring information and/or behaviors; it is important because it allows us to adapt to our environment

how long, on average, does it take university students to learn new, desirable habits?

around 66 days (2 months)

behaviorism

the view advocated by the eminent early psychologist John B. Watson that psychology should be an objective science that studies directly observable external behavior without any necessity to consider internal mental processes

john b watson

founder of behaviorism

associative learning

learning that certain events occur together (pavlov's dog)

ivan pavlov

russian medical researcher who spent 20 years studying digestion and won a Nobel Prize. while studying digestion, he discovered that as you chew, you maximize the surface area of food

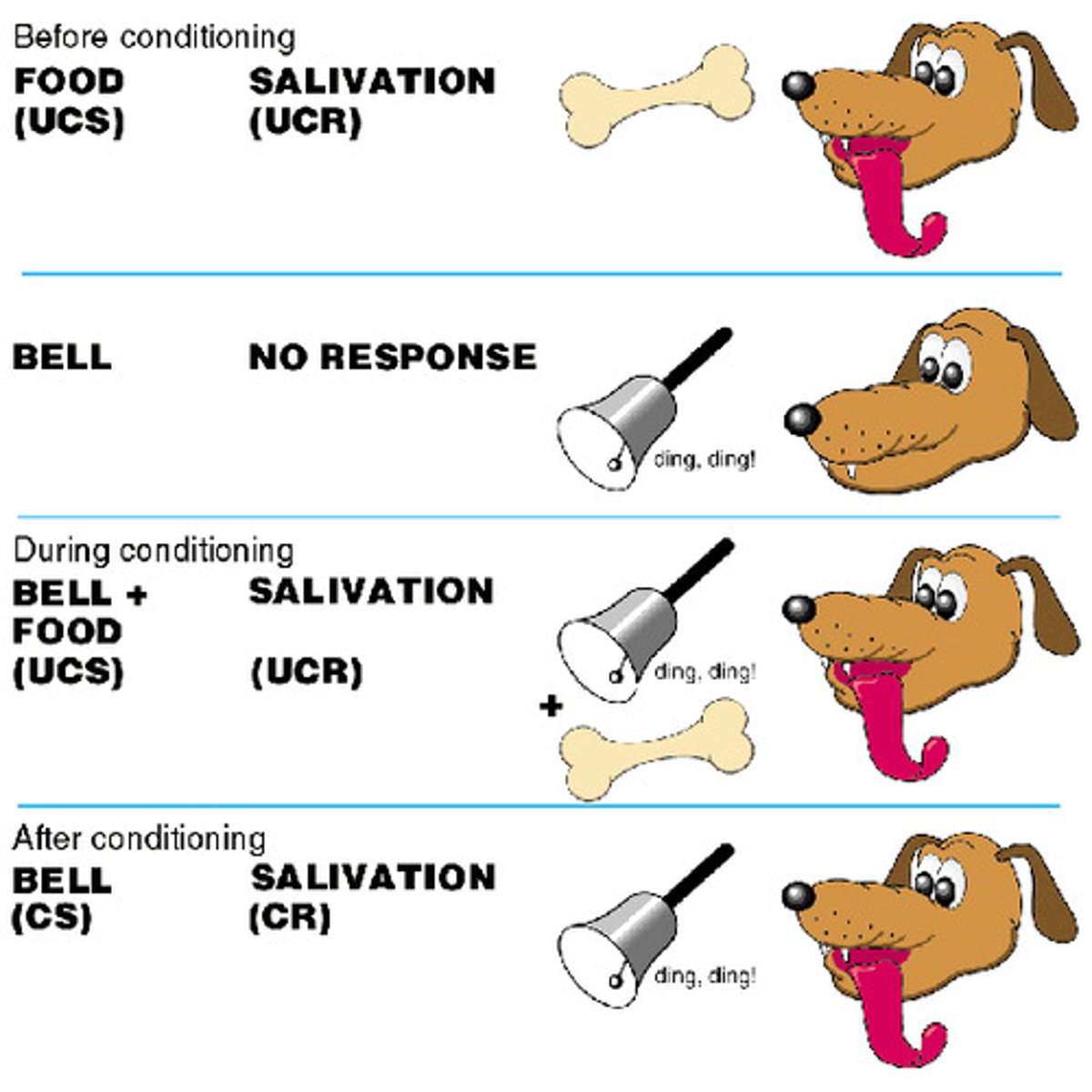

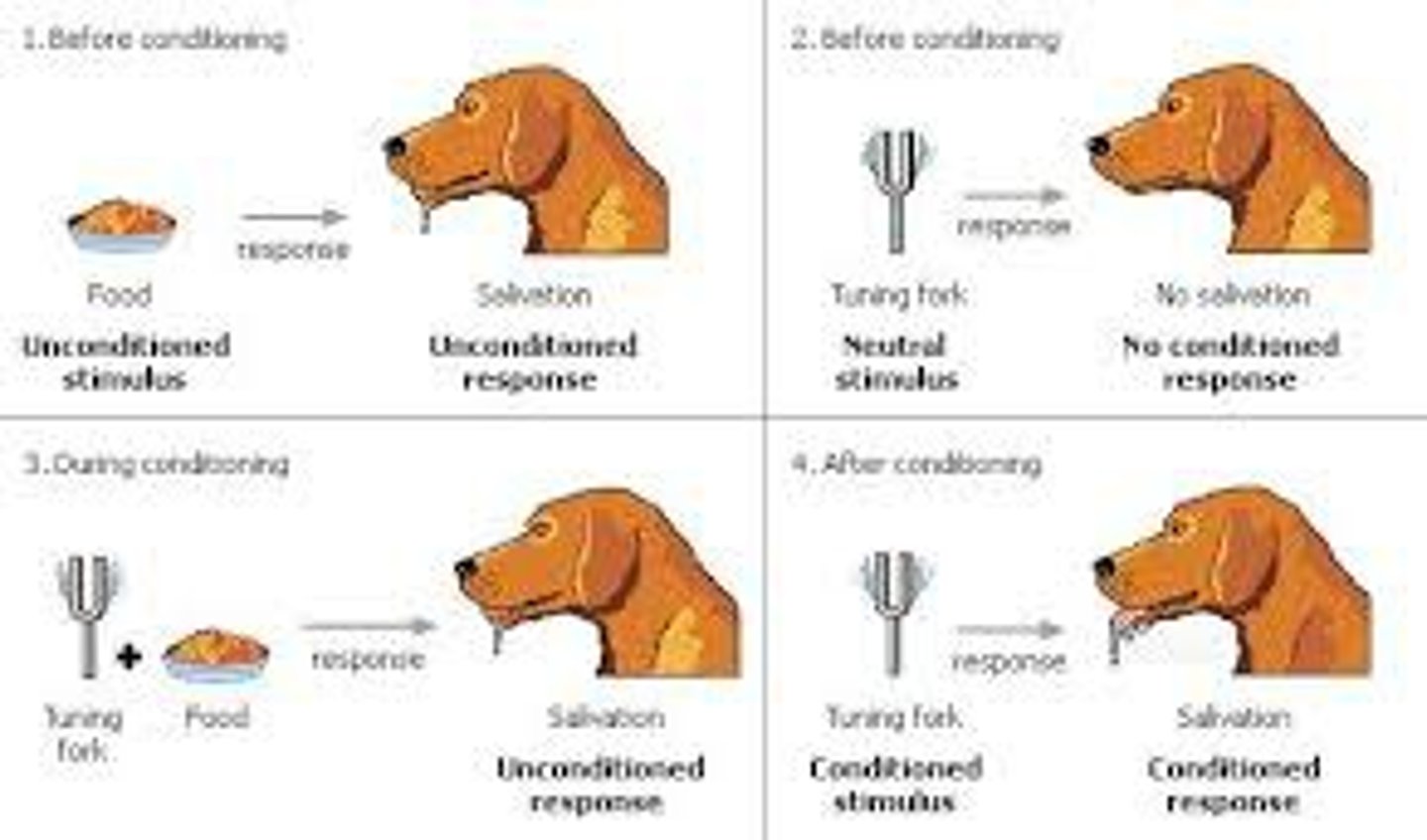

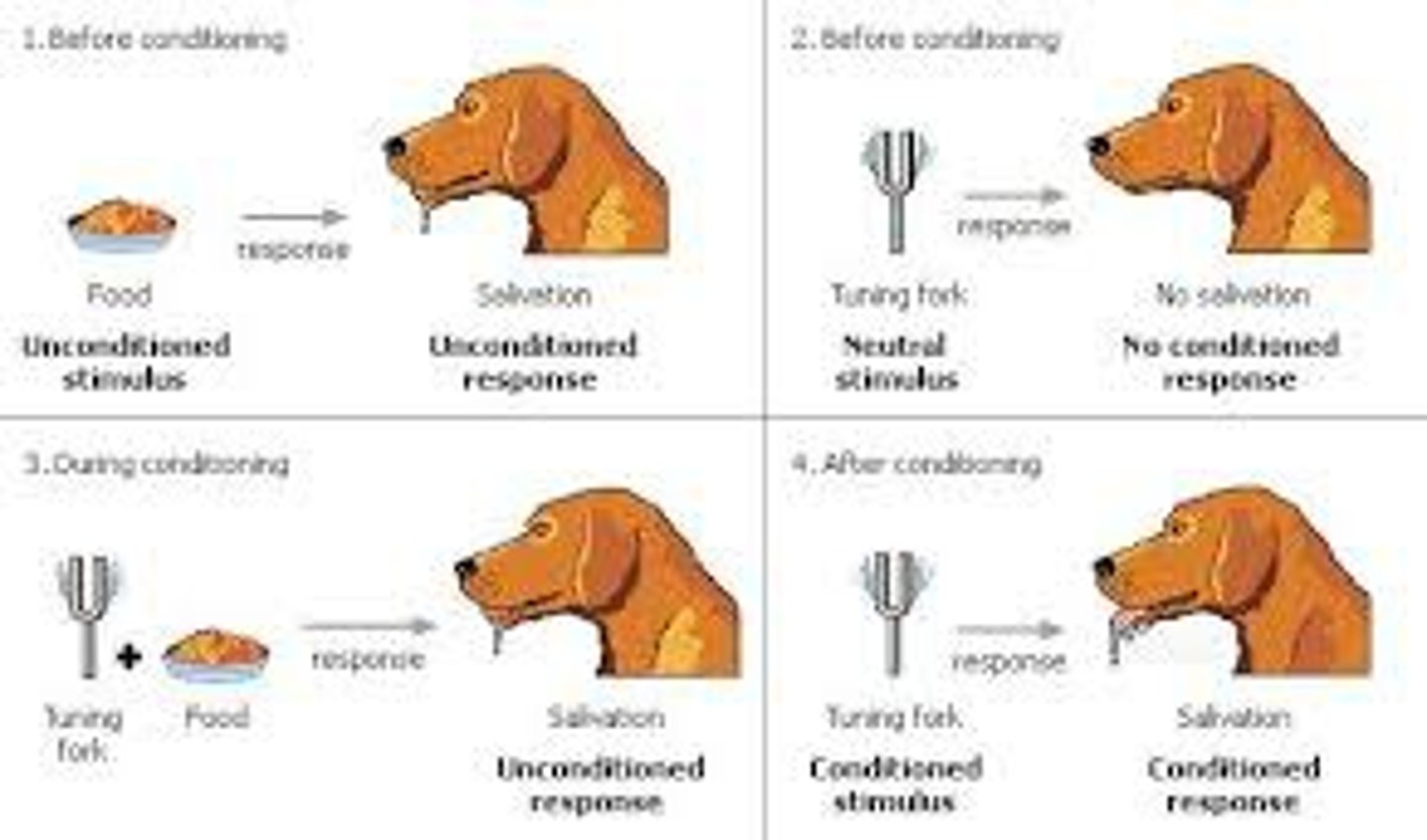

classical conditioning (pavlov)

a kind of learning in which a subject learns to associate one stimulus with another stimulus that is followed by a certain event, such that the subject learns to anticipate that event and respond to the first stimulus as if it were the second stimulus

how did pavlov first come across classical conditioning?

his dogs would salivate before the food was in their mouths: as soon as they saw the food or heard the attendant bringing it

pavlov's early experiment

pavlov would sound a musical tone, then give the dogs powdered meat, causing the animals to drool. after this pairing was repeated several times, the dogs would drool as soon as the musical tone sounded, because they associated the musical tone with the food

unconditioned stimulus

UCS/US; a stimulus that naturally brings about a response you are looking at, without any learning having to take place (food)

unconditioned response

UCR/UR; a naturally occurring response to the unconditioned stimulus, a response that does not require learning in order to take place (dog drooling)

unconditioned

natural, built-in, automatic, not requiring any learning in order to occur

neutral stimulus

NS; a stimulus that does not initially (naturally) bring about the response you are interested in (musical tone at first)

conditioned stimulus

CS; an originally neutral stimulus that, after being associated with (paired with) an unconditioned stimulus, takes on the ability to bring about the response that originally followed the unconditioned stimulus (musical tone later)

conditioned response

CR; a learned response to a previously neutral stimulus that has now become the conditioned stimulus (drooling in response to the musical tone)

conditioned

not natural, not built-in, not automatic, something that would only happen if learning had occurred

acquisition

the initial learning of a stimulus-response relationship in which a neutral stimulus is linked to an unconditioned stimulus, and the neutral stimulus becomes a conditioned stimulus capable of bringing about a conditioned response that is the same as the unconditioned response

in pavlov's original studies, what was the optimal interval between presenting the neutral or conditioned stimulus, and the unconditioned stimulus?

right away/the interval must be short. you must start with the neutral stimulus and follow it with the unconditioned stimulus

what is the usual result if the interval is too long, or if the conditioned stimulus comes after (not before) the unconditioned stimulus?

conditioning does not work

experiment with japanese quail

researchers took a male quail, put it in a cage, and turned on a red light. shortly after, they put a receptive female in the cage with the male, then removed the female. this process was repeated several times. soon, when the red light came on, the male quail became sexually aroused before the female was placed in the cage

sexual arousal can be conditioned in humans

true; example: breaking up with someone who wore a specific perfume, and thinking about the person whenever you smelled the perfume

extinction

the diminishing of a conditioned response; specifically, in classical conditioning, when an unconditioned stimulus no longer follows the conditioned stimulus, until the conditioned response no longer appears; ex: once the food was not given to the dog after the musical tone, the response of drooling was extinguished

spontaneous recovery

the reappearance of a previously extinguished conditioned response after a pause in which there has been no training; ex: seeing your ex after a long time and calling them by their pet name ("hi cutie!")

generalization

following the establishment of a conditioned response, the tendency for stimuli that are similar to the conditioned stimulus to bring about similar responses (ex: pavlov projecting an ellipse instead of a circle to make the dog drool, Dr. C mistaking a left turn arrow for a green light)

discrimination

the learned ability to tell the difference between the conditioned stimulus that will be followed by the unconditioned stimulus, and other, similar stimuli that will not be followed by the unconditioned stimulus (ex: projecting a circle on the screen and giving the dog food, alternating with projecting an ellipse and not giving food -> the dog stops drooling for the ellipse because it knows it will not receive food)

cognitions (internal thought processes) influence classical conditioning

true; this happens in attempts to condition alcoholics to stop drinking (antabuse is a drug that sensitizes you to alcohol/you get violently ill when you drink. an alcoholic knows that if they take the drug, it is the drug that is making them ill, and not the alcohol)

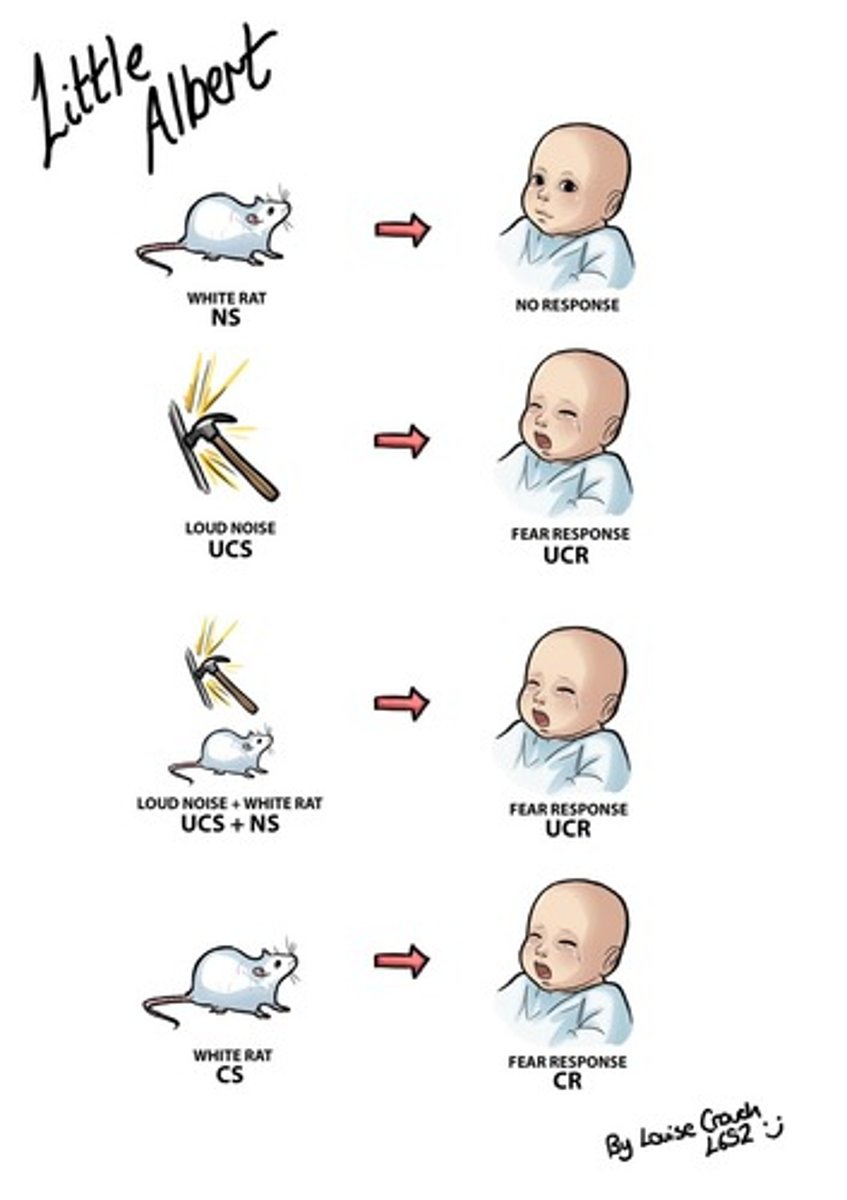

watson and rayner (1920) - little albert

wanted to demonstrate where fears in children came from; they hypothesized that fears were a result of conditioning. in the experiment, they took an orphan (little albert) and put him in a playspace with a white rat. then, they hung a big metal bar behind him. anytime little albert tried to touch the rat, they banged the metal bar, because children are scared of loud noises. after 7 pairings, little albert became terrified of white rats (the rat had become a conditioned stimulus)

watson and rayner demonstrated...

1) fear can be conditioned

2) different but similar stimuli can initiate the fear response, so fear can be generalized (little albert became terrified of white coats, white rabbits, etc.)

what ultimately became of watson and rayner, and what ultimately became of little albert?

watson/rayner: watson had an affair with rayner. this became a scandal and he was fired. because of this, watson could not get a job at any university or college. eventually he married rayner, moved to NYC, worked in advertising, and became an alcoholic. rayner died at 36. both of their children committed suicide

little albert: lived a long and happy life; however, he had a huge fear of dogs. died in 2007.

most of the time, fears produced in real life are a result of classical conditioning and generalization

true (example: the story of Dr. C running over his daughter, and his daughter developing a conditioned fear of being outside or anywhere near the front door; her fear spreading to nearby rooms such as the living room and dining room = generalization)

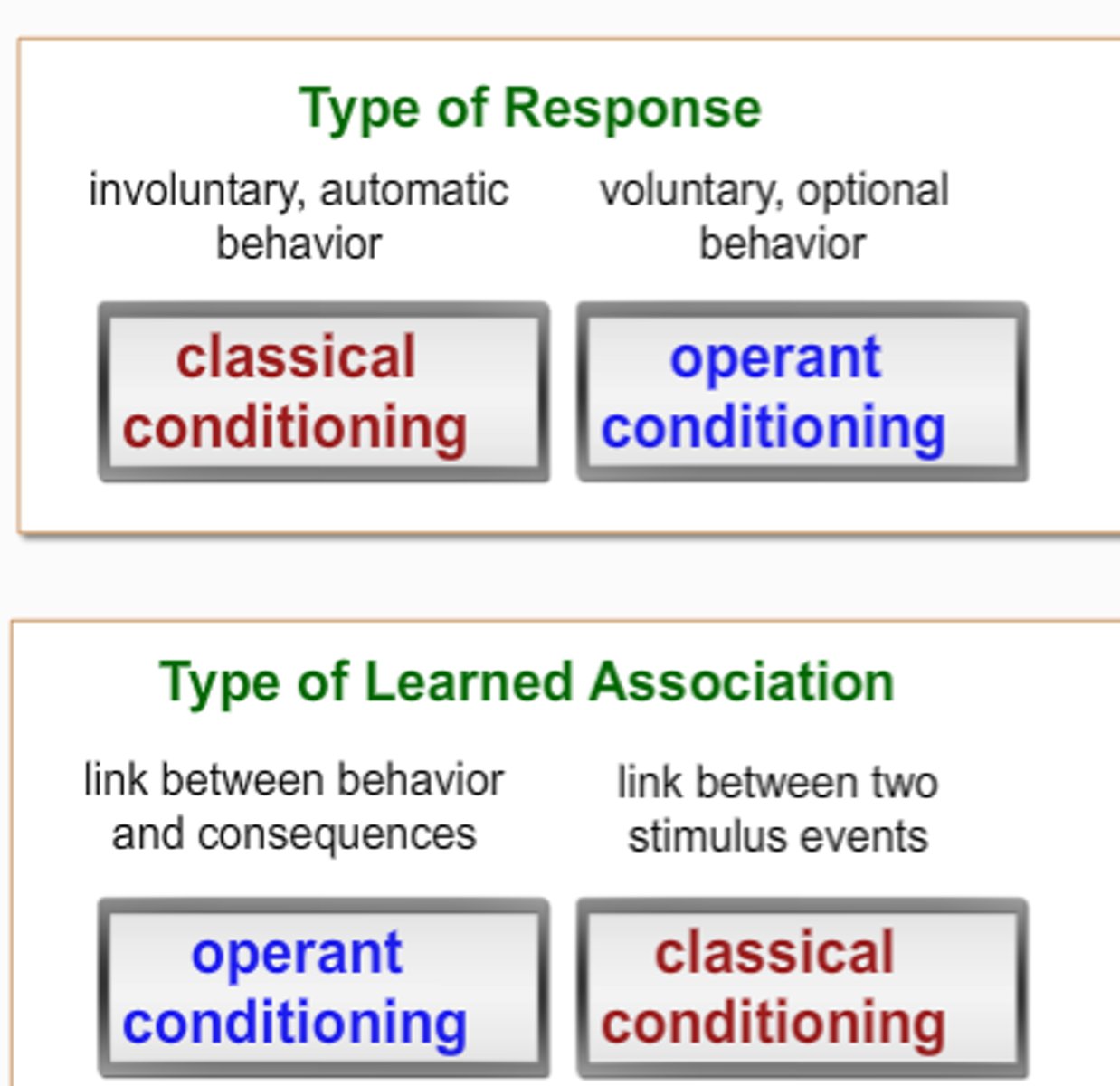

classical conditioning vs. operant conditioning

in classical conditioning, the subject learns to form associations between events (stimuli) it does not control, resulting in behaviors it does not choose; in operant conditioning, the subject learns associations between its own voluntary (operant) behavior and the events (consequences) that result from it

operant conditioning

a type of learning in which voluntary behavior is strengthened if it is followed by reinforcement (some form of reward) and diminished if it is punished or not followed by reinforcement

respondent behavior

behavior that occurs automatically in response to certain stimuli (we observe such behavior in CLASSICAL CONDITIONING)

operant behavior

voluntary behavior that operates on the environment to produce either rewarding or punishing consequences (we observe such behavior in OPERANT CONDITIONING)

B. F. Skinner

pioneered the study of operant conditioning and behavior; 20th-century psychologist who was an english major as an undergraduate; based his earlier work on project pigeon

thorndike's law of effect

behaviors that are followed by favorable consequences become more likely, while behaviors followed by negative consequences become less likely

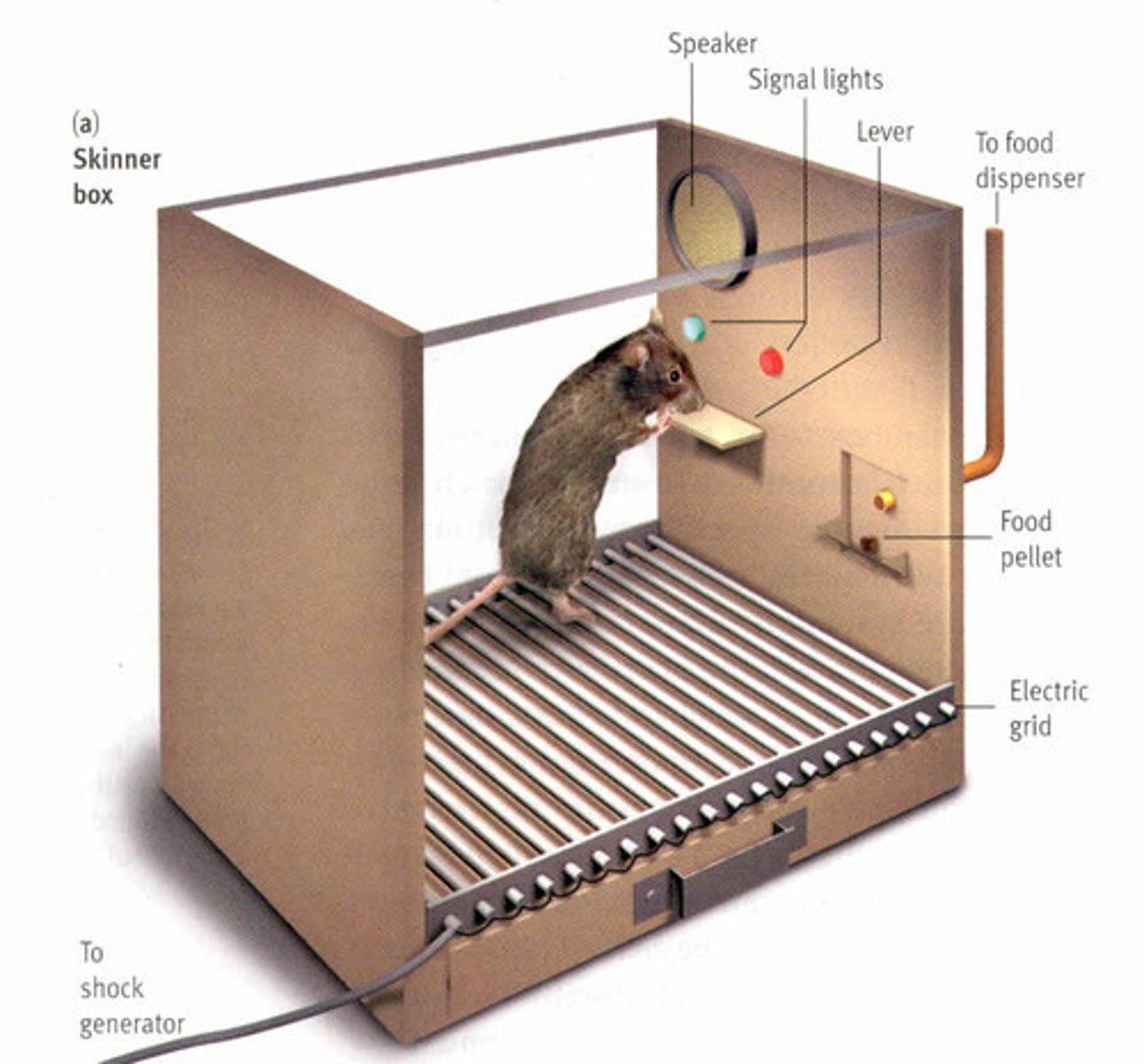

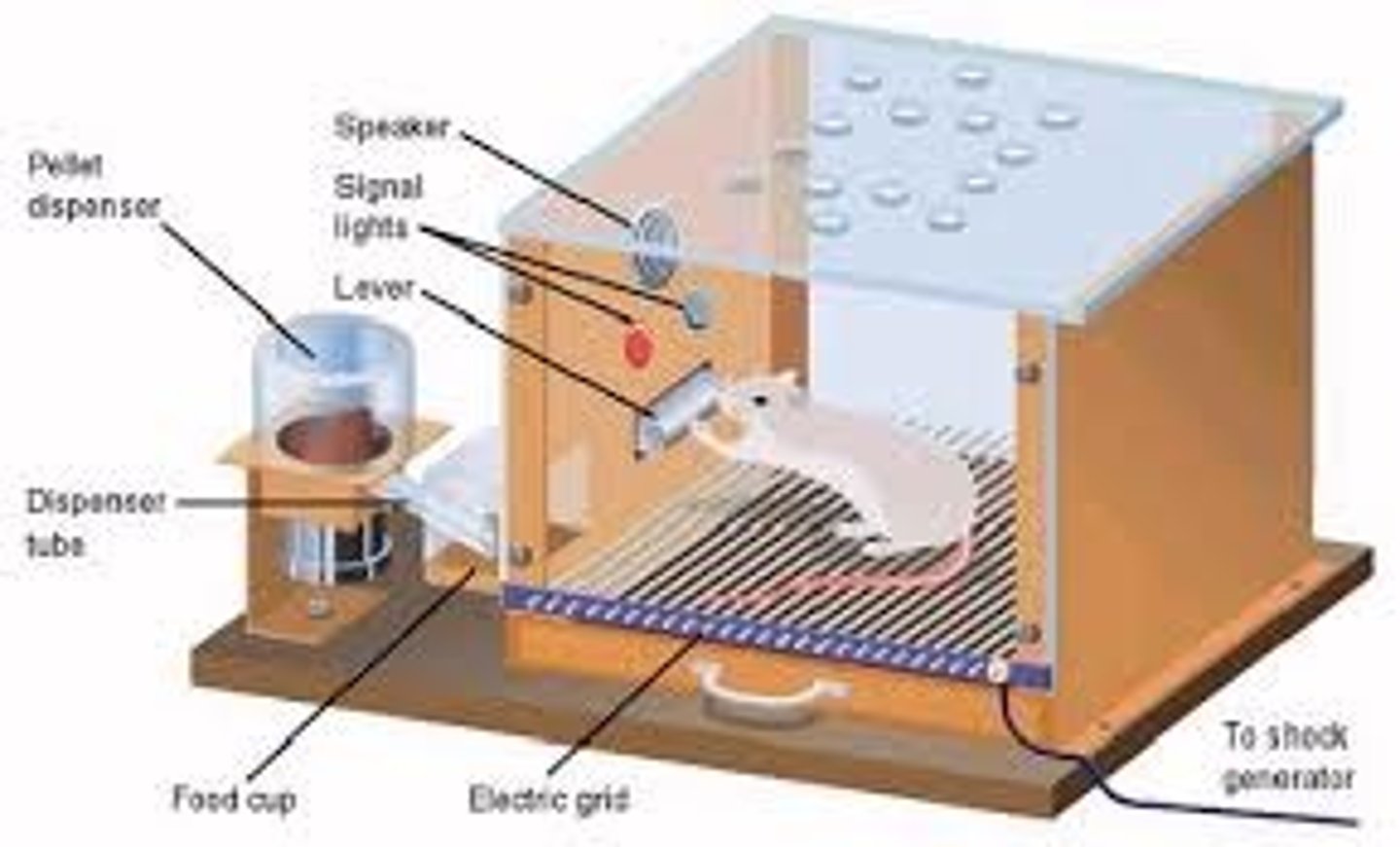

skinner box (operant chamber)

an isolated cage or chamber in which a subject (like a rat or pigeon) can do something (like push a bar or peck an object) in order to get a reward (like food or water) while a mechanical device records and counts the subject's responses

shaping

an operant conditioning procedure in which reinforcers (rewards) guide behavior toward closer and closer approximations of a desired goal

reinforcer

any event that strengthens the behavior it follows; some kind of reward, usually

primary reinforcer

an innately reinforcing stimulus, such as one that satisfies a built-in biological need; a reinforcer that requires no learning in order to be rewarding (ex: if someone is hungry and you give them food as a reward, the food is a primary reinforcer)

secondary reinforcer

a stimulus that gains its reinforcing power through its association with a primary reinforcer; a reinforcer that the subject has to learn to associate with a primary reinforcer (ex: money)

how would you use shaping to get a pigeon to bowl?

the experiment would be set up so that a pigeon is inside a big cage with a bowling ball and miniature bowling pins. the pigeon needs to be hungry, and you need a way to deliver food rewards. in order to get the pigeon to bowl, you would need to reward each step in the right direction and not reward steps in the wrong direction. eventually, the pigeon will accidentally bump or peck at the ball, and you will need to reward it when it pecks the ball in the right direction (towards the pins)

you can use pavlov's classical conditioning to get a pigeon to bowl

false; bowling isn't a built-in, natural response to any stimulus

you can use thorndike's law of effect to get a pigeon to bowl

false; you would need the pigeon to bowl the first time (this is difficult to do). punishing the pigeon for not bowling would be pointless because it doesn't know what bowling is

the point of operant conditioning

if a learning technique is powerful enough to bring about an entirely new behavior (getting a pigeon to bowl), you can apply it to real-life problems

animals on television, in movies, in circuses and shows, in police/ service work, etc. are trained to do what they do through operant conditioning

true; two of skinner's students founded Animal Behavior Enterprises and trained 15,000 animals

positive reinforcement

strengthens a response by adding a positive stimulus after that response; positive reinforcement increases the probability that the subject will repeat the response that preceded it (ex: rewarding students with a dollar for saying the answer Dr. C wants). the behavior does not have to make "sense" to the subject in order for it to occur

negative reinforcement

strengthens a response by removing a negative, aversive, unpleasant stimulus after the response (ex: sounding a terrible noise to a rat in a skinner box. once the rat knows that it can push down a bar to make the noise stop, it pushes down the bar) (real world example: hitting a snooze button)

terminal goal

the target behavior, the last (terminal) response the subject makes in a chain of learned behaviors; once achieved, the conditioning process is finished (terminated). the terminal goal must be defined in objectively measurable terms, so that there is no question whether it has or has not been achieved (example: getting a child to sit in a chair and be quiet)

baseline behavior

the behavior patterns of the subject before training begins. like the terminal goal, the baseline behavior must be objectively measurable, so that it is clear whether or not any progress is being made (example: find the average of the behavior that is happening, e.g. the child runs around the room 2 minutes out of every 3). it is important to observe and record the baseline behavior because you want to know whether you're moving in the right direction.

reinforcing successive approximations to the goal

rewarding (reinforcing) any slight behavioral change that is a step in the right direction until you finally reach the terminal goal; steps in the wrong direction are not reinforced. in a real-world example, you could ask a child what reward they want and then build up to that reward as they approach the terminal goal.

immediate reinforcer/immediate reinforcement/immediate gratification

a reward that comes right after the desired behavior. an example would be to give a dog a treat after they perform a desired behavior

delayed reinforcer/delayed reinforcement/delayed gratification

the reward comes later; this is used with people rather than animals. an example would be going to class to earn a college degree, then using that degree to get a nice job, which eventually leads to the terminal goal of a nice lifestyle

how do humans and animals, and mature persons and immature persons, respond differently to delayed reinforcers?

animals and immature persons do not respond well to delayed reinforcers, while mature persons can keep working toward rewards that come much later

schedules of reinforcement

patterns of when and how often reinforcement will occur

continuous reinforcement

rewarding the subject every time they do the right thing

continuous reinforcement leads to a rapid acquisition of a response

true

continuous reinforcement isn't very vulnerable to extinction and is very practical

false; it is vulnerable to extinction (once the rewards stop, the response fades quickly) and is often not practical

partial reinforcement

you do not reward the subject every time it does what you want; only sometimes

how does partial reinforcement or intermittent reinforcement influence acquisition of a response?

acquisition is slower; however, the response is also slower to extinguish (it is more resistant to extinction)

skinner's experiment demonstrating intermittent reinforcement involving a pigeon

at first, the pigeon got rewarded every time he pecked a button. then, the pigeon got rewarded only some of the time when he pecked the button. at one point, the pigeon pecked the button 150,000 times before it got the reward

staying in a relationship for a very long time because sometimes the relationship is good is a human example of persistence (slow extinction) of a response maintained with intermittent reinforcement

true

fixed ratio schedule of reinforcement

schedule of reinforcement in which the number of responses required for reinforcement is always the same; because the reinforcement is predictable, the subject responds at a steady rate and looks forward to the reward. example: part-time contractor jobs

variable ratio schedule of reinforcement

schedule of reinforcement in which the number of responses required for reinforcement is different for each trial or event (it stays close to a number but is not always that number; the number is an average). this schedule of reinforcement produces a high rate of responding. real-world example: casinos (you might win something big on the first try)

fixed interval schedule of reinforcement

a form of partial reinforcement in which a reward is given for the first response made after a fixed amount of time has passed. the subject typically picks up on the pattern and only works/responds near the end of each interval. ex: Dr. C's class trying to hang Harriet

variable interval schedule of reinforcement

in operant conditioning, a reinforcement schedule that reinforces a response at unpredictable time intervals. this increases responding. an example would be not telling students when you'll have a test (students will be studying all the time)
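The four schedules above can be thought of as simple rules that decide whether a given response earns a reward. Below is a minimal Python sketch under assumptions that are not in the notes: the class names (`FixedRatio`, `VariableRatio`, `FixedInterval`, `VariableInterval`) and the helper `count_rewards` are hypothetical, the subject's responses are given as timestamps in seconds, and the variable schedules draw each requirement uniformly around a chosen average (real experiments may define them differently).

```python
import random


class FixedRatio:
    """Reinforce every n-th response (e.g., n=5: the 5th, 10th, 15th ... response)."""

    def __init__(self, n):
        self.n = n
        self.count = 0  # responses since the last reward

    def respond(self, t):
        self.count += 1
        if self.count >= self.n:
            self.count = 0
            return True
        return False


class VariableRatio:
    """Reinforce after a number of responses that changes each time but averages `mean` (an int)."""

    def __init__(self, mean, rng):
        self.mean = mean
        self.rng = rng
        self.count = 0
        self.required = self._draw()

    def _draw(self):
        # Uniform over 1 .. 2*mean - 1, so the average requirement is `mean` (assumed distribution).
        return self.rng.randint(1, 2 * self.mean - 1)

    def respond(self, t):
        self.count += 1
        if self.count >= self.required:
            self.count = 0
            self.required = self._draw()
            return True
        return False


class FixedInterval:
    """Reinforce the first response made once `interval` seconds have passed since the last reward."""

    def __init__(self, interval):
        self.interval = interval
        self.available_at = interval  # time when the next reward becomes available

    def respond(self, t):
        if t >= self.available_at:
            self.available_at = t + self.interval
            return True
        return False


class VariableInterval:
    """Like FixedInterval, but each waiting time is random and averages `mean` seconds."""

    def __init__(self, mean, rng):
        self.mean = mean
        self.rng = rng
        self.available_at = self._draw()

    def _draw(self):
        # Uniform over 0 .. 2*mean seconds (assumed distribution).
        return self.rng.uniform(0, 2 * self.mean)

    def respond(self, t):
        if t >= self.available_at:
            self.available_at = t + self._draw()
            return True
        return False


def count_rewards(schedule, response_times):
    """Feed response timestamps (seconds) to a schedule and count how many responses were rewarded."""
    return sum(schedule.respond(t) for t in response_times)


steady = list(range(1, 61))        # one response per second for a minute
sparse = [10, 20, 30, 40, 50, 60]  # responding only at the end of each 10 s window

print(count_rewards(FixedRatio(5), steady))      # 12
print(count_rewards(FixedInterval(10), steady))  # 6
print(count_rewards(FixedRatio(5), sparse))      # 1
print(count_rewards(FixedInterval(10), sparse))  # 6
```

In this sketch, rewards under the ratio schedules depend on how many responses are made, while rewards under the interval schedules depend on how much time has passed. Responding only at the end of each fixed interval earns as many rewards as responding every second, which mirrors the note that subjects on a fixed interval schedule learn to respond mainly near the end of each interval.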

punishment

an event that decreases the behavior that it follows

punishment v. negative reinforcement

punishment decreases a behavior through unpleasant consequences, by introducing something negative after an undesirable behavior; negative reinforcement increases a behavior through consequences that remove something negative after a desired behavior

positive punishment

adding an aversive stimulus usually after undesirable behavior. ex: spanking

negative punishment

removing a pleasant stimulus usually after undesirable behavior. ex: taking away video games

two main problems with punishment

1. all you get is a temporary suppression of the response (the subject does not forget the undesirable behavior)

2. it doesn't build up positive behavior

undesirable side effects of punishment

1. physical punishment creates fear

2. punishment increases aggression because it models aggression

3. the fear of punishment generalizes to the source of punishment/situation

4. punishment creates resentment

5. punishment creates resistance

6. punishment teaches you when you can get away with it

in real life, when might you use punishment anyways?

with children (e.g., a child acting up in walmart)

how should you use punishment?

1. sparingly

2. mildly

3. consistently

punishment works better than rewarding

false; reward works better than punishment. punishment rarely corrects the unwanted behavior in the long run.

main criticisms of skinner and other behaviorists

1. he ignores biological aspects of conditioning

2. some responses are biologically built in (taste aversion)

3. he ignores the importance of cognitive factors

there are built in biological influences on what can and cannot be conditioned

true

overjustification effect

the effect of promising a reward for doing something a person already likes to do, often with the result that, once the reward is removed, the person enjoys and engages in the activity less than if the person had never been rewarded at all. this increases extrinsic motivation (doing something to get an external reward or avoid a threatened punishment) and decreases intrinsic motivation (doing something for its own sake, because it is an inherently good thing to do)

how was the overjustification effect demonstrated in an experiment with children?

1st group of children: were allowed to go ahead and play with toys

2nd group of children: were paid to play with the toys

after the experiment, the 1st group wanted to stay and play with the toys, while the 2nd group didn't want to and rated them less enjoyable

real life examples of overjustification effect

paying kids for grades: once the kids are in college, they are less motivated to make good grades because they aren't getting paid

observational learning

learning by observing others; studied extensively by albert bandura (stanford psychologist)

what were the method and results of Bandura's most famous study of observational learning in children?

preschoolers were taken into a room and given coloring books. on the other side of the room, an adult sat next to a bobo doll and began to yell and scream at it. the children were then taken into a second room with lots of toys to play with, but the adult told them they could not play with them. next, the adult took them to a third room with broken-down toys, and the frustrated children started yelling and screaming at a bobo doll in that room, imitating what they had seen the adult do.

vicarious learning/vicarious punishment

observing other people's rewards and punishments without experiencing them ourselves, but changing our behavior as a result of the rewards and punishments we see others receive

there is a biological basis for observational learning

true

mirror neurons

neurons in the frontal lobes of the brain that may fire when we engage in certain actions and also when we observe other people doing so; mirror neurons may enable both imitation and empathy

observational learning does not have a very powerful effect in the real world

false; it has a very powerful effect

antisocial behavior

behavior that harms other people. a lot of the time, it is a result of observational learning. real-world examples: public shootings, airplane hijackings, tv violence

prosocial behavior

positive, constructive, helpful behavior; the opposite of antisocial behavior. real-world examples: training/role-playing to handle situations; letting someone in during rush hour, which leads other drivers to let people in too (a chain of letting people in)

what will children model: what their parents say, or what their parents do?

children will model what their parents do

memory is very important to being who you are

true; you wouldn't know who you are, your parents, why you're here, etc. if you didn't have memory

memory

the persistence of learning and representations of experiences over time, through the storage and retrieval of information

encoding

the processing of information into the memory system

storage

the retention, over time, of information encoded into memory

retrieval

the process of getting stored information back out of the memory

sensory memory

the immediate and very brief initial recording of information in memory by the sense organs

how long does visual sensory memory (iconic memory) typically last?

1/2 a second

how long does auditory memory of sounds (echoic memory) typically last?

3-4 seconds

short-term memory

memory of limited capacity that holds a few items briefly, before the information is either stored or forgotten

long-term memory

your relatively permanent store of memories, with virtually unlimited capacity

working memory

a newer conceptualization of the classic three-stage model, especially with respect to the second stage (short term memory): an active processing of selected incoming information and also relevant information retrieved from long term memory, on a temporary basis, to work with and evaluate the information until the new information is either discarded or processed into long-term memory