Linear test 2


42 Terms

Invertible matrix (for a 2 x 2 matrix: DETERMINANT ad - bc DOES NOT EQUAL ZERO)

An n x n matrix A is invertible if there is an n x n matrix C such that CA = I and AC = I, where I is the n x n identity matrix. C, in this case, is the inverse of A.

* A non-invertible matrix is sometimes called a singular matrix, while an invertible matrix is called a nonsingular matrix.

* An n x n matrix A is invertible if and only if A is row equivalent to the n x n identity matrix.

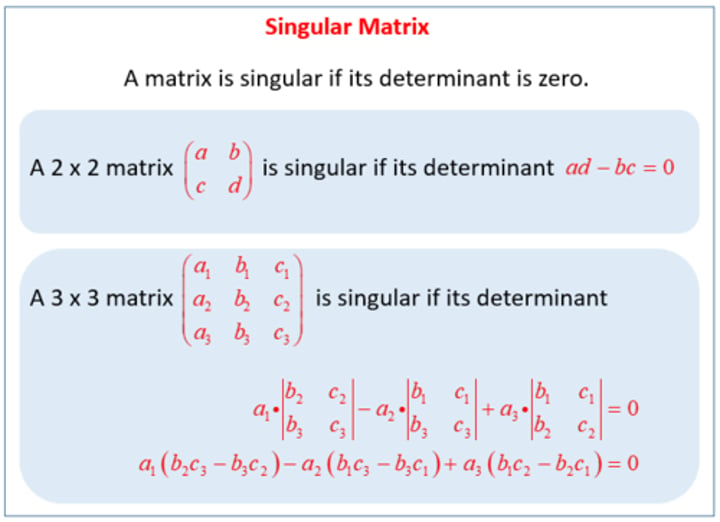

singular matrix AKA the name for a matrix that isn't invertible

A singular matrix is a square matrix with no inverse. Its determinant is zero.

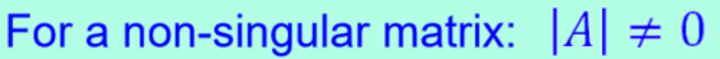

Non-singular matrix AKA the name for a matrix that IS INVERTIBLE

A square matrix with a non-zero determinant.

A 2 x 2 matrix with rows [a b] and [c d] is invertible if ad - bc DOES NOT EQUAL ZERO

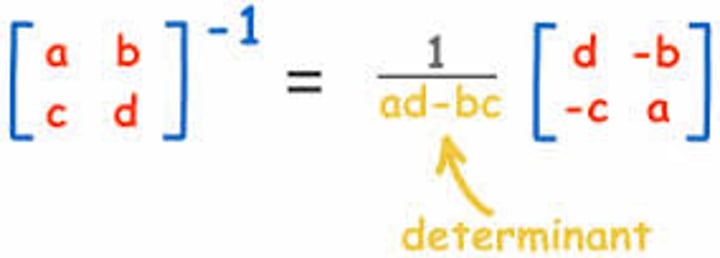

Cross multiply (ad - bc) to find the determinant. If the determinant is NOT zero, keep going: put the determinant in the denominator (1/determinant) and multiply this fraction by the OG matrix rewritten with a and d swapped and b and c negated:

$$A^{-1} = \frac{1}{ad - bc}\begin{bmatrix} d & -b \\ -c & a \end{bmatrix}$$

![2 x 2 inverse formula](https://knowt-user-attachments.s3.amazonaws.com/e096338c-92ac-4c9c-af3d-cb7bb7747b32.image/png)
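The 2 x 2 recipe above can be checked with a short sketch. This is Python using the standard `fractions` module so the 1/determinant factor stays exact; the function name `inverse_2x2` is hypothetical and not from the notes.

```python
from fractions import Fraction

def inverse_2x2(a, b, c, d):
    """Return the inverse of [[a, b], [c, d]] as a list of rows of Fractions."""
    det = a * d - b * c  # cross multiply: ad - bc
    if det == 0:
        # determinant is zero, so the matrix is singular and has no inverse
        raise ValueError("ad - bc = 0, so the matrix is singular")
    k = 1 / Fraction(det)  # the 1/determinant factor, kept exact
    # swap a and d, negate b and c, then scale every entry by 1/det
    return [[k * d, -k * b],
            [-k * c, k * a]]

print(inverse_2x2(1, 2, 3, 4))
```

Multiplying the result back against the original matrix should give the identity, which is a quick way to catch a sign slip on b or c.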

Basis of a Matrix

A basis (for example, of the column space of a matrix) is a set of linearly independent vectors that span the whole space. It is a smallest set of vectors from which every other vector in the space can be written as a linear combination.

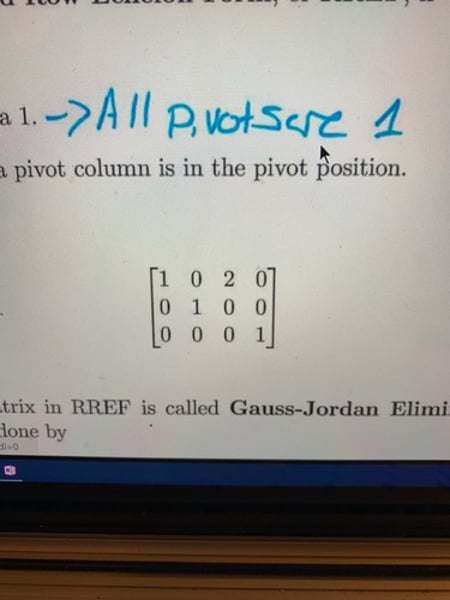

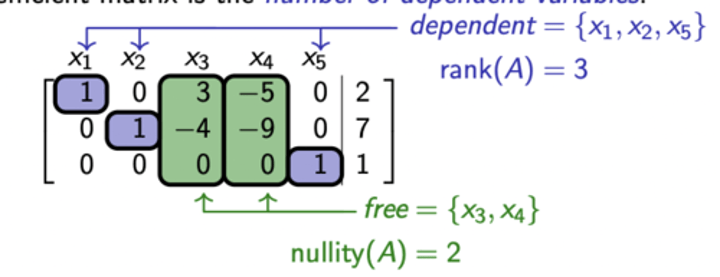

Reduced Row Echelon Form (RREF)

1. In REF

2. All leading entries are 1

3. In a column with a leading 1, all other elements are 0.
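The three RREF conditions can be produced mechanically by Gauss-Jordan elimination. Below is a minimal sketch in Python, assuming a matrix is given as a list of rows and using `fractions.Fraction` so the leading 1's come out exact; the name `rref` is hypothetical.

```python
from fractions import Fraction

def rref(rows):
    """Return (R, pivots): the RREF of `rows` and the pivot column indices."""
    R = [[Fraction(x) for x in row] for row in rows]
    m, n = len(R), len(R[0])
    pivots = []
    r = 0  # row where the next leading 1 will go
    for c in range(n):
        # look for a nonzero entry in column c at or below row r
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue  # no leading 1 in this column (free variable)
        R[r], R[p] = R[p], R[r]            # row interchange
        lead = R[r][c]
        R[r] = [x / lead for x in R[r]]    # scale so the leading entry is 1
        for i in range(m):
            if i != r and R[i][c] != 0:    # zero out the rest of the column
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
        if r == m:
            break
    return R, pivots

R, pivots = rref([[1, 2, 3], [2, 4, 7]])
print(R, pivots)
```

Clearing entries both above and below each leading 1 is what turns plain REF into RREF (condition 3).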

nullity of a matrix

the dimension of the null space of a matrix

null space

The set of all solutions to Ax = 0. Its dimension (the nullity) equals the number of columns WITHOUT a leading 1 in the RREF (the free-variable columns).
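One way to build a null space basis from the RREF: set one free variable to 1, the other free variables to 0, and solve for the basic variables. A minimal Python sketch of that procedure, assuming list-of-rows input; `rref` and `null_space_basis` are hypothetical helper names.

```python
from fractions import Fraction

def rref(rows):
    """Return (R, pivots): the RREF of `rows` and the pivot column indices."""
    R = [[Fraction(x) for x in row] for row in rows]
    m, n = len(R), len(R[0])
    pivots, r = [], 0
    for c in range(n):
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue
        R[r], R[p] = R[p], R[r]
        lead = R[r][c]
        R[r] = [x / lead for x in R[r]]
        for i in range(m):
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
        if r == m:
            break
    return R, pivots

def null_space_basis(rows):
    """One basis vector of Nul(A) per free variable (column without a leading 1)."""
    R, pivots = rref(rows)
    n = len(R[0])
    free = [j for j in range(n) if j not in pivots]
    basis = []
    for f in free:
        v = [Fraction(0)] * n
        v[f] = Fraction(1)            # this free variable = 1, other free ones = 0
        for i, p in enumerate(pivots):
            v[p] = -R[i][f]           # basic variable x_p = -(entry in free column)
        basis.append(v)
    return basis

print(null_space_basis([[1, 2, 1, 0], [2, 4, 0, 2], [3, 6, 1, 2]]))
```

The number of vectors returned is the nullity.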

A basis for a subspace S of R^n is a set of vectors in S that

1) spans S (ITC, our S is R^2)

2) is linearly independent

VECTORS MUST MEET BOTH CONDITIONS TO BE CONSIDERED A BASIS OF S

Span of a matrix

If the columns of a matrix span R^2, every column vector has 2 entries (the matrix has 2 ROWS) AND the matrix has AT LEAST two COLUMNS!!!

For the columns of a matrix to span R^2, it should meet BOTH conditions and also have a leading 1 in every row of its RREF.. see pg in 3.5

AN INVERTIBLE MATRIX ALWAYS HAS LINEARLY INDEPENDENT COLUMNS

when matrix B is obtained from A by a row replacement (adding or subtracting a multiple of one row to another row), det(B) is ..

det(B) = det(A)

when given matrix B has swapped rows det(B) is ..

det(B)= -det(A)

(A row interchange changes the sign of the determinant)

when given matrix B has been multiplied by scalar(s), then det(B) is ..

det(B) = det(A)*scalar(s)

(MULTIPLIED BY EACH SCALAR IF THERE ARE MULTIPLE!!!)

(A row scaling also scales the determinant by the same scalar factor.)
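The three row-operation rules are exactly what make "row reduce, then multiply the diagonal" a valid way to get a determinant. A minimal Python sketch, assuming a square list-of-rows input; `det_by_row_reduction` is a hypothetical name. It uses only replacements (det unchanged) and interchanges (sign flips), never scaling, so no correction factor is needed.

```python
from fractions import Fraction

def det_by_row_reduction(rows):
    """Determinant via elimination to triangular form, tracking row swaps."""
    M = [[Fraction(x) for x in row] for row in rows]
    n = len(M)
    sign = 1
    for c in range(n):
        p = next((i for i in range(c, n) if M[i][c] != 0), None)
        if p is None:
            return Fraction(0)   # no pivot in this column: singular, det = 0
        if p != c:
            M[c], M[p] = M[p], M[c]
            sign = -sign         # row interchange changes the sign
        for i in range(c + 1, n):
            f = M[i][c] / M[c][c]
            # row replacement: leaves the determinant unchanged
            M[i] = [a - f * b for a, b in zip(M[i], M[c])]
    prod = Fraction(1)
    for i in range(n):
        prod *= M[i][i]          # triangular: det = product of the diagonal
    return sign * prod

A = [[1, 2], [3, 4]]
print(det_by_row_reduction(A))              # det(A)
print(det_by_row_reduction([[3, 4], [1, 2]]))   # rows swapped
print(det_by_row_reduction([[5, 10], [3, 4]]))  # row 1 scaled by 5
```

Comparing the three printed values against det(A) shows the swap rule and the scaling rule from the cards directly.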

Know that Ax = b can NOT be consistent for EVERY b when there are more ROWS THAN COLUMNS (there can't be a leading 1 in every row)

AKA Ax = b does NOT have a solution (let alone a unique one) for every b

kernel is the same thing as a null space (interchange the word kernel for null)

REMEMBER THAT THE NULL SPACE IS A SET OF VECTORS! THEREFORE THE KERNEL IS ALSO A SET OF VECTORS: THE VECTORS x THAT MAKE Ax = 0... only write the kernel as a number if asked for dim(kernel)

rank(T)= dim(range (T))

remember that these are the NUMBER VALUES NOT SETS OF VECTORS

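range(T) is the same thing as col(A), where A is the standard matrix of T

remember that col(A) is spanned by the columns of A; its basis is the columns of the ORIGINAL A in the positions of the leading ones of the RREF...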

rank + nullity = # of columns AND dim(range) + dim(kernel) = # of columns

these are the same as one another

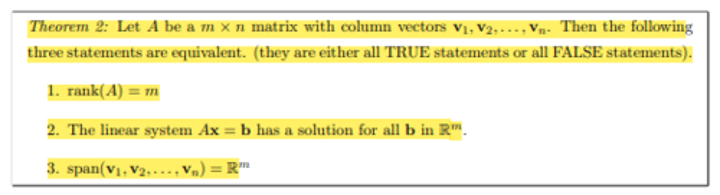
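Rank + nullity = # of columns falls out of the RREF: every column either has a leading 1 (counted by the rank) or is a free column (counted by the nullity). A minimal Python sketch of that counting argument; `rref`, `rank`, and `nullity` are hypothetical helper names.

```python
from fractions import Fraction

def rref(rows):
    """Return (R, pivots): the RREF of `rows` and the pivot column indices."""
    R = [[Fraction(x) for x in row] for row in rows]
    m, n = len(R), len(R[0])
    pivots, r = [], 0
    for c in range(n):
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue
        R[r], R[p] = R[p], R[r]
        lead = R[r][c]
        R[r] = [x / lead for x in R[r]]
        for i in range(m):
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
        if r == m:
            break
    return R, pivots

def rank(rows):
    """rank = dim(range) = number of leading 1's in the RREF."""
    return len(rref(rows)[1])

def nullity(rows):
    """nullity = dim(kernel) = number of columns without a leading 1."""
    return len(rows[0]) - rank(rows)

A = [[1, 2, 1, 0], [2, 4, 0, 2], [3, 6, 1, 2]]
print(rank(A), nullity(A), len(A[0]))
```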

what does it mean for something to be onto?

the rank of the matrix (the # of leading 1's in its RREF) is equal to the dimension of the codomain (AKA THE # OF ROWS)

domain or R^n

# of columns (m x n is rows x columns)

trivial solution

x = 0 is the only solution to Ax = 0; this happens when there are no free variables

nontrivial solution

a nonzero vector x that satisfies Ax = 0 (one exists exactly when there is at least one free variable)

dim col(A) and the rank are equal

TRUE by the Rank Theorem. Also, dimension of row space = number of nonzero rows in echelon form = number of leading-one columns = dimension of column space. (ur pretty much just counting the # of leading ones horizontally and then vertically)

the transpose of a matrix has the same rank as the OG matrix

transpose? SAME RANK

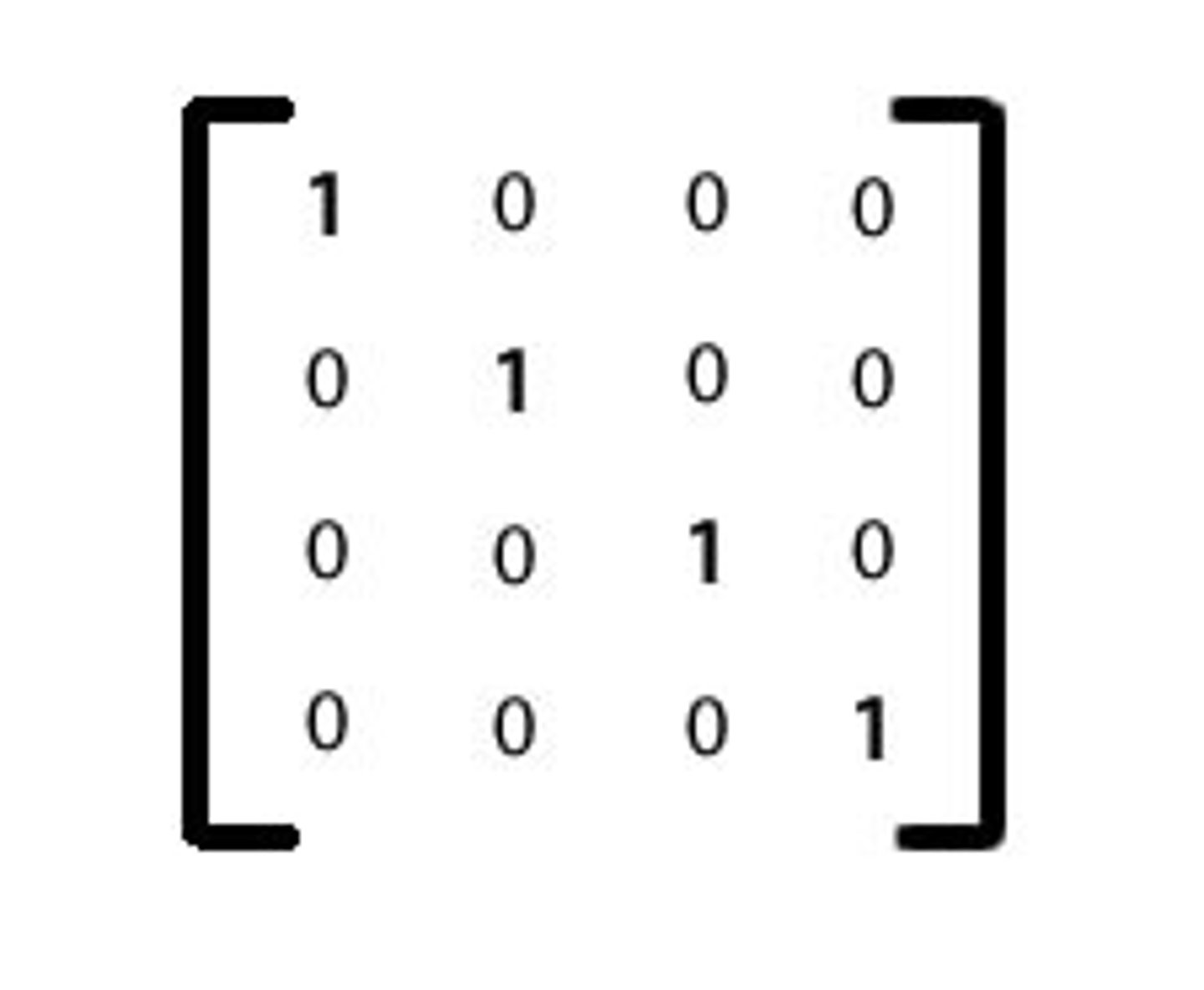
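A quick numerical check of "transpose? SAME RANK", as a minimal Python sketch with hypothetical helper names (`rref`, `rank`, `transpose`). It only illustrates the fact on one example; the reason it always holds is the row-space/column-space argument in the card above.

```python
from fractions import Fraction

def rref(rows):
    """Return (R, pivots): the RREF of `rows` and the pivot column indices."""
    R = [[Fraction(x) for x in row] for row in rows]
    m, n = len(R), len(R[0])
    pivots, r = [], 0
    for c in range(n):
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue
        R[r], R[p] = R[p], R[r]
        lead = R[r][c]
        R[r] = [x / lead for x in R[r]]
        for i in range(m):
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
        if r == m:
            break
    return R, pivots

def rank(rows):
    return len(rref(rows)[1])

def transpose(rows):
    """Rows become columns: entry (i, j) moves to (j, i)."""
    return [list(col) for col in zip(*rows)]

A = [[1, 2, 1, 0], [2, 4, 0, 2], [3, 6, 1, 2]]  # 3 x 4
print(rank(A), rank(transpose(A)))              # A^T is 4 x 3
```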

If a square matrix is invertible, then its columns are linearly independent, right? Then, since its columns are linearly independent, its RREF must be the identity matrix!!!! (or at least you know that each row and each column has a leading one)

invertible = linearly independent columns = RREF is the identity matrix (square matrices only)

codomain or R^m

# of rows (m x n is rows by columns)

is Ax = b consistent for every b in R^m?

- if there's a leading 1 in each row of the RREF of A, Ax = b IS CONSISTENT for every b

- if there's NOT a leading 1 in each row, Ax = b is NOT CONSISTENT for every b (it fails for at least one b)

is T one-to-one?

T is ONE-TO-ONE ONLY WHEN THE COLUMNS (of its standard matrix A) ARE LINEARLY INDEPENDENT

Know that if a square matrix is invertible (for a 2 x 2, ad - bc is not zero), then it is also onto and also 1-1. This is because its columns are linearly independent (for a square matrix, u can check this by seeing if each row has a leading one in RREF, which is the same as each column having one).

when a matrix is linearly independent...

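Ax = b & linear dependence

- Ax = b has a unique solution (only one solution) for every b when A is square and invertible (its columns are linearly independent)

- when rows > columns, Ax = b isn't consistent for EVERY b (A can't be onto), even if the columns are linearly independent

- when the columns of A are linearly dependent, Ax = b has either no solution or infinitely many solutions (infinitely many whenever it is consistent)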

Algebraic multiplicity

The number of times an eigenvalue repeats as a root of the characteristic polynomial.

Geometric multiplicity

dimension of the eigenspace (number of free variables in (A - λI)x = 0)
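The two multiplicities can differ, which is easiest to see on a triangular matrix, where the eigenvalues are just the diagonal entries. A minimal Python sketch, assuming the matrix is triangular for the algebraic count (a general matrix would need its characteristic polynomial factored instead); all helper names are hypothetical.

```python
from fractions import Fraction

def rref(rows):
    """Return (R, pivots): the RREF of `rows` and the pivot column indices."""
    R = [[Fraction(x) for x in row] for row in rows]
    m, n = len(R), len(R[0])
    pivots, r = [], 0
    for c in range(n):
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue
        R[r], R[p] = R[p], R[r]
        lead = R[r][c]
        R[r] = [x / lead for x in R[r]]
        for i in range(m):
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
        if r == m:
            break
    return R, pivots

def rank(rows):
    return len(rref(rows)[1])

def is_triangular(A):
    n = len(A)
    upper = all(A[i][j] == 0 for i in range(n) for j in range(i))
    lower = all(A[i][j] == 0 for i in range(n) for j in range(i + 1, n))
    return upper or lower

def algebraic_multiplicity_triangular(A, lam):
    """Triangular only: eigenvalues are the diagonal entries, so count lam there."""
    assert is_triangular(A), "this shortcut only works for triangular matrices"
    return sum(1 for i in range(len(A)) if A[i][i] == lam)

def geometric_multiplicity(A, lam):
    """dim of eigenspace = # of free variables in (A - lam*I)x = 0."""
    n = len(A)
    shifted = [[A[i][j] - (lam if i == j else 0) for j in range(n)] for i in range(n)]
    return n - rank(shifted)  # columns minus leading 1's

J = [[2, 1], [0, 2]]
print(algebraic_multiplicity_triangular(J, 2), geometric_multiplicity(J, 2))
```

For J, the eigenvalue 2 repeats twice on the diagonal but (J - 2I)x = 0 has only one free variable, so the geometric multiplicity is smaller.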

ONTO (is the matrix onto? AKA IS THERE A LEADING 1 IN EVERY ROW OF ITS RREF)

if there is a LEADING ONE in every row then YES the matrix is ONTO

ONE-TO-ONE (AKA is there a LEADING ONE IN EVERY COLUMN OF THE RREF)

if there is a leading 1 in every column of a matrix’s RREF, the matrix is one-to-one
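Both tests are just counts of leading 1's compared against the matrix shape: onto means one per row, one-to-one means one per column. A minimal Python sketch under that reading; `is_onto` and `is_one_to_one` are hypothetical names.

```python
from fractions import Fraction

def rref(rows):
    """Return (R, pivots): the RREF of `rows` and the pivot column indices."""
    R = [[Fraction(x) for x in row] for row in rows]
    m, n = len(R), len(R[0])
    pivots, r = [], 0
    for c in range(n):
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue
        R[r], R[p] = R[p], R[r]
        lead = R[r][c]
        R[r] = [x / lead for x in R[r]]
        for i in range(m):
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
        if r == m:
            break
    return R, pivots

def is_onto(rows):
    """Leading 1 in every ROW: pivots == # of rows (m)."""
    return len(rref(rows)[1]) == len(rows)

def is_one_to_one(rows):
    """Leading 1 in every COLUMN: pivots == # of columns (n)."""
    return len(rref(rows)[1]) == len(rows[0])

wide = [[1, 2, 3], [2, 4, 7]]    # 2 x 3
tall = [[1, 0], [0, 1], [1, 1]]  # 3 x 2
print(is_onto(wide), is_one_to_one(wide))
print(is_onto(tall), is_one_to_one(tall))
```

A wide matrix (more columns than rows) can never be one-to-one and a tall one can never be onto; a square invertible matrix passes both.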

Find a basis is NOT THE SAME AS Find a basis for col(A)!!!!

Find a basis for col(A) means find the basis for the LEADING 1 COLUMNS !!

1) find RREF of matrix if not given and circle leading 1’s

2) write out the columns of the ORIGINAL matrix that sit in the same positions as the LEADING 1’s, AS GIVEN. DO NOT WRITE THE NUMBERS FROM THE RREF U FOUND LIKE U WOULD FOR A NORMAL BASIS

3) put basis brackets around leading one vectors that were written with the numbers from the non-RREF matrix
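The three steps above as a minimal Python sketch: the RREF is used only to locate the leading 1's, and the vectors returned are copied from the original matrix. Helper names `rref` and `col_basis` are hypothetical.

```python
from fractions import Fraction

def rref(rows):
    """Return (R, pivots): the RREF of `rows` and the pivot column indices."""
    R = [[Fraction(x) for x in row] for row in rows]
    m, n = len(R), len(R[0])
    pivots, r = [], 0
    for c in range(n):
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue
        R[r], R[p] = R[p], R[r]
        lead = R[r][c]
        R[r] = [x / lead for x in R[r]]
        for i in range(m):
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
        if r == m:
            break
    return R, pivots

def col_basis(rows):
    """Basis for col(A): the ORIGINAL columns of A at the leading-1 positions."""
    _, pivots = rref(rows)                      # step 1: find the leading 1's
    return [[row[j] for row in rows] for j in pivots]  # step 2: copy from A, not R

A = [[1, 2, 1, 0], [2, 4, 0, 2], [3, 6, 1, 2]]
print(col_basis(A))
```

Returning the RREF columns instead (here [1, 0, 0] and [0, 1, 0]) would be the mistake the card warns about: they generally do not even lie in col(A).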

when Ax = b is consistent, the rank of the coefficient matrix and the augmented matrix are the SAME NUMBER!!!

got wrong on test 2 page 3: the rank of the coefficient matrix and the augmented matrix are the same number (if they differ, the system is inconsistent)
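The rank comparison doubles as a consistency test: adding the column b either leaves the rank alone (b is already in col(A)) or raises it by one (a leading 1 lands in the b column, i.e. a row like [0 ... 0 | nonzero]). A minimal Python sketch; `is_consistent` and the other helper names are hypothetical.

```python
from fractions import Fraction

def rref(rows):
    """Return (R, pivots): the RREF of `rows` and the pivot column indices."""
    R = [[Fraction(x) for x in row] for row in rows]
    m, n = len(R), len(R[0])
    pivots, r = [], 0
    for c in range(n):
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue
        R[r], R[p] = R[p], R[r]
        lead = R[r][c]
        R[r] = [x / lead for x in R[r]]
        for i in range(m):
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
        if r == m:
            break
    return R, pivots

def rank(rows):
    return len(rref(rows)[1])

def is_consistent(A, b):
    """Ax = b is consistent exactly when rank(A) == rank([A | b])."""
    augmented = [row + [bi] for row, bi in zip(A, b)]
    return rank(A) == rank(augmented)

A = [[1, 2], [2, 4]]
print(is_consistent(A, [3, 6]), is_consistent(A, [3, 7]))
```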

A basis for a span must

1) HAVE EXACTLY AS MANY VECTORS AS THE SPAN'S DIMENSION (the minimum number needed to span it), e.g. a basis of R² has EXACTLY TWO vectors

2) be linearly independent
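For R^n specifically, both conditions reduce to a count and a rank: exactly n vectors, and rank n (which, with n vectors, means they are linearly independent and span R^n). A minimal Python sketch; `is_basis_of_Rn` is a hypothetical name, and it relies on rank(A) = rank(A^T), so the vectors can be fed in as rows.

```python
from fractions import Fraction

def rref(rows):
    """Return (R, pivots): the RREF of `rows` and the pivot column indices."""
    R = [[Fraction(x) for x in row] for row in rows]
    m, n = len(R), len(R[0])
    pivots, r = [], 0
    for c in range(n):
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue
        R[r], R[p] = R[p], R[r]
        lead = R[r][c]
        R[r] = [x / lead for x in R[r]]
        for i in range(m):
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
        if r == m:
            break
    return R, pivots

def is_basis_of_Rn(vectors):
    """True if `vectors` (each of length n) form a basis of R^n."""
    n = len(vectors[0])
    if len(vectors) != n:
        return False  # condition 1: exactly n vectors
    # condition 2: rank n means linearly independent (rank of rows = rank of columns)
    return len(rref(vectors)[1]) == n

print(is_basis_of_Rn([[1, 2], [3, 4]]))
print(is_basis_of_Rn([[1, 2], [2, 4]]))
print(is_basis_of_Rn([[1, 0], [0, 1], [1, 1]]))
```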