DAT255: Deep Learning - Lecture 18 Multimodal Transformers

1/3

Earn XP

Description and Tags

Flashcards covering key concepts from the Multimodal Transformers lecture, including visual attention, vision transformers (ViT), audio transformers, and applications like AlphaStar.

Name | Mastery | Learn | Test | Matching | Spaced |

|---|

No study sessions yet.

4 Terms

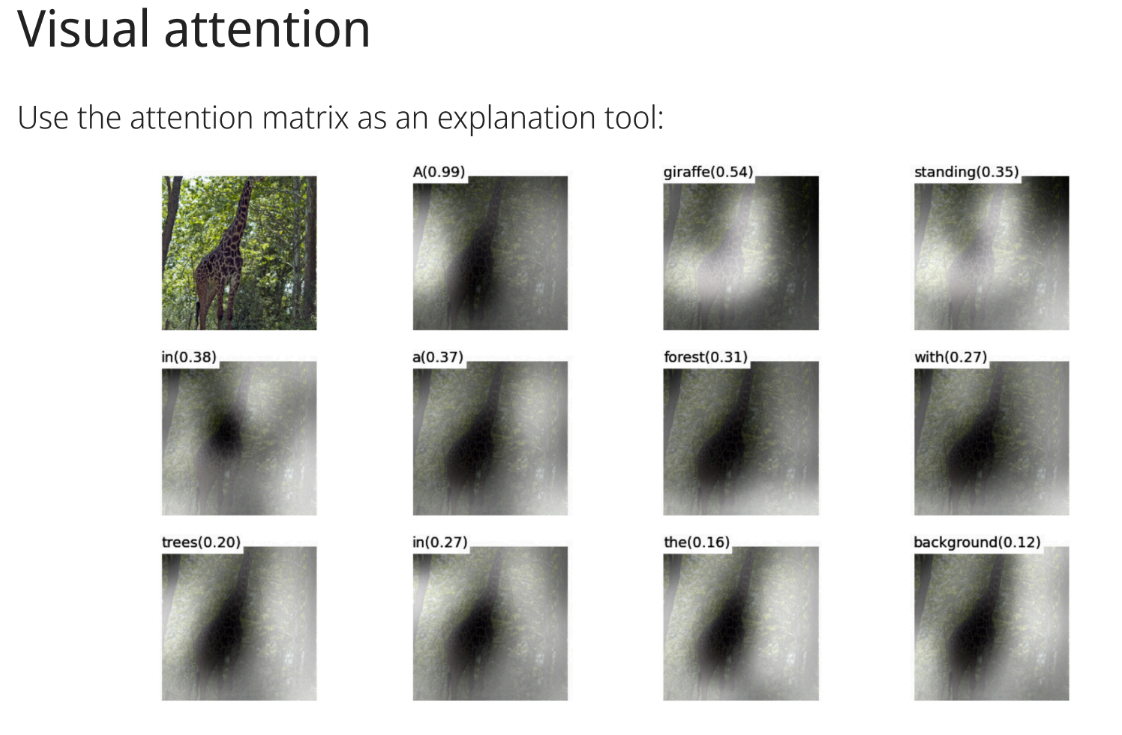

How can the attention matrix in visual attention models be utilized?

As an explanation tool to understand which parts of the image the model is focusing on.

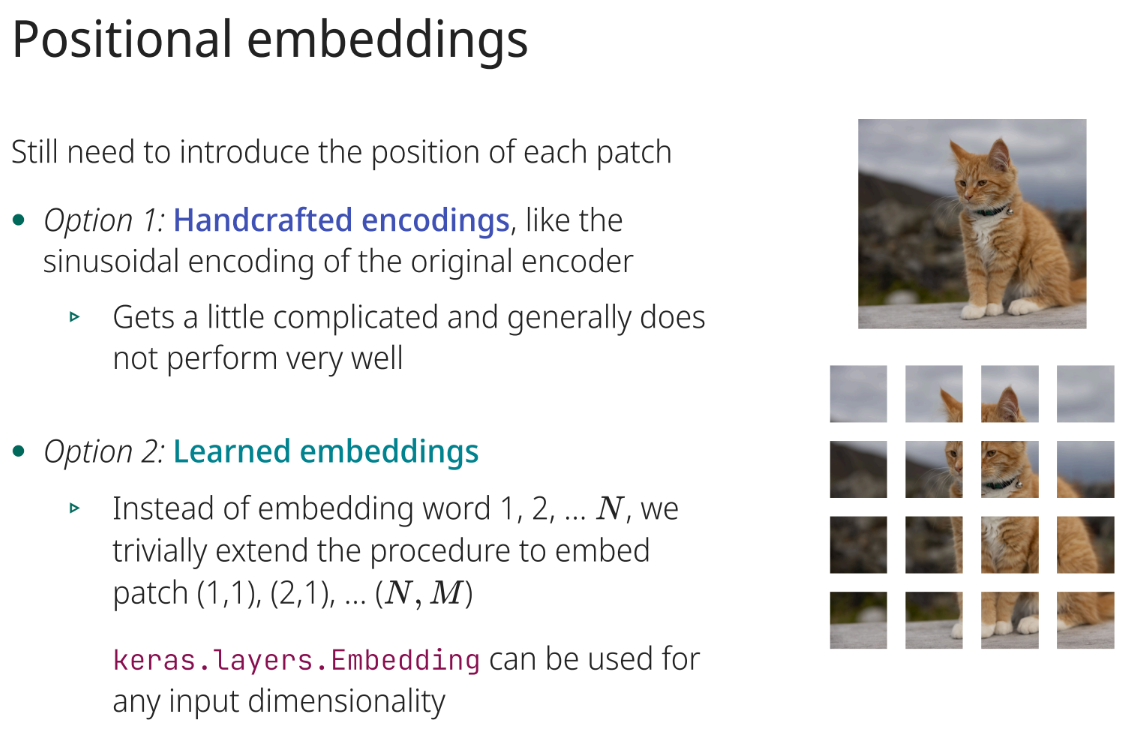

Why are positional embeddings necessary in Vision Transformers (ViT)?

To introduce the position of each patch, as transformers are permutation invariant.

What are two options for positional embeddings in ViT?

Handcrafted encodings (e.g., sinusoidal) or learned embeddings.

How does AlphaStar's neural network process game data?

It receives input data from the raw game interface, applies a transformer torso to the units, combines it with a deep LSTM core, and uses an auto-regressive policy head with a pointer network.