research design quiz 3

60 Terms

given a (number) x (number) design study, what does the number of numbers mean?

the number of independent variables

given a (number) x (number) design study, what do the numbers themselves mean?

the number of levels of each IV

one-way designs

Experimental designs in which only one independent variable is manipulated. (The simplest one-way design is a two-group experimental design in which there are only two levels of the independent variable.)

randomized groups design

between-subjects design in which participants are randomly assigned to one of two or more conditions

matched-subjects design

participants are matched into blocks on the basis of a variable the researcher believes relevant to the experiment. Then participants in each matched block are randomly assigned to one of the experimental or control conditions.
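The matching procedure above can be sketched in a few lines. This is only an illustration: the function name `matched_assignment`, the participant IDs, and the matching scores are hypothetical, and dropping participants who do not fill a complete block is an assumption, not something the card specifies.

```python
import random

def matched_assignment(matching_scores, conditions, seed=None):
    """Block participants on a matching variable, then randomize within blocks."""
    rng = random.Random(seed)
    # Rank participants on the matching variable so neighbors are similar.
    ranked = sorted(matching_scores, key=matching_scores.get)
    block_size = len(conditions)
    assignment = {condition: [] for condition in conditions}
    # Assumption: leftover participants that cannot fill a full block are dropped.
    usable = len(ranked) - len(ranked) % block_size
    for start in range(0, usable, block_size):
        block = ranked[start:start + block_size]
        shuffled_conditions = list(conditions)
        rng.shuffle(shuffled_conditions)  # random assignment inside the block
        for participant, condition in zip(block, shuffled_conditions):
            assignment[condition].append(participant)
    return assignment

# Hypothetical matching scores (for example, a pretest of the relevant variable).
scores = {"p1": 10, "p2": 11, "p3": 20, "p4": 21, "p5": 30, "p6": 31}
print(matched_assignment(scores, ["experimental", "control"], seed=0))
```

Each block contributes exactly one participant to every condition, so the conditions start out roughly equal on the matching variable.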

repeated measures (or within-subjects) design

each participant serves in all experimental conditions. (By contrast, a design that places different participants in different conditions is called a between-subjects, between-participants, or between-groups design because we are interested in differences in behavior between different groups of participants.)

Posttest-Only One-Way Designs: randomized groups design

initial sample → randomly assigned to one of two or more groups → IV manipulated → DV measured

Posttest-Only One-Way Designs: matched subjects design

initial sample → matched into blocks based on relevant attributes → subjects in each block randomly assigned to one of two or more groups → IV manipulated → DV measured

Posttest-Only One-Way Designs: repeated measures design

initial sample → receives one level of IV → DV measured → receives another level of IV → DV measured

Pretest-posttest advantages

verifies that participants in the experimental conditions did not differ with respect to the dependent variable at the beginning of the experiment, showing the effectiveness of random/matched assignment

ability to see how much the IV changed participants' behavior (a posttest-only design can provide a similar baseline if a control condition with a zero level of the independent variable is used)

error variance due to preexisting differences among participants can be eliminated from the analyses, making the effects of the independent variable easier to see, because variability in pretest scores can be removed

factorial design

A 2 x 2 tells us that the design had two independent variables, each of which had two levels (see Figure 10.7 [a]).

A 3 x 3 factorial design also involves two independent variables, but each variable has three levels.

A 4 x 2 factorial design has two independent variables, one with four levels and one with two levels.

to find how many experimental conditions a factorial design has...

multiply the numbers in a design specification.

For example, a 2 x 2 design has four different cells or conditions (2 x 2 = 4), that is, four possible combinations of the two independent variables.

A 3 x 4 x 2 design has 24 different experimental conditions
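The counting rule above can be checked with a short sketch. The helper names `parse_design` and `describe_design` are made up for illustration; the only rule used is the one on the card: count the numbers to get the number of IVs, and multiply them to get the number of conditions.

```python
from math import prod

def parse_design(spec: str) -> list[int]:
    """Split a design specification such as '3 x 4 x 2' into its level counts."""
    return [int(part) for part in spec.lower().split("x")]

def describe_design(spec: str) -> dict:
    """Report the number of IVs, the levels of each IV, and the number of conditions."""
    levels = parse_design(spec)
    return {
        "n_independent_variables": len(levels),  # how many numbers there are
        "levels_per_iv": levels,                 # what each number means
        "n_conditions": prod(levels),            # multiply the numbers
    }

print(describe_design("2 x 2")["n_conditions"])      # 4
print(describe_design("3 x 4 x 2")["n_conditions"])  # 24
```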

Randomized Groups Factorial Design

participants are assigned randomly to one of the possible combinations of the independent variables

Matched Factorial Design

the matched-subjects factorial design involves first matching participants into blocks on the basis of some variable that correlates with the dependent variable; then the participants in each block are randomly assigned to one of the experimental conditions

Repeated Measures Factorial Design

requires participants to participate in every experimental condition

Mixed Factorial Design

some independent variables in a factorial experiment may involve random assignment, whereas other variables involve a repeated measure. A design that combines one or more between-subjects variables with one or more within-subjects variables is called a mixed factorial design, between-within design, or split-plot factorial design.

main effect

The effect of a single independent variable in a factorial design. A main effect reflects the effect of a particular independent variable while ignoring the effects of the other independent variables.

interaction

when the effect of one independent variable differs across the levels of other independent variables. If one independent variable has a different effect at one level of another independent variable than it has at another level of that independent variable, we say that the independent variables interact and that an interaction between the independent variables is present
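A small worked example may make main effects and interactions more concrete. The cell means below are made-up numbers for a hypothetical 2 x 2 design, and the function names are illustrative. The sketch only describes patterns in the means; deciding whether an effect is statistically significant would still require a test such as an ANOVA.

```python
# Hypothetical cell means for a 2 x 2 design (invented for illustration).
# Keys are (level of IV A, level of IV B).
cell_means = {
    ("a1", "b1"): 10.0,
    ("a1", "b2"): 12.0,
    ("a2", "b1"): 14.0,
    ("a2", "b2"): 22.0,
}

def marginal_mean(means, factor_index, level):
    """Average the cells at one level of a factor, ignoring the other factor."""
    values = [m for key, m in means.items() if key[factor_index] == level]
    return sum(values) / len(values)

def main_effect(means, factor_index, low, high):
    """Difference between marginal means: the effect of one IV ignoring the other."""
    return marginal_mean(means, factor_index, high) - marginal_mean(means, factor_index, low)

def interaction_contrast(means):
    """How much the effect of A changes from level b1 to level b2 of B."""
    effect_of_a_at_b1 = means[("a2", "b1")] - means[("a1", "b1")]
    effect_of_a_at_b2 = means[("a2", "b2")] - means[("a1", "b2")]
    return effect_of_a_at_b2 - effect_of_a_at_b1

print(main_effect(cell_means, 0, "a1", "a2"))  # main effect of A: 7.0
print(main_effect(cell_means, 1, "b1", "b2"))  # main effect of B: 5.0
print(interaction_contrast(cell_means))        # 6.0, so A's effect differs across B
```

A contrast of zero would mean the effect of A is the same at both levels of B, that is, no interaction in these means.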

A three-way design, such as a 2 x 2 x 2 or a 3 x 2 x 4 design, provides even more information.

we can examine the effects of each of the three independent variables separately (three main effects)

we can look at three two-way interactions: interactions of each pair of independent variables while ignoring the third independent variable

a three-way factorial design also gives us information about the combined effects of all three independent variables (the three-way interaction)
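The effects available in a three-way design can be enumerated mechanically. In this sketch the IV labels "A", "B", and "C" and the function name `list_effects` are placeholders.

```python
from itertools import combinations

def list_effects(ivs):
    """Group every possible effect by how many IVs it involves."""
    effects = {}
    for size in range(1, len(ivs) + 1):
        # size 1 = main effects, size 2 = two-way interactions, and so on
        effects[size] = [" x ".join(combo) for combo in combinations(ivs, size)]
    return effects

effects = list_effects(["A", "B", "C"])
print(effects[1])  # ['A', 'B', 'C']
print(effects[2])  # ['A x B', 'A x C', 'B x C']
print(effects[3])  # ['A x B x C']
```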

participant variables (also called subject variables)

an individual’s personal characteristics such as sex, age, intelligence, ability, personality, and attitudes

expericorr (or mixed/expericorr) factorial designs

These designs involve one or more independent variables that are manipulated by the experimenter, and one or more preexisting participant variables that are measured rather than manipulated.

In the median-split procedure

the researcher identifies the median of the distribution of participants’ scores on the variable of interest (such as self-esteem, depression, or self-control). You may recall that the median is the middle score in a distribution, the score that falls at the 50th percentile. The researcher then classifies participants with scores below the median as low on the variable and those with scores above the median as high on the variable
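A median split can be sketched as follows. The participant IDs and scores are hypothetical, and the "at median" label is an assumption: the card does not say how scores exactly equal to the median should be handled.

```python
from statistics import median

def median_split(scores):
    """Classify each participant as low or high relative to the sample median."""
    cutoff = median(scores.values())
    groups = {}
    for participant, score in scores.items():
        if score < cutoff:
            groups[participant] = "low"
        elif score > cutoff:
            groups[participant] = "high"
        else:
            # Assumption: ties with the median are flagged rather than forced into a group.
            groups[participant] = "at median"
    return cutoff, groups

# Hypothetical self-esteem scores.
scores = {"p1": 12, "p2": 30, "p3": 25, "p4": 18, "p5": 21, "p6": 27}
cutoff, groups = median_split(scores)
print(cutoff)  # 23.0
print(groups)
```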

extreme groups procedure

the researcher pretests a large number of potential participants, then selects participants for the experiment whose scores are unusually low or high on the variable of interest
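As a rough sketch of the extreme groups procedure, the code below keeps only the lowest and highest scorers from a pretested pool. The `fraction=0.25` cutoff is purely an assumption for illustration (the card does not define "unusually low or high"), and the scores are invented.

```python
def extreme_groups(pretest_scores, fraction=0.25):
    """Select the lowest- and highest-scoring participants from a pretested pool."""
    if not 0 < fraction <= 0.5:
        raise ValueError("fraction must be between 0 and 0.5")
    # Rank participant IDs from lowest to highest pretest score.
    ranked = sorted(pretest_scores, key=pretest_scores.get)
    k = max(1, int(len(ranked) * fraction))
    low_group = ranked[:k]    # unusually low scorers
    high_group = ranked[-k:]  # unusually high scorers
    return low_group, high_group

# Hypothetical pretest scores for eight potential participants.
pool = {"p1": 5, "p2": 40, "p3": 22, "p4": 9, "p5": 31, "p6": 18, "p7": 37, "p8": 25}
low, high = extreme_groups(pool)
print(low)   # ['p1', 'p4']
print(high)  # ['p7', 'p2']
```

Participants in the middle of the distribution are simply not selected for the experiment.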

A well-designed experiment

1. The researcher must vary at least one independent variable to assess its effects on participants’ responses.

2. The researcher must have the power to assign participants to the various experimental conditions in a way that ensures their initial equivalence.

3. The researcher must control all extraneous variables that may influence participants’ responses.

conditions

the different levels of the independent variable

Environmental manipulations

experimental modifications of aspects of the research setting.

(For example, a researcher interested in visual perception might vary the intensity of illumination, a study of learning might manipulate the amount of reinforcement that a pigeon receives, an experiment investigating attitude change might vary the characteristics of a persuasive message, and a study of emotions might have participants view pleasant or unpleasant photographs.)

Instructional manipulation

vary the independent variable through instructions or information that participants receive.

For example, participants in a study of creativity may be given one of several different instructions regarding how they should solve a particular task. In a study of how people’s expectancies affect their performance, participants may be led to expect that the task will be either easy or difficult.

Invasive manipulations

creating physical changes in the participant’s body through physical stimulation (such as in studies of pain), surgery, or the administration of drugs. In studies that test the effects of chemicals on emotion and behavior, for example, the independent variable is often the type or amount of drug given to the participant.

experimental group(s)

Participants who receive a nonzero level of the independent variable

control group

those who receive a zero level of the independent variable

pilot test

ensures that the levels of the independent variable are different enough to be detected by participants. If we are studying the effects of lighting on work performance, we could try out different levels of brightness to find out what levels of lighting pilot participants perceive as dim versus adequate versus blinding

A manipulation check

a question (or set of questions) that is designed to determine whether the independent variable was manipulated successfully. For example, we might ask participants to rate the brightness of the lighting in the experiment. If participants in the various experimental conditions rate the brightness of the lights differently, we would know that the difference in brightness was perceptible.

subject (or participant) variables

reflect existing characteristics of the participants. Designs that include both independent and subject variables are common and quite useful, but we should be careful to distinguish the true independent variables that are manipulated in such designs from the participant variables that are measured but not manipulated.

ADVANTAGES OF WITHIN-SUBJECTS DESIGNS

more powerful because the participants in all experimental conditions are identical in every way (after all, they are the same individuals). When this is the case, none of the observed differences in responses to the various conditions can be due to preexisting differences between participants in the groups

a second advantage of within-participants designs is that they require fewer participants. Because each participant is used in every condition, fewer are needed.

every participant is their own control

DISADVANTAGES OF WITHIN-SUBJECTS DESIGNS

because each participant receives all levels of the independent variable, order effects can arise when participants’ behavior is affected by the order in which they participate in the various conditions of the experiment. When order effects occur, the effects of a particular condition are contaminated by its order in the sequence of experimental conditions that participants receive.

order effect - practice

participants’ performance improves merely because they complete the dependent variable several times.

order effect - fatigue

participants become tired, bored, or less motivated as the experiment progresses.

order effect - sensitization

After receiving several levels of the independent variable and completing the dependent variable several times, participants in a within-subjects design may begin to realize what the hypothesis is.

counterbalancing

presenting the levels of the independent variable in different orders to different participants.
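One way to picture counterbalancing is to list every possible order of the conditions and cycle participants through them. This sketch uses complete counterbalancing, which is only one option; the condition labels, participant IDs, and function names are hypothetical.

```python
from itertools import permutations

def complete_counterbalancing(conditions):
    """Return every possible order of the conditions."""
    return list(permutations(conditions))

def assign_orders(participants, conditions):
    """Cycle through the orders so each one is used about equally often."""
    orders = complete_counterbalancing(conditions)
    return {p: orders[i % len(orders)] for i, p in enumerate(participants)}

orders = complete_counterbalancing(["A", "B", "C"])
print(len(orders))  # 6 possible orders for 3 conditions

schedule = assign_orders(["p1", "p2", "p3", "p4", "p5", "p6"], ["A", "B", "C"])
for participant, order in schedule.items():
    print(participant, order)
```

With six participants and three conditions, each condition appears first, second, and third equally often. The number of possible orders grows quickly as conditions are added (n conditions give n! orders).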

Carryover effects

when the effect of a particular treatment condition persists even after the condition ends; that is, when the effects of one level of the independent variable are still present when another level of the independent variable is introduced.

experimental control

eliminating or holding constant extraneous factors that might affect the outcome of the study. If such factors are not controlled, it will be difficult, if not impossible, to determine whether the independent variable had an effect on participants’ responses.

No systematic differences should exist between the conditions including:

WHO is in the conditions (i.e., the participants)

Random assignment

Within-subject design

WHAT the participants in each condition experience (except what we WANT to vary)

Eliminating confounds

Random assignment

a way of ensuring that individual differences among participants are balanced (i.e., do not systematically differ) across the conditions of our experiment.
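Random assignment can be sketched as shuffling the participant list and dealing participants into conditions. The function name `randomly_assign`, the participant IDs, and the use of a seed (only to make the example repeatable) are assumptions for illustration.

```python
import random

def randomly_assign(participants, conditions, seed=None):
    """Shuffle participants, then deal them into conditions in turn."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)  # chance alone decides who ends up where
    assignment = {condition: [] for condition in conditions}
    for i, participant in enumerate(shuffled):
        # Dealing in turn keeps the group sizes as equal as possible.
        assignment[conditions[i % len(conditions)]].append(participant)
    return assignment

participants = [f"p{i}" for i in range(1, 11)]
print(randomly_assign(participants, ["experimental", "control"], seed=1))
```

Because chance decides the grouping, individual differences should not line up systematically with condition, although any single randomization can still produce groups that differ somewhat.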

confound

Any unintended systematic difference between the levels of the IV.

unsystematic errors

Some participants are tested:

at the beginning of the day, some at the end

on a rainy day, some on a sunny day

right before spring break, some during midterms

on an empty stomach, some on a full stomach

on caffeine, some not on caffeine

Minimizing noise

Recruit more homogeneous participants

But this may limit your generalizability/external validity

Control for transient states, if possible

e.g., ask participants to refrain from caffeine before testing

Minimize natural fluctuations in environment

e.g., maintain steady temperature, work in a soundproofed space

Script or automate procedures

Use reliable and valid measures

Experimenter expectations

When the experimenters’ expectancies affect either:

How they interact with participants

Which can in turn influence participant behavior/thoughts/emotions

Rosenthal or Pygmalion effect

How the investigators interpret ambiguous/subjective data

Which can bias results

Demand characteristics

When participants know how they “should” respond

Leaves us uncertain:

Are they being honest or just telling us what we want to hear?

If they are being honest:

Did the IV change their behavior/thought/emotion or was it just the power of suggestion?

In treatment studies, could a placebo effect be operating?

mitigate demand characteristics and experimenter expectations by

Create equally credible treatments

Inform participants that we are comparing two treatments (no placebo condition)

Train therapists to expect that both treatments could lead to benefit

Assess expectancies

look for differences between conditions and, if necessary, control for these in analyses

Video-record therapy sessions

Could allow us to rate for unintended differential treatment by condition

Encourage honesty from participants

Evaluator is independent from treatment team and blind to condition

(Long et al., 2023) What was the purpose of this research?

The purpose of this research was to determine the impact of a mental health education intervention on the life satisfaction and self-confidence of children living in rural areas of China.

(Long et al., 2023) Why did the authors choose to focus specifically on school children in rural China?

They chose to focus specifically on China because of the dramatic economic changes that led to rapid urbanization and magnified the disparities between rural and urban living standards in China. This urbanization has caused a diminished emphasis on rural education along with challenges recruiting educators to rural areas.

(Long et al., 2023) What was the primary independent variable? How many levels did it have? What did each level consist of?

The primary IV was whether the students received the intervention first or not. There were two levels: the intervention group and the control group.

(Long et al., 2023) The investigators also included “time” as an independent variable with two levels (baseline and post-intervention). This is a common practice. Though the investigators aren’t able to “manipulate” time (they aren’t that powerful), they are choosing when to administer the outcome measures. Given that time is a second IV, how would you describe the design of this study?

The study is a 2 x 2 mixed design because there are two IVs: group (intervention & control) and time (baseline & post-intervention). Group is manipulated between subjects, whereas time is a within-subjects variable experienced by both groups.

(Long et al., 2023) Was this study a between-subjects, within-subjects, or between-within (mixed) design? Explain your answer.

This study had a between-within (mixed) design because, of the two IVs, group was manipulated between subjects and time was experienced by all subjects (within subjects).

(Long et al., 2023) How was randomization done? Do you see any problems with this procedure (this is not a leading question–I just want you to genuinely think about it).

1,001 students from 4th-6th grade in primary schools in poverty counties were randomly chosen. We did not identify any particular problems other than potential demographic differences.

(Long et al., 2023) What evidence did they present to suggest that randomization resulted in comparable groups at baseline? What evidence suggested that it did not?

Schools were assigned to the intervention or control group based on online questionnaires filled out by the students, ensuring that the students at the schools eligible to participate met the required traits for the study. There is evidence that the randomization didn’t result in comparable groups because there were differences between the control and intervention groups with regard to mean age and county, as shown in Table 1.

(Long et al., 2023) What were the dependent variables? How were they assessed?

Children's life satisfaction was assessed using The Multidimensional Student’s Life Satisfaction Scale. Children's Self Confidence was measured using The Children’s Self-Confidence Questionnaire. Both were assessed with a questionnaire before and after the experiment.

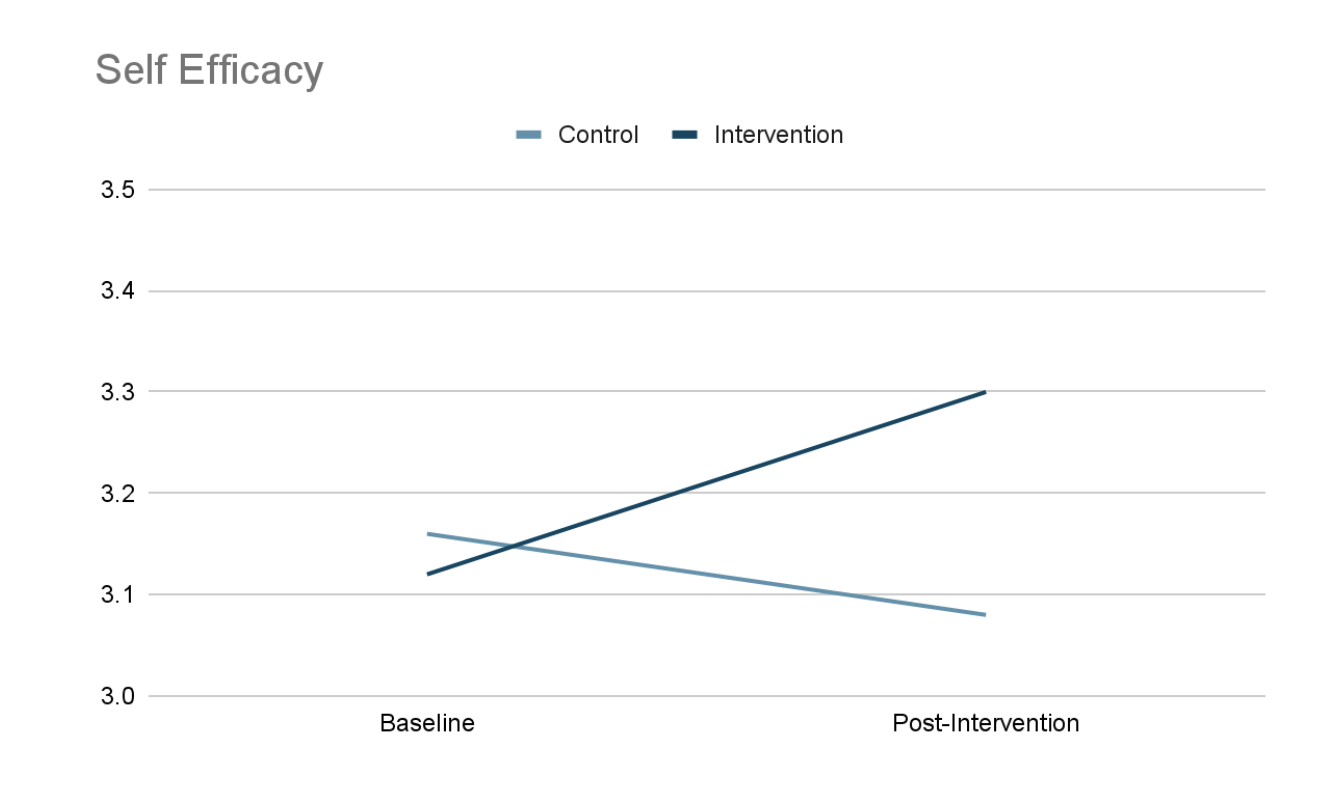

(Long et al., 2023) On page 5, the authors present their results. They conducted a statistical test called an ANOVA which allows us to test for differences between multiple groups and across multiple IVs. They conducted eight 2 x 2 ANOVAs (one for each DV) and reported that seven interactions were statistically significant. In your own words, explain what these interaction effects tell us about the efficacy of the mental health intervention.

These interaction effects tell us that the change from baseline to post-intervention differed between the intervention and control groups. We interpreted this as evidence that the mental health intervention was helpful, with significant group x time interactions for family satisfaction, school satisfaction, environmental satisfaction, self satisfaction, self efficacy, self assurance, and self confidence.

(Long et al., 2023) Take the value for self efficacy in Table 2 and roughly sketch out the interaction with Self efficacy on the y-axis and time on the x-axis.

(Long et al., 2023) We know that most of their analyses were statistically significant, but what else could the authors have reported to tell us how meaningful the observed differences were?

They could have reported an effect size, such as Cohen's d, to demonstrate how meaningful their results were.

They could also have included more figures, such as the one we sketched for question 10.
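For reference, Cohen's d for two independent groups is the difference between the group means divided by the pooled standard deviation. The sketch below uses invented scores, not data from Long et al. (2023), and the function name `cohens_d` is illustrative.

```python
from math import sqrt
from statistics import mean, variance

def cohens_d(group_a, group_b):
    """Standardized mean difference using the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_variance = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_variance)

# Hypothetical post-intervention scores (NOT taken from the paper).
intervention = [4, 5, 6, 7, 8]
control = [2, 3, 4, 5, 6]
print(round(cohens_d(intervention, control), 2))  # 1.26
```

Unlike a p value, this number expresses how large the difference is in standard deviation units, which is why it speaks to how meaningful a result is.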