Lecture 5: Perceptual organisation, Gestalt psychology and face perception


How do researchers typically think about the visual system?

In terms of low-, mid- and high-level vision

What is considered to be low-, mid- and high-level vision?

Low-level or early vision extracts local information about lines, bars and edges (low-level visual properties)

Mid-level vision joins these isolated features into larger groups...

...forming the basis for object recognition in high-level vision

Each level is typically studied in…?

Isolation (research usually focuses on one level at a time)

Kastner and Kanwisher stand out due to their coverage of all levels (and all methods)

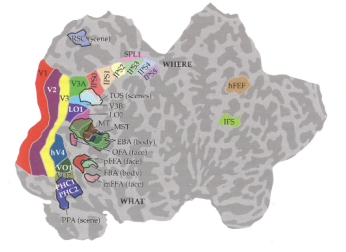

What are the ventral and dorsal streams?

Ventral ('what') and dorsal ('where') streams

Process very different aspects of visual input;

'What' stream; object identification

'Where' stream; visuo-spatial information processing

Briefly describe the anatomy of the dorsal and ventral streams

At the back (in red): V1 (and V2 and V3)

From there, the pathway splits into the ventral stream (running into the temporal lobe) and the dorsal stream (running into the parietal lobe)

What has research found in terms of ventral stream lesions?

Patients with a lesion to part of the ventral stream...

...have difficulty identifying certain objects, but no difficulty judging which of the objects is closer to them or further away

This suggests that the two streams are separate

Lesion studies seem to indicate separate (dorsal and ventral) streams. However, there appears to be no…?

No strict anatomical or functional separation of 'what' and 'where' streams

E.g. Konen and Kastner, 2008

Complex connectivity

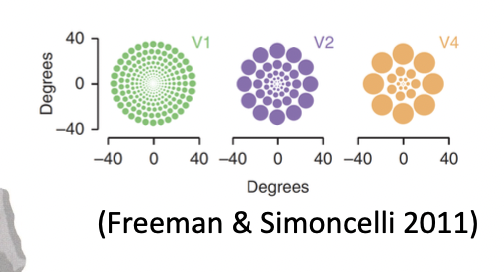

As you move from V1 along the ventral stream, what two things increase?

Receptive field size increases (the image shows receptive fields growing from V1 to V4, i.e. into mid-level vision)

So does tuning complexity: the stimuli that neurons respond to become more complex, up to ventral-stream neurons that respond specifically to faces or body parts

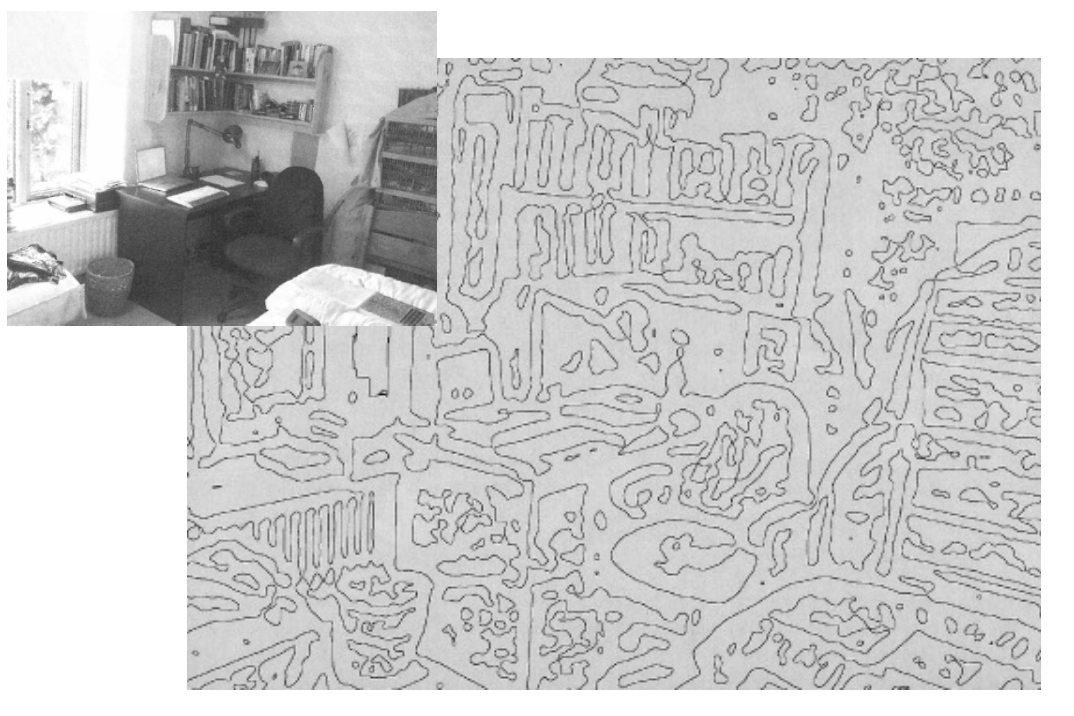

Grouping the local information from V1 is a challenging problem. Why?

How is local information that is processed in V1 grouped into larger, meaningful units?

It is challenging because it is ambiguous which pieces of information belong together

The input looks something like this (a grainy image containing only local orientations)

How do you know to bind information together from one object but not another?

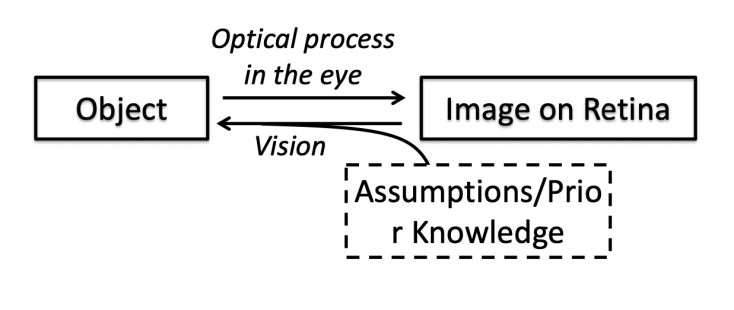

In order to resolve this ambiguity we need…?

Constraining principles

The mid-level visual system also uses assumptions/prior knowledge about what to bind together and what not to

What are the Gestalt principles of perceptual organisation?

The Gestalt school of psychology described a set of 'laws' or principles of perceptual organisation

Main insight; relationships between elements are critical for perception (how elements are or are not bound together)

The whole is greater than the sum of its parts (or, in this sense, 'different from' the sum of its parts)

Give the 4 main Gestalt principles of perceptual organisation

Proximity

Similarity

Common fate

Good continuation

Other principles are mainly concerned with figure-ground assignment

How do you know what's in the foreground and background, and how do you separate these?

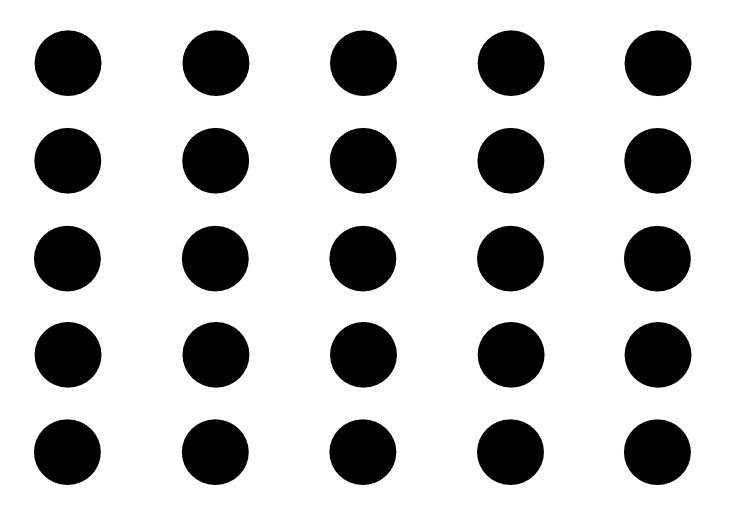

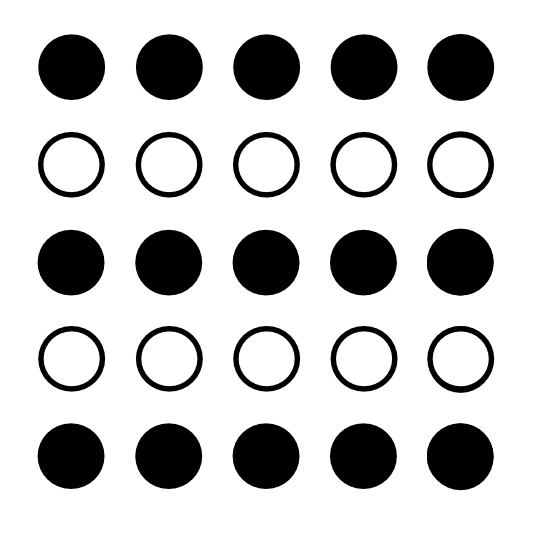

What is the principle of proximity?

Pieces of information that are close together in space are bound together

Given a grid of individual units, how do you bind them together?

Into rows? Columns? Some other way?

If the columns were spaced further apart than the circles within each column, proximity would group the circles and you would see columns
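A toy way to see proximity at work is to link any two dots that are closer than some threshold and treat each chain of linked dots as one group. This is a minimal illustration under stated assumptions, not a model from the lecture: the grid layout, the "1.5 × smallest gap" linking rule and all function names are made up for the example.

```python
import math
from itertools import combinations


def make_grid(n_rows, n_cols, dx, dy):
    """Dot centres on a grid: dx = gap between columns, dy = gap between rows."""
    return [(c * dx, r * dy) for r in range(n_rows) for c in range(n_cols)]


def group_by_proximity(points, threshold):
    """Link any two dots closer than `threshold`; linked chains form one group."""
    parent = list(range(len(points)))

    def find(i):
        # Follow parent links up to the group's representative dot
        while parent[i] != i:
            i = parent[i]
        return i

    for i, j in combinations(range(len(points)), 2):
        if math.dist(points[i], points[j]) < threshold:
            parent[find(i)] = find(j)  # merge the two groups

    groups = {}
    for i, p in enumerate(points):
        groups.setdefault(find(i), []).append(p)
    return list(groups.values())


def perceived_grouping(n_rows, n_cols, dx, dy):
    """Label the grouping that proximity alone predicts for the grid."""
    points = make_grid(n_rows, n_cols, dx, dy)
    # Hypothetical linking rule: only nearest neighbours (plus a margin) get linked
    groups = group_by_proximity(points, threshold=1.5 * min(dx, dy))
    if len(groups) == 1:
        return "one group"
    if all(len({x for x, _ in g}) == 1 for g in groups):
        return "columns"
    if all(len({y for _, y in g}) == 1 for g in groups):
        return "rows"
    return "other"


print(perceived_grouping(4, 4, dx=2, dy=1))  # columns
print(perceived_grouping(4, 4, dx=1, dy=2))  # rows
```

With equal spacing (`dx=dy`) the rule links everything into "one group", i.e. proximity alone gives no preferred organisation.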

What is the principle of similarity?

Pieces of information that are similar in some way are grouped together

We see these as rows because of the colour differences (each row contains only black or only white circles)

Proximity and similarity can favour different groupings of the same elements

At what point does proximity take over from similarity?

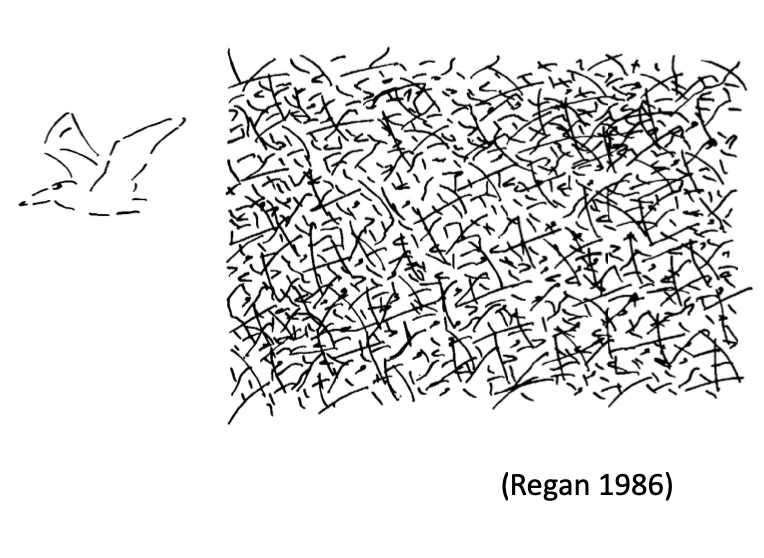

What is the principle of common fate?

Things that move together are bound together

In the image, the bird cannot be identified among the squiggles, but as soon as it begins to move we can perceive it

Features that move together are likely to come from the same object

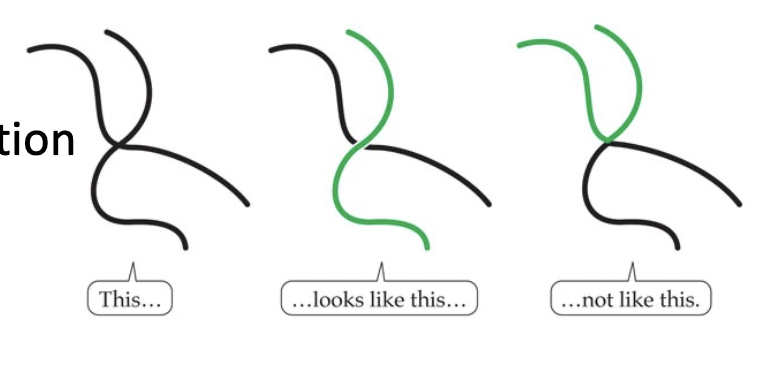
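A minimal sketch of common fate: give each element a motion vector and group elements whose vectors match within a tolerance. The element labels, velocities and `tolerance` value are hypothetical, and greedily matching each element against the first member of each group is a simplification chosen for brevity, not a model of how the visual system processes motion.

```python
def group_by_common_fate(elements, tolerance=0.1):
    """Group elements whose motion vectors match within `tolerance`.

    elements: list of (label, (vx, vy)) pairs; (0, 0) means static.
    Each group is represented by the velocity of its first member.
    """
    groups = []  # list of (reference velocity, member labels)
    for label, (vx, vy) in elements:
        for (rvx, rvy), members in groups:
            if abs(vx - rvx) <= tolerance and abs(vy - rvy) <= tolerance:
                members.append(label)
                break
        else:
            groups.append(((vx, vy), [label]))
    return [members for _, members in groups]


# Hypothetical display: squiggle segments stay still, segments of the bird drift rightwards
moving = [("squiggle_1", (0.0, 0.0)), ("bird_head", (1.0, 0.0)),
          ("squiggle_2", (0.0, 0.0)), ("bird_wing", (1.05, 0.0)),
          ("bird_tail", (1.0, 0.0))]
print(group_by_common_fate(moving))
# [['squiggle_1', 'squiggle_2'], ['bird_head', 'bird_wing', 'bird_tail']]

# When nothing moves, everything falls into one group and the bird is not separated out
static = [(label, (0.0, 0.0)) for label, _ in moving]
print(group_by_common_fate(static))
```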

What is the principle of good continuation?

The visual system assumes that contours change orientation smoothly rather than abruptly

Most natural objects have smooth changes in orientation along their contours

The visual system incorporates this knowledge of the environment
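Good continuation can be sketched as a rule applied where a contour meets several candidate continuations: pick the one that needs the smallest change in direction. This is an illustrative assumption (real contour integration combines many cues), and the coordinates and function names are made up for the example.

```python
import math


def direction(p, q):
    """Direction of travel from point p to point q, in radians."""
    return math.atan2(q[1] - p[1], q[0] - p[0])


def turning_angle(a, b):
    """Smallest absolute change between two directions, in degrees (0 to 180)."""
    diff = math.degrees(b - a) % 360
    return min(diff, 360 - diff)


def best_continuation(prev_point, junction, candidates):
    """Choose the candidate end point that continues the incoming contour most smoothly."""
    incoming = direction(prev_point, junction)
    return min(candidates, key=lambda end: turning_angle(incoming, direction(junction, end)))


# A contour arrives at (1, 0) travelling rightwards; three possible continuations
candidates = [(1.0, 1.0), (2.0, 0.1), (0.5, -1.0)]
print(best_continuation((0.0, 0.0), (1.0, 0.0), candidates))  # (2.0, 0.1)
```

The nearly straight segment wins over the 90° turn and the sharp backwards bend, mirroring the assumption that natural contours rarely change orientation suddenly.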

The Gestalt principles' insight into the role of relationships was important, but…?

Original Gestalt principles were largely descriptive

Little or no experimental evidence

Recent work in visual neuroscience and psychophysics has established the why and how of some of these principles

__ are among the most important stimuli?

Faces

Compared to other visual categories, faces are processed very efficiently

Very small changes in faces can tell you something about another person's…?

Identity, gender, age, ethnicity etc.

Faces are also important in telling us about other people's characteristics that change much more quickly, like…?

Facial expressions, gaze direction etc.

Important in facilitating social interaction, among many other things

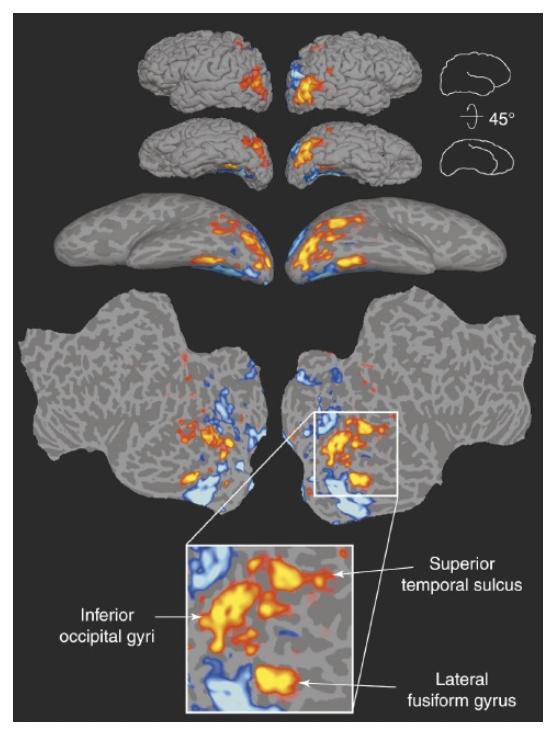

What 3 brain areas have been implicated in face processing?

Warmer colours = where there's more activity when someone's looking at faces (compared to other stimuli)

The three areas in the photo above are key areas used to process facial information

There are two different accounts of face perception. What are they?

Domain specificity; mechanisms operate independently of general object perception

Expertise; face-processing mechanisms derive from general object perception but become finely tuned through extensive experience

See faces much more than other types of stimuli = become experts at processing faces

What is holistic processing?

Representing features and the relationships between them as one unit

What is the part-whole effect?

Features are easier to identify when presented as part of a face

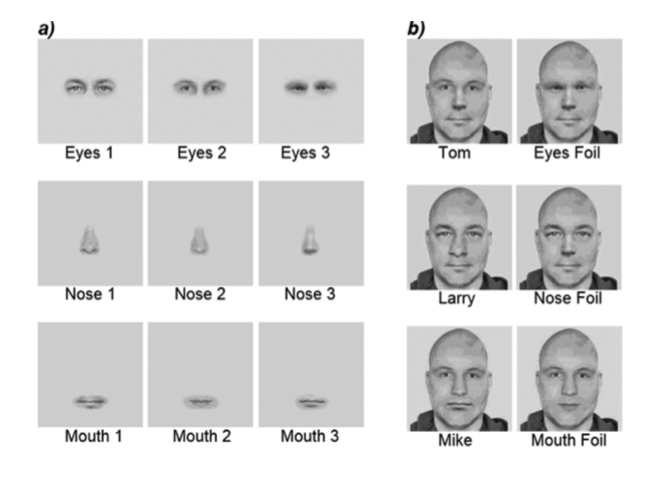

What was the procedure in Tanaka and Farah’s (1993) study on the part-whole effect?

Three different eyes/noses/mouths

Can combine these face features in different ways to construct different identities

Ask ppts to learn these identities

How good are ppts at identifying these different features when they are presented in isolation versus within the context of the face?

What were the findings in Tanaka and Farah’s (1993) study on the part-whole effect?

You might think that presenting the features alone would make the task easier, but it does not: ppts identify features more accurately within the whole face than in isolation

The way we process faces is different to how we process other objects. How?

Process faces by taking in the whole; the individual features and their relationships

Don't observe the part-whole effect when it comes to other objects, like houses

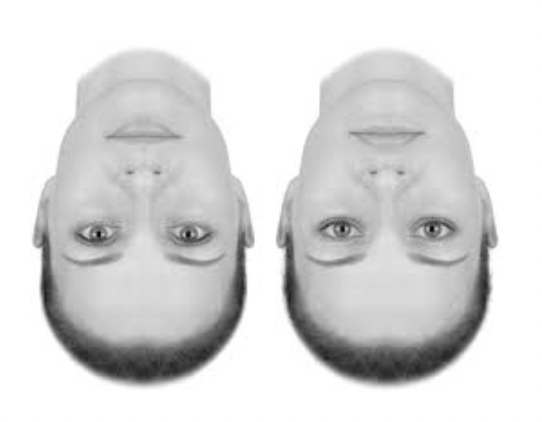

What is the face inversion effect?

Inversion disrupts processing of fine details and relationship between features

More difficult to identify individuals when you present the faces upside down

Why does the face inversion effect occur?

The specialised mechanisms are only triggered when the face is seen in its usual (upright) orientation

Holistic processing is not applied when the face is upside down

Both these faces look very similar when inverted; however, when they are turned upright, you can see that one of them has its eyes and lips upside down (it looks quite grotesque). This is the Thatcher illusion

This holistic processing is, in some ways, consistent with both accounts (domain specificity and expertise). How?

Demonstrates that faces are indeed processed differently, but the question that arises is 'why?'

Specific mechanisms in the brain or expertise?

Doesn't necessarily support one hypothesis over the other

What has been found in inversion studies done with people who are experts at processing other visual domains?

E.g. expert birdwatchers, who have to distinguish between similar-looking birds

Early studies suggested that people who are experts in other object categories also show inversion effects (support for the expertise hypothesis?)

However, these findings have not been replicated

What is prosopagnosia?

Failure to identify or distinguish between faces, despite (otherwise) normal visual and cognitive ability

Both with strangers and with people they've known for years

No problem recognising other kinds of objects; it is only faces that they can no longer recognise

There are other cases of people not being able to perceive specific characteristics e.g. movement. This highlights the modularity of…?

(Some) processes in visual perception

Lesion to a specific part of the brain -> specific deficit

Special case of visual agnosia

Prosopagnosia provides evidence of a dissociation between…?

Familiarity and identification

Could tell that their partner is familiar, without knowing who they actually are

Familiarity but no identification

People with prosopagnosia have to develop alternative strategies to recognise people. Give an example

If you can't recognise the faces, you may rely on the clothing or hair etc.

So if that changes, you lose this recognition

What is Capgras syndrome?

No problem identifying faces, but no sense of familiarity

The opposite problem to prosopagnosia

Patients develop strange delusions, e.g. someone living in their house has their husband's or wife's face, but they believe this person is not actually their partner (an impostor) because the face lacks the sense of familiarity

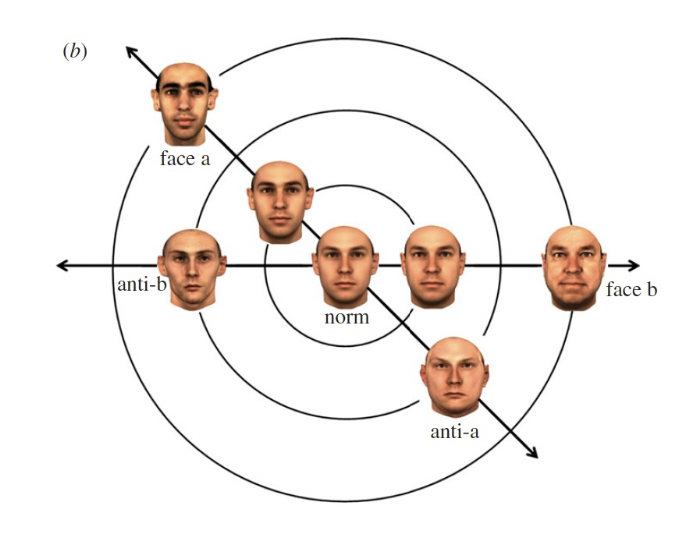

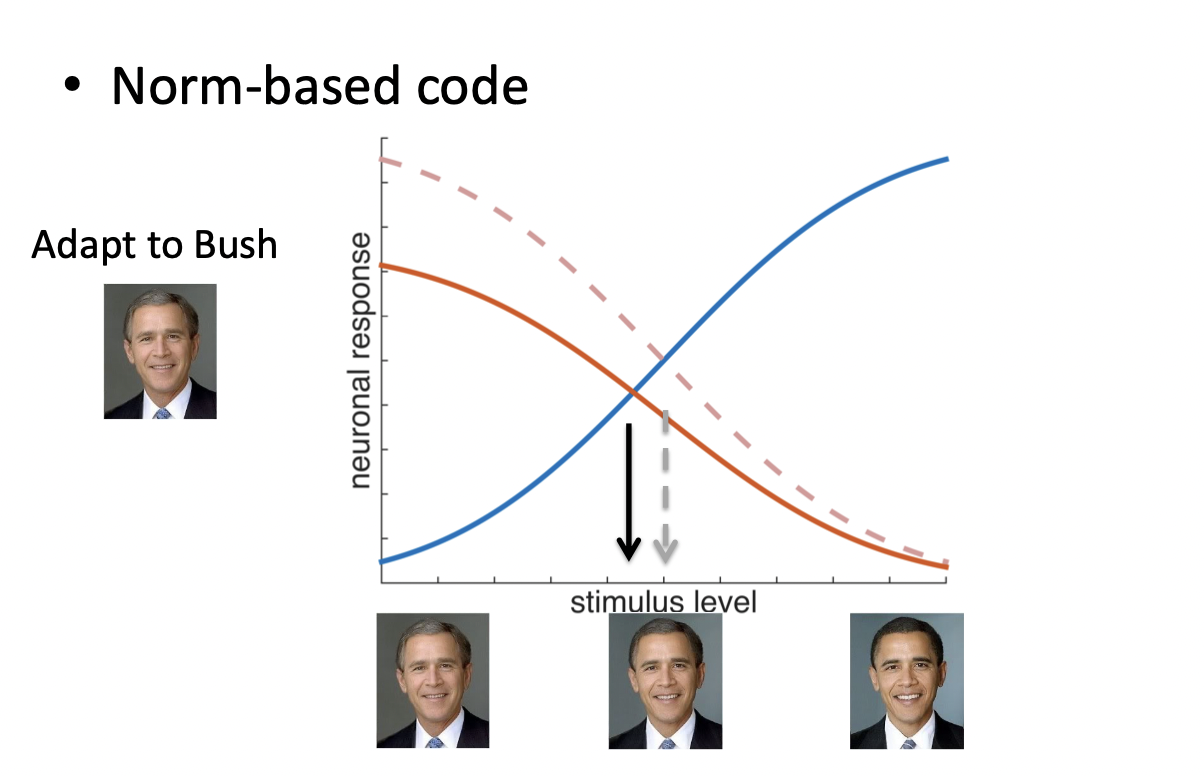

What is a norm-based code in face processing?

Facial features are represented as deviations from the average face

Average face generated from years of input about different faces

Makes explicit what is distinctive about a face

Emphasises subtle variations that define individuals

The further away from the norm the face is, the easier it is to recognise that face (and vice versa)

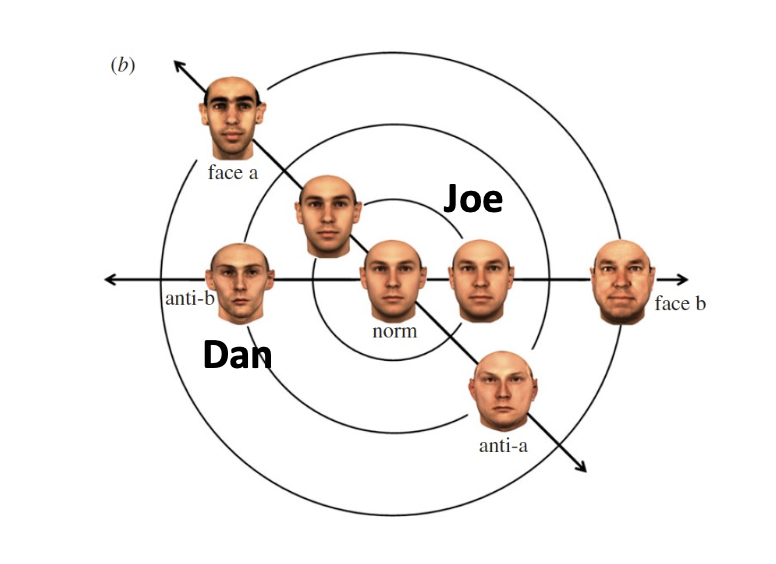

How does this image show this idea of norm based coding?

Picture in the middle is an actual image of Daniel Craig

Right picture = caricature of Daniel Craig, features more exaggerated

Left picture = anti-caricature of Daniel Craig, with the deviations reduced so the face is closer to the average male Caucasian face

The caricature increases the deviations from the average

This is why most people think the caricature is the actual picture of him: it is easier to recognise

Shows this idea of norm-based coding
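Norm-based coding can be sketched by treating each face as a vector of feature values and the norm as the average vector. A caricature then scales a face's deviation from the norm up, and an anti-caricature scales it down. The feature dimensions and numbers below are hypothetical, and using Euclidean distance from the norm as "distinctiveness" is a simplifying assumption.

```python
import math


def average_face(faces):
    """The norm: mean of each feature across all previously seen faces."""
    n = len(faces)
    return [sum(face[i] for face in faces) / n for i in range(len(faces[0]))]


def rescale_deviation(face, norm, k):
    """Move a face along its own deviation from the norm.

    k > 1: caricature; k = 1: the original face; 0 <= k < 1: anti-caricature.
    """
    return [m + k * (f - m) for f, m in zip(face, norm)]


def distinctiveness(face, norm):
    """Distance from the norm; under norm-based coding, larger = easier to recognise."""
    return math.dist(face, norm)


# Hypothetical feature vectors, e.g. (eye spacing, nose length, mouth width) in arbitrary units
faces = [[1.0, 2.0, 3.0], [3.0, 2.0, 1.0], [2.0, 5.0, 2.0]]
norm = average_face(faces)                       # [2.0, 3.0, 2.0]
face = faces[0]
caricature = rescale_deviation(face, norm, 1.5)  # [0.5, 1.5, 3.5]
anti = rescale_deviation(face, norm, 0.5)        # [1.5, 2.5, 2.5]

for name, f in [("anti-caricature", anti), ("original", face), ("caricature", caricature)]:
    print(name, round(distinctiveness(f, norm), 3))
```

The three versions lie on one line through the norm; only their distance from it changes, which is the sense in which the caricature "makes explicit what is distinctive" about the face.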

If you change the norm, all faces have to become…?

Different

If we could change the norm to 'face b', Joe would look like Dan

The brain codes Joe as sitting to the right of the norm, but Dan as sitting two spaces to the left of it

Relative to the new norm, Joe would therefore end up in the same position as Dan, which is why we would perceive his face as Dan's

This is what happens in face aftereffects

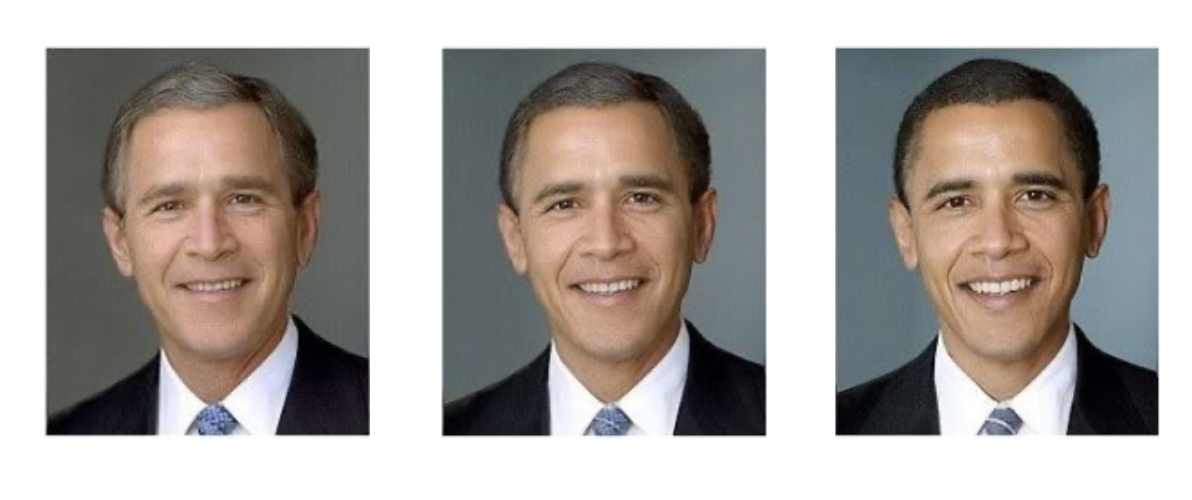

Describe what happens in this illusion

Stare at an image of either Barack Obama or George W Bush for about a minute

A morph of both their faces (middle image) is then presented

Depending on whom you looked at, you will perceive the morph as more like the other person (if you looked at Obama's face initially, you will see Bush's face in the subsequent stimulus)

How does the above illusion (George Bush/Barack Obama) work?

By staring at Obama or Bush for a minute, you shift your norm very slightly, which changes your perception of the central face

Neurons that code for facial features adapt to the specific characteristics of the adaptor face

As a consequence, perception of subsequent face is biased away from adaptor characteristics

Describe what happens if you adapt to Bush in this illusion

If you adapt to Bush…

Neurons responding to Bush's characteristics reduce their sensitivity while you stare at the stimulus

Their responses decrease

The norm shifts slightly to the left, towards the adaptor (black line)

Adapted to Bush = perceive the morph as Obama

Adaptation is like a re-calibration of the norm

What would happen if you adapted to the norm?

Adapt to the norm

Responses of the neurons coding both sides of the norm decrease equally

So no change in perception
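The adaptation account can be sketched with two hypothetical opponent pools of neurons on a single Bush-to-Obama axis (Bush = -1, norm = 0, Obama = +1). This is a simplified toy version of norm-based opponent coding assumed for illustration; the linear responses, the adaptation `strength` and all names are made up. Adapting reduces each pool's gain in proportion to how strongly the adaptor drove it, and perception is read out as the difference between the pools.

```python
def pool_responses(x):
    """Responses of two hypothetical opponent pools to a face at x in [-1, 1].

    x = -1 is Bush, x = +1 is Obama, x = 0 is the norm (the 50/50 morph).
    """
    obama_pool = (1 + x) / 2  # stronger for more Obama-like faces
    bush_pool = (1 - x) / 2   # stronger for more Bush-like faces
    return obama_pool, bush_pool


def adapt(adaptor, strength=0.5):
    """Gains after staring at `adaptor`: each pool loses sensitivity
    in proportion to how strongly the adaptor drove it."""
    obama_r, bush_r = pool_responses(adaptor)
    return 1 - strength * obama_r, 1 - strength * bush_r


def perceived_identity(x, gains=(1.0, 1.0)):
    """Read-out: > 0 looks like Obama, < 0 looks like Bush, 0 looks like the norm."""
    obama_r, bush_r = pool_responses(x)
    return gains[0] * obama_r - gains[1] * bush_r


def perceived_norm(gains):
    """Face position that now looks neutral (where the two weighted pools balance)."""
    g_obama, g_bush = gains
    return (g_bush - g_obama) / (g_obama + g_bush)


morph = 0.0
print(perceived_identity(morph))               # 0.0: the morph looks neutral
print(perceived_identity(morph, adapt(-1.0)))  # 0.25: after adapting to Bush it looks like Obama
print(perceived_norm(adapt(-1.0)))             # about -0.333: the norm has shifted towards Bush
print(perceived_identity(morph, adapt(0.0)))   # 0.0: adapting to the norm changes nothing
```

Adapting to Obama (`adapt(1.0)`) gives the mirror image: the morph reads as Bush. Adapting to the norm lowers both gains by the same amount, so the balance point does not move.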

Is face processing special? What does the evidence suggest?

Evidence suggests…

Face-selective neurons in inferotemporal cortex

Relies on holistic processing

Prosopagnosia can disrupt face recognition selectively

Norm-based code

Ongoing debate about whether face processing is special due to domain specificity or expertise