schedules of reinforcement


Description and Tags

exam 2


46 Terms

Schedules of Reinforcement

A rule stating how and when discriminative stimuli and behavioral consequences will be presented

The arrangement of the environment in terms of discriminative stimuli and behavioral consequences.

Specifies the requirements for reinforcement.

a statement of the conditions under which a behavior is reinforced.

The arrangement of discriminative stimuli, operants, and consequences

Performances on schedules of reinforcement have been found to be very similar across:

Species

Types of behavior

Types of reinforcing consequences

Why are schedules of reinforcement important?

They approximate some of the complex contingencies that operate on human behavior in everyday environments

Schedules of reinforcement tend to:

produce stereotypical response patterns

Patterns are:

a product of exposure to the contingencies of reinforcement

Contingency

The relationship between a behavior and its consequences

Steady-state performance

the pattern of response that develops after repeated exposure to a contingency of reinforcement

Example: the fixed-ratio break-and-run (pause-and-run) pattern

The Most Common Types of Schedules

Reinforcement for a particular number of responses

Reinforcement for a response after a particular interval of time has passed

What does interresponse time (IRT) mean?

time between responses
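As a small illustration of the definition above, IRTs can be computed from a list of response timestamps. This is only a sketch: the function name `interresponse_times` and the sample timestamps are made up for the example.

```python
def interresponse_times(response_times):
    """Return the gaps between consecutive response timestamps (same units as the input)."""
    return [later - earlier for earlier, later in zip(response_times, response_times[1:])]


# Hypothetical timestamps, in seconds, of four lever presses.
presses = [0.0, 1.5, 2.0, 6.0]
print(interresponse_times(presses))  # [1.5, 0.5, 4.0]
```

Four responses give three IRTs, because an IRT is always measured between two neighboring responses.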

how many basic schedule types are there?

Four: fixed ratio (FR), variable ratio (VR), fixed interval (FI), and variable interval (VI)

Continuous reinforcement (CRF):

Every response is reinforced. Often used in the early stages of shaping.

Examples include showering, eating, vending machines

Intermittent schedules of reinforcement

Behavior is reinforced only occasionally. Requirements can be based on the number of responses, the passage of time, or both. Behavior maintained this way is more resistant to extinction.

Examples include checking the mail, checking your phone.

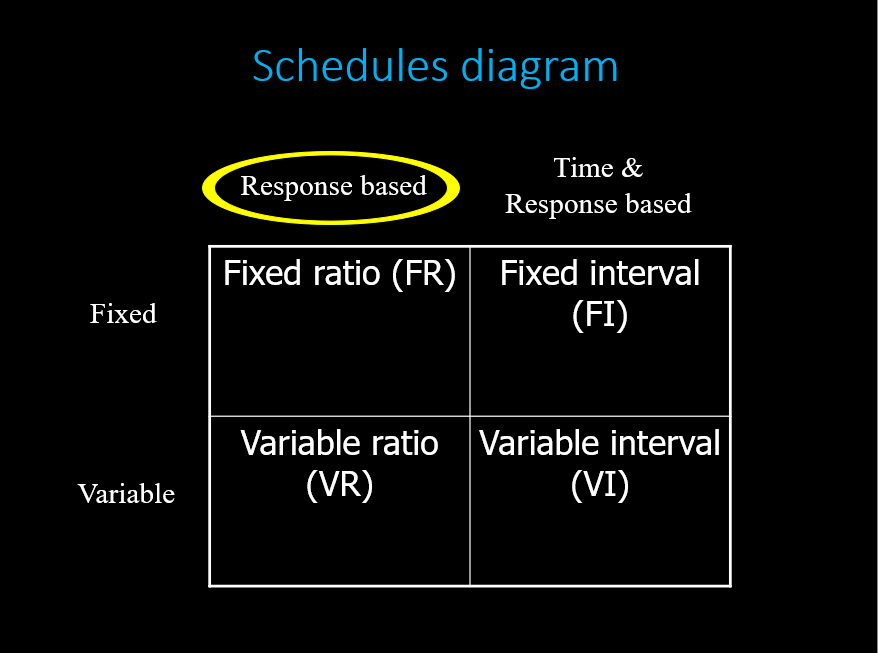

schedules diagram

Ratio Schedules

An organism has to respond a specified number of times for reinforcement

The rate of responding will influence the rate of reinforcement.

The faster you respond, the faster you earn reinforcers

Reinforces short interresponse times (IRTs), because the IRT just before reinforcement is likely to be short

Fixed Ratio Schedules (FR)

The number of responses required for reinforcement is constant

this schedule is a:

fixed ratio

PRP

post-reinforcement pause

FR 5

5 responses to earn a reinforcer
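The FR 5 rule can be sketched as a simple response counter. This is a minimal illustration, not a standard implementation: the class name `FixedRatio` and the method `respond` are hypothetical.

```python
class FixedRatio:
    """FR schedule: every `ratio`-th response earns a reinforcer."""

    def __init__(self, ratio):
        self.ratio = ratio
        self.count = 0  # responses made since the last reinforcer

    def respond(self):
        """Register one response; return True if it is reinforced."""
        self.count += 1
        if self.count == self.ratio:
            self.count = 0  # the requirement starts over after each reinforcer
            return True
        return False


fr5 = FixedRatio(5)
outcomes = [fr5.respond() for _ in range(10)]
print(outcomes)
# [False, False, False, False, True, False, False, False, False, True]
```

Only the 5th and 10th responses are reinforced, which is the constant requirement that defines a fixed ratio.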

A fixed ratio schedule on a graph is called a:

break-and-run pattern

The ‘break’ (pause) happens after EVERY reinforcer delivery

Characteristics of Fixed Ratio Performance:

break and run

Post reinforcement pause (PRP)

Ratio strain

Break-and-run

A typical pattern associated with fixed ratio schedules that has a high response rate until reinforcement is received and then a post-reinforcement pause or PRP

Post reinforcement pause (PRP)

A pause in responding after a reinforcer is delivered (‘break’ in break-and-run)

This occurs because of the workload ahead, which is why it is also called a “pre-ratio pause”

Ratio strain

Extended pauses after reinforcement or during a run of responses. Occurs when shaping is done too quickly or in steps that are too large

This can be avoided with proper training

It happens when the response requirement for reinforcement has been increased too quickly

The organism may stop responding altogether when the requirement increases too quickly

FR

LOWER resistance to extinction, and rapid responding with a short pause after reinforcement

Typical FR (fixed ratio) response pattern

Higher ratios generate higher rates of responding and longer pauses

A real-life example of fixed ratio schedules is piecework…manufacturing an American flag

Variable Ratio Schedule (VR)

A changing number of responses is required for reinforcement

Specifies the average number of responses required

Generally produces the highest rate of responding

NO BREAKS/pauses

steep slope

VR 3 = an average of 3 responses to earn a reinforcer (it is an AVERAGE, so a given reinforcer could take 2 responses or it could take 5)
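To make the "average" in VR 3 concrete, here is a hedged sketch of a variable-ratio counter. The class name `VariableRatio` is hypothetical, and drawing each requirement uniformly from 1 to 2 × mean − 1 is an assumption chosen only because it averages to the stated mean; real procedures may use other distributions.

```python
import random


class VariableRatio:
    """VR schedule: reinforce after a varying number of responses with a set mean."""

    def __init__(self, mean_ratio, rng=None):
        self.mean_ratio = mean_ratio
        self.rng = rng if rng is not None else random.Random()
        self.count = 0
        self.requirement = self._draw_requirement()

    def _draw_requirement(self):
        # Assumption: uniform on 1..(2 * mean - 1), which averages to mean_ratio.
        return self.rng.randint(1, 2 * self.mean_ratio - 1)

    def respond(self):
        """Register one response; return True if it is reinforced."""
        self.count += 1
        if self.count >= self.requirement:
            self.count = 0
            self.requirement = self._draw_requirement()  # new, unpredictable requirement
            return True
        return False


vr3 = VariableRatio(3, rng=random.Random(0))
total_responses = 30_000
reinforcers = sum(vr3.respond() for _ in range(total_responses))
print(total_responses / reinforcers)  # close to 3 over a long run
```

For VR 3 the individual requirements in this sketch range from 1 to 5, so any single reinforcer might take 2 responses or 5, while the long-run average stays near 3.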

this schedule is a…

Variable ratio

Characteristics of Variable Ratio Performance

PRPs do not usually occur in variable ratio schedules

Ratio strain is possible

VR has a higher resistance to extinction

VR

HIGHER resistance to extinction, and high, steady rate without pauses

Interval Schedules

An organism’s behavior is reinforced for the first response after a ‘given time interval’ has passed

The response MUST occur for reinforcement

The rate of responding has little influence on the rate of reinforcement

Both long and short interresponse times are reinforced

There is a time component
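The contrast between ratio and interval schedules (response rate matters a lot on one, very little on the other) can be checked with a short calculation. This is an idealized sketch that assumes perfectly evenly spaced responses and whole-second timing; both function names are hypothetical.

```python
def reinforcers_on_ratio(seconds_between_responses, ratio, session_seconds):
    """Reinforcers earned on a fixed ratio with evenly spaced responses."""
    total_responses = session_seconds // seconds_between_responses
    return total_responses // ratio


def reinforcers_on_interval(seconds_between_responses, interval_seconds, session_seconds):
    """Reinforcers earned on a fixed interval with evenly spaced responses.

    The first response at or after the interval has elapsed is reinforced,
    and the timer restarts at that moment (an assumption of this sketch).
    """
    earned = 0
    timer_start = 0
    for t in range(seconds_between_responses, session_seconds + 1, seconds_between_responses):
        if t - timer_start >= interval_seconds:
            earned += 1
            timer_start = t
    return earned


session = 3600  # one hour, in seconds
# Slow responder: one response every 6 s. Fast responder: one every 3 s.
print(reinforcers_on_ratio(6, 10, session), reinforcers_on_ratio(3, 10, session))        # 60 120
print(reinforcers_on_interval(6, 60, session), reinforcers_on_interval(3, 60, session))  # 60 60
```

Doubling the response rate doubles the reinforcers earned on FR 10 but leaves the FI 60 s total unchanged, because the clock, not the response count, sets the limit.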

Fixed Interval (FI) Schedule

The first response after the constant time interval has expired is reinforced

examples include waiting for the bus or checking the mail

FI 10 min: the first response after 10 minutes have passed is reinforced

scalloped

This graph is …

Fixed interval

FI 1 min = the first response after 1 min or longer earns a reinforcer

Further explanation: you don’t check the oven right after you put the cookies in. You check after 15 minutes to see whether they are ready, because you have to wait a certain amount of time. That would be FI 15 min.
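A fixed-interval rule can be sketched with a timer instead of a counter. The class name `FixedInterval` is hypothetical, times are in seconds, and restarting the timer at the moment of reinforcement is an assumption of this sketch.

```python
class FixedInterval:
    """FI schedule: the first response after `interval` time units is reinforced."""

    def __init__(self, interval):
        self.interval = interval
        self.timer_start = 0  # time of the last reinforcer (session start at first)

    def respond(self, now):
        """Register a response at time `now`; return True if it is reinforced."""
        if now - self.timer_start >= self.interval:
            self.timer_start = now  # assumption: the interval restarts at reinforcement
            return True
        return False


fi_1_min = FixedInterval(60)  # FI 1 min, expressed in seconds
response_times = [10, 30, 59, 61, 70, 119, 125]
print([fi_1_min.respond(t) for t in response_times])
# [False, False, False, True, False, False, True]
```

Responses made before the minute is up earn nothing, which is why checking early (like opening the oven right away) goes unreinforced.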

Characteristics of Fixed Interval Performance

PRP is common

After a pause, behavior may progressively increase (SCALLOPED)

or, behavior may become rapid and steady (break and run)

As the interval gets closer to timing out, the probability of reinforcement increases, so your response gets faster and faster

real life example: checking the clock

FI

LOWER resistance to extinction, and a long pause after reinforcement, which yields the scalloped pattern

Variable interval (VI) schedule

The first response after the variable time interval has expired will be reinforced. States the average interval after which a response will be reinforced.

Examples include calling someone whose line is busy, or waiting for a letter in the mail.

On average, about 30 seconds have to pass before my response is reinforced

Shallower slope

Deals with seconds

this graph is a …

variable interval

VI 30 seconds = the first response after an interval that averages 30 seconds earns a reinforcer
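A variable-interval rule works like a fixed-interval timer whose length changes after every reinforcer. In this sketch the class name `VariableInterval` is hypothetical, and drawing intervals from an exponential distribution is an assumption made only so that they average 30 seconds.

```python
import random


class VariableInterval:
    """VI schedule: the first response after a varying interval is reinforced."""

    def __init__(self, mean_interval, rng=None):
        self.mean_interval = mean_interval
        self.rng = rng if rng is not None else random.Random()
        self.timer_start = 0.0
        self.current_interval = self._draw_interval()

    def _draw_interval(self):
        # Assumption: exponentially distributed intervals with the stated mean.
        return self.rng.expovariate(1.0 / self.mean_interval)

    def respond(self, now):
        """Register a response at time `now`; return True if it is reinforced."""
        if now - self.timer_start >= self.current_interval:
            self.timer_start = now
            self.current_interval = self._draw_interval()
            return True
        return False


vi30 = VariableInterval(30, rng=random.Random(1))
session_seconds = 100_000
# A steady responder: one response every second.
reinforcers = sum(vi30.respond(t) for t in range(1, session_seconds + 1))
print(session_seconds / reinforcers)  # roughly 30 seconds per reinforcer
```

Because the next interval is unpredictable, steady responding is the only way to collect each reinforcer soon after it becomes available.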

Characteristics of variable interval performance

Brief PRPs occur occasionally

An increase in the size of the interval produces a decrease in the response rate

Moderate, steady rate of responding

VI

HIGHER resistance to extinction, and a steady rate without pauses

real life example: surfing (waiting for waves)

Response Independent Schedules (also known as time-based schedules)

fixed time (FT)

variable time (VT)

May lead to superstitious behavior (through accidental reinforcement of whatever the organism happened to be doing)

Fixed Time (FT)

after a fixed amount of time, a consequence is delivered regardless of responding

Variable Time (VT)

after an average amount of time, a consequence is delivered regardless of responding
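Since FT and VT deliveries ignore behavior entirely, they can be sketched as functions that take no responses as input at all. Both function names are hypothetical, and the uniform spacing used for VT is an assumption chosen only to give the stated average.

```python
import random


def fixed_time_deliveries(period, session_length):
    """FT: a consequence is delivered every `period` time units, no response needed."""
    return list(range(period, session_length + 1, period))


def variable_time_deliveries(mean_period, session_length, rng):
    """VT: consequences are delivered after gaps that average `mean_period`."""
    times = []
    t = 0.0
    while True:
        # Assumption: gaps are uniform on [0, 2 * mean], which averages to mean_period.
        t += rng.uniform(0, 2 * mean_period)
        if t > session_length:
            return times
        times.append(t)


print(fixed_time_deliveries(20, 60))  # [20, 40, 60]
vt_times = variable_time_deliveries(20, 10_000, random.Random(2))
print(len(vt_times))  # roughly 500 deliveries in 10,000 time units
```

Neither function accepts a response record, which mirrors the definition: whatever the organism is doing at delivery time may be reinforced by accident.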

Fixed

every time

variable

average amount of time

The use of Schedules of Reinforcement

form a stable baseline

The baseline can be used to test IVs such as drug administration

be used as IVs themselves

it teaches us more about basic behavioral processes

to test if your lab is working properly

you should be able to get the proper response patterning for each schedule

Advantages of Intermittent Schedule

FR schedule (e.g. FR 10) generates higher rates of responding than CRF schedules

Less chance of satiation

Under some circumstances, behavior is more resistant to extinction (EXT), especially on variable schedules

Schedules more closely simulate the natural environment

Satiation

fully satisfied