Topic 3 - Measurement

Ch5 RMiP and lsj

42 Terms

conceptual definition (construct)

A researcher’s definition of a variable at the theoretical level.

self report measure

a way to measure a variable

people answer questions about themselves (interview or questionnaire)

observational/behavioral measure

measures a variable by recording observable behaviors or physical traces

physiological measure

measures a variable by recording biological data (e.g. brain activity, heart rate)

fMRI

functional magnetic resonance imaging

categorical/nominal variable

a variable whose levels are categories (e.g. M or F)

quantitative/continuous variable

a variable coded with meaningful numbers (e.g. height, weight, IQ score, Diener's scale of subjective well-being)

ordinal scale

a quantitative measurement scale whose levels represent a RANKED order

distances between levels are NOT necessarily equal (e.g. the time between 1st and 2nd place need not be the same as the time between 2nd and 3rd place)

interval scale

a quantitative measurement scale that has no true zero

numerals represent EQUAL intervals

e.g. temperature in degrees Celsius or Fahrenheit

ratio scale

a quantitative measurement scale in which the numerals have equal intervals AND a value of zero truly means "none" of the variable being measured (e.g. height, weight)
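One way to see the interval vs ratio distinction with the temperature example: a difference in temperature means the same thing in Celsius and in Kelvin, but a ratio only makes sense in Kelvin, where zero really means no thermal energy. A minimal sketch using two hypothetical temperatures (the helper name `celsius_to_kelvin` is just for illustration):

```python
def celsius_to_kelvin(c: float) -> float:
    """Convert Celsius (interval scale) to Kelvin (ratio scale with a true zero)."""
    return c + 273.15


# Two hypothetical temperatures on the Celsius (interval) scale.
cool_c, warm_c = 10.0, 20.0

# Differences are meaningful on both scales because intervals are equal.
diff_c = warm_c - cool_c
diff_k = celsius_to_kelvin(warm_c) - celsius_to_kelvin(cool_c)

# Ratios are only meaningful on the ratio scale.
ratio_c = warm_c / cool_c  # 2.0, yet 20 C is NOT "twice as hot" as 10 C
ratio_k = celsius_to_kelvin(warm_c) / celsius_to_kelvin(cool_c)

print(f"difference: {diff_c:.1f} C vs {diff_k:.1f} K")
print(f"ratio: {ratio_c:.2f} (Celsius) vs {ratio_k:.3f} (Kelvin)")
```

The Celsius ratio of 2.0 is an artifact of where the scale puts its zero; the Kelvin ratio shows the second temperature is only a few percent warmer.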

what are the three types of reliability?

test-retest reliability

interrater reliability

internal reliability/internal consistency

what is test-retest reliability?

the extent to which a study participant gets consistent scores each time they are measured with the same measure

what is interrater reliability?

consistent scores are obtained no matter who measures the variable

2+ independent observers will have very similar/same findings
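For quantitative ratings, interrater reliability is typically checked by correlating the two observers' scores. A minimal sketch with hypothetical counts from two observers; the helper `pearson_r` is written out here rather than taken from a library:

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when one set of scores has no variance")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical data: two observers independently count how often each
# of six children smiles during the same recorded session.
observer_a = [3, 7, 2, 9, 5, 4]
observer_b = [4, 6, 2, 8, 5, 3]

r = pearson_r(observer_a, observer_b)
print(f"interrater r = {r:.2f}")  # values near 1 indicate consistent ratings
```

The same calculation applies to test-retest reliability: replace the two observers with the same participants' scores at time 1 and time 2.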

what is internal reliability/consistency?

the extent to which a study participant gives a consistent pattern of answers no matter how the researchers phrase the question

applies to measures that combine multiple items

e.g. Diener's five-item subjective well-being scale (each item is intended to measure the same construct)

what are the two statistical devices used to analyze reliability data?

scatterplots

the correlation coefficient

correlation coefficient r

A single number, ranging from –1.0 to 1.0, that indicates the strength and direction of an association between two variables.

slope direction

The upward, downward, or neutral slope of the cluster of data points in a scatterplot.

strength

A description of an association indicating how closely the data points in a scatterplot cluster along a line of best fit drawn through them.
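The r, slope direction, and strength cards fit together: the sign of r gives the direction of the slope, and how close |r| is to 1 reflects how tightly the points cluster around the line. A minimal sketch with hypothetical paired scores; `pearson_r` and `describe` are illustrative helpers, not a library API:

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation: covariance scaled by both standard deviations."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def describe(r: float) -> str:
    """Direction is the sign of r; strength is how close |r| is to 1."""
    if r > 0:
        direction = "positive (upward slope)"
    elif r < 0:
        direction = "negative (downward slope)"
    else:
        direction = "zero (no slope)"
    return f"r = {r:+.2f}: {direction}, strength |r| = {abs(r):.2f}"


# Hypothetical paired scores for five people.
x = [1, 2, 3, 4, 5]
print(describe(pearson_r(x, [2, 4, 6, 8, 10])))  # points fall exactly on an upward line
print(describe(pearson_r(x, [2, 1, 4, 3, 5])))   # upward, but points scatter around the line
print(describe(pearson_r(x, [10, 8, 6, 4, 2])))  # points fall exactly on a downward line
```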

average inter-item correlation

A measure of internal reliability for a set of items; it is the mean of all possible correlations computed between each item and the others.
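Following the definition literally: correlate every pair of items, then take the mean. A minimal sketch with hypothetical 1-5 ratings on a three-item scale; the function names are illustrative:

```python
import math
from itertools import combinations
from typing import List, Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def average_inter_item_correlation(items: List[List[float]]) -> float:
    """Mean of the Pearson r for every pair of items.

    `items` holds one list per item; position i in every list is the
    same respondent.
    """
    if len(items) < 2:
        raise ValueError("need at least two items")
    rs = [pearson_r(a, b) for a, b in combinations(items, 2)]
    return sum(rs) / len(rs)


# Hypothetical 1-5 ratings from five respondents on a three-item scale.
item1 = [1, 2, 3, 4, 5]
item2 = [2, 1, 4, 3, 5]
item3 = [1, 3, 2, 5, 4]

avg_r = average_inter_item_correlation([item1, item2, item3])
print(f"average inter-item r = {avg_r:.2f}")
```

With three items there are three pairs; the item pairs here correlate at 0.8, 0.8, and 0.3, so the average is their mean.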

Cronbach's alpha/coefficient alpha

A correlation-based statistic that measures a scale's internal reliability.
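A common way to compute alpha from raw scores is alpha = k/(k-1) * (1 - sum of item variances / variance of total scores), where k is the number of items. A minimal sketch under that formula with hypothetical ratings; `cronbach_alpha` is an illustrative name, not a standard-library function:

```python
from statistics import variance
from typing import List


def cronbach_alpha(items: List[List[float]]) -> float:
    """Coefficient alpha from raw item scores.

    `items` holds one list per item; position i in every list is the same
    respondent. Uses alpha = k/(k-1) * (1 - sum(item variances) / variance(totals)).
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Each respondent's total score across all k items.
    totals = [sum(scores) for scores in zip(*items)]
    item_var_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


# Hypothetical 1-5 ratings from five respondents on a three-item scale.
item1 = [1, 2, 3, 4, 5]
item2 = [2, 1, 4, 3, 5]
item3 = [1, 3, 2, 5, 4]

alpha = cronbach_alpha([item1, item2, item3])
print(f"alpha = {alpha:.2f}")
```

When items hang together, respondents' totals vary much more than the individual items do, which pushes alpha toward 1; against the 0.70 benchmark listed in this set, this hypothetical scale would count as internally consistent.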

what are the two steps in establishing construct validity?

measurement reliability

measurement validity

when is construct validity especially important?

when a construct is not directly observable

what do face and content validity establish?

that the operationalizations are consistent with the conceptual definition

what is the difference between validity and reliability?

validity has to do with how well a measure is associated with something else, whereas reliability has to do with how well a measure correlates with ITSELF

face validity

The extent to which a measure is subjectively considered a plausible operationalization of the conceptual variable in question.

criterion validity

An empirical form of measurement validity that establishes the extent to which a measure is associated with a behavioral outcome with which it should be associated.

known-groups paradigm

A method for establishing criterion validity, in which a researcher tests two or more groups who are known to differ on the variable of interest, to ensure that they score differently on a measure of that variable.

convergent validity

An empirical test of the extent to which a self-report measure correlates with other measures of a theoretically similar construct. See also discriminant validity.

discriminant validity

An empirical test of the extent to which a self-report measure does not correlate strongly with measures of theoretically dissimilar constructs. Also called divergent validity. See also convergent validity.
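Convergent and discriminant validity are usually checked together: the new measure should correlate strongly with a measure of a similar construct and only weakly with a measure of a dissimilar one. A minimal sketch with entirely hypothetical scores; the variable names are illustrative:

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical scores for the same five people on three measures.
new_scale = [1, 2, 3, 4, 5]           # self-report measure being validated
similar_measure = [2, 1, 4, 3, 5]     # theoretically similar construct
dissimilar_measure = [2, 4, 5, 1, 3]  # theoretically dissimilar construct

r_convergent = pearson_r(new_scale, similar_measure)
r_discriminant = pearson_r(new_scale, dissimilar_measure)
print(f"convergent r = {r_convergent:.2f}, discriminant r = {r_discriminant:.2f}")
```

A strong convergent r alongside a near-zero discriminant r is the pattern that supports construct validity; real studies would use far more participants than five.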

content validity

The extent to which a measure captures all parts of a defined construct.

what graphs are best for interval or ratio scale variables?

boxplots, histograms

kernel smoothing

a statistical technique used to estimate a real-valued function as the weighted average of neighboring observed data points (it smooths out noise in the data)

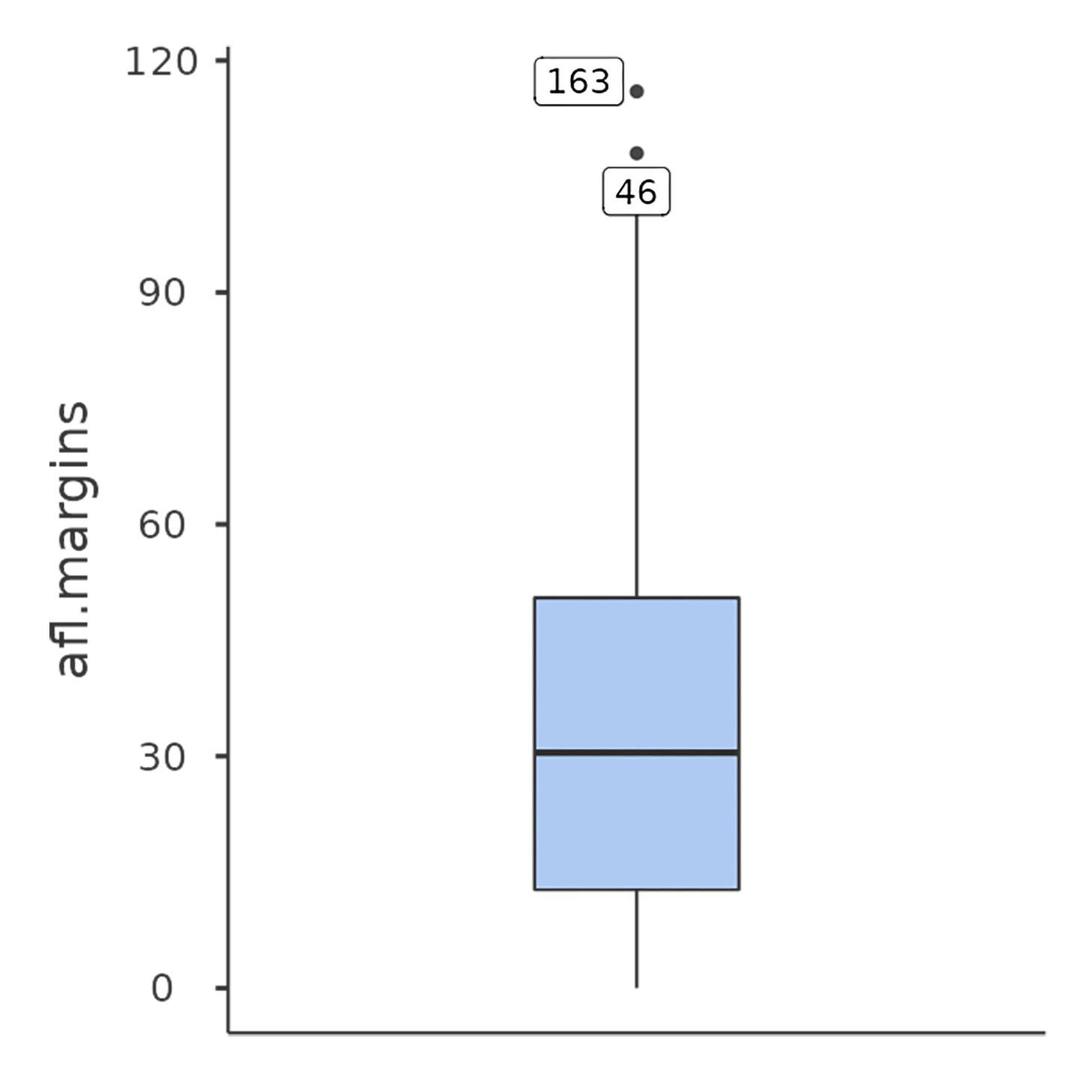
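A minimal sketch of the "weighted average of neighboring points" idea, assuming a Gaussian kernel (the Nadaraya-Watson estimator, one common choice among several). The data and the `kernel_smooth` helper are hypothetical:

```python
import math
from typing import Sequence


def kernel_smooth(xs: Sequence[float], ys: Sequence[float],
                  x0: float, bandwidth: float) -> float:
    """Estimate the function at x0 as a weighted average of the observed ys.

    Weights come from a Gaussian kernel, so observations whose x is close
    to x0 count more than distant ones (Nadaraya-Watson estimator).
    """
    if bandwidth <= 0:
        raise ValueError("bandwidth must be positive")
    weights = [math.exp(-0.5 * ((x - x0) / bandwidth) ** 2) for x in xs]
    total = sum(weights)
    if total == 0:
        raise ValueError("no observations near x0 for this bandwidth")
    return sum(w * y for w, y in zip(weights, ys)) / total


# Hypothetical noisy observations.
xs = [0, 1, 2, 3, 4, 5, 6]
ys = [1.0, 2.4, 1.8, 3.9, 3.1, 5.2, 4.6]

# Evaluate the smoothed curve on a grid from 0.0 to 6.0 in steps of 0.5.
grid = [i / 2 for i in range(13)]
smoothed = [kernel_smooth(xs, ys, g, bandwidth=1.0) for g in grid]
```

The bandwidth controls how many neighbors effectively contribute: a larger bandwidth averages over more points and gives a smoother curve, while a very small one just reproduces the observed values.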

boxplot/box and whiskers plot

provides a depiction of the median, the interquartile range, and the range of the data

popular in the exploratory stage of data analysis
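The numbers a boxplot draws can be computed directly with Python's standard `statistics` module. A minimal sketch with hypothetical well-being scores; note that software packages differ in how they compute quartiles (`statistics.quantiles` defaults to its "exclusive" method), so Q1 and Q3 may not match every plotting tool exactly:

```python
from statistics import median, quantiles

# Hypothetical well-being scores for nine participants.
scores = [22, 12, 27, 15, 31, 17, 20, 18, 25]

# Three cut points that split the sorted data into quarters.
q1, q2, q3 = quantiles(scores, n=4)

summary = {
    "min": min(scores),
    "Q1": q1,
    "median": median(scores),
    "Q3": q3,
    "max": max(scores),
}
iqr = q3 - q1                            # height of the box
data_range = max(scores) - min(scores)   # full spread of the data

print(summary, "IQR:", iqr, "range:", data_range)
```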

violin plot

similar to boxplots, except they also show the kernel probability density of the data
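The extra information in a violin plot is a kernel density estimate: an average of small bell curves centered on each data point. A minimal sketch assuming a Gaussian kernel and a hand-picked bandwidth; `gaussian_kde` here is a hypothetical helper, not SciPy's class of the same name:

```python
import math
from typing import Sequence


def gaussian_kde(data: Sequence[float], x: float, bandwidth: float) -> float:
    """Kernel density estimate at x: average of Gaussian bumps centered on each point."""
    if bandwidth <= 0:
        raise ValueError("bandwidth must be positive")
    n = len(data)
    norm = 1.0 / (n * bandwidth * math.sqrt(2 * math.pi))
    return norm * sum(math.exp(-0.5 * ((x - d) / bandwidth) ** 2) for d in data)


# Hypothetical scores, evaluated on a grid from 10.0 to 32.0 in steps of 0.5.
scores = [12, 15, 17, 18, 20, 22, 25, 27, 31]
grid = [10 + 0.5 * i for i in range(45)]
density = [gaussian_kde(scores, g, bandwidth=3.0) for g in grid]

# A violin plot draws this density curve mirrored on both sides of a vertical axis,
# often with a small boxplot drawn inside it.
```

Because each bump integrates to 1 and they are averaged, the whole curve integrates to 1, which is what makes it a probability density.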

what is the y variable?

the variable that happens second

what I want to understand better

what is the x variable?

the variable we think occurs first

variables that may contribute to our understanding of the variable on the other axis

what variables are on the y axis?

dependent variable

outcome

criterion

response variable

what variables are on the x axis?

independent variable

predictor

manipulated variable

what is construct validity?

the extent to which a measurement actually measures the intended construct

it can be assessed in two subjective ways (face and content validity) and three empirical ways (convergent, discriminant, and criterion validity)

split-half reliability

assesses the extent to which all parts of a test contribute equally to the measure of a concept or skill. It involves dividing a test into two equal halves, administering both halves to the same group, and then correlating the scores of the two halves
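A minimal sketch of the split-half procedure, assuming the test is split into odd- and even-numbered items (one common choice; any split into equal halves works the same way). The responses and helper names are hypothetical:

```python
import math
from typing import List, Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical responses: one row per respondent, one column per item (4 items).
responses: List[List[int]] = [
    [1, 1, 2, 1],
    [2, 3, 2, 2],
    [3, 2, 4, 3],
    [4, 4, 4, 5],
    [5, 5, 5, 4],
]

# Score each half for every respondent: items 1, 3, ... vs items 2, 4, ...
odd_half = [sum(row[0::2]) for row in responses]
even_half = [sum(row[1::2]) for row in responses]

# Split-half reliability: correlate the two half scores.
split_half_r = pearson_r(odd_half, even_half)
print(f"split-half r = {split_half_r:.2f}")
```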

Cronbach's alpha

a measure of internal consistency reliability for tests and surveys, indicating how closely related a set of items are as a group

conceptually, the average of all possible split-half reliabilities; used for interval/ratio-scale measures

what is the benchmark for Cronbach's alpha?

α > 0.70