Linear Algebra - Chapter 6


33 Terms

What is an inner product in ℝⁿ?

The inner product of vectors u and v is u·v = u₁v₁ + u₂v₂ + … + uₙvₙ.

What does it mean for two vectors to be orthogonal?

They are orthogonal if their dot product is zero: u·v = 0.

What is the norm / length of a vector v?

‖v‖ = sqrt(v·v) = sqrt(v₁² + v₂² + … + vₙ²).

What is the distance between vectors u and v?

distance(u, v) = ‖u − v‖ = sqrt((u₁ − v₁)² + (u₂ − v₂)² + … + (uₙ − vₙ)²).
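
The inner product, norm, and distance formulas above can be sketched in a few lines of plain Python. The helper names `dot`, `norm`, and `distance` and the sample vectors are illustrative choices, not part of the original cards.

```python
import math

def dot(u, v):
    """Inner product u·v = u1*v1 + ... + un*vn."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(ui * vi for ui, vi in zip(u, v))

def norm(v):
    """Length of v: sqrt(v·v)."""
    return math.sqrt(dot(v, v))

def distance(u, v):
    """Distance between u and v: the norm of u - v."""
    return norm([ui - vi for ui, vi in zip(u, v)])

# Sample vectors (assumed for illustration)
u = [3.0, 4.0]
v = [-4.0, 3.0]

print(dot(u, v))       # 0.0, so u and v are orthogonal
print(norm(u))         # 5.0
print(distance(u, v))  # sqrt(7^2 + 1^2) = sqrt(50)
```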

What is an orthogonal set?

A set {v₁, …, vₖ} where vᵢ·vⱼ = 0 for all i ≠ j.

What is an orthonormal set?

A set of vectors that are orthogonal and each has norm 1.

What is an orthonormal basis?

A basis whose vectors are orthonormal.

What is the projection of v onto a non-unit vector u?

projᵤ(v) = [(v·u) / (u·u)]u.

How do you compute proj_W(v) when W has an orthonormal basis {u₁, …, uₖ}?

Sum the projections of v onto each basis vector: proj_W(v) = (v·u₁)u₁ + (v·u₂)u₂ + … + (v·uₖ)uₖ.
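
As a rough sketch of the two projection formulas, the following plain-Python helpers project onto a single nonzero vector and onto a subspace given by an orthonormal basis. The function names and the example subspace (the xy-plane in ℝ³) are assumptions made for illustration.

```python
def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def proj_onto_vector(v, u):
    """proj_u(v) = [(v·u)/(u·u)] u; u must be nonzero but need not be a unit vector."""
    uu = dot(u, u)
    if uu == 0:
        raise ValueError("cannot project onto the zero vector")
    c = dot(v, u) / uu
    return [c * ui for ui in u]

def proj_onto_subspace(v, orthonormal_basis):
    """Sum of (v·u_i) u_i; only valid if the basis really is orthonormal."""
    result = [0.0] * len(v)
    for u in orthonormal_basis:
        c = dot(v, u)
        result = [r + c * ui for r, ui in zip(result, u)]
    return result

v = [2.0, 3.0, 5.0]
print(proj_onto_vector(v, [2.0, 0.0, 0.0]))  # [2.0, 0.0, 0.0]

# Orthonormal basis for the xy-plane (assumed example subspace)
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
v_hat = proj_onto_subspace(v, W)
print(v_hat)                                 # [2.0, 3.0, 0.0]
```

Note that the u·u denominator disappears in the subspace version because each basis vector has norm 1.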

What is the orthogonal decomposition theorem?

Given a subspace W, every vector y can be written uniquely as y = ŷ + z, where ŷ is the orthogonal projection of y onto W and z = y − ŷ is orthogonal to every vector in W.

What is the best approximation to a vector y by vectors in a subspace W?

ŷ, the orthogonal projection of y onto W. It is the point of W closest to y.

What is W⊥ (the orthogonal complement of W)?

All vectors orthogonal to every vector in W.

What is a least-squares solution to Ax = b?

A vector x̂ that minimizes ‖Ax − b‖.

What is the geometric meaning of Ax̂ in least squares?

Ax̂ is the projection of b onto Col(A).

What is an orthogonal matrix?

A square matrix whose columns form an orthonormal set, so that QᵀQ = I and Q⁻¹ = Qᵀ.

What property do orthogonal matrices preserve?

Lengths and angles: ‖Qv‖ = ‖v‖.
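
A small numerical check can make this concrete. The sketch below uses a 2×2 rotation matrix as an assumed example of an orthogonal matrix (the angle and test vector are arbitrary) and compares ‖Qv‖ with ‖v‖ and QᵀQ with I, up to floating-point rounding.

```python
import math

theta = math.pi / 6  # arbitrary angle, chosen for illustration
Q = [[math.cos(theta), -math.sin(theta)],
     [math.sin(theta),  math.cos(theta)]]

def matvec(M, x):
    """Matrix-vector product Mx."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def norm(x):
    return math.sqrt(sum(xi * xi for xi in x))

def gram(M):
    """Return MᵀM, whose (i, j) entry is column i dotted with column j."""
    cols = list(zip(*M))
    return [[sum(a * b for a, b in zip(ci, cj)) for cj in cols] for ci in cols]

v = [3.0, 4.0]
print(norm(v), norm(matvec(Q, v)))  # the two lengths agree up to rounding
print(gram(Q))                      # approximately the 2x2 identity
```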

What is the error vector in least squares?

e = b − Ax̂, and e is orthogonal to Col(A).

What are the normal equations?

AᵀA x = Aᵀb.

What are the steps for solving a least-squares problem with the normal equations?

Calculate AᵀA and Aᵀb separately.

Substitute them into AᵀA x̂ = Aᵀb and solve for x̂.

Compute b̂ = Ax̂.

Calculate the error: b − b̂.
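
The four steps can be traced on a small made-up system. The sketch below assumes A has exactly two columns, so that AᵀA is 2×2 and Cramer's rule suffices (a general solver would use Gaussian elimination instead). It uses exact fractions to avoid rounding. The matrix A, the vector b, and all helper names are illustrative assumptions.

```python
from fractions import Fraction

def transpose(M):
    return [list(col) for col in zip(*M)]

def matmul(A, B):
    Bt = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in Bt] for row in A]

def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def solve_2x2(M, r):
    """Solve M x = r for a 2x2 integer matrix M by Cramer's rule."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        raise ValueError("AᵀA is singular: the columns of A are linearly dependent")
    return [Fraction(r[0] * d - b * r[1], det), Fraction(a * r[1] - c * r[0], det)]

# Made-up data for illustration
A = [[1, 1], [1, 2], [1, 3]]
b = [1, 2, 2]

At = transpose(A)
AtA = matmul(At, A)                              # step 1: [[3, 6], [6, 14]]
Atb = matvec(At, b)                              # step 1: [5, 11]
x_hat = solve_2x2(AtA, Atb)                      # step 2: [2/3, 1/2]
b_hat = matvec(A, x_hat)                         # step 3: [7/6, 5/3, 13/6]
error = [bi - bhi for bi, bhi in zip(b, b_hat)]  # step 4: [-1/6, 1/3, -1/6]

# The error is orthogonal to every column of A, so Aᵀ(error) is the zero vector
print(matvec(At, error))
```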

What is a unit vector?

A unit vector is a vector whose length is 1.

What is an orthogonal complement?

The orthogonal complement of a subspace W (within a larger space V) is the set of all vectors in V that are orthogonal to every vector in W.

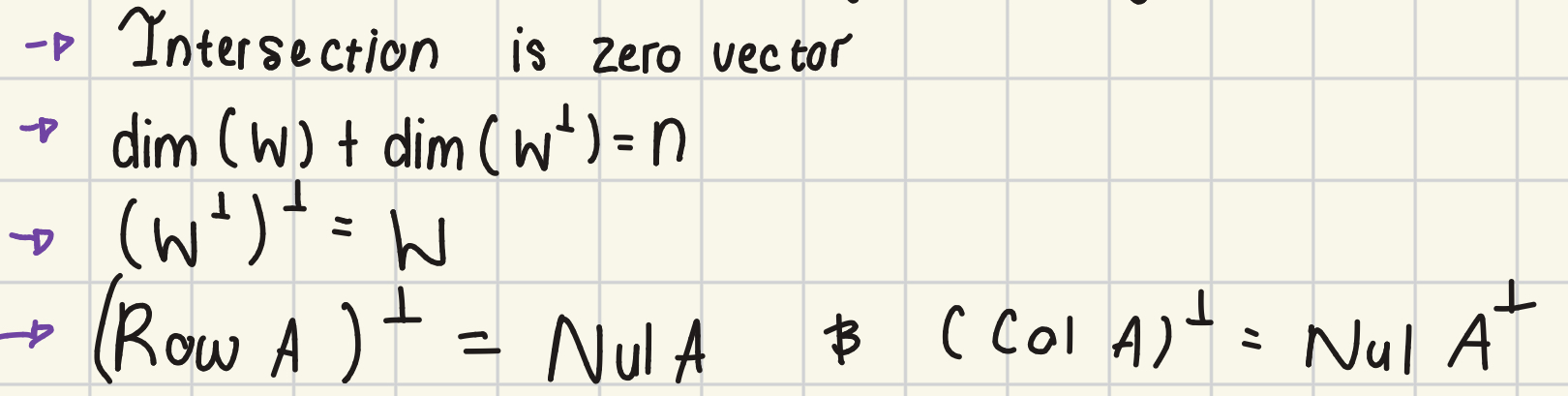

What are the properties of an orthogonal complement?

What is the formula needed to normalise a vector?

(1/‖u‖) u

State the Pythagorean theorem for orthogonal vectors.

Two vectors u and v are orthogonal if and only if ‖u + v‖² = ‖u‖² + ‖v‖².
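
The "if and only if" follows from expanding the left-hand side with the inner product. A short derivation, using only the definition ‖v‖² = v·v:

```latex
\begin{aligned}
\|u + v\|^2 &= (u + v)\cdot(u + v) \\
            &= u\cdot u + 2\,(u\cdot v) + v\cdot v \\
            &= \|u\|^2 + \|v\|^2 + 2\,(u\cdot v).
\end{aligned}
```

The extra term 2(u·v) vanishes exactly when u·v = 0, that is, when u and v are orthogonal.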

State the orthogonal complement properties for the row space and column space of a matrix A.

(Row A)⊥ = Nul A and (Col A)⊥ = Nul Aᵀ.

What is the Gram-Schmidt process used for?

To take a set of linearly independent vectors and transform it into an orthogonal set that spans the same space

What are the steps of the Gram-Schmidt process?

Set v₁ equal to the first basis vector x₁. For each subsequent basis vector xₖ, compute vₖ by subtracting from xₖ its projections onto each of the previously computed vectors v₁, …, vₖ₋₁. Finally, normalise each vᵢ if an orthonormal set is required.
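
One way to sketch these steps in plain Python is shown below. This is the classical form of the process. The function name, the `normalize` flag, the `1e-12` dependence tolerance, and the sample vectors are all assumptions made for illustration.

```python
import math

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def gram_schmidt(vectors, normalize=True):
    """Turn linearly independent vectors into an orthogonal (or orthonormal) set."""
    basis = []
    for x in vectors:
        v = list(x)
        # Subtract the projection of x onto each previously computed vector
        for b in basis:
            c = dot(x, b) / dot(b, b)
            v = [vi - c * bi for vi, bi in zip(v, b)]
        # Assumed tolerance: a (near-)zero remainder means the inputs were dependent
        if all(abs(vi) < 1e-12 for vi in v):
            raise ValueError("input vectors are linearly dependent")
        basis.append(v)
    if normalize:
        # Final step: scale each vector to length 1
        basis = [[vi / math.sqrt(dot(v, v)) for vi in v] for v in basis]
    return basis

x1 = [3.0, 6.0, 0.0]
x2 = [1.0, 2.0, 2.0]
orthogonal = gram_schmidt([x1, x2], normalize=False)
orthonormal = gram_schmidt([x1, x2])
print(orthogonal)   # second vector is x2 minus its projection onto x1
print(orthonormal)  # same directions, each scaled to length 1
```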

What do X, β, and y represent in the equation Xβ = y?

X is the design matrix, β is the parameter vector containing β₀ and β₁ (for a least-squares line), and y is the observation vector.

How do you construct the design matrix X?

Set the first column to 1's and the second column to the x-coordinates of the data points (for a least-squares line).
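
For a least-squares line y = β₀ + β₁x, the construction might look like the sketch below. The function name and the x-values are made up for illustration.

```python
def design_matrix(xs):
    """One row [1, x_i] per data point, for fitting y = β0 + β1*x."""
    return [[1.0, float(x)] for x in xs]

xs = [2, 5, 7, 8]  # made-up x-coordinates
X = design_matrix(xs)
print(X)  # [[1.0, 2.0], [1.0, 5.0], [1.0, 7.0], [1.0, 8.0]]
```

The column of 1's multiplies the intercept β₀, and the x-column multiplies the slope β₁.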

What is the equation for the residual vector e?

e = y − Xβ

What does the residual vector represent?

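The residual vector is the difference between the observed vector y and the predicted values Xβ. For the least-squares β, Xβ is the projection of y onto the column space of the design matrix, and ‖e‖ is the distance from y to that column space.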

What is the linear model equation?

y = Xβ + e

What is the linear model normal equation?

XᵀXβ = Xᵀy