Session 3: Epidemiological Methods (Surveys)

41 Terms

What is a survey?

A descriptive technique for obtaining the self-reported attitudes or behaviors of a particular group, usually by questioning a representative, random sample of the group

Generalizability of results depends on...

The extent to which the surveyed population (sample) is representative of the population of interest

Some examples of health-related surveys

1) Face-to-face structured interview & measurements

2) Self-completed questionnaire on non-motor symptoms of Parkinsonism

3) ONS Coronavirus Prevalence Survey: self-swab PCR

4) Child Development Supplement: parent-reported weight/height

The purpose of surveys

- Assess prevalence of disease (cross-sectional survey)

- Measure risk/protective factors of respondents

- Measure outcomes

- Ad-hoc data collection = collect information of interest
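For the first purpose, point prevalence is the proportion of respondents who have the condition at the time of the survey. A minimal Python sketch; the function name and the figures are hypothetical:

```python
def point_prevalence(cases, surveyed):
    """Proportion of surveyed people who have the condition at the time of the survey."""
    if surveyed <= 0:
        raise ValueError("surveyed must be positive")
    if not 0 <= cases <= surveyed:
        raise ValueError("cases must be between 0 and surveyed")
    return cases / surveyed

# Hypothetical survey: 150 of 2,000 respondents have the condition
prevalence = point_prevalence(150, 2000)
print(f"Prevalence: {prevalence:.1%}")  # Prevalence: 7.5%
```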

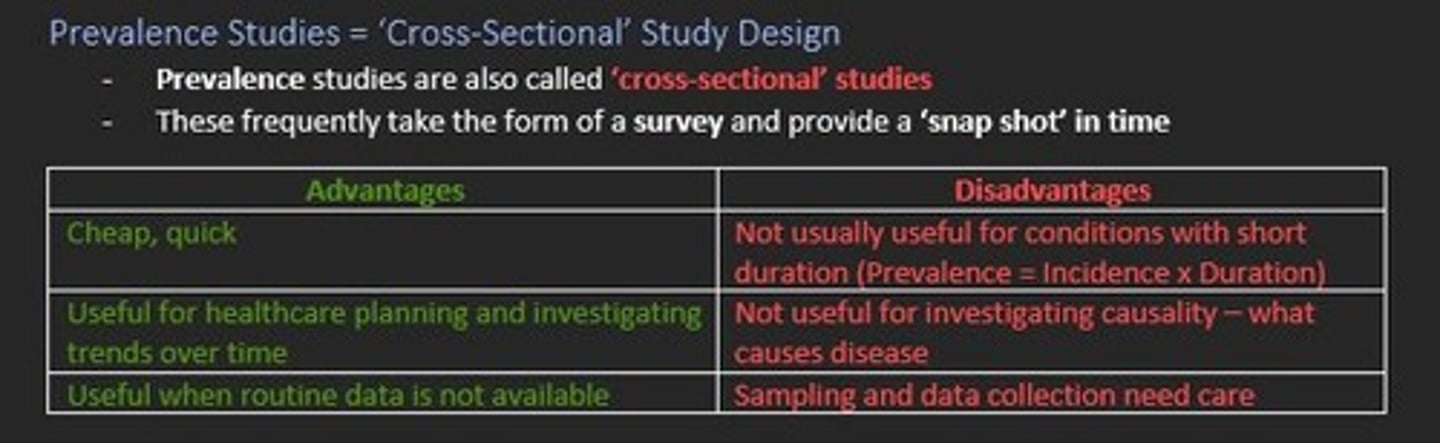

Prevalence studies are also known as...

Cross-sectional study design

Prevalence (cross-sectional) study design pros/cons

Advantages

- Cheap & quick

- Useful for healthcare planning & investigating trends over time

- Useful when routine data are not available

Disadvantages

- Not useful for conditions with short duration (few cases are present at any one time)

- Not useful for investigating causality (exposure and outcome are measured at the same time)

- Sampling & data collection need care

Population

The larger group of individuals in whom we are interested and to whom we wish to apply the results of a survey/study

Sample

A smaller study group taken from the larger population

We cannot survey a whole population (too many people), so we must take a ___ and make ___ from the findings of that sample about the population

We cannot survey a whole population (too many people), so we must take a sample and make inferences from the findings of that sample about the population

A sample must be ___ of the population

Representative

Sampling frame

A list of individuals from whom the sample is drawn

E.g., GP practice list, electoral register, school register, employee register

The ______ the sample size, the more precise the survey estimate.

Larger
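Why larger samples help: the standard error of an estimated proportion p is sqrt(p(1 - p)/n), so quadrupling n halves it and narrows the confidence interval. This reduces random error only; it does not remove bias. A minimal sketch using the approximate (Wald) 95% interval and a hypothetical prevalence of 20%:

```python
import math

def standard_error_proportion(p, n):
    """Standard error of a sample proportion p estimated from n respondents."""
    return math.sqrt(p * (1 - p) / n)

def wald_ci95(p, n):
    """Approximate 95% confidence interval: p +/- 1.96 * SE."""
    se = standard_error_proportion(p, n)
    return p - 1.96 * se, p + 1.96 * se

# Same hypothetical prevalence (20%) estimated from increasingly large samples
for n in (100, 400, 1600):
    low, high = wald_ci95(0.2, n)
    print(f"n={n:5d}: 95% CI {low:.3f} to {high:.3f}")
```

Each fourfold increase in n halves the interval's half-width (about 0.078, then 0.039, then 0.020).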

Sampling methods

1) Random (probability sampling)

Everyone in the sampling frame has a known (usually equal) probability of being chosen; this is important for achieving a representative sample

2) Non-random (non-probability sampling)

Easier & more convenient

Unlikely to be representative = beware of self-selecting samples

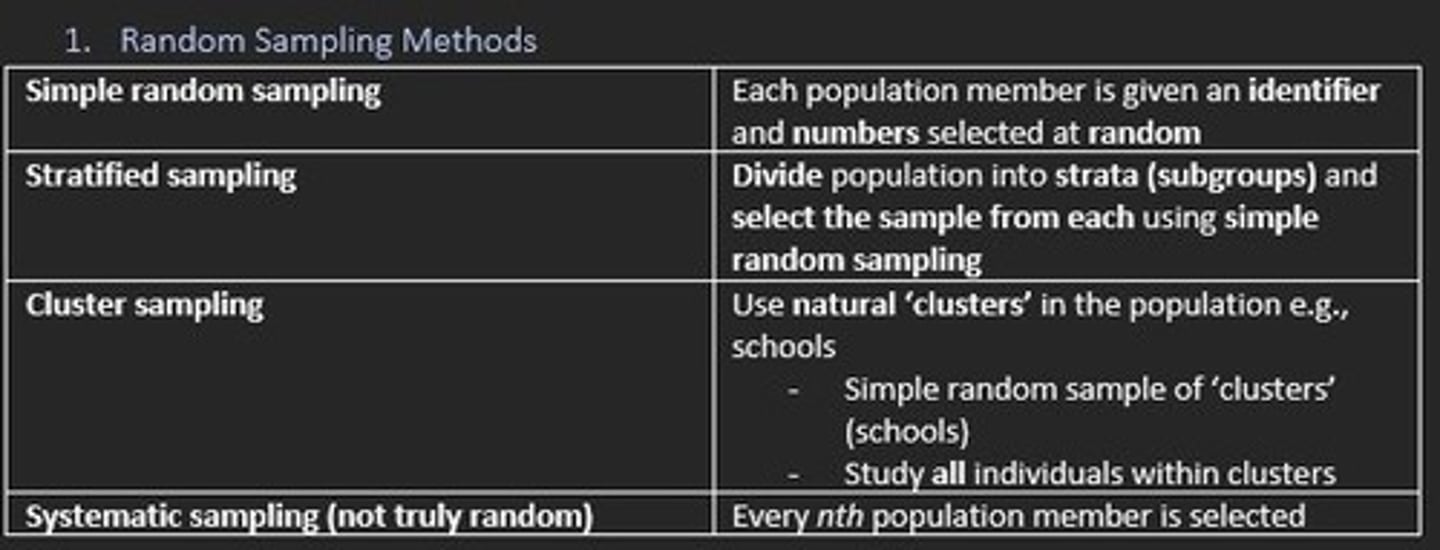

Four random sampling methods

1) Simple random sample

2) Stratified sample

3) Cluster sample

4) Systematic sampling (not truly random)

Simple random sample

Every member of the population has a known and equal chance of selection (each member given identifier and numbers selected at random)
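A minimal sketch of a simple random sample in Python, assuming the sampling frame is a list of identifiers (the identifiers are hypothetical). `random.Random(seed).sample` draws without replacement, so every member has the same chance of selection:

```python
import random

def simple_random_sample(sampling_frame, n, seed=None):
    """Select n members so that everyone in the sampling frame has an equal chance."""
    rng = random.Random(seed)             # seeded generator so the draw can be reproduced
    return rng.sample(sampling_frame, n)  # sampling without replacement

# Hypothetical sampling frame: 1,000 patient identifiers from a GP practice list
frame = [f"patient_{i:04d}" for i in range(1000)]
sample = simple_random_sample(frame, 50, seed=1)
print(len(sample), sample[:3])
```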

Stratified sampling

Divide the population into subgroups (strata), e.g., by age group or sex, and select a sample from each stratum using simple random sampling
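A sketch of stratified sampling, assuming proportional allocation (the same sampling fraction in every stratum); other allocations are possible. The frame and the age-group strata are hypothetical:

```python
import random
from collections import Counter, defaultdict

def stratified_sample(frame, stratum_of, fraction, seed=None):
    """Group the frame into strata, then draw a simple random sample within each stratum."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for person in frame:
        strata[stratum_of(person)].append(person)
    sample = []
    for members in strata.values():
        k = round(len(members) * fraction)  # proportional allocation (an assumption)
        sample.extend(rng.sample(members, k))
    return sample

# Hypothetical frame of (id, age group): 600 people under 40, 300 aged 40 and over
frame = [(i, "under 40" if i % 3 else "40 and over") for i in range(900)]
sample = stratified_sample(frame, stratum_of=lambda person: person[1], fraction=0.1, seed=42)
print(Counter(group for _, group in sample))
```

Each stratum contributes in proportion to its size, so the sample's age mix matches the frame's by design rather than by chance.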

Cluster sampling

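Use 'natural clusters' in the population (e.g., schools): randomly select clusters, then survey all or a random sample of the members within the chosen clusters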
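A sketch of one-stage cluster sampling, assuming whole clusters are picked at random and everyone in a chosen cluster is included (a two-stage design would then sample within each cluster). The schools and pupils are hypothetical:

```python
import random

def cluster_sample(clusters, n_clusters, seed=None):
    """One-stage cluster sample: randomly pick whole clusters, then include all their members."""
    rng = random.Random(seed)
    # Sort the cluster names so the same seed gives the same choice regardless of dict order
    chosen = rng.sample(sorted(clusters), n_clusters)
    return {name: clusters[name] for name in chosen}

# Hypothetical clusters: schools and their pupils
schools = {
    "School A": ["a1", "a2", "a3"],
    "School B": ["b1", "b2"],
    "School C": ["c1", "c2", "c3", "c4"],
    "School D": ["d1"],
}
selected = cluster_sample(schools, n_clusters=2, seed=7)
pupils = [pupil for members in selected.values() for pupil in members]
print(sorted(selected), pupils)
```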

Systematic sampling (not truly random)

Every nth member of the sampling frame is selected, starting from a randomly chosen point
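A sketch of systematic sampling, assuming a random start within the first interval and an interval k = N // n. If the list has a repeating pattern that lines up with k, the sample can be biased, which is one reason it is not truly random:

```python
import random

def systematic_sample(sampling_frame, n, seed=None):
    """Take every k-th member of the frame after a random start, where k = N // n."""
    k = len(sampling_frame) // n  # sampling interval
    if k < 1:
        raise ValueError("sample size cannot exceed the size of the sampling frame")
    start = random.Random(seed).randrange(k)  # random start within the first interval
    return sampling_frame[start::k][:n]       # step through the list by k; trim to exactly n

# Hypothetical frame of 1,000 numbered records; a sample of 50 gives k = 20
frame = list(range(1000))
sample = systematic_sample(frame, 50, seed=3)
print(sample[:5])
```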

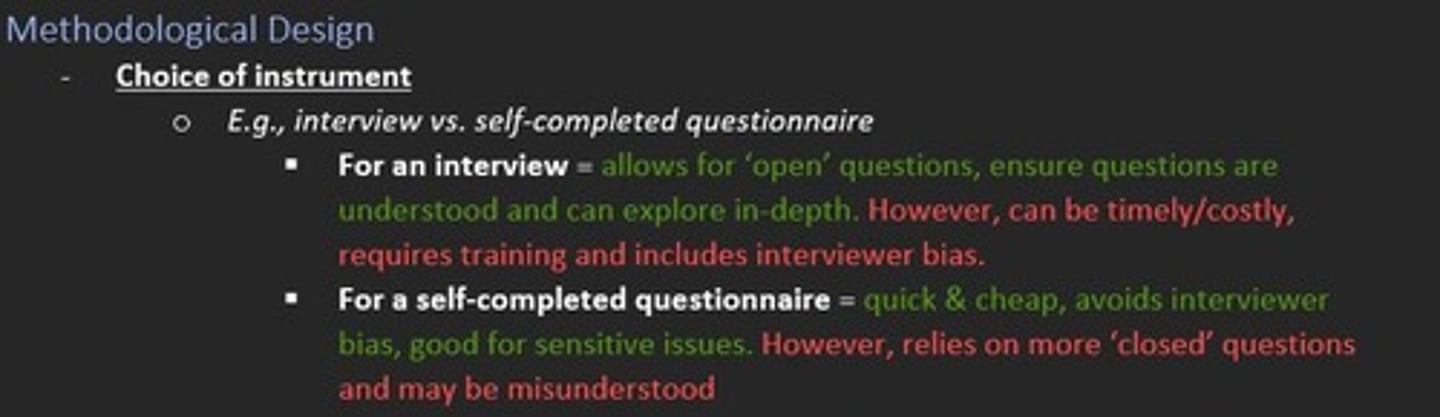

Methodological design can be influenced by two factors - what are they?

1) Choice of instrument e.g., interview vs. self-reported questionnaire

2) Quality control = standardisation of instruments, training of the interviewers

What are the pros and cons of using an INTERVIEW as the choice of instrument?

Pros

- Allows for open questions

- Ensures questions are understood

- Can explore questions in depth

Cons

- Can be time-consuming/costly

- Requires training

- Risk of interviewer bias

What are the pros and cons of using a SELF-COMPLETED QUESTIONNAIRE as the choice of instrument?

Pros

- Quick & cheap

- Avoids interviewer bias

- Good for sensitive issues

Cons

- Relies more on closed questions; no one is present to clarify, so questions risk being misunderstood

Name some examples of how quality control of methodological design is maintained

1) Standardisation of instruments used

2) Training for interviewers/observers

3) Structured questionnaires

Name some ways in which questionnaire design of a study (survey) can be strengthened

1) Avoid crowding questions

2) Order questions appropriately (sensitive questions later)

3) Avoid leading questions

4) Avoid ambiguity (e.g., double-barrelled questions that ask about two things at once)

5) Search for validated measures in literature

Avoiding instrument bias - how can we do this?

By assessing or measuring how well the test, instrument or question performs over time, in different settings and with different groups of subjects

Two key components of measurement of instrument performance are...

1) Validity

2) Repeatability

Validity

How well the test measures what it is supposed to measure (capacity of test to give the true result)
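Validity is commonly quantified by comparing the instrument with a reference ("gold") standard. Sensitivity and specificity, which this card does not name, are two such measures. A sketch with a hypothetical 2x2 table for a screening questionnaire against clinical diagnosis:

```python
def sensitivity_specificity(tp, fn, fp, tn):
    """Validity of a test compared with a gold standard.

    tp / fn: people WITH the condition who test positive / negative
    fp / tn: people WITHOUT the condition who test positive / negative
    """
    sensitivity = tp / (tp + fn)  # proportion of true cases the test picks up
    specificity = tn / (tn + fp)  # proportion of non-cases the test correctly rules out
    return sensitivity, specificity

# Hypothetical counts: 100 true cases and 900 non-cases
sens, spec = sensitivity_specificity(tp=90, fn=10, fp=45, tn=855)
print(f"Sensitivity {sens:.0%}, specificity {spec:.0%}")  # Sensitivity 90%, specificity 95%
```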

Repeatability (reliability, reproducibility)

Degree to which a measurement made on one occasion agrees with the same measurement on a subsequent occasion
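For categorical answers, one common way to quantify repeatability (an assumption here; the card does not name a method) is Cohen's kappa: the agreement between two occasions beyond what chance alone would produce. A sketch with hypothetical yes/no answers from 10 respondents:

```python
from collections import Counter

def cohens_kappa(first, second):
    """Cohen's kappa for the same categorical question asked on two occasions."""
    if len(first) != len(second) or not first:
        raise ValueError("need two non-empty answer lists of equal length")
    n = len(first)
    observed = sum(a == b for a, b in zip(first, second)) / n  # proportion agreeing
    counts_1, counts_2 = Counter(first), Counter(second)
    # Agreement expected by chance, from each occasion's category proportions
    expected = sum(counts_1[c] * counts_2[c] for c in counts_1) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when every answer is the same category")
    return (observed - expected) / (1 - expected)

# Hypothetical answers from the same 10 respondents, asked twice
occasion_1 = ["yes", "yes", "no", "no", "yes", "no", "yes", "yes", "no", "no"]
occasion_2 = ["yes", "yes", "no", "yes", "yes", "no", "yes", "no", "no", "no"]
print(f"kappa = {cohens_kappa(occasion_1, occasion_2):.2f}")  # kappa = 0.60
```

The answers agree for 8 of 10 respondents, but half of that agreement would be expected by chance, so kappa is (0.8 - 0.5) / (1 - 0.5) = 0.6.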

Give examples of two types of error that occur in estimating the true effect

1) Random error = random imprecision or variable performance due to chance alone

2) Systematic error = consistent error in sampling or measurement that pushes results in one direction, i.e., bias

Selection bias

Errors in the selection and placement of subjects into groups that result in differences between groups which could affect the results of an experiment.

Differences in how subjects are selected, leading to differences in characteristics between groups.

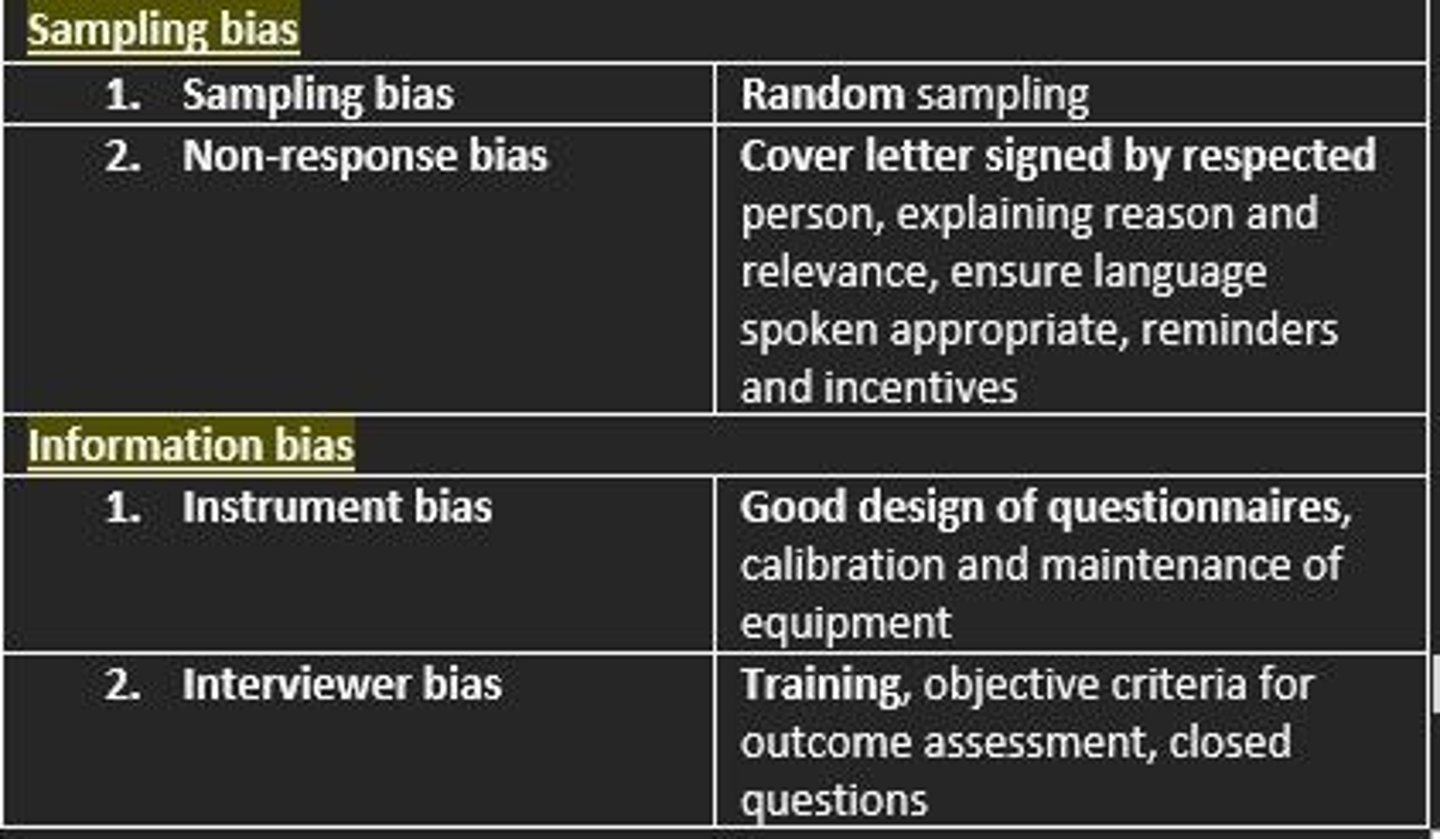

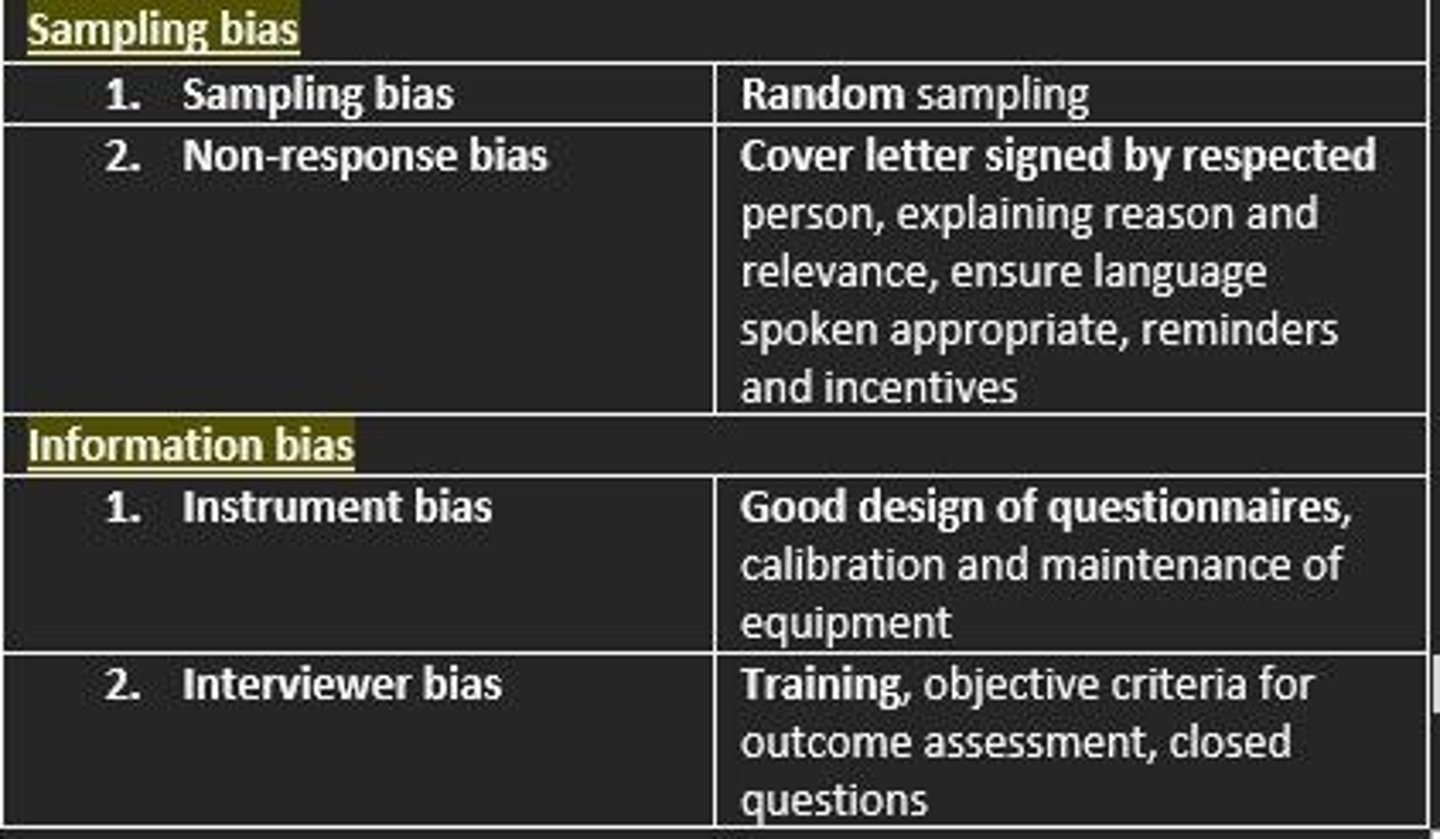

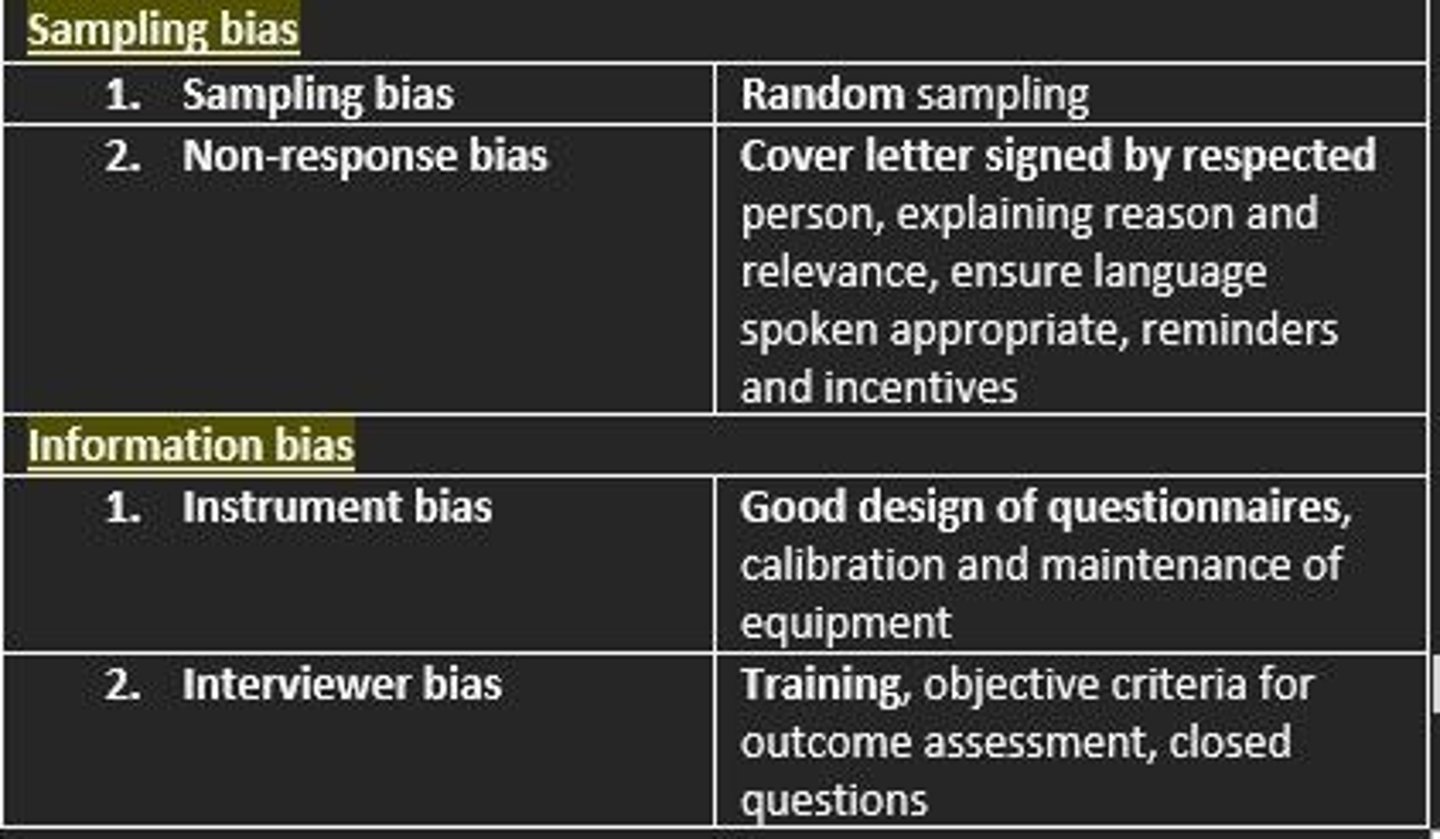

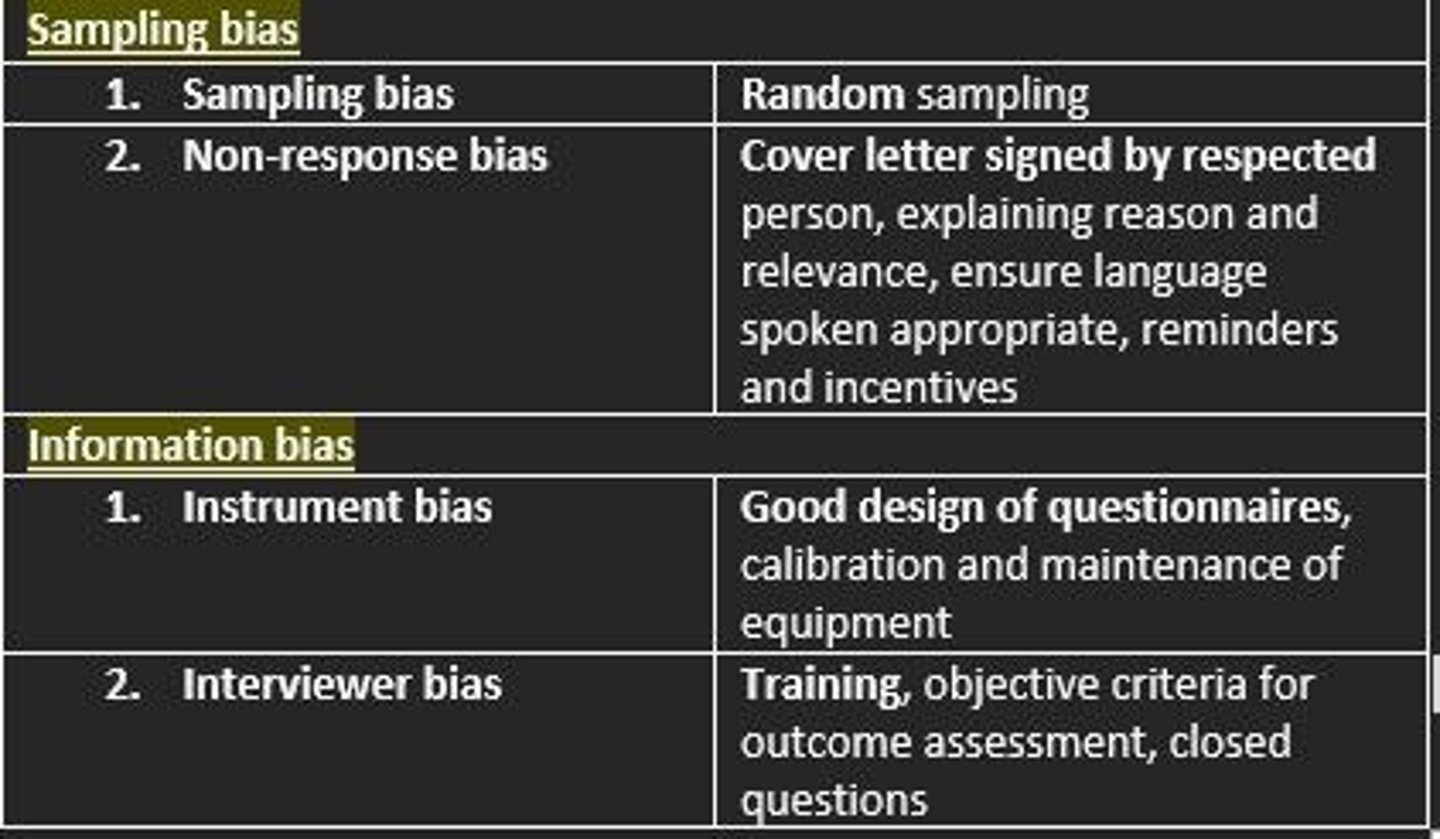

Two different TYPES of selection bias

Sampling bias = non-representative sampling

Non-response bias = respondents differ from non-respondents

Information (measurement) bias

Error due to systematic differences in measurement or classification of individuals in the groups being studied.

Two different TYPES of information (measurement) bias

1) Instrument bias = systematic error due to inadequate design, calibration or maintenance of instruments

2) Inter-observer bias = systematic error between measurements made by different interviewers, e.g., due to differences in their training

Measurement errors can be due to issues in ___ and ___

Measurement errors can be due to issues in precision and accuracy

Precision

How close repeated measurements are to each other. If there is lots of variation (random error), precision is poor.

If there is little random variation - measures are precise.

Accuracy

How close the average measurement is to the true value.

Poor accuracy is the result of systematic error (bias).
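A small simulation linking random error to precision and systematic error to accuracy. The three "scales", the true weight of 70 kg and the error sizes are all hypothetical:

```python
import random
import statistics

def simulate_readings(true_value, bias, noise_sd, n, seed=0):
    """Simulate n readings: true value + systematic error (bias) + random error (noise)."""
    rng = random.Random(seed)
    return [true_value + bias + rng.gauss(0, noise_sd) for _ in range(n)]

TRUE_WEIGHT = 70.0  # hypothetical true weight in kg

readings_by_scale = {
    "precise, accurate": simulate_readings(TRUE_WEIGHT, bias=0.0, noise_sd=0.2, n=1000),
    "imprecise, accurate": simulate_readings(TRUE_WEIGHT, bias=0.0, noise_sd=3.0, n=1000),
    "precise, inaccurate": simulate_readings(TRUE_WEIGHT, bias=2.5, noise_sd=0.2, n=1000),
}

for label, readings in readings_by_scale.items():
    mean = statistics.mean(readings)  # offset of the mean from the truth reflects accuracy
    sd = statistics.stdev(readings)   # spread of the readings reflects precision
    print(f"{label:<20} mean {mean:.2f} kg (offset {mean - TRUE_WEIGHT:+.2f}), SD {sd:.2f}")
```

Taking more readings narrows the uncertainty around the mean, but the biased scale's mean stays about 2.5 kg too high however many readings are taken.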

How could we minimise sampling bias in surveys?

Random sampling

How could we minimise non-response bias in surveys?

Cover letter signed by a respected person, explaining the reason for and relevance of the survey.

Ensure the language used is appropriate for the respondents.

Provide reminders and incentives.

How could we minimise instrument bias in surveys?

Good design of questionnaires, calibration and maintenance of equipment.

How could we minimise interviewer bias in surveys?

Training, objective criteria for outcome assessment, closed questions.

Response rate

The percentage of people contacted in a research sample who complete the questionnaire
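A worked example of the response rate calculation, with hypothetical numbers:

```python
def response_rate(completed, contacted):
    """Percentage of people contacted who completed the questionnaire."""
    if contacted <= 0:
        raise ValueError("contacted must be positive")
    return 100 * completed / contacted

# Hypothetical survey: 1,200 people contacted, 780 completed questionnaires returned
print(f"Response rate: {response_rate(780, 1200):.1f}%")  # Response rate: 65.0%
```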

How can we check for non-response bias?

Compare the respondents' characteristics with the non-respondents' characteristics
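A sketch of that comparison, assuming some characteristics (here age and sex, with hypothetical field names) are known for everyone in the sampling frame, e.g., from a GP practice list:

```python
import statistics

def compare_groups(frame, responded_ids):
    """Summarise characteristics known for everyone in the frame, split by response status."""
    groups = {"respondents": [], "non-respondents": []}
    for person in frame:
        key = "respondents" if person["id"] in responded_ids else "non-respondents"
        groups[key].append(person)
    summary = {}
    for name, people in groups.items():
        if not people:  # skip an empty group rather than divide by zero
            continue
        summary[name] = {
            "n": len(people),
            "mean_age": statistics.mean(p["age"] for p in people),
            "pct_female": 100 * sum(p["sex"] == "F" for p in people) / len(people),
        }
    return summary

# Hypothetical frame with age and sex recorded for everyone
frame = [
    {"id": 1, "age": 72, "sex": "F"},
    {"id": 2, "age": 68, "sex": "F"},
    {"id": 3, "age": 25, "sex": "M"},
    {"id": 4, "age": 31, "sex": "M"},
    {"id": 5, "age": 65, "sex": "M"},
    {"id": 6, "age": 29, "sex": "F"},
]
for group, stats in compare_groups(frame, responded_ids={1, 2, 5}).items():
    print(f"{group:<16} n={stats['n']}  mean age {stats['mean_age']:.1f}  female {stats['pct_female']:.0f}%")
```

In this made-up data the respondents are much older and more often female, a warning that estimates based on respondents alone may be biased.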