Lecture 5: Data Analysis as Workflows, Lecture 6: Parallel Processing, Lecture 7: Distributed Computing


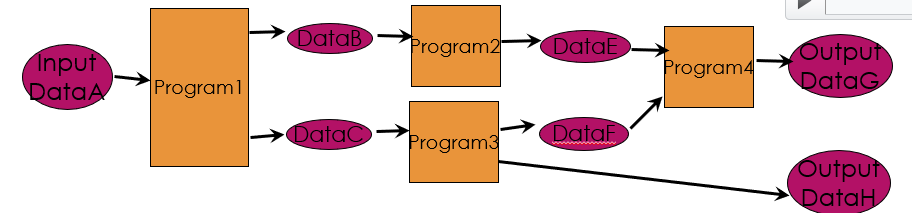

Workflow

Composition of functions

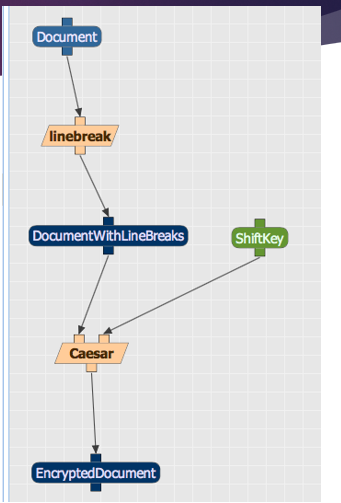

Computational workflows

Composition of programs

No user interaction during execution

No cycles allowed!

Workflow Component

Represents a function (or computation) in the workflow that is implemented as a program with inputs, parameters and outputs
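A minimal sketch of these ideas, assuming three hypothetical components (`load`, `clean`, `summarize`) written as plain Python functions. The dependency graph is run in topological order with the standard-library `graphlib` module (Python 3.9+), which also rejects cycles. The component names and data are illustrative only.

```python
from graphlib import TopologicalSorter

# Hypothetical components: each takes the outputs of its dependencies.
def load(inputs):
    return [3, 1, 2]

def clean(inputs):
    return sorted(inputs["load"])

def summarize(inputs):
    data = inputs["clean"]
    return {"min": data[0], "max": data[-1]}

COMPONENTS = {"load": load, "clean": clean, "summarize": summarize}
# Each component maps to the set of components whose outputs it consumes.
DEPENDS_ON = {"load": set(), "clean": {"load"}, "summarize": {"clean"}}

def run_workflow(components, depends_on):
    """Run every component once, in dependency order, with no user interaction."""
    results = {}
    # static_order() raises graphlib.CycleError if the graph contains a cycle.
    for name in TopologicalSorter(depends_on).static_order():
        inputs = {dep: results[dep] for dep in depends_on[name]}
        results[name] = components[name](inputs)
    return results

results = run_workflow(COMPONENTS, DEPENDS_ON)
print(results["summarize"])  # {'min': 1, 'max': 3}
```

Making `summarize` a dependency of `load` would create a cycle, and `run_workflow` would fail before running anything.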

Electronic Lab Notebooks

A popular alternative: record data, software, results, notes, etc.

Records what code was run when generating a result

Can re-run with new data

Algorithmic Complexity

Linear complexity: An algorithm's execution time grows linearly with the size of the input data

Polynomial complexity: Execution time is bounded by a polynomial expression in the size of the input data (e.g., n³)

Exponential complexity: Execution time is bounded by an exponential expression in the size of the input data (e.g., 2ⁿ)

Algorithmic Complexity: Big “O” Notation

n: size of the input data

Linear complexity: O(n)

Polynomial complexity: O(nᵏ)

Exponential complexity: O(kⁿ)
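To make the three growth rates concrete, here is a small sketch that counts loop iterations rather than measuring time. The function names are made up for illustration: the cubic case stands in for polynomial complexity with k = 3, and the exponential case uses base 2.

```python
def linear_steps(n):
    """One pass over the input: O(n)."""
    return sum(1 for _ in range(n))

def cubic_steps(n):
    """Three nested passes over the input: O(n^3)."""
    return sum(1 for _ in range(n) for _ in range(n) for _ in range(n))

def exponential_steps(n):
    """One step per subset of n items: O(2^n)."""
    return sum(1 for _ in range(2 ** n))

# Doubling n doubles the linear count, multiplies the cubic count by 8,
# and squares the exponential count.
for n in (5, 10, 20):
    print(n, linear_steps(n), cubic_steps(n), exponential_steps(n))
```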

Divide-and-Conquer Strategy

Divide into pieces → solve each piece separately → combine the individual results

*Requires that each piece is independent of the others
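The three steps can be sketched on a toy problem, the sum of squares of a list. The helper names `divide`, `solve`, and `combine` are assumptions chosen to mirror the card. The chunks share no state, so each could be solved on a different processor.

```python
def divide(data, pieces):
    """Split data into roughly equal chunks that do not overlap."""
    size = max(1, -(-len(data) // pieces))  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]

def solve(chunk):
    """Solve one piece on its own; it never looks at other pieces."""
    return sum(x * x for x in chunk)

def combine(partials):
    """Merge the individual results into the final answer."""
    return sum(partials)

data = list(range(1, 11))
chunks = divide(data, 3)
total = combine(solve(chunk) for chunk in chunks)
print(chunks)  # [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10]]
print(total)   # 385
```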

Dependencies and Message Passing

The steps within an algorithm may have significant interdependencies and require exchanging information, and may not be easily parallelizable

Speedup

S = TimeSequential/TimeParallel

Critical Path

Consists of consecutive steps that are interdependent and therefore not parallelizable
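One common way to find a critical path is to take the longest chain of dependent steps, since that chain bounds the run time even with unlimited processors. The sketch below assumes hypothetical step names, durations, and dependencies. It is an illustration, not a method given in the lecture.

```python
from graphlib import TopologicalSorter

# Hypothetical step durations (seconds) and dependencies.
DURATION = {"A": 2, "B": 4, "C": 3, "D": 1}
DEPENDS_ON = {"A": set(), "B": {"A"}, "C": {"A"}, "D": {"B", "C"}}

def critical_path(duration, depends_on):
    """Return (steps on the longest dependent chain, its total duration)."""
    finish = {}    # earliest possible finish time of each step
    previous = {}  # the dependency that determines that finish time
    for step in TopologicalSorter(depends_on).static_order():
        deps = depends_on.get(step, ())
        prev = max(deps, key=finish.get) if deps else None
        previous[step] = prev
        start = finish[prev] if prev is not None else 0
        finish[step] = start + duration[step]
    step = max(finish, key=finish.get)
    length = finish[step]
    path = []
    while step is not None:
        path.append(step)
        step = previous[step]
    path.reverse()
    return path, length

print(critical_path(DURATION, DEPENDS_ON))  # (['A', 'B', 'D'], 7)
```

Running the four steps one after another takes 10 seconds. B and C can overlap, but the chain A, B, D still takes 7 seconds.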

Amdahl’s Law

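Theoretical speedup in the execution of a task, where p is the proportion of the task that is parallelizable and N is the number of processors: S = 1/((1 - p) + p/N). As N grows, the speedup approaches the upper bound 1/(1 - p)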
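A short worked example of speedup and Amdahl's law, written as a sketch with made-up function names. It uses the standard form of the law, S = 1/((1 - p) + p/N) for N processors, whose limit for large N is 1/(1 - p).

```python
def speedup(time_sequential, time_parallel):
    """Measured speedup: S = TimeSequential / TimeParallel."""
    return time_sequential / time_parallel

def amdahl_speedup(p, n):
    """Theoretical speedup with parallelizable fraction p (0..1) on n processors."""
    return 1.0 / ((1.0 - p) + p / n)

def amdahl_limit(p):
    """Upper bound on speedup as the number of processors grows without limit."""
    return 1.0 / (1.0 - p)

# With 90% of the task parallelizable, adding processors gives diminishing
# returns: the speedup can never exceed about 10.
for n in (2, 10, 100, 1000):
    print(n, round(amdahl_speedup(0.9, n), 2))
print("limit", round(amdahl_limit(0.9), 2))
```

The sequential 10% of the task dominates once N is large, which is why the last few rows barely change.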

Embarrassingly Parallel

Cleanly separable and can be carried out in parallel, typically with significant speedups
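As an illustration, the sketch below applies a hypothetical `process` function to each item independently using the standard-library `concurrent.futures` module. No item needs the result of any other, so the work separates cleanly. A thread pool keeps the example short. For CPU-bound work in CPython, `ProcessPoolExecutor` is the usual choice.

```python
from concurrent.futures import ThreadPoolExecutor

def process(item):
    """Hypothetical per-item computation with no shared state."""
    return item * item

items = list(range(8))

# Each item is handled independently; map() returns results in input order.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(process, items))

print(results)  # [0, 1, 4, 9, 16, 25, 36, 49]
```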

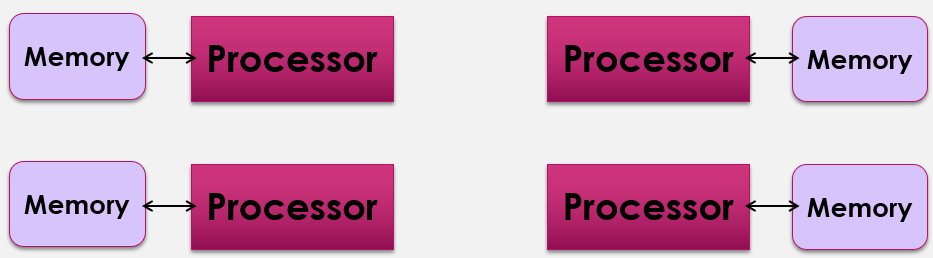

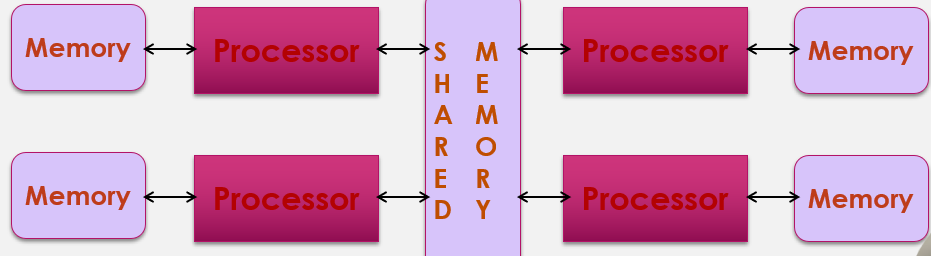

Multi-core computing

Several processors (cores) in the same computer

Shared memory

Distributed memory

Mixed-memory architecture

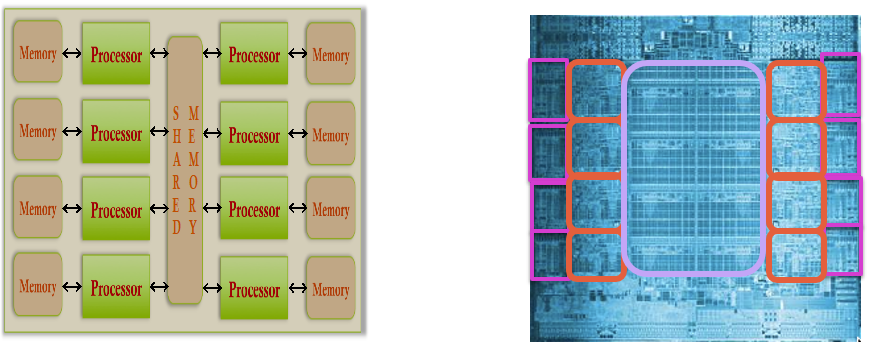

Multi Core Chips

Graphics Processing Units (GPUs)

Designed to do simple computations for displaying graphics; they are very cheap

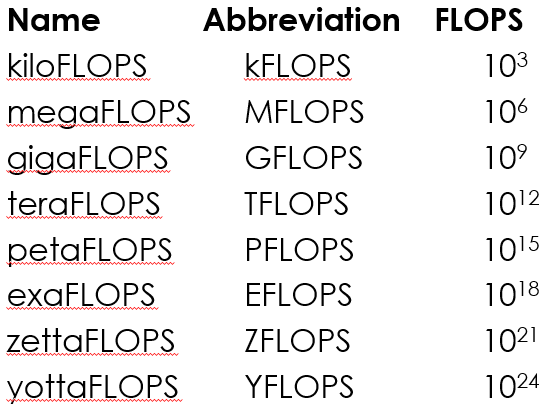

Floating-point operations per second (FLOPS)

How the speed of supercomputers is measured

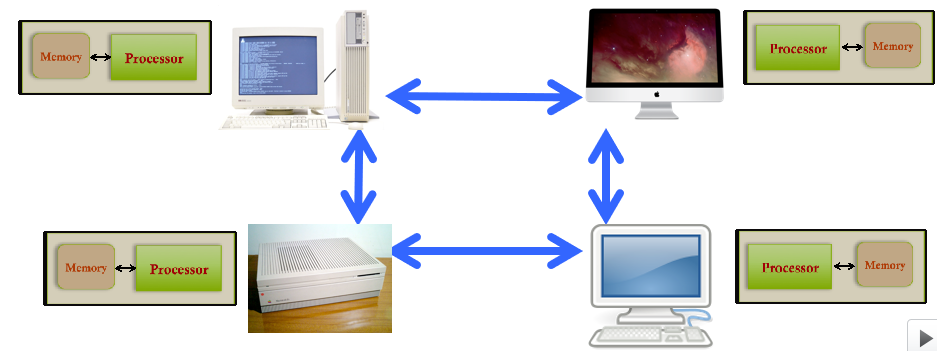

Distributed computing

A parallel computing paradigm where individual cores do computations that are orchestrated over a network (e.g., the Internet)

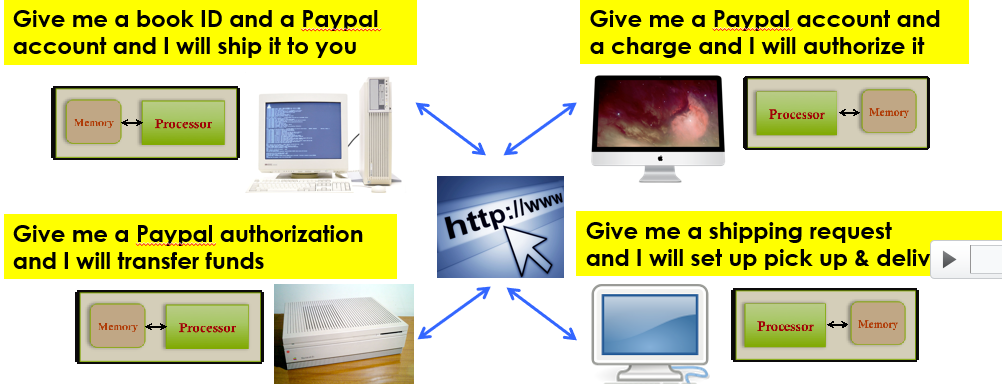

Web services

An approach to distributed computing where third parties offer services for remote execution that can be orchestrated to create complex applications

Grid computing

An approach to distributed computing where the computing power of several computers of different kinds is orchestrated through a central “middleware” control center

Cluster computing

An approach to distributed computing where the processing of several computers of very similar nature is orchestrated through a central head node

Virtual Machines

A frozen version of all the software on a machine that is needed to run an application, including the OS, programming language support, libraries, etc.

Parallel programming languages

Languages that contain special instructions to use multiple processing and memory units

MapReduce and Hadoop

Provide a programming model to implement a divide-and-conquer paradigm for distributing computations

Split (map)

Process

Join (reduce)

Manage execution failures automatically

MapReduce was developed at Google, and Google's implementation is proprietary. Hadoop has equivalent functionality but is publicly available (open source)

Set up to run on clusters

Hadoop has created an ecosystem of associated software with useful functionality (Spark, Pig, Hive, etc.)
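The split, process, and join steps can be sketched with a word count in plain Python. This is not the Hadoop API: the function names `map_phase`, `shuffle`, and `reduce_phase` are assumptions, and everything runs in one process. A real cluster would distribute the map and reduce calls and retry failed ones.

```python
from collections import defaultdict
from functools import reduce

def map_phase(document):
    """Split (map): emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]

def shuffle(pairs):
    """Group the emitted values by key so each key can be reduced on its own."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(groups):
    """Join (reduce): combine the values of each key into one result."""
    return {key: reduce(lambda a, b: a + b, values)
            for key, values in groups.items()}

documents = ["the cat sat", "the dog sat", "the end"]
# Each document is mapped independently of the others.
mapped = [pair for doc in documents for pair in map_phase(doc)]
counts = reduce_phase(shuffle(mapped))
print(counts)
```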

Repeatability

The same lab can re-run a (data analysis) method with the same data and get the same results

Reproducibility

Another lab can re-run a (data analysis) method with the same data and get the same results

Should always be possible

Replicability

Another lab can run the same analysis with the same or different methods or data and get consistent results

A failure to replicate is just as important as a successful replication

Generalizability

Results of a study apply in other contexts or applications