Lectures 5-6 Social Cognitions and Attributions

27 Terms

social cognition

The study of how people think about the social world and make decisions about socially relevant events

social cognition: Why can’t we make perfectly rational decisions? (cognitive misers)

In order to make a perfect decision (to be completely rational), we need accurate, useful information and unlimited mental resources

But two conditions prevent this:

1. No one has an all-knowing, “God’s eye” view of the world

2. Even when we have adequate information, we do not have unlimited time and energy to analyze every problem

Cognitive misers: People look for ways to conserve cognitive energy by adopting strategies that simplify complex problems (heuristics)

dual process theory

System 1: Automatic processing; fast, intuitive, emotional

System 2: Controlled processing; slow, deliberate, logical

system 1 vs system 2

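• Rational system (System 2) works best for: unfamiliar tasks, tasks with a clear right answer, solving unexpected problems, goal pursuit

• Intuitive system (System 1) works best for:

Complex decisions: Dijksterhuis (2004) decision studies, in which people who were distracted before choosing (unconscious thought) made better choices among options with many attributes

Creativity: Mind-wandering promotes creative insight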

heuristics: availability heuristic

When we judge the frequency or probability of some event by how readily pertinent instances come to mind.

Can lead to biased assessments of risk

• Bad news bias: Overestimation of the frequency of dramatic events

ex: overestimated risks: all accidents, motor vehicle accidents, tornadoes, floods, cancers, fire, homicide

heuristics: representativeness heuristic

when we try to categorize something by judging how similar it is to our conception of the typical member of the category

Ex: Who is the prototypical Asian?

information presentation: halo effect

We tend to generalize our overall impression of a person to their specific qualities

information presentation: primacy effect (order effects)

information presented first has the most influence

In Social Psychology:

Early information shapes our overall impression more strongly than later info.

Once we form an initial impression, we tend to interpret new info in a way that confirms it (confirmation bias).

Example:

If you first hear someone is “intelligent, hardworking, and arrogant,” you’re more likely to view them positively than if you hear the same traits in reverse order: “arrogant, hardworking, and intelligent.”

information presentation: recency effect (order effects)

information presented last has the most influence

In Social Psychology:

Happens especially when we’re distracted, tired, or haven’t made up our minds yet.

Newer info can override older info if it’s fresh or emotionally impactful.

Example:

If a patient seemed rude earlier but is now calm and cooperative, a healthcare provider might base their judgment more on the recent behavior.

information presentation: framing effect

The way information is presented can “frame” the way it’s processed and understood

• Primacy and recency effects = types of framing effects

Spin-framing: Varies the content of what is presented

E.g., “illegal aliens” vs “undocumented workers”

E.g., “torture” vs “enhanced interrogation”

E.g., “war department” vs “defense department”

information presentation: positive/negative framing

A cognitive bias in which presenting the same information as a gain (positive) or as a loss (negative) changes decisions, even though the facts are identical.

ex: Imagine a treatment for a disease is described in two ways:

Positive frame: "This treatment has a 90% survival rate."

Negative frame: "This treatment has a 10% mortality rate."
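The two frames are arithmetically identical: every patient either survives or dies, so a 90% survival rate implies a 100% − 90% = 10% mortality rate. A minimal Python sketch (the function name `frame_outcome` is hypothetical, chosen only for illustration) builds both sentences from a single survival rate, which makes explicit that only the wording changes:

```python
def frame_outcome(survival_rate: float) -> dict:
    """Describe one treatment outcome in a gain (positive) and a loss (negative) frame.

    Hypothetical helper for illustration: both sentences come from the same
    number, so any difference in reactions is caused by the wording alone.
    """
    if not 0.0 <= survival_rate <= 1.0:
        raise ValueError("survival_rate must be between 0 and 1")
    mortality_rate = 1.0 - survival_rate  # everyone either survives or dies
    return {
        "positive": f"This treatment has a {survival_rate:.0%} survival rate.",
        "negative": f"This treatment has a {mortality_rate:.0%} mortality rate.",
    }


frames = frame_outcome(0.90)
print(frames["positive"])  # This treatment has a 90% survival rate.
print(frames["negative"])  # This treatment has a 10% mortality rate.
```

The sketch only shows that the facts match; it does not model how people respond to each frame.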

seeking information: Confirmation bias

In the social realm

• We often ask questions that will confirm what we already believe or want to be true

• Engage in a biased search for evidence

Information that supports what we want to be true is easily accepted; information that contradicts it is often discounted

seeking information: bottom up processing

“data-driven” mental processing

An individual forms conclusions based on the stimuli encountered in the environment

seeking information: top down processing

“theory-driven” mental processing, where an individual filters and interprets new info in light of preexisting knowledge and expectations.

seeking information: assimilation

Interpreting new information in terms of existing beliefs. Expectations influence information processing. We see what we expect to see.

• ex: Hastorf & Cantril (1954) – Princeton-Dartmouth football game

Fans from Princeton and Dartmouth both watched the same game, but they interpreted events differently.

Each group assimilated the rough plays and penalties into their existing belief:

“Our team is fair; the other team is dirty.”

Instead of adjusting their beliefs based on the game footage (which would be accommodation), they fit the new information into their existing framework—that their team was in the right.

seeking information: belief perseverance

Persistence of one’s initial conceptions, even in the face of opposing evidence.

• Anderson et al. (1980) - Firefighter study

Participants read that either risk-taking or cautious firefighters performed better.

Later, they were told the information was made up, but they still believed what they had first read. This shows belief perseverance: people stick to initial beliefs even after the evidence behind them has been discredited.

seeking information: false consensus

We tend to overestimate how much other people agree with us, especially when it comes to undesirable or questionable behaviors.

Undesirable? Consensus.

ex: "Everyone cheats a little on tests—it’s normal."

This is often used to justify bad behavior by assuming it's common.

seeking information: false distinctiveness

We tend to underestimate how common our positive traits or behaviors are, believing we’re more unique than we really are.

Desirable? Distinctiveness

ex: "I’m one of the few people who really cares about the environment."

This helps boost self-esteem by making us feel special.

self cognitions: egocentric bias

Tendency to focus on ourselves.

• Better memory for personally-relevant information

• Spotlight effect: people tend to overestimate how much others notice or pay attention to their appearance, behavior, or mistakes.

liking gap

After conversations, people underestimate how much their conversation partner likes them.

Boothby et al. (2018): People’s thoughts about their own conversational performance were more negative than their partners’ thoughts about them

thought gap

After conversations, people underestimate how much their conversation partner thinks about them (relative to the reverse).

Examples:

You assume a classmate you chatted with after lecture has barely thought about you since, when in fact they have thought about you more than you realize.

→ That’s a thought gap: we underestimate how often others think about us after a conversation.

A patient assumes their doctor forgot about them as soon as the visit ended, while the doctor actually kept thinking about the conversation.

→ Another thought gap, and one relevant to healthcare communication.

causal attributions

Explanations people use for what caused a particular event or behavior.

Ex: Professor to student: “That’s a good point!”

Students: Was it really though? Did she just want to encourage participation?

causal attributions: dispositional attribution

when we explain someone’s behavior by attributing it to their internal characteristics—such as their personality, motives, attitudes, or abilities—rather than to external circumstances.

Examples:

If someone cuts you off in traffic, you might think, “They’re a rude and aggressive person” (dispositional), rather than considering that they might be late for an emergency (situational).

If a doctor seems impatient with a patient, a dispositional attribution would be, “She’s just cold and lacks empathy,” instead of “She’s under a lot of stress today.”

causal attributions: situational attribution

This occurs when someone assumes that a person's actions are due to the situation they are in, rather than their personality or character.

Examples:

If a nurse is short with a patient, a situational attribution might be: “She’s probably overworked or dealing with a difficult shift.”

If someone doesn’t respond to a greeting, you might think, “Maybe they didn’t hear me,” rather than assuming they are unfriendly.

causal attributions: covariation principle

It explains how people determine whether a person's behavior is caused by internal (dispositional) or external (situational) factors.

• People determine locus of causality in terms of things that are present when the event occurs but absent when it does not.

Consistency across situations and time, but not across people = dispositional

Consistency across people and time, but not across situations = situational

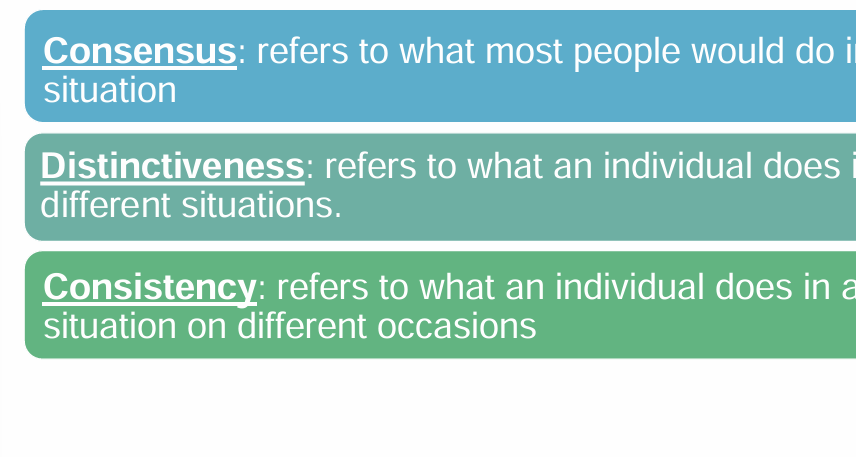

consensus: Do other people behave the same way in this situation?

distinctiveness: Does this person behave differently in other situations?

consistency: Does this person always behave this way in this situation?
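The covariation principle works like a decision rule over these three questions. A minimal Python sketch, assuming each question is answered simply as high (True) or low (False); the two patterns follow the standard textbook version usually credited to Kelley's covariation model, and the function name `covariation_attribution` is hypothetical. Any pattern other than the two in these notes returns "unclear":

```python
def covariation_attribution(
    high_consensus: bool,
    high_distinctiveness: bool,
    high_consistency: bool,
) -> str:
    """Classify the likely cause of a behavior from covariation information.

    high_consensus: other people behave the same way in this situation.
    high_distinctiveness: this person behaves differently in other situations.
    high_consistency: this person always behaves this way in this situation.
    """
    if not high_consistency:
        # Behavior is not stable over time, so neither pattern below applies.
        return "unclear"
    if not high_consensus and not high_distinctiveness:
        # Only this person does it, across situations and over time.
        return "dispositional"
    if high_consensus and high_distinctiveness:
        # Everyone does it here, and only here: the situation explains it.
        return "situational"
    # Mixed patterns do not point clearly to either cause.
    return "unclear"


# Hypothetical: only this nurse is short with patients, on every ward, every shift.
print(covariation_attribution(False, False, True))  # dispositional
# Hypothetical: every nurse on this understaffed ward is short, but only on this ward.
print(covariation_attribution(True, True, True))  # situational
```

Real judgments come in degrees rather than true/false answers; the booleans are a simplification to show the logic.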

attribution errors: correspondent inference

This refers to the tendency to conclude that a person’s behavior reflects their stable, internal traits (dispositional attribution)—even when the behavior could be explained by the situation.

Also called the fundamental attribution error

Example:

If a doctor speaks bluntly to a patient with a disability, someone might infer:

“That doctor is insensitive.”

Even though it might have been due to time pressure or miscommunication (a situational factor).

Cultural differences:

Collectivistic cultures = more situational attributions

Lower socioeconomic status = more situational attributions

Dispositional attribution vs correspondent inference

Dispositional attribution: a broad explanation for behavior based on internal traits, personality, motives, or intentions.

We use it to explain why someone behaved a certain way:

“She was rude because she’s an impatient person.”

✅ It’s about general internal causes.

Correspondent inference: a specific type of dispositional attribution in which we assume that a person’s behavior directly reflects their true character or intent, even when the behavior could have been influenced by the situation.

Introduced by Jones and Davis (1965).

It's about whether the behavior "corresponds" to a stable trait.

It's most likely when the behavior is freely chosen, unexpected, or has unique effects.

“She chose to speak harshly even though she didn’t have to—that must mean she’s naturally mean.”

✅ It’s about inferring a trait from a specific behavior, often ignoring situational influences.