Linear Algebra

22 Terms

One-to-one matrix

A matrix A is one-to-one if its columns are linearly independent, meaning that no column can be expressed as a linear combination of the others. Equivalently, Ax = 0 has only the trivial solution x = 0: every column has a pivot, so there are no free variables.

Onto matrix

A matrix A with m rows is onto if its columns span R^m. Equivalently, the reduced row echelon form of A has a pivot in every row (no rows of all zeros).
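Both conditions come down to counting pivots, which the following minimal sketch illustrates. The helper names `rank`, `is_one_to_one`, and `is_onto` are hypothetical, chosen only for this example, and the elimination uses exact fractions to avoid rounding issues.

```python
from fractions import Fraction

def rank(rows):
    """Count the pivots found by Gaussian elimination (exact arithmetic)."""
    m = [[Fraction(x) for x in row] for row in rows]
    n_rows, n_cols = len(m), len(m[0])
    pivots = 0
    for col in range(n_cols):
        # Look for a nonzero entry at or below the current pivot row.
        src = next((r for r in range(pivots, n_rows) if m[r][col] != 0), None)
        if src is None:
            continue  # no pivot here: this column gives a free variable
        m[pivots], m[src] = m[src], m[pivots]
        for r in range(pivots + 1, n_rows):
            factor = m[r][col] / m[pivots][col]
            m[r] = [a - factor * b for a, b in zip(m[r], m[pivots])]
        pivots += 1
    return pivots

def is_one_to_one(rows):
    return rank(rows) == len(rows[0])  # a pivot in every column

def is_onto(rows):
    return rank(rows) == len(rows)     # a pivot in every row

tall = [[1, 0], [0, 1], [1, 1]]  # 3 x 2: independent columns, cannot span R^3
wide = [[1, 0, 1], [0, 1, 1]]    # 2 x 3: spans R^2, has a free variable

print(is_one_to_one(tall), is_onto(tall))  # True False
print(is_one_to_one(wide), is_onto(wide))  # False True
```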

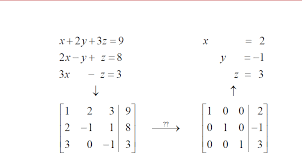

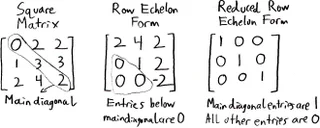

Reduced Row Echelon Form

A matrix is in reduced row echelon form if: 1) Each leading entry is 1 and is the only nonzero entry in its column. 2) The leading entry of each nonzero row appears to the right of the leading entry of the previous row. 3) Any rows consisting entirely of zeros are at the bottom.

Row Echelon Form

A matrix is in row echelon form if: 1) All nonzero rows are above any rows of all zeros. 2) The leading entry of each nonzero row (after the first) occurs to the right of the leading entry of the previous row. 3) The leading entry in any nonzero row is 1 (some textbooks omit this third requirement for row echelon form).

Invertible Matrix

A matrix A is called invertible if there is another matrix B such that AB = BA = I. A and B must be square matrices of the same size. We write B = A^(-1) and A = B^(-1). An invertible matrix is both one-to-one and onto.

Square Matrix

A square matrix is a matrix with an equal number of rows and columns (i.e., an n × n matrix).

Inverse of A

Suppose A = {{a, b}, {c, d}} is a 2x2 invertible matrix, so det(A) = ad - bc ≠ 0. The inverse of A, denoted A^(-1), is given by (1/det(A)) * {{d, -b}, {-c, a}}.
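As a quick check of the formula, here is a small sketch that builds the inverse and multiplies it back against A. The function names `inverse_2x2` and `matmul` are made up for this example, and exact fractions are an assumption made to keep the arithmetic free of rounding.

```python
from fractions import Fraction

def inverse_2x2(A):
    """Inverse of [[a, b], [c, d]] via (1/det) * [[d, -b], [-c, a]]."""
    (a, b), (c, d) = A
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("matrix is not invertible (det = 0)")
    return [[d / det, -b / det], [-c / det, a / det]]

def matmul(X, Y):
    """Plain row-by-column product of two 2x2 matrices."""
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

A = [[2, 1], [5, 3]]            # det = 2*3 - 1*5 = 1
A_inv = inverse_2x2(A)          # equals [[3, -1], [-5, 2]]

identity = [[1, 0], [0, 1]]
print(matmul(A, A_inv) == identity)  # True
print(matmul(A_inv, A) == identity)  # True
```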

Determinant of a 2×2 matrix

For a 2x2 matrix A = {{a, b}, {c, d}}, this is calculated as det(A) = ad - bc.

Adjugate of a 2×2 matrix

For a 2x2 matrix A = {{a, b}, {c, d}}, this is given by adj(A) = {{d, -b}, {-c, a}}, which is the transpose of the cofactor matrix.

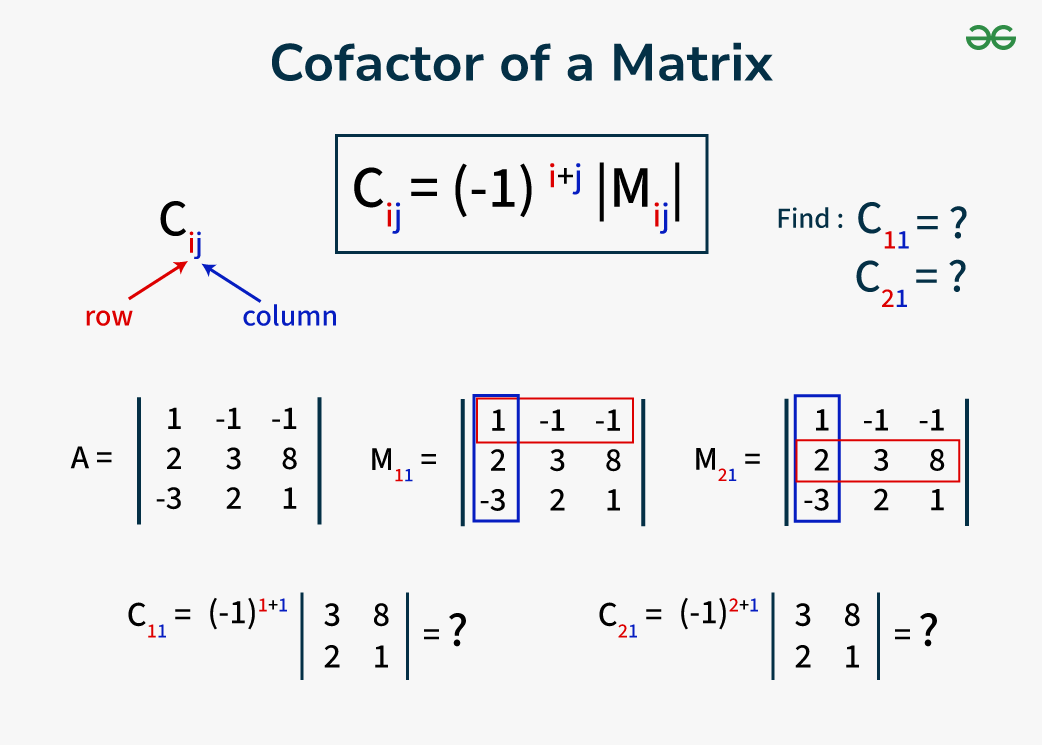

Cofactor Matrix

The matrix whose (i, j) entry is the cofactor of position (i, j) in a square matrix A: the determinant of the submatrix obtained by deleting row i and column j of A (the minor), multiplied by (-1)^(i+j), which gives the alternating sign pattern. The cofactor matrix is used in calculating the adjugate and inverse of a matrix.
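The definition can be sketched in a few lines. The helpers `minor`, `det`, `cofactor_matrix`, and `adjugate` below are illustrative names, and the recursive determinant is only meant for small matrices.

```python
def minor(A, i, j):
    """Submatrix of A with row i and column j deleted."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(A) if k != i]

def det(A):
    """Determinant by cofactor expansion along the first row."""
    if len(A) == 1:
        return A[0][0]
    return sum((-1) ** j * A[0][j] * det(minor(A, 0, j)) for j in range(len(A)))

def cofactor_matrix(A):
    n = len(A)
    return [[(-1) ** (i + j) * det(minor(A, i, j)) for j in range(n)]
            for i in range(n)]

def adjugate(A):
    """Transpose of the cofactor matrix."""
    return [list(col) for col in zip(*cofactor_matrix(A))]

A = [[1, 2], [3, 4]]
print(cofactor_matrix(A))  # [[4, -3], [-2, 1]]
print(adjugate(A))         # [[4, -2], [-3, 1]], i.e. {{d, -b}, {-c, a}}
```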

Elementary Row Operations

The operations that can be performed on the rows of a matrix to reach row echelon form or reduced row echelon form. There are three types of operations: 1) swapping two rows, 2) multiplying a row by a non-zero scalar, and 3) adding a multiple of one row to another row.
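To show the three operations working together, here is a minimal Gauss-Jordan sketch that reduces a matrix to reduced row echelon form. The function name `rref` and the use of exact fractions are choices made for this example only.

```python
from fractions import Fraction

def rref(rows):
    """Reduce a matrix to reduced row echelon form using the three row operations."""
    m = [[Fraction(x) for x in row] for row in rows]
    n_rows, n_cols = len(m), len(m[0])
    pivot_row = 0
    for col in range(n_cols):
        if pivot_row == n_rows:
            break
        src = next((r for r in range(pivot_row, n_rows) if m[r][col] != 0), None)
        if src is None:
            continue  # no pivot in this column
        # Operation 1: swap two rows to bring a nonzero entry into pivot position.
        m[pivot_row], m[src] = m[src], m[pivot_row]
        # Operation 2: multiply the pivot row by a nonzero scalar so it leads with 1.
        lead = m[pivot_row][col]
        m[pivot_row] = [x / lead for x in m[pivot_row]]
        # Operation 3: add a multiple of the pivot row to every other row.
        for r in range(n_rows):
            if r != pivot_row and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [a - factor * b for a, b in zip(m[r], m[pivot_row])]
        pivot_row += 1
    return m

A = [[0, 2, 4], [1, 1, 1], [2, 2, 2]]
R = rref(A)
print([[int(x) for x in row] for row in R])  # [[1, 0, -1], [0, 1, 2], [0, 0, 0]]
```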

Nullspace of a Matrix

The set of all vectors x such that Ax = 0, where A is a matrix and 0 is the zero vector. It represents the solution set to the homogeneous equation and gives insight into the linear dependencies within the columns of the matrix.
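A small sketch of what membership in the nullspace looks like in practice. The names `mat_vec` and `in_nullspace` are hypothetical helpers, and the matrix is chosen so that its second column is twice its first.

```python
def mat_vec(A, x):
    """Compute the product Ax as a list of row-by-vector dot products."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def in_nullspace(A, x):
    return all(entry == 0 for entry in mat_vec(A, x))

A = [[1, 2], [2, 4]]            # column 2 = 2 * column 1

print(in_nullspace(A, [2, -1]))  # True:  2*(col 1) - 1*(col 2) = 0
print(in_nullspace(A, [1, 1]))   # False: A[1, 1] = [3, 6]
```

The nonzero vector [2, -1] records the dependency between the columns: its entries are exactly the weights of a linear combination of the columns that gives the zero vector.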

Solution Set of a Matrix

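The set of all vectors x that satisfy the matrix equation Ax = b, where A is the matrix, x is the vector of variables, and b is the resulting vector. The set can be empty (the system is inconsistent), contain exactly one vector, or contain infinitely many vectors. When it is nonempty, every solution is one particular solution plus a vector from the nullspace of A.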

Matrix Algebra Laws

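For 3 matrices A, B, and C (with sizes for which the products and sums are defined): (1) Multiplication is not commutative; in general AB ≠ BA, (2) Multiplication is associative; C(BA) = (CB)A = CBA, (3) Multiplication is distributive; C(B+A) = CB + CA, (4) A I_n = I_m A = A if A is an m x n matrix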
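These laws can be spot-checked numerically. The sketch below uses hypothetical helpers (`matmul`, `matadd`, `identity`) and arbitrary example matrices; it checks the laws on one instance and is not a proof.

```python
def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def matadd(X, Y):
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]

def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]
C = [[2, 0], [1, 1]]

# (1) Not commutative: AB and BA differ for this pair.
print(matmul(A, B), matmul(B, A))  # [[2, 1], [4, 3]] [[3, 4], [1, 2]]

# (2) Associative and (3) distributive.
print(matmul(C, matmul(B, A)) == matmul(matmul(C, B), A))             # True
print(matmul(C, matadd(B, A)) == matadd(matmul(C, B), matmul(C, A)))  # True

# (4) Identity: for a 2 x 3 matrix M, M I_3 = I_2 M = M.
M = [[1, 2, 3], [4, 5, 6]]
print(matmul(M, identity(3)) == M and matmul(identity(2), M) == M)    # True
```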

Identity Matrix

A square matrix with ones on the main diagonal and zeros elsewhere. Essentially a ‘1’ in matrix form.

Linear Transformation

A mapping between two vector spaces that preserves the operations of vector addition and scalar multiplication. In formal terms, a function T: V -> W is a linear transformation if for any vectors u, v in V and any scalar c, the following two properties hold: 1) T(u + v) = T(u) + T(v), and 2) T(c * u) = c * T(u).
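The two properties can be tested on sample vectors. In this sketch, `T` is multiplication by an arbitrary example matrix and `S` is a shift by (1, 1); both are made-up illustrations, and passing the check on a few vectors does not prove linearity in general.

```python
def add(u, v):
    return [a + b for a, b in zip(u, v)]

def scale(c, u):
    return [c * a for a in u]

def T(x):
    """Multiplication by the example matrix [[2, 0], [1, 3]]."""
    A = [[2, 0], [1, 3]]
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def S(x):
    """A shift by (1, 1); it does not fix the zero vector, so it is not linear."""
    return add(x, [1, 1])

u, v, c = [1, 2], [3, -1], 5

print(T(add(u, v)) == add(T(u), T(v)))  # True:  property 1 holds for T
print(T(scale(c, u)) == scale(c, T(u)))  # True:  property 2 holds for T
print(S(add(u, v)) == add(S(u), S(v)))  # False: [5, 2] vs [6, 3]
```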

Basis Vector

One of a set of vectors in a vector space that, when combined through linear combinations, can represent any vector in that space. Each basis vector is linearly independent from the others, meaning no basis vector can be expressed as a combination of the others.

Vector Span

The span of a set of vectors is the set of all possible linear combinations of those vectors. It is a subspace of the vector space in which the vectors reside.

When is matrix multiplication not defined

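Not defined when the number of columns in the first matrix does not equal the number of rows in the second matrix. Each entry of the product pairs a row of the first matrix with a column of the second, so the two must have the same length: an m x n matrix times an n x p matrix gives an m x p matrix.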
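A minimal sketch of the shape rule, assuming matrices are stored as lists of rows. The function name `matmul` and the error message are illustrative choices.

```python
def matmul(A, B):
    """Multiply A (m x n) by B (n x p); refuse mismatched inner dimensions."""
    if len(A[0]) != len(B):
        raise ValueError(
            f"cannot multiply: first matrix has {len(A[0])} columns, "
            f"second has {len(B)} rows"
        )
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

A = [[1, 2, 3], [4, 5, 6]]    # 2 x 3
B = [[1, 0], [0, 1], [1, 1]]  # 3 x 2

print(matmul(A, B))  # [[4, 5], [10, 11]]  (2 x 3 times 3 x 2 gives 2 x 2)

try:
    matmul(A, A)     # 2 x 3 times 2 x 3: 3 columns vs 2 rows
except ValueError as err:
    print(err)
```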

Linear Dependence

A condition in which at least one vector in a set can be expressed as a linear combination of the others, so the vectors are not linearly independent. For a square matrix, a determinant of zero (ad - bc = 0 in the 2x2 case) indicates that its rows, and likewise its columns, are linearly dependent.

Determinant

The determinant of a square matrix provides information about the matrix's properties, including whether it is invertible, the factor by which the corresponding linear transformation scales volume (the absolute value of the determinant), and the linear dependence of its rows and columns. Specifically, the determinant is non-zero if and only if the matrix is invertible and the vectors represented by its rows or columns are linearly independent.

How determinant interacts with row operations in a 2×2 matrix

(1) Adding a multiple of one row to another row ~ determinant unchanged, (2) Multiplying a row by a scalar k ≠ 0 ~ determinant multiplied by k, (3) Swapping rows ~ determinant multiplied by -1
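The three rules can be verified on a concrete 2×2 example. The matrix, the scalar k = 4, and the helper name `det2` below are arbitrary choices for illustration.

```python
def det2(A):
    """Determinant of a 2x2 matrix [[a, b], [c, d]] as ad - bc."""
    (a, b), (c, d) = A
    return a * d - b * c

A = [[3, 1], [2, 5]]
k = 4
r1, r2 = A

added   = [r1, [x + k * y for x, y in zip(r2, r1)]]  # row2 + k*row1
scaled  = [[k * x for x in r1], r2]                  # k * row1
swapped = [r2, r1]                                   # rows exchanged

print(det2(A))        # 13
print(det2(added))    # 13   (unchanged)
print(det2(scaled))   # 52   (k times the original)
print(det2(swapped))  # -13  (sign flipped)
```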