RHMS - lecture 9 - qualitative data analysis



streetlight effect / drunkard’s search

people tend to look for something only where it is easiest to look (where the light is good), not where it is most likely to be

type of bias

in research, you need to look more broadly

what is an evaluation

a systematic determination of a subject’s merit, worth and significance, using criteria

3 core concepts in evaluating public health programs (outcome, impact, process)

outcome evaluations → ultimate goal is to measure an effect

measure the effectiveness of an intervention in producing change

impact evaluations → goal is to understand (long-term impacts)

understand the broader and long-term impact of the intervention

process evaluations → understand, with a specific focus on barriers / facilitators

help stakeholders see how an intervention outcome or impact was achieved and what the barriers were.

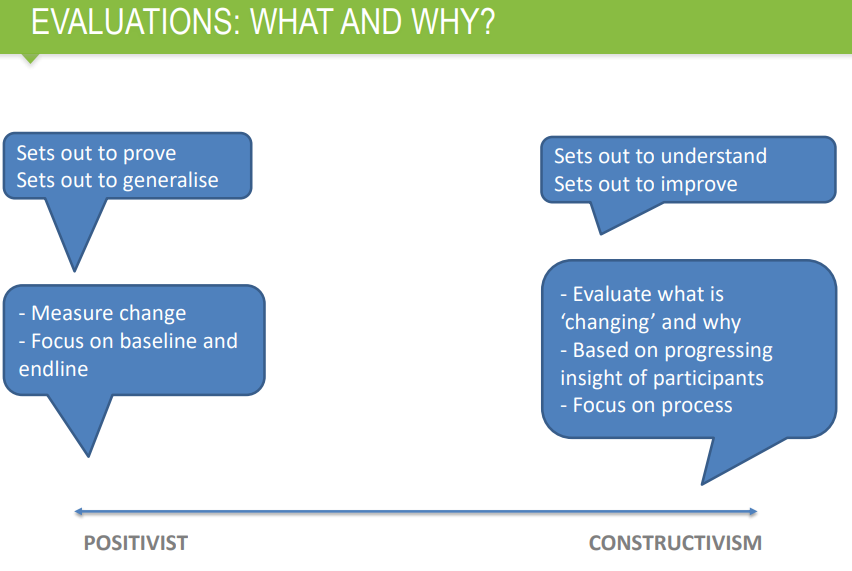

positivism versus constructivism in evaluation

positivism → prove, generalize

measure change

constructivism → understand, improve

focus on process

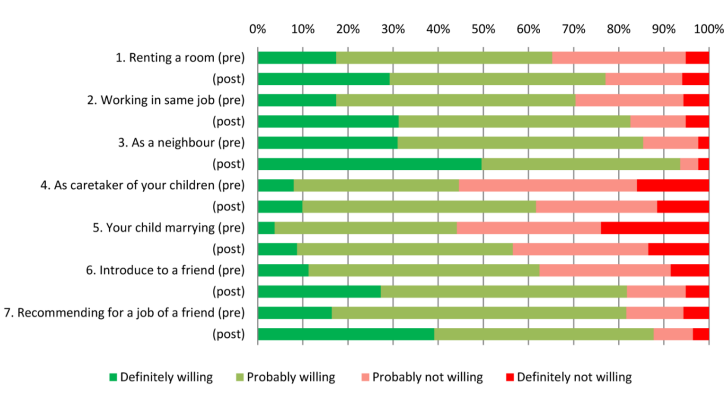

social distance scale

example of outcome evaluation

questions asked include:

How do you feel about renting a room in your home to someone with leprosy?

How do you feel about working in the same job as someone with leprosy?

example of impact evaluation

impact evaluation is often measured via quotes

example → ‘There is a change in our attitude. We are not afraid anymore that leprosy can be infectious.’ (this effect can be described as: understanding)

‘It is very useful! As the village chief, I can share this information with the community. They should not be afraid of leprosy. They should not excommunicate the patient. Leprosy is not infectious. If it were not for this event, I would not know anything about leprosy.’ (effect described as: broader impact)

example of process evaluation

can also be measured via quotes (it is very important to look at the long term → months after the event)

about family counseling: ‘You just meet me, it is enough. My family doesn’t need to know about this visit.’

study designs

RCT

quasi experiment

qualitative evaluations

participatory action research / evaluation

randomized controlled trial

randomly

Type of scientific (often medical) experiment that aims to reduce certain sources of bias when testing the effectiveness of new treatments

Accomplished by randomly allocating subjects to two or more groups and treating the groups differently

One or more of the groups receive treatment(s) under investigation while one group serves as a control, receiving either the standard treatment or no treatment at all
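The random allocation step can be illustrated with a small sketch. This is an assumption-laden illustration, not a validated trial-allocation tool: the helper name `randomly_allocate`, the participant IDs and the two arm names are hypothetical. It shuffles the participant list and deals participants evenly across the groups, so group sizes stay balanced while membership is decided by chance alone (tossing a coin per participant would be an alternative that does not guarantee equal sizes).

```python
import random


def randomly_allocate(participants, groups=("treatment", "control"), seed=None):
    """Hypothetical helper: shuffle participants, then deal them evenly into groups.

    Chance alone decides who ends up in which group; dealing round-robin after
    the shuffle keeps group sizes within one of each other.
    """
    rng = random.Random(seed)  # seeded generator so the allocation can be reproduced
    shuffled = list(participants)  # copy so the caller's list is not modified
    rng.shuffle(shuffled)
    allocation = {group: [] for group in groups}
    for i, person in enumerate(shuffled):
        allocation[groups[i % len(groups)]].append(person)
    return allocation


if __name__ == "__main__":
    ids = [f"P{n:02d}" for n in range(1, 11)]  # hypothetical participant IDs
    arms = randomly_allocate(ids, seed=42)
    for arm, members in arms.items():
        print(arm, members)
```

Because allocation ignores every participant characteristic, known and unknown confounders are expected to be spread evenly across the groups, which is what allows differences in outcome to be attributed to the treatment.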

quasi experiment

used when RCTs are not feasible, there are no funds to run one, or an RCT would not be ethical.

treatment and control groups may not be comparable at baseline

pre- and post-testing

difficult or impossible to demonstrate a causal link between the treatment condition and the observed outcomes, because of many confounding variables that cannot be controlled

no randomisation

qualitative evaluation

in-depth understanding of a program

small sample size

emphasis on the process and context (why and how)

explores the outcomes / impacts, but also looks beyond the pre-set goals.

example: observing how employees interact in a new office environment and then conducting interviews to understand their experience

specific options

realist evaluation → context + mechanism = outcome

reflexive monitoring in practice, e.g. with a dynamic learning agenda.

participatory action research / evaluation

limited control over how the intervention is implemented, by whom and in what way

characterized by strong ownership by the target group

Interested in understanding the mechanisms of change

Strong focus on process

Monitoring of change

Reflexive learning cycles: both the intervention and its implementation change based on emergent insights

confirmation bias

human observations are biased toward confirming the observer’s conscious and unconscious expectations and view of the world

in other words → we see what we expect

why using different methods can be useful

different methods will yield different answers.

evaluating real life settings is more complex compared to evaluating in a controlled lab setting.

reality is complex

understanding the process and context is important.

asking people to evaluate their day with pictures yields a very different story than asking them to draw it (people tend to take pictures only in positive situations)

unintended consequences of purposive action

The theory holds that “all social interventions have unintended consequences, some of which can be foreseen and prevented, whereas others cannot be predicted.”