Intro to AI - Module 1


Foundations, History, Machine Learning


AI

The study of systems that

think like humans

think rationally

act like humans

act rationally

AI aims to mimic ____

Human intelligence

But some researchers cared more about "did the machine follow the correct steps?" than "did it do it like a human?"

Human decisions aren't always mathematically correct

Foundations of AI - Philosophy

Logic, mind, knowledge

Are there rules on how to draw correct conclusions?

How does the mind arise from the physical brain, if the mind is not the same thing as the brain?

Where does knowledge come from, given that everyone has different knowledge?

How is Philosophy related to AI?

How does a machine think on its own?

Philosophy first has to explain why a human does something; we then translate that explanation to the machine

Empiricism Philosophy in relation to AI

Belief that nothing significant can be known unless it is first sensed; all meaningful knowledge comes from the human senses.

So a machine has to be able to sense

Mathematics in AI

Proof, computability, probability

What are the rules to draw correct conclusions?

What can be computed?

How do we deal with uncertainties?

Economics in AI

Maximizing payoffs

How should we make decisions that align with our preferences, even when the payoff may only be realized in the future?

How do you make a machine understand this?

Thinking in terms of utility
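A minimal sketch of thinking in terms of utility, assuming each action has a known list of (probability, utility) outcomes: the agent picks the action with the highest expected utility. The action names and numbers are hypothetical.

```python
def expected_utility(outcomes):
    """outcomes: list of (probability, utility) pairs for one action."""
    return sum(p * u for p, u in outcomes)

def best_action(options):
    """options: dict mapping action name -> list of (probability, utility) pairs."""
    return max(options, key=lambda a: expected_utility(options[a]))

# Hypothetical choice: a certain small payoff now vs. an uncertain payoff realized later
options = {
    "save_now": [(1.0, 50)],
    "invest":   [(0.5, 100), (0.5, 10)],
}
print(expected_utility(options["invest"]))  # 55.0
print(best_action(options))                  # invest
```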

Neuroscience in AI

Brain and neurons

How do brains process information?

Psychology in AI

Thought, perception, action

How do humans and animals act?

Why do I do what I do?

Computer Engineering in AI

Developments in hardware

How can we build an efficient computer?

Control Theory in AI

Stable feedback systems

How can artifacts operate under their own control?

When do you start doing an action? When do you stop?

Linguistics in AI

Knowledge representation

Syntax

How does language relate to thought?

Cultural terms

1943-1956: Inception of AI

Boolean circuit model of the brain

Neural network computers

Turing's ideas on machine intelligence

McCarthy (Dartmouth meeting)

1952-1969: Early enthusiasm, great expectations (Look Ma, no hands!)

Early AI programs

Development of the Logic Theorist and the General Problem Solver

Geometry theorem prover

Checkers program

LISP

Problems in limited domains (microworlds)

1966-1973: A Dose of Reality

AI was limited by its reliance on informed introspection (human approaches) and by the intractability of problems (hard to scale up)

Computational complexity

Neural network research almost disappears

1969-1986: Expert Systems

Early development of knowledge-based systems that encode expert know-how in a specific subject area

DENDRAL

MYCIN

R1: DEC (Digital Equipment Corporation)

DENDRAL

Expert system

Infers molecular structure from mass spectrometer data, using a large body of chemistry knowledge

MYCIN

Expert system for medical diagnosis of blood infections

Knowledge from books and experts

R1: DEC (Digital Equipment Corporation)

Configuration of computer systems

First commercially successful expert system

Boolean Circuit Model

Model of the brain

Proposed by McCulloch and Pitts

During the inception of AI
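A McCulloch-Pitts style unit can be sketched as a binary threshold function. This version assumes inhibition is modelled with a negative weight, a common simplification of the original model; the gate names are illustrative.

```python
def mcculloch_pitts(inputs, weights, threshold):
    """Binary threshold unit: output 1 if the weighted sum of 0/1 inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0

def and_gate(a, b):
    return mcculloch_pitts([a, b], [1, 1], threshold=2)  # fires only if both inputs fire

def or_gate(a, b):
    return mcculloch_pitts([a, b], [1, 1], threshold=1)  # fires if at least one input fires

def not_gate(a):
    return mcculloch_pitts([a], [-1], threshold=0)       # negative (inhibitory) weight inverts the input

print([and_gate(a, b) for a in (0, 1) for b in (0, 1)])  # [0, 0, 0, 1]
print([or_gate(a, b) for a in (0, 1) for b in (0, 1)])   # [0, 1, 1, 1]
```

Because AND, OR and NOT are enough to build any Boolean circuit, networks of such units can in principle compute any Boolean function, which is the sense in which the brain was modelled as a Boolean circuit.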

SNARC

Neural network computer

Developed by Minsky and Edmonds

Computing Machinery and Intelligence

- Turing

- Inception of AI

- Argued that a computer could think like a human (proposed the imitation game, later called the Turing test)

McCarthy

- Dartmouth meeting

- Coined the term "Artificial Intelligence"

- 1943-1956: Inception of AI

Logic Theorist and General Problem Solver

- Newell and Simon

- Designed to think like a human

- Concern: can it follow steps like a human? Can it solve problems like a human?

- Early enthusiasm (1952-1969)

Geometry Theorem Prover

- Gelernter

- Early enthusiasm (1952-1969)

Checkers Program

- Samuel

- Early enthusiasm (1952-1969)

LISP

- A high-level programming language developed for AI

- Early enthusiasm (1952-1969)

Microworlds

- Limited domains used in early AI research to test theories and programs

- Early enthusiasm (1952-1969)

Computational Complexity

- Complexity of problems limits AI's ability to process data

- Programs that worked in microworlds could not scale up to larger, real-world amounts of data

Neural Network Research

A field of study that faced a decline in interest during the 1966-1973 period (Dose of Reality)

AI History 1980-1988

Expert systems industry booms

Many companies promised that they could build expert systems and deliver them well

Advancements in AI

Improvements in computing power, memory storage, connectivity, and automation that support AI development.

AI History 1988-1993

- Expert system industry busts (AI Winter)

- Many companies failed to build and deliver what they had promised, due to many issues

Contemporary AI: 1986 onwards

Neural Networks

Contemporary AI: 1987 onwards

probabilistic reasoning and machine learning

Contemporary AI: 2001 onwards

big data (user info)

Contemporary AI: 2011 onwards

deep learning, computer vision (pixels, lines, colors, position)

Contemporary AI: 2021 onwards

generative AI

Artificial Intelligence Definition

Do it like a human

Define variables like a human would

Hardcoded tech

How would a human respond

Machine learning

Do it based on past experience

Not hardcoded: the model learns from existing data instead of hand-written rules

Feed data into it, and it predicts an answer as close to the actual answer as possible
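As a rough illustration of predicting from past experience, the sketch below fits a straight line to a few hypothetical (hours studied, quiz score) examples with ordinary least squares, then predicts an unseen case. Real machine-learning models are far richer; this only shows the learn-then-predict pattern.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

# "Past experience": hypothetical (hours studied, quiz score) examples
hours = [1, 2, 3, 4]
scores = [52, 61, 68, 79]

slope, intercept = fit_line(hours, scores)   # learn from the data
prediction = slope * 5 + intercept           # predict for an unseen input (5 hours)
print(round(prediction, 1))                  # 87.0
```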

Deep learning

Subset of machine learning

Can adjust itself, since learning is built into the model

Uses many layers, so it can handle very large amounts of data

Works with nested or layered models adjusting based on the evaluation of its own results

Generative AI

Subset of deep learning

Not all deep learning is generative

4 Components of AI (TAHO)

- Technology

- Autonomy

- Human

- Outputs

4 Components of AI (TAHO): Technology

Branch of computer science concerned with automation of intelligent behavior

4 Components of AI (TAHO): Autonomy

Systems that display intelligent behavior by analyzing their environment and taking actions with some degree of autonomy to achieve specific goals

4 Components of AI (TAHO): Human

Ability to perceive, pursue goals, initiate actions and learn from a feedback loop

4 Components of AI (TAHO): Outputs

Technical and scientific field devoted to the engineered system that generates outputs such as content, forecasts, recommendations, or decisions for a given set of human-defined objectives

What can AI do today?

- Robotic vehicles

- Autonomous planning and scheduling - spacecraft and rovers, logistics and transportation planning, ride-hailing apps

- Machine translation

- Speech recognition

- Recommender systems - Amazon, Walmart, Netflix, YouTube

- Game playing - Deep Blue (chess), AlphaGo, Jeopardy, Poker

- Medicine - disease diagnosis (LYNA for metastatic breast cancer)

- Automated conversation (chatbots)

Systems Acting like Humans

- Turing test

- Natural language

- Knowledge representation

- Multilayer perception

- Automated reasoning

Turing test

Test for intelligent behavior

Interrogator writes questions and receives answers

System passes if interrogator can't tell if answers come from a person or not

Example of Turing Test now

CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart)

Automated reasoning

- Follow steps and reach a certain answer, taking note of the context

- Thinking with contextualization

- How can it argue? How can it identify questions (rhetorical, open-ended)?

Systems Thinking like Humans

Formulate a theory of mind and brain

Express the theory in a computer program

2 approaches (thought vs behavior)

2 Approaches of Systems Thinking like Humans

Cognitive science and psychology

Cognitive neuroscience

Cognitive science and psychology

Testing or predicting responses of human subjects

Behavior

Logic of thought and perception

Cognitive neuroscience

- Observing neurological data

- Actual brain, neuroscience

Rational vs Human Intelligence

Rational = ideal intelligence

Human intelligence isn't always correct

Systems Thinking Rationally

Rational thinking governed by precise "laws of thought"

Systems (in theory) can solve problems using such laws

Laws of Thought

Syllogisms (Premise and conclusion)

Notation and logic

Uncertainty and probability
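A syllogism such as "All men are mortal; Socrates is a man; therefore Socrates is mortal" can be mechanised with a tiny forward-chaining loop. The fact/rule encoding below is an assumption made for illustration, not a standard representation.

```python
# Facts are (predicate, subject) pairs.
# Rules are (premise, conclusion) pairs read as "All <premise> are <conclusion>".
facts = {("man", "Socrates")}
rules = [("man", "mortal")]  # All men are mortal

def forward_chain(facts, rules):
    """Apply the rules repeatedly until no new facts can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            for pred, subj in list(derived):  # iterate over a copy while adding
                if pred == premise and (conclusion, subj) not in derived:
                    derived.add((conclusion, subj))
                    changed = True
    return derived

print(("mortal", "Socrates") in forward_chain(facts, rules))  # True
```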

Systems Acting Rationally

Rational behavior and actions to achieve the best outcome

May or may not involve rational thinking (e.g., reflexes)

Subsumes the other approaches

Lends itself well to scientific development

AI standard model

Standard model often makes unrealistic assumptions

Objectives and outcomes are fully specified and understood

Specifications align with human values and objectives

Possible that the specified objectives are neither complete nor entirely correct

Value alignment problem with beneficial machines

Ensuring agreement between human and agent objectives

EX. You ask ChatGPT to "find me the best hotel in South Korea", but does it really do this well or properly? There have been news reports of AI agents going as far as completing the payment transaction. Make sure that your objectives align with the agent's.

Self-driving car example

- Objective: reach destination safely

- But what about tradeoffs between trip progress and injury risk? Or interactions with other agents/drivers?

- Define the tradeoffs that you are willing to accept
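One common way to make such tradeoffs explicit is a weighted objective. The sketch assumes a hypothetical `risk_weight` that a human chooses to express how much extra travel time is acceptable to reduce injury risk; the routes and numbers are made up.

```python
def trip_cost(minutes, injury_risk, risk_weight):
    """Lower is better. risk_weight: minutes of delay we accept per unit of injury risk."""
    return minutes + risk_weight * injury_risk

# Hypothetical routes: (travel time in minutes, estimated injury risk)
routes = {"highway": (20, 0.004), "side_streets": (28, 0.001)}

def best_route(routes, risk_weight):
    """Pick the route with the lowest combined cost under the chosen tradeoff."""
    return min(routes, key=lambda r: trip_cost(*routes[r], risk_weight))

print(best_route(routes, risk_weight=1000))  # highway
print(best_route(routes, risk_weight=5000))  # side_streets
```

The point is not the numbers but that the human, not the agent, has to choose `risk_weight`; a badly chosen weight is exactly the kind of misspecified objective the value alignment problem warns about.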

Value alignment problem

Ensuring agreement between human and agent objectives.

AI Definition

Artificial Intelligence is the study of systems that act rationally. It is the study and construction of agents that "do the right thing".

Intelligent Agents

Anything that perceives and acts on its environment

Whatever the agent does, affects the environment

What the agent perceives comes from the environment

A rational agent carries out an action with the _______ after _______ .

A rational agent carries out an action with the best outcome after considering past and current percepts

Percepts

Content of what the agent perceives of its environment.

Agent Function

a = F(p)

F maps percepts to actions, F : P → A

p = current percept

P = set of all percepts

a = action carried out

A = set of all actions

F = agent function
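For a small world, F can literally be a lookup table from percepts to actions. This sketch assumes the two-room vacuum world (rooms "A" and "B") with percepts of the form (location, status); the names are illustrative.

```python
# F : P -> A written out as a table (assumed two-room vacuum world)
AGENT_FUNCTION = {
    ("A", "Dirty"): "Suck",
    ("A", "Clean"): "Right",
    ("B", "Dirty"): "Suck",
    ("B", "Clean"): "Left",
}

def agent(percept):
    """a = F(p): look up the action for the current percept p."""
    return AGENT_FUNCTION[percept]

print(agent(("A", "Dirty")))  # Suck
print(agent(("B", "Clean")))  # Left
```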

True or False: An action doesn't depend on all percepts observed so far, just the current percept

False

May depend on all percepts observed so far, not just the current percept

Agent Function but considering all percepts

a_k = F(p_0, p_1, p_2, …, p_k)

(p_0, p_1, p_2, …, p_k) = sequence of percepts observed to date

a_k = resulting action carried out
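When the action depends on the whole percept sequence, a table-driven agent can key its table on the sequence observed so far. In this hypothetical vacuum-world table, the agent stops in room B only because it remembers that room A was already clean; a rule based on the current percept alone could not tell.

```python
class TableDrivenAgent:
    """a_k = F(p_0, ..., p_k): the action depends on the whole percept sequence."""

    def __init__(self, table):
        self.table = table    # maps a tuple of percepts -> action
        self.percepts = []    # percept sequence observed to date

    def __call__(self, percept):
        self.percepts.append(percept)
        # Sequences missing from the table fall back to "NoOp" (an assumption of this sketch)
        return self.table.get(tuple(self.percepts), "NoOp")

table = {
    (("A", "Clean"),): "Right",
    (("A", "Clean"), ("B", "Dirty")): "Suck",
    (("A", "Clean"), ("B", "Dirty"), ("B", "Clean")): "NoOp",  # both rooms seen clean
}
agent = TableDrivenAgent(table)
print(agent(("A", "Clean")))  # Right
print(agent(("B", "Dirty")))  # Suck
print(agent(("B", "Clean")))  # NoOp
```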

Structure of Agents

Agent = architecture + program

Architecture

Device with sensors and actuators

EX. robotic car, a camera, a PC.

Program

Implements the agent function on the architecture

How do we specify the task environment?

PEAS

P - Performance Measure

E - Environment

A - Actuators

S - Sensors

Performance Measure

Captures the agent's aspirations (what counts as doing well)

EX. for a taxi driver: safe, fast, legal, comfortable trip, max profit, min impact on other road users

Environment

Context, restrictions

EX. for a taxi driver: roads, other traffic, police, pedestrians, customer, weather

Actuators

Indicate what the agent can carry out

EX. for a taxi driver: accelerate, steering, brake, signal, horn, display, speech

Sensors

Indicate what the agent can perceive

EX. for a taxi driver: camera, radar, speedometer, GPS, engine sensors, accelerometer, microphones, touchscreen
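A PEAS description is essentially structured data, so it can be written down as a record. This sketch uses a Python dataclass (the class and field names are assumptions) filled in with the taxi-driver example from these cards.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class PEAS:
    """Task-environment specification: Performance measure, Environment, Actuators, Sensors."""
    performance: list[str]
    environment: list[str]
    actuators: list[str]
    sensors: list[str]

taxi = PEAS(
    performance=["safe", "fast", "legal", "comfortable trip", "max profit",
                 "min impact on other road users"],
    environment=["roads", "other traffic", "police", "pedestrians", "customer", "weather"],
    actuators=["accelerate", "steering", "brake", "signal", "horn", "display", "speech"],
    sensors=["camera", "radar", "speedometer", "GPS", "engine sensors",
             "accelerometer", "microphones", "touchscreen"],
)
print(taxi.sensors)
```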

When specifying actuators and sensors, what distinction can be useful to make?

Distinguish the devices from their functions

The actions the actuators can carry out, or the percepts the sensors can detect

Part-Picking Robot Actuator and Sensor

Actuator: jointed arm and hand; actions: pick up or drop part

Sensor: camera; percepts: (detect) type and position of a part

Properties of Environments

Fully VS partially observable

Single-agent VS multi-agent

Deterministic VS stochastic

Episodic VS sequential

Static VS dynamic

Discrete VS continuous

Known VS unknown

Fully VS Partially observable

Does the agent have access to all the relevant information about the environment?

If yes, fully observable; if not, partially observable

EX. Solitaire is partially observable

EX. Chess is fully observable

Single-agent VS Multi-agent

Do you only have 1 agent tackling the problem or are there more?

And if multi-agent, are they competitive or cooperative?

EX. Is there only 1 automated driver or are there more?

Deterministic VS Stochastic

Is the outcome of an action predictable?

Deterministic: next state is completely determined by the current state and action

Stochastic: there's a random element

EX. TicTacToe is deterministic

EX. Poker is stochastic
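The difference shows up in the transition function. In this sketch (hypothetical helper names and a simplified deck), a tic-tac-toe move always yields the same next board, while a poker-style draw depends on a random number generator.

```python
import random

EMPTY_BOARD = (" ",) * 9

def tictactoe_step(board, square, mark="X"):
    """Deterministic: the next board is fully determined by the current board and the move."""
    cells = list(board)
    cells[square] = mark
    return tuple(cells)

def poker_draw(hand, rng, deck=("A", "K", "Q", "J", "10")):
    """Stochastic: the next hand depends on which card happens to be drawn."""
    return hand + (rng.choice(deck),)

# Same state + same action -> always the same next state
print(tictactoe_step(EMPTY_BOARD, 4) == tictactoe_step(EMPTY_BOARD, 4))  # True

# Same state + same action ("draw") -> the next state can differ from run to run
rng = random.Random()
print(poker_draw(("A",), rng))
```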

Episodic VS Sequential

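Episodic: experience is divided into atomic episodes with distinct goals/objectives; actions in one episode are independent of actions in other episodes

Sequential: the current action is influenced by prior actions and can affect future ones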

Static VS Dynamic

Before you make the next action, will the environment change?

Static: environment may change only after an agent's action

Dynamic: environment can change independently of the agent, even while the agent is deliberating

EX. Crossword puzzles are static since the environment does not change while you deliberate

EX. taxi driver is dynamic since a lot of things can happen and the environment can change even as you're deliberating

Discrete VS Continuous

Measurements of state, time, percepts, and actions

Discrete: Environment has a finite number of clearly defined states, percepts, or actions.

You can differentiate between state 1 and state 2

Continuous: Environment involves measurements that vary smoothly over a range (state, time, percepts, or actions).

No sharp separation between states

EX. driving a taxi is continuous

Known VS Unknown

Known if all the rules of the environment (the outcomes of all actions) are known to the agent; otherwise unknown

EX. Solitaire is known

Crossword Puzzle

Fully observable

Single agent

Deterministic

Sequential

Static

Discrete

Image Analysis

Fully observable

Single agent

Deterministic

Episodic

Semi-static / semi-dynamic

Continuous

English Tutor

Partially observable

Multi-agent

Stochastic

Sequential

Dynamic

Discrete

Medical Diagnosis

Partially observable

Single agent

Stochastic

Episodic

Dynamic

Continuous

Chess with Clock

Fully observable

Multi-agent

Deterministic

Sequential

Semi-static / semi-dynamic

Discrete

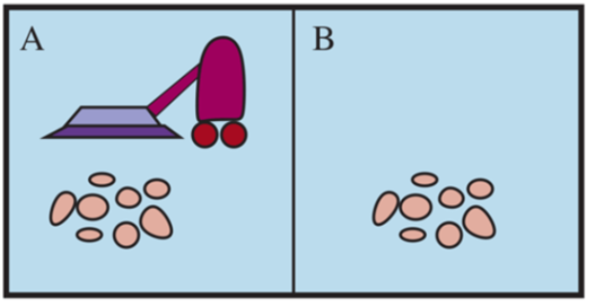

Vacuum world: What is the performance measure?

Clean both rooms in the fewest steps
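This performance measure can be checked mechanically: run a plan and report whether both rooms end clean and how many steps it took. The room names "A" (left) and "B" (right) and the action names are assumptions following the usual two-room vacuum world.

```python
def performance(initial_dirt, start, actions):
    """Return (both_rooms_clean, steps_taken) for a two-room vacuum world.

    initial_dirt: dict mapping room -> True if dirty; start: "A" or "B".
    """
    dirt = dict(initial_dirt)
    loc = start
    for action in actions:
        if action == "Suck":
            dirt[loc] = False   # sucking cleans the current room
        elif action == "Left":
            loc = "A"
        elif action == "Right":
            loc = "B"
    return (not any(dirt.values()), len(actions))

# Two candidate plans, starting in A with both rooms dirty
plan_short = ["Suck", "Right", "Suck"]
plan_long = ["Right", "Left", "Suck", "Right", "Suck"]
print(performance({"A": True, "B": True}, "A", plan_short))  # (True, 3)
print(performance({"A": True, "B": True}, "A", plan_long))   # (True, 5)
```

Both plans clean both rooms, but the shorter one scores better under "fewest steps".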

Vacuum world: Properties

Fully observable

Single Agent

Deterministic

Sequential (if room is dirty, move left or right)

Static

Discrete

Known

State Management

The process of determining the next state and action based on the current state and percept.
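For a model-based reflex agent, state management can be sketched as an update function that combines the previous internal state, the last action, and the new percept. This example assumes the two-room vacuum world and a simple model in which "Suck" always cleans the current room; all names are hypothetical.

```python
def update_state(state, last_action, percept):
    """Next internal state from the previous state, the last action and the new percept."""
    location, status = percept
    new_state = dict(state)
    previous_location = state.get("location")
    if last_action == "Suck" and previous_location is not None:
        new_state[previous_location] = "Clean"  # model: sucking cleans the room we were in
    new_state["location"] = location
    new_state[location] = status                # a fresh percept overrides the model's prediction
    return new_state

def choose_action(state):
    """Pick an action using the remembered state, not just the current percept."""
    here = state["location"]
    other = "B" if here == "A" else "A"
    if state.get(here) == "Dirty":
        return "Suck"
    if state.get(other) == "Clean":
        return "NoOp"                           # both rooms are known to be clean
    return "Right" if here == "A" else "Left"

state = {"location": None}
state = update_state(state, None, ("A", "Dirty"))
print(choose_action(state))  # Suck
state = update_state(state, "Suck", ("A", "Clean"))
print(choose_action(state))  # Right
state = update_state(state, "Right", ("B", "Clean"))
print(choose_action(state))  # NoOp
```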

Types of Agents

Reflex Agent

Reflex Agent with State (Model-based Reflex Agent)

Goal-based Agent

Utility-Based Agent

Learning Agent

Reflex Agent

Current percept → interpreted state → matching condition-action rule → act

Works correctly only if the environment is fully observable

Function: REFLEX-AGENT(percept) returns an action

Rules are stored and repeatedly checked

Reflex Agent Algorithm

state ← INTERPRET-INPUT(percept)

Convert the raw percept (EX. sensor reading) into an internal state (EX. "dirty")

rule ← RULE-MATCH(state, rules)

Look up the rule whose condition matches this state

action ← rule.ACTION

Take the action specified by the rule

return action

Output the action to the environment
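The reflex agent algorithm translates almost line for line into Python. This sketch assumes the two-room vacuum world, a raw percept of (location, dirt sensor reading), and a hand-written rule list; `interpret_input` and `rule_match` are illustrative helpers, not library functions.

```python
# Condition-action rules: (condition on the interpreted state, action), checked in order
RULES = [
    (lambda s: s["status"] == "Dirty", "Suck"),
    (lambda s: s["location"] == "A", "Right"),
    (lambda s: s["location"] == "B", "Left"),
]

def interpret_input(percept):
    """Convert a raw percept (location, dirt_sensor_reading) into an internal state."""
    location, dirt_level = percept
    return {"location": location, "status": "Dirty" if dirt_level > 0 else "Clean"}

def rule_match(state, rules):
    """Return the first rule whose condition matches the state."""
    for condition, action in rules:
        if condition(state):
            return condition, action
    raise ValueError("no rule matches the state")

def reflex_agent(percept):
    state = interpret_input(percept)   # state <- INTERPRET-INPUT(percept)
    rule = rule_match(state, RULES)    # rule <- RULE-MATCH(state, rules)
    action = rule[1]                   # action <- rule.ACTION
    return action                      # return action

print(reflex_agent(("A", 3)))  # Suck
print(reflex_agent(("A", 0)))  # Right
print(reflex_agent(("B", 0)))  # Left
```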