Linear Algebra Final: Terms and Practice


33 Terms

Vector

a matrix of size 1 x n or m x 1

s:= (s1,…, sn) (row vector)

or

x:=(x1,…,xm)T (column vector)

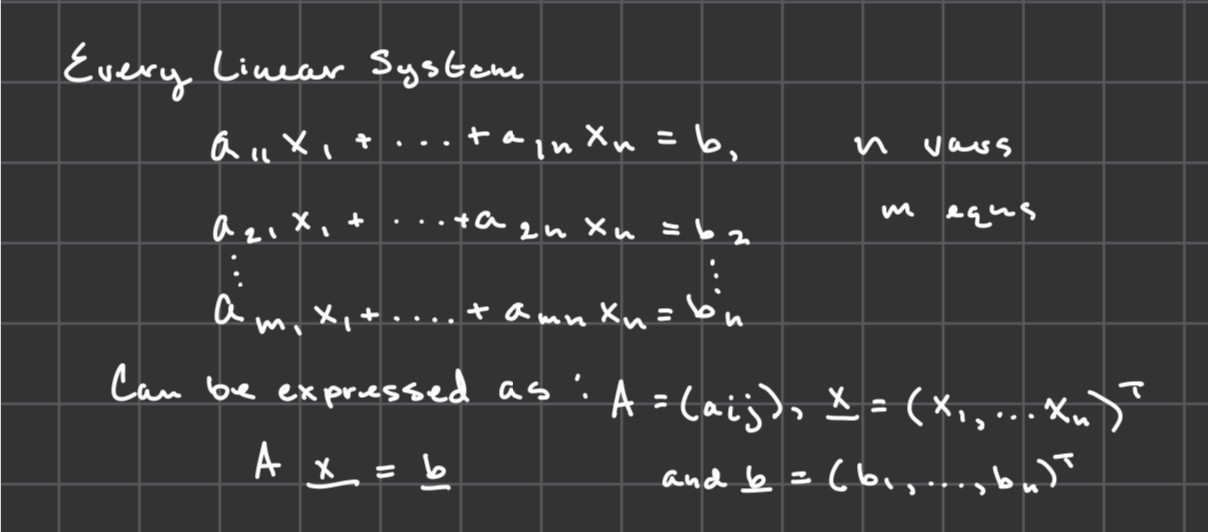

Matrix

an m x n matrix is an array of numbers:

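$$
A := \begin{pmatrix}
a_{11} & a_{12} & \cdots & a_{1n} \\
a_{21} & a_{22} & \cdots & a_{2n} \\
\vdots & \vdots & \ddots & \vdots \\
a_{m1} & a_{m2} & \cdots & a_{mn}
\end{pmatrix}
$$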

What can solution sets contain?

The solution set of a linear system is either empty (0 solutions), exactly 1 solution, or infinitely many solutions

Matrix product/Dot product

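If v = (v1,…, vn) and w = (w1,…,wn)T are vectors with the same number of entries, then the dot product v dot w := (v1w1 +…+vnwn) ∈R

For a matrix product AB to be defined, A must have the same number of columns as B has rows. Order matters: vw is a 1 x 1 matrix (the dot product), while wv is an n x n matrix, so vw and wv are not the same product.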
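A minimal Python sketch of why the order matters, assuming vectors are stored as plain lists. The helper names `dot` and `outer` are made up for this illustration.

```python
def dot(v, w):
    """Row vector times column vector: v1*w1 + ... + vn*wn (a single number)."""
    if len(v) != len(w):
        raise ValueError("vectors must have the same number of entries")
    return sum(vi * wi for vi, wi in zip(v, w))


def outer(w, v):
    """Column vector w (n x 1) times row vector v (1 x n): an n x n matrix."""
    return [[wi * vj for vj in v] for wi in w]


v = [1, 2, 3]
w = [4, 5, 6]
print(dot(v, w))    # 1*4 + 2*5 + 3*6 = 32
print(outer(w, v))  # [[4, 8, 12], [5, 10, 15], [6, 12, 18]]
```

Same two vectors, but one order gives a number and the other gives a 3 x 3 matrix.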

Row Operation Rules

Switch two rows

Scale a row by a non-zero number

Replace a row with the sum of itself and a multiple of another row

For homogeneous systems (Ax = 0)

-there is always at LEAST the trivial solution x = 0

-it is always consistent (the solution set is never empty)

To find pivots and free variables

-put into REF (row echelon form)

-each column with a pivot gives a pivot variable; each column of the coefficient matrix without a pivot gives a free variable

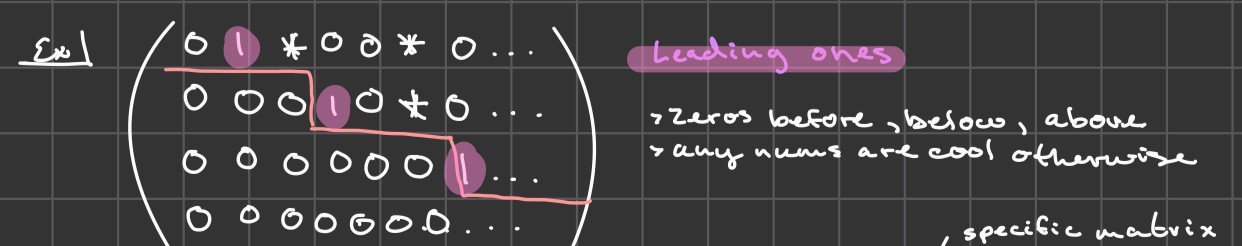

Reduced Row Echelon Form (RREF)

Any non-zero row comes before all zero rows

Any non-zero row has first non-zero entry 1 (a leading 1), with zeros above and below the leading 1

Leading ones must stagger to the right, creating a stair step

RREF U thm

Every matrix A can be put into RREF U by row operations, and moreover U is unique. Since row operations do not change the solution set, the solutions to Ax=0 and Ux=0 are the same.
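A minimal sketch of row reduction in Python, using only the three row operations. The function name `rref` and the list-of-rows layout are choices made for this example, and exact fractions are used so there is no rounding.

```python
from fractions import Fraction


def rref(matrix):
    """Return (U, pivot_cols): the RREF of matrix and the columns holding pivots."""
    A = [[Fraction(x) for x in row] for row in matrix]
    rows, cols = len(A), len(A[0])
    pivot_cols = []
    r = 0  # row where the next pivot will be placed
    for c in range(cols):
        # Look for a row at or below r with a non-zero entry in column c.
        p = next((i for i in range(r, rows) if A[i][c] != 0), None)
        if p is None:
            continue  # no pivot in this column
        A[r], A[p] = A[p], A[r]               # switch two rows
        pivot = A[r][c]
        A[r] = [x / pivot for x in A[r]]      # scale the row so the pivot is 1
        for i in range(rows):
            if i != r and A[i][c] != 0:
                factor = A[i][c]
                # replace row i with row i minus factor times the pivot row
                A[i] = [a - factor * b for a, b in zip(A[i], A[r])]
        pivot_cols.append(c)
        r += 1
        if r == rows:
            break
    return A, pivot_cols


A = [[1, 2, 1],
     [2, 4, 0],
     [3, 6, 1]]
U, pivots = rref(A)
free = [c for c in range(len(A[0])) if c not in pivots]
print(pivots)  # [0, 2]
print(free)    # [1]
```

Here U has rows (1 2 0), (0 0 1), (0 0 0): columns 0 and 2 hold pivots and column 1 has none (columns counted from 0).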

For inhomogeneous systems such as Ax=b, b≠0, there are 2 ways to solve

Augmented matrix

Transfer Principle

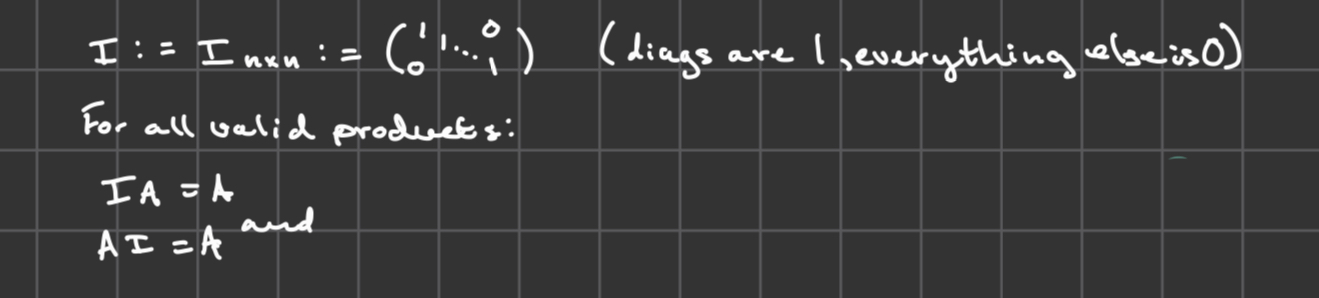

A square matrix A is invertible if there is a matrix A⁻¹ such that:

A⁻¹A = I

AA⁻¹ = I

If A = (a b, c d) then,

A⁻¹ = (1/(ad-cb))(d -b, -c a)

A is not invertible if ad-cb=0

If A is invertible, any system Ax=b has exactly one solution: x = Ix = A⁻¹Ax = A⁻¹b.

So, a square matrix A is invertible iff its RREF is U=I.
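A short Python sketch of the 2 x 2 inverse formula, then using it to solve Ax = b. The names `inverse_2x2` and `matvec` are made up for this example, and the matrix and right-hand side are arbitrary sample values.

```python
from fractions import Fraction


def inverse_2x2(A):
    """Inverse of A = (a b, c d) via (1/(ad-cb)) * (d -b, -c a)."""
    (a, b), (c, d) = A
    det = Fraction(a * d - c * b)
    if det == 0:
        raise ValueError("ad-cb = 0, so A is not invertible")
    return [[d / det, -b / det], [-c / det, a / det]]


def matvec(A, x):
    """Matrix times column vector."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


A = [[2, 1],
     [5, 3]]
b = [1, 2]

A_inv = inverse_2x2(A)   # ad-cb = 2*3 - 5*1 = 1
x = matvec(A_inv, b)     # x = A^-1 b
print(x == [1, -1])      # True
print(matvec(A, x) == b) # True: x really solves Ax = b
```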

Transfer Principle

Let A be a matrix and Sh := {sh : Ash = 0} the set of solutions to Ax = 0. For any b with a particular solution sp to Ax = b, i.e. Asp = b, the solution set of Ax = b is {sp + sh : sh ∈ Sh}

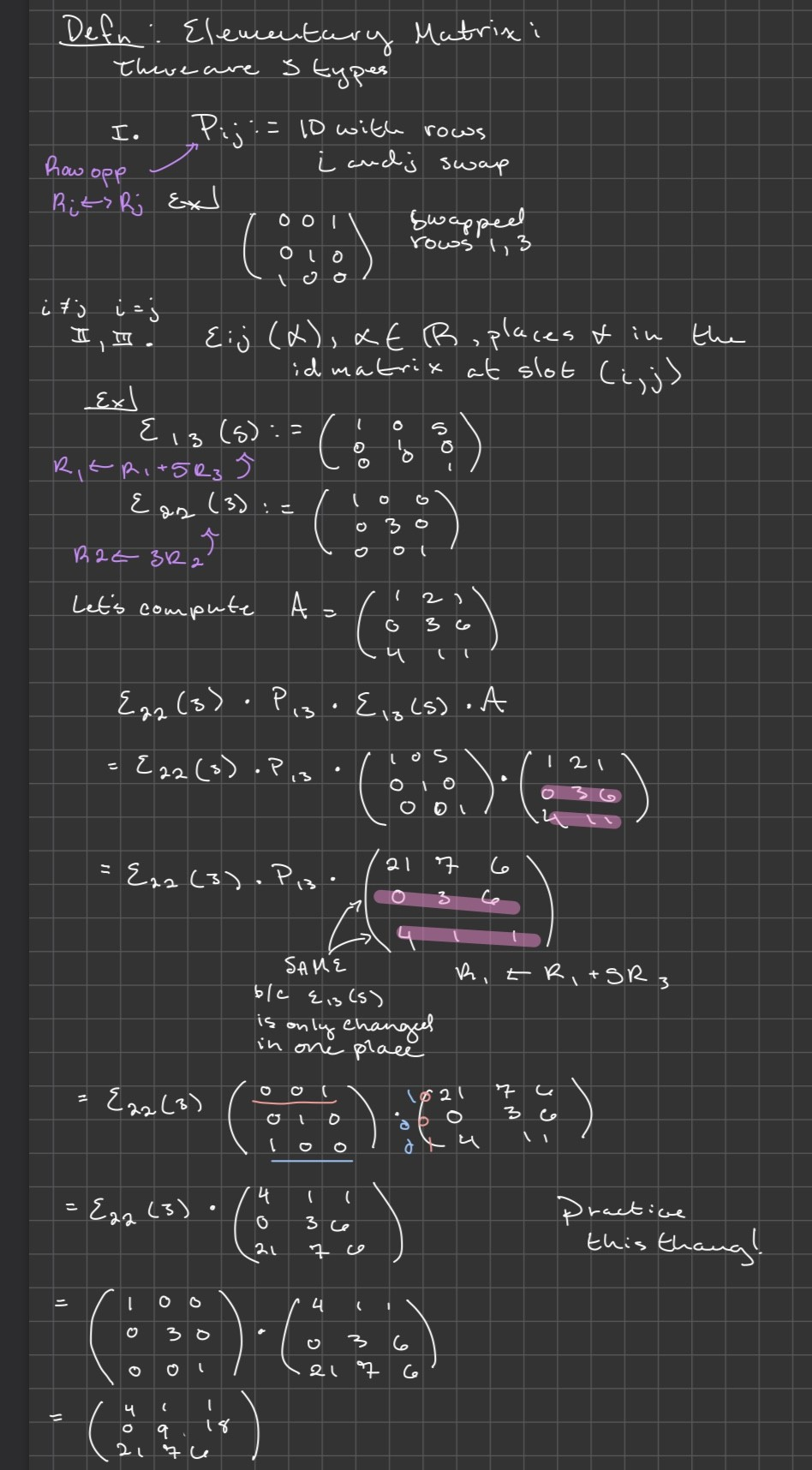
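A small Python check of the Transfer Principle on one sample system. The matrix, b, and the chosen sp and sh are illustrative values, not taken from the cards.

```python
def matvec(A, x):
    """Matrix times column vector."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


A = [[1, 2],
     [2, 4]]
b = [3, 6]

s_p = [3, 0]    # particular solution: A s_p = b
s_h = [-2, 1]   # homogeneous solution: A s_h = 0 (so is every multiple t * s_h)

# Build s_p + t * s_h for a few values of t and check each one solves Ax = b.
candidates = [[p + t * h for p, h in zip(s_p, s_h)] for t in range(-2, 3)]
all_solve = all(matvec(A, x) == b for x in candidates)
print(all_solve)  # True
```

Every choice of t gives another solution, which is why this system has infinitely many.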

Elementary matrices: 3 types

1. Pij: the identity matrix with rows i and j swapped

2. (i≠j) Eij(α), α∈R: the identity matrix with α placed in slot (i,j)

3. (i=j) Eii(α), α∈R, α≠0: the identity matrix with α placed in slot (i,i)

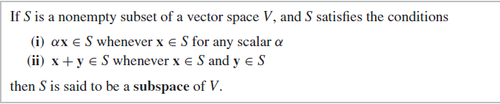

Subspace of a vector space

A subset is a subspace if it contains the zero vector and is closed under addition and scalar multiplication. Use generic elements to prove that it is one, and specific elements (witnesses) to prove that it is not! (If any of the defining conditions are non-linear, it is more than likely not a subspace)

Linear Combinations

Fix v1, …, vn in Rd.

A linear combination of v1, …, vn is a vector of the form c1v1+ …+ cnvn for c1,…,cn ∈ R.

Defn Spanning set

For β := {v1,…,vn} ⊆ Rd, a finite set, we set

Span(β) := {c1v1+ …+ cnvn : c1,…, cn ∈ R} ⊆ Rd, the set of all linear combinations of v1,…, vn.

Span(β) is always a subspace

Every subspace V ⊆ Rd is of the form V = Span(β) for some finite set β

We call β a spanning set for V.

Null space

Null(A):={x ∈ Rn: Ax=0} ⊆Rn

is the solution set of the homogeneous system Ax = 0, called the Null Space of A.

Null(A) is always a subspace

A0 = 0, so 0 ∈ Null(A). Let s1, s2 ∈ Null(A) be generic, and α ∈ R.

As1 = 0 and As2 = 0, so A(s1 + s2) = As1 + As2 = 0.

A(αs1) = α(As1) = α * 0 = 0.

Linear independence and Basis Definition

Fix β = {v1,…,vn} ⊆ Rd.

We call β linearly independent provided:

c1v1+ …+ cnvn=0 => c1=0, c2=0,..., cn=0

If β is not linearly independent, we say β is linearly dependent.

We call a set β = {b1,…,bn} a basis for a subspace V if:

1. β is a spanning set for V

for every b ∈ V there are c1,…,cn ∈ R such that c1b1+ …+ cnbn = b

iff Ac=b, i.e. Ax=b has a solution

2. β is linearly independent

iff Ax = 0 has only the trivial solution x = 0

Here A = (—b1—,…, —bn—)T is the matrix whose columns are b1,…,bn.

dim(V) is the size of any basis β ⊆ V

dim(Rd) = d,

as Rd = Span{e1,…, ed}.

Thus any set β ⊆ Rd with #β>d cannot be linearly independent, and any set with #β<d cannot be a spanning set for Rd.

Moreover, if #β=d, then β is linearly independent iff β is a spanning set for Rd.
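One way to test linear independence by machine is to count pivots: a non-empty set of n vectors is linearly independent exactly when the matrix built from them has rank n. The sketch below assumes this rank test; the names `rank` and `is_linearly_independent` are made up for the example.

```python
from fractions import Fraction


def rank(rows):
    """Number of pivots found by Gaussian elimination on a list of rows."""
    A = [[Fraction(x) for x in row] for row in rows]
    r = 0  # number of pivots found so far
    for c in range(len(A[0])):
        p = next((i for i in range(r, len(A)) if A[i][c] != 0), None)
        if p is None:
            continue
        A[r], A[p] = A[p], A[r]
        for i in range(r + 1, len(A)):
            factor = A[i][c] / A[r][c]
            A[i] = [a - factor * b for a, b in zip(A[i], A[r])]
        r += 1
        if r == len(A):
            break
    return r


def is_linearly_independent(vectors):
    """True when no vector is a combination of the others (rank = number of vectors)."""
    return rank(vectors) == len(vectors)


print(is_linearly_independent([[1, 0, 0], [0, 1, 0], [0, 0, 1]]))  # True
print(is_linearly_independent([[1, 0, 0], [0, 1, 0], [1, 1, 0]]))  # False: v3 = v1 + v2
print(is_linearly_independent([[1, 0], [0, 1], [1, 1]]))           # False: 3 vectors in R2
```

The last call matches the counting rule: more than d vectors in Rd can never be linearly independent.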

Three important subspaces attached to an m x n matrix A:

Null Space

Null(A) := {x ∈ Rn: Ax=0} ⊆ Rn

dimNull(A):= nullity(A) [num of free vars!!]

Row Space/Rank

Row(A):= Span(rows of A) ⊆ Rn

Rank(A) = dimRow(A)

Column Space

Col(A) := Span(cols of A) ⊆ Rm

Rank- Nullity Theorem

For A, an m x n matrix,

Rank(A) + nullity(A) = n = number of columns
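A worked check of the theorem on one sample 3 x 3 matrix (the matrix is chosen only for illustration):

```latex
A = \begin{pmatrix} 1 & 2 & 1 \\ 2 & 4 & 0 \\ 3 & 6 & 1 \end{pmatrix}
\quad\xrightarrow{\text{row operations}}\quad
U = \begin{pmatrix} 1 & 2 & 0 \\ 0 & 0 & 1 \\ 0 & 0 & 0 \end{pmatrix}

% Pivots sit in columns 1 and 3, so Rank(A) = 2.
% Column 2 has no pivot, so x_2 is the only free variable and nullity(A) = 1.
\operatorname{Null}(A) = \left\{ t \begin{pmatrix} -2 \\ 1 \\ 0 \end{pmatrix} : t \in \mathbb{R} \right\}

\operatorname{Rank}(A) + \operatorname{nullity}(A) = 2 + 1 = 3 = n
```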

β coordinate of v definition

if V is a subspace and β = {b1,…,bn} is a basis for V,

every vector v ∈ V can be written as a linear combination of b1,…,bn in exactly one way, with unique coefficients (c1, c2,…,cn)T such that

v= b1c1+…+bncn, we call

[v]β:= (c1, c2,…,cn)T the β coordinates of v.

Change of basis: is a matrix

Fix V a vector space of dimension d with bases β, β’.

For any vector v ∈ V, we can calculate

[v]β ∈Rd

[v]β’ ∈Rd.

There is an invertible d x d matrix(change of basis matrix):

Aβ→β’ such that

Aβ→β’[v]β=[v]β’.

(therefore [v]β = (Aβ→β’)⁻¹[v]β’)

So

(Aβ→β’)⁻¹ = Aβ’→β.
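A small Python sketch for V = R2. It assumes the standard recipe that, when B and B' are the matrices whose columns are the vectors of β and β’, the change of basis matrix is B'⁻¹B. That recipe is not stated on the card, and the two bases below are sample values.

```python
from fractions import Fraction


def matmul(X, Y):
    """Matrix product XY for matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def inv2(M):
    """Inverse of a 2 x 2 matrix (a b, c d)."""
    (a, b), (c, d) = M
    det = Fraction(a * d - c * b)
    if det == 0:
        raise ValueError("matrix is not invertible")
    return [[d / det, -b / det], [-c / det, a / det]]


B = [[1, 1],
     [0, 1]]            # columns: b1 = (1, 0), b2 = (1, 1)
B_prime = [[2, 1],
           [1, 1]]      # columns: b1' = (2, 1), b2' = (1, 1)

A_fwd = matmul(inv2(B_prime), B)    # takes beta coordinates to beta' coordinates
A_back = matmul(inv2(B), B_prime)   # takes beta' coordinates to beta coordinates

v_beta = [[2], [3]]                 # v = 2*b1 + 3*b2 = (5, 3)
v_beta_prime = matmul(A_fwd, v_beta)

# Both coordinate vectors describe the same v.
print(matmul(B, v_beta) == matmul(B_prime, v_beta_prime))  # True
# The two change of basis matrices are inverses of each other.
print(matmul(A_fwd, A_back) == [[1, 0], [0, 1]])           # True
```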

Injective

β is linearly independent

Surjective

β is a spanning set for V

Bijective

Injective and surjective

Linear Transformation definition

Let V, W be two vector spaces. A Linear Transformation is a function L: V→W such that

L(v1 + v2) = L(v1) + L(v2)

L(αv1) = αL(v1)

For all α ∈ R, v1, v2 ∈ V.

Linear Operator definition

If V = W, we call a linear transformation L: V→V a linear operator

Kernel of L and Image of L

2 important subspaces attached to a linear transformation

Ker L := {v ∈ V: L(v) = 0} ⊆ V

Information lost by L

Im L := {w ∈ W: there is v ∈ V, L(v) = w} ⊆ W

What L touches

Correspondence for any linear transformation: L: V →W:

Pick a basis βv for V, dv := dimV, and a basis βw for W, dw := dimW,

then we have the correspondences:

[—]βv : V → Rdv, v |→ [v]βv

[—]βw : W → Rdw, w |→ [w]βw.

Under these identifications, L corresponds to multiplication by the matrix [L]βvβw, as

[L(v)]βw = [L]βvβw * [v]βv.

So, this identifies:

kerL ⊆ V with Null([L]βvβw) ⊆ Rdv

and

imL ⊆ W with Col([L]βvβw) ⊆ Rdw

By definition L is surjective iff imL = W. L is injective iff kerL = {0}, because:

L(v1) = L(v2) iff L(v1) - L(v2) = L(v1 - v2) = 0, i.e. iff v1 - v2 ∈ kerL.

For each L we obtain a number, vol(L)

Systematic process to find

vol(L) from [L]βvβw. This is called the determinant.

Thm: for L : V → W linear transformation:

|det [L]βvβw| = vol(L) for any choice of basis.

![image 6](https://knowt-user-attachments.s3.amazonaws.com/6d0d9ad6-6fdd-434f-94da-e3c8a54254fe.png)
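A minimal 2 x 2 illustration in Python. It assumes vol(L) means the factor by which L scales area, which the card does not spell out. It maps the unit square through a sample matrix and compares the area of the image with |det|. The helper names are made up for this example.

```python
def det2(M):
    """Determinant of a 2 x 2 matrix (a b, c d): ad - cb."""
    return M[0][0] * M[1][1] - M[1][0] * M[0][1]


def apply(M, point):
    """Apply the matrix M to a point (x, y)."""
    x, y = point
    return (M[0][0] * x + M[0][1] * y, M[1][0] * x + M[1][1] * y)


def polygon_area(points):
    """Area of a polygon from its vertices in order (shoelace formula)."""
    total = 0
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2


M = [[2, 1],
     [1, 3]]
unit_square = [(0, 0), (1, 0), (1, 1), (0, 1)]   # area 1
image = [apply(M, p) for p in unit_square]       # a parallelogram

print(polygon_area(image))  # 5.0
print(abs(det2(M)))         # 5
```

The unit square has area 1 and its image has area 5, matching |det M| = |2*3 - 1*1| = 5.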