Key NOSE2 Concepts

Key concepts as listed in the NOSE2 revision lecture

Last updated 2:10 PM on 12/12/22

101 Terms

1

what are the OSI layers?

* application

* presentation

* session

* transport

* network

* data link

* physical

2

physical layer focus

transmission of raw data bits

3

physical layer service for data link layer

sequence of bits

4

baseband data encoding

encoding performed by varying the voltage in an electrical cable or the intensity of light in an optical fibre (physical layer)

5

baseband data encoding scheme examples

* NRZ

* NRZI

* Manchester
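A minimal Python sketch of the three encodings, assuming signal levels are modelled as 1 (high) and 0 (low). Conventions differ between sources: this sketch assumes NRZI toggles the level on a 1, and uses the IEEE 802.3 Manchester convention (1 = low-to-high, 0 = high-to-low). The function names are illustrative.

```python
# Signal levels are modelled as 1 (high voltage / light on) and 0 (low / off).

def nrz(bits):
    """NRZ: each bit is sent directly as a signal level (1 = high, 0 = low)."""
    return list(bits)

def nrzi(bits, start_level=0):
    """NRZI (assumed convention): a 1 toggles the level, a 0 leaves it unchanged."""
    level = start_level
    out = []
    for b in bits:
        if b == 1:
            level ^= 1  # a transition signals a 1
        out.append(level)
    return out

def manchester(bits):
    """Manchester (IEEE 802.3 convention): each bit becomes two half-bit levels,
    1 = low-to-high and 0 = high-to-low, so every bit has a mid-bit transition."""
    out = []
    for b in bits:
        out.extend([0, 1] if b == 1 else [1, 0])
    return out

bits = [1, 0, 1, 1, 0]
print(nrz(bits))         # [1, 0, 1, 1, 0]
print(nrzi(bits))        # [1, 1, 0, 1, 1]
print(manchester(bits))  # [0, 1, 1, 0, 0, 1, 0, 1, 1, 0]
```

Manchester doubles the signalling rate but guarantees a transition in every bit, which helps the receiver recover the clock; NRZI still has no transitions during long runs of 0s.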

6

carrier modulation

* carrier wave applied to channel at frequency C

* signal modulated onto the carrier

* allows multiple signals on a single channel

* carriers are spaced further apart than the bandwidth, H, of each signal

* physical layer

7

maximum transmission rate of a channel

* depends on the channel’s bandwidth

* subject to noise that corrupts the signal

* can measure channel’s signal power S, and noise floor N, and compute its signal-to-noise ratio (SNR)

* maximum transmission rate grows logarithmically with the SNR

* physical layer
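The logarithmic relationship is the Shannon-Hartley limit, R_max = H log2(1 + S/N), with H the bandwidth in Hz and S/N a power ratio (not dB). A small sketch; the 3 kHz / 30 dB channel is a made-up example and the function names are illustrative:

```python
import math

def snr_from_db(snr_db):
    """Convert an SNR given in decibels to a plain power ratio S/N."""
    return 10 ** (snr_db / 10)

def max_rate(bandwidth_hz, snr_ratio):
    """Shannon-Hartley limit in bits per second: H * log2(1 + S/N)."""
    return bandwidth_hz * math.log2(1 + snr_ratio)

# Hypothetical channel: 3 kHz bandwidth, 30 dB SNR (S/N = 1000).
rate = max_rate(3000, snr_from_db(30))
print(f"about {rate / 1000:.1f} kbit/s")
```

Because of the logarithm, doubling S/N only adds roughly H extra bits per second once the SNR is large.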

8

data link layer focus

arbitrate access to the physical layer

9

data link layer service for network layer

structured communication (addressing, packets)

10

addressing

* physical links can be point-to-point or multi-access

* host addresses may be link-local or global scope

* sufficient to be unique only amongst devices connected to a link

* many data link layer protocols use globally unique addresses

11

framing

* physical layer provides unreliable raw bit stream

* data link layer corrects this

* break the raw bit stream into frames

* transmit and repair individual frames

* limit scope of any transmission errors

12

error detection and correction

* noise and interference at physical layer can cause bit errors

* error detecting codes can be extended to also correct errors

* transmit error correcting code as additional data within each frame

* data link layer

13

error detection and correction examples

* parity codes

* internet checksum
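A sketch of both codes in Python. The checksum follows the 16-bit one's-complement algorithm described in RFC 1071; the function names are illustrative.

```python
def even_parity_bit(data: bytes) -> int:
    """Return the parity bit that makes the total number of 1 bits even."""
    ones = sum(bin(byte).count("1") for byte in data)
    return ones % 2

def internet_checksum(data: bytes) -> int:
    """16-bit one's-complement checksum (RFC 1071 style)."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length data with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]     # add 16-bit big-endian words
        total = (total & 0xFFFF) + (total >> 16)  # fold any carry back in
    return ~total & 0xFFFF

msg = b"hello!"
csum = internet_checksum(msg)
# Receiver check: the checksum over data plus transmitted checksum comes out as 0.
print(internet_checksum(msg + csum.to_bytes(2, "big")))  # 0
```

A single parity bit detects any odd number of flipped bits but misses an even number; neither code on its own can correct errors.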

14

data link synchronisation

* how to detect the start of messages

* leave gaps between frames

* problem - physical layer doesn’t guarantee timing

* precede each frame with a length field

* add a special code to beginning of frame
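A sketch of the length-field approach, assuming a hypothetical 2-byte big-endian length prefix (real protocols choose their own field sizes); `frame` and `deframe` are illustrative names:

```python
import struct

def frame(payload: bytes) -> bytes:
    """Prefix the payload with its length as a 2-byte big-endian field."""
    return struct.pack("!H", len(payload)) + payload

def deframe(stream: bytes) -> list:
    """Split a received byte stream back into frames using the length fields."""
    frames, pos = [], 0
    while pos + 2 <= len(stream):
        (length,) = struct.unpack_from("!H", stream, pos)
        start = pos + 2
        if start + length > len(stream):
            break  # incomplete frame: wait for more data
        frames.append(stream[start:start + length])
        pos = start + length
    return frames

stream = frame(b"abc") + frame(b"hello")
print(deframe(stream))  # [b'abc', b'hello']
```

If a length field is itself corrupted, the receiver loses track of where later frames start, which is one reason to also mark frame starts with a special code.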

15

contention based MAC

* contention based means multiple hosts share channel in a way that can lead to collisions

* two stage access to channel

* detect that a collision is occurring/will occur

* send if no collision or back off and/or retransmit data according to some algorithm to avoid/resolve collision

* data link layer

16

contention based MAC examples

* ALOHA network

* CSMA

* CSMA-CD
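As an example of a backoff algorithm, classic CSMA/CD Ethernet uses truncated binary exponential backoff: after the n-th collision a host waits a random number of slot times between 0 and 2^min(n, 10) - 1, and drops the frame after 16 failed attempts. A minimal sketch (slot timing itself is not modelled; the constant names are illustrative):

```python
import random

MAX_ATTEMPTS = 16   # classic Ethernet discards a frame after 16 failed attempts
BACKOFF_LIMIT = 10  # the exponent stops growing after 10 collisions

def backoff_slots(collisions: int) -> int:
    """Return how many slot times to wait after `collisions` collisions (>= 1)."""
    if collisions >= MAX_ATTEMPTS:
        raise RuntimeError("too many collisions: frame dropped")
    k = min(collisions, BACKOFF_LIMIT)
    return random.randint(0, 2 ** k - 1)  # randint includes both end points

print([backoff_slots(n) for n in (1, 2, 3)])
```

Randomising the wait makes it likely that two colliding hosts pick different slots, and doubling the range after each collision adapts to heavier load.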

17

network layer focus

responsible for end-to-end delivery of data

18

the internet

the globally interconnected networks running the internet protocol (IP)

19

IP service model

* just send - no need to set up a connection first

* network makes its best effort to deliver packets but provides no guarantees

* easy to run over any type of link layer

* network layer

20

IPv4 addressing

* every network interface on every host is intended to have a unique address

* 32 bits (eg. 130.209.241.197)

* significant problems due to lack of addresses
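A dotted-quad address is a 32-bit integer written as four 8-bit fields. A small sketch that packs the example address by hand and cross-checks it against Python's standard `ipaddress` module (the helper name is illustrative and does no input validation):

```python
import ipaddress

def dotted_quad_to_int(addr: str) -> int:
    """Pack the four 8-bit fields of a dotted-quad IPv4 address into one 32-bit integer."""
    value = 0
    for part in addr.split("."):
        value = (value << 8) | int(part)
    return value

addr = "130.209.241.197"
n = dotted_quad_to_int(addr)
assert n == int(ipaddress.IPv4Address(addr))  # cross-check with the standard library
print(n, 2 ** 32)  # the address as an integer, and the size of the whole IPv4 space
```

2**32 is about 4.3 billion addresses in total, fewer than one per person, which is the root of the shortage.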

21

considerations regarding IPv6 adoption

* will increase the size of the address space to allow more hosts on the network

* simplifies the protocol and makes high speed implementations easier

* straightforward to build applications that work with both IPv4 and IPv6

22

fragmentation

* link layer has a maximum packet size (MTU)

* IPv4 routers will fragment packets that are larger than the MTU
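A sketch of how an IPv4 datagram might be split, assuming a 20-byte header with no options. The fragment offset field counts 8-byte units, so every fragment except the last carries a multiple of 8 data bytes, and the more-fragments (MF) flag is set on all but the last. The function name is illustrative:

```python
IP_HEADER = 20  # bytes, assuming an IPv4 header with no options

def fragment(total_length: int, mtu: int):
    """Split an IPv4 datagram of `total_length` bytes (header included) for a link MTU.
    Returns (offset_in_8_byte_units, data_bytes, more_fragments) per fragment."""
    data = total_length - IP_HEADER
    max_data = (mtu - IP_HEADER) // 8 * 8  # fragment data must be a multiple of 8 bytes
    fragments, sent = [], 0
    while sent < data:
        size = min(max_data, data - sent)
        more = sent + size < data  # MF flag: set unless this is the last piece
        fragments.append((sent // 8, size, more))
        sent += size
    return fragments

print(fragment(4000, 1500))  # [(0, 1480, True), (185, 1480, True), (370, 1020, False)]
```

Each fragment also gets its own 20-byte header, so the three fragments above are 1500, 1500 and 1040 bytes on the wire.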

23

session layer focus

manages transport layer connections

24

inter-domain routing

find the best route to destination network

25

routing in the DFZ

* core networks are well connected and must know about every other network

* the default free zone where there is no default route

* routes are chosen based on policy, not necessarily the shortest path

* requires complete AS-level topology information

26

distance vector protocols

* each node maintains a vector containing the distance to every other node in the network

* forward packets along route with least distance to destination
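A minimal distance-vector sketch in Python: each node repeatedly applies the Bellman-Ford update using its neighbours' vectors until nothing changes. The function name, table layout and three-node topology are hypothetical.

```python
import math

def distance_vector(links):
    """Iterative distance-vector simulation.
    `links` maps each node to {neighbour: link_cost};
    returns {node: {destination: (cost, next_hop)}}."""
    nodes = list(links)
    table = {n: {n: (0, n)} for n in nodes}  # initially each node only knows itself
    changed = True
    while changed:
        changed = False
        for n in nodes:
            for nbr, cost in links[n].items():
                # Bellman-Ford update using the neighbour's advertised vector.
                for dest, (d, _) in table[nbr].items():
                    new = cost + d
                    if new < table[n].get(dest, (math.inf, None))[0]:
                        table[n][dest] = (new, nbr)
                        changed = True
    return table

links = {  # hypothetical topology: link costs between neighbours
    "A": {"B": 1, "C": 4},
    "B": {"A": 1, "C": 2},
    "C": {"A": 4, "B": 2},
}
table = distance_vector(links)
print(table["A"]["C"])  # (3, 'B'): reach C via B at cost 3
```

This simulation settles quickly because link costs never change; the slow convergence of distance vector routing shows up when links fail (the count-to-infinity problem).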

27

link state routing

* nodes know the link to their neighbours and the cost of using those links - the link state information

* reliably flood this information, giving every node a complete map of the network

* each node then directly calculates shortest path to every other node locally
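The local shortest-path calculation is usually Dijkstra's algorithm. A sketch over a complete network map, returning the distance and the first hop (the neighbour to forward to) for each destination; the graph format and function name are assumptions:

```python
import heapq

def shortest_paths(graph, source):
    """Dijkstra's algorithm over a link-state map.
    `graph` maps node -> {neighbour: cost}; returns {node: (distance, first_hop)}."""
    dist = {}
    heap = [(0, source, None)]
    while heap:
        d, node, first = heapq.heappop(heap)
        if node in dist:
            continue  # already settled with a shorter distance
        dist[node] = (d, first)
        for nbr, cost in graph[node].items():
            if nbr not in dist:
                # Leaving the source, the first hop is the neighbour itself.
                hop = nbr if node == source else first
                heapq.heappush(heap, (d + cost, nbr, hop))
    return dist

graph = {  # hypothetical link-state map
    "A": {"B": 1, "C": 4},
    "B": {"A": 1, "C": 2},
    "C": {"A": 4, "B": 2},
}
print(shortest_paths(graph, "A"))  # {'A': (0, None), 'B': (1, 'B'), 'C': (3, 'B')}
```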

28

distance vector routing advantages

* simple to implement

* routers only store the distance to each other node

29

distance vector routing disadvantages

suffers from slow convergence

30

link state routing advantages

faster convergence

31

link state routing disadvantages

* more complex

* requires each router to store complete network map

32

transport layer focus

* isolate upper layers from the network layer

* provide a useful, convenient and easy to use service

33

end-to-end principle

only put functions that are absolutely necessary within the network and leave everything else to end systems

34

transport layer framing

applications may wish to send structured data

* transport layer responsible for maintaining the boundaries

* transport must frame the original data if this is part of the service model

35

congestion control

* transport layer controls the application sending rate

* to match rate at which network layer can deliver data

36

UDP applications

* useful for applications that prefer timeliness to reliability

* voice-over-IP

* streaming video

* must be able to tolerate some loss of data

* must be able to adapt to congestion in the application layer

37

TCP applications

* useful for applications that require reliable data delivery and can tolerate some timing variations

* file transfer

* email

* instant messaging

38

TCP connection setup

* 3 way handshake

* the SYN and ACK flags in the TCP header signal connection progress

* initial packet has SYN bit set, includes a randomly chosen initial sequence number

* reply also has SYN bit set and a randomly chosen sequence number, and ACKnowledges the initial packet

* handshake completed by ACKnowledgement of second packet
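A toy model of the three segments (not a real TCP implementation; the dict layout and function name are made up). The SYN consumes one sequence number, so each side acknowledges the other's initial sequence number plus one, modulo 2^32:

```python
import random

def three_way_handshake():
    """Model the SYN / SYN-ACK / ACK exchange, returning the three segments as dicts."""
    client_isn = random.getrandbits(32)  # randomly chosen initial sequence numbers
    server_isn = random.getrandbits(32)
    syn = {"flags": {"SYN"}, "seq": client_isn}
    syn_ack = {"flags": {"SYN", "ACK"}, "seq": server_isn,
               "ack": (client_isn + 1) % 2**32}  # the SYN consumed one sequence number
    ack = {"flags": {"ACK"}, "seq": (client_isn + 1) % 2**32,
           "ack": (server_isn + 1) % 2**32}      # completes the handshake
    return [syn, syn_ack, ack]

for segment in three_way_handshake():
    print(sorted(segment["flags"]), segment["seq"], segment.get("ack"))
```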

39

loss detection

* triple duplicate ACK → some packets lost but later packets arriving

* timeout → send data but acknowledgements stop returning
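A sketch of triple-duplicate-ACK detection at the sender, assuming it sees a list of cumulative ACK numbers; the fourth identical ACK (the original plus three duplicates) triggers a retransmission. Timeouts would need a separate timer and are not modelled; the function name is illustrative:

```python
def fast_retransmit_points(acks):
    """Return the ACK numbers at which three duplicate ACKs were seen.
    `acks` is the sequence of cumulative ACK numbers received by the sender."""
    triggers = []
    last, dups = None, 0
    for a in acks:
        if a == last:
            dups += 1
            if dups == 3:
                triggers.append(a)  # data starting at `a` is presumed lost
        else:
            last, dups = a, 0
    return triggers

print(fast_retransmit_points([1000, 2000, 2000, 2000, 2000, 5000]))  # [2000]
```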

40

reordering of packets

* TCP will retransmit the missing data transparently to the application

* a recv() for missing data will block until it arrives

41

TCP congestion control

can implement congestion control at either the network or the transport layer

42

conservation of packets

* the network has a certain capacity

* when in equilibrium at that capacity send one packet for each acknowledgement received

43

additive increase multiplicative decrease (AIMD)

* start slowly, increase gradually to find equilibrium

* respond to congestion rapidly

* faster reduction than increase → stability
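A sketch of AIMD on a congestion window, assuming one event per round-trip time, an increase of one packet per RTT and halving on loss. The parameter names are illustrative, and real TCP also has slow start, which is ignored here:

```python
def aimd(events, cwnd=1.0, increase=1.0, decrease=0.5):
    """Trace a congestion window (in packets) under AIMD.
    `events` holds one entry per round-trip time: "ack" (no loss) or "loss"."""
    trace = []
    for event in events:
        if event == "loss":
            cwnd = max(1.0, cwnd * decrease)  # multiplicative decrease
        else:
            cwnd += increase                  # additive increase
        trace.append(cwnd)
    return trace

print(aimd(["ack"] * 4 + ["loss"] + ["ack"] * 2))
# [2.0, 3.0, 4.0, 5.0, 2.5, 3.5, 4.5]
```

Plotted over time this gives the familiar sawtooth: slow linear probing for spare capacity, sharp back-off when the network signals congestion.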

44

network address translation (NAT)

suppose an internet service provider owns an IP address prefix

* an address is assigned for a single host but when an additional host is added they get the previous address

* ISP assigns a new range of IP addresses to customer and gives each host an address from that range

* hides a private network behind a single public IP address
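A minimal sketch of the translation table behind NAT, assuming port-based translation onto one public address. The class, the starting port and the addresses (a private 192.168.x.x host and a 203.0.113.x documentation address) are all hypothetical:

```python
import itertools

class Nat:
    """Hypothetical NAT: maps (private IP, private port) pairs to ports on one public address."""

    def __init__(self, public_ip, first_port=50000):
        self.public_ip = public_ip
        self._ports = itertools.count(first_port)
        self._out = {}  # (private_ip, private_port) -> public_port
        self._in = {}   # public_port -> (private_ip, private_port)

    def outbound(self, src_ip, src_port):
        """Rewrite the source of an outgoing packet, creating a mapping if needed."""
        key = (src_ip, src_port)
        if key not in self._out:
            port = next(self._ports)
            self._out[key] = port
            self._in[port] = key
        return self.public_ip, self._out[key]

    def inbound(self, dst_port):
        """Map a reply back to the private host; None means no mapping (packet dropped)."""
        return self._in.get(dst_port)

nat = Nat("203.0.113.5")
print(nat.outbound("192.168.1.10", 1234))  # ('203.0.113.5', 50000)
print(nat.inbound(50000))                  # ('192.168.1.10', 1234)
```

Inbound packets with no matching entry have nowhere to go, which is why hosts behind NAT cannot easily accept unsolicited connections.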

45

content negotiation

* many protocols negotiate the media formats used

* ensures sender and receiver agree on a common format that both understand

* the offer lists supported formats in order of preference

* receiver picks highest preference format it understands

* negotiates common format in one round trip time
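The receiver's selection step can be sketched in a few lines, assuming the offer is a list ordered from most to least preferred; the function name and codec names are only examples:

```python
def choose_format(offer, supported):
    """Return the first format in the preference-ordered `offer` that the
    receiver supports, or None if there is no common format."""
    for fmt in offer:
        if fmt in supported:
            return fmt
    return None

offer = ["opus", "g722", "pcmu"]  # hypothetical offer, most preferred first
print(choose_format(offer, {"pcmu", "g722"}))  # g722
```

The answer carries the chosen format back to the sender, which is why a single round trip is enough.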

46

text data transfers

* flexible and extensible

* high-level application layer protocols

* email, web, instant messaging, …

47

binary data transfers

* highly optimised and efficient

* audio and video data

* low-level or multimedia protocols

48

DNS zones

* hierarchy of zones

* one logical server per zone

* delegation follows hierarchy

* hop-by-hop name look-up, follows hierarchy via root
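A sketch of hop-by-hop lookup that starts at the root and follows delegations down the hierarchy. The server names, zones and the 192.0.2.10 address are hypothetical; real resolvers send DNS queries over the network and cache results:

```python
# Hypothetical delegation data: each zone's server either answers or refers to a child zone.
SERVERS = {
    "root":    {"referrals": {"uk.": "uk"}, "answers": {}},
    "uk":      {"referrals": {"ac.uk.": "ac.uk"}, "answers": {}},
    "ac.uk":   {"referrals": {"example.ac.uk.": "example"}, "answers": {}},
    "example": {"referrals": {}, "answers": {"www.example.ac.uk.": "192.0.2.10"}},
}

def resolve(name, server="root"):
    """Follow referrals from the root down the zone hierarchy; returns (address, servers_asked)."""
    path = [server]
    while True:
        data = SERVERS[server]
        if name in data["answers"]:
            return data["answers"][name], path
        # Pick the delegated child zone that the name falls under.
        child = next((s for zone, s in data["referrals"].items()
                      if name == zone or name.endswith("." + zone)), None)
        if child is None:
            return None, path  # no delegation covers this name
        server = child
        path.append(server)

print(resolve("www.example.ac.uk."))
# ('192.0.2.10', ['root', 'uk', 'ac.uk', 'example'])
```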

49

presentation layer focus

manages the presentation, representation and conversion of data

50

uniform resource identifier (URI)

consists of 4 parts

1. protocol (eg. https)

2. hostname (IP address or DNS domain name)

3. port number (if omitted, the protocol’s default port is used)

4. path and filename (location of requested resource)
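Python's `urllib.parse.urlsplit` separates these parts. A small sketch that also fills in the default port when it is omitted (the helper name is illustrative, and its default-port table only covers http and https):

```python
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}  # only the schemes this sketch handles

def uri_parts(uri):
    """Split a URI into protocol, hostname, port and path, filling in the default port."""
    parts = urlsplit(uri)
    port = parts.port or DEFAULT_PORTS.get(parts.scheme)
    return parts.scheme, parts.hostname, port, parts.path

print(uri_parts("https://www.example.com/docs/index.html"))
# ('https', 'www.example.com', 443, '/docs/index.html')
```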

51

application layer focus

user application protocols

52

CIA triad

* confidentiality - who is allowed to access what?

* integrity - data must not be tampered with by an unauthorised party

* availability - data protected but available when needed

53

symmetric encryption

* function converts plain text into cipher text

* conversion is protected by a secret key

* fast - suitable for bulk encryption

* key distribution problem

54

asymmetric encryption

* public key cryptography

* public key - widely distributed

* private key - must be kept secret

* encrypt using public key → need private key to decrypt

* problem → very slow to encrypt and decrypt
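A textbook RSA sketch with tiny primes, purely to show the public/private key relationship. It is completely insecure (no padding, trivially factorable modulus), and `pow(e, -1, phi)` needs Python 3.8 or later:

```python
# Textbook RSA with tiny primes: illustration only, never use for real data.
p, q = 61, 53
n = p * q                # public modulus (3233)
phi = (p - 1) * (q - 1)  # 3120
e = 17                   # public exponent, coprime with phi
d = pow(e, -1, phi)      # private exponent (2753): modular inverse of e

def encrypt(m):
    """Anyone holding the public key (e, n) can encrypt."""
    return pow(m, e, n)

def decrypt(c):
    """Only the holder of the private exponent d can decrypt."""
    return pow(c, d, n)

c = encrypt(65)
print(c, decrypt(c))  # 2790 65
```

Because public-key operations are slow, protocols such as TLS typically use asymmetric cryptography only to establish a shared symmetric key, then encrypt the bulk data symmetrically.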

55

transport layer security (TLS)

* de facto standard/protocol for internet security

* enables privacy and data integrity between two communicating applications

* reliable in terms of CIA

56

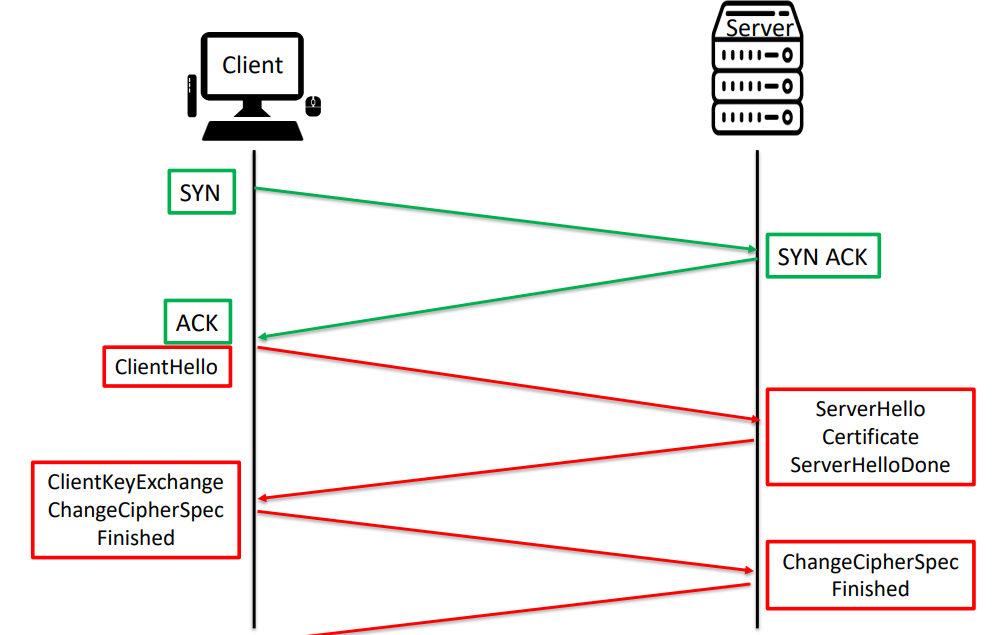

TLS handshake

57

IPSec

* a set of protocols

* resides on network layer

* can encrypt from network layer and above

58

ISAKMP

* part of IPSec

* establishing security associations

* procedures for

  * authentication

  * creation/management of SAs

  * key generation/key transport techniques

  * threat mitigation

59

IPSec modes

* gateway-to-gateway

* end device-to-gateway

* gateway-to-server

60

IKE

internet key exchange → provides perfect forward secrecy

61

ESP

payload encryption

62

AH

authentication and integrity protection covering the packet header as well as the payload (no encryption)

63

GDPR principles

* lawfulness, fairness and transparency

* purpose limitation

* data minimisation

* accuracy

* storage limitation

* integrity and confidentiality

* accountability

64

privacy criteria

* anonymity

* pseudonymity

* unlinkability

* unobservability

65

man-in-the-middle examples

* ARP poisoning

* port stealing

* SSL stripping

66

storage/memory hierarchy

* registers

* cache

* main memory

* solid-state disk

* magnetic disk

* optical disk

* magnetic tapes

67

main components of a processor

* cache memory

* control unit

* arithmetic/logic unit (ALU)

68

instruction set architecture (ISA)

the interface of the processor for programs running on that processor

69

operating system roles

* acts as a resource allocator

  * manages all resources

* acts as a control program

  * tries to prevent errors and improper use of the computer system

70

direct memory access (DMA)

* used by high-speed I/O devices

* direct transfer from device controller buffer’s storage to main memory region

* use of a single purpose processor

71

system calls

provides a programming interface to the services provided by the OS

72

system call parameter passing

* pass the parameters in registers

* parameters stored in memory and address passed as a parameter in a register

* placed/pushed onto the stack by the program and popped off the stack by the OS

73

process

a program in execution

74

threads and processes differences

* threads share memory and resources by default

* threads are faster/more economical to create

* in older operating systems, it was much faster to context switch between threads than processes

* can have different schedulers for processes and threads

* if a single thread in a process crashes, the whole process crashes as well

75

multiprogramming

have some program/process running at all times

76

time-sharing

switch among (concurrently running) processes quickly enough to give the impression of parallel execution

77

concurrency vs parallelism

* concurrency: multiple tasks make progress over time

  * time-sharing system, requiring scheduling and synchronization

* parallelism: multiple tasks (threads/processes) execute at the same time

  * requires multiple execution units (CPUs, CPU cores, CPU vCores, etc.)

78

process control block (PCB)

information associated with each process

* also known as process table entry

79

process creation

parent process → child processes

* a “process tree”

80

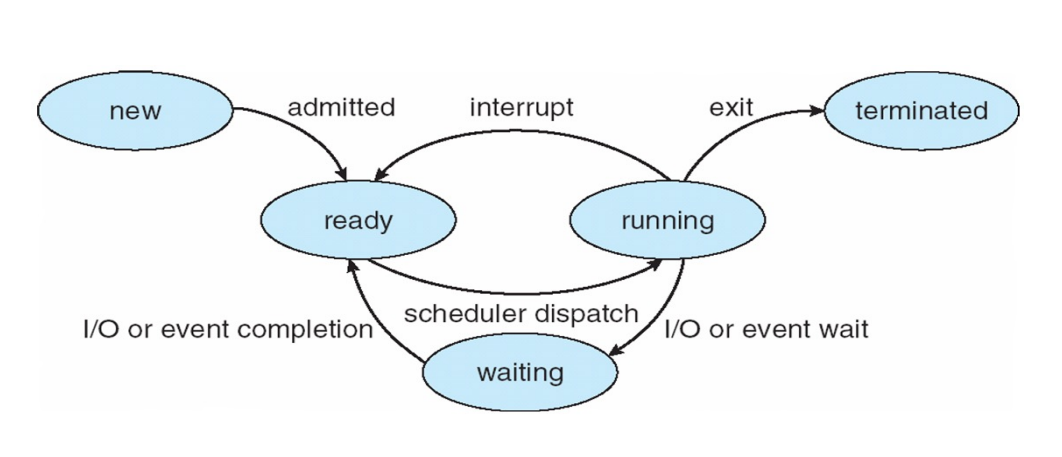

process states

81

message passing

requires a “communication link” between communicating processes

82

direct communication

* processes must name each other explicitly (symmetric)

* or only the recipient is named (asymmetric)

* communication links are established by the OS

* exactly one link between a pair of communicating processes

* links may be unidirectional but are usually bidirectional

83

indirect communication

* messages are sent to and received from mailboxes

* mailboxes are dedicated memory managed by the OS for the communication

* links between processes are established only if processes share a common mailbox

* a mailbox may be associated with many processes

* each pair of processes may share several communication links that may be unidirectional or bi-directional

84

synchronous communication

* blocking send → sender blocked until the message is received by the recipient

* blocking receive → receiver blocked until the message is available

85

asynchronous communication

* non-blocking send → sender enqueues the message and continues operation

* non-blocking receive → receiver receives either a valid message or a null
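A sketch of blocking and non-blocking receive using Python's `queue.Queue` as a mailbox between two threads. This is an in-process stand-in for an OS-managed mailbox, and `receive_nonblocking` is an illustrative name; since the queue is unbounded, `put` never blocks, so the send here is asynchronous:

```python
import queue
import threading

mailbox = queue.Queue()  # unbounded, so put() never blocks: an asynchronous send

def receive_nonblocking(q):
    """Non-blocking receive: return a message if one is waiting, otherwise None."""
    try:
        return q.get_nowait()
    except queue.Empty:
        return None

def producer():
    for i in range(3):
        mailbox.put(f"msg {i}")  # sender enqueues the message and carries on

print(receive_nonblocking(mailbox))  # None: nothing has been sent yet
t = threading.Thread(target=producer)
t.start()
received = [mailbox.get() for _ in range(3)]  # blocking receive: waits for each message
t.join()
print(received)  # ['msg 0', 'msg 1', 'msg 2']
```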

86

critical section problem

1. mutual exclusion: no two processes in their critical section at the same time

2. progress: entering one’s critical section should only be decided by its contenders in due time; a process cannot immediately re-enter its critical section if other processes are waiting for their turn

3. bounded waiting: it should be impossible for a process to wait indefinitely to enter its critical section
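A minimal sketch of mutual exclusion with `threading.Lock`: only one thread at a time executes the shared increment. Python does not define which waiting thread acquires a released lock, so this sketch guarantees mutual exclusion but not bounded waiting:

```python
import threading

counter = 0
lock = threading.Lock()

def worker(iterations):
    global counter
    for _ in range(iterations):
        with lock:        # entry section: at most one thread gets past here at a time
            counter += 1  # critical section: read-modify-write of shared data
        # exit section: the lock is released when the with-block ends

threads = [threading.Thread(target=worker, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(counter)  # 40000
```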

87

starvation

processes/threads unable to enter their critical section because of “greedy” contenders

88

livelock

special case of starvation where competing parties both try to “avoid” each other at the same time

89

priority inversion

special case where lower priority processes can keep higher priority processes waiting

90

deadlock

all parties of a group are waiting indefinitely for another party to take action (but no one can)

91

cycle of a process

CPU burst → IO burst

92

long term scheduler

loads programs into memory (turns a program into a process)

93

medium term scheduler

freezes and unloads (swaps out) a process, to be reintroduced at a later stage

94

short-term scheduler

selects which process to execute next

95

non-preemptive scheduling

let the process give up the CPU

96

preemptive scheduling

the scheduler decides when a process yields the CPU

97

dispatcher

gives control of the CPU to the process selected by the short-term scheduler

98

dispatcher tasks

* switch context

* switch to user mode

* jump to proper instruction in the user program to continue execution

99

first come first served (FCFS)

* processes are added to a queue in order of arrival

* algorithm picks the first process in the queue and executes it

* when a process needs more CPU time later (e.g. after an I/O burst), it is placed at the end of the queue again
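A sketch computing waiting times under FCFS, assuming all processes arrive at time 0 in list order and each runs its CPU burst to completion. The function name and the 24/3/3 workload are illustrative:

```python
def fcfs_waiting_times(bursts):
    """Waiting time of each process under FCFS, assuming all arrive at time 0
    in list order and each runs its CPU burst to completion."""
    waits, clock = [], 0
    for burst in bursts:
        waits.append(clock)  # a process waits for every burst queued ahead of it
        clock += burst
    return waits

waits = fcfs_waiting_times([24, 3, 3])
print(waits, sum(waits) / len(waits))  # [0, 24, 27] 17.0
```

One long job at the head of the queue delays every short job behind it (the convoy effect).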

100

shortest job first (SJF)

* non-preemptive

* processes are added to a queue; queue is kept ordered by remaining CPU burst time

* algorithm picks the first process in the queue and executes it
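A sketch of non-preemptive SJF waiting times, assuming all processes arrive at time 0 and their burst lengths are known in advance. With bursts of 24, 3 and 3 the short jobs run first, giving waits of 6, 0 and 3 (average 3); the function name is illustrative:

```python
def sjf_waiting_times(bursts):
    """Non-preemptive SJF, assuming all processes arrive at time 0.
    Returns waiting times in the original process order."""
    order = sorted(range(len(bursts)), key=lambda i: bursts[i])  # shortest burst first
    waits, clock = [0] * len(bursts), 0
    for i in order:
        waits[i] = clock
        clock += bursts[i]
    return waits

waits = sjf_waiting_times([24, 3, 3])
print(waits, sum(waits) / len(waits))  # [6, 0, 3] 3.0
```

In practice the next CPU burst length is not known and has to be estimated, for example from previous bursts.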
