Learning

Last updated 8:49 PM on 12/18/22

71 Terms

1

Learning

a semi-permanent change in behavior due to experience

2

Classical Conditioning

learning to associate a neutral stimulus with a stimulus that naturally triggers a reflex

“learning is making associations”

“learning is largely reflexive”

3

Unconditioned Stimulus (US or UCS)

a stimulus that naturally produces a response, without any learning

4

Unconditioned Response (UR or UCR)

a natural, unlearned response to the unconditioned stimulus

5

naturally feeling pain when pricked with a pin

an example of a UR

what happens when you get pricked with a pin?

6

UR

dogs salivating naturally

7

US

meat

8

Neutral Stimulus (NS)

a stimulus that doesn’t produce a response (Pavlov’s bell, at first)

9

Conditioned Stimulus (CS)

the stimulus paired with the unconditioned stimulus (US); it eventually changes from the NS to the _____ after the acquisition period

ex. Pavlov rang a bell at the same time as presenting meat to a dog, so the dog associated the 2

10

Conditioned Response (CR)

Trained response to an originally neutral stimulus (which now is the CS)

ex. dogs drooling at the sound of the bell alone after associating bell and meat

11

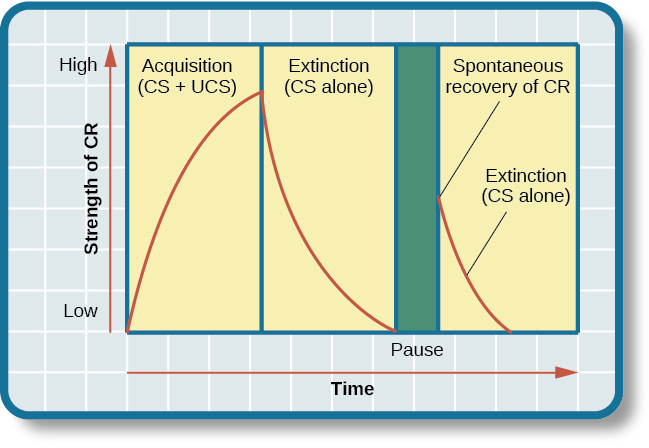

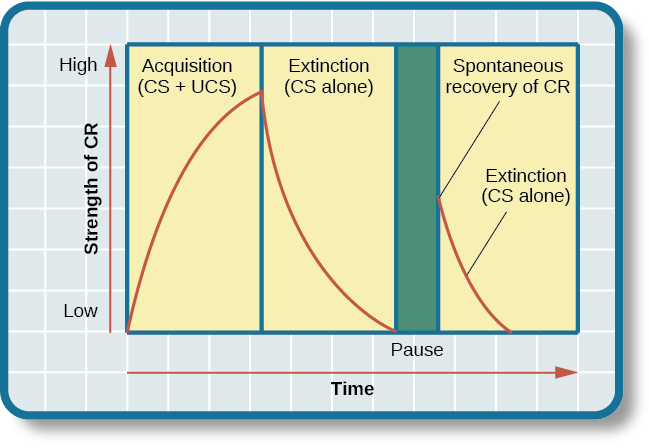

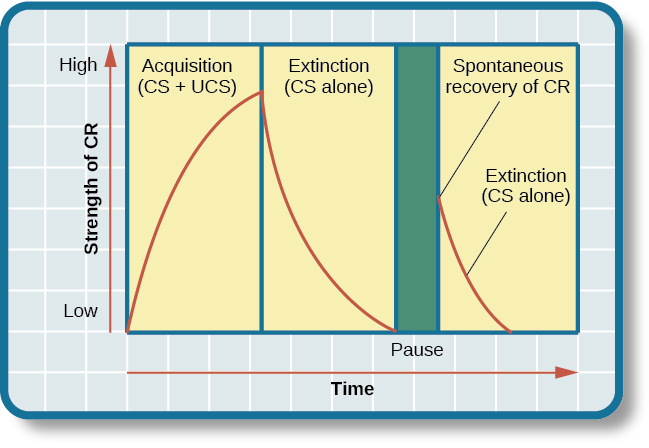

Acquisition

when the NS is repeatedly presented together with the US until it becomes a CS that produces a CR

AKA the period of time during which a CR is learned

12

Extinction

when the CR fades because the CS has been presented without the US for a while (it diminishes over time)

ex. dogs stop salivating because you rang the bell without the meat for like the 10th time

13

Spontaneous Recovery

when an extinguished CR reappears in response to the CS after a pause (rest period)

ex. after a break, the dogs salivate again the 11th time you ring the bell

(extinction only __suppresses__ the CR rather than eliminating it completely)

14

CS

What does the NS become after the acquisition period?

15

CR

What does the UCR become after the acquisition period?

16

UCS

during learning, the NS must be paired with the __, which produces the UCR

17

CR

after learning, the CS induces __?

18

Contiguity Explanation for Classical Conditioning

“classical conditioning happens because the NS and the UCS happen at the same time (they overlap/touch)”

ex. Pavlov

(but this explanation is largely outdated; most psychologists now favor the contingency explanation)

19

Contingency Explanation for Classical Conditioning

“CC happens because the UCS __depends__ on the NS”

AKA (the NS *predicts* the UCS)

ex. you ring the bell (NS), wait some time, and the dogs *still* respond (the NS and the UCS don’t have to overlap/touch/happen at the same time)

20

Biological Predisposition

An animal’s natural abilities and limitations affect how easily it can be classically conditioned

The sense an animal relies on most is going to provide the best neutral stimulus to classically condition it with

21

Taste Aversion

John Garcia studied ___?

22

Children had a bad habit of sucking their thumbs (the bad habit = NS)

Putting bad-tasting lemon juice (UCS) on their thumbs produced a natural reaction, “EW!” (UCR)

So sucking thumbs (NS) became a CS that produced an aversion to the bad taste, “EW!” (CR)

Example of Taste Aversion:

23

Little Albert Experiment (by Watson and Rayner)

previously-liked white rat (NS) + frightening loud noise (UCS) = fear response (UCR); afterward, the rat alone (now a CS) produced fear (CR)

24

Conditioned emotional responses

any negative emotional response, typically fear or anxiety, that becomes associated with a neutral stimulus as a result of classical conditioning

25

Phobia

____ develops as a result of having a negative experience or panic attack related to a specific object or situation

an overwhelming and debilitating fear of an object, place, situation, feeling or animal.

26

Superstitions

an irrational belief in the significance or magical efficacy of certain objects or events

ex. omens, lucky charms

27

Aversion Therapy

used to help a person give up a behavior or habit by having them associate it with something unpleasant.

____ ____ is best known for treating people with addictive behaviors, like those found in alcohol use disorder.

ex. putting lemon juice on thumbs to stop children from sucking them

28

Higher-Order Conditioning

(AKA second-order conditioning)

procedure in which the conditioned stimulus of one experiment acts as the unconditioned stimulus of another, for the purpose of conditioning a neutral stimulus

* tends to be weaker than initial conditioning

ex. a guard dog makes us afraid because we once got bitten by a dog; THEN, hearing a dog bark may also make us afraid

(different from stimulus generalization because the new stimulus is not similar to the original stimulus)

29

Behaviorism

a key idea of __this psychological approach__:

“observable behaviors are more important than inner experiences or mental processes”

30

Stimulus Generalization

responding to *anything that is similar* to the conditioned stimulus the same way as to the CS itself (e.g., extending a conditioned fear to similar objects)

ex. Little Albert being afraid of anything that resembled a white rat after the acquisition period

31

Stimulus Discrimination

ability to tell the difference and respond only to the conditioned stimulus, not to other similar stimuli

32

Operant (Instrumental) Conditioning

“Learning is based on behavior and its consequences”

“Instruments” (rewards or punishments) are the reason people change their behaviors

33

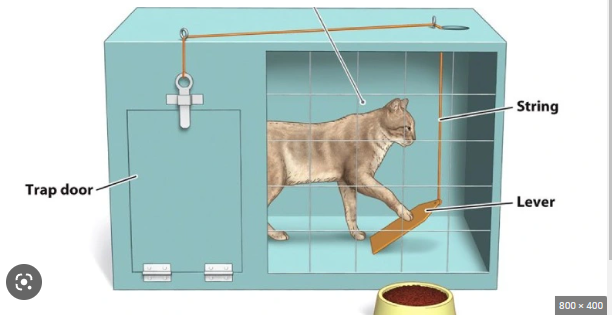

Edward Thorndike

researcher who put cats in puzzle boxes

* cats eventually learned that stepping on a lever opens the door (trial and error)

* __This researcher__ put food outside the box (and the cat learned how to get to it)

* But the cat does not go out when the food is not tasty

34

Law of Effect

Rewards are useful to get people/animals to do a wanted behavior

“Behavioral responses that were most closely followed by a satisfying result were most likely to become established patterns and to occur again in response to the same stimulus”

35

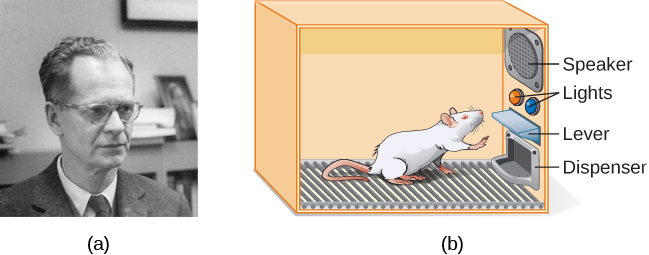

B. F. Skinner

this researcher put animals in Skinner boxes (which reward animals for certain behaviors)

36

The Thorndike box was invented by E. L. Thorndike in the 1890s. The Skinner box came later and was considered an improvement on the device. Thus, the Skinner box has become the prototypical apparatus for the controlled laboratory study of operant behavior.

The Skinner box also produces more accurate and useful results compared to Thorndike's puzzle box:

A Skinner box lets you count the number of successful responses the animal makes, because the animal stays in the box and can push the lever (or other mechanism) many times to receive multiple rewards

On the other hand, in a Thorndike box, an animal must escape from the box in order to earn its reward. Once it is outside the box, the experiment is over

What is the difference between the Skinner Box and Thorndike’s Puzzle Box?

37

Positive Reinforcement

adding a reward (something pleasant) after a behavior, which makes the behavior more likely to happen again

38

Negative Reinforcement

removing or avoiding something unpleasant after a behavior, which makes the behavior more likely to happen again (it often works like a coping mechanism)

“if you do \[the desired behavior\], then \[a bad thing does not happen\]”

ex. using an umbrella to avoid getting wet

ex. taking allergy medicine to avoid allergy symptoms

39

Punishment

a consequence that discourages (decreases) a bad behavior

40

Positive (Aversive) Punishment

a type of punishment where you ADD something you don’t want

ex. a speeding ticket

ex. getting hit with a ruler

41

Negative Punishment

a type of punishment where you withdraw something you like

ex. taking away a phone

42

Both types of reinforcement REINFORCE a good behavior while Punishment discourages a bad behavior.

What’s the difference between Positive/Negative Reinforcement vs. Punishment?
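The rule behind the reinforcement and punishment cards can be written out as a tiny decision procedure: "positive" or "negative" only says whether something is added or taken away, while "reinforcement" or "punishment" says whether the behavior becomes more or less likely. The Python sketch below is just an illustration of that rule; the function name `classify_consequence` and its two yes/no parameters are hypothetical, not a standard tool.

```python
def classify_consequence(stimulus_added: bool, behavior_increases: bool) -> str:
    """Name an operant consequence from two yes/no questions (hypothetical helper).

    stimulus_added: True if something is ADDED after the behavior,
                    False if something is TAKEN AWAY.
    behavior_increases: True if the behavior becomes more likely,
                        False if it becomes less likely.
    """
    sign = "Positive" if stimulus_added else "Negative"
    kind = "Reinforcement" if behavior_increases else "Punishment"
    return f"{sign} {kind}"


# Examples taken from the cards above
print(classify_consequence(True, True))    # getting a reward
print(classify_consequence(False, True))   # allergy medicine removes symptoms
print(classify_consequence(True, False))   # getting a speeding ticket
print(classify_consequence(False, False))  # having your phone taken away
```

The four calls print "Positive Reinforcement", "Negative Reinforcement", "Positive Punishment" and "Negative Punishment", in that order.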

43

Premack Principle

A more-preferred activity can be used to reinforce a less-preferred one, so to get better results you must tailor the reward to what your subject actually prefers

ex. saying: “if you behave on the road trip, you will get chocolate” doesn’t work if your child doesn’t like chocolate

44

Overjustification

Losing motivation for doing something that you used to do for free once you get rewarded for it

* decreases __intrinsic motivation__

ex. you love reading, but when school rewards reading, you start to think it’s a chore, so you like it less

45

Primary Reinforcer

a reinforcer that automatically gives you pleasure (it satisfies a natural, biological need)

* nobody has to teach you that it is a good thing

ex. food, water, a hug

46

Secondary Reinforcer

a reinforcer that you have to learn has value

ex. money (a toddler would pick a nickel over a dime since it’s bigger; they haven’t learned the different values associated with each)

ex. grades (you have to learn the difference between an A and an F)

47

Continuous Reinforcement

a type of reinforcement where you get rewarded every time you do something

* you learn behavior fastest on __this schedule of reinforcement__

* but you also lose learned behavior the fastest in the absence of the reward

48

Partial Reinforcement

a type of reinforcement where you DON’T get rewarded every time you do something

* it takes longer to learn behavior

* but learned behavior stays longer

49

Ratio Schedule

a type of *partial* reinforcement where the reward is based on # of behaviors

ex. for every 5 cars you sell, you get a reward

__FIXED__ ratio schedule:

ex. on a fixed ratio of 1:1, you get 1 reward for every 1 good behavior (this is AKA continuous reinforcement)

__VARIABLE__ ratio schedule:

ex. you “approximately” get a reward for every 5-ish cars

ex. this is like gambling on slot machines (it keeps effort up, because you never know when the reward is coming)
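The difference between fixed and variable ratio schedules can be sketched as a small simulation that lists which responses (e.g. car sales) earn a reward. This is a minimal illustration, not a standard model: the function names are hypothetical, and drawing each variable requirement uniformly from 1 to 2n - 1 (so that it averages n) is an assumption made only for this example.

```python
import random


def fixed_ratio(n: int, responses: int) -> list[int]:
    """Return the response numbers (1-based) rewarded on a fixed-ratio-n schedule.

    Every n-th response is rewarded, e.g. n=5: one reward for every 5 cars sold.
    """
    return [r for r in range(1, responses + 1) if r % n == 0]


def variable_ratio(mean_n: int, responses: int, seed: int = 0) -> list[int]:
    """Return the response numbers rewarded on a variable-ratio schedule.

    Assumption for illustration: after each reward, the number of responses
    needed for the next one is drawn uniformly from 1..(2*mean_n - 1), so it
    averages mean_n ("5-ish") but the next payoff can't be predicted.
    """
    rng = random.Random(seed)  # seeded so the example is reproducible
    rewarded = []
    required = rng.randint(1, 2 * mean_n - 1)
    count = 0  # responses made since the last reward
    for r in range(1, responses + 1):
        count += 1
        if count >= required:
            rewarded.append(r)
            count = 0
            required = rng.randint(1, 2 * mean_n - 1)
    return rewarded


print(fixed_ratio(5, 20))     # [5, 10, 15, 20]
print(variable_ratio(5, 20))  # irregular gaps that average about 5
```

With `n=1`, `fixed_ratio` rewards every response, which matches the note above that a 1:1 fixed ratio is continuous reinforcement.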

50

Interval Schedule

a type of *partial* reinforcement where the reward is based on duration of time

ex. paycheck every 2 weeks

__FIXED__ interval schedule:

* you are certain of the intervals

ex. official paychecks

__VARIABLE__ interval schedule:

ex. allowance given by absent-minded parents (allowance given every Friday-ish, so maybe given on Saturday or Sunday)
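Interval schedules can be sketched the same way. One detail the cards leave implicit is assumed here: on an interval schedule the reward becomes *available* once the time has passed, and the first response made after that point collects it (you still have to show up to work to get the paycheck). The function names and the 0.5x to 1.5x spread used for the variable version are hypothetical choices for illustration.

```python
import random


def fixed_interval(interval: float, response_times: list[float]) -> list[float]:
    """Return the response times rewarded on a fixed-interval schedule.

    The first response made at or after `interval` time units since the last
    reward (or since the start) is rewarded; responding more often in between
    earns nothing extra. E.g. interval=14 days: a paycheck every 2 weeks.
    """
    rewarded = []
    available_at = interval
    for t in sorted(response_times):
        if t >= available_at:
            rewarded.append(t)
            available_at = t + interval
    return rewarded


def variable_interval(mean_interval: float, response_times: list[float],
                      seed: int = 0) -> list[float]:
    """Like fixed_interval, but each wait varies around mean_interval.

    Assumption for illustration: each wait is drawn uniformly from
    0.5x to 1.5x mean_interval, like an allowance that comes "every Friday-ish".
    """
    rng = random.Random(seed)  # seeded so the example is reproducible
    rewarded = []
    available_at = rng.uniform(0.5 * mean_interval, 1.5 * mean_interval)
    for t in sorted(response_times):
        if t >= available_at:
            rewarded.append(t)
            available_at = t + rng.uniform(0.5 * mean_interval, 1.5 * mean_interval)
    return rewarded


work_days = list(range(1, 43))          # responding (working) every day for 6 weeks
print(fixed_interval(14, work_days))    # [14, 28, 42]
print(variable_interval(7, work_days))  # roughly weekly, on unpredictable days
```

Unlike the ratio sketch, extra responses here don't speed up the next reward; only the passage of time does.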

51

Shaping

training an individual to do a __complicated__ behavior by rewarding closer and closer approximations of it

* reward is given step-by-step (reward given, then withheld until the next step is achieved)

ex. training service dogs

Step 1 = going to the refrigerator

no reward until…

Step 2 = opening the refrigerator

no reward until…

Step 3 = getting food

etc.

52

Even though they are both based on a reward system, the difference is the THINKING component

* cognitive learning does not require the same reinforcement that operant conditioning requires

Cognitive learning = your brain actively does the work of acquiring knowledge. Conditioned learning = learning happens through associations and consequences, without requiring conscious thought

What is the difference between Cognitive Learning and Operant Conditioning?

53

Latent Learning

a type of learning that is not apparent at the time it happens; it stays hidden until it is needed later (when a suitable motivation and circumstances appear)

ex. David learned about the Great Wall of China, but it wasn’t apparent until the topic came up in the waiting room

Unlike operant conditioning, this shows that learning can occur without any reinforcement of a behavior.

54

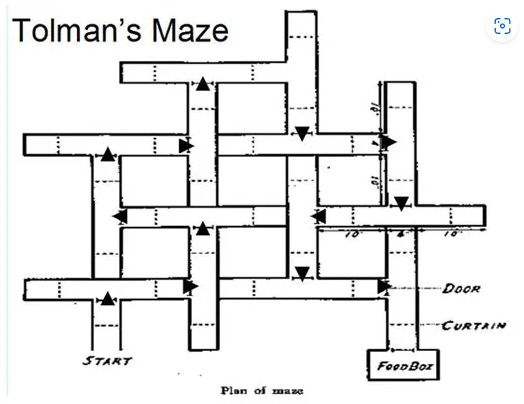

Tolman and rats with cognitive maps

Experiment

Group 1: food reward every time they got out of the maze successfully

Group 2: no food reward

Group 3: no food reward for the first 10 days, but given a food incentive after

results:

group 3 rats took longer to reach the end of the maze during the first 10 days b/c they didn’t have motivation, BUT they were the fastest of all the rats after 10 days

they latently learned their way around the maze in the first 10 days, but it didn’t become apparent until they were given a food incentive

55

Cognitive Map

a mental representation of an external environment (its features and landmarks)

56

Insight Learning

when you’re stuck on a problem until an answer suddenly appears

“AHA!”

usually solved as you let the problem “incubate”

ex. Köhler placed food in unreachable places while chimps were nearby with tools; the chimps eventually came to a cognitive understanding/solution

ex. chimps stacked boxes on top of one another to reach a banana

57

Learned Helplessness

learning that you can’t help yourself in one situation, then transferring that assumption of helplessness to situations where you CAN escape

ex. Seligman and shocked dogs who learned to give up even when there was an exit

58

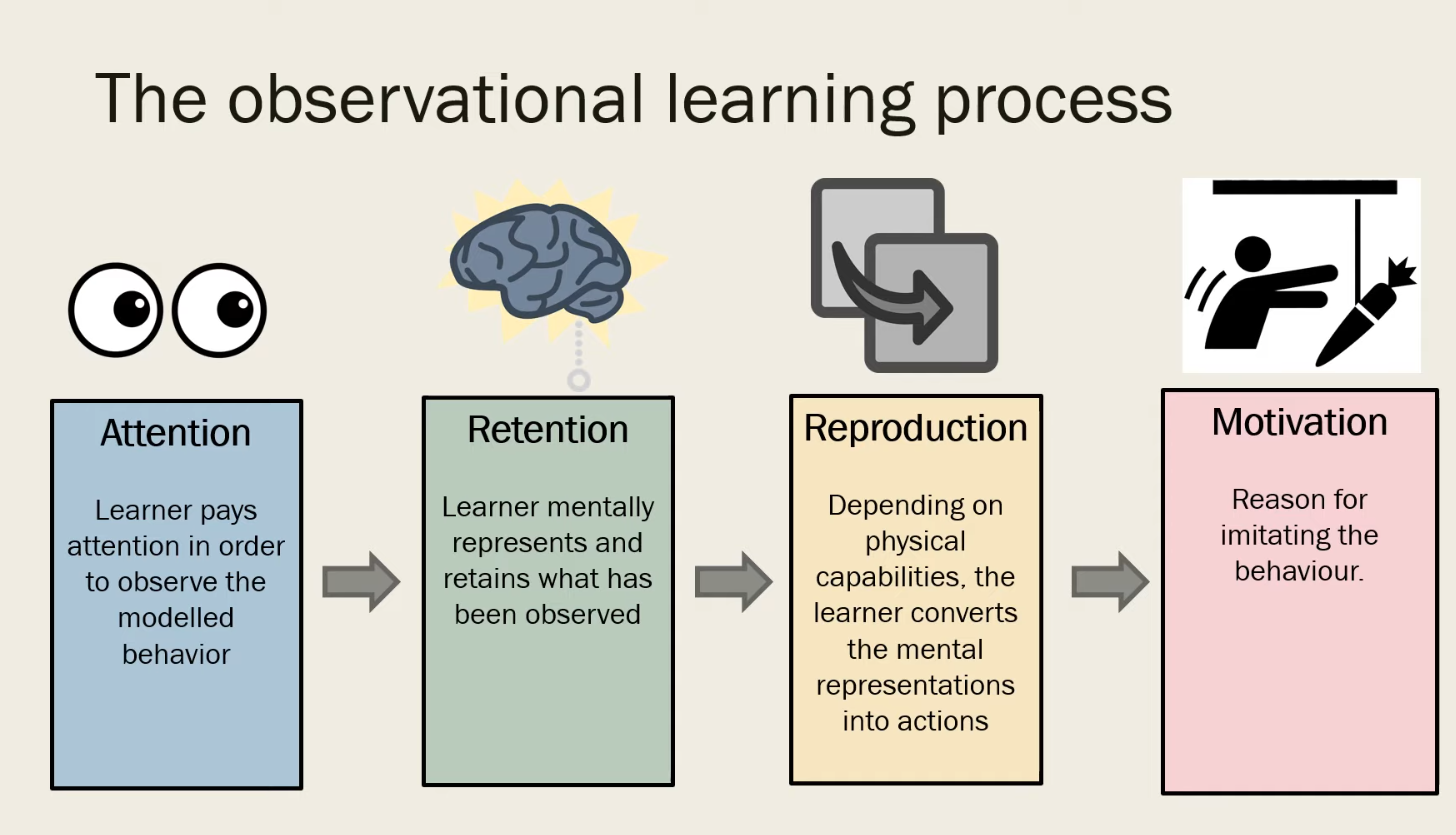

Social (Observational) Learning

learning from others (directly or by observation)

59

Bandura and Bobo doll

kids learned to either beat up or play nice with a doll (Bobo) by observing adults

Modeling: kids mimicked the behavior of adults being violent or gentle

Vicarious learning: kids watched a film of others

* depending on whether the adults were rewarded or punished, kids either beat down or played nice with Bobo

60

Modeling

a type of social learning where you imitate role-models in your environment

61

Vicarious Learning

a type of social learning where

* a person indirectly learns how to behave through a medium (story, video, etc.)

* and they look at whether someone else receives a reward or punishment for their behaviors

62

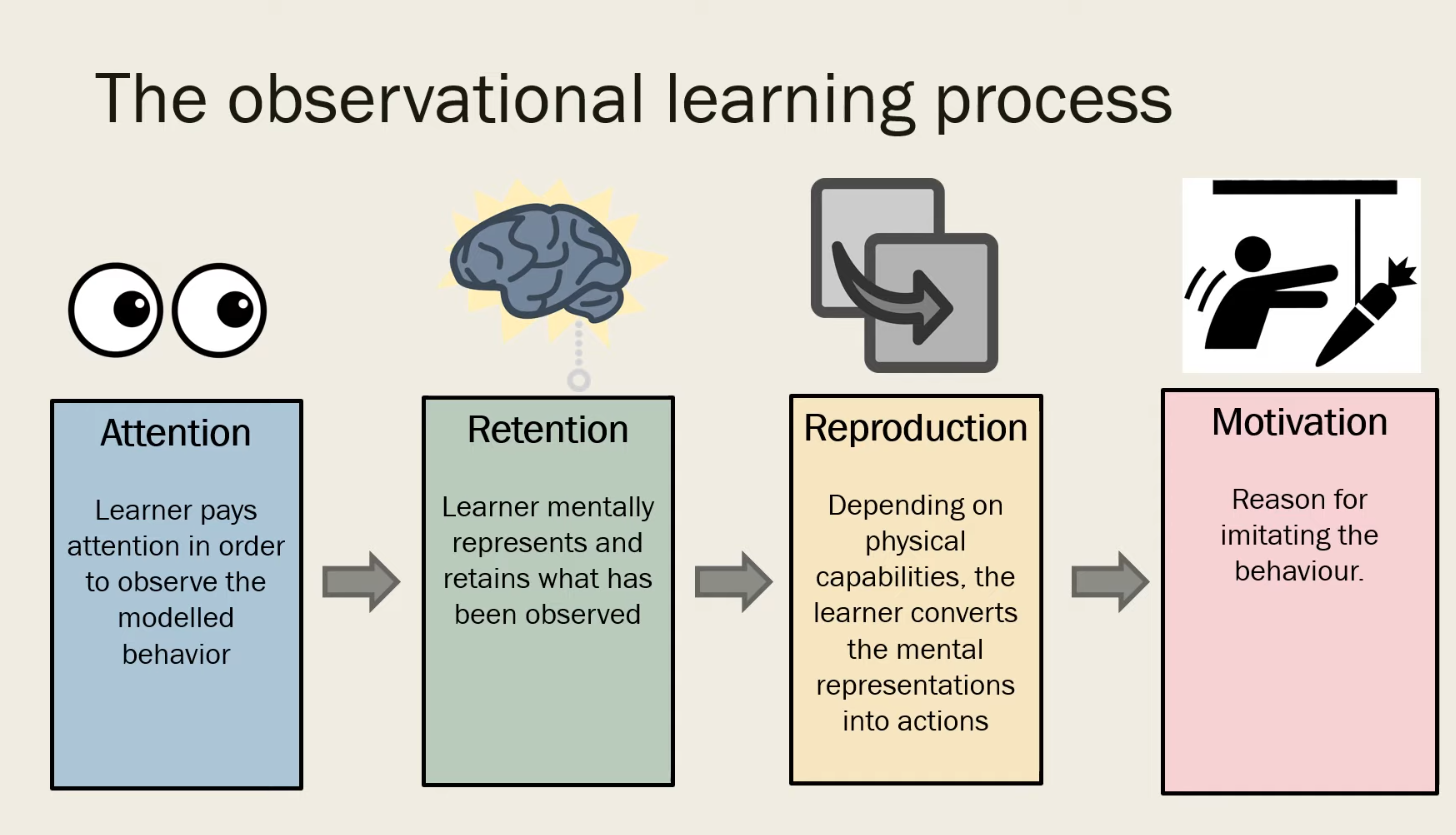

Attention

1st step of social learning = _____

learner pays attention in order to observe the modelled behavior

63

Retention

2nd step of social learning

Learner mentally represents and retains what has been observed (both the actions and their consequences)

64

Reproduction

3rd step of social learning

Depending on physical capabilities, the learner converts the mental representations into actions

65

Motivation

4th step of social learning

Reason for imitating the behavior

66

Factors that Influence Social Learning

* observer attributes

* model factors

* consequences

67

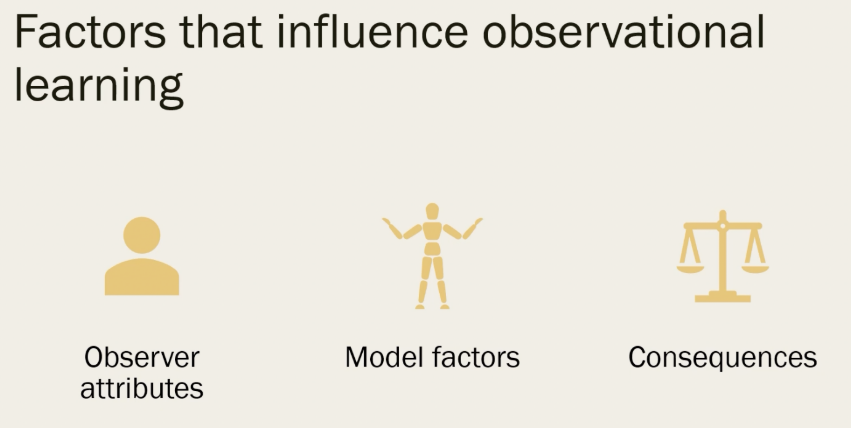

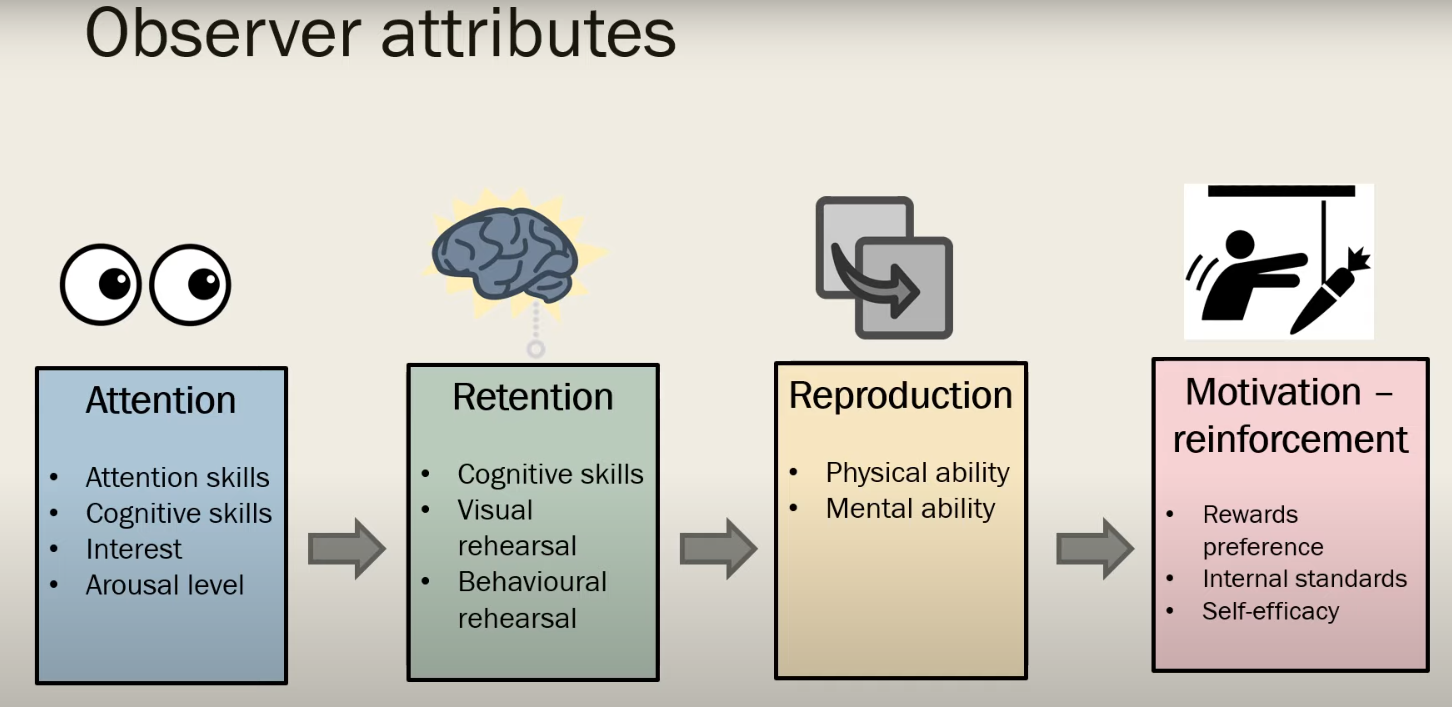

Observer Attributes

a factor of Social Learning that depends on the observer’s ability to pay attention, retain info, and physically/mentally reproduce the behavior. It also depends on their reward preferences, internal standards, and self-efficacy (the belief that they can do the behavior)

68

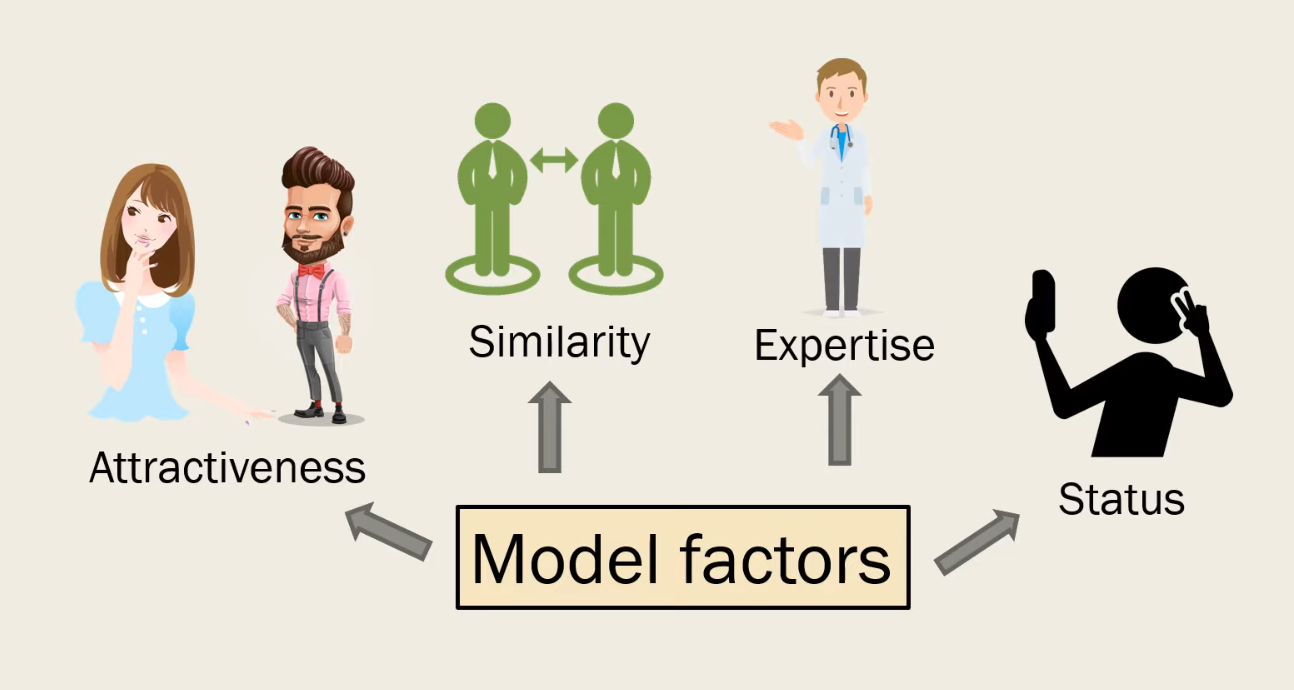

Model Factors

a factor of Social Learning that depends on the qualities of models

* attractiveness

* similarity to observer

* expertise

* status

69

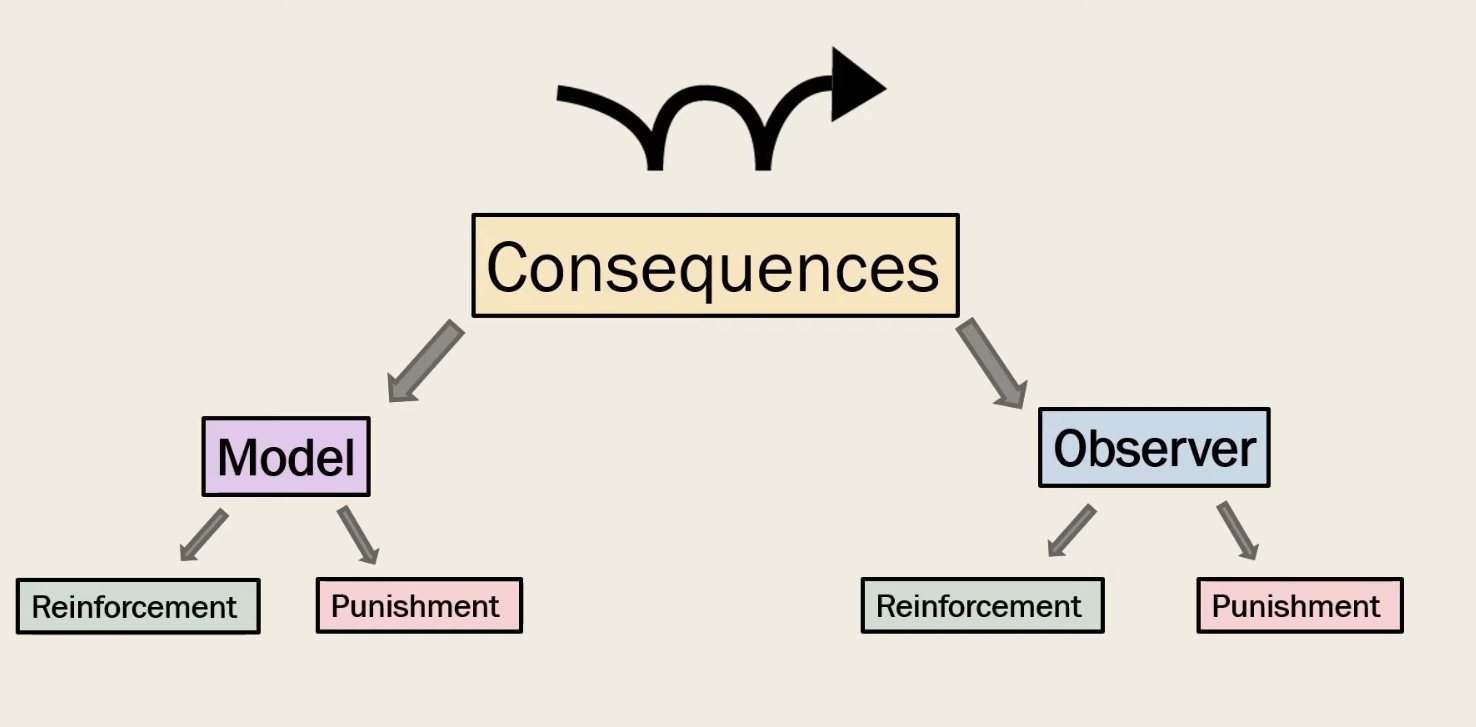

Consequences

a factor of Social Learning that depends on reinforcement or punishment received by the model and imitator

70

Mirror Neurons

a type of brain cell that fires both when we perform an action and when we watch someone else perform the same action

* thought to provide a neural mechanism for understanding actions, emotions, and intentions, which is fundamental to social behavior

* linked to our ability to show empathy

* also play a part in social learning

* help us interpret facial expressions

71

Biofeedback

an operant conditioning technique that teaches people to gain voluntary control over bodily processes like heart rate and blood pressure

* helps you make subtle changes in your body, such as relaxing certain muscles, to achieve the results you want, such as reducing pain.