Operating Systems Exam 4单词卡 | Quizlet

1/63

There's no tags or description

Looks like no tags are added yet.

Name | Mastery | Learn | Test | Matching | Spaced |

|---|

No study sessions yet.

64 Terms

In an operating system, we are concerned about deadlock involving "resources." Consider a laptop with four CPU cores. Are these multiple instances of the same resource, or separate resources? Explain

These are multiple instances of a single resource (CPU cores) since they are interchangeable with no significant distinction. If differentiation were necessary, they would be considered separate resources.

One of the four necessary conditions for deadlock is "hold and wait." What does it mean for a process to "hold" a resource?

It means the process requested that resources, and now the resource has been assigned to the process for it to use.

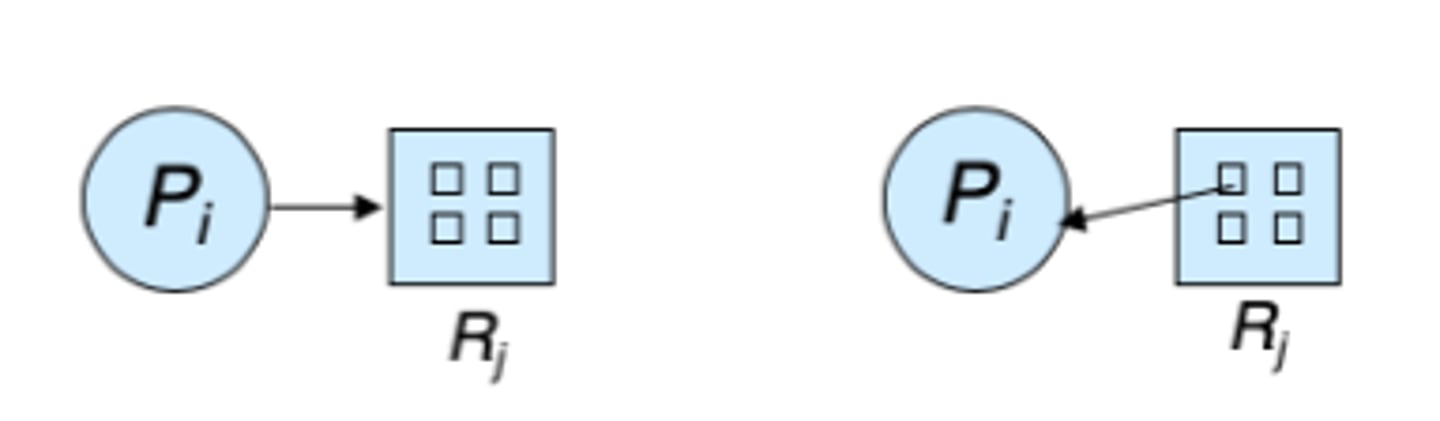

Consider the Resource Allocation Graphs shown. Why does the arrow in the left figure go to the outer box, but the arrow in the right figure comes from an inner box?

The left figure is illustrating a request edge, which is to the resource as a whole since any of the resources instances would be acceptable. The right figure is illustrating the specific instance of the resource that has been assigned to the process.

What is the basic idea of Deadlock Prevention, and why does it work?

Deadlock requires four conditions: mutual exclusion, hold and wait, no preemption, and circular wait. Deadlock prevention works by eliminating at least one of these conditions, ensuring deadlock cannot occur. If all 4 did not occur, no deadlock.

One possible solution for deadlock is deadlock detection plus recovery, but recovery is problematic. Explain.

It is difficult to know which process to terminate, and it may be difficult for that process to restart after it has been terminated.

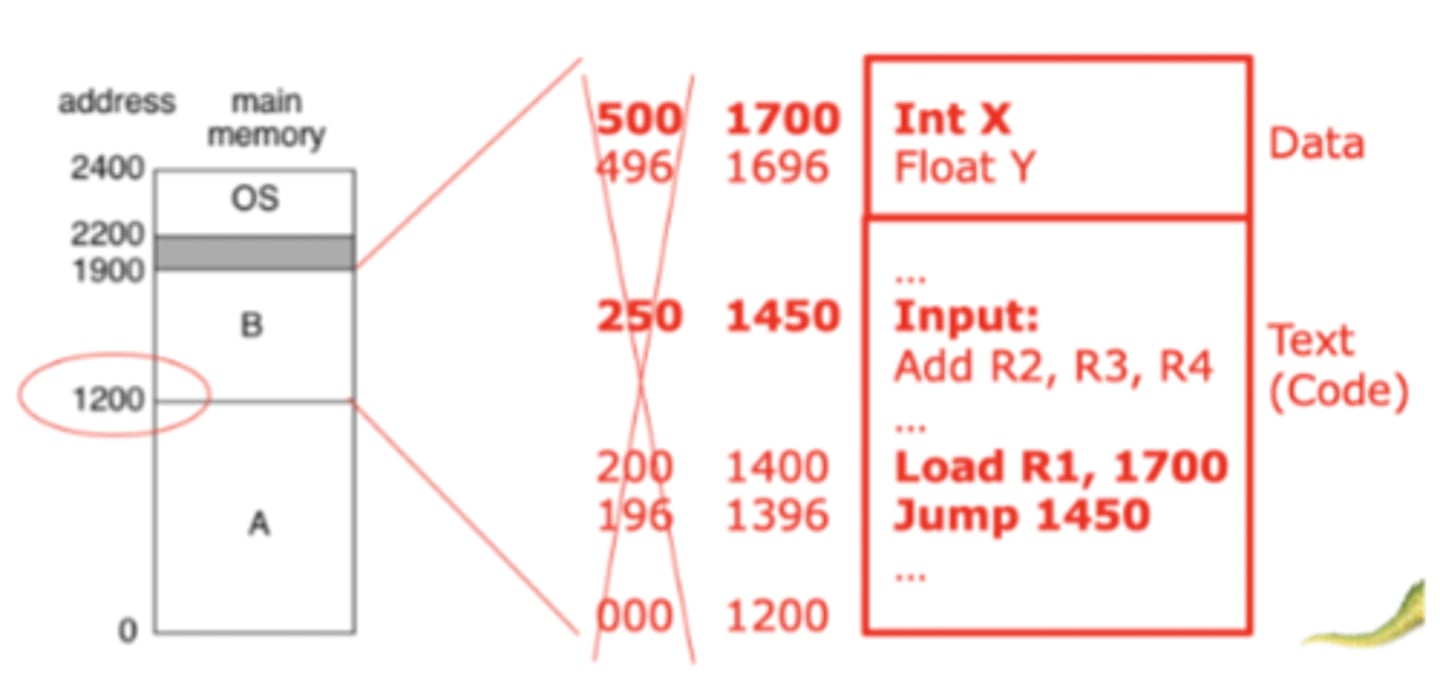

What is being illustrated by the figure? Be specific, and answer in your own words - do not copy text from the slide.

This figure is illustrating static relocation, with the process being loaded into memory at address 1200. Every instruction that referred to an address, such as the Jump and Load instructions, must now have 1200 added to their address so they will address the proper location.

In dynamic relocation, the Memory Management Unit (MMU) translates logical addresses into physical addresses as the program runs. How does it do this? Be specific.

While the process is loaded into memory at some address, that address is stored in the Base Register in the MMU. The MMU then takes any logical addresses that it receives, adds that base value to the address, which produces a physical address.

In dynamic relocation, the Memory Management Unit (MMU) provides protection. What does protection mean in the is context, and why is it important?

A process can only access its own memory space; it cannot access the memory space of other processes or the operating system. That is important to prevent accidental or malicious damage to another process.

If there isn't room enough in memory for all process, some processes can be swapped out to make room. When one of those swapped-out processes needs to run again, the operating system must swap it back in (into memory). How is the CPU involved in this procedure?

The CPU scheduler selects a process to run. If that process is swapped out, the CPU scheduler calls the swapper to bring it back in, possibly swap one or more process to free space.

Consider the two diagrams that show fixed-sized partitions. What advantage does the one on the right have over the one on the left? What complications would it add?

It supports larger processes than the one on the left, but it adds the need for an algorithm such as first-fit or best-fit to choose the appropriate partition.

Segmentation is very similar to contiguous allocation in the sense that it still requires searching to find an unused space in memory and can still have fragmentation problems. However, instead of keeping the process whole, it divides it into a number of smaller pieces, called segments. Why is this an improvement?

The main reason is that smaller memory segments are easier to fit into free spaces than a large entire process. Additionally, segmentation allows processes to share code or data segments.

Segmentation is implemented using a Segment Table and a Segment-Table Base Register. Where are these two items found?

The Segment Table is stored in the Process Control Block, and the Segment-Table Base Register is inside the Memory Management Unit.

Does paging suffer from external fragmentation?

No. External fragmentation is wasted space outside partitions, but since all the frames are the same size, all of them are unusable.

Briefly explain (textually) how paging translates a logical address into a physical address.

The page number is used as an index into the page table, where the frame number is found. That frame number is then combined with the displacement from the logical address to build the physical address.

What problem does the Translation Lookaside Buffer (TLB) sole, and how does it solve that problem?

With paging, each memory reference requires an extra access to the page table, doubling memory access time. The TLB solves this by caching sets of page number and frame numbers, providing much faster access than searching the page table in memory.

Consider the C program below, which copies anything typed after a program name to a buffer. What is the problem with this code?

#include

#define BUFFER_SIZE 256

int main(int argc, char *argv[]){

char buffer[BUFFER_SIZE];

if (argc < 2)

return -1;

else{

strcpy(buffer, argv[1]);

return 0;

}

}

The buffer only holds 256 characters, so if the input is larger than 256 characters it will overflow the buffer.

Deadlock prevention tries to eliminate deadlock by eliminating one of the four conditions. How can hold and wait be eliminated?

It can be eliminated by ensuring that a process holds no resources when requesting another. This means either requesting all resources at once (at beginning or when first needed) or releasing current resources before acquiring new ones. However, this approach is ineffective as it is hard to predict resource needs, wastes resources, and can lead to starvation.

If a resource allocation graph does not contain a cycle, there is no deadlock. If it does contain a cycle, is there deadlock or not? Explain.

If a cycle exists, deadlock is possible but not certain. If all resources have only a single instance, deadlock is guaranteed. If multiple instances exist, there is a possibility of deadlock.

The basic idea of deadlock detection is to look for deadlock occasionally. Assuming deadlock is found, what must be done to break the deadlock?

One or more of the deadlocked processes must be terminated.

The four conditions that characterize deadlock are mutual exclusion, no preemption, hold and wait, and circular wait. If the system is deadlocked, will all four of these conditions hold? Answer Yes or No, and then explain.

Yes, since these conditions are necessary for deadlock.

In the Banker's Algorithm for deadlock avoidance, we define the system as being in a "safe state" if there exists a sequence of all processes in the system such that, for each process, the resources that the process can still request can be satisfied by the currently available resources plus resources held by all the earlier processes in the sequence. As we try to determine this sequence of processes, what do we do if we find a process that has resource needs greater than the available resources?

It is assumed that the process must wait until sufficient resources are available. If running the process could result in additional requests that exceed available resources and create an unsafe state, the next process is examined instead.

List the 4 necessary and sufficient conditions for deadlock.

Mutual exclusion, No preemption, Hold and wait, and Circular wait

In an operating system, we are concerned about deadlock involving "resources". List three examples of operating system resources that we may be concerned about in this context.

CPU cycles, memory space, I/O devices, file, printer, network connection, mutex locks.

In the context of Deadlock Avoidance, what is a safe state?

A state in which there exists a sequence of resource allocations that will allow all processes to complete their execution.

The MMU contains a Base Register. What is stored in this register?

It holds physical address where the process is loaded in memory — the "base" of the process in memory.

How does the MMU use the value in the Base Register to produce the physical address?

The MMU adds the Base Register value to a logical address in the process to get a physical address.

The swapper, sometimes known as the medium-term scheduler, swaps processes between physical memory and swap space, also called backing store. Under what conditions would a process that is in swap space be swapped back into physical memory?

If the CPU scheduler chooses a process to run, and it is in swap space, it must be swapped back into physical memory before it can run.

With contiguous allocation, the entire process must be placed into memory as one contiguous unit using either fixed-sized partitions or dynamic partitions. Why is this a problem?

It may be difficult to find a large enough space in memory.

Segmentation breaks a process up into a collection of segments. What criteria are used to determine what is in each segment?

The segments come from the program and represent logical units, such as the code, data, and stack. Segments can also be portions of the code, like individual procedures or functions, or parts of the data, such as an array.

The main idea behind virtual memory and demand paging is to only load program code and data when it is needed. Why is this an advantage?

The operating system avoids loading unnecessary code or data pages, speeding up context switches by reducing I/O for disk accesses. Keeping only parts of processes in memory allows more processes to load and share memory and enables execution of processes larger than physical memory.

What are the four conditions necessary for deadlock?

mutual exclusion, hold and wait, no preemption, circular wait

Mutual Exclusion

Only one process at a time can use a resource

No preemption

A resource can be released only voluntarily by the process holding it, after that process has completed its task

Hold and wait

A process holding at least one resource is waiting to acquire additional resources held by other processes

Circular Wait

A set {P0, P1, ..., Pn} of waiting processes exists where P0 waits for a resource held by P1, P1 waits for a resource held by P2, ..., Pn-1 waits for a resource held by Pn, and Pn waits for a resource held by P0, forming a circular wait condition.

Can a graph with NO cycles contain a deadlock?

No

Can a graph with a cycle contain deadlock?

Yes, but if there is only one instance per resource type

Three methods for handling deadlock

Deadlock handling strategies include preventing or avoiding deadlock to ensure it never occurs, allowing deadlock to happen and then recovering, or ignoring the issue and assuming deadlocks do not occur.

Safe State

System is in a safe state if there exists a sequence of ALL the processes in the systems such that for each Pi, the resources that Pi can still request can be satisfied by currently available resources + held by all the Pj, with j

Claim edge

Pi->Rj indicated that process Pj may request resource Rj, represented by a dashed line

What does a process include?

Stack, Heap, Data, program code (text section), and state

Compiler

(System Program) generates an object file for each source file

Linker

(System program; often thought of as part of the compiler) Combines all of the object files into a single executable object file (program)

Loader

(part of the os) Loads an executable object file into memory at location(s) determined by the operating system

Relocatable loader

Loads the program at a starting memory location specified by the OS, modifying all addresses by adding the real starting location to those addresses

Problems with static relocation

Processes cannot move, and no protection

Dynamic reloaction

Dynamically change each memory address as the process runs

Memory management Unit (MMU)

Converts logical addresses to physical addresses

Variable-partition

sizes for efficiency (sized to a given process' needs)

hole

block of available memory; holes of various size are scattered throughout memory

First-fit

Allocate the first hole that is big enough

best-fit

Allocate the smallest hole that is big enough; must search entire list unless ordered by size. Produces the smallest leftover hole

worst-fit

Allocate the largest hole; must search entire list. Produces the largest leftover hole

external fragmentation

total memory space exists to satisfy a request, but it is not contiguous

internal fragmentation

Allocated memory may be slightly larger than the requested memory, resulting in unused space within a partition. This unused space is known as internal fragmentation.

Compaction

Used to reduce external fragmentation. Done by shuffling memory contents to place all free memory together in one large block

segmentation

memory-management scheme that supports user view of memory

Basic idea of segmentation

using the programmer's view of the program, divide the process into separate segments in memory

Do segments that make up a process have to be loaded into memory contiguously?

no

segment table

maps two-dimensional physical addresses

Each segment table entry has these

base (contains the starting physical address where the segments reside in memory) and limit (specifies the length of a segment)

Segment Table Base Register (STBR)

points to the segment table's location in memory

segment table length register (stlr)

indicates number of segments used by a program

paging

the logical memory space of each segment is divided into a number of small, fixed-suze partitions called pages