cognition 6 (selective attention)


why is attention important for memory?

incoming information → sensory memory

information only passes from sensory memory to short-term memory if attention is paid to it

no attention = information lost

we cannot attend to everything, so we attend to some things and not others

can we divide attention?

no, as demonstrated by the ‘cocktail party’ effect

we cannot understand/remember the contents of two concurrent spoken messages (Cherry, 1950s)

instead we alternate between attending selectively to the speakers

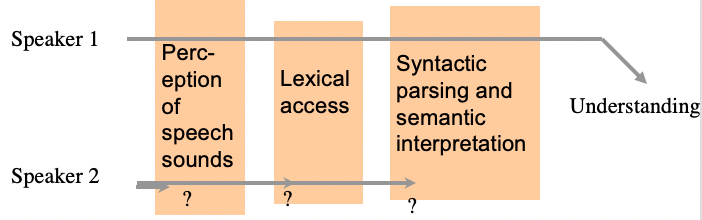

we want to know whether the bottleneck is

at the perception of speech sounds

lexical access

syntactic parsing and semantic interpretation

how is this investigated in the lab

Moray

focus attention on one of simultaneous messages (dichotic listening task)

shadowing one of the messages is successful if the messages differ in

physical properties (location, voice)

semantic content

in the unattended message, participants notice:

→ physical changes (location, voice, volume) NOT semantic changes (from meaningful to meaningless)

a word repeated 35 times in the unattended message is not remembered better in a later recognition test than a word heard once

where is the bottleneck?

unattended words are filtered out, after analysis of physical attributes, before access to meaning

we are aware of unattended speech sounds (pitch, volume, phonetic characteristics) but do not process meaning

to extract the identity of both messages, we have to switch the attentional filter between them, which is slow and effortful

Broadbent’s (1958) dichotic split-span experiment

→ left ear: 3, 7, 6, right ear: 9, 4, 2

easier to report as 376942 (one switch) than 397462 (switch after each digit)

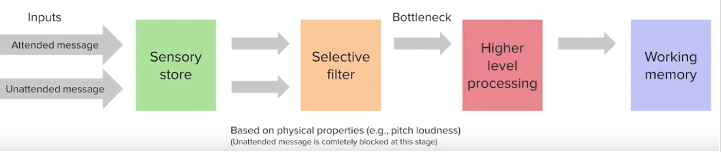
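To make the split-span result concrete, the sketch below counts how many times attention must switch ears for a given report order. It is a minimal Python illustration, not part of Broadbent’s own account: the function name `count_ear_switches` is hypothetical, and it assumes each switch of the filter carries a roughly fixed cost, so more switches should mean slower, less accurate report.

```python
def count_ear_switches(report, left, right):
    """Count the ear-to-ear switches implied by a report order.

    report: digits in the order they are reported.
    left, right: digits presented to each ear (assumed not to overlap).
    """
    ear_of = {d: "L" for d in left}
    ear_of.update({d: "R" for d in right})
    ears = [ear_of[d] for d in report]
    # A switch occurs whenever two consecutively reported digits come from different ears
    return sum(1 for a, b in zip(ears, ears[1:]) if a != b)


left, right = [3, 7, 6], [9, 4, 2]
print(count_ear_switches([3, 7, 6, 9, 4, 2], left, right))  # ear by ear: 1
print(count_ear_switches([3, 9, 7, 4, 6, 2], left, right))  # pair by pair: 5
```

Under the fixed-cost assumption, the pair-by-pair order needs five switches instead of one, which fits it being the harder report.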

Broadbent’s filter model

sensory features of speech sources are processed in parallel and stored in sensory memory (echoic memory)

selective filter directed only to one source at a time

filter is early in processing so only information that passes through the filter can be

recognised

meaning activated

representation in memory

control of voluntary action

access to conscious awareness
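Broadbent’s model can be sketched as a three-stage pipeline: everything reaches echoic memory, an all-or-none filter picks one channel, and only that channel reaches meaning. This is a toy Python illustration under assumed data: the word records, their `voice`/`meaning` fields and the function name `broadbent_filter` are hypothetical.

```python
def broadbent_filter(channels, attended):
    """Toy early-selection filter.

    channels: dict mapping an ear ("left"/"right") to a list of hypothetical word records.
    attended: the ear the all-or-none filter is currently set to.
    Returns (echoic_store, meanings).
    """
    # 1. Physical features of ALL channels are registered in parallel (echoic memory)
    echoic_store = {
        ear: [{"voice": w["voice"], "ear": ear} for w in words]
        for ear, words in channels.items()
    }
    # 2. All-or-none filter: only the attended channel passes, the rest is blocked
    passed = channels[attended]
    # 3. Only words that passed the filter reach meaning (and awareness)
    meanings = [w["meaning"] for w in passed]
    return echoic_store, meanings


channels = {
    "left": [{"voice": "male", "meaning": "dog"}, {"voice": "male", "meaning": "cat"}],
    "right": [{"voice": "female", "meaning": "Chicago"}],
}
store, meanings = broadbent_filter(channels, attended="left")
print(meanings)        # ['dog', 'cat']
print(store["right"])  # [{'voice': 'female', 'ear': 'right'}]
```

The unattended voice is still registered in step 1, but its meaning never is; that is the all-or-none, early structure the breakthrough findings below call into question.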

additional assumptions of Broadbent’s account

the filter is all-or-none

the filter is an obligatory ‘structural’ bottleneck

but is filtering all or none?

examples of partial breakthrough of meaning in unattended speech in shadowing experiments

own name noticed in unattended speech (Moray)

interpretation of lexically ambiguous words in attended message influenced by meaning of words in unattended message (Lackner and Garrett)

e.g. attended: ‘saw port for the first time’; unattended: ‘drank until the bottle was empty’ (the unattended words bias the interpretation of ‘port’)

another way this has been investigated

condition a galvanic skin response (GSR) to the word Chicago (using a mild electric shock)

a GSR is then evoked by the word Chicago when it occurs in the unattended message

the participant does not remember hearing Chicago!

the response generalises to other city names

→ the GSR to unattended city names is weaker than to attended ones

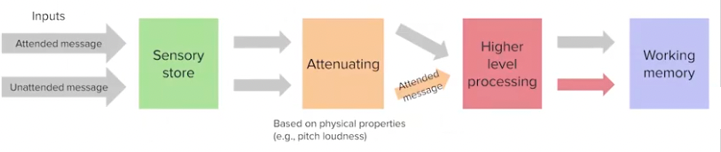

semantic activation by unattended input is attenuated, not blocked

how were theories modified

late selection accounts argued that the attentional filter comes after higher-level processing, not before

theory:

both attended and unattended words processed up to identification and meaning activation

relevant meanings then picked out on basis of permanent salience or current relevance

but doesn’t explain:

why selection on the basis of sensory attributes is so much more effective than selection on the basis of meaning

GSR to unattended probe words weaker than to attended

further modified theory

filter attenuation theory (Treisman, 1969)

there is an early filter but:

it is not all-or-none

it attenuates (turns down) information from unattended sources (so unattended words can still activate meanings if salient or contextually relevant)

early filtering is optional (not a fixed structural bottleneck)
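The difference from Broadbent can be shown with a toy threshold model: the unattended channel is turned down rather than switched off, and words with low recognition thresholds (e.g. your own name) can still break through. All numbers (gain of 0.3, default threshold of 0.5, own-name threshold of 0.2) and the function name `recognised` are hypothetical, chosen only to illustrate the idea.

```python
# Hypothetical parameters, chosen only for illustration
ATTENDED_GAIN = 1.0
UNATTENDED_GAIN = 0.3  # turned down, not switched off (an all-or-none filter would use 0.0)
DEFAULT_THRESHOLD = 0.5


def recognised(word, attended, thresholds):
    """A word is recognised if its (possibly attenuated) signal reaches its threshold."""
    signal = 1.0 * (ATTENDED_GAIN if attended else UNATTENDED_GAIN)
    return signal >= thresholds.get(word, DEFAULT_THRESHOLD)


# Permanently salient words such as one's own name have a low threshold;
# contextual relevance could lower other words' thresholds in the same way
thresholds = {"own_name": 0.2}

print(recognised("table", attended=True, thresholds=thresholds))      # True
print(recognised("table", attended=False, thresholds=thresholds))     # False
print(recognised("own_name", attended=False, thresholds=thresholds))  # True
```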

experiments supporting optional early selection (rather than a structural bottleneck)

monitoring for target word

lots of words played in the left or right ear

press L or R key when animal name heard

after practice, finding the target word is equally accurate whether it's heard in one ear or both (unless selective understanding/repetition of one message is required)

→ we can monitor both channels easily, but when we have to shadow and monitor, the filter has to be applied early

moving from auditory to visual attention: how can we influence where participants attend?

we can use endogenous cueing

probable stimulus location indicated by arrow cue or neutral cue (*)

participant responds as fast as possible to stimulus (maintaining central fixation)

different tasks

simple RT to onset

choice ‘spatial’ RT (left or right from centre)

choice ‘symbolic’ RT (letter/digit)

→ all faster for expected location and slower for unexpected

can voluntarily choose where to attend to

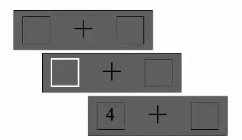
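Cueing experiments like these are usually summarised by comparing RTs across valid, neutral and invalid cue trials. The sketch below assumes the common Posner-style breakdown (benefit = neutral minus valid, cost = invalid minus neutral); the function name and the RT values are made up for illustration, not results from the lecture.

```python
def cueing_effects(rt_valid, rt_neutral, rt_invalid):
    """Benefits and costs of a spatial cue, in ms."""
    return {
        "benefit": rt_neutral - rt_valid,          # speed-up at the cued (expected) location
        "cost": rt_invalid - rt_neutral,           # slow-down at the uncued (unexpected) location
        "validity_effect": rt_invalid - rt_valid,  # overall cueing effect
    }


# Hypothetical mean RTs (ms) for one task
print(cueing_effects(rt_valid=250, rt_neutral=280, rt_invalid=320))
# {'benefit': 30, 'cost': 40, 'validity_effect': 70}
```

The same breakdown can be applied separately to the simple, spatial and symbolic RT tasks.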

another way of cueing

exogenous

instead of endogenous (voluntary, top-down) shifts, these are exogenous (stimulus-driven, bottom-up) shifts

digit appears on left or right and one of the squares lights up

→ RT faster just after sudden onset/change at stimulus location, although does not predict stimulus location

→ timing of exogenous cueing different from endogenous

exogenous attraction of the spotlight is fast (<200 ms)

endogenous movement of spotlight takes several hundred ms

how do ERPs link to this?

voluntary attention to spatial locus modulates early components of ERP in extra-striate visual cortex

participants focus on fixation cross

cued to one of the locations and stimulus appeared

→ a stimulus at the attended location evokes a larger P1; a stimulus at an unattended location evokes a smaller P1

suggests attention aids processing of the stimulus

how early does attention work

early selection in V1 and LGN (geniculate-striate pathway)

fixation maintained on central point

series of digits appears at fixation and

high or low contrast checkerboards appear in left and right periphery

participant either counts digits at fixation or detects random luminance changes on left or right checkerboard

BOLD signal in LGN/V1 that reacts to checkerboard luminance change is greater when attention directed to that side than with attention to fixation

→ so, some selection (for regions in visual field) occurs very early in processing

overall, visual attention is

not all-or-none

an optional process

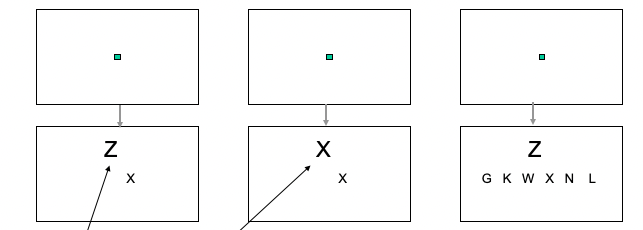

experiment as evidence:

participants had to attend to:

central letter

whole word (word or non-word)

occasionally a probe appeared, and a character had to be classified (e.g. Z or 7)

→ in the central letter condition, a probe in the centre gave fast RTs and a probe away from the centre gave slower RTs

→ in the whole word condition= RT constant independent of location of the probe

how efficient is our attention

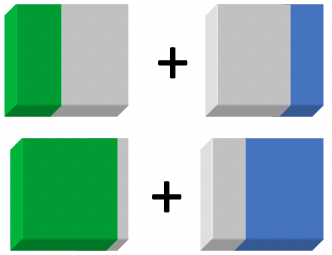

Lavie

‘flanker’ task

press left key for little X, right key for little Z on midline

ignore big letter above or below mid-line (distracting flanker)

an incongruent distractor slows the response, compared with a congruent one, if processing load is low

→ this effect does not occur if participant has to pick target out of several irrelevant letters (irrelevant distractor does not distract as much)
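Lavie’s explanation (perceptual load theory) can be sketched as capacity that is spent on the relevant display first, with any leftover spilling over to the distractor. This toy Python version is an assumption-laden illustration: the capacity of 1.0, the cost of 0.25 per relevant item, the 60 ms ceiling on interference and the function name `flanker_interference` are all hypothetical.

```python
# Hypothetical parameters for illustration only
CAPACITY = 1.0
COST_PER_RELEVANT_ITEM = 0.25
MAX_INTERFERENCE_MS = 60.0


def flanker_interference(n_relevant_items, congruent):
    """Extra RT (ms) caused by the flanker under a toy perceptual-load model."""
    # Capacity is spent on the relevant display first
    spare = max(0.0, CAPACITY - n_relevant_items * COST_PER_RELEVANT_ITEM)
    if congruent:
        return 0.0  # a congruent flanker points to the same response, so no slowing here
    # Leftover capacity spills over to the flanker: more processing, more conflict
    return MAX_INTERFERENCE_MS * spare / CAPACITY


print(flanker_interference(1, congruent=False))  # low load: 45.0
print(flanker_interference(6, congruent=False))  # high load: 0.0
```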

how do we miss information

inattentional blindness (Simons and Chabris)

participants attend closely to one coherent stream of visual events

highly salient events in the unattended stream are missed

though happening in the visual field, information in unattended stream not processed to the level of meaning

capacity and multitasking: the limiting factors

attention

WM capacity

speed of processing

multi-tasking and cognitive capacity

there are limits to our cognitive capacity

all processes take time

there are limits to the input any process can handle (syntactic parsing)

representational/storage capacity is limited (WM)

capacity limits become even more obvious when resources must be shared between tasks (multi-tasking)

→ these limitations matter theoretically (architecture of the brain) and practically (human error, e.g. while driving)

the demands of multi-tasking

when we try to do tasks simultaneously

there is competition for shared resources (dual-task interference)

when we try to switch between tasks

retrospective memory

prospective memory

demands on executive control

planning, scheduling, coordinating two task streams

troubleshooting when there is an error

multitasking is not a single competence; executive control processes are critical

example of limited capacity

using a hand-held mobile phone while driving is illegal

hands free not illegal but:

studies show increased accidents

delayed braking at T-junction

impaired braking, detection of potential hazards

especially true for young drivers

one study shows that even with a hands-free phone, drivers cannot give full attention to driving when the phone conversation increases load (50% increase in RT)

study on relative risk of mobile phone use and drinking

driver in a simulator follows a pacer car in the slow lane of a motorway (15 mins), trying to maintain distance; the pacer brakes occasionally

baseline vs alcohol

casual talk on hand-held vs hands-free phone

→ mobile phone users: slower RT, more rear-end collisions, slower recovery

→ alcohol: more aggressive driving (closer following, harder braking)

no significant difference between hand-held vs hands-free

what do further driving simulator studies show?

hands-free

reduced anticipatory glances to safety critical locations

reduced later recognition memory of objects in driving environment

increased probability of unsafe lane change

→ actual passenger does not have the same effect, passengers sensitive to drivers load and can help to spot hazards

how can we measure dual-task interference

two tasks designed for measurement and manipulation

measures performance on:

tasks A and B alone

tasks A and B combined

does performance on each task deteriorate when the other must also be performed?

→ slower, less accurate performance in dual task conditions
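Dual-task interference is often expressed as a percentage cost relative to single-task performance. A minimal sketch, assuming this simple proportional measure (difference scores are another common choice); the function name `dual_task_cost` and the example numbers are hypothetical.

```python
def dual_task_cost(single, dual, higher_is_worse=True):
    """Percentage change from single-task to dual-task performance.

    For RTs or error counts (higher_is_worse=True) a positive result is a cost.
    For accuracy scores (higher_is_worse=False) the sign is flipped, so a
    positive result is still a cost.
    """
    change = (dual - single) * 100 / single
    return change if higher_is_worse else -change


# Hypothetical task A: mean RT 500 ms alone, 650 ms when combined with task B
print(dual_task_cost(500, 650))  # 30.0
# Hypothetical task B: 95% correct alone, 80% correct in the dual-task condition
print(round(dual_task_cost(0.95, 0.80, higher_is_worse=False), 1))
```

Computing the cost for both A and B answers the question above for each task separately.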

why is there this slower, less accurate performance?

competition for use of specialised domain-specific resources

parts of body can only do one thing at a time

brain modules (each specialised for processing particular kinds of information)

competition for use of general-purpose processing capacity

central processor

pool of general-purpose processing resources

limited capacity of the executive control mechanisms that set up and manage the flow of information through the system, and/or suboptimal control strategies

competition for domain-specific resources

two continuous speech inputs cannot be simultaneously understood or repeated

performing a spatial tracking task interferes with using visual imagery to remember material, because both use visuospatial WM

if two different tasks need the same perceptual processes or response mechanisms, we should expect dual-task interference

→ unless the information rate is low enough to allow switching use of that resource between tasks; even so, concurrent performance of any pair of tasks results in some interference

competition for a general-purpose processor

Broadbent: there is a general-purpose, limited-capacity central processor needed for high-level cognitive processes such as

pattern identification

attention selection

decision making, planning

access to memory

→ everything goes through this for an overt action

or is it a general purpose resource pool

Kahneman (1975)

proposed a pool of general-purpose resources that is shared among concurrent tasks (mental energy, attention and effort)

the capacity of this general-purpose resource may vary:

between people

within people, over states of alertness

it diminishes with boredom or fatigue

it increases with time of day, with stressors (up to an optimal level), with emotional arousal and with conscious effort

if there is a central processor or resource pool whose capacity is shared by two tasks:

sum of capacity demands does not exceed available total

no interference

sum does exceed available total

interference
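The central-capacity prediction above is simple arithmetic. The sketch assumes demands and capacity can be placed on one arbitrary scale, which is exactly what is hard to know in practice; `predicted_shortfall`, `capacity_left_for_b` and all numbers are hypothetical. Lowering `capacity` would stand in for fatigue or boredom, raising it for arousal or effort.

```python
def predicted_shortfall(demand_a, demand_b, capacity=1.0):
    """Amount by which the summed demands exceed capacity (0.0 means no interference)."""
    return max(0.0, demand_a + demand_b - capacity)


def capacity_left_for_b(demand_a, capacity=1.0):
    """A harder task A leaves less of the shared pool for task B."""
    return max(0.0, capacity - demand_a)


print(predicted_shortfall(0.4, 0.5))      # demands fit: 0.0, no interference predicted
print(predicted_shortfall(0.7, 0.6) > 0)  # demands exceed capacity: True
print(capacity_left_for_b(0.8) < capacity_left_for_b(0.3))  # harder A, less left for B: True
```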

increasing difficulty of one task should reduce the capacity available for another task

it is hard to know how much capacity a given task uses, so the theory is tested with pairs of tasks that each seem obviously to require all or most of central capacity

how was this tested

demanding tasks combined without interference

music students

task a. sight read grade 2 (easy) vs grade 4 (hard) piano pieces

task b. shadow prose from an Austen novel (easy) or Old Norse (hard)

little practice

experiment:

two sessions, each with 2 × 1 min dual-task trials for every combination of easy and hard, plus 1 min of sight reading or shadowing alone

findings:

→ rate of shadowing and number of shadowing errors no different with or without concurrent sight reading

→ more shadowing errors for the harder text and more sight-reading errors for grade 4 pieces, but by session 2 there was no difference between difficulty levels

two more examples

tasks combined without interference

skilled visual copy-typing can be combined with shadowing prose without interference

one task insensitive to the difficulty of the other

continuous tracking and digit key task

no effect of difficulty of digit task on tracking delays

radical claim

there is no central general-purpose processor

pairs of complex input-output translations can be combined with little or no interference if they use non-overlapping modules

→ but even when tasks use completely different modules, some interference arises from coordination and control demands

how does this link to driving and phone

could argue:

a. driving and navigation (visuospatial input → hand and foot responses)

b. conversation (speech input → speech production)

different modules

but both require construction of a mental model

→ when both tasks require construction of mental model then we get interference

importance of practice

tasks which cannot be combined without interference become easier to combine with practice (changing gear while driving)

example study:

85 hours of practice at

reading stories at the same time as writing to dictation

reading stories while writing down the category of spoken words

→ participants showed little dual-task interference; practice automates the tasks, reducing the need for executive control

Broadbent’s arguments

with pairs of continuous tasks like shadowing and sight reading, there is

predictability in the input (can anticipate)

lag between input and output (so input/output must be held temporarily in WM)

could still be a central processor switching between the two tasks (time sharing)

→ test: processor switching should be revealed if we use concurrent tasks with very small lags between input and output and the next stimulus is unpredictable (RT tasks)

example of this

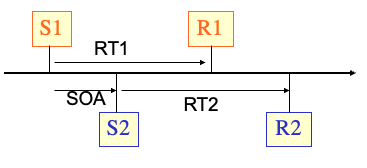

the psychological refractory period (PRP)

Welford (1952)

two choice RT tasks

stimulus onsets separated by a variable, short interval (stimulus onset asynchrony, SOA)

findings:

RT1 is always the same, uninfluenced by the second task

RT2: at long SOAs performance is unaffected; at short SOAs RT2 is longer

→ there must be some central processor, or we would be able to do both things at once

another way of explaining this

is response selection the bottleneck? (Pashler’s theory)

can be performed for only one task at a time

if a second stimulus arrives and is identified, it must wait until the response selection mechanism is free

→ have to respond to first before you can respond to the next
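Pashler’s response-selection bottleneck can be written as a small timing model: perception and response execution run in parallel, but response selection for task 2 cannot start until it has finished for task 1. The function name `prp_rts` and the stage durations (in ms) are hypothetical illustrative values; the sketch only reproduces the qualitative PRP pattern.

```python
def prp_rts(soa, a1=100, b1=150, c1=100, a2=100, b2=150, c2=100):
    """Return (RT1, RT2) in ms under a central response-selection bottleneck.

    a = perception/identification, b = response selection (the bottleneck),
    c = response execution. RT2 is measured from the onset of stimulus 2.
    """
    rt1 = a1 + b1 + c1                  # task 1 never has to wait
    s2_identified = soa + a2            # stimulus 2 is perceived in parallel with task 1
    bottleneck_free = a1 + b1           # response selection is busy with task 1 until here
    b2_start = max(s2_identified, bottleneck_free)  # task 2 waits if the bottleneck is busy
    rt2 = b2_start + b2 + c2 - soa
    return rt1, rt2


for soa in (50, 100, 150, 300, 600):
    print(soa, prp_rts(soa))
```

With these values RT1 is 350 ms at every SOA, while RT2 is 450 ms at an SOA of 50 ms, 400 ms at 100 ms, and stays at 350 ms from 150 ms onwards: a one-for-one slowing at short SOAs, just as the PRP findings describe.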