Parallel Computing Final Review

1/17

There's no tags or description

Looks like no tags are added yet.

Name | Mastery | Learn | Test | Matching | Spaced | Call with Kai |

|---|

No analytics yet

Send a link to your students to track their progress

18 Terms

NOT a reason vendors have switched from producing single-core processors to producing multicore processors

It is easier to write parallel programs than it is to write sequential programs

What is the difference between parallelism and concurrency?

Parallelism is about using additional computational resources to speed up execution of a program, whereas concurrency is about correctly controlling access to shared resources by multiple threads.

Describe the sharing of register and memory resources in multithreaded shared memory programming

Each thread has its own program counter, registers, and call stack, but threads share access to static data and to objects in heap memory.

Describe the concept of data parallelism

Applying the same operation to different pieces of data at the same time.

Describe the output of the code

class ExampleThread extends java.lang.Thread {

int i;

ExampleThread(int i) { this.i = i; }

public void run() {

System.out.println("Thread " + i + " says hi");

System.out.println("Thread " + i + " says bye");

}

}

class M {

public static void main(String[] args) {

for (int i=1; i <= 20; ++i) {

ExampleThread t = new ExampleThread(i);

t.start();

}

}

}Each thread will always say hi before it says bye, but tother than that any interleaving of the output statements is possible.

Describe the 3 goals of parallelism

Solve a fixed size problem faster

Solve a larger problem in close to the same time as a smaller problem by using more processors

Fit a larger problem that will not fit in the memory of a single node by splitting the problem across multiple nodes

Describe the properties of a concurrent multithreaded program

Access to shared memory locations must be controlled to prevent race conditions.

Threads may execute in different orders on different runs.

Each thread has its own private program counter and call stack.

What can limit the amount of speedup it is possible to obtain by parallelizing computations?

Resource Contention

Communication Overhead

Critical Path Length

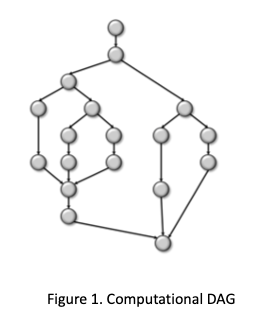

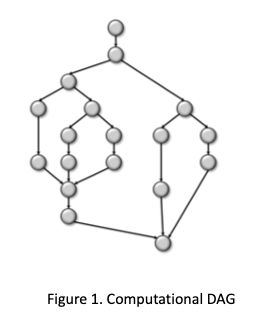

In a DAG that represents a parallel computation, a node represents a serial computation and a direct edge from node A to node B represents

A dependency from A to B i.e., node B cannot be scheduled until node A completes.

Consider the DAG, where each node represents a single time unit of work. What is T_1, the amount of time required to execute the DAG on a single processor?

18 (T_1 = number of nodes)

Consider the DAG, what is T_infinity, the time required to execute the DAG if you had an infinite number of processors?

9 (T_infinity is the length of the longest path (nodes))

What are lower bounds for T_P, the execution time using P processors, assuming no superlinear speedup?

T_infinity and T_1/P

Suppose a parallel algorithm has T_1 in O(n²) and T_infinity in O(n). Then the number of processors that can profitably be used to parallelize the program is

O(n)

The purpose of a sequential cutoff in a divide-and-conquer parallel algorithm is to

limit the overhead of excessive thread creation

What is true about Amdahl’s law?

It assumes that linear speedup is obtained on the portion of the code that can be parallelized

Given the proportion of the sequential execution time that cannot be parallelized, it gives an upper bound on the speedup that can be achieved by parallelization no matter how many processors are used.

If the proportion of the sequential execution time of a program that cannot be parallelized is 10%, then Amdahl’s Law predicts that the maximum speedup that can be achieved using 8 processors cores is

4.7

If the proportion of the sequential execution time of a program that cannot be parallelized is 10%, then Amdahl’s Law predicts that the maximum speedup that can be achieved using an infinite number of processors cores is

10