DIAGS SLPM

47 Terms

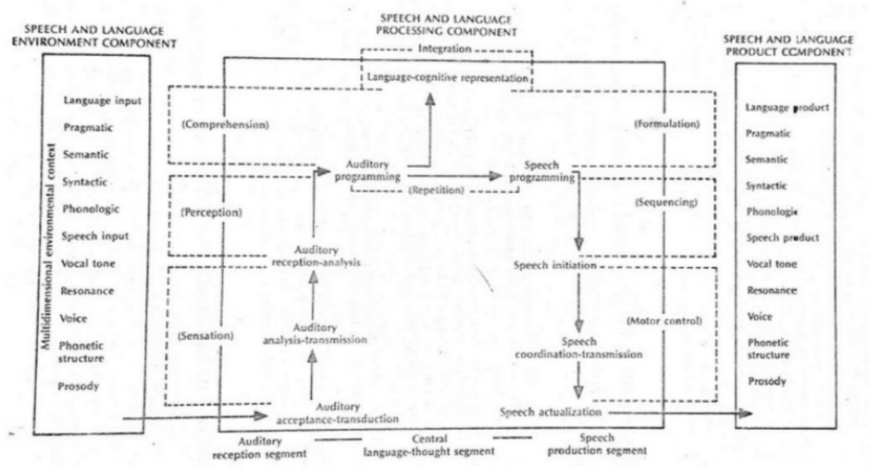

Speech and Language Processing Model

Focuses on Speech and Language

How Nation and Aram DESIGNED it to be:

The model wants to help in the diagnosis of speech and language issues

Almost all the components should be related to speech and language

Speech and language environmental component

Speech and language processing component

Speech and language product component

Auditory-Oral Modalities

How Nation and Aram DESIGNED it to be:

The basic modalities are through the auditory and oral means

In communication, there is a speaker and a listener. Being able to listen and speak enhances the exchange of information.

Describe and delineate observable S/L behavior

How Nation and Aram DESIGNED it to be:

Since our goal is to diagnose and manage S/L issues, we need terminology for describing speech and language processes that can serve as a basis for decisions during assessment and therapy.

Specify the Anatomy and Physiology underlying internal process

How Nation and Aram DESIGNED it to be:

There should be a biological basis of all the observable events that were earlier discussed

There should be an alignment between anatomy and physiology with that of the behaviors

Assist in developing the interface between the physical basis and the observable S/L behaviors

How Nation and Aram DESIGNED it to be:

There should be an alignment, and the flow should make sense from our sensation-perception up until the motor control.

Understand causal factors and hypothesize cause-effect relationships

How Nation and Aram DESIGNED it to be:

It should help diagnosticians to understand cause-and-effect relationships.

Hence, it has the environmental component as the input, and it should have a plausible effect on the product component.

The product component is a function of both something internal to the patient (the speech and language processing component) and something external (the environmental component).

Measure S/L disorders

How Nation and Aram DESIGNED it to be:

We look into the breakdown

In all of our patients, we can hypothesize possible issues, and based on those issues, we are able to manage them correctly.

Environment Component, Processing Component, Product Component

SLPM Components

Input, context, and time dimensions; Input that the individual is receiving, such as language stimulation

Physical processes and behavioral correlates; Something internal to the patient

Language and speech, Output that we see and measure

Language input, Pragmatic, Semantic, Syntactic, Phonologic, Speech input, Vocal tone, Resonance, Voice, Phonetic Structure, Prosody

Speech and Language Environment Component (11)

Speech and Language input

Speech and Language Environmental Component

Involves the input from the environment of the patient that includes S/L.

Perceive, see, or listen to the vocal tone, resonance, voice, phonetic structure, and prosody.

Receive pragmatic, semantic, syntactic, and phonologic input from the environment.

Interpersonal context, Multisensory context, Sociocultural context

Multidimensional Environmental Context

Here we consider personalities, since people have different ways of expressing themselves.

From the type of environment or actual environment they are in.

E.g. “How many people are in his immediate environment?”, “Is he the first child? Second child?” “What is the usual culture/traditions/beliefs that they have in their family?”

Historical, Immediate/Contemporary

Time Dimension

Historical: typically, we take the case history to check which environmental factors have affected the patient in the past.

Immediate/contemporary: when we actually do the evaluation with the patient, we check if and when the environment has an effect on the patient’s performance.

We try to manipulate the environment to see if there is an effect in the processing component

If it has an effect on the processing component, we will be able to see that in the product itself

This is when we do our standardized testing and even our dynamic assessment, to see whether the child is able to learn from the techniques we are trying out.

Speech and Language Processing Component

Internal to the patient

It has 3 segments, similar to a computer

Whatever goes in our head is processed by this component

Auditory reception, Central language thought, Speech production

3 segments/components of Speech and Language Processing Component

Primary recognition system made up of the bilateral structure of auditory modalities

Prelinguistic processes

Because those are the things that happen before the auditory input has been processed in our brain.

It is linguistic in nature; It is where linguistic and cognitive processing is being done

It helps process what we have heard

Primary production system: How we produce the sounds and words

Post-linguistic processes: Final outcome is the speech and language output

Tie the anatomy and physiology along with the physical processes to make sense of the input and output

Within the segment are physical processes

Auditory acceptance transduction, Auditory analysis transmission, Auditory reception analysis, Auditory programming 1

Auditory Reception segment (4)

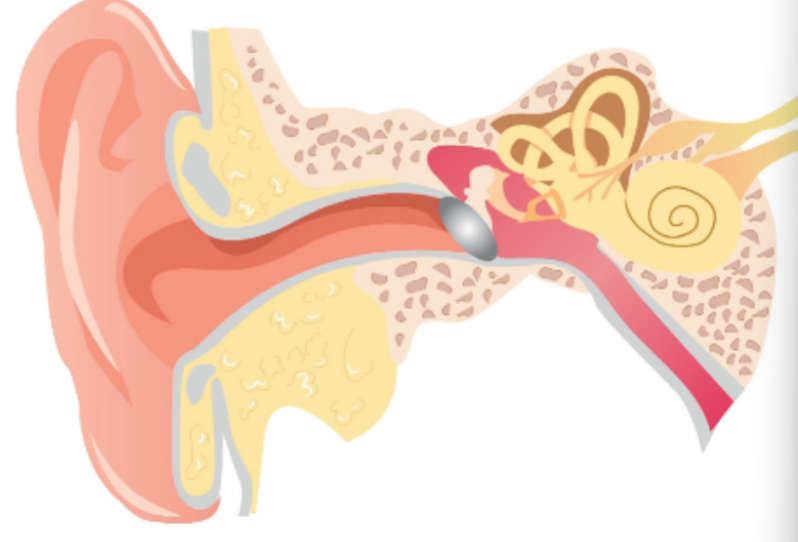

Auditory acceptance transduction (AAcT)

changing the acoustic signal into something that can be processed in our brain

First physical process

ANA: Involves the outer to inner ear

Changes the acoustic signal to mechanical, hydraulic, and electrochemical for further neurological processing

What we are perceiving are the sounds, but the sound did not actually travel from the source to our ear.

What travels is a disturbance of the air molecules: the source moves the molecules next to it, which move the next ones, and so on until the disturbance reaches our eardrums. The ear then converts it into mechanical, hydraulic, and electrochemical energy for further neurological processing.

Energy is something that we cannot destroy, but what we can do is to change it, that’s why we use the term "transduction".

The acoustic signal travels through the air to the eardrum, which vibrates at the frequency and intensity of the sound source; this is mechanical energy. → The eardrum moves the three tiny bones (malleus, incus, stapes), which move the fluid in the cochlea. → The moving cochlear fluid carries the signal as hydraulic energy. → The fluid motion displaces the basilar membrane and its hair cells, which trigger electrochemical signals in the auditory nerve.

Signal changes from: acoustic → mechanical → hydraulic → electrochemical so that it reaches our brain.

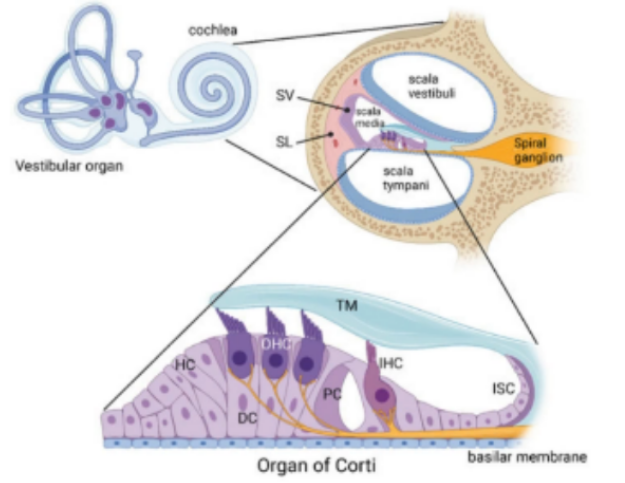

Auditory analysis transmission (AAnT)

Being able to code sounds in terms of their frequency, intensity, and duration, from the level of the organ of Corti to Heschl’s gyrus

ANA: As sound travels toward the brain, it involves the organ of Corti up to Heschl’s gyrus (primary auditory cortex)

Where coding of frequency, intensity, and duration of auditory stimuli happens.

The fluid in the inner ear (cochlea) vibrates according to the signal from the sound source.

As it vibrates, the sound pressure is transmitted to the fluids of the inner ear by the stapes, and the pressure wave deforms the basilar membrane in an area specific to the frequency of the vibration.

Higher frequencies cause movement at the base of the cochlea, and lower frequencies cause movement at the apex.

This characteristic is known as “cochlear tonotopy.”

High frequencies are identified at the base of the cochlea, while low frequencies are identified at the apex.

Helps in determining the frequency, intensity, and duration of the auditory stimuli.

Cochlear tonotopy

The type of frequency will hit different levels of the cochlea, determining the type of frequency (high or low).

High frequency → makes the basal part (base) of the cochlea react

Low frequency → makes the apical side of the cochlea react
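The base-to-apex mapping can be made concrete with a small sketch. It uses the Greenwood function, a published frequency-position model of the human cochlea that is not part of these notes. The constants (A = 165.4, a = 2.1, k = 0.88) are the commonly quoted human values, and the function name `greenwood_frequency_hz` is hypothetical. Treat it as an illustration of tonotopy only, not as a clinical tool.

```python
def greenwood_frequency_hz(position_from_apex: float) -> float:
    """Approximate characteristic frequency at a point on the basilar membrane.

    position_from_apex: 0.0 = apex, 1.0 = base (fraction of cochlear length).
    The constants are the commonly quoted human values for the Greenwood
    function; they are an outside assumption, not taken from these notes.
    """
    if not 0.0 <= position_from_apex <= 1.0:
        raise ValueError("position_from_apex must be between 0 and 1")
    A, a, k = 165.4, 2.1, 0.88
    return A * (10 ** (a * position_from_apex) - k)


if __name__ == "__main__":
    # Frequency rises steadily as we move from the apex toward the base.
    for label, x in [("apex", 0.0), ("middle", 0.5), ("base", 1.0)]:
        print(f"{label:>6}: about {greenwood_frequency_hz(x):,.0f} Hz")
```

Under this model the apex responds to roughly 20 Hz and the base to roughly 20 kHz, which matches the card: low frequencies at the apex, high frequencies at the base.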

Intensity, Frequency, Duration

refers to how hard it hits the basilar membrane.

allows the cochlea to code by the location of vibration.

allows the cochlea to code by how long the vibration lasts

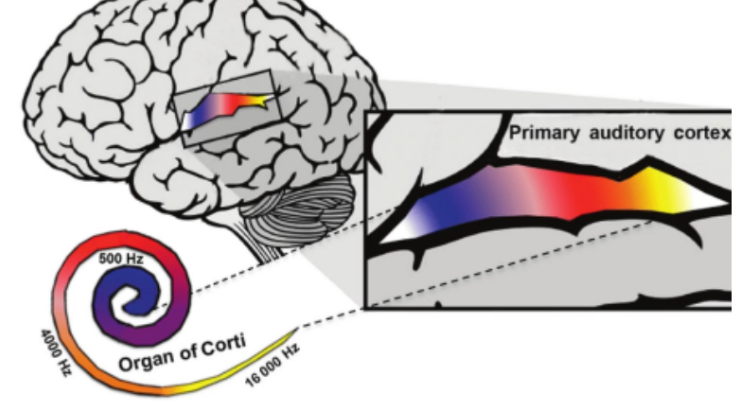

Auditory reception analysis (ARA)

Because the cortex uses the same coding as the organ of Corti, we are able to compare. At this level, you’re thinking “Is it high or low frequency? Did I hear that sound before?”

At the level of Heschl’s gyrus (primary auditory cortex), the same thing is also happening

The tonotopy of the auditory system.

Tonotopy is defined as the spatial mapping of sound frequencies across the auditory system

The different regions of the basilar membrane and the organ of Corti, located in the cochlea, vibrate at different frequencies, usually from 500 to 16,000 Hz

The topographical mapping or tonotopy is maintained along the stations of the auditory pathway all the way to the primary auditory cortex in the temporal lobe.

In the auditory cortex, neighboring neurons respond to nearby frequencies, as shown by the different colors.

At the ARA, you can see that coded auditory data is further analyzed and restructured into complex auditory patterns and compared with previously established patterns.

Additional notes:

From the cochlea, it will go to the brain and the primary auditory cortex

It has an equivalent frequency at the level of the auditory cortex

It codes the data for further analysis, restructures it, and compares it with previously learned patterns

Able to determine whether the sound is familiar or not

Only at the level of the auditory cortex, so when the listener compares to what is stored, they can determine if it is familiar or not.

Auditory Programming 1 (AP1)

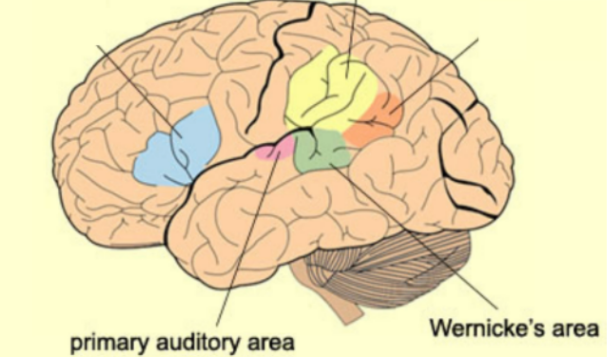

Because this part of the ARS involves Wernicke’s area, an association area, it helps us recognize prelinguistically what those words are

Heschl’s gyrus (primary auditory cortex) in interaction with the Wernicke’s area

Prelinguistic functions include sorting speech from non-speech patterns, changing auditory patterns into phonetic (feature) patterns, storing these phonetic patterns.

Auditory patterns become phonetic patterns.

Before, it may have just seemed like random vibrations. But now, through patterns in those vibrations, we can recognize specific sounds, such as /f/, /s/, or /t/. That’s how we’re able to understand phonetic features

Additional notes:

Wernicke’s area

Part of the temporal lobe

The PAC is also located here

The PAC puts the sounds together and makes them familiar

Wernicke’s will figure out what kind of sound pattern it is

The auditory patterns to phonetic patterns

The sound being heard at this level is transcribed by Wernicke's area

Figure out sound based on connection with association areas in the brain

Needs to reach temporal lobe from PAC to Wernicke's

Sound information is first broken down into basic components in the PAC, then transmitted to Wernicke’s area. There, it's matched with previously stored language knowledge to make sense of what was heard.

The sound or word becomes meaningful when it's processed at the conceptual-lexical level

Not just heard as sound, but understood as a word with meaning.

Sensation

Behavioral Correlates of the Auditory Reception Segment (ARS)

Low-level behavioral response to the presence of sound (on-off registration process).

The ability to hear (threshold of hearing), regardless of knowing the characteristics of the sound. There is cortical activation, but no meaning is given because processing is still at the prelinguistic level.

How to test: Use of pure tone audiometry/noise

Involved with the presence or absence only

No analysis yet

Perception

Behavioral Correlates of the Auditory Reception Segment (ARS)

Happens from Auditory Reception Analysis (ARA) to Auditory Programming 1 (AP1).

Because it involves both Heschl’s gyrus (primary auditory cortex) and Wernicke’s area, there is recognition and sorting of the auditory stimulus.

Can derive significance

The end result of all activities in the auditory modality.

An active process that involves interpretation

We can react to the sounds by our perceptual processes.

Perceptual Processes: Discrimination, attention, localization, sequencing, & memory

These can be done based on minute differences, and how we perceive the sound by left & right ear

Auditory programming 2, Language cognitive representation, Speech programming 1

Central Language Thought segment (3)

Auditory programming 2

Where the Wernicke’s area interacts with the remainder of the auditory association cortex

Even if we do not know the meaning of the word, we are able to understand its functions.

Has the ability to connect with other parts of the brain to make sense of information

Linguistic functions include both decoding and encoding, e.g., decoding phonetic features into phonemes and phonologic sequences

Language component is involved

Decoding some aspects of syntax

Encoding the phonetic to phonologic pattern and certain syntactic units into the message being expressed.

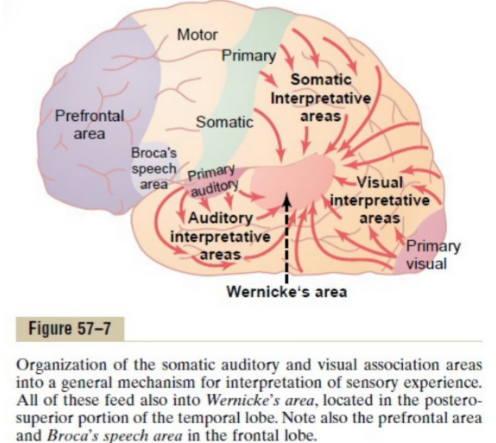

Organization of the Wernicke’s Area

Before reaching Wernicke’s area, the signal first passes through Heschl’s gyrus.

The somatic (senses), auditory (hearing), & visual (vision) interpretative areas are organized into a general mechanism for interpretation of sensory experiences.

These all feed into the Wernicke’s Area, which is located in the posterosuperior portion of the temporal lobe.

Issues in Wernicke’s area cause problems in comprehension due to the inability to process the incoming information from these other areas.

Language Cognitive Representation (LCR)

Where the angular and supramarginal gyri interact with the auditory, visual, somesthetic association areas bilaterally through various association pathways.

Allows language decoding, integration, and encoding.

Language decoding: understanding

Language integration: higher level of comprehension

Put together multimodal information

Language encoding: formulating

Linguistic processing of the message occurs as well as other higher order language, i.e., cognitive interpretation of messages.

Important in the LCR: The multi-modality analysis and synthesis of past and present language cognitive-information.

This is the only process where pragmatics happens to derive meaning.

Not just sounds and words. It also involves vision and memory since the frontal lobe is connected to the LCR.

Can help in reacting appropriately

Pragmatics occur

Able to make sense of things based on what you hear and see

Most of the time, children with autism will have problems in LCR.

Speech Programming 1 (SP1)

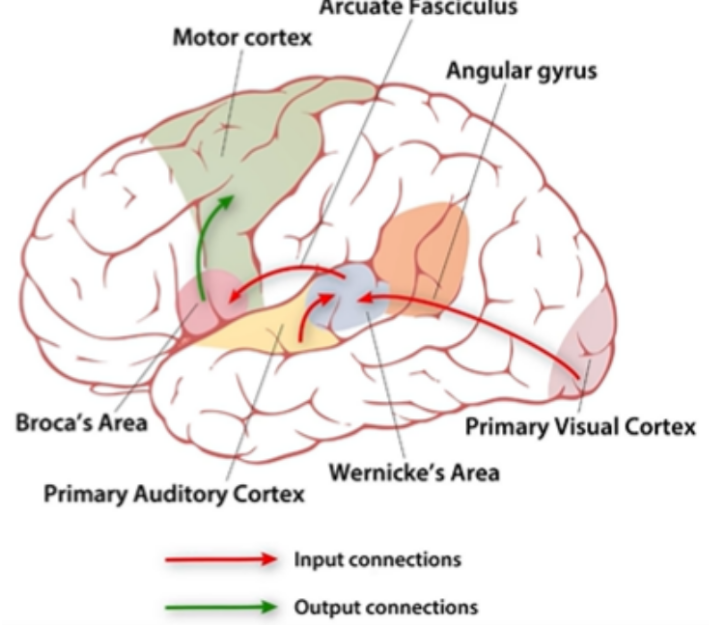

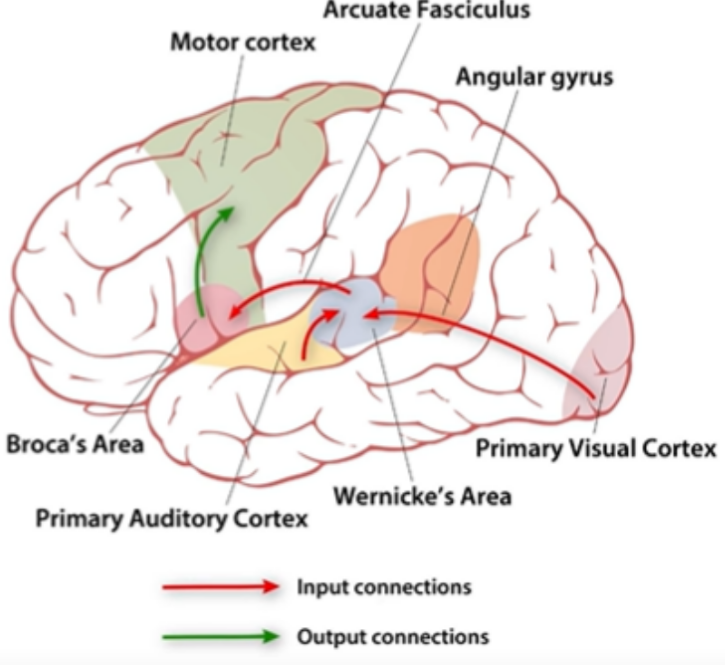

Where the Broca’s area interacts with the auditory association areas and the primary motor cortex for encoding

Process of changing the auditory program of the message into a motor program, i.e., a series of motor speech commands.

An auditory-motor transducer: it "reads" the phonologic representation of the message and transduces it into a series of motor speech representations incorporating both phonologic and prosodic features.

Change from auditory to something motoric

Before saying, you have to have a plan

Change to a program, a command

Within the CLT segment, every process up to and including SP1 is linguistic. After SP1, processing becomes post-linguistic.

Comprehension, Formulation, Integration, Non-meaningful Repetition

Behavioral Correlates of the Central Language Thought (CLT) Segment (4)

Comprehension

Behavioral correlates of SLPM and CLT

Involves the Auditory Programming 2 and the Central Language Thought

Phonologic comprehension

Understand sound and recognize it

Some aspects of syntactic decoding

Marking input as “symbolic” or “non symbolic”

Symbolic - stands for something

Syntactic and semantic comprehension

E.g., a CELF item: “The first two children are in line, while the third child is still playing. Point to the picture.”

We are able to understand it as a whole

Linguistic processing of messages

Understanding what was heard (phonemes, syntax)

Integration

More than understanding sounds and words, we have to integrate incoming information from a multimodality analysis

All senses (cognitive, linguistic, social)

Higher order language - cognitive interpretations of messages (+pragmatic)

Includes pragmatics

Multi-modality analysis (all senses: cognitive; linguistic; social)

Synthesis of past and present information

Make sense of incoming information, and make comparison to have an appropriate response

Physical processes tap into almost all association areas

Increasingly complex meaning as sensory-perceptual information is added

Increased abstraction, increased number of comparisons, and increased adaptation of old concepts to new information

Pragmatics is important in integration

E.g. Which two words are related?

Visual, integration of past experiences, response

Formulation

Central Language Thought + Speech Programming 1

Once we are able to integrate and make sense of all incoming information, the CLT is involved in the formulation of responses

Formulation of linguistic map

Both the question and the answer to it are processed in the CLT

Mental images are pulled up, followed by determining symbols that represent these images

Phonological, lexical and morphosyntactic rules are derived as well

In the sentence formulation part of CELF-4, the child is given a visual cue and a word, which they must use to formulate a sentence

Have a mental image of the word

Nonmeaningful repetition

Possible because of the direct connection of the Broca’s area to the Wernicke’s area, called the Arcuate Fasciculus

Formulation of nonmeaningful sound sequences

Bypasses comprehension and language cognitive representation

Transcortical sensory aphasia

Speech programming 2, Speech initiation, Speech coordination-transmission, Speech actualization

Speech Production Segment (SPS) (4)

Speech Programming 2 (SP2)

Earlier, in SP1, we ended with a linguistic map (motor program). Now we need to transform that into motor commands, which happens in SP2.

Broca’s area in interaction with the primary motor cortex

It transduces the motor program of the message into a series of motor commands

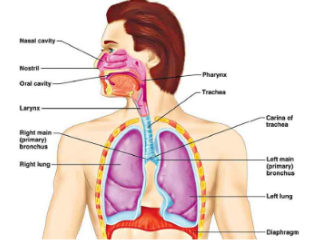

We involve the mouth, lips, and vocal folds (since it is a voiced sound), so we are able to identify the exact motor commands.

E.g., for the word “apple”: first we need an outgoing airstream. As it moves through the vocal tract, the vocal folds must vibrate (close, then pop open) so that the initial vowel /æ/ is produced, and the mouth must be open for this sound to come out.

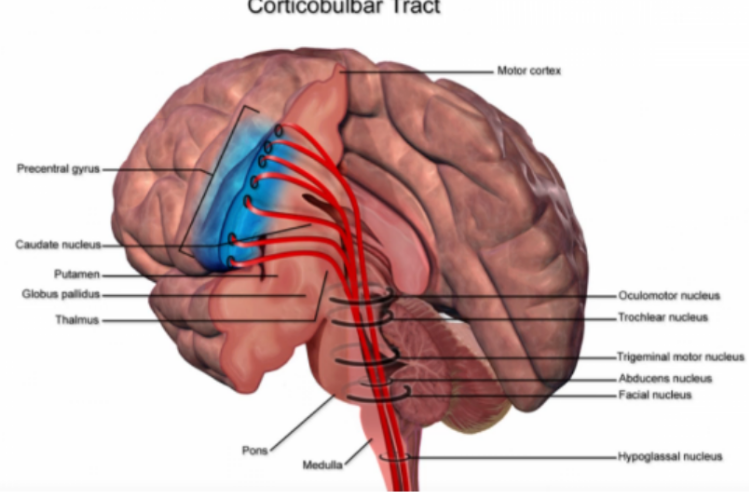

Speech Initiation (SI)

Primary motor cortex and corticobulbar pathways within the CNS

From the cortex to the cranial nerves

Initiates neuromotor impulses that result in sequential muscular movements for speech production; both segmental and suprasegmental features

Once we turn it on, it doesn’t mean it’s perfect

Remember that it is just an on and off signal

This is why we need something to coordinate that motor movement

Speech-Coordination-Transmission (SCT)

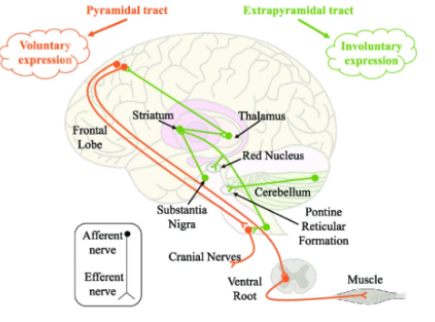

Extrapyramidal, cerebellar, reticular motor systems in interaction with the primary motor cortex and pyramidal systems

Speech initiation starts the process, but that process needs to be smooth and on target, which is the role of this system

Mediates, transmits, modulates, coordinates, facilitates and inhibits neural impulses to allow for controlled, smooth, on-target, sequential muscular movements

Patrol the movement

Makes the flow of speech smoother

In summary:

We know what to say because the brain processes it → Broca’s area tells us what we need to do → Primary motor cortex receives the information → Connection with the brain stem → Impulses go down to the brain stem → corticobulbar → descending pathway → motor cranial nerves for speech (CN 5, 7, 9, 10, 11, 12)

Initiation happens when the primary motor cortex and corticobulbar pathways send the information down to the CNs

Because initiation is only an on/off process, the extrapyramidal system is needed to coordinate the movements

Basal ganglia problems manifest as tremors

Cerebellar problems manifest as ataxic dysarthria

Speech Actualization (SA)

Once we have the signals and they are coordinated, we then carry out the speech processes through speech actualization

OPM including the PNS, primary motor cranial nerves

The organized, controlled, and coordinated neuromuscular activities of the speech mechanism that result from all previous motor processes, allowing for accurate force, timing, and speed
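The eleven physical processes covered in these cards run in a fixed order from input to output, so they can be summarized as an ordered lookup table. The sketch below is only a study aid built from the names in these notes. The class `PhysicalProcess`, the helpers `processes_in` and `stage_of`, and the abbreviation "AP2" (formed by analogy with AP1) are assumptions introduced for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PhysicalProcess:
    abbreviation: str
    name: str
    segment: str
    stage: str  # "prelinguistic", "linguistic", or "post-linguistic"


ARS = "Auditory Reception"
CLT = "Central Language Thought"
SPS = "Speech Production"

# Ordered from input to output, as listed in the notes.
# "AP2" is an assumed abbreviation, by analogy with AP1.
SLPM_PROCESSES = (
    PhysicalProcess("AAcT", "Auditory acceptance transduction", ARS, "prelinguistic"),
    PhysicalProcess("AAnT", "Auditory analysis transmission", ARS, "prelinguistic"),
    PhysicalProcess("ARA", "Auditory reception analysis", ARS, "prelinguistic"),
    PhysicalProcess("AP1", "Auditory programming 1", ARS, "prelinguistic"),
    PhysicalProcess("AP2", "Auditory programming 2", CLT, "linguistic"),
    PhysicalProcess("LCR", "Language cognitive representation", CLT, "linguistic"),
    PhysicalProcess("SP1", "Speech programming 1", CLT, "linguistic"),
    PhysicalProcess("SP2", "Speech programming 2", SPS, "post-linguistic"),
    PhysicalProcess("SI", "Speech initiation", SPS, "post-linguistic"),
    PhysicalProcess("SCT", "Speech coordination-transmission", SPS, "post-linguistic"),
    PhysicalProcess("SA", "Speech actualization", SPS, "post-linguistic"),
)


def processes_in(segment: str) -> list[PhysicalProcess]:
    """Return the processes of one segment, in processing order."""
    return [p for p in SLPM_PROCESSES if p.segment == segment]


def stage_of(abbreviation: str) -> str:
    """Look up whether a process is pre-, post-, or plainly linguistic."""
    for p in SLPM_PROCESSES:
        if p.abbreviation == abbreviation:
            return p.stage
    raise KeyError(abbreviation)


if __name__ == "__main__":
    for segment in (ARS, CLT, SPS):
        chain = " -> ".join(p.abbreviation for p in processes_in(segment))
        print(f"{segment}: {chain}")
```

Keeping the order in one tuple makes it easy to quiz yourself on which segment a process belongs to and where the linguistic stage begins and ends.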

Motor Formulation, Sequencing, Initiation, Motor Control

Behavioral correlates of the Speech Production Segment (SPS) (4)

Motor formulation

Behavioral correlates of the Speech Production Segment (SPS)

Auditory-motor transducer

Involves the formulation of the motor map

Incorporates both phonologic and prosodic features

Includes motor ideation and motor planning

Linguistic formulation happens in the CLT, while motor happens in the SPS.

Problems manifest as apraxia

Sequencing

Behavioral correlates of the Speech Production Segment (SPS)

Identification and assembly of phonetic or syllabic strings

Provides speech mechanism with “procedures for operation”

Which movement happens first, which second, and which ones should happen at the same time

Sequence of sound we produce

SP2

Problems are seen when movements are not coordinated, e.g., sounds are transposed

Initiation

Behavioral correlates of the Speech Production Segment (SPS)

Commands are launched to selected parts of speech mechanism (segmental, suprasegmental)

Start the movement of the articulators

At the level of speech programming

Motor control

Behavioral correlates of the Speech Production Segment (SPS)

Because speech needs to be on target and smooth, from speech initiation through to actualization there should be:

Initiation of motor control (not yet execution)

Should be followed by regulation of firing of neural impulses

Geared towards organized, controlled and coordinated neuromuscular activity

Only possible if the patient is able to get feedback from the movement

Internal feedback mechanism

Continuous process

Happens between speech initiation to actualization

Product

As speech-language pathologists, we assess the _, and once we have assessed it, we are able to infer the status of the different SLPM components

Vocal tone, Resonance, Phonetic Structure

Speech product (3)

Phonologic, Syntactic, Semantic, Pragmatic

Language product (4)