Parallel and Distributed Computing: Module 1, 2, 3


117 Terms

Serial Computing

involves processing instructions one at a time, using only a single processor, without distributing tasks across multiple processors.

Sequential Computing

Another term for serial computing.

Parallel Computing

Uses multiple computer cores to attack several operations at once.

It addresses the slow speeds of serial computing.

Parallel Programming

Enables computers to run processes and perform calculations simultaneously, a method called parallel processing.

Parallel Architecture

It can break down a job into its parts and multi-task them unlike serial computing.

Parallel Computer Systems

Well suited to modeling and simulating real-world phenomena.

Task Distribution

Example of Parallel Computing.

The process by which a supercomputer splits the whole grid (for example, the grid of a weather model) into sub-grids.

Simultaneous Computation

Example of Parallel Computing.

Thousands of processors work simultaneously on different parts of the grid to calculate the data which is stored at different locations.

Communication between Processors

Example of Parallel Computing.

The main reason for processors to communicate with each other is the fact that the weather for one part of the grid can have an impact on the areas adjacent to it.

Multicore Processors

They consist of multiple processing units, or cores, on a single integrated circuit (IC).

This structure facilitates parallel computing, enhancing performance while potentially reducing power consumption.

Speed and Efficiency

Parallel Computing Benefits.

Allows tasks to be completed faster by dividing them into smaller sub-tasks that can be processed simultaneously by multiple processors or cores.
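As a minimal sketch of this idea, the snippet below splits a list into chunks, sums each chunk in its own worker, and combines the partial sums. The helper names `chunked` and `parallel_sum` are hypothetical. Threads are used only to show the structure: in standard CPython, CPU-bound work would typically need processes to run truly in parallel.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(data, n_chunks):
    """Split data into n_chunks contiguous slices of near-equal size."""
    size, extra = divmod(len(data), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def parallel_sum(data, workers=4):
    """Sum each chunk in its own worker, then combine the partial sums."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = list(pool.map(sum, chunked(data, workers)))
    return sum(partial_sums)


total = parallel_sum(list(range(1, 101)))
print(total)
```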

Handling Large Data Sets (Scalability)

Parallel Computing Benefits.

Essential for processing large data sets that would be impractical or too slow to handle sequentially.

Parallel Computing Models the Real World

Parallel Computing Benefits.

To crunch numbers on data points in weather, traffic, finance, industry, agriculture, oceans, ice caps, and healthcare.

Saves Time & Saves Money

Parallel Computing Benefits.

By saving time, parallel computing makes things cheaper. The more efficient use of resources may seem negligible on a small scale, but the savings add up as a system grows.

Solving Complex Problems

Parallel Computing Benefits.

With AI and big data, a single web app may process millions of transactions every second; parallel computing helps achieve this faster.

Leverage Remote Resources

Parallel Computing Benefits.

With parallel processing, multiple computers with several cores each can sift through many times more real-time data than serial computers working on their own.

Fault Tolerance

Parallel Computing Benefits.

Parallel systems can be designed to be fault-tolerant, meaning they can continue to operate even if one or more processors fail.

HP Z8

A workstation that packs in 56 cores of computing power, which lets it edit 8K video in real time or run complex 3D simulations.

ILLIAC IV

Developed at the University of Illinois in the 1960s with help from NASA and the U.S. Air Force.

It had 64 processing elements capable of handling 131,072 bits at a time.

Search for Extraterrestrial Intelligence (SETI)

Monitors millions of frequencies all day and night.

It uses parallel computing through the Berkeley Open Infrastructure for Network Computing (BOINC).

Distributed Computing

Originally referred to independent computers, interconnected via a network, that are capable of collaborating on a task.

Distributed Computing

Networks of interconnected computers that work together to achieve a common goal.

Distributed Computing

These computers are often spread across different locations and connected through a network, such as the internet or a local area network (LAN).

Distributed Computer System

Consists of multiple software components that are on multiple computers but run as a single system.

Local Network

A network where computers are physically close to each other.

Wide Area Network

A network that connects computers that are far apart.

Cloud Computing

Services like AWS, Microsoft Azure, and Google Cloud Platform that rely on distributed computing for scalability.

Von Neumann Architecture

This architecture introduced the concept of storing both data and instructions in the same memory.

Reduced Instruction Set Computing (RISC)

Its processors are characterized by a small, highly optimized set of simple instructions.

Complex Instruction Set Computing (CISC)

Its processors feature a large and complex instruction set, in which a single instruction can carry out several low-level operations (for example, a memory load, an arithmetic operation, and a memory store).

Memory Address

Specifies the location in memory where data or instructions are stored or retrieved.

Memory Data

The actual information (either data or instructions) stored in memory.

Control in Memory

Manages the flow of data and instructions between memory and the CPU.

ALU or Arithmetic Logic Unit

Performs arithmetic and logical operations.

PC or Program Counter

Keeps track of the address of the next instruction to be executed.

IR or Instruction Register

Holds the current instruction being executed.

MAR or Memory Address Register

Stores the address of the memory location being accessed.

MDR or Memory Data Register

Temporarily holds data being transferred to or from memory.

CU or Control Unit

Coordinates the activities of the CPU, managing the flow of data and instructions.

Accumulator

A register that stores intermediate results of arithmetic and logic operations.

General Purpose Registers

Used for temporary storage of data during processing.
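To see how the registers above cooperate, here is a toy fetch-decode-execute loop for a hypothetical accumulator machine. The four-instruction set (`LOAD`, `ADD`, `STORE`, `HALT`) and the memory layout are assumptions made for illustration, not a real instruction set. Instructions and data share one memory, as in the Von Neumann architecture.

```python
def run(memory):
    """Run a toy accumulator machine; memory holds instructions and data."""
    pc = 0   # Program Counter: address of the next instruction
    acc = 0  # Accumulator: intermediate arithmetic results
    while True:
        # Fetch: the address goes to the MAR, the word read goes to the MDR.
        mar = pc
        mdr = memory[mar]
        ir = mdr   # Instruction Register: the instruction being executed
        pc += 1    # the PC now points at the following instruction
        # Decode and execute.
        opcode, operand = ir
        if opcode == "LOAD":
            mar = operand
            mdr = memory[mar]
            acc = mdr
        elif opcode == "ADD":
            mar = operand
            mdr = memory[mar]
            acc = acc + mdr   # the ALU performs the addition
        elif opcode == "STORE":
            mar = operand
            mdr = acc
            memory[mar] = mdr
        elif opcode == "HALT":
            return acc
        else:
            raise ValueError(f"unknown opcode: {opcode}")


# Addresses 0-3 hold the program; addresses 4-6 hold data.
memory = [("LOAD", 4), ("ADD", 5), ("STORE", 6), ("HALT", 0), 2, 3, 0]
result = run(memory)
print(result, memory[6])
```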

I/O Bus

A communication pathway connecting the CPU and memory with I/O devices.

I/O Interface

Acts as a bridge between the CPU/memory and the I/O devices.

Flynn's Taxonomy

Classifies computer architectures according to how many instruction streams (processes) and data streams they can process simultaneously.

Instruction Pool

A collection or queue of instructions that are waiting to be fetched, decoded, or executed by the CPU.

Single-Instruction, Single Data (SISD)

A uniprocessor machine that is capable of executing a single instruction, operating on a single data stream.

Single-Instruction, Multiple-Data (SIMD)

A multiprocessor machine capable of executing the same instruction on all the CPUs but operating on different data streams.

Multiple-Instruction, Single-Data (MISD)

A multiprocessor machine capable of executing different instructions on different processors, but all of them operate on the same dataset.

Multiple-Instruction, Multiple-Data (MIMD)

A multiprocessor machine that is capable of executing multiple instructions on multiple data sets. Each processor works independently, running its own program on its own data.

Devices or Systems

Key Components of a Distributed Computing System.

Each of these has its own processing capabilities and may also store and manage its own data.

Network

Key Components of a Distributed Computing System.

It connects the devices or systems in the distributed system, allowing them to communicate and exchange data.

Resource Management

Key Components of a Distributed Computing System.

Used to allocate and manage shared resources such as computing power, storage, and networking.

Client-Server Architecture

Type of Distributed Architecture.

The most common method of software organization on a distributed system.

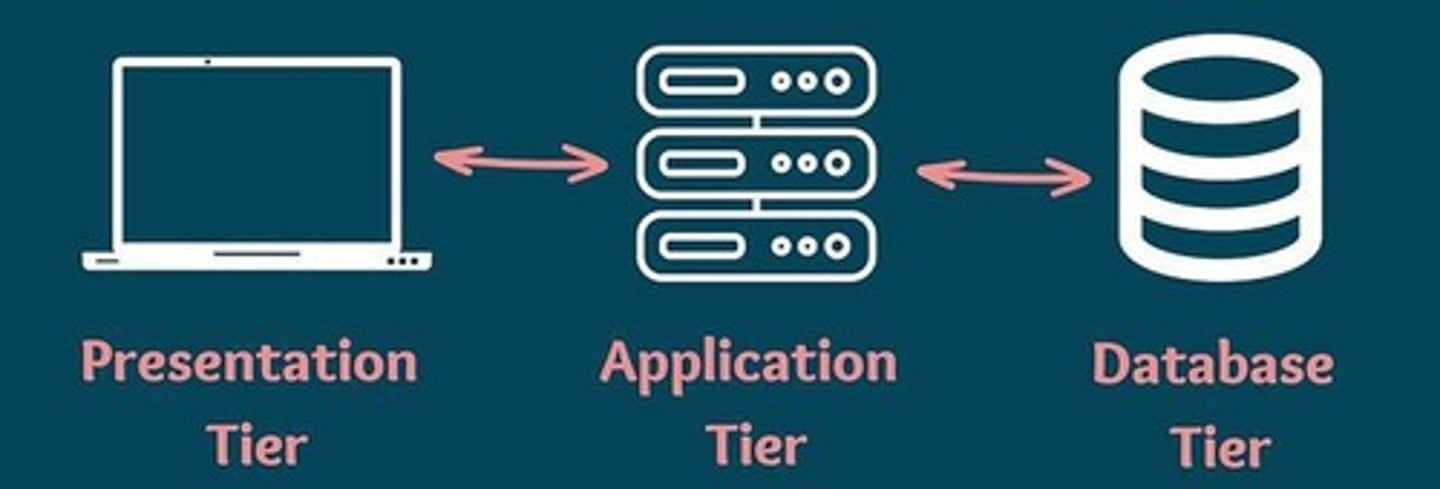

Three-Tier Architecture

Type of Distributed Architecture.

Client machines remain as the first tier you access.

Server machines, on the other hand, are further divided into two categories: application servers and database servers.

N-Tier Architecture

Type of Distributed Architecture.

Includes several different client-server systems communicating with each other to solve the same problem.

Peer-to-Peer Architecture

Type of Distributed Architecture.

Distributed systems assign equal responsibilities to all networked computers.

Message Passing Interface (MPI)

A standardized and portable message-passing system designed for parallel computing architectures.

Message passing

Sending of a message to an object, parallel process, subroutine, function, or thread, which is then used to start another process.

Communicator

Defines a group of processes that can communicate with one another.

Message Passing Paradigm

A basic approach for Inter-Process Communication.

The data exchange between the sender and the receiver.

Client Server Paradigm

The server acts as a service provider, the client issues the request and waits for the response from the server.

Here, the server is a dumb machine: it does not communicate until the client makes a call.

Peer-to-Peer Paradigm

Direct communication between processes.

There is no client or server; any process can send a request to another and get a response.

Message systems

Act as an intermediary among independent processes.

Acts as a switch through which processes exchange messages asynchronously in a decoupled manner.

The sender sends a message, which is first deposited in the message system and then forwarded to the message queue associated with the receiver.

Synchronous Message Passing

Also called rendezvous or handshaking.

The sender and receiver have to 'meet' at their respective send/receive operations to transfer data.

Asynchronous Message Passing

The sender does not wait for the receiver to reach its receive operation; rather, it hands off the prepared data and continues its execution.

Message Queue System

A common architecture in distributed computing and asynchronous communication.

Message Queue

It acts as an intermediary in a Message Queue System.

It collects incoming messages and holds them temporarily.
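A minimal sketch of asynchronous message passing through a queue, using Python's standard `queue.Queue` and a thread as the receiver. The name `mailbox` and the `None` end-of-stream sentinel are conventions assumed for this example.

```python
import queue
import threading

mailbox = queue.Queue()  # the message queue associated with the receiver
received = []


def receiver():
    """Take messages from the queue until the end-of-stream sentinel."""
    while True:
        msg = mailbox.get()  # blocks until a message is available
        if msg is None:      # assumed sentinel meaning "no more messages"
            break
        received.append(msg)


consumer = threading.Thread(target=receiver)
consumer.start()

# The sender drops each message into the queue and continues immediately;
# it never waits for the receiver to reach its receive operation.
for i in range(3):
    mailbox.put(f"msg-{i}")
mailbox.put(None)

consumer.join()
print(received)
```

The queue holds messages temporarily, so sender and receiver stay decoupled: either side can run faster than the other without losing messages.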

Collective Communication

Where data is aggregated or disseminated from/to multiple processes.

Broadcast

Distributing data from one process to all processes in the group.

Scatter

Takes an array of elements and distributes the elements in the order of process rank.

Gather

This is the inverse of MPI_Scatter; it takes elements from many processes and gathers them into one single process.

Reduce

Takes an array of input elements on each process, combines them element-wise with an operation such as sum or max, and returns the array of output elements to the root process.

MPI_Allgather function

A collective communication operation in MPI that gathers data from all processes in a group and distributes it to all members of the group.
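The data movement of these collectives can be modeled in plain Python, with one list entry standing in for each process rank. This is only a sketch of the semantics, not MPI itself: the function names are hypothetical, no real communication takes place, and `scatter` assumes the array length is divisible by the number of processes.

```python
from operator import add


def broadcast(value, n_procs):
    """Every rank ends up with a copy of the root's value."""
    return [value for _ in range(n_procs)]


def scatter(array, n_procs):
    """Rank r receives the r-th contiguous chunk, in order of rank."""
    chunk = len(array) // n_procs
    return [array[r * chunk:(r + 1) * chunk] for r in range(n_procs)]


def gather(per_rank):
    """The root receives every rank's chunk, concatenated in rank order."""
    return [x for part in per_rank for x in part]


def reduce_to_root(per_rank, op):
    """The root receives the element-wise combination of all arrays."""
    result = list(per_rank[0])
    for part in per_rank[1:]:
        result = [op(a, b) for a, b in zip(result, part)]
    return result


def allgather(per_rank):
    """Like gather, but every rank receives the full result."""
    full = gather(per_rank)
    return [list(full) for _ in per_rank]


data = [1, 2, 3, 4, 5, 6, 7, 8]
parts = scatter(data, 4)
print(parts)
print(gather(parts))
print(reduce_to_root(parts, add))
```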

Barrier Synchronization

Collective Communication Pattern.

All processes in a communicator must reach the barrier before any can proceed.

It enforces a synchronization point.
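A small sketch of a barrier, using threads in place of processes and Python's standard `threading.Barrier`. The `worker` function and the shared `log` list are assumptions for illustration.

```python
import threading

N = 4
barrier = threading.Barrier(N)  # releases only when N threads are waiting
log = []
log_lock = threading.Lock()


def worker(rank):
    with log_lock:
        log.append(("before", rank))
    barrier.wait()  # block here until all N workers have arrived
    with log_lock:
        log.append(("after", rank))


threads = [threading.Thread(target=worker, args=(r,)) for r in range(N)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print([phase for phase, _ in log])
```

Every "before" entry is logged ahead of every "after" entry, because no worker can pass the barrier until all of them have reached it. The order of ranks within each phase may vary from run to run.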

Data Movement

Collective Communication Pattern.

Data is distributed or collected among processes.

Collective Operations

Collective Communication Pattern.

One process from the communicator collects data from each process and operates on that data to compute a result.

Reduce-Scatter

Combines and distributes partial results.
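A sketch of the reduce-scatter semantics in plain Python, with one list entry per rank. The function name is hypothetical, and the example assumes each input array has a length divisible by the number of ranks.

```python
from operator import add


def reduce_scatter(per_rank, op):
    """Combine all arrays element-wise, then give rank r the r-th chunk."""
    n_procs = len(per_rank)
    reduced = list(per_rank[0])
    for part in per_rank[1:]:
        reduced = [op(a, b) for a, b in zip(reduced, part)]
    chunk = len(reduced) // n_procs
    return [reduced[r * chunk:(r + 1) * chunk] for r in range(n_procs)]


# Two ranks, each contributing a two-element array.
out = reduce_scatter([[1, 2], [10, 20]], add)
print(out)
```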

Unique Identifier (UID)

A numeric or alphanumeric string that is associated with a single entity within a given system.

Process Rank

Type of Unique Identifier.

Simple integer: Each process is assigned a unique integer value.

Process ID

Type of Unique Identifier.

System-assigned identifier: The operating system assigns each process a unique identifier.

Logical Topology

Type of Unique Identifier.

Hierarchical or grid-based structure: Processes are organized into a logical topology, such as a tree or a grid.

Custom Identifiers

Type of Unique Identifier.

User-defined identifiers: Processes can be assigned unique identifiers based on various criteria, such as location, function, or workload.

Process Identifiers

These identifiers are crucial for tracking, managing, and controlling processes and users on a system.

User ID

A numerical identifier that represents the user associated with the process, used for security and access control purposes.

Process ID

A numerical identifier used to refer to a process in system calls or commands.

Process Group ID

A numerical identifier for a group of processes that are managed together as a single unit.

Session ID

Associates a group of processes with a particular user session.

A unique number that a server assigns to requesting clients.
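On a POSIX system (Linux, macOS), the operating-system identifiers above can be inspected from Python's standard `os` module. This sketch assumes a POSIX platform, since these calls are not available on Windows, and the dictionary name `ids` is arbitrary.

```python
import os

ids = {
    "user_id": os.getuid(),            # user that owns this process
    "process_id": os.getpid(),         # this process
    "process_group_id": os.getpgrp(),  # group this process belongs to
    "session_id": os.getsid(0),        # session of the calling process
}

for name, value in ids.items():
    print(f"{name}: {value}")
```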

Session

A temporary connection between a server and a client.

ID

Stands for identifier and is used to identify and track user activity.

Fork-Join Parallelism

A programming model that allows tasks to be split into subtasks (forking) and later combined (joining) after execution.

Fork

The step that splits the task into smaller subtasks executed concurrently.

Join

The step that merges the results of the executed subtasks into one result.

Divide and Conquer

The strategy that fork-join parallelism uses.

Fork-Join Parallelism

Its framework supports a style of parallel programming that solves problems by divide and conquer.

Fork-Join Parallelism

It delineates a set of tasks that can be executed simultaneously, beginning at the same starting point, the fork, and continuing until all concurrent tasks are finished, having reached the joining point.
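A minimal divide-and-conquer sketch of fork-join, using plain threads. The function name `fork_join_sum` and the `threshold` parameter are assumptions for illustration; real fork-join frameworks schedule subtasks on a worker pool rather than creating one thread per fork.

```python
import threading


def fork_join_sum(data, threshold=4):
    """Sum data by recursively forking the left half into a new thread."""
    if len(data) <= threshold:
        return sum(data)  # small enough: solve directly
    mid = len(data) // 2
    results = [0, 0]

    def left_half():
        results[0] = fork_join_sum(data[:mid], threshold)

    forked = threading.Thread(target=left_half)
    forked.start()                                      # fork
    results[1] = fork_join_sum(data[mid:], threshold)   # work on the rest
    forked.join()                                       # join
    return results[0] + results[1]                      # merge the results


total = fork_join_sum(list(range(1, 33)))
print(total)
```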

Multithreading

The ability of a program or an operating system to serve more than one user at a time without requiring multiple copies of the program.

Multithreading

A CPU feature that allows programmers to split processes into smaller subtasks called threads that can be executed concurrently.

Context Switching

The state of a thread is saved and the state of another thread is loaded whenever any interrupt (due to I/O or manually set) takes place.

Process

A program being executed.

Thread

Independent units into which a process can be further divided.