ENGR110 - Machine Learning


29 Terms

What is the main idea of Machine Learning (ML)?

Creating systems that learn patterns from data, instead of being explicitly programmed with rules.

What are the two main types of ML discussed?

1. Logic-based (rule-based AI). 2. Neural Networks (inspired by the brain).

What is the essential ingredient for training a Neural Network?

A training dataset with inputs and the correct answers (called "targets").

What is the difference between the Training and Working stages?

Training: learning from data with known answers. Working: making predictions on new, unseen data.

What is a Neural Network?

A complex formula (or hardware) that maps inputs to outputs. It's made of interconnected "neurons."

For an image, what are the INPUTS to a NN?

The pixel values of the image.

For a classification task, what are the OUTPUTS of a NN?

One output per category. The highest output value is the network's prediction (e.g., Output 1: Cow, Output 2: Dog).
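A minimal sketch of picking the prediction from the outputs, assuming a two-category network. The output values and the `predict` helper are hypothetical, chosen only for illustration:

```python
# Hypothetical output values for a two-category network (labels from the card above).
labels = ["Cow", "Dog"]
outputs = [0.82, 0.31]

def predict(outputs, labels):
    """Return the label whose output value is the largest."""
    best_index = max(range(len(outputs)), key=lambda i: outputs[i])
    return labels[best_index]

prediction = predict(outputs, labels)
print(prediction)
```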

What is the main goal during NN training?

To adjust the internal parameters (weights & biases) to minimize the error between its output and the target.

What is the basic building block of a Neural Network?

A neuron.

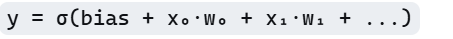

What is the mathematical formula for a single neuron's output?

y = σ(bias + x₀·w₀ + x₁·w₁ + ...)
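The formula above can be sketched in a few lines, assuming Sigmoid as the activation function. The function names and the example inputs, weights, and bias are illustrative assumptions:

```python
import math

def sigmoid(z):
    """Sigmoid activation: 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias):
    """Compute y = sigmoid(bias + x0*w0 + x1*w1 + ...)."""
    z = bias + sum(x * w for x, w in zip(inputs, weights))
    return sigmoid(z)

# Illustrative values: the weighted sum is 0 + 1.0*0.5 + 2.0*(-0.25) = 0.
y = neuron_output([1.0, 2.0], [0.5, -0.25], 0.0)
print(y)
```

With these values the weighted sum is exactly 0, so the neuron sits on its decision boundary.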

What is the purpose of a "weight" (w) in a neuron?

It determines the importance of a specific input.

What is the purpose of the "bias" in a neuron?

It shifts the decision boundary, making the neuron fire easier or harder.

What is the activation function (σ) and why is it used?

A function (like Sigmoid) that decides if and how much a neuron should "fire." It introduces non-linearity.

What is the Sigmoid function?

σ(z) = 1 / (1 + e^{-z}). It squishes any value of z into a number between 0 and 1.
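A quick way to see the "squishing" is to evaluate the function at a few points. The sample z values below are arbitrary choices for illustration:

```python
import math

def sigmoid(z):
    """Sigmoid: maps any real z to a value strictly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

# Arbitrary sample points: strongly negative, zero, strongly positive.
samples = [-5.0, -2.2, 0.0, 2.2, 5.0]
values = [sigmoid(z) for z in samples]

for z, s in zip(samples, values):
    print(f"z = {z:5.1f}  ->  sigmoid(z) = {s:.3f}")
```

Inputs near z = 2.2 give roughly 0.9 ("firing"), inputs near z = -2.2 give roughly 0.1 ("dormant"), and z = 0 gives exactly 0.5.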

What does a neuron output (y) of ~0.9 mean?

The neuron is very confident and "firing" (saying YES).

What does a neuron output (y) of ~0.1 mean?

The neuron is very confident and "dormant" (saying NO).

What does a neuron output (y) of ~0.5 mean?

The neuron is uncertain or "not sure."

How is error calculated for a single data point?

error = y - t (or (y - t)²), where y is the output and t is the target.
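Both forms of the error can be written directly, as in this small sketch. The function names and the example output/target pair are assumptions for illustration:

```python
def signed_error(y, t):
    """Plain difference between output y and target t (can be negative)."""
    return y - t

def squared_error(y, t):
    """Squared difference: never negative, and penalizes large misses more."""
    return (y - t) ** 2

# Illustrative case: the neuron outputs 0.9 when the target is 1.0.
print(signed_error(0.9, 1.0))
print(squared_error(0.9, 1.0))
```

Squaring removes the sign, so errors in opposite directions cannot cancel when they are summed over a dataset.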

What is the name of the algorithm used to minimize error?

Gradient Descent (or Gradient Search).

What is the "Learning Rate" (η)?

A parameter that controls how big a step the algorithm takes when adjusting weights.

What happens if the Learning Rate is too HIGH?

Learning is fast but unstable; it might overshoot the best solution.

What happens if the Learning Rate is too LOW?

Learning is very slow, and it might get stuck (for example, in a local minimum) or fail to converge within the available training time.
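The effect of the learning rate can be seen on a toy problem. This sketch assumes a hypothetical one-parameter error function E(w) = (w - 3)², whose minimum is at w = 3; it is not the network's real error surface:

```python
def gradient_descent(eta, steps=20, w=0.0):
    """Minimize the toy error E(w) = (w - 3)^2 starting from w."""
    for _ in range(steps):
        grad = 2.0 * (w - 3.0)   # dE/dw
        w = w - eta * grad       # step against the slope, scaled by eta
    return w

# Three illustrative learning rates: reasonable, too low, too high.
for eta in (0.1, 0.001, 1.1):
    print(f"eta = {eta}: w after 20 steps = {gradient_descent(eta):.3f}")
```

With eta = 0.1 the result lands close to 3, with eta = 0.001 it has barely moved from the starting point, and with eta = 1.1 every step overshoots further, so w moves away from 3 instead of toward it.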

Why are weights and biases initialized to RANDOM values?

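To break symmetry, so that neurons in the same layer learn different things. Keeping the random values small also keeps Sigmoid neurons away from their flat, saturated regions, where gradients vanish (the "vanishing gradient" problem).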

Why can't we use a simple STEP function instead of Sigmoid?

Because the step function's slope is zero everywhere except at 0, where it is undefined. Gradient descent has no slope to follow on a flat line!
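A rough numerical comparison of the two slopes, assuming a step function that jumps from 0 to 1 at z = 0. The helper names and the finite-difference step size are illustrative choices:

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def step(z):
    """Hard threshold: 0 below zero, 1 at or above zero."""
    return 1.0 if z >= 0 else 0.0

def numerical_slope(f, z, h=1e-6):
    """Central-difference estimate of the slope of f at z."""
    return (f(z + h) - f(z - h)) / (2.0 * h)

for z in (-2.0, 0.5, 2.0):
    print(z, numerical_slope(step, z), numerical_slope(sigmoid, z))
```

Away from z = 0 the step function's slope is exactly zero, so a weight update proportional to the slope would never change anything, while the Sigmoid always provides a small nonzero slope.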

What is Backpropagation?

A fast algorithm for calculating how to adjust every weight in the network by propagating the error backwards.
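A minimal sketch of the idea, assuming the smallest possible multi-layer network: one input, one hidden Sigmoid neuron, one output Sigmoid neuron, and squared error. The parameter values and function names are hypothetical:

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, params):
    """Forward pass: input -> hidden neuron h -> output neuron y."""
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)
    y = sigmoid(w2 * h + b2)
    return h, y

def loss(x, t, params):
    _, y = forward(x, params)
    return (y - t) ** 2

def backprop(x, t, params):
    """Return [dE/dw1, dE/db1, dE/dw2, dE/db2] using the chain rule."""
    w1, b1, w2, b2 = params
    h, y = forward(x, params)
    # Error signal at the output neuron: dE/dy times the Sigmoid slope y(1 - y).
    delta2 = 2.0 * (y - t) * y * (1.0 - y)
    # Propagate backwards through w2 and the hidden neuron's Sigmoid slope.
    delta1 = delta2 * w2 * h * (1.0 - h)
    return [delta1 * x, delta1, delta2 * h, delta2]

params = [0.5, -0.3, 0.8, 0.1]   # hypothetical w1, b1, w2, b2
grads = backprop(1.5, 1.0, params)
print(grads)
```

The output error signal `delta2` is computed once and reused to obtain `delta1`, which is what makes the backward pass cheap compared with perturbing each weight separately.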

What does a single neuron create in the input space?

A linear decision boundary (a straight line in 2D).

Why do we need multi-layer networks?

Because single neurons can only draw straight lines. Multiple layers can combine them to create complex, non-linear boundaries.
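XOR is a standard example of a problem that no single straight line can separate. The sketch below uses hand-picked (not trained) weights, chosen here purely for illustration, to show two hidden neurons and one output neuron solving it:

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    return sigmoid(bias + sum(x * w for x, w in zip(inputs, weights)))

def xor_net(x0, x1):
    """Two-layer network with hand-picked weights that approximates XOR."""
    h_or = neuron([x0, x1], [20.0, 20.0], -10.0)     # fires if x0 OR x1
    h_nand = neuron([x0, x1], [-20.0, -20.0], 30.0)  # fires unless both are 1
    return neuron([h_or, h_nand], [20.0, 20.0], -30.0)  # fires if both hidden fire

for x0, x1 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x0, x1, round(xor_net(x0, x1)))
```

Each hidden neuron draws one straight line; the output neuron combines the two lines into a region that a single neuron could not describe.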

Where is a neuron's decision boundary located?

Where bias + w₀·x₀ + w₁·x₁ + ... = 0
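For two inputs, the boundary equation can be solved for x₁ to get the line explicitly. This sketch assumes w₁ is nonzero; the weights and bias are made-up values:

```python
def weighted_sum(x0, x1, w0, w1, bias):
    """The quantity inside the activation: bias + w0*x0 + w1*x1."""
    return bias + w0 * x0 + w1 * x1

def boundary_x1(x0, w0, w1, bias):
    """Solve bias + w0*x0 + w1*x1 = 0 for x1 (requires w1 != 0)."""
    return -(bias + w0 * x0) / w1

# Made-up parameters for illustration.
w0, w1, bias = 1.0, 2.0, -4.0
x1_on_line = boundary_x1(2.0, w0, w1, bias)
print(x1_on_line)
print(weighted_sum(2.0, x1_on_line, w0, w1, bias))
```

Points where the weighted sum is positive fall on the "YES" side of the line (output above 0.5), and points where it is negative fall on the "NO" side.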

epoch

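One complete pass through the entire training dataset, allowing the model to learn from every training example once. With batch updates, the weights and biases are adjusted after each epoch; with stochastic or mini-batch updates, they are adjusted many times within an epoch.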
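A sketch of a training loop organized by epochs, assuming a single Sigmoid neuron, squared error, batch updates (one adjustment per epoch), and a hypothetical logical-OR dataset. The learning rate and epoch count are arbitrary choices:

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical training set: logical OR, as (inputs, target) pairs.
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

def output(xs, weights, bias):
    return sigmoid(bias + sum(x * w for x, w in zip(xs, weights)))

def total_error(weights, bias):
    return sum((output(xs, weights, bias) - t) ** 2 for xs, t in data)

def train(epochs, eta=0.5):
    # Zeros are used here only to keep the run repeatable;
    # a single neuron has no symmetry to break.
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for xs, t in data:                      # one pass over every example
            y = output(xs, weights, bias)
            delta = 2.0 * (y - t) * y * (1.0 - y)
            grad_w = [g + delta * x for g, x in zip(grad_w, xs)]
            grad_b += delta
        # Batch update: adjust the parameters once, at the end of the epoch.
        weights = [w - eta * g for w, g in zip(weights, grad_w)]
        bias -= eta * grad_b
    return weights, bias

weights, bias = train(epochs=5000)
for xs, t in data:
    print(xs, t, round(output(xs, weights, bias), 3))
```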