Digital Inequalities



Unequal Outcomes

The promise of efficiency offered by artificial intelligence (AI) and machine-learning tools makes algorithmic systems attractive

This leads to complex social issues being increasingly automated, creating a false sense of solution and safety

Algorithmic decision-making increasingly pervades the social sphere, with a great impact on areas ranging from medical care to predicting crime, selecting social welfare beneficiaries, and identifying suitable job candidates

Computer scientists are not dealing with purely technical matters; they are making moral and ethical decisions that affect people’s lives

The harm, bias, and injustice that emerge from algorithmic systems vary, depending on the training and validation data used, the underlying design assumptions, and the specific context in which the system is deployed

As a result, the impact falls on individuals and communities at the margins of society

Targeting Unequal Outcomes

Targeting unequal outcomes means challenging the power asymmetries and structural inequalities that are ingrained in society

This requires a shift from asking “how can we make a certain dataset representative?”

to asking “what is the product or tool being used for? Who benefits? Who is harmed?”

The idea of focusing on the people disproportionately impacted is aligned with participatory design and human-centred design, both of which are based on the concept that the design process is fundamentally participatory

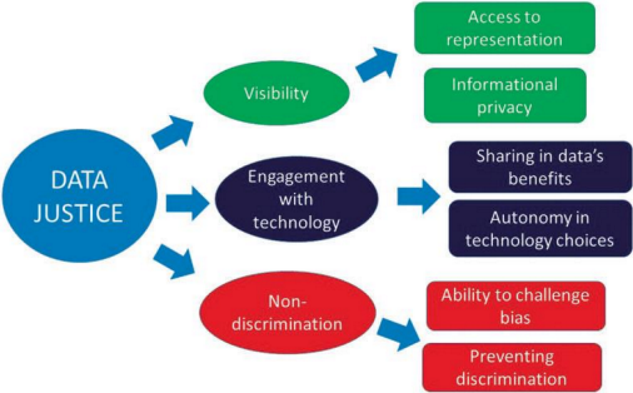

Data Justice

Data justice concerns the ways in which (big) data systems can discriminate, discipline and control

The use of data for governance to support power asymmetries (Johnson, 2014)

The way data technologies can provide greater distributive justice through making the poor visible (Heeks and Renken, 2016)

The impact of dataveillance practice on the work of social justice organisations (Dencik et al., 2016)

What is it about the Social Impacts of Digital Data that suggests a Social Justice Agenda is Important?

The social impacts of big data differ greatly depending on:

one’s socio-economic position,

e.g. data-driven law enforcement focuses unequally on poor neighbourhoods which experience certain types of criminality (O’Neil, 2016);

gender, ethnicity and place of origin,

e.g. the way transgender citizens have been dealt with by population databases in the US shows that one’s ability to legally identify as a different gender depends to a great extent on one’s income (Moore and Currah, 2015)

Algorithmic Social Justice

Algorithmic Social Justice addresses how AI-driven systems reinforce, mitigate, or reshape social inequalities

For instance, digital content produced by generative AI has a propensity to reinforce prevalent stereotypes by representing people in traditional, normative gender roles and appearances which can significantly impact people's perceptions and attitudes about themselves and others (Metaxa et al., 2021)

Responses to Algorithmic Bias

Algorithmic Auditing

Technical/Statistical Responses - Fairness Metrics
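
The notes name fairness metrics without specifying one. As an illustration only, the sketch below computes the demographic parity difference, one commonly used metric: the gap between the highest and lowest rate of positive decisions across groups. The function names, group labels, and toy data are hypothetical and not taken from the source.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of positive decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(decisions, groups):
    """Gap between the highest and lowest group selection rates.

    0.0 means every group receives positive decisions at the same rate.
    """
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: 1 = selected (e.g. shortlisted), 0 = not selected.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_difference(decisions, groups)
print(rates, gap)
```

A gap near zero on this metric does not by itself show that a system is just; as the notes above stress, the questions of what the tool is used for, who benefits, and who is harmed remain.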