Domain 1 Review: AWS Certified Machine Learning - Specialty (MLS-C01 - English)


41 Terms

a data lake

With _____, you can store structured and unstructured data.

AWS Lake Formation

_____ is your data lake solution, and Amazon S3 is the preferred storage option for data science processing on AWS

Amazon S3 Standard

For active, frequently accessed data

Millisecond access

≥ 3 Availability Zones

Amazon S3 Intelligent-Tiering (S3 INT)

For data with changing access patterns

Millisecond access

≥ 3 Availability Zones

Amazon S3 Standard-Infrequent Access (S3 Standard-IA)

For infrequently accessed data

Millisecond access

≥ 3 Availability Zones

Amazon S3 One Zone-Infrequent Access (S3 One Zone-IA)

For re-creatable, less accessed data

Millisecond access

1 Availability Zone

Amazon S3 Glacier

For archive data

Configurable retrieval times, from minutes to hours

≥ 3 Availability Zones

Amazon S3 with Amazon SageMaker

You can use Amazon S3 while you’re training your ML models with Amazon SageMaker. Amazon S3 is integrated with Amazon SageMaker to store your training data and model training output.

Amazon FSx for Lustre

When your training data is already in Amazon S3 and you plan to run training jobs several times using different algorithms and parameters, consider using Amazon FSx for Lustre, a file system service. FSx for Lustre speeds up your training jobs by serving your Amazon S3 data to Amazon SageMaker at high speeds. The first time you run a training job, FSx for Lustre automatically copies data from Amazon S3 and makes it available to Amazon SageMaker. You can use the same Amazon FSx file system for subsequent iterations of training jobs, preventing repeated downloads of common Amazon S3 objects.

Amazon SageMaker with Amazon EFS

Alternatively, if your training data is already in Amazon Elastic File System (Amazon EFS), we recommend using that as your training data source. Amazon EFS has the benefit of directly launching your training jobs from the service without the need for data movement, resulting in faster training start times. This is often the case in environments where data scientists have home directories in Amazon EFS and are quickly iterating on their models by bringing in new data, sharing data with colleagues, and experimenting with including different fields or labels in their dataset. For example, a data scientist can use a Jupyter notebook to do initial cleansing on a training set, launch a training job from Amazon SageMaker, then use their notebook to drop a column and re-launch the training job, comparing the resulting models to see which works better.

batch processing

With _____, the ingestion layer periodically collects and groups source data and sends it to a destination like Amazon S3. You can process groups based on any logical ordering, the activation of certain conditions, or a simple schedule. _____ is typically used when there is no real need for real-time or near-real-time data, because it is generally easier and more affordable to implement than other ingestion options.
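Here is a minimal sketch of schedule-based batching. It groups timestamped records into fixed time windows before they are written out. The `(timestamp, payload)` record shape, the one-hour window, and the `raw/clickstream` key layout are all hypothetical. A real ingestion layer would upload each batch to Amazon S3 rather than return it.

```python
from collections import defaultdict


def group_into_batches(records, window_seconds=3600):
    """Group (epoch_seconds, payload) records into fixed time windows.

    Returns {window_start: [payloads...]} ordered by window start.
    """
    batches = defaultdict(list)
    for ts, payload in records:
        # Every record in the same window shares the same window start time.
        window_start = int(ts // window_seconds) * window_seconds
        batches[window_start].append(payload)
    return dict(sorted(batches.items()))


def batch_object_key(window_start, prefix="raw/clickstream"):
    # Hypothetical S3 key layout: one newline-delimited JSON object per window.
    return f"{prefix}/window_start={window_start}/batch.jsonl"
```

Stream processing, described next, would instead handle each record as soon as it arrives, with no grouping step.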

Stream processing

_____, which includes real-time processing, involves no grouping at all. Data is sourced, manipulated, and loaded as soon as it is created or recognized by the data ingestion layer. This kind of ingestion is less cost-effective, since it requires systems to constantly monitor sources and accept new information. But you might want to use it for real-time predictions using an Amazon SageMaker endpoint that you want to show your customers on your website or some real-time analytics that require continually refreshed data, like real-time dashboards.

Kinesis Video Streams

You can use Amazon _____ to ingest and analyze video and audio data. For example, a leading home security provider ingests audio and video from their home security cameras using _____. They then attach their own custom ML models running in Amazon SageMaker to detect and analyze objects to build richer user experiences.

Kinesis Data Analytics

Amazon _____ provides the easiest way to use SQL to process and transform the data that is streaming through Kinesis Data Streams or Kinesis Data Firehose. This lets you gain actionable insights in near-real time from the incremental stream before storing it in Amazon S3.

Kinesis Data Firehose

As data is ingested in real time, you can use Amazon _____ to easily batch and compress the data to generate incremental views. _____ also allows you to execute custom transformation logic using AWS Lambda before delivering the incremental view to Amazon S3.
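Kinesis Data Firehose invokes a transformation Lambda function with a batch of base64-encoded records. It expects every `recordId` back with a `result` of `Ok`, `Dropped`, or `ProcessingFailed`. The sketch below follows that contract. The JSON fields (`user_id`, `event_type`) and the rule that drops `heartbeat` events are hypothetical examples of custom logic.

```python
import base64
import json


def _result(record_id, result, data):
    # Firehose matches each output record to its input record by recordId.
    return {"recordId": record_id, "result": result, "data": data}


def lambda_handler(event, context):
    output = []
    for record in event["records"]:
        try:
            payload = json.loads(base64.b64decode(record["data"]))
        except ValueError:
            # Covers invalid base64 (binascii.Error), bad UTF-8, and bad JSON.
            payload = None
        if not isinstance(payload, dict):
            output.append(_result(record["recordId"], "ProcessingFailed", record["data"]))
            continue
        if payload.get("event_type") == "heartbeat":
            # Hypothetical rule: drop keep-alive events instead of storing them.
            output.append(_result(record["recordId"], "Dropped", record["data"]))
            continue
        # Hypothetical transformation: keep only the fields needed downstream.
        transformed = {"user_id": payload.get("user_id"), "event_type": payload.get("event_type")}
        line = json.dumps(transformed) + "\n"  # newline-delimited JSON
        encoded = base64.b64encode(line.encode("utf-8")).decode("ascii")
        output.append(_result(record["recordId"], "Ok", encoded))
    return {"records": output}
```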

Kinesis Data Streams

With Amazon _____, you can use the Kinesis Producer Library (KPL), an intermediary between your producer application code and the _____ API actions, to write to a Kinesis data stream. With the Kinesis Client Library (KCL), you can build your own application to preprocess the streaming data as it arrives and emit the data for generating incremental views and downstream analysis.

True

The raw data ingested into a service like Amazon S3 is usually not ML ready as is. The data needs to be transformed and cleaned, which includes deduplication, incomplete data management, and attribute standardization. Data transformation can also involve changing the data structures, if necessary, usually into an OLAP model to facilitate easy querying of data.
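The cleaning steps named above can be sketched in plain Python. The field names are assumptions for illustration, and so is the choice to drop incomplete records rather than impute them. At scale, the same logic would typically run in a framework such as Apache Spark.

```python
def clean_records(records, required=("customer_id", "country", "amount")):
    """Drop incomplete records, standardize attributes, then deduplicate."""
    seen = set()
    cleaned = []
    for rec in records:
        # Incomplete data management: skip records missing a required attribute.
        if any(rec.get(field) in (None, "") for field in required):
            continue
        # Attribute standardization: trim IDs, upper-case country codes, cast amounts.
        std = {
            "customer_id": str(rec["customer_id"]).strip(),
            "country": str(rec["country"]).strip().upper(),
            "amount": float(rec["amount"]),
        }
        # Deduplication on standardized values, so "us" and "US " count as one record.
        key = (std["customer_id"], std["country"], std["amount"])
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(std)
    return cleaned
```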

Apache Spark on Amazon EMR

Using _____ provides a managed framework that can process massive quantities of data. Amazon EMR supports many instance types with proportionally high CPU and increased network performance, which are well suited for high-performance computing (HPC) applications.

True

A key step in data transformation for ML is partitioning your dataset
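Here is a minimal sketch of partitioning a dataset into training, validation, and test sets using a seeded shuffle. The 70/15/15 split is an illustrative assumption. Time-ordered or imbalanced data often calls for a time-based or stratified split instead.

```python
import random


def train_val_test_split(rows, train_frac=0.7, val_frac=0.15, seed=42):
    """Shuffle deterministically, then cut into train/validation/test partitions."""
    if not 0 < train_frac < 1 or not 0 <= val_frac < 1 or train_frac + val_frac >= 1:
        raise ValueError("fractions must leave room for a non-empty test split")
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split reproducible
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],  # the remainder becomes the test set
    )
```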

True

You can store a single source of data in Amazon S3 and perform ad hoc analysis

Use Amazon S3 Standard for the processed data that is within one year of processing. After one year, use Amazon S3 Glacier for the processed data. Use Amazon S3 Glacier Deep Archive for all raw data.

A data engineer needs to create a cost-effective data pipeline solution that ingests unstructured data from various sources and stores it for downstream analytics applications and ML. The solution should include a data store where the processed data is highly available for at least one year, so that data analysts and data scientists can run analytics and ML workloads on the most recent data. For compliance reasons, the solution should include both processed and raw data. The raw data does not need to be accessed regularly, but when needed, should be accessible within 24 hours.

What solution should the data engineer deploy?
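One way to express the storage rules from the answer above is an S3 lifecycle configuration. The Python below only builds the request body. The `processed/` and `raw/` prefixes are hypothetical. The one-day delay before raw data moves to S3 Glacier Deep Archive is also an assumption; uploading raw objects directly with that storage class is an alternative. Standard retrievals from Deep Archive typically complete within 12 hours, which fits the 24-hour requirement.

```python
def build_lifecycle_configuration(processed_prefix="processed/", raw_prefix="raw/"):
    """Build an S3 lifecycle configuration body (the prefixes are hypothetical)."""
    return {
        "Rules": [
            {
                "ID": "processed-to-glacier-after-1-year",
                "Filter": {"Prefix": processed_prefix},
                "Status": "Enabled",
                # Processed data stays in S3 Standard for its first year, then moves to S3 Glacier.
                "Transitions": [{"Days": 365, "StorageClass": "GLACIER"}],
            },
            {
                "ID": "raw-to-deep-archive",
                "Filter": {"Prefix": raw_prefix},
                "Status": "Enabled",
                # Raw data is rarely read, so it moves to S3 Glacier Deep Archive almost immediately.
                "Transitions": [{"Days": 1, "StorageClass": "DEEP_ARCHIVE"}],
            },
        ]
    }
```

With AWS credentials configured, the body could be applied with `boto3.client("s3").put_bucket_lifecycle_configuration(Bucket="example-bucket", LifecycleConfiguration=build_lifecycle_configuration())`, where `example-bucket` is a placeholder.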

Amazon Kinesis Data Streams to stream the data. Amazon Kinesis Client Library to read the data from various sources.

An ad-tech company has hired a data engineer to create and maintain a machine learning pipeline for its clickstream data. The data will be gathered from various sources, including on premises, and will need to be streamed to the company’s Amazon EMR instances for further processing.

What service or combination of services can the company use to meet these requirements?

A healthcare company using the AWS Cloud has access to a variety of data types, including raw and preprocessed data. The company wants to start using this data for its ML pipeline, but also wants to make sure the data is highly available and located in a centralized repository.

What approach should the company take to achieve the desired outcome?

A Data Scientist wants to implement a near-real-time anomaly detection solution for routine machine maintenance. The data is currently streamed from connected devices by AWS IoT to an Amazon S3 bucket and then sent downstream for further processing in a real-time dashboard.

What service can the Data Scientist use to achieve the desired outcome with minimal change to the pipeline?

A transportation company currently uses Amazon EMR with Apache Spark for some of its data transformation workloads. It transforms columns of geographical data (like latitudes and longitudes) and adds columns to segment the data into different clusters per city to attain additional features for the k-nearest neighbors algorithm being used.

The company wants less operational overhead for their transformation pipeline. They want a new solution that does not make significant changes to the current pipeline and only requires minimal management.

What AWS services should the company use to build this new pipeline?

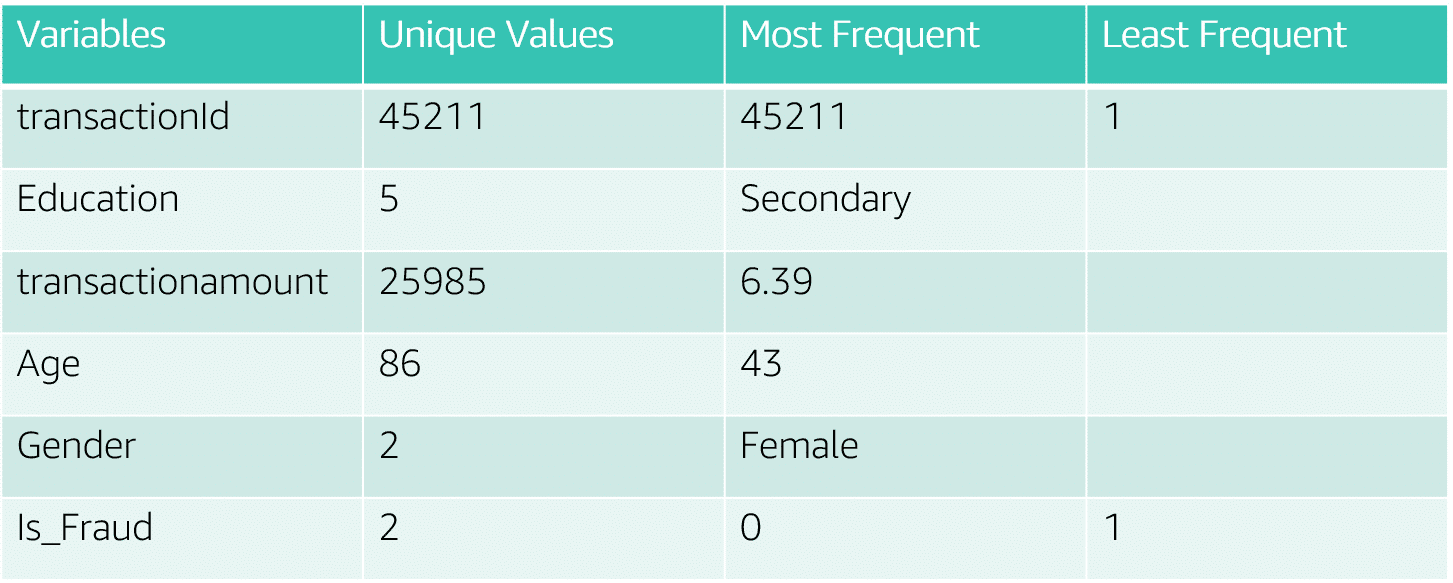

Overall statistics

Overall statistics include the number of rows (or instances) and the number of columns (or features or attributes) in your dataset. This information, which relates to the dimensions of your data, is really important. For example, it can indicate that you have too many features, which can lead to high dimensionality and poor model performance.

Attribute statistics

Attribute statistics are another type of descriptive statistic, specifically for numeric attributes, and are used to get a better sense of the shape of your attributes. This includes properties like the mean, standard deviation, variance, and minimum and maximum values.
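Here is a minimal sketch that computes the overall statistics (rows and columns) and the per-attribute statistics listed above with Python's `statistics` module. The column-oriented dict input is an assumption. `stdev` and `variance` here are sample statistics, so each column needs at least two values.

```python
import statistics


def attribute_statistics(values):
    """Descriptive statistics for one numeric attribute (needs at least 2 values)."""
    return {
        "mean": statistics.mean(values),
        "stdev": statistics.stdev(values),        # sample standard deviation
        "variance": statistics.variance(values),  # sample variance
        "min": min(values),
        "max": max(values),
    }


def describe(columns):
    """Overall statistics (dataset dimensions) plus per-attribute statistics."""
    row_counts = {len(values) for values in columns.values()}
    if len(row_counts) != 1:
        raise ValueError("expected at least one column, all with the same number of rows")
    overall = {"rows": row_counts.pop(), "columns": len(columns)}
    per_attribute = {name: attribute_statistics(values) for name, values in columns.items()}
    return overall, per_attribute
```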

Multivariate statistics

Multivariate statistics mostly have to do with the correlations and relationships between your attributes.

True

Identifying correlations is important, because they can impact model performance

True

When features are closely correlated and they’re all used in the same model to predict the response variable, there could be problems—for example, the model loss not converging to a minimum state.

True

When you have more than two numerical variables in a feature dataset and you want to understand their relationships, a scatter plot matrix (a grid of pairwise scatter plots) is a good visualization tool. It can help you spot relationships among those variables. Such a plot might show strong linear relationships for some pairs of variables and much weaker ones for others.

True

Correlation matrices help you quantify the linear relationships among variables

True

Once you see linear relationships of differing strength, the question becomes: how can you quantify the linear relationship among these variables? A correlation matrix is a good tool in this situation, because it conveys both the strong and weak linear relationships among numerical variables.

The correlation coefficient ranges from -1 to 1. A correlation of 1 means the two numerical features are perfectly positively correlated: Y is an increasing linear function of X (Y = aX + b with a > 0). A correlation of -1 means Y is a decreasing linear function of X (a < 0). Values in between quantify weaker linear relationships. A correlation of 0 means there is no linear relationship between the two variables, but it does not mean there is no relationship at all.
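To make the range concrete, the sketch below computes a Pearson correlation matrix in plain Python. The column names and data are made up. `pos` and `neg` are exact linear functions of `x`. `sq` (x squared) depends entirely on `x` yet has a correlation of 0 with it, which shows that zero correlation only rules out a linear relationship. A constant column would make the coefficient undefined (division by zero). Python 3.10 and later also provide `statistics.correlation`.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def correlation_matrix(columns):
    """Return {name: {other_name: correlation}} for every pair of columns."""
    names = list(columns)
    return {a: {b: pearson(columns[a], columns[b]) for b in names} for a in names}


x = [-2, -1, 0, 1, 2]
matrix = correlation_matrix({
    "x": x,
    "pos": [2 * v + 1 for v in x],  # increasing linear function of x
    "neg": [-3 * v for v in x],     # decreasing linear function of x
    "sq": [v * v for v in x],       # nonlinear function of x
})
```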

Make sure the data is on the same scale

Your dataset might also include data on very different scales. For example, a column called Length might record values in different units, such as kilometers, meters, and miles. This is common in numerical datasets, especially when a dataset results from merging data from multiple sources.
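Here is a minimal sketch of bringing a mixed-unit Length column onto one scale. It assumes each value is stored as a string like `"12.5 km"`, which is an assumed format. It also separates the number from the unit, which is the same kind of fix needed when a single column holds more than one feature.

```python
METERS_PER_UNIT = {
    "m": 1.0, "meter": 1.0, "meters": 1.0,
    "km": 1000.0, "kilometer": 1000.0, "kilometers": 1000.0,
    "mi": 1609.344, "mile": 1609.344, "miles": 1609.344,  # international mile
}


def to_meters(value):
    """Convert a string such as "12.5 km" (assumed format) to meters."""
    number, unit = value.strip().split()
    try:
        factor = METERS_PER_UNIT[unit.lower()]
    except KeyError:
        raise ValueError(f"unknown length unit: {unit!r}") from None
    return float(number) * factor
```

A whole column can then be converted with `[to_meters(v) for v in lengths]`, where `lengths` is a hypothetical list of raw values.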

True

Make sure a column doesn’t include multiple features

True

Outliers are points in your dataset that lie at an abnormal distance from other values. They are not always something you want to clean up, because they can add richness to your dataset. But they can also make it harder to make accurate predictions, because they skew values away from the other, more normal, values related to that feature. Moreover, an outlier can also indicate that the data point actually belongs to another column.
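One common way to flag outliers, rather than delete them automatically, is the interquartile range (IQR) rule. It flags values more than 1.5 times the IQR below the first quartile or above the third quartile. The 1.5 multiplier is a widely used convention, not a rule from this material. `statistics.quantiles` requires Python 3.8 or later.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Return (outliers, (lower_fence, upper_fence)) using the IQR rule."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # default "exclusive" method
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    # Flag rather than drop: outliers may carry real signal worth keeping.
    outliers = [v for v in values if v < lower or v > upper]
    return outliers, (lower, upper)
```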

True

You may also find that you have missing data. For example, some columns in your dataset might be missing data due to a data collection error, or perhaps data was not collected on a particular feature until well into the data collection process. Missing data can make it difficult to accurately interpret the relationship between the related feature and the target variable, so, regardless of why the data is missing, it is important to deal with the issue.
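Median imputation is one simple way to handle missing numeric values. It is used here as an example, not as the only option; dropping rows or model-based imputation are alternatives. The sketch assumes missing entries are represented as `None`. It also returns a missing-value indicator, which lets a model learn from the fact that a value was absent.

```python
import statistics


def impute_median(values):
    """Fill None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    fill = statistics.median(observed)
    filled = [fill if v is None else v for v in values]
    # Indicator feature: True where the original value was missing.
    was_missing = [v is None for v in values]
    return filled, was_missing
```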

True

Common techniques for scaling

So how do we do it, exactly? How can we align different features into the same scale?

Keep in mind that not all ML algorithms are sensitive to the scale of input features. Here are commonly used scaling and normalizing transformations for data science and ML projects:

Mean/variance standardization

MinMax scaling

MaxAbs scaling

Robust scaling

Normalizer
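The five transformations in the list can be sketched in plain Python as below. These are simplified versions for illustration. In practice, scikit-learn provides `StandardScaler`, `MinMaxScaler`, `MaxAbsScaler`, `RobustScaler`, and `Normalizer` in `sklearn.preprocessing`. The first four scale each feature (column), while the normalizer rescales each sample (row) to unit length. A constant column would cause division by zero in the first four functions.

```python
import math
import statistics


def standardize(col):
    # Mean/variance standardization: zero mean, unit (population) standard deviation.
    mu = statistics.mean(col)
    sigma = statistics.pstdev(col)
    return [(x - mu) / sigma for x in col]


def min_max(col):
    # MinMax scaling: map the smallest value to 0 and the largest to 1.
    lo, hi = min(col), max(col)
    return [(x - lo) / (hi - lo) for x in col]


def max_abs(col):
    # MaxAbs scaling: divide by the largest absolute value, so results lie in [-1, 1].
    m = max(abs(x) for x in col)
    return [x / m for x in col]


def robust(col):
    # Robust scaling: center on the median and divide by the interquartile range,
    # so a few extreme values have little influence on the result.
    q1, _, q3 = statistics.quantiles(col, n=4, method="inclusive")
    med = statistics.median(col)
    return [(x - med) / (q3 - q1) for x in col]


def normalize_rows(rows):
    # Normalizer: rescale each sample (row) to unit L2 norm; all-zero rows are left as-is.
    out = []
    for row in rows:
        norm = math.sqrt(sum(v * v for v in row))
        out.append([v / norm for v in row] if norm else list(row))
    return out
```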

Use data augmentation techniques to add more images so that the model can generalize better.

A team of data scientists in a company focusing on security and smart home devices created an ML model that can classify guest types at a front door using a video doorbell. The team is getting an accuracy of 96.23% on the validation dataset.

However, when the team tested this model in production, images were classified with a much lower accuracy. That was due to weather: The changing seasons had an impact on the quality of production images.

What can the team do to improve their model?
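Here is a minimal sketch of the augmentation idea. It treats a grayscale image as a nested list of 0-255 pixel values and creates randomly flipped, brightened, or darkened copies, loosely imitating lighting changes across seasons. The image representation and the 0.6-1.4 brightness range are assumptions. Real pipelines would typically use an image library's transforms, for example `torchvision.transforms`.

```python
import random


def horizontal_flip(image):
    # Mirror each row of pixels left to right.
    return [list(reversed(row)) for row in image]


def adjust_brightness(image, factor):
    # Scale pixel intensities and clip them to the valid 0-255 range.
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]


def augment(image, rng):
    out = image
    if rng.random() < 0.5:
        out = horizontal_flip(out)
    # Darker or brighter variants loosely imitate changing light and weather.
    return adjust_brightness(out, rng.uniform(0.6, 1.4))


def augmented_copies(image, n, seed=None):
    # A seeded generator makes the set of augmented images reproducible.
    rng = random.Random(seed)
    return [augment(image, rng) for _ in range(n)]
```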

A team of data scientists in a financial company wants to predict the risk for their incoming customer loan applications. The team has decided to do this by applying the XGBoost algorithm, which will predict the probability that a customer will default on a loan. In order to create this solution, the team wants to first merge the customer application data with demographic and location data before feeding it into the model.

However, the dimensionality of this data is very high, and the team wants to keep only the features that are most relevant to the prediction.

What techniques can the team use to reach the goal? (Select TWO.)