RM1 Step 3b: Operationalise your variable (reliability)

1/32

There's no tags or description

Looks like no tags are added yet.

Name | Mastery | Learn | Test | Matching | Spaced |

|---|

No study sessions yet.

33 Terms

3 types of reliability:

Across time

Across raters

Across items within the test

Random error

May not get identical scores when repeating a measure on the same person due to:

Participant-driven error: mood, hunger, fatigue

Environment-driven error: temperature, noise, time of the day

2 procedures to establish Reliability Across Time:

Test-retest reliability

Parallel-forms reliability

Test-retest reliability

The extent to which scores on identical measures correlate with e/o when administered at two different times

General procedure

Administer the test

Get the results

Have the interval/time gap

Repeat step 1&2

Correlate both results

Significance of r-value

The more reliable; same scores observed again and again, the higher the r-value (from 0.00-1.00)

Limitation of TRR

The completion of the 1st test may influence one’s knowledge in completing the second test

Eg. it is easier to complete an IQ test the 2nd time when we already know the questions

To fix that limitation, we may use:

Parallel-forms reliability

Parallel-forms reliability

The extent to which scores on similar, but not identical, measures correlate with e/o when administered at two different times

General procedure

Administer the test (form A)

Get the results

Have the interval/time gap

Administer the other test (form B)

Get the results

Correlate both results

Limitations of PFR

Expensive to create double the number of tests

Difficult to make sure the two tests are equivalent

Reliability estimates are only meaningful when:

the construct does not change over time

Example: what is the significance of low reliability in measuring children’s IQ at age 5 and 10?

Low reliability (r= 0.5) may not be meaningful as the difference in IQ scores could be due to changes in intelligence from age 5 vs 10

Why are changes in intelligence not considered random error?

Because intelligence is the construct we are measuring, and there is a genuine change in the construct between age 5 and 10, that is not due to random error

Example #2: low reliability with BFLM scale administered to a 1 year r/s couple and a 10 year r/s couple

Low reliability (r= 0.3) could be due to the fact that love can change after 10 years

What time interval to choose then?

Choose a time interval that makes sense, depending on CONTEXT and what we are measuring

Example: 3 day time interval for BFLM scale and found low TRR

Since love cannot change so quickly, low TRR is more likely due to random error > consider to revise

When is appropriate to use short/long intervals?

Long: when the construct is more resistant to change eg. personality

Short: when the construct is more susceptible to change eg. moods

Possible limitation of short intervals

Susceptible to low TRR

Eg. 5 min interval between eating two plate of Hokkien mee, the low consistency in taste could be due to fullness

Two ways to improve TRR & PFR:

Revise your measurement to increase resistance to random error

remove subjective questions, or make them more specific to avoid multiple interpretations

Administer the measure more times (across the day) and aggregate the scores together

over a series of measurements, the inconsistencies in scores caused by random error should be averaged to 0

Reliability across raters; inter-rater reliability

The extent to which the ratings of one or more judges correlate with e/o

Why do we need inter-rater reliability (IRR)?

Observer error may arise as raters may differ in moods, attention, motivation, interests etc

3 ways to improve low IRR:

Train your raters and provide clearer guidelines for ratings

Revise your scale (similar to TRR & PFR)

Have more no. of raters before aggregating the scores

the overestimates and underestimates caused by observer error should be averaged to 0

Reliability across items within the test; internal consistency

The extent to which the scores of the different items on a scale correlate with e/o, thus measuring the true score

Why do we need internal consistency?

Most measures consist of >1 item to fulfil content validity, and each item is assumed to measure a part of the total construct

2 methods to calculate internal consistency:

Split-half reliability

Cronbach’s alpha

Split-half reliability

The extent to which scores between two halves of a scale are correlated

Procedure example: Odd-even order

One half consists of items 1&3, the other half consists of items 2&4

If the scores are similar for both halves, then the scale has good SHR

Limitation of using SHR

The 2 halves/versions may not really be equivalent

To fix that limitation, we may use:

Cronbach’s alpha

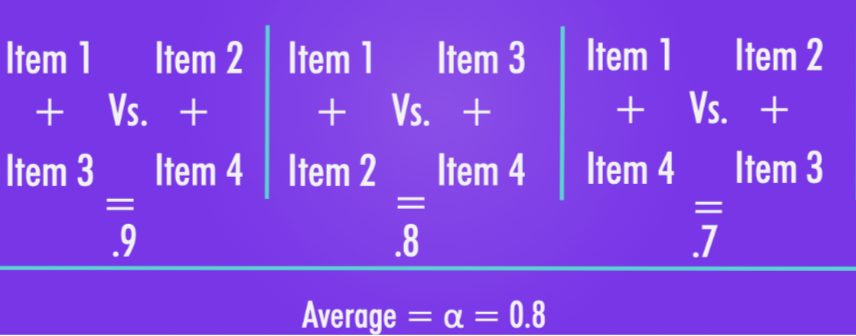

Cronbach’s alpha

An estimation of the average correlation among all the items on the scale = the average of all possible SHR outcomes

What is a suitable Cronbach’s alpha score for an acceptable scale?

>0.7

Relationship between reliability and validity

Reliability is a pre-requisite for validity; a measure must first be reliable then it can be valid, but a measure can be reliable without being valid